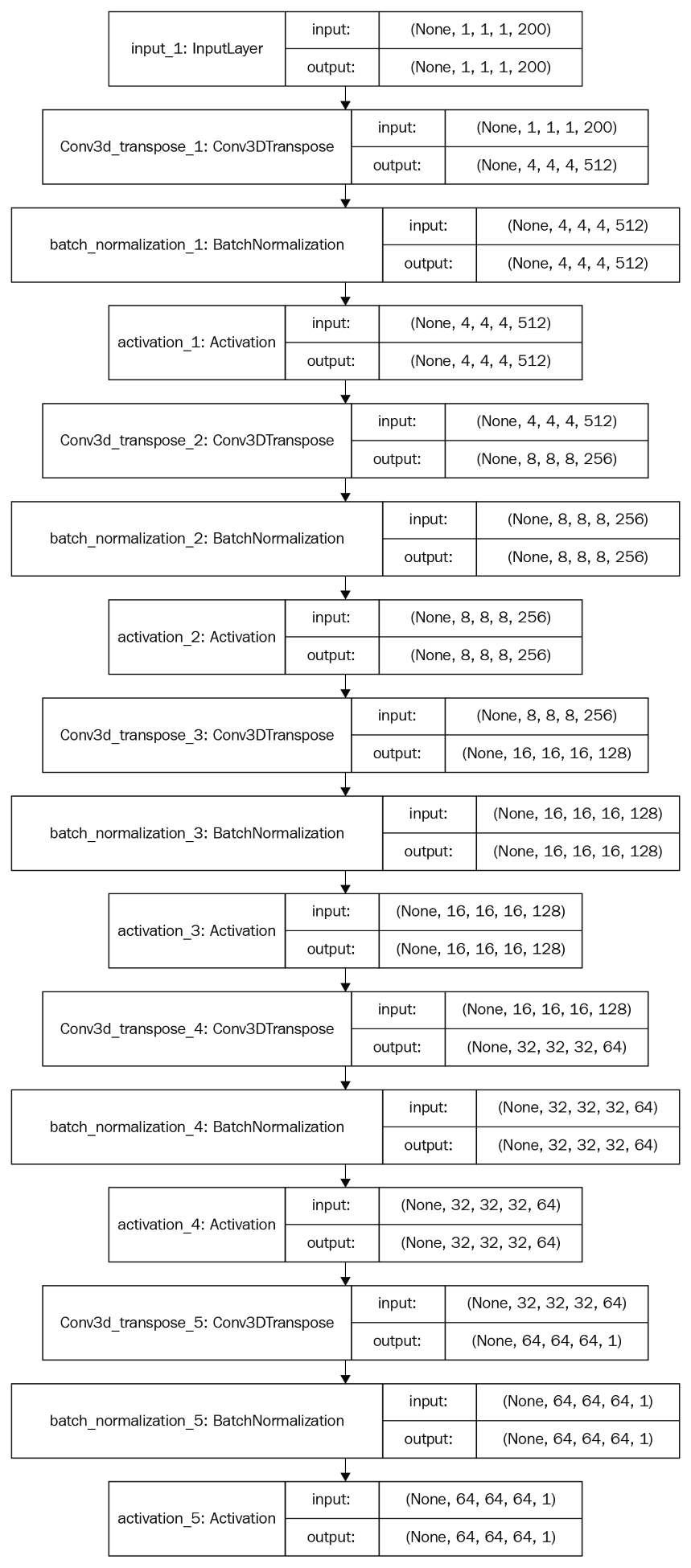

The generator network contains five volumetric, fully convolutional layers with the following configuration:

- Convolutional layers: 5

- Filters: 512, 256, 128, 64, 1

- Kernel size: 4 x 4 x 4, 4 x 4 x 4, 4 x 4 x 4, 4 x 4 x 4, 4 x 4 x 4

- Strides: 1, 2, 2, 2, 2 along every axis, that is, (1, 1, 1), (2, 2, 2), (2, 2, 2), (2, 2, 2), (2, 2, 2)

- Batch normalization: Yes, Yes, Yes, Yes, No

- Activations: ReLU, ReLU, ReLU, ReLU, Sigmoid

- Pooling layers: No, No, No, No, No

- Linear layers: No, No, No, No, No
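
Each bullet above lists one value per layer, so the configuration can also be read row by row. The following is a minimal sketch that regroups the values into one record per layer. The names `LayerSpec` and `GENERATOR_LAYERS` are hypothetical and used only for illustration. Pooling and linear layers are omitted because the network has none.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LayerSpec:
    """Hypothetical record describing one generator layer."""
    filters: int
    kernel_size: Tuple[int, int, int]
    strides: Tuple[int, int, int]
    batch_norm: bool
    activation: str


# Per-layer values copied from the configuration list.
FILTERS = [512, 256, 128, 64, 1]
STRIDES = [1, 2, 2, 2, 2]
BATCH_NORM = [True, True, True, True, False]
ACTIVATIONS = ["relu", "relu", "relu", "relu", "sigmoid"]

# Every layer uses a 4 x 4 x 4 kernel and the same stride on all three axes.
GENERATOR_LAYERS = [
    LayerSpec(f, (4, 4, 4), (s, s, s), bn, act)
    for f, s, bn, act in zip(FILTERS, STRIDES, BATCH_NORM, ACTIVATIONS)
]

for index, spec in enumerate(GENERATOR_LAYERS, start=1):
    print(index, spec)
```

Read this way, the last layer stands out: it has a single filter, no batch normalization, and a sigmoid activation, which keeps every output voxel between 0 and 1.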

The input and output of the network are as follows:

- Input: A 200-dimensional vector sampled from a probabilistic latent space

- Output: A 3D image with a shape of 64x64x64
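
To see how a 200-dimensional vector can grow into a 64x64x64 volume, it helps to trace the spatial size through the five layers. The sketch below rests on several assumptions that the text does not state: the layers are transposed (upsampling) convolutions, the latent vector is reshaped to a 1x1x1 volume with 200 channels, and the padding is 0 for the first layer and 1 for the rest. The function names are hypothetical.

```python
def transposed_conv_output_size(size, kernel, stride, padding):
    """Output length of a transposed convolution along one axis."""
    return (size - 1) * stride - 2 * padding + kernel


KERNEL = 4
FILTERS = [512, 256, 128, 64, 1]
STRIDES = [1, 2, 2, 2, 2]
PADDINGS = [0, 1, 1, 1, 1]  # assumption: not given in the text


def trace_generator_shapes(latent_dim=200):
    """Return the (depth, height, width, channels) shape after each layer."""
    # Assumption: the latent vector is treated as a 1x1x1 volume.
    shapes = [(1, 1, 1, latent_dim)]
    size = 1
    for filters, stride, padding in zip(FILTERS, STRIDES, PADDINGS):
        size = transposed_conv_output_size(size, KERNEL, stride, padding)
        shapes.append((size, size, size, filters))
    return shapes


for shape in trace_generator_shapes():
    print(shape)
```

Under these assumptions, the first layer expands the 1x1x1 input to 4x4x4, and each stride-2 layer then doubles the spatial size, giving 8, 16, 32, and finally 64 along each axis.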

The architecture of the generator can be seen in the following image:

The flow of the tensors, along with the input and output shapes for each layer in the generator network, is shown in the following diagram, which should give you a better understanding of the network:

A fully convolutional network has no fully connected (dense) layers at its end. It consists only of convolutional layers, and it can still be trained end to end, just like a convolutional network with fully connected layers. The generator network also has no pooling layers.
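
One practical consequence of having no dense layers is that the number of weights depends only on the kernel size and the channel counts, not on the spatial size of the volume. The sketch below counts kernel weights per layer. It assumes the first layer sees the latent vector as 200 input channels, and it ignores biases and batch normalization parameters. The function names are hypothetical.

```python
def conv3d_weight_count(in_channels, out_channels, kernel=4):
    """Kernel weights in one 3D (transposed) convolution, biases excluded."""
    return kernel ** 3 * in_channels * out_channels


def dense_weight_count(in_features, out_features):
    """Weights in a fully connected layer, biases excluded."""
    return in_features * out_features


LATENT_DIM = 200  # assumption: the latent vector acts as 200 input channels
FILTERS = [512, 256, 128, 64, 1]


def generator_weight_counts(latent_dim=LATENT_DIM, filters=FILTERS):
    """Return the kernel weight count of each generator layer."""
    counts = []
    in_channels = latent_dim
    for out_channels in filters:
        counts.append(conv3d_weight_count(in_channels, out_channels))
        in_channels = out_channels
    return counts


counts = generator_weight_counts()
print(counts, sum(counts))

# For comparison: a dense layer mapping a 32x32x32x64 volume to 64x64x64 voxels.
print(dense_weight_count(32 ** 3 * 64, 64 ** 3))
```

The last convolutional layer needs only 4,096 kernel weights to produce the full 64x64x64 output, whereas a dense layer performing the same mapping would need several hundred billion.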