1

A New Paradigm Is Needed

“The significant problems we face cannot be solved at the same level of thinking we were at when we created them.”

—Albert Einstein

The history of Six Sigma dates back to Motorola around 1987 (Harry and Schroeder, 2000). Motorola was facing stiff foreign competition in the pager market and desperately needed to both improve quality and lower costs to stay in business. By applying Six Sigma, the company was able to do both. Other manufacturing companies, including Honeywell and AlliedSignal, saw Motorola’s success and soon launched their own initiatives. In late 1995, GE CEO Jack Welch publicly announced that Six Sigma would be the biggest initiative in GE’s history and his own number one personal priority for the next five years (Snee and Hoerl, 2003). GE reported billions of dollars of savings in its annual reports over the next several years, which contributed to a significant increase in GE’s stock price and prompted many more organizations to adopt Six Sigma.

Honeywell, GE, and others realized that a different approach was needed for designing new products and services: when designing a new process, there is nothing to improve, because the process itself does not exist yet. Based on earlier design work by Honeywell, GE developed the Define, Measure, Analyze, Design, Verify (DMADV) approach to Design for Six Sigma (Snee and Hoerl, 2003). The GE LightSpeed CT scanner was the first new product GE developed using the DMADV process. Introduced in 1998, it used multislice technology to reduce typical scanning time from about 3 minutes to 30 seconds. This was a big win for patients as well as hospitals because it significantly increased CT scanning throughput. GE generated $60 million in sales during the first 90 days (GE, 1999).

Several major health networks launched Six Sigma initiatives during this time frame. Commonwealth Health Corporation reported $1.6 million in savings in the radiology department alone during the first year (Snee and Hoerl, 2005). Among other innovations to the Six Sigma methodology, GE developed an approach to applying Six Sigma outside of manufacturing, including to financial processes at GE Capital. Partly because of the success GE Capital demonstrated with Six Sigma, Bank of America became the first major bank to launch a Six Sigma initiative in 2001 (Snee and Hoerl, 2005). By the end of 2003, the bank’s cumulative financial benefits exceeded $2 billion (Jones, 2004). This development was important in demonstrating the broad applicability of Six Sigma: It is not simply a manufacturing initiative but a generic improvement methodology.

The Expansion to Lean Six Sigma

By the early 2000s, practitioners were noticing limitations of Six Sigma (we return to this shortly). For example, over several decades of intense improvement efforts on the assembly line, Toyota had developed generally accepted principles of manufacturing excellence. These principles, referred to as Lean Manufacturing, were often overlooked in Six Sigma projects simply because they were not well known among Six Sigma practitioners. George (2002) suggested integrating Lean principles with Six Sigma to create the broader improvement initiative Lean Six Sigma. GE and others quickly transitioned their Six Sigma initiatives to Lean Six Sigma. Results continued to roll in.

Through deployments across diverse organizations, Six Sigma practitioners and researchers have deepened the body of knowledge on continuous improvement, in both theory and practice. For example, several journals now focus specifically on extending research on Six Sigma and continuous improvement; these include the International Journal of Six Sigma and Competitive Advantage (http://www.inderscience.com/jhome.php?jcode=ijssca), Six Sigma Forum Magazine (http://asq.org/pub/sixsigma/), and the International Journal of Lean Six Sigma (http://www.emeraldinsight.com/journal/ijlss).

Extending the theory and practice of continuous improvement is important, but the most easily quantifiable impact of Lean Six Sigma is financial. A diverse array of organizations around the globe have added billions of dollars to their bottom lines. However, financial results are simply easier to quantify than other outcomes; the impact on the quality of healthcare, the safety of products and the food supply, and even protection of the environment should not be overlooked. We argue that Lean Six Sigma applications have saved countless lives.

In short, based on the documented results in healthcare, finance, manufacturing, and other organizational areas, Lean Six Sigma clearly is the single most impactful improvement methodology in history. Therefore, we might logically ask, why change anything? As the old saying goes, “If it ain’t broke, don’t fix it.” We answer this question in the next section.

Macro Societal Shifts Since 1987

Lean Six Sigma has certainly been a tremendous success, but the world has changed considerably since Six Sigma was originally developed in 1987. For that matter, the world has changed considerably since 2003, when we published the first edition of this book (Snee and Hoerl, 2003). Six Sigma has evolved along with the world, but in our opinion, this evolution has not gone far enough to move the discipline of continuous improvement to a new level. Before discussing the evolution of Six Sigma and its ongoing limitations, we briefly review the following macro societal shifts:

Accelerated globalization

Massive immigration into North America and Europe

Growth of IT and Big Data analytics

Recognition of the uniqueness of large, complex unstructured problems

Modern security concerns such as terrorism and computer hacking

Accelerated Globalization

Obviously, massive shifts have occurred in the global economy since 1987. Motorola was already facing stiff global competition at that time, primarily from Japan, and globalization has accelerated even more dramatically since then. For example, India has become globally recognized for its vast resources in information technology, China has become a dominant player in global manufacturing, and large numbers of call centers supporting customers in Europe and North America have sprung up in developing countries such as the Philippines.

In fact, although globalization is not the focus of this book, it has become a critical issue in recent national elections and referendums, such as the 2016 “Brexit” vote that led the United Kingdom to leave the European Union, the U.S. presidential election in the same year, and subsequent referendums in Italy and other European countries. There’s no denying that we live in a truly global economy today. This was not the case in 1987, and the change has obvious implications for the need to improve to meet global standards of excellence.

Massive Immigration into North America and Europe

Similarly, immigration has been a hot-button issue in many elections and referendums. In addition to immigration concerns in the United States, large waves of immigrants have come into Europe from war-torn countries in the Middle East. Our point here is not political: We are simply noting that these waves of immigration have created more diverse workforces in many countries. Having staff with different cultural backgrounds and viewpoints affects how teams go about problem solving in improvement projects. Unquestionably, employees in Western countries bring more diverse approaches to improvement than in the past. In our view, this can have a positive effect on teamwork, but it needs to be effectively managed.

Growth of IT and Big Data Analytics

In 1987, the Internet was in an embryonic stage of development. Today, of course, the Internet is ubiquitous in society, including healthcare, education, and business. Google and Amazon have become major players in the business world, despite having minimal brick-and-mortar facilities. Similarly, the state of information technology (IT) in 1987 is considered antiquated by today’s standards. These developments in IT have led to a rapid expansion of data acquisition, storage, and analysis, a phenomenon commonly referred to as Big Data or data science (Davenport and Patil, 2012). We discuss Big Data analytics in more detail later in this chapter. Do these developments in IT, the Internet, and Big Data have implications for how we should go about continuous improvement? We feel that they clearly do.

Recognition of the Uniqueness of Large, Complex, Unstructured Problems

The world has also recognized the need to collectively address large, complex, and unstructured problems. For example, the issue of global climate change cannot be addressed by any one organization or even one country; it is simply too big an issue. Likewise, addressing emerging disease threats such as Zika, Ebola, or drug-resistant tuberculosis requires a global effort.

In 2000, the United Nations announced a set of ambitious development goals, referred to as the Millennium Development Goals (http://www.un.org/millenniumgoals/), which required global cooperation to achieve. These goals included such large, complex, unstructured issues as addressing extreme poverty, providing universal access to education, combating HIV/AIDS, and enhancing the status of girls and women. We further discuss the issue of large, complex, unstructured problems later in this chapter.

Modern Security Concerns

Security has always been a concern for both societies and businesses. However, the origins of Six Sigma predate the terrorist attacks on the United States on September 11, 2001. Before 2001, many people in the United States and some Western European countries believed that terrorism occurred only elsewhere, not in their own countries. Since 2001, we have learned that terrorism is a serious concern everywhere; it’s a global problem. Those of us who travel by air realize that we will never go back to the days of casually walking onto airplanes with minimal security delays.

Beyond terrorism, individuals and businesses alike have serious security concerns over computer hacking. Providing protection from identity theft has become a billion-dollar industry in the United States alone. Businesses across the globe received a wake-up call on December 18, 2013, in the heart of the Christmas shopping season, when news broke that hackers had breached the computer systems of the retail giant Target and stolen data from about 40 million credit and debit card accounts (http://money.cnn.com/2013/12/18/news/companies/target-credit-card/). Target spent vast amounts of money on damage control, including providing free identity theft protection to customers whose card data was stolen. The damage to Target’s reputation was arguably even more costly. We discuss the issue of security and risk management in greater detail shortly.

Clearly, we live in a very different world than in 1987, and, most people would say, a much more dangerous one. How should we think about continuous improvement in such a world? Is Lean Six Sigma the best approach to take for all problems, including large, complex, unstructured problems such as climate change or the Millennium Development Goals? We argue that a different paradigm is needed to take continuous improvement to a new level in today’s world. We refer to this paradigm as holistic improvement, and we recommend Lean Six Sigma 2.0 as the best methodology based on this paradigm.

Before describing what we mean by holistic improvement in the next chapter, we briefly review the evolution of Six Sigma here and explain what we mean by Lean Six Sigma 2.0.

Current State of the Art

What exactly do we mean by Lean Six Sigma 2.0? We borrow the version-numbering convention that information technology uses for software releases. The first version of a software system is usually Version 1.0, for obvious reasons. When minor updates are made, such as bug fixes or incremental enhancements, the new version typically is numbered 1.1, or perhaps even 1.0.1 to indicate an even smaller change than 1.1 (https://en.wikipedia.org/wiki/Software_versioning). The version is not numbered 2.0 until it includes a major enhancement, which often involves a total rewrite of the code.

In our view, Six Sigma has undergone considerable evolution during its lifetime. However, these enhancements have been primarily incremental and have not rethought the fundamental paradigm of Six Sigma. We therefore refer to them as Six Sigma 1.1, 1.2, 1.3, and so on, as shown in Table 1.1. We feel that, because of the radical changes in the world since 1987, a rethinking of the fundamental paradigm of Six Sigma is now required. We refer to this approach as Lean Six Sigma 2.0, and we discuss it in more detail in Chapter 2, “What Is Holistic Improvement?”

Let’s take another look at how Six Sigma has evolved since 1987.

Table 1.1 Versions of Six Sigma to Date

| Version | Description |
|---|---|
| 1.0 | Original rollout at Motorola: 1987 |
| 1.1 | GE enhancements: circa 2000 |
| 1.2 | Lean Six Sigma: circa 2005 |
| 1.3 | Lean Six Sigma and Innovation (ambidextrous organizations): circa 2010 |

Versions 1.0 and 1.1

As noted in the previous section, we refer to the original development of Six Sigma at Motorola as Six Sigma 1.0. Recall that this methodology focused on manufacturing and on ways to improve existing processes. GE made several enhancements to Six Sigma, as discussed previously. These included adding a Define stage, developing the DMADV approach for design projects, and broadening the effort beyond manufacturing to include finance, healthcare, and administrative processes. GE referred to applications outside of manufacturing as Commercial Quality (Hahn et al., 2000). Each of these enhancements was noteworthy and important in its own right. However, we could also argue that each enhancement was a logical extension of what came before. Therefore, the GE version of Six Sigma around the turn of the millennium might accurately be referred to as Six Sigma 1.1. Version 1.1 was a significant improvement over Version 1.0, but it was based on the same fundamental paradigm.

Version 1.2: Lean Six Sigma

The first major integration effort occurred when Lean Manufacturing principles were integrated with Six Sigma, creating Lean Six Sigma (George, 2002). Before this integration, proponents of each methodology commonly viewed the other as the enemy. Six Sigma proponents tended to disparage Lean as simplistic and unscientific, and Lean proponents disparaged Six Sigma as expensive and academic, likening it to using dynamite to kill an ant. This competition confused management at many organizations, which struggled to see through the smoke and decide which approaches to use. A major advantage of Lean Six Sigma (which took several years to become popular enough to replace Six Sigma) is that it minimized this competition and put both sets of proponents on the same team. It also broadened the scope of problems that could be tackled effectively by offering a more diverse toolkit. We therefore refer to Lean Six Sigma as Version 1.2, a significant enhancement over Version 1.1.

Version 1.3: Lean Six Sigma and Innovation

More recently, both researchers and practitioners have investigated the relationship between Lean Six Sigma and innovation. Hindo (2007) evaluated issues in Six Sigma deployment at 3M and suggested that Six Sigma and innovation are antagonistic. That is, Hindo contended that although deploying Six Sigma offered important incremental improvements, it would damage creative, innovative cultures, such as the one at 3M, because it was too rigorous and disciplined.

We did not take this contention seriously because, throughout our careers, we have seen that virtually all Six Sigma projects require creative thought to be completed successfully. However, we understand that such a claim needs to be answered through research. Hoerl and Gardner (2010) demonstrated that Lean Six Sigma can actually enhance the innovation of an organization. They pointed out that the scientific method, upon which Lean Six Sigma is based, has sparked creativity and accelerated innovation for centuries. More specifically, they described how business strategy, the generation of ideas based on that strategy, and Six Sigma projects should properly relate to one another. This relationship incorporates both Design for Six Sigma and more traditional Lean Six Sigma projects.

Birkinshaw and Gibson (2004) noted that, to be successful in the long term, organizations need to be operationally efficient (that is, continuously improve existing operations) and also able to innovate to develop new products and services. Doing only one of these well is not sufficient over time. Birkinshaw and Gibson refer to organizations that can both optimize operations (“exploitation”) and innovate (“exploration”) as ambidextrous. Significant research has shown that when Six Sigma is properly implemented (for example, with a good balance of design projects and improvement projects), it does help organizations achieve ambidexterity. In addition, Six Sigma can enhance rather than stifle creativity and innovation (He et al., 2015; Gutierrez et al., 2012; Gutierrez et al., 2016).

We view the effective integration of Lean Six Sigma with creative efforts to innovate new products and services to be a significant step forward in the overall evolution of Lean Six Sigma. Therefore, we refer to this integration that produces ambidextrous organizations as Lean Six Sigma 1.3. Of course, additional skills and tools are needed to innovate effectively. These include the theory of inventive problem solving, which is often referred to in the literature as TRIZ, the transliteration of its original Russian acronym (Altshuller, 1992).

We now consider the limitations of Lean Six Sigma 1.3 and look at a potential new paradigm for overcoming these limitations using a fundamentally new approach to improvement that is suitable for modern times.

The Limitations of Lean Six Sigma 1.3

Lean Six Sigma 1.3 offers many commendable improvements. For example, it covers a diverse array of application areas, from Internet commerce and other high-tech industries to healthcare, finance, and, of course, manufacturing. It has incorporated key Lean principles from the Toyota Production System, such as line of sight and 5S, to drive improvement even before data is collected. Furthermore, research has documented a clearer, more synergistic relationship between Lean Six Sigma and disruptive innovation, and also demonstrated how to use Design for Six Sigma projects to take innovative concepts to market. Despite these advantages, we feel that Lean Six Sigma 1.3 still needs to overcome the following limitations:

Still not appropriate for all problems

Does not incorporate routine problem solving

Does not provide a complete quality management system

Cannot efficiently handle large, complex, and unstructured problems

Does not take advantage of Big Data analytics

Does not address modern risk management issues

In the following subsections, we discuss each limitation in greater detail. In our view, minor adjustments to Lean Six Sigma 1.3 will not address these limitations; instead, a new paradigm is needed. Kuhn (1962) noted that the need for a new paradigm becomes apparent when the list of problems that the existing paradigm cannot solve becomes too large to ignore. We feel that Lean Six Sigma 1.3 has now reached this point.

Still Not Appropriate for All Problems

Six Sigma, even in the form of Lean Six Sigma 1.3, is not the most appropriate approach for all projects. For example, years ago the second author of this book (Hoerl) was asked to help a computer scientist with his Six Sigma project at GE. When Hoerl asked him about the project, he said that it involved installing an Oracle database. Hoerl asked whether he knew how to install an Oracle database, and he replied yes. Hoerl asked whether he had already done this successfully, and the computer scientist again replied yes. Now puzzled, Hoerl asked what problem required a solution. The computer scientist replied that there was no problem to be solved, but his boss had told him to use Six Sigma on this installation, so that is what he was going to do.

This is a classic case of a “solution known” problem (Hoerl and Snee, 2013). We have a problem, but the solution is already known. This does not necessarily mean that the solution is easy to implement—properly installing databases is not trivial. However, there is no need to analyze data to search for a solution. We just need to ensure that the people doing the work have the right skills and experience, and perhaps procedures, to properly implement the known solution. The question of whether the solution is known or unknown is a key consideration in choosing a methodology, as we see in the next chapter.

The key point, we hope, is clear. Six Sigma was not needed, and perhaps not even helpful, for that installation. Of course, some type of formal project management system, and possibly database protocols, were needed to ensure success. But Six Sigma was not needed. Over the years, both of us have had numerous similar conversations with people who were trying to force-fit Six Sigma where it was not needed, and sometimes where it was not appropriate. As this case illustrates, Six Sigma is helpful only for “solution unknown” problems. Simpler methods can address more straightforward problems. These include Work-Out, a team problem-solving method developed at GE (Hoerl, 2008), so-called “Nike projects” (Just Do It!), and “Is–Is Not” analysis (Kepner and Tregoe, 2013), to name just a few. We discuss each of these techniques within the context of a holistic approach to improvement in subsequent chapters.

Of course, integrating Lean into Six Sigma helps avoid force-fitting Six Sigma because Lean might be an appropriate methodology for a given problem when Six Sigma is not. For example, Lean has proven principles that provide excellent guidance on “solution known” problems (Hoerl and Snee, 2013). However, just as Six Sigma is not appropriate for all problems, Lean also is not appropriate for all problems. In short, any time we select the problem-solving methodology before we have clearly documented the problem, we are prone to force-fitting. Shouldn’t we learn about the problem first and only then determine the best approach to find a solution? After all, no one would continue to see a physician who recommends treatment before learning about the patient’s condition.

Does Not Incorporate Routine Problem Solving

Lean Six Sigma 1.3 does not incorporate routine problem solving. Suppose that a manufacturing line begins leaking oil at 3:30 AM. Clearly, this is not the time to put together a Six Sigma team to gather data and study the problem for a few months; someone needs to promptly stop the leak! Similarly, if someone notices mislabeled medication in a pharmacy, we wouldn’t want the pharmacy to put together a Six Sigma team; it needs to identify and remove the mislabeled medication immediately, before anyone receives it.

By “routine problem solving,” we mean the normal, day-to-day problem solving that occurs in all organizations, typically in real time. Of course, some people and some organizations are particularly good at it, and others aren’t. The ideal is to have each employee well trained in how to approach routine problems, diagnose root causes, and identify and test solutions. Employees should also understand when to call for help. Most of the problems faced in the workplace, and even in our private lives, can be solved in a short amount of time with no data or minimal data. In the case of the leaking oil, you would just follow the oil to find the source of the leak.

Of course, some problems are not easily solved on a routine basis. For example, suppose that this is the fifth time this year that one of the machines in the plant has begun leaking oil. Why does this problem keep recurring? Is the fundamental root cause the oil itself, the equipment, the way we are operating the equipment, the way we are maintaining the equipment, or something else? To solve this higher-level problem, a team and some formal methodology (perhaps Six Sigma) are likely needed. The key point is that routine problem solving is an important aspect of continuous improvement; however, for routine problems we typically do not need Lean Six Sigma, nor is there time to conduct a lengthy project. Immediate solutions are needed.

Not a Complete Quality Management System

Fundamentally, Lean Six Sigma 1.3 is a project-based methodology for driving improvement, but it is not a complete quality management system. That is, it does not replace ISO 9000 quality systems or provide the same breadth as national quality awards, such as the Malcolm Baldrige National Quality Award (MBNQA) in the United States. For example, individual Six Sigma projects might lead to calibrating measurement equipment in a lab during the Measure phase, but they would not provide an overall laboratory calibration system. Similarly, one element of the MBNQA addresses strategic planning; however, we do not advise organizations to develop their strategic plan through Six Sigma projects. Of course, we hope that continuous improvement and enabling methods such as Lean Six Sigma are key elements of the strategic plan.

We could give many other examples here. Our point is simply that an organization’s overall quality system should be developed in a top-down manner, based on its philosophy and strategic plan, to help meet business objectives. Six Sigma projects, on the other hand, are narrowly focused on specific problems that have been identified and that typically can be solved in roughly three to six months. An overall quality management system therefore should not be developed from the bottom up, based on a set of individual projects that were chosen for other purposes. If it is, then, by definition, the quality management system is not strategic in nature.

Before describing a recommended paradigm to address the limitations of Lean Six Sigma 1.3, we elaborate on a few related phenomena that we mentioned briefly earlier: large, complex, unstructured problems; the emergence of Big Data analytics; and the increased importance of risk management in today’s world.

Inefficient at Handling Large, Complex, and Unstructured Problems

We noted earlier that, since 1987, there has been a growing awareness that some problems are too large, complex, and unstructured to be solved with traditional problem-solving methods (including Lean Six Sigma). As noted by Hoerl and Snee (2017), applications in such areas as genomics, public policy, and national security often present significant challenges, even in precisely defining the specific problem to be solved. For example, in obtaining and using data to protect national security, we could perhaps develop a system that excels at surveillance and threat identification but that effectively creates a police state, curtailing privacy and individual rights. Few people would consider this a successful or desirable system.

Similarly, the system currently in place for approving new pharmaceuticals in the United States involves a series of clinical trials and analyses guided by significant subject matter knowledge, such as identifying likely drug interactions. No single experimental design or statistical analysis results in a new approved pharmaceutical. Furthermore, the system must balance the need for public safety with the urgent need for new medications to combat emerging diseases such as Ebola or Zika. This problem is complex.

Such problems differ from most of those that can be solved through routine problem solving, or even through Lean Six Sigma. In the following subsections, we briefly discuss some of the important attributes of these types of problems.

Too Large to Tackle with One Method

In terms of size, the problem is simply too large to be solved with any one methodology. Several tools, and perhaps several different disciplines, are required to address the full scope. The problem cannot be resolved in the usual three to six months needed for a Lean Six Sigma project. Hoerl and Snee (2017) gave the example of developing a default prediction methodology to protect a $500 billion portfolio at GE Capital. Several disciplines, including quantitative finance, statistics, operations research, and computer science, were needed to find an effective solution to this problem.

Complex and Challenging

The problem has significant complexity; it is not only technically difficult, but it has many facets (for example, political, legal, or organizational challenges in addition to technical challenges). Kandel et al. (2012) noted the dearth of research on how analytics are actually utilized within an organizational context and pointed out that this is critical to results. Typically, the technical problem cannot be addressed without understanding and addressing the nontechnical challenges. In the case of the default predictor, GE Capital insisted that, no matter how complex the predictor technology was, it had to provide clear and relatively simple advice to portfolio managers, without technical jargon.

Lack of Structure

The problem itself is not well defined, at least not initially. Many of GE’s original Six Sigma projects faced this issue, which led to the addition of the Define step in the DMAIC process. However, large, complex, unstructured problems often have an even greater lack of structure initially, requiring more up-front effort to structure the problem. In the case of the default prediction system, the word default does not have a generally accepted definition within financial circles. For example, Standard and Poor’s (S&P) uses a different definition of default than Dun and Bradstreet (D&B) does. Therefore, the team was asked to predict something that was not even defined.

Data Challenges

Most textbook problems in virtually all quantitative disciplines, from statistics to mechanical engineering to econometrics, come with “canned” data sets. These might, of course, involve real data, but they are typically provided with no effort required to obtain them. Generally, the data is of unquestionable quality, often presented in statistics texts as a “random sample.” Naturally, researchers and practitioners who have to collect their own data understand how challenging it is to obtain high-quality data. Random sampling is an ideal that is rarely accomplished in practice. For many significant problems, the existing data either is wholly inadequate or comes from disparate sources of different quality and quantity. In the default prediction case study, minimal data existed because GE Capital was largely built by acquisition; there was no “master” data set.

Lack of a Single “Correct” Solution

Most textbook problems also have a single correct answer, often given in the appendix. Many real problems also have a single correct answer. Even in online data competitions, such as those on kaggle.com, an objective metric, such as residual standard error when predicting a holdout data set, typically is used to define the “best” model. However, complex problems do not have a “correct” solution that we can look up in the appendix or even a reference text. They are too big, too complicated, and too constrained because of the issues discussed previously. Certainly, some solutions might be better than others, but the problem also could be solved in multiple ways; saying that one particular solution was “correct” or “best” would be virtually impossible.

The Need for a Strategy

Given the issues noted earlier, it is not possible to theoretically derive the correct solution for large, complex, and unstructured problems. Readers with a theoretical mind-set might be frustrated with the inability to find an optimal solution. Conversely, however, working solely by experience (trying to replicate previous solutions, for example) is generally hit-or-miss because each problem has specific complexities that make it different. A unique strategy needs to be developed to attack this specific problem, based on its unique circumstances. Both theory and experience help quite a bit, but an overall strategy that involves multiple tools applied in some logical sequence (and perhaps one that integrates multiple disciplines, especially computer science) is required.

In this sense, we argue that a solution needs to be engineered, using known science, often including statistics, as its components. In our opinion, textbooks and academic courses across quantitative disciplines discuss strategy too little, if at all. Engineering solutions to large, complex, unstructured problems requires a problem-solving mind-set, guided by both theory and experience. A problem-solving mind-set looks for an effective solution, recognizing that an optimal solution does not exist for such problems. As we discuss shortly, this typically requires an engineering paradigm rather than a pure science paradigm.

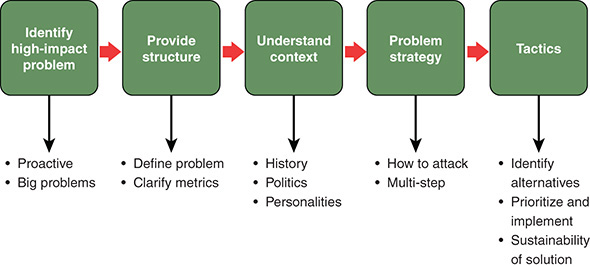

Statistical engineering (Hoerl and Snee, 2017) has been proposed as an overall approach to developing a strategy to attack such problems. Statistical engineering is defined as “the study of how to best utilize statistical concepts, methods and tools, and integrate them with information technology and other relevant disciplines, to achieve enhanced results” (Hoerl and Snee, 2010, p. 12). Note that statistical engineering is not a problem-solving methodology per se, as Lean Six Sigma is, but rather a discipline. However, DiBenedetto et al. (2014) gave a generic statistical engineering framework for attacking large, complex, unstructured problems. Figure 1.1 shows this framework.

Figure 1.1 Framework for attacking large, complex, unstructured problems

In Figure 1.1, note that once the high-impact problem has been identified, it needs to be properly structured. Significant time and effort are typically required to understand the context of the problem. Large, complex problems have defied solution for a reason; a thorough understanding of the context of the problem is critical to finding a solution. By context, we mean such aspects as these:

- The full scope of the problem, including technical, political, legal, and social aspects, to name just a few
- How the problem came into existence in the first place
- What solutions have been attempted previously
- Why these solutions have been inadequate

Clearly, fixing a leak in an oil line does not require understanding this depth of context. However, addressing problems such as the Millennium Development Goals, discussed previously, certainly does. This is another illustration of why the solution needs to be tailored to the problem. Once the context is properly understood, the team is in a position to develop a strategy to attack this particular problem. The unique strategy typically entails several tactics or elements of the overall strategy. We discuss this framework in greater detail in Chapter 3, “Key Methodologies in a Holistic Improvement System.” See DiBenedetto et al. (2014) or Hoerl and Snee (2017) for further details on this framework.

Does Not Take Advantage of Big Data Analytics

Recent advances in information technology (IT) have led to a revolution in the capability to acquire, store, and process data. Data is being collected at an ever-increasing pace through social media, online transactions, and scientific research. According to IBM, 1.6 zettabytes (10²¹ bytes) of digital data are now available. That’s a lot of data: enough to watch high-definition TV for 47,000 years straight (Ebbers, 2013)! Hardware, software, and statistical technologies to store, process, and analyze this data deluge have also advanced, creating new opportunities for analytics.

Early in the new millennium, the book Competing on Analytics (Davenport and Harris, 2007) foretold the potential impact of data analytics in the business world. Around the same time, Netflix announced a $1,000,000 prize for anyone who could develop a model that predicted its movie ratings at least 10 percent better than its existing model (Amatriain and Basilico, 2012). Building on the popularity of this challenge, the website kaggle.com emerged as a host of online data analysis competitions and became what might be called the “eBay of analytics.” Through Kaggle, organizations that lack high-powered analytics teams can still benefit from sophisticated analytics by sponsoring data analysis competitions involving their data.

Further demonstrating the power of data and analytics, in 2011 the IBM computer Watson defeated human champions on the televised game show Jeopardy! Data science has emerged and grown rapidly as a discipline to help address the technical challenges of Big Data (Hardin et al., 2015). In fact, Davenport and Patil (2012) have described data scientists as having the “sexiest job of the 21st century.” Programming languages such as R and Python have grown in popularity, and new methods have been developed to handle the massive data sets that are becoming more common. We’re referring here to computer-intensive methods such as neural networks, support vector machines, and random forests, to name just a few (James et al., 2013).

There is a “dark side” to Big Data analytics, however. As noted by Hoerl et al. (2014), the initial success and growth of Big Data led many to believe that combining large data sets with sophisticated analytics guarantees success. This naïve belief has proven false, with several highly publicized failures of Big Data. For example, Google developed a model to rapidly predict flu outbreaks based on people’s Google searches for flu-related terms such as flu, fever, and sneezing (Lazer et al., 2014). This model, which appeared to detect flu outbreaks faster than hospitals did, was an early poster child for the power of Big Data analytics. However, Lazer et al. pointed out that the predictive capability of the Google model has deteriorated significantly since its original development, to the point that a simple weighted moving average now performs better.

Our point is not to disparage the potential of Big Data analytics, but rather to point out that coding and sophisticated analytics have not replaced the need for critical thinking and fundamentals. Studying coding and algorithm development is important and quite useful in practice. However, such study does not replace study of the problem-solving process, statistical engineering, or continuous improvement principles. For example, as we noted in our earlier discussion of large, complex, unstructured problems, such problems have no optimal solutions. Therefore, we cannot develop an optimal algorithm to solve them; an overall, sequential approach involving several disciplines is typically required. To be sure, computer science is a key discipline that needs to be involved, but it is not the only one needed.

Cathy O’Neil, a self-described data scientist with a Ph.D. in mathematics from Harvard, wrote in more detail about the dark side of Big Data analytics in her uniquely named book Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy (O’Neil, 2016). Her fundamental point is not that data analytics or large data sets are inherently bad, but rather that programmers who are not properly trained in the fundamentals of modeling (including the limitations and caveats of models) are rapidly producing “opaque, unregulated, and uncontestable” models that are often blindly accepted as valid. These models, some of them invalid, are often applied to important decisions such as approving loans, granting parole, and evaluating employees or job candidates.

Big Data and data science are frequently discussed in the context of analytics, statistics, machine learning, or computer science. In our experience, however, Big Data is rarely discussed in the context of continuous improvement. The reasons for this omission are not clear to us. Certainly, massive data sets provide unique opportunities to make improvements, assuming, of course, that they contain the right data to solve the problem at hand and that the resulting models are appropriately vetted. Furthermore, the newer, more sophisticated analytics mentioned earlier provide additional options to consider when attacking problems, particularly the large, complex, and unstructured problems that cannot be easily solved with traditional methods. We feel strongly that Big Data analytics provides a significant opportunity to expand both the scope and the impact of continuous improvement initiatives.

Does Not Address Modern Risk Management Issues

As noted previously, the world certainly seems to be a more dangerous place than in the past, especially for business. Clearly, concerns over terrorism are not restricted to military or government institutions. For example, could Walt Disney ever have imagined that families coming to see Mickey Mouse would need to go through metal detectors and security checks? Yet they do, and for good reason. Financial institutions, energy companies, and businesses that perform medical research on animals are frequent targets of threats of violence.

Of course, terrorism is not the only cause for concern from a risk management point of view. Identity theft is now a billion-dollar criminal enterprise in the United States alone. In many cases, the cost of illegal transactions, whether they are purchases with fake credit cards, fraudulent loans, or some other means, is borne not by the individual whose identity was stolen, but instead by the business that provided the loan or guaranteed the credit card purchase.

A more recent phenomenon is the hacking of computer systems to obtain confidential information. As noted previously, the hack of Target’s credit card database not only allowed 40 million credit card numbers to be stolen but also did irreparable harm to Target’s image. Beyond credit card numbers, organizations such as WikiLeaks (wikileaks.org) are more than eager to obtain and publish damaging information about businesses, including email exchanges, financial reports, and confidential legal documents. WikiLeaks generally obtains its material from other sources. Edward Snowden’s disclosure of top-secret information concerning the National Security Agency (NSA) (https://en.wikipedia.org/wiki/Edward_Snowden) is perhaps the most prominent example of such a leak.

Along the same lines, confidential emails among members of the Democratic National Committee (DNC) were hacked in 2016 and published on WikiLeaks. This caused significant embarrassment for the DNC, including the revelation of efforts by Chair Debbie Wasserman Schultz to favor Hillary Rodham Clinton’s campaign at the expense of Bernie Sanders’s. On July 25, 2016, Wasserman Schultz resigned her position because of the revelations on WikiLeaks. Considerable drama surrounded the original DNC hack, including accusations that the Russian government orchestrated it in an effort to improve Donald Trump’s chances in the U.S. presidential election (Sanger and Savage, 2016).

Clearly, businesses in the twenty-first century face unique security challenges, in addition to traditional business risks such as major lawsuits, environmental disasters, and catastrophic product failures. Therefore, risk management has become an even more critical business priority. What methods and approaches should be used to manage these types of risks, both traditional and new? We argue that the answer to this question is not obvious. However, it must be answered, because the cost of failure in risk management is too high. Therefore, we consider risk management to be an integral element of a holistic improvement system.

A New Paradigm Is Needed

The preceding discussion shows that Lean Six Sigma, even in its more modern form of Version 1.3, is not sufficient to address today’s business improvement needs. We have reached the point described by Kuhn (1962): too many problems remain unaddressed by Lean Six Sigma 1.3 to be ignored. For example, Lean Six Sigma was never intended as a means of managing modern business risks, integrating the power of Big Data analytics, or addressing large, complex, unstructured problems that cannot be solved in three to six months.

- Lean Six Sigma does not incorporate simpler methods, such as Work-Out or Nike projects.
- Lean Six Sigma does not guide routine process control efforts or provide an overall quality management system.
- A new paradigm, or way of thinking about improvement, is required to make significant progress going forward.

Fortunately, Six Sigma is excellent at what it was designed to do: solve medium-size “solution unknown” problems. Version 1.3 also incorporates innovation efforts, new product and process design, and Lean concepts and methods. Furthermore, the supportive infrastructure developed for Six Sigma is, in our view, the best and most complete continuous improvement infrastructure developed to date (Snee and Hoerl, 2003). Therefore, if this same infrastructure can be applied to a holistic version of Lean Six Sigma that addresses the limitations noted earlier, the resulting improvement system would be the most complete to date.

As already noted, addressing all these limitations would not be a minor upgrade, but rather would require a fundamental redesign based on a much broader paradigm. In other words, it would be Version 2.0, not simply Version 1.4. What paradigm would be required to develop Lean Six Sigma 2.0? In our view, the answer is clear: We need a holistic paradigm of improvement. By holistic, we mean a system that is not based on a particular method, whether it is Six Sigma, Lean, Work-Out, or some other method. Development would need to start with the totality of improvement work needed and then create a suite of methods and approaches that would enable the organization to address all the improvement work identified.

Note that a holistic paradigm would reverse the typical way of thinking about improvement. Traditionally, books, articles, and conference presentations on improvement focus on a particular method and promote that method over others, at least for specific types of problems. For example, it is easy to find books on Six Sigma, Lean, or TRIZ. However, very few, if any, books focus on general improvement. With a holistic paradigm, on the other hand, the focus is not on methods, but on the improvement work—that is, on the problems to be solved. Only after the problems have been identified and diagnosed are methods brought into discussion. The individual methods can then be applied to the specific problems for which they are most appropriate. Holistic improvement is essentially tool agnostic: The tools are hows, not whats. Improvement is our what, the focus of our efforts.

References

Altshuller, G. (1992) And Suddenly the Inventor Appeared. Worcester, MA: Technical Innovation Center.

Amatriain, X., and J. Basilico. (2012) “Netflix Recommendations: Beyond the 5 Stars, Part I.” Netflix Tech Blog, April 6. Available at http://techblog.netflix.com/2012/04/netflix-recommendations-beyond-5-stars.html.

Birkinshaw, J., and C. Gibson. (2004) “Building Ambidexterity into an Organization.” MIT Sloan Management Review 45, no. 4: 47–55.

Davenport, T. H., and J. G. Harris. (2007) Competing on Analytics: The New Science of Winning. Cambridge, MA: Harvard Business Review Press.

Davenport, T. H., and D. J. Patil. (2012) “Data Scientist: The Sexiest Job of the 21st Century.” Harvard Business Review (October): 70–76.

DiBenedetto, A., R. W. Hoerl, and R. D. Snee. (2014) “Solving Jigsaw Puzzles.” Quality Progress (June): 50–53.

Ebbers, M. (2013) “5 Things to Know About Big Data in Motion.” IBM developerWorks blog. Available at www.ibm.com/developerworks/community/blogs/5things/entry/5_things_to_know_about_big_data_in_motion?lang=en.

General Electric Company. (1999) 1998 Annual Report. Available at http://www.annualreports.com/HostedData/AnnualReportArchive/g/NYSE_GE_1998.pdf.

George, M. L. (2002) Lean Six Sigma: Combining Six Sigma Quality with Lean Production Speed. New York: McGraw-Hill.

Gutierrez, G., L. J. Bustinza, and V. Barales. (2012) “Six Sigma, Absorptive Capacity and Organization Learning Orientation.” International Journal of Production Research 50, no. 3: 661–675.

Gutierrez, G., J. Leopoldo, V. Barrales Molina, and J. Tamayo Torres. (2016) “The Knowledge Transfer Process in Six Sigma Subsidiary Firms.” Total Quality Management & Business Excellence 27, no. 6: 613–627.

Hahn, G. J., N. Doganaksoy, and R. W. Hoerl. (2000) “The Evolution of Six Sigma.” Quality Engineering 12, no. 3: 317–326.

Hardin, J., R. Hoerl, N. J. Horton, D. Nolan, B. Baumer, O. Hall-Holt, P. Murrell, R. Peng, P. Roback, D. Temple Lang, and M. D. Ward. (2015) “Data Science in Statistics Curricula: Preparing Students to ‘Think with Data.’” The American Statistician 69, no. 4: 343–353.

Harry, M., and R. Schroeder. (2000) Six Sigma: The Breakthrough Management Strategy Revolutionizing the World’s Top Corporations. New York: Currency Doubleday.

He, Z., Y. Deng, M. Zhang, X. Zu, and J. Antony. (2015) “An Empirical Investigation of the Relationship Between Six Sigma Practices and Organizational Innovation.” Total Quality Management and Business Excellence (October): 1–22.

Hindo, B. (2007) “3M’s Innovation Crisis: How Six Sigma Almost Smothered an Idea Culture.” Business Week (June 11): 8–14.

Hoerl, R. (2008) “Work Out.” In Encyclopedia of Statistics in Quality and Reliability, edited by F. Ruggeri, R. Kenett, and F. W. Faltin, 2103–2105. Chichester, UK: John Wiley & Sons.

Hoerl, R. W., and M. M. Gardner. (2010) “Lean Six Sigma, Creativity, and Innovation.” International Journal of Lean Six Sigma 1, no. 1: 30–38.

Hoerl, R. W., and R. D. Snee. (2010) “Moving the Statistics Profession Forward to the Next Level.” The American Statistician 64, no. 1: 10–14.

Hoerl, R. W., and R. D. Snee. (2013) “One Size Does Not Fit All: Identifying the Right Improvement Methodology.” Quality Progress (May 2013): 48–50.

Hoerl, R. W., and R. D. Snee. (2017) “Statistical Engineering: An Idea Whose Time Has Come.” The American Statistician 71, no. 3: 209–219.

Hoerl, R. W., R. D. Snee, and R. D. De Veaux. (2014) “Applying Statistical Thinking to ‘Big Data’ Problems.” Wiley Interdisciplinary Reviews: Computational Statistics (July/August): 222–232. (doi: 10.1002/wics.1306)

James, G., D. Witten, T. Hastie, and R. Tibshirani. (2013) An Introduction to Statistical Learning. New York: Springer.

Jones, M. H. (2004) “Six Sigma…at a Bank?” Six Sigma Forum Magazine (February): 13–17.

Kandel, S., A. Paepcke, J. M. Hellerstein, and J. Heer. (2012) “Enterprise Data Analysis and Visualization: An Interview Study.” IEEE Transactions on Visualization and Computer Graphics 18, no. 12: 2917–2926.

Kepner, C. H., and B. B. Tregoe. (2013) The New Rational Manager. New York: McGraw-Hill.

Kuhn, T. S. (1962) The Structure of Scientific Revolutions. Chicago: University of Chicago Press.

Lazer, D., R. Kennedy, G. King, and A. Vespignani. (2014) “The Parable of Google Flu: Traps in Big Data Analysis.” Science 343: 1203–1205.

O’Neil, C. (2016) Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown Publishing Group.

Sanger, D. E., and C. Savage. (2016) “U.S. Accuses Russia of Directing Hacks to Influence the Election.” New York Times, October 6: A1.

Snee, R. D., and R. W. Hoerl. (2003) Leading Six Sigma: A Step-by-Step Guide Based on Experience with GE and Other Six Sigma Companies. Upper Saddle River, NJ: Financial Times/Prentice Hall.

Snee, R. D., and R. W. Hoerl. (2005) Six Sigma Beyond the Factory Floor: Deployment Strategies for Financial Services, Health Care, and the Rest of the Real Economy. Upper Saddle River, NJ: Financial Times/Prentice Hall.