In the most basic words, topic modeling is the process of finding out the hidden topics that exist in any document. We will try to explain the basic concept of topic modeling by using an analogy of a lavish buffet spread.

Suppose you go to a wedding that features a large number of items from various cuisines. You, being an absolute foodie, go to all the counters and collect a very large number of items at your table (with the obvious disapproving looks of other guests). Now one of your other friends arrives at the table and takes a look at the large number of food items at your table. He tries to guess the various cuisines on offer from the snapshot of items you have collected. He is able to do so because there is an association between the food item and the cuisine it comes from. For example, if you have various types of pasta and sea foods on your table, he could guess that a major cuisine at the buffet was Italian. This is very much similar to the thought process of exploring topic modeling.

We assume that, while the content was being developed, a news article in our case, the writer had some major themes in his mind. Each of these themes can be linked to a collection of words. The process of writing then becomes deciding on the themes, selecting words from those themes, and composing those using rules of grammar and language. The end result of this process is our article.

Topic modeling assumes that there is a hidden structure in the data, which is a direct result of the various themes the writer had selected at the time of its writing (as explained previously). Then, we assume that every topic is identified by a distribution over a collection of words. Topics have different words with associated probabilities, and these probabilities can be used to distinguish topics. For example, a topic about cricket will have higher probabilities for words such as "bat", "ball", etc., whereas a topic about finance will have higher probabilities for words such as "money", "stocks", and so on.

For the process to find out these topics, along with the words associated with each topic, we will follow the following set of steps:

- We assign topics with a random set of words for the initiation.

- We go through the collection word by word and reassign them to topics in which similar words occur. This reassignment is the most mathematical and tough to understand part of the algorithm. We can demonstrate this with an example. Suppose we assign a topic randomly and then encounter the word "stock". Now, in the random collection of topics we will have some document in which the word "stock" already appears. So, initially, we can assign the word "stock" to a similar topic to that of the other document containing "stock".

- We reiterate the process and keep reassigning words using similar logic. The actual logic is a bit of advanced math and is hence excluded from this book.

- Once we reach a point where the reassignment of words to different topics doesn't change our overall topic model distribution, we break our process.

So, this completes our basic introduction to topic modeling. Please bear in mind that this is as vanilla as it gets. The interested reader can refer to the many excellent papers on the topic, including the one introducing Latent Dirichlet Allocation (LDA) by David Blei, Andrew Ng and Michael Jordan.

In our second use case for textual data, we plan to perform topic modeling on an interesting collection of news articles. We all know that in the last US presidential election the victory of Donald Trump was a surprising event for almost all of the world. There were three major events in his election campaign:

- Official announcement of his candidacy: June 16, 2015

- Confirmation of Mr. Trump as the republican candidate: July 16, 2016

- Election date: November 8, 2016

We want to collect news articles written about Mr. Trump in the opinion section of The Guardian in two phases:

- Phase 1: June 16, 2015–July 16, 2016

- Phase 2: July 17, 2016–November 8, 2016

Once we have collected the data for these two periods, we will try to build topic models on both. This will help us to investigate whether the major topics of discussion changed or not in the course of Mr. Trump's campaign.

For the topic modeling exercise we have planned, we will extract news articles in the different time periods. For once in our journey, the data extraction part is a little more straightforward, mostly due to the extensive work we have done on developing the data extraction pipeline in the previous section. The only part of the process that we have to adapt for this section is the query and the date range part of the query. Once we have made the necessary changes to the API endpoint, we can use the same three functions we developed in the last section for the data extraction. We will give the changed API end point and then the function calls that are required for the data extraction:

endpoint_url = http://content.guardianapis.com/search?from-date=2016-04-01&to-date=2016-07-01§ion=commentisfree&page=1&page-size=200&q=brexit&api-key=xxxxxxxx # Extract all links data frame links_df <- extract_all_links() # Seperate the links from the data frame links <- links_df[,"url"] # Get me all the data data_df <- extract_all_data(links)

This will get us all the data for a particular date range. As our analysis will deal with finding topics in different time periods, we will have to execute the same code with appropriate values for the from-date and to-date sections. Once we have extracted all the textual data for all time intervals we can start with our analysis.

As always, we will start with some basic descriptive analysis because we know that starting simple is the right start for all analytics use cases. The first article of interest is usually the distribution of articles. Our first time interval starts from the point where Mr. Trump officially announced his candidacy for the post of president and ends when he was officially selected by the Republican Party as its candidate. Our second time interval starts from his official selection as their candidate and ends just before when the results were announced. We are interested to see if there is a stark difference in the average number of total articles in these two time periods.

The following code snippet will do the task of creating this summarization for the two phases and plot the result for us. We will also create some date-type columns as we did in the last section:

trump_phase1[,'time_interval'] <- 'Phase 1'

trump_phase2[,'time_interval'] <- 'Phase 2'

trump_df <- rbind(trump_phase1,trump_phase2)

articles_df <- trump_df

for (i in 1: nrow(articles_df)){

m <- regexpr("\d{1,2} \w{3,9} \d{4}", articles_df[i, "content"], perl=TRUE)

if(m !=-1){

articles_df[i,"article_date"] <- regmatches(articles_df[i, "content"],m)

}

}

articles_df[,"article_date_formatted"] <- as.Date(articles_df[,"article_date"],"%d %B %Y")

articles_df[,"date_year_month"] = as.yearmon(articles_df[,"article_date_formatted"])

articles_across_phases <- articles_df %>%

group_by(time_interval) %>%

summarise(average_article_count = n()/n_distinct(date_year_month))

p <- ggplot(data=articles_across_phases, mapping=aes(x=time_interval, y=average_article_count)) + geom_bar(aes(fill = time_interval), stat = "identity")

p <- p + theme_bw() + guides(fill = FALSE) +

theme(axis.text.x = element_text(size = 15)) +

ylab("Mean number of articles") + xlab("Phase") +

ggtitle("Average article count across phases") + theme(strip.text = (element_text(size = 15)))

print(p)The graph generated by the code snippet is given here. The result is as we would expect: the average number of articles goes way up when Mr. Trump is confirmed by the Republican Party as their official candidate:

Average articles across phases

Another interesting descriptive analysis is to take a look at the average number of words per article and see how that has changed as we go along the timeline. This will give us a feel of how much coverage was given to Mr. Trump by the writers of the Opinion section of The Guardian. The following code will generate the chart that we want to observe:

trump_wc <- Corpus(VectorSource(articles_df[,"content"]))

temp_articles <-tm_map(trump_wc,content_transformer(tolower))

dtm <- DocumentTermMatrix(temp_articles)

word_count <- rowSums(as.matrix(dtm))

articles_df[,"word_count"] <- word_count

wordcount_across_time <- articles_df %>%

group_by(date_year_month) %>%

summarise(average_article_count = mean(word_count))

ggplot(data=wordcount_across_time, aes(x=as.Date(date_year_month), y=average_article_count)) +

geom_line(colour="orange", size=1.5) +

ylab("Average word count") + xlab("Month - Year") +

ggtitle("Word count across the time line")+ theme_bw() +

scale_x_date(date_labels = "%b %Y",date_breaks = "2 month") +

geom_vline(xintercept = as.numeric(as.Date("2016-07-01")), linetype=4)The image generated by the preceding code snippet is given here. We have marked the month of the confirmation of Mr. Trump's candidacy with a vertical line so that we are able to clearly differentiate the average word count before and after that event:

Time variation of article word count

We observe that once the candidacy was confirmed the word count has a slight upward swing. Immediately after the confirmation there is a stark rise in the average word count, which probably reflects the in-depth discussion of Mr. Trump once it was confirmed that he was officially in the running for the title of Most Powerful Person in the World. This is quite expected and we assume this pattern to be consistent across other news sources too.

We will separate our data into the appropriate phases, and perform the cleaning and pre-processing tasks for both of those DataFrames. Once we have cleaned and pre-processed the data for both the phases, we will fit topic models to both of them and try to analyze the results of those models.

The first step in any data-mining task is to perform some data-cleaning tasks. The specific methods selected for cleaning will often depend on the nature of the data. We will perform a few very basic steps to achieve the required sanity of our data. The following code will perform the basic cleaning tasks for us. The code is commented to give the function that we are performing. We have already explained the logic of these functions in Chapter 2, Twitter – What's Happening with 140 Characters, and the user can refer to it for more details:

articles_phase1 <- articles_df %>% filter(time_interval == "Phase 1")

articles_phase1 <- Corpus(VectorSource(articles_phase1[,"content"]))

# Convert all text to lower case

articles_phase1 <-tm_map(articles_phase1,content_transformer(tolower))

# Remove all punctuations from the text

articles_phase1 <- tm_map(articles_phase1, removePunctuation)

# Remove all numbers from the text

articles_phase1 <- tm_map(articles_phase1, removeNumbers)

# Remove all english stopwords from the text

articles_phase1 <- tm_map(articles_phase1, removeWords, stopwords("english"))

# Remove all whitespaces from the text

articles_phase1 <- tm_map(articles_phase1, stripWhitespace)The most important decision to be taken at this stage is the representation of the documents we want to use. This numerical representation is used by text-mining algorithms. The simplest representation is the bag-of-words representation. In this representation, we find out the total words in the corpus, and each document is represented as the numerical vector of its constituent words.

Here is a very simple example of a term matrix. Suppose we have two documents in the corpus, as given here:

- Document 1: Red roses

- Document 2: Black cloud

For this simple document corpus, the document term matrix will be as given here:

Red

Roses

Black

Cloud

Document 1

1

1

0

0

Document 2

0

0

1

1

Before we convert our text data into such a representation, we will also stem the data; that is, we will reduce all the words to their base forms. Once we have completed these two steps, we will have a representation of our document, which we can then use for topic modeling. The following code will perform these two actions:

# Stem all word in the text articles_phase1 <- tm_map(articles_phase1,stemDocument) # Creating a Document term Matrix dtm_trump_phase2 <- DocumentTermMatrix(articles_phase1)

Please note that we will not include the code for cleaning and processing the second phase of articles. The reader can easily do this themself using the preceding sequence of code.

Once we are done with both of these two tasks, we can proceed with our topic modeling task. We will be using the topicmodels package to fit one of the most famous topic models, LDA, using an algorithm called Gibbs sampling. The process of fitting a topic model onto our dataset is quite straightforward. It can simply be achieved by calling the LDA function of the package. The following code will generate the topic models for both phases of our data. First, we will analyze the results that we get out of our initial models and then we will discuss what the tuning process may look like if the reader is looking for a more involved reiteration of the process:

burnin <- 4000

iter <- 200

thin <- 500

seed <-list(2003,5,63,100001,765)

nstart <- 5

best <- TRUE

#Number of topics

k <- 5

#Run LDA using Gibbs sampling

ldaOut <-LDA(dtm_trump_phase1,k, method="Gibbs",

control=list(nstart=nstart, seed = seed, best=best, burnin = burnin, iter = iter))To develop our model, we set some parameters (mostly defaults from the vignette of the package) and then call the LDA function with the method set as "Gibbs". This selects the particular model-fitting procedure from the set of algorithms that can be used to perform the task. Another important parameter, and also a limitation of LDA, is the number of topics value. It is unlikely that you will be able to know how many types of topics there are in the corpus, so this value is often selected by the practitioners on the basis of domain-knowledge-based intuition. Once we get the initial results, we can go ahead and try different values of this parameter to get better topics.

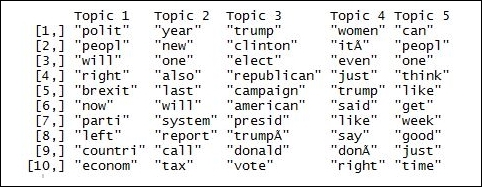

The output of the preceding function will include the probability of each topic for every document in the corpus and the terms associated with each of the topics, among other things. Now comes the tricky part, which makes topic modeling useful but slightly tough to use. Before we come to that, let's see what the top 10 terms associated with each of the topics are for both our phases. The following code will generate those terms:

ldaOut.terms <- as.matrix(terms(ldaOut,10)) ldaOut.terms

The output of the code is given in the following image:

Topics in phase 1

The output will not give us very identifiable topics by itself. It will help us with the terms that can be associated with the topics, and then the user can make a call and label the topic accordingly. For example, it is tough to label Topic 1 here, but we can see common themes in the other topics. Topic 5 probably deals with Mr. Trump's major promotion theme. Topic 2 can be termed Mr. Trump's comparison to the opponent. Topic 3 is again tough to classify, but Topic 4 can be attributed to Mr. Trump's appeal to the voters. This is a very subjective task and hence, although the procedure is very powerful, it is far from a magic wand.

Let us complete this discussion by taking a look at the topics from phase 2. The following image gives the similar terms output for the second phase of articles:

Topics in phase 2

The topics in the second phase seem a little more defined. We still have some similar topics to the first phase but the biggest difference is the terms for Topic 1. We clearly see that this topic deals with some policy-related questions. Also gone is the topic from phase 1 that dealt with some controversial topics (refer to Topic 5 in the earlier image). The story it paints is an interesting one. Post the confirmation of his candidacy, the opinion writers shifted to focus on the policies of Mr. Trump, whereas earlier the focus was not so much on those issues. This capability of topic modeling is what makes it a powerful tool for analyzing a large corpus of textual data.

The results of our primitive experiments are quite interesting. The reader can go back and tinker with the process to arrive at better/different results. There are broadly two ways in which we can achieve a little more refinement of the results:

- Based on the terms we observe in the topics, we can find words that are not very helpful. For example, words such as year can be safely removed from the corpus. This may give us better structured topics.

- Another way to modify the process is to fine tune the algorithm aspects. This is an area that usually requires a very good understanding of the algorithm. But we can also achieve a better set of results by tinkering with the parameter space of the algorithm. You can refer to the document for the

topicmodelspackage for experimenting with those parameters (https://cran.r-project.org/web/packages/topicmodels/topicmodels.pdf).

As with our other modeling-related tasks, we will not go into the detailed process, but will give the reader a gentle introduction that can serve as a starting point for the future endeavors of the users.