There aren't many devices released these days that don't feature a touch screen. Most new devices have adopted this as the primary input method and have dropped physical buttons almost entirely.

In Marmalade we detect touch screen events using the s3ePointer API, which I have to admit is perhaps not the most obvious name for an API that handles touch screen input. To use this API in our own program we just need to include the s3ePointer.h file.

The reason for this slightly bizarre naming is that when this API was first developed, touch screens were not commonplace. Instead, some devices had little joystick-style nubs that were able to move a pointer around the screen, much like a mouse on a computer.

Because touch screen input is primarily concerned with a screen coordinate, and because a device with both touch screen and pointer inputs seemed unlikely, the Marmalade SDK simply adapted the existing s3ePointer API to accommodate touch screens as well. After all, your finger or stylus is effectively a pointer anyway.

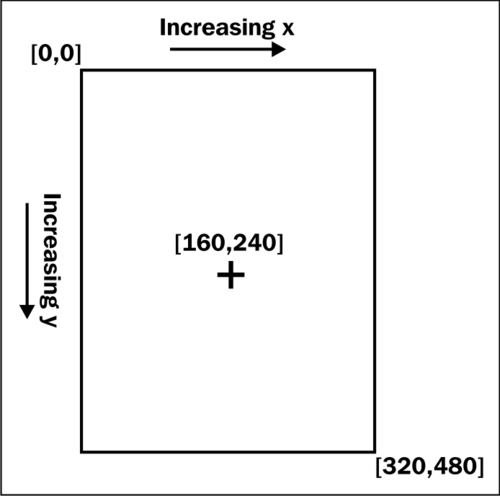

For the purpose of this chapter, whenever we talk about a position being "pointed at", we mean either an on-screen cursor has been moved to that position or a touch screen has had a contact made at that position. Positions are always returned as pixel positions relative to the top-left corner of the screen, as shown in the following diagram that shows what to expect on a device with a portrait HVGA screen size, such as a non-retina display iPhone:

In the following sections, we will learn how to discover the capabilities available for use on the device we are running on and how to handle both single and multi-touch screens.

We use the function s3ePointerGetInt to determine the properties of the hardware we are running on. We pass in one of the values in the following table, and we can then use the result to tailor our input methodology accordingly.

| Property | Description |
|---|---|
| S3E_POINTER_AVAILABLE | Returns 1 if pointer input is available on this device, otherwise returns 0. |
| | If the system has some kind of mouse pointer-like cursor displayed on screen, this property will return 1 if the pointer is currently visible, otherwise it returns 0. |
| S3E_POINTER_TYPE | Returns the type of pointer we have at our disposal. See the next sub-section for more information on this. |
| S3E_POINTER_STYLUS_TYPE | Returns the type of stylus our device uses. See the next sub-section for more information on this. |
| S3E_POINTER_MULTI_TOUCH_AVAILABLE | Returns 1 if the device supports multi-touch (being able to detect more than one press on the touch screen at a time), otherwise returns 0. |

For most game code, it is usually enough to use the S3E_POINTER_AVAILABLE property to check that pointer input is available at all, and then the S3E_POINTER_MULTI_TOUCH_AVAILABLE property to configure our input methodology appropriately.

When supplying the property type S3E_POINTER_TYPE to s3ePointerGetInt, the return value is one from the s3ePointerType enumeration.

| Return Value | Description |
|---|---|
| S3E_POINTER_TYPE_INVALID | Invalid request. The most likely cause is that the s3ePointer API is not available on this device. |
| S3E_POINTER_TYPE_MOUSE | Pointer input is coming from a device that features an on-screen cursor to indicate position. The cursor may be controlled by a mouse or some other input device, such as a joystick. |
| S3E_POINTER_TYPE_STYLUS | Pointer input is from a stylus-based input method, most likely a touch screen of some sort. |

In most cases this distinction is not that important, but it can matter if you need to track the movement of the pointer.

With a mouse, our code will receive events whenever the pointer is moved across the screen, whether a mouse button is held or not. On a touch screen, we will obviously only receive movement events when the screen is being touched.

If we use s3ePointerGetInt with the property S3E_POINTER_TYPE and get the return value S3E_POINTER_TYPE_STYLUS, we can interrogate a little further to find out what type of stylus we will be using by calling s3ePointerGetInt again with the property S3E_POINTER_STYLUS_TYPE. The possible return values are shown in the following table:

| Return Value | Description |
|---|---|
| S3E_STYLUS_TYPE_INVALID | Call was invalid, most likely because we are not running on hardware that uses a stylus. |
| S3E_STYLUS_TYPE_STYLUS | Inputs are made by touching a stylus to the input surface. |
| S3E_STYLUS_TYPE_FINGER | Inputs are made by touching a finger to the input surface. |

This is probably not a distinction that we will need to worry about in most cases, but it might be useful to know so that games can be made more forgiving about inputs when they are made with a finger, since a stylus has a much smaller contact surface and should therefore allow for a far more accurate input.
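As an illustration of being more forgiving with finger input, here is a minimal sketch of a hit test that widens a button's touchable area when the device reports finger input. The `InputKind` enum, the `Rect` type and the 4 and 16 pixel margins are hypothetical choices made for this example; in a Marmalade program the kind would be derived from the S3E_POINTER_STYLUS_TYPE result.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the stylus type reported by the device. */
typedef enum { INPUT_STYLUS, INPUT_FINGER } InputKind;

/* Hypothetical on-screen button, in pixels relative to the top-left corner. */
typedef struct { int x, y, width, height; } Rect;

/* Extra margin (in pixels) around a button that still counts as a hit.
   The values are illustrative; tune them for the target screen density. */
static int HitMargin(InputKind kind)
{
    return (kind == INPUT_FINGER) ? 16 : 4;
}

/* Returns true if (px, py) lies inside the button expanded by the margin. */
bool HitTest(const Rect* r, int px, int py, InputKind kind)
{
    int m = HitMargin(kind);
    return px >= r->x - m && px < r->x + r->width + m &&
           py >= r->y - m && py < r->y + r->height + m;
}
```

A point 10 pixels left of a button misses with a stylus but still registers with a finger, which is the kind of leniency the paragraph above suggests.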

In order to keep the s3ePointer API up-to-date with current touch screen inputs, it is necessary to call the s3ePointerUpdate function once per frame. This will update the cache of the current pointer status that is maintained within the s3ePointer API.

If the s3ePointer API is available on our device, we are guaranteed to be able to detect and respond to the user touching the screen and moving their stylus or finger about, or moving an on-screen cursor around and pressing some kind of selection button.

Even if our hardware supports multi-touch, we can still make use of single touch input if our game has no need to know about multiple simultaneous touch points. This may make it a little simpler to code our game, as we don't need to worry about issues such as two buttons on our user interface being pressed at the same time.

As with key input, we can choose to use either a polled or callback-based approach.

We can determine the current on-screen position being pointed at (either by the on-screen cursor or a touch on the screen) by using the s3ePointerGetX and s3ePointerGetY functions, which will return the current horizontal and vertical pixel positions being pointed at.

In the case of a touch screen, the current position returned by these functions will be the last known position pointed at if the user is not currently making an input. The default value before any touches have been made will be (0,0)—the top-left corner of the screen.

To determine whether an input is currently in progress, we can use the function s3ePointerGetState, which takes an element from the s3ePointerButton enumeration and returns a value from the s3ePointerState enumeration. The following table shows the values that make up the s3ePointerButton enumeration:

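| Value | Description |
|---|---|
| S3E_POINTER_BUTTON_SELECT | Returns the status of either the left mouse button or a touch screen tap. |
| S3E_POINTER_BUTTON_LEFTMOUSE | An alternative name for S3E_POINTER_BUTTON_SELECT. |
| S3E_POINTER_BUTTON_RIGHTMOUSE | Returns the status of the right mouse button. |
| S3E_POINTER_BUTTON_MIDDLEMOUSE | Returns the status of the middle mouse button. |
| S3E_POINTER_BUTTON_MOUSEWHEELUP | Used to determine if the user has scrolled the mouse wheel upwards. |
| S3E_POINTER_BUTTON_MOUSEWHEELDOWN | Used to determine if the user has scrolled the mouse wheel downwards. |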

The next table shows the members of the s3ePointerState enumeration, which indicate the current status of the requested pointer button or touch screen tap:

| Value | Description |
|---|---|
| S3E_POINTER_STATE_UP | The button is not depressed or contact is not currently made with the touch screen. |
| S3E_POINTER_STATE_DOWN | The button is being held down or contact has been made with the touch screen. |
| S3E_POINTER_STATE_PRESSED | The button or touch screen has just been pressed. |
| S3E_POINTER_STATE_RELEASED | The button or touch screen has just been released. |
| S3E_POINTER_STATE_UNKNOWN | Current status of this button is not known. For example, the middle mouse button status was requested but there is no middle mouse button present on the hardware. |

With this information we now have the ability to track the pointer or touch screen position and determine when the user has touched or released the touch screen or pressed a mouse button.
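To show how these states fit together, here is a minimal sketch of a polled drag tracker that records where a touch started and how far it has moved. The `PtrState` enum is a hypothetical local copy of the s3ePointerState values so the sketch compiles without the SDK headers, and `DragTracker`/`UpdateDrag` are illustrative names; in a Marmalade program you would feed it the results of s3ePointerGetState(S3E_POINTER_BUTTON_SELECT), s3ePointerGetX and s3ePointerGetY each frame, after calling s3ePointerUpdate.

```c
#include <stdbool.h>

/* Hypothetical mirror of the s3ePointerState values described above. */
typedef enum {
    PTR_UP, PTR_DOWN, PTR_PRESSED, PTR_RELEASED, PTR_UNKNOWN
} PtrState;

/* Hypothetical per-frame record of a drag in progress. */
typedef struct {
    bool active;          /* true between PRESSED and RELEASED */
    int  startX, startY;  /* where the touch began */
    int  dx, dy;          /* offset from the start position */
} DragTracker;

/* Call once per frame with the polled state and position.
   Returns true on the frame the drag ends. */
bool UpdateDrag(DragTracker* d, PtrState state, int x, int y)
{
    switch (state)
    {
    case PTR_PRESSED:                 /* touch just started: remember origin */
        d->active = true;
        d->startX = x;
        d->startY = y;
        d->dx = d->dy = 0;
        return false;
    case PTR_DOWN:                    /* still touching: update the offset */
        if (d->active) { d->dx = x - d->startX; d->dy = y - d->startY; }
        return false;
    case PTR_RELEASED:                /* touch just ended */
        if (!d->active) return false;
        d->dx = x - d->startX;
        d->dy = y - d->startY;
        d->active = false;
        return true;
    default:                          /* UP or UNKNOWN: position is stale */
        return false;
    }
}
```

Ignoring the position in the UP case matters because, as noted earlier, the polled coordinates keep the last known position when nothing is touching the screen.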

It is also possible to keep track of pointer events using a callback-based system. For single touch input, there are two event types that we can register callback functions for; these are button and motion events.

We can start receiving pointer events by calling the s3ePointerRegister function, and we can stop them by calling s3ePointerUnRegister. Both functions take a value to identify the type of event we are concerned with, and a pointer to a callback function.

When registering a callback function, we can also provide a pointer to our own custom data structure that will be passed into the callback function whenever an event occurs.

The following code snippet shows how we can register a callback function that will be executed whenever the touch screen or a mouse button is pressed or released:

```c
#include "s3ePointer.h"

// Callback function that will receive pointer button notifications
int32 ButtonEventCallback(s3ePointerEvent* apButtonEvent, void* apUserData)
{
    if (apButtonEvent->m_Button == S3E_POINTER_BUTTON_SELECT)
    {
        if (apButtonEvent->m_Pressed)
        {
            // Left mouse button or touch screen pressed
        }
        else
        {
            // Left mouse button or touch screen released
        }
    }
    return 0;
}

// We use this (for example, in our initialisation code) to register the
// callback function…
s3ePointerRegister(S3E_POINTER_BUTTON_EVENT,
                   (s3eCallback) ButtonEventCallback, NULL);

// …and this to cancel notifications
s3ePointerUnRegister(S3E_POINTER_BUTTON_EVENT,
                     (s3eCallback) ButtonEventCallback);
```

The button event callback's first parameter is a pointer to an s3ePointerEvent structure that contains four members. The button that was pressed is stored in a member called m_Button that is of the type s3ePointerButton (see the table in the Detecting single touch input using polling section earlier in this chapter for more details on this enumerated type).

The m_Pressed member will be 0 if the button was released and 1 if it was pressed. You might expect this to be of type bool rather than an integer, but it isn't: this is a C-based API rather than a C++ one, and C had no boolean type until C99 added _Bool, so C APIs have traditionally used integers for flags like this.

We can also discover the screen position where the event occurred by using the structure's m_x and m_y members.

It is also possible to register a callback that will inform us when the user has performed a pointer motion. We again use the s3ePointerRegister/s3ePointerUnRegister functions, but this time use S3E_POINTER_MOTION_EVENT as the callback type.

The callback function we register will be passed a pointer to an s3ePointerMotionEvent structure that consists of just m_x and m_y members containing the screen coordinate that is now being pointed at.

A multi-touch capable display allows us to detect more than one touched point on the screen at a time. Every time the screen is touched, the device's OS will assign that touch point an ID number. As the user moves their finger around the screen, the coordinates associated with that ID number will be updated until the user removes their finger from the screen, whereupon that touch will become inactive and the ID number becomes invalid.

While Marmalade does provide a polling-based approach to handling multi-touch events, the callback approach is possibly the better choice as it leads to slightly more elegant code and is a little more efficient.

Marmalade provides us with a set of functions to allow multi-touch detection. The functions s3ePointerGetTouchState, s3ePointerGetTouchX, and s3ePointerGetTouchY are equivalent to the single touch functions s3ePointerGetState, s3ePointerGetX, and s3ePointerGetY, except that the multi-touch versions take a single parameter: the touch ID number.

The s3ePointer API also declares a preprocessor define, S3E_POINTER_TOUCH_MAX, which is one greater than the highest possible touch ID number. As the user touches and releases the display, touch ID numbers are re-used, so it is important to bear in mind that a given ID may refer to a different finger once its original touch has ended.

The following code snippet shows a loop that will allow us to process the currently active touch points:

```c
// s3ePointerUpdate() should already have been called this frame
for (uint32 i = 0; i < S3E_POINTER_TOUCH_MAX; i++)
{
    // Find position of this touch ID. Position is only valid if the
    // state for the touch ID is not S3E_POINTER_STATE_UNKNOWN or
    // S3E_POINTER_STATE_UP
    int32 x = s3ePointerGetTouchX(i);
    int32 y = s3ePointerGetTouchY(i);

    switch (s3ePointerGetTouchState(i))
    {
    case S3E_POINTER_STATE_RELEASED:
        // User just released the screen at x,y
        break;

    case S3E_POINTER_STATE_DOWN:
        // User just pressed or moved their finger to x,y
        // We need to know if we've already been tracking this
        // touch ID to tell whether this is a new press or a move
        break;

    default:
        // This touch ID is not currently active
        break;
    }
}
```

The biggest issue with this approach is that Marmalade never explicitly tells us that a touch has just begun. The s3ePointerGetTouchState function never returns S3E_POINTER_STATE_PRESSED, so instead we need to keep track of all the touch IDs we have already seen active when handling S3E_POINTER_STATE_DOWN. If a touch ID we were not already tracking is reported as down, we have detected the just-pressed condition.

While this code will work, I hope you will find that the callback-based approach that we are about to consider leads to a slightly more elegant solution.

As with the polling approach, multi-touch detection using callbacks is almost exactly the same as the single touch callback method. We still use s3ePointerRegister and s3ePointerUnRegister to start and stop events being sent to our code, but instead we use S3E_POINTER_TOUCH_EVENT to receive notifications of the user pressing or releasing the screen, and S3E_POINTER_TOUCH_MOTION_EVENT to find out when the user has dragged their finger across the screen.

The callback function registered to S3E_POINTER_TOUCH_EVENT will be sent a pointer to an s3ePointerTouchEvent structure. This structure contains the screen coordinates where the event occurred (the m_x and m_y members), whether the screen was touched or released (the m_Pressed member, which will be set to 1 if the screen was touched), and most importantly the ID number for this touch event (the m_TouchID member), which we can use to keep track of this touch as the user moves their finger around the display.

The S3E_POINTER_TOUCH_MOTION_EVENT callback will receive a pointer to an s3ePointerTouchMotionEvent structure. This structure contains the ID number of the touch event that has been updated and the new screen coordinate values. These structure members have the same names as their equivalent members in the s3ePointerTouchEvent structure.
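The following is a minimal sketch of what the two callback bodies might do: keep a table of active touches indexed by touch ID. To keep it self-contained, `TouchEvent` and `TouchMotionEvent` are hypothetical local structs that mirror the members described above (they are not the SDK's types), and `MAX_TOUCHES` stands in for S3E_POINTER_TOUCH_MAX. In a real program these functions would be called from callbacks registered with s3ePointerRegister.

```c
#include <stdbool.h>

#define MAX_TOUCHES 10  /* hypothetical stand-in for S3E_POINTER_TOUCH_MAX */

/* Hypothetical mirrors of s3ePointerTouchEvent / s3ePointerTouchMotionEvent. */
typedef struct { unsigned m_TouchID; int m_x, m_y; int m_Pressed; } TouchEvent;
typedef struct { unsigned m_TouchID; int m_x, m_y; } TouchMotionEvent;

/* Current state of every finger we know about. */
typedef struct { bool active; int x, y; } Touch;
typedef struct { Touch touches[MAX_TOUCHES]; int count; } TouchTable;

/* Body of an S3E_POINTER_TOUCH_EVENT handler: press adds, release removes. */
void HandleTouchEvent(TouchTable* t, const TouchEvent* e)
{
    if (e->m_TouchID >= MAX_TOUCHES) return;
    Touch* touch = &t->touches[e->m_TouchID];
    if (e->m_Pressed && !touch->active)       { touch->active = true;  t->count++; }
    else if (!e->m_Pressed && touch->active)  { touch->active = false; t->count--; }
    touch->x = e->m_x;
    touch->y = e->m_y;
}

/* Body of an S3E_POINTER_TOUCH_MOTION_EVENT handler: update the position
   of a touch we are already tracking. */
void HandleTouchMotion(TouchTable* t, const TouchMotionEvent* e)
{
    if (e->m_TouchID >= MAX_TOUCHES || !t->touches[e->m_TouchID].active) return;
    t->touches[e->m_TouchID].x = e->m_x;
    t->touches[e->m_TouchID].y = e->m_y;
}
```

Unlike the polled loop, the press and release are delivered explicitly, so there is no need to infer a new touch from a previously unseen ID.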

Marmalade provides no way of adjusting the frequency of touch events; it depends entirely on how often the underlying operating system dispatches them.

Hopefully you can see that the callback-based method is a little neater than the polled method. Firstly, we can say goodbye to the truly nasty loop needed in the polled method to detect all currently active touches.

Secondly, with careful coding we can use the same code path to handle both single and multi-touch input. If we code first for multi-touch input, then making single touch work is simply a case of adding a fake touch ID to incoming single touch events and passing them through to the multi-touch code.
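A minimal sketch of that idea follows. `SINGLE_TOUCH_ID`, `OnTouch`, `OnTouchMotion` and the `g_Touches` table are hypothetical names for illustration; the single touch handlers would be the bodies of the S3E_POINTER_BUTTON_EVENT and S3E_POINTER_MOTION_EVENT callbacks.

```c
#include <stdbool.h>

#define MAX_TOUCHES 10      /* hypothetical stand-in for S3E_POINTER_TOUCH_MAX */
#define SINGLE_TOUCH_ID 0   /* hypothetical fake ID used for single touch input */

typedef struct { bool active; int x, y; } Touch;
static Touch g_Touches[MAX_TOUCHES];

/* Shared multi-touch code path: all the game's touch logic lives here. */
void OnTouch(unsigned id, int x, int y, bool pressed)
{
    if (id >= MAX_TOUCHES) return;
    g_Touches[id].active = pressed;
    g_Touches[id].x = x;
    g_Touches[id].y = y;
}

void OnTouchMotion(unsigned id, int x, int y)
{
    /* Ignore motion for inactive touches; with a mouse, motion events
       also arrive while no button is held. */
    if (id >= MAX_TOUCHES || !g_Touches[id].active) return;
    g_Touches[id].x = x;
    g_Touches[id].y = y;
}

/* Single touch handlers simply forward to the multi-touch path,
   tagging every event with the fake touch ID. */
void OnSingleButton(int x, int y, bool pressed) { OnTouch(SINGLE_TOUCH_ID, x, y, pressed); }
void OnSingleMotion(int x, int y)               { OnTouchMotion(SINGLE_TOUCH_ID, x, y); }
```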

The arrival of the touch screen to mobile devices brought with it a new set of terminology related to making inputs to our programs. For years we have been using a mouse, clicking and dragging to interact with programs, and now with touch screens we have quickly become comfortable with the idea of swiping and pinching.

These methods of interaction have become known as gestures, and users have become so used to them that if your application doesn't respond as they expect, they may quickly become frustrated with it.

Unfortunately, Marmalade does not provide any support for detecting these gestures, so instead we have to code for them ourselves. The following sections aim to provide some guidance on how to easily detect both swipes and pinches.

A swipe occurs when the user touches the screen and then slides that touch point quickly across the screen before releasing the screen.

To detect a swipe we must therefore first keep track of the screen coordinates where the user touched the screen and the time at which this occurred. When this touch event comes to an end due to the user releasing the screen, we first check the time it lasted for. If the length of time is not too long (say less than a quarter of a second), we check the distance between the start and end points. If this distance is large enough (perhaps a hundred pixels in length, or a fraction of the screen display size), then we have detected a swipe.
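The check described above might look like the following sketch. The thresholds are the example values from the text, `TouchStart`, `SwipeBegin` and `SwipeEnd` are hypothetical names, and the timestamps are assumed to come from a monotonic millisecond clock such as Marmalade's s3eTimerGetMs(). The distance is compared in squared form, which gives the same answer without a square root.

```c
#include <stdbool.h>
#include <stdint.h>

/* Example thresholds from the text; treat them as starting points. */
#define SWIPE_MAX_TIME_MS   250     /* "less than a quarter of a second" */
#define SWIPE_MIN_DISTANCE  100.0f  /* "perhaps a hundred pixels" */

/* Hypothetical record of where and when the current touch began. */
typedef struct { int x, y; uint64_t timeMs; } TouchStart;

/* Call when the touch starts. */
void SwipeBegin(TouchStart* s, int x, int y, uint64_t timeMs)
{
    s->x = x;
    s->y = y;
    s->timeMs = timeMs;
}

/* Call when the touch is released. Returns true for a swipe and, in that
   case, writes the swipe vector (end minus start) to *dx and *dy. */
bool SwipeEnd(const TouchStart* s, int x, int y, uint64_t timeMs,
              float* dx, float* dy)
{
    if (timeMs - s->timeMs >= SWIPE_MAX_TIME_MS) return false;  /* too slow */
    *dx = (float)(x - s->x);
    *dy = (float)(y - s->y);
    /* Far enough? Compare squared lengths to avoid a square root. */
    return *dx * *dx + *dy * *dy >= SWIPE_MIN_DISTANCE * SWIPE_MIN_DISTANCE;
}
```

For a display-independent threshold, SWIPE_MIN_DISTANCE could instead be computed as a fraction of the screen width, as the text suggests.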

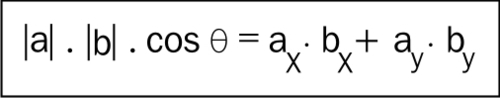

Often we only want to respond to a swipe if it is in a certain direction. We can determine this by using the dot product, the formula for which is shown in the following diagram:

The dot product is calculated by multiplying the x and y components of the two vectors together and summing the results, or by multiplying the length of the two vectors together and then multiplying by the cosine of the angle between the two vectors.
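Written out in symbols, for two 2D vectors $\mathbf{a}$ and $\mathbf{b}$ separated by an angle $\theta$, the two forms of the dot product are:

$$
\mathbf{a} \cdot \mathbf{b} = a_x b_x + a_y b_y = |\mathbf{a}|\,|\mathbf{b}|\cos\theta
$$

For example, $\mathbf{a} = (1, 0)$ and $\mathbf{b} = (0, 1)$ are perpendicular: $1 \cdot 0 + 0 \cdot 1 = 0$, which agrees with $1 \cdot 1 \cdot \cos 90^\circ = 0$.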

To check whether the user's swipe lies in a particular direction, we first convert the swipe into a unit vector, then take its dot product with a unit vector in the desired swipe direction. Since both vectors have a length of 1, the length-based form of the formula in the previous diagram reduces to just the cosine of the angle between the vectors, so it is now very simple to see whether our swipe lies along the desired direction.

If the dot product value is very close to 1, then our two direction vectors are close to being parallel, since cos(0°) = 1, and we've detected a swipe in the required direction. Similarly, if the dot product is close to -1, we've detected a swipe in the opposite direction, as cos(180°) = -1.

Pinch gestures can only be used on devices featuring multi-touch displays, since they require two simultaneous touch points. A pinch gesture is often used to allow zooming in and out to occur and is performed by placing two fingers on the screen and then moving them together or apart. This is most easily achieved using the thumb and index finger.

Detecting a pinch gesture in code is actually quite simple. As soon as we have detected two touch points on the screen, we calculate the vector from one point to the other and find its length. This is stored as the initial distance and represents no zooming (a scale factor of 1).

As the user moves their fingers around the screen, we just keep calculating the new distance between the two touch points, and then divide this distance by the original distance. The end result of this calculation is a zoom scale factor. If the user moves their fingers together, the zoom factor will be less than one; if they move them apart, the zoom value will be greater than one.

The pinch gesture is complete once the user removes at least one finger from the display.