Chapter 16 Majorization Theory and Applications

‡ KTH Royal Institute of Technology, Stockholm, Sweden

# Hong Kong University of Science and Technology, Hong Kong

In this chapter we introduce a useful mathematical tool, namely Majorization Theory, and illustrate its applications in a variety of scenarios in signal processing and communication systems. Majorization is a partial ordering and precisely defines the vague notion that the components of a vector are “less spread out” or “more nearly equal” than the components of another vector. Functions that preserve the ordering of majorization are said to be Schur-convex or Schur-concave. Many problems arising in signal processing and communications involve comparing vector-valued strategies or solving optimization problems with vector- or matrix-valued variables. Majorization theory is a key tool that allows us to solve or simplify these problems.

The goal of this chapter is to introduce the basic concepts and results on majorization that serve mostly the problems in signal processing and communications, but by no means to enclose the vast literature on majorization theory. A complete and superb reference on majorization theory is the book by Marshall and Olkin [1]. The building blocks of majorization can be found in [2], and [3] also contains significant material on majorization. Other textbooks on matrix and multivariate analysis, e.g., [4] and [5], may also include a part on majorization. Recent applications of majorization theory to signal processing and communication problems can be found in two good tutorials [6] and [7].

The chapter contains two parts. The first part is devoted to building the framework of majorization theory. The second part focuses on applying the concepts and results introduced in the first part to several problems arising in signal processing and communication systems.

To explain the concept of majorization, let us first define the following notations for increasing and decreasing orders of a vector.

Definition 16.1.1. For any vector x ∈ ℝn, let

x[1]≥⋯≥x[n]

denote its components in decreasing order, and let

x(1)≤⋯≤x(n)

denote its components in increasing order.

Majorization1 defines a partial ordering between two vectors, say x and y, and precisely describes the concept that the components of x are “less spread out” or “more nearly equal” than the components of y.

Definition 16.1.2. (Majorization [1, 1.A.1]) For any two vectors x, y ∈ ℝn, we say x is majorized by y (or y majorizes x), denoted by x ≺ y (or y ≻ x), if

k∑i = 1x[i]≤k∑i = 1y[i],1≤k<nn∑i = 1x[i] = n∑i = 1y[i].

Alternatively, the previous conditions can be rewritten as

k∑i = 1x(i)≥k∑i = 1y(i),1≤k<nn∑i = 1x(i) = n∑i = 1y(i).

There are several equivalent characterizations of the majorization relation x ≺ y in addition to the conditions given in Definition 16.1.2. One alternative definition of majorization given in [2] is that x ≺ y if

(16.1) |

for all continuous convex functions ϕ. Another interesting characterization of x ≺ y, also from [2], is that x = Py for some doubly stochastic matrix2 P. In fact, the latter characterization implies that the set of vectors x that satisfy x ≺ y is the convex hull spanned by the n! points formed from the permutations of the elements of y.3 Yet another interesting definition of y ≻ x is given in the form of waterfilling as

n∑i = 1(xi − a) + ≤n∑i = 1(yi − a) + |

(16.2) |

for any a ∈ ℝ and ∑ni = 1xi = ∑ni = 1yi

Observe that the original order of the elements of x and y plays no role in the definition of majorization. In other words, x ≺ Пx for all permutation matrices Π.

Example 16.1.1. The following are simple examples of majorization:

(1n,1n,…,1n)≺(1n − 1,1n − 1,…,1n − 1,0) ≺⋯≺(12,12,0,…,0)≺(1,0,…,0).

More generally

(1n,1n,…,1n)≺(x1,x2,…,xn)≺(1,0,…,0)

whenever xi ≥ 0 and ∑ni = 1xi = 1.

□

It is worth pointing out that majorization provides only a partial ordering, meaning that there exist vectors that cannot be compared within the concept of majorization. For example, given x = (0.6, 0.2,0.2) and y = (0.5, 0.4, 0.1), we have neither x ≺ y nor x ≻ y.

To extend Definition 16.1.2, which is only applicable to vectors with the same sum, the following definition provides two partial orderings between two vectors with different sums.

Definition 16.1.3. (Weak majorization [1, 1.A.2]) For any two vectors x, y ∈ ℝn, we say x is weakly submajorized by y (or y submajorizes x), denoted by x ≺w y (or y ≻wx), if

k∑i = 1x[i]≤k∑i = 1y[i], 1≤k≤n.

We say x is weakly supermajorized by y (or y supermajorizes x), denoted by x ≺w y (or y ≻ wx), if

k∑i = 1x(i)≤k∑i = 1y(i), 1≤k≤n.

In either case, we say x is weakly majorized by y (or y weakly majorizes x).

For nonnegative vectors, weak majorization can be alternatively characterized in terms of linear transformation by doubly substochastic and superstochastic matrices (see [1, Ch. 2]). Note that x ≺ y implies x ≺w y and x ≺w y, but the inverse does not hold. In other words, majorization is a more restrictive definition than weak majorization. A useful connection between majorization and weak majorization is given as follows.

Lemma 16.1.1. ([1, 5.A.9, 5.A.9.a]) If x ≺w y, then there exist vectors u and v such that

x≤u and u≺y, v≤y and x≺v.

If x ≺w y, then there exist vectors u and v such that

x≥u and u≺y, u≥y and x≺v.

The notation x ≤ u means the componentwise ordering xi ≤ ui, i = 1,…, n, for all entries of vectors x, u.

16.1.2 Schur-Convex/Concave Functions

Functions that are monotonic with respect to the ordering of majorization are called Schur-convex or Schur-concave functions. This class of functions is of particular importance in this chapter, as it turns out that many design objectives in signal processing and communication systems are Schur-convex or Schur-concave functions.

Definition 16.1.4. (Schur-convex/concave functions [1, 3.A.1]) A real-valued function ϕ defined on a set A

x≺y on A ⇒ ϕ(x)≤ϕ(y).

If, in addition, ϕ (x) < ϕ (y) whenever x ≺ y but x is not a permutation of y, then ϕ is said to be strictly Schur-convex on A

x≺y on A ⇒ ϕ(x)≥ϕ(y),

and ϕ is strictly Schur-concave on A

Clearly, if ϕ is Schur-convex on A

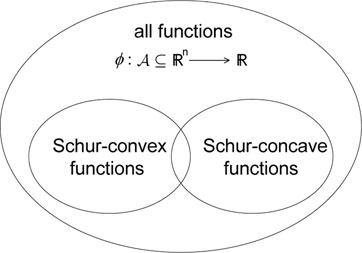

It is important to remark that the sets of Schur-convex and Schur-concave functions do no form a partition of the set of all functions from A

Figure 16.1: Illustration of the sets of Schur-convex and Schur-concave functions within the set of all functions ϕ : A

Example 16.1.2. The simplest example of a Schur-convex function, according to the definition, is ϕ (x) = maxk{xk} = x[1], which is also strictly Schur-convex.

Example 16.1.3. The function ϕ(x) = ∑ni = 1xi

Example 16.1.4. The function ϕ (x) = x1 + 2x2 + x3 is neither Schur-convex nor Schur-concave, as can be seen from the counterexample that for x =(2, 1, 1), y =(2, 2, 0) and z =(4, 0, 0), we have x ≺ y ≺ z but ϕ (x) < ϕ (y) > ϕ (z).

To distinguish Schur-convexity/concavity from common monotonicity, we also define increasing and decreasing functions that will be frequently used later.

Definition 16.1.5. (Increasing/decreasing functions) A function f : ℝn → ℝ is said to be increasing if it is increasing in each argument, i.e.,

x≤y ⇒ f(x)≤f(y),

and to be decreasing if it is decreasing in each argument, i.e.,

x≤y ⇒ f(x)≥f(y).

Using directly Definition 16.1.4 to check Schur-convexity/concavity of a function may not be easy. In the following, we present some immediate results to determine whether a function is Schur-convex or Schur-concave.

Theorem 16.1.1. ([1, 3.A.3]) Let the function ϕ : D

Theorem 16.1.2. (Schur’s condition [1, 3.A.4]) Let ℐ

(xi − xj)(∂ϕ∂xi − ∂ϕ∂xj)≥0, 1≤i,j≤n. |

(16.3) |

ϕ is Schur-concave on on ℐn if and only if ϕ is symmetric and the inequality (16.3) is reversed.

In fact, to prove Schur-convexity/concavity of a function using Theorem 16.1.1 and Theorem 16.1.2, one can take n = 2 without loss of generality (w.l.o.g.), i.e., check only the two-argument case [1, 3.A.5]. Based on Theorem 16.1.1 and Theorem 16.1.2, it is possible to obtain some sufficient conditions guaranteeing Schur-convexity/concavity of different composite functions.

Proposition 16.1.1. (Monotonic composition [1, 3.B.1]) Consider the composite function ϕ(x) = f (g1 (x) ,…,gk (x)), where f is a real-valued function defined on ℝk. Then, it follows that

• f is increasing and gi is Schur-convex ⇒ ϕ is Schur-convex;

• f is decreasing and gi is Schur-convex ⇒ ϕ is Schur-concave;

• f is increasing and gi is Schur-concave ⇒ ϕ is Schur-concave;

• f is decreasing and gi is Schur-concave ⇒ ϕ is Schur-convex.

Proposition 16.1.2. (Convex5 composition [1, 3.B.2]) Consider the composite function ϕ(x) = f (g(x1),…,g(xn)), where f is a real-valued function defined on ℝn. Then, it follows that

• f is increasing Schur-convex and g convex ⇒ ϕ is Schur-convex;

• f is decreasing Schur-convex and g concave ⇒ ϕ is Schur-convex.

For some special forms of functions, there exist simple conditions to check whether they are Schur-convex or Schur-concave.

Proposition 16.1.3. (Symmetric convex functions [1, 3.C.2]) If ϕ is symmetric and convex (concave), then ϕ is Schur-convex (Schur-concave).

Corollary 16.1.1. ([1, 3.C.1]) Let ϕ(x) = ∑ni = 1g(xi)

Proposition 16.1.3 can be generalized to the case of quasi-convex functions.6

Proposition 16.1.4. (Symmetric quasi-convex functions [1, 3.C.3]) If ϕ is symmetric and quasi-convex, then ϕ is Schur-convex.

Schur-convexity/concavity can also be extended to weak majorization through the following fact.

Theorem 16.1.3. ( [1, 3.A.8]) A real-valued function ϕ defined on a set A

x≺wy on A ⇒ ϕ(x)≤ϕ(y)

if and only if ϕ is increasing and Schur-convex on A

x≺wy on A ⇒ ϕ(x)≤ϕ(y)

if and only if ϕ is decreasing and Schur-convex on A

By using the above results, we are now able to find various Schurconvex/concave functions. Several such examples are provided in the following, while the interested reader can find more Schur-convex/concave functions in [1].

Example 16.1.5. Consider the lp norm |x|p = (Σi |xi|p)1/p, which is symmetric and convex when p ≥ 1. Thus, from Proposition 16.1.3, |x|p is Schur-convex for p ≥ 1.

Example 16.1.6. Suppose that xi > 0. Since xa is convex when a ≥ 1 and a ≤ 0 and concave when 0 ≤ a < 1, from Corollary 16.1.1, ϕ(x) = ∑ixai

Example 16.1.7. Consider ϕ:ℝ2 + →ℝ

16.1.3 Relation to Matrix Theory

There are many interesting results that connect majorization theory to matrix theory, among which a crucial finding by Schur is that the diagonal elements of a Hermitian matrix are majorized by its eigenvalues. This fact has been frequently used to simplify optimization problems with matrix-valued variables.

Theorem 16.1.4. (Schur’s inequality [1, 9.B.1]) Let A be a Hermitian matrix with diagonal elements denoted by the vector d and eigenvalues denoted by the vector λ. Then λ ≻ d.

Theorem 16.1.4 provides an “upper bound” on the diagonal elements of a Hermitian matrix in terms of the majorization ordering. From Exercise 16.1.1, a natural “lower bound” of a vector x ∈ ℝn would be 1 ≺ x, where 1 ∈ ℝn denote the vector with equal elements given by 1i≜∑nj = 1xj/n

(16.4) |

which is formally described in the following corollary.

Corollary 16.1.2. Let A be a Hermitian matrix and U a unitary matrix. Then

1(A)≺d(U†AU)≺λ(A)

where 1 (A) denotes the vector of equal elements whose sum equal to tr (A), d (A) is the vector of the diagonal elements of A, and λ (A) is the vector of the eigenvalues of A.

Proof: It follows directly from (16.44), as well as the fact that 1 (U†AU) = 1 (A) and λ (U†AU) = λ (A).

□

Corollary 16.1.2 “bounds” the diagonal elements of U†AU for any unitary matrix U. However, it does not specify what can be achieved. The following result will be instrumental for that purpose.

Theorem 16.1.5. ( [1, 9.B.2]) For any two vectors x, y ∈ ℝn satisfying x ≺ y, there exists a real symmetric (and therefore Hermitian) matrix with diagonal elements given by x and eigenvalues given by y.

Corollary 16.1.3. For any vector λ ∈ ℝn, there exists a real symmetric (and therefore Hermitian) matrix with equal diagonal elements and eigenvalues given by λ.

Corollary 16.1.4. Let A be a Hermitian matrix and x ∈ ℝn be a vector satisfying x ≺ λ (A). Then, there exists a unitary matrix U such that

d(U†AU) = x.

Proof: The proofs of Corollary 16.1.3 and Corollary 16.1.4 are straightforward from Corollary 16.1.2 and Theorem 16.1.5.

□

Theorem 16.1.5 is the converse of Theorem 16.1.4 (in fact it is stronger than the converse since it guarantees the existence of a real symmetric matrix instead of just a Hermitian matrix). Now, we can provide the converse of Corollary 16.1.2.

Corollary 16.1.5. Let A be a Hermitian matrix. There exists a unitary matrix U such that

d(U†AU) = 1(A),

and also another unitary matrix U such that

d(U†AU) = λ(A).

We now turn to the important algorithmic aspect of majorization theory which is necessary, for example, to compute a matrix with given diagonal elements and eigenvalues. The following definition is instrumental in the derivation of transformations that relate vectors that satisfy the majorization relation.

Definition 16.1.6. (T-transform [1, p. 21]) A T-transform is a matrix of the form

(16.5) |

for some α ∈ [0, 1] and some n × n permutation matrix Π with n − 2 diagonal entries equal to 1. Let [Π]ij = [Π]ji = 1 for some indices i < j, then

Πy = [y1,…,yi − 1,yj,yi + 1,…,yj − 1,yi,yj + 1,…,yn]T

and hence

Ty = [y1,…,yi − 1,αyi + (1 − α)yj,yi + 1,…, yj − 1,αyj + (1 − α)yi,yj + 1,…,yn]T.

Lemma 16.1.2. ([1, 2.B.1]) For any two vectors x, y ∈ ℝn satisfying x ≺ y, there exists a sequence of T-transforms T(1),…, T(K) such that x = T(K) ⋯ T(1)y and K < n.

An algorithm to obtain such a sequence of T-transforms is introduced next.

Algorithm 16.1.1. ( [1, 2.B.1]) Algorithm to obtain a sequence of T-transforms such that x = T(K) ⋯ T(1)y.

Input: Vectors x, y ∈ ℝn satisfying x ≺ y (it is assumed that the components of x and y are in decreasing order and that x ≠ y).

Output: Set of T-transforms T(1),…, T(K).

0. Let y(0) = y and k = 1 be the iteration index.

1. Find the largest index i such that y(k − 1)i>xi

2. Let δ = min δ = min(xj − y(k − 1)j,y(k − 1)i − xi)

3. Use a to compute T(k) as in (16.5) and let y(k) = T(k)y(k−1).

4. If y(k)≠ x, then set k = k + 1 and go to step 1; otherwise, finish.

Recursive algorithms to obtain a matrix with given eigenvalues and diagonal elements are provided in [1, 9.B.2] and [8]. Here, we introduce the practical and simple method proposed in [8] as follows.

Algorithm 16.1.2. ( [8]) Algorithm to obtain a real symmetric matrix A with diagonal values given by x and eigenvalues given by y provided that x ≺ y.

Input: Vectors x, y ∈ ℝn satisfying x ≺ y (it is assumed that the components of x and y are in decreasing order and that x ≠ y).

Output: Matrix A.

1. Using Algorithm 16.1.1, obtain a sequence of T-transforms such that x = T(K) ⋯ T(1)y.

2. Define the Givens rotation U(k) as

[U(k)]ij = {√[T(k)]ij,for i<j − √[T(k)]ij,otherwise.

3. Let A(0) = diag (y) and A(k) = U(k)TA(k−1)U(k). The desired matrix is given by A = A(K). Define the unitary matrix U = U(1) ⋯ U(K) and the desired matrix is given by A = UT diag (y) U.

Algorithm 16.1.2 obtains a real symmetric matrix A with given eigenvalues and diagonal elements. For the interesting case in which the diagonal elements must be equal and the desired matrix is allowed to be complex, it is possible to obtain an alternative much simpler solution in closed form as given next.

Lemma 16.1.3. ( [9]) Let U be a unitary matrix satisfying the condition |[U]ik| = |[U]il| ∀i, k, l. Then, the matrix A = UH diag (λ) U has equal diagonal elements (and eigenvalues given by λ). Two examples of U are the unitary DFT matrix and the Hadamard matrix (when the dimensions are appropriate such as a power of two).

Nevertheless, Algorithm 16.1.2 has the nice property that the obtained matrix U is real-valued and can be naturally decomposed (by construction) as the product of a series of rotations. This simple structure plays a key role for practical implementation. Interestingly, an iterative approach to construct a matrix with equal diagonal elements and with a given set of eigenvalues was obtained in [10], based also on a sequence of rotations.

16.1.4 Multiplicative Majorization

Parallel to the concept of majorization introduced in Section 16.1.1, which is often called additive majorization, is the notion of multiplicative majorization (also termed log-majorization) defined as follows.

Definition 16.1.7. The vector x∈ℝn +

k∏i = 1x[i]≤k∏i = 1y[i],1≤k<nn∏i = 1x[i] = n∏i = 1y[i].

To differentiate the two types of majorization, we sometimes use the symbol ≺+ rather than ≺ to denote (additive) majorization. It is easy to see the relation between additive majorization and multiplicative majorization: x ≺+ y if and only if exp(x) ≺× exp(y).7

Example 16.1.8. Given x∈ℝn +

Similar to the definition of Schur-convex/concave functions, it is also possible to define multiplicatively Schur-convex/concave functions.

Definition 16.1.8. A function ϕ : A

x≺×y on A ⇒ ϕ(x)≤ϕ(y),

and multiplicatively Schur-concave on A

x≺×y on A ⇒ ϕ(x)≥ϕ(y).

However, considering the correspondence between additive and multiplicative majorization, it may not be necessary to use the notion of multiplicatively Schur-convex/concave functions. Instead, the so-called multiplicatively Schurconvex/concave functions in Definition 16.1.8 can be equivalently referred to as functions such that ϕ ∘ exp is Schur-convex and Schur-concave, respectively, where the composite function is defined as ϕ ∘ exp(x)≜ϕ(ex1,…,exn)

The following two lemmas relate Schur-convexity/concavity of a function f with that of the composite function ϕ ∘ exp.

Lemma 16.1.4. If ϕ is increasing and Schur-convex, then ϕ ∘ exp is Schur-convex.

Proof: It is an immediate result from Proposition 16.1.2.

Lemma 16.1.5. If the composite function ϕ ∘ exp is Schur-concave on D

Proof: It can be easily proved using Theorem 16.1.1.

The following two examples show that the implication in Lemmas 16.1.4 and 16.1.5 does not hold in the opposite direction.

Example 16.1.9. The function ϕ(x) = ∏ni = 1xi

Example 16.1.10. The function ϕ(x) =∑ni=1αixi

In contrast with (additive) majorization that leads to some important relations between eigenvalues and diagonal elements of a Hermitian matrix (see Section 16.1.3), multiplicative majorization also brings some insights to matrix theory but mostly on the relation between singular values and eigenvalues of a matrix. In the following, we introduce one recent result that will be used later.

Theorem 16.1.6. (Generalized triangular decomposition (GTD) [11]) Let H ∈ ℂm × n be a matrix with rank k and singular values σ1 ≥ σ2 ≥ ⋯ ≥ σk. There exists an upper triangular matrix R ∈ ℂk×k and semi-unitary matrices Q and P such that H = QRPH if and only if the diagonal elements of R satisfy |r|≺×σ, where |r| is a vector with the absolute values of r element-wise. Vectors σ and r stand for the singular values of H and diagonal entries of R, respectively.

The GTD is a generic form including many well-known matrix decompositions such as the SVD, the Schur decomposition, and the QR factorization [4]. Theorem 16.1.6 implies that, given |r|≺×σ, there exists a matrix H with its singular values and eigenvalues being r and σ, respectively. A recursive algorithm to find such a matrix was proposed in [11].

16.1.5 Stochastic Majorization

A comparison of some kind between two random variables X and Y is called stochastic majorization if the comparison reduces to the ordinary majorization x ≺ y in case X and Y are degenerate at x and y, i.e., Pr (X = x) = 1 and Pr (Y = y) = 1. Random vectors to be compared by stochastic majorization often have distributions belonging to the same parametric family, where the parameter space is a subset of ℝn. In this case, random variables X and Y with corresponding distributions Fθ and Fθ′ are ordered by stochastic majorization if and only if the parameters θ and θ′ are ordered by ordinary majorization.

Specifically, let A

(16.6) |

denote the expectation of ϕ(X) when X has distribution Fθ, and let

(16.7) |

denote the tail probability that ϕ(X) is less than or equal to t when X has distribution Fθ. We are particularly interested in investigating whether or in what conditions E {ϕ(X)} and Pr(ϕ(X) ≤ t) are Schur-convex/concave in θ.

The following results provide the conditions in which E {ϕ(X)} is Schur-convex in θ for exchangeable random variables.8

Proposition 16.1.5. ([1, 11.B.1]) Let X1,… ,Xn be exchangeable random variables and suppose that Φ : ℝ2n → ℝ satisfies: (i) Φ(x, θ) is convex in θ for each fixed x; (ii) Φ(Πx, Πθ) = Φ(x, θ) for all permutations Π; (iii) Φ(x, θ) is Borel measurable in x for each fixed θ. Then,

ψ(θ) = E{Φ(X1,…,Xn,θ)}

is symmetric and convex (and thus Schur-convex).

Corollary 16.1.6. ([1, 11.B.2, 11.B.3]) Let X1,…,Xn be exchangeable random variables and ϕ : ℝn → ℝ be symmetric and convex. Then,

ψ(θ) = E{ϕ(θ1X1,…,θnXn)}

and

ψ(θ) = E{ϕ(X1 + θ1,…,Xn + θn)}

are symmetric and convex (and thus Schur-convex).

Corollary 16.1.7. ([1, 11.B.2.c]) Let X1,…, Xn be exchangeable random variables and g be a continuous convex function. Then,

ψ(θ) = E[g(∑iθiXi)]

is symmetric and convex (and thus Schur-convex).

Compared to the expectation form, there are only a limited number of results on the Schur-convexity/concavity of the tail probability P{ϕ(Χ) ≤ t} in terms of θ, which are usually given in some specific form of ϕ or distribution of X. In the following is one important result concerning linear combinations of some random variables.

Theorem 16.1.7. ( [12]) Let θi ≥ 0 for all i, and X1,…, Xn be independent and identically distributed (iid) random variables following a Gamma distribution with the density

f(x) = xk − 1exp( − x)Γ(k)

where Γ(k) = (k − 1)!. Suppose that g : ℝ → ℝ is nonnegative and the inverse function g−1 exists. Then,

P{g(∑iθiXi)≤t}

is Schur-concave in θ for t ≥ g(2) and Schur-convex in θ for t ≤ g(1).

Corollary 16.1.8. Let θi ≥ 0 for all i, and X1,…, Xn be iid exponential random variables with the density f (x) = exp(− x). Then, P {∑i θiXi ≤ t} is Schurconcave in θ for t ≥ 2 and Schur-convex in θ for t ≤ 1.

For more examples of stochastic majorization in the form of expectations or tail probabilities, we refer the interested reader to [1] and [7].

16.2 Applications of Majorization Theory

The code division multiple access (CDMA) system is an important multiple-access technique in wireless networks. In a CDMA system, all users share the same bandwidth and they are distinguished from each other by their signature sequences or codes. A fundamental problem in CDMA systems is to optimally design signature sequences so that the system performance, such as the sum capacity, is maximized.

Consider the uplink of a single-cell synchronous CDMA system with K users and processing gain N. In the presence of additive white Gaussian noise, the sampled baseband received signal vector in one symbol interval is

(16.8) |

where, for each user i, pi is the received power, bi is the transmitted symbol, and si ∈ ℝN is the unit-energy signature sequence, i.e., ‖si‖ = 1, and n is a zero-mean Gaussian random vector with covariance matrix σ2ΙΝ, i.e., n ~ N

(16.9) |

There are different criteria to measure the performance of a CDMA system, among which the most commonly used one may be the sum capacity given by [13]

Csum = 12log det(IN + σ − 2SPST). |

(16.10) |

In practice, the system performance may also be measured by the total MSE of all users, which, assuming that each uses a LMMSE filter at his receiver, is given by [14]

(16.11) |

Another important global quantity that measures the total interference in the CDMA system is the total weighted square correlation (TWSC), which is given by [15]

(16.12) |

The goal of the sequence design problem is to optimize the system performance, e.g., maximize Csum or minimize MSE or TWSC, by properly choosing the signature sequences for all users or, equivalently, by choosing the optimal signature sequence matrix S.

Observe that the aforementioned three performance measures are all determined by the eigenvalues of the matrix SPST. To be more exact, denoting the eigenvalues of SPST by λ≜(λi)Ni = 1, it follows that

Csum = 12∑Ni = 1log(1 + λiσ2)MSE = K − ∑Ni = 1λiλi+σ2TWSC = ∑Ni = 1λ2i.

Now, we can apply majorization theory. Indeed, since log(1 + σ−2x) is a concave function, it follows from Corollary 16.1.1 that Csum is a Schur-concave function with respect to λ. Similarly, given that −x/(x + σ2) and x2 are convex functions, both MSE and TWSC are Schur-convex in λ. Therefore, if one can find a signature sequence matrix yielding the Schur-minimal eigenvalues, i.e., one with eigenvalues that are majorized by all other feasible eigenvalues, then the resulting signature sequences will not only maximize Csum but also minimize MSE and TWSC at the same time.

To find the optimal signature sequence matrix S, let us first define the set of all feasible S

(16.13) |

and correspondingly the set of all possible λ

(16.14) |

Now the question is how to find a Schur-minimal vector within L, which is, however, not easy to answer given the form of L in (16.14). To overcome this difficulty, we transform L to a more convenient equivalent form by utilizing the majorization relation.

Lemma 16.2.1. When K ≤ N, L is equal to

ℳ≜{λ∈ℝN:(λ1,…,λK)≻(p1,…,pK),λK + 1 = ⋯ = λN = 0}.

When K > N, L is equal to

N≜{λ∈ℝN:(λ1,…,λN,0,…,0︸K − N)≻(p1,…,pK)}.

Proof: Consider the case K ≤ N. We first show that if λ ∈ ℒ then λ ∈ ℳ. Since K ≤ N, λ(SPST) has at most K non-zero elements, which are denoted by λa. Let P≜(pi)ki = 1. Observe that, for S ∈ S, p and λa are the diagonal elements and the eigenvalues of the matrix P1/2STSP1/2, respectively. From Theorem 16.1.4 we have λa ≻ p, implying that λ ∈ ℳ.

To see the other direction, let λ ∈ ℳ and thus λa ≻ p. According to Theorem 16.1.5, there exists a symmetric matrix Z with eigenvalues λa and diagonal elements p. Denote the eigenvalue decomposition (EVD) of Z by Z = UzΛzUTz and introduce Λ = diag{Λz, 0(N−K)×(N−K)} and U = [Uz 0K×(N−K)]. Then we can choose S = Λ1/2UTP−1/2. It is easy to check that the eigenvalues of SPST = Λ are λ, and ‖si‖2, i = 1,…, K, coincide with the diagonal elements of STS = P−1/2ZP−1/2 and thus are all ones, so we have λ ∈ L.

The equivalence between L and N when K > N can be obtained in a similar way, for which a detailed proof was provided in [8].

When K ≤ N, the Schur-minimal vector in L (or M), from Lemma 16.2.1, is λ* = (p1,… ,PL, 0,…, 0), which can be achieved by choosing arbitrary K orthonormal sequences, i.e., STS = IK.

When K > N, the problem of finding a Schur-minimal vector in L (or N) is, however, not straightforward. It turns out that in this case the Schur-minimal vector is given in a complicated form based on the following definition.

Definition 16.2.1. (Oversized users [8]) User i is defined to be oversized if

pi>∑Ki = 1pj1{pi>pj}N − ∑Kj = 11{pj>pi}

where 1{·} is the indication function. Intuitively, a user is oversized if his power is large relative to those of the others.

Theorem 16.2.1. ([8]) Assume w.l.o.g. that the users are ordered according to their powers p1 ≥ ⋯ ≥ pK, and the first L users are oversized. Then, the Schurminimal vector in L (or N) is given by

λ∗ = (p1,…,pL,∑Kj = L + 1pjN − L,…,∑Kj = L + 1pjN − L).

The left question is how to find an S ∈ S such that the eigenvalues of SPST are λ*. Note that the constraint S ∈ S is equivalent to saying that the diagonal elements of STS are all equal to 1. Therefore, given the optimal S, the matrix P1/2STSP1/2 has the diagonal elements p = (p1,…,pK) and the eigenvalues λb = (λ*, 0). From Theorem 16.1.5, there exists a K × K symmetric matrix M such that its diagonal elements and eigenvalues are given by p and λb, respectively. Denote the (EVD) of M by M = UΛUT, where Λ = diag {λ*} and U ∈ ℝK × N contains the N eigenvectors corresponding to λ*. Then, the optimal signature sequence matrix can be obtained as S = Λ1/2UTP−1/2. It can be verified that the eigenvalues of SPST are λ* and S ∈ S.

Finally, to construct the symmetric matrix M with the diagonal elements p and the eigenvalues λb (provided p ≺ λb), one can exploit Algorithm 16.1.2 introduced in Section 16.1.3. Interestingly, an iterative algorithm was proposed in [14, 15] to generate the optimal signature sequences. This algorithm updates each user’s signature sequence in a sequential way, and was proved to converge to the optimal solution.

16.2.2 Linear MIMO Transceiver Design

MIMO channels, usually arising from using multiple antennas at both ends of a wireless link, have been well recognized as an effective way to improve the capacity and reliability of wireless communications [16]. A low-complexity approach to harvest the benefits of MIMO channels is to exploit linear transceivers, i.e., a linear precoder at the transmitter and a linear equalizer at the receiver. Designing linear transceivers for MIMO channels has a long history, but mostly focused on some specific measure of the global performance. It has recently been found in [9, 17] that the design of linear MIMO transceivers can be unified by majorization theory into a general framework that embraces a wide range of different performance criteria. In the following we briefly introduce this unified framework.

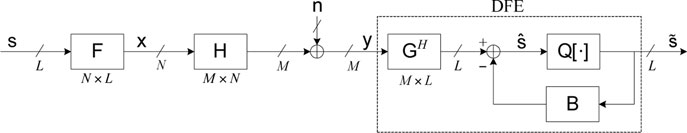

Figure 16.2: Linear MIMO transceiver consisting of a linear precoder and a linear equalizer.

Consider a communication link with N transmit and M receive antennas. The signal model of such a MIMO channel is

(16.15) |

where x ∈ ℂN is the transmitted signal vector, H ∈ ℂM × N is the channel matrix, y ∈ ℂM is the received signal vector, and n ∈ ℂM is a zero-mean circularly symmetric complex Gaussian random vector with covariance matrix I,9 i.e., n ~ CN(0, I). In the linear transceiver scheme as illustrated in Figure 16.2, the transmitted signal x results from the linear transformation of a symbol vector s ∈ ℂL through a linear precoder F ∈ ℂN × L and is given by x = Fs. Assume w.l.o.g. that L ≤ min{M, N} and E {ssH} = I. The total average transmit power is

(16.16) |

At the receiver is a linear equalizer GH ∈ ℂL × M used to estimate s from y resulting in ŝ = GH y. Therefore, the relation between the transmitted symbols and the estimated symbols can be expressed as

(16.17) |

An advantage of MIMO channels is the support of simultaneously transmitting multiple data streams, leading to significant capacity improvement.10 Observe from (16.17) that the estimated symbol at the ith data stream is given by

(16.18) |

where fi and gi are the ith columns of F and G, respectively, and ni = ∑j≠iHf j s j + n is the equivalent noise seen by the ith data stream with covariance matrix Rni = ∑j≠iHfjfHjHH + I. In practice, the performance of a data stream can be measured by the MSE, signal-to-interference-plus-noise ratio (SINR), or bit error rate (BER), which according to (16.18) are given by

MSEi≜E{|ˆsi − si|2} = |gHiHfi − 1|2 + gHiRnigiSINRi≜desired componentundesired component = |gHiHfi|2gHiRnigiBFRi≜#bits in error#transmitted bits≈φi(SINRi)

where φi is a decreasing function relating the BER to the SINR at the ith stream [6, 9]. Any properly designed system should attempt to minimize the MSEs, maximize the SINRs, or minimize the BERs.

Measuring the global performance of a MIMO system with several data streams is tricky as there is an inherent tradeoff among the performance of the different streams. Different applications may require a different balance on the performance of the streams, so there are a variety of criteria in the literature, each leading to a particular design problem (see [6] for a survey). However, in fact, all these particular problems can be unified into one framework using the MSEs as the nominal cost. Specifically, suppose that the system performance is measured by an arbitrary global cost function of the MSEs f0({MSEi}Li = 1) that is increasing in each argument.11 The linear transceiver design problem is then formulated as

(16.19) |

where Tr(FFH) ≤ P represents the transmit power constraint.

To solve (16.19), we first find the optimal G for a fixed F. It turns out that the optimal equalizer is the LMMSE filter, also termed the Wiener filter [19]

(16.20) |

To see this, let us introduce the MSE matrix

(16.21) |

from which the MSE of the ith data stream is given by MSEi = [E]ii. It is not difficult to verify that

(16.22) |

for any G, meaning that G* simultaneously minimizes all diagonal elements of E or all MSEs. At the same time, one can verify that g*i, i.e., the ith column of G*, also maximizes SINRi (or equivalently minimizes BERi) [6, 9]. Hence, the Wiener filter is optimal in the sense of both minimizing all MSEs and maximizing all SINRs (or minimizing all BERs). Observe that the optimality is regardless of the particular choice of the cost function f0 in (16.19).

Using the Wiener filter as the equalizer, we can easily obtain

MSEi = [(I + FHHHHF) − 1]iiSINRi = 1MSEi − 1BERi = φi(MSE − 1i − 1).

This means that different performance measures based on the MSEs, the SINRs, or the BERs can be uniformly represented by the MSE-based criteria, thus indicating the generality of the problem formulation (16.19).

Now, the transceiver design problem (16.19) reduces to the following precoder design problem:

(16.23) |

Solving such a general problem is very challenging and the solution hinges on majorization theory.

Theorem 16.2.2. ( [6, Theorem 3.13]) Suppose that the cost function f0 : ℝL ↦ ℝ is increasing in each argument. Then, the optimal solution to (16.23) is given by

F⋆ = Vhdiag(√p)Ω

where

(i) |

Vh ∈ ℂN × L is a semi-unitary matrix with columns equal to the right singular vectors of H corresponding to the L largest singular values in increasing order; |

(ii) |

p∈ℝL + is the solution to the following power allocation problem:12 |

minimizep,ρf0(ρ1,…,ρL)subject to(11 + p1γ1,…,11 + pLγL)≻w(ρ1,…,ρL)p≥0,1Tp≤P |

(16.24) |

where {γi}Li = 1 are the L largest eigenvalues of HHH in increasing order;

(iii) |

Ω ∈ ℂL × L is a unitary matrix such that [(I + F*HHHHF*)−1]ii = ρi for all i, which can be computed with Algorithm 16.1.2. |

Proof: We start by rewriting (16.23) into the equivalent form

(16.25) |

Note that, given any F, we can always find another F̃ = FΩH with a unitary matrix Ω such that F̃HHHHF̃ = ΩFHHHHFΩH is diagonal with diagonal elements in increasing order. The original MSE matrix is given by (I + FHHHHF)−1 = ΩH(I + F̃HHHHF̃)−1Ω. Thus we can rewrite (16.25) in terms of F̃ and Ω as

minimize˜F,Ω,ρf0(ρ)subject to˜FHHHH˜F diagonald(ΩH(I + ˜FHHHH˜F) − 1Ω)≤ρTr(˜F˜FH)≤P. |

(16.26) |

It follows from Lemma 16.1.1 and Corollary 16.1.2 that, for a given F̃, we can always find a feasible Ω if and only if

λ((I + ˜FHHHH˜F) − 1)≻wρ.

Therefore, using the diagonal property of F̃HHHHF̃, (16.26) is equivalent to

minimize˜F,ρf0(ρ)subject to˜FHHHH˜F diagonald((I + ˜FHHHH˜F) − 1)≻wρTr(˜F˜FH)≤P. |

(16.27) |

Given that F̃HHHHF̃ is diagonal with diagonal elements in increasing order, we can invoke [9, Lemma 12] or [6, Lemma 3.16] to conclude that the optimal F̃ can be written as ˜F = Vhdiag(√p), implying that F* = Vhdiag(√p)Ω. Now, by using the weak supermajorization relation as well as the structure ˜F = Vhdiag(√p), (16.27) can be expressed as (16.24).

If, in addition, f0 is minimized when the arguments are sorted in decreasing order,13 then (16.24) can be explicitly written as

minimizep,ρf0(ρ1,…,ρL)subject to∑Lj = i11 + piγi≤∑Lj = iρj, 1≤i≤Lρi≥ρi + 1, 1≤i≤L − 1p≥0,1Tp≤P |

(16.28) |

which is a convex problem if f0 is a convex function, and thus can be efficiently solved in polynomial time [20]. In fact, the optimal precoder can be further simplified or even obtained in closed form, when the objective f0 falls into the class of Schur-convex/concave functions.

Corollary 16.2.1. ([9, Theorem 1]) Suppose that the cost function f0 : ℝL ↦ ℝ is increasing in each argument.

(i) If f0 is Schur-concave, then the optimal solution to (16.23) is given by

F⋆ = Vhdiag(√p)

where p is the solution to the following power allocation problem:

minimizepf0({(1 + piγi) − 1}Li = 1)subject top≥0,1Tp≤P.

(ii) If f0 is Schur-convex, then the optimal solution to (16.23) is given by

F⋆ = Vhdiag(√p)Ω

where the power allocation p is given by

pi = (μγ − 1/2i − γ − 1i) + , 1≤i≤L

with μ chosen to satisfy 1Tp = P, and Ω is a unitary matrix such that (I + F*H HH HF*)−1 has equal diagonal elements. Ω can be any unitary matrix satisfying |[Ω]ik| = |[Ω]il|, ∀i, k, l, such as the unitary DFT matrix or the unitary Hadamard matrix (see Lemma 16.1.3).

Although Schur-convex/concave functions do not form a partition of all L- dimensional functions, they do cover most of the frequently used global performance measures. An extensive account of Schur-convexity/concavity of common performance measures was provided in [6] and [9] (see also Exercise 16.4.3). For Schur-concave functions, a nice property is that the MIMO channel is fully diagonalized by the optimal transceiver, whereas for Schur-convex functions, the channel is diagonalized subject to a specific rotation Ω on the transmit symbols.

16.2.3 Nonlinear MIMO Transceiver Design

In this section, we introduce another paradigm of MIMO transceivers, consisting of a linear precoder and a nonlinear decision feedback equalizer (DFE). The DFE differs from the linear equalizer in that the DFE exploits the finite alphabet property of digital signals and recovers signals successively. Thus, the nonlinear decision feedback (DF) MIMO transceivers usually enjoy superior performance than the linear transceivers. Using majorization theory, the DF MIMO transceiver designs can also be unified, mainly based on the recent results in [11, 21, 22], into a general framework covering diverse design criteria, as was derived independently in [6, 23, 24]. Different from the linear transceiver designs that are based on additive majorization, the DF transceiver designs rely mainly on multiplicative majorization (see Section 16.1.4).

Figure 16.3: Nonlinear MIMO transceiver consisting of a linear precoder and a decision feedback equalizer (DFE).

Considering the MIMO channel in (16.15), we use a linear precoder F ∈ ℂN × L at the transmitter to generate the transmitted signal x = Fs from a symbol vector s satisfying E {ssH} = I. For simplicity, we assume that L ≤ rank(H). The receiver exploits, instead of a linear equalizer, a DFE that detects the symbols successively with the Lth symbol (sL) detected first and the first symbol (s1) detected last. As shown in Figure 16.3, a DFE consists of two components: a feed-forward filter GH ∈ ℂL × M applied to the received signal y, and a feedback filter B ∈ ℂL × L that is a strictly upper triangular matrix and feeds back the previously detected symbols. The block Q[·] represents the mapping from the “analog” estimated ŝi to the closest “digital” point in the signal constellation. Assuming no error propagation,14 the “analog” estimated ŝi can be written as

(16.29) |

where gi is the ith column of G and bij = [B]ij. Compactly, the estimated symbol vector can be written as

(16.30) |

Let fi be the ith column of F. The performance of the ith data stream can be measured by the MSE or the SINR as

MSEi≜E{|ˆsi − si|2} = |gHiHfi − 1|2 + L∑j = i + 1|gHiHfj − bij|2 + i − 1∑j = 1|gHiHfj|2 + ‖gi‖2SINRi≜desired componentundesired component = |gHiHfi|2∑Lj = i + 1|gHiHfj − bij|2 + ∑i − 1j = 1|gHiHfj|2 + ‖gi‖2.

Alternatively, the performance can also be measured by BERi ≈ φi(SINRi) with a decreasing function φi. Similar to the linear transceiver case, we consider that the system performance is measured by a global cost function of the MSEs f0({MSE}Li = 1) that is increasing in each argument. Then the nonlinear DF MIMO transceiver design is formulated as the following problem:

(16.31) |

where Tr(FFH) ≤ P denotes the transmit power constraint.

It is easily seen that to minimize MSEi, the DF coefficients should be bij=gHiHfj, 1 ≤ i ≤ j ≤ L, or, equivalently,

(16.32) |

where u(·) stands for keeping the strictly upper triangular entries of the matrix while setting the others zero. To obtain the optimal feed-forward filter, we let W ≜ HF be the effective channel, and denote by Wi ∈ ℂM × i the submatrix consisting of the first i columns of W and by wi the ith column of W. Then, with bij = gHiHfj, the feed-forward filter minimizing MSEi is given by [6, Sec. 4.3]

(16.33) |

In fact, there is a more computationally efficient expression of the optimal DFE given as follows.

Lemma 16.2.2. ([25]) Let the QR decomposition of the augmented matrix be

Wa≜[WIL](M + L) × L = QR

and partition Q into

Q = [ˉQQ_]

where Q̄ ∈ ℂM × L and Q ∈ ℂL × L. The optimal feed-forward and feedback matrices that minimize the MSˉEs are

(16.34) |

where DR is a diagonal matrix with the same diagonal elements as R. The resulting MSE matrix is diagonal:

E≜E[(ˆs − s)(ˆs − s)H] = D − 2R

By using the optimal DFE in (16.34), the MSE and the SINR at the ith data stream are related by

SINRi = 1MSEi − 1

which is the same as in the linear equalizer case. Therefore, we can focus w.l.o.g. on the MSE-based performance measures, which, according to Lemma 16.2.2, depend on the diagonal elements of R. The optimal precoder is then given by the solution to the following problem:

(16.35) |

This complicated optimization can be simplified by using multiplicative majorization.

Theorem 16.2.3. ( [6, Theorem 4.3]) Suppose that the cost function f0 : ℝL ↦ ℝ is increasing in each argument. Then, the optimal solution to (16.35) is given by

F⋆ = Vhdiag(√p)Ω

where

(i) Vh ∈ ℂN × L is a semi-unitary matrix with columns equal to the right singular vectors of matrix H corresponding to the L largest singular values in increasing order;

(ii) p∈ℝL + is the solution to the following power allocation problem:

minimizep,rf0(r − 21,…,r − 2L)subject to(r21,…,r2L)≺×(1 + p1γ1,…,1 + pLγL)p≥0,1Tp≤P |

(16.36) |

where {γi}Li = 1 are the L largest eigenvalues of HHH in decreasing order;

(iii) Ω ∈ ℂL × L is a unitary matrix such that the matrix R in the QR decomposition

[HF⋆IL] = QR

has diagonal elements {ri}Li = 1. To obtain Ω, it suffices to compute the generalized triangular decomposition (GTD) [11]

[HVhdiag(√p)IL] = QJRPHJ

and then set Ω = PJ.

Proof: The proof is involved, so we provide only a sketch of it and refer the interested reader to [6, Appendix 4.C] for the detailed proof.

Denote the diagonal elements of R by {ri}Li = 1, and the singular values of the effective channel W and the augmented matrix Wa by {σw,1}Li = 1 and {σwa,1}Li = 1 in decreasing order, respectively. One can easily see that

σwa,i = √1 + σ2w,i 1≤i≤L.

Consider the SVD F = Ufdiag(√p)Ω. By using Theorem 16.1.6 on the GTD, one can prove that there exists an Ω such that Wa = QR if and only if {r2i}≺× {σ2wa,i} [6, Lemma 4.9]. Therefore, the constraint

[HFIL] = QR

can be equivalently replaced by

(r21,…,r2L)≺×(σ2wa,1,…,σ2wa,L).

Next, by showing that

k∏i = 1σ2wa,i = k∏i = 1(1 + σ2w,i)≤k∏i = 1(1 + γipi), 1≤k≤L

where the equality holds if and only if Uf = Vh, one can conclude that the optimal F occurs when U f = Vh.

Theorem 16.2.3 shows the solution to the general problem with an arbitrary cost function has a nice structure. In fact, when the composite objective function

(16.37) |

is either Schur-convex or Schur-concave, the nonlinear DF transceiver design problem admits a simpler or even closed-form solution.

Corollary 16.2.2. ([6, Theorem 4.4]) Suppose that the cost function f0 : ℝL ↦ ℝ is increasing in each argument.

(i) if f0 ∘ exp is Schur-concave, then the optimal solution to (16.35) is given by

F⋆ = Vhdiag(√p)

where p is the solution to the following power allocation problem:

minimizepf0({(1 + γipi)})subject top≥0,1Tp≤P.

(ii) if f0 ∘ exp is Schur-convex, then the optimal solution to (16.35) is given by

F⋆ = Vhdiag(√p)Ω

where the power allocation p is given by

pi = (μ − γ − 1i) + , 1≤i≤L

with μ chosen to satisfy 1Tp = P, and Ω is a unitary matrix such that the QR decomposition

[HF⋆IL] = QR

yields R with equal diagonal elements.

It is interesting to relate the linear and nonlinear DF transceivers by the Schurconvexity/concavity of the cost function. From Lemma 16.1.5, f0 oexp being Schurconcave implies that f0 is Schur-concave, but not vice versa. From Lemma 16.1.4, if f0 is Schur-convex, then f0 ∘ exp is also Schur-convex, but not vice versa. The examples of the cost function for which f0 ∘ exp is either Schur-concave or Schurconvex were provided in [6] (see also Exercise 16.4.4 and a recent survey [26]).

A Measure of Correlation

Consider two n-dimensional random vectors x and y following the same family/class of distributions with zero means and covariance matrices Rx and Ry, respectively. One question arising in many practical scenarios is how to compare x and y in terms of the degree of correlation. Majorization provides a natural way to measure correlation of a random vector.

Definition 16.2.2. ([7, Sec. 4.1.2]) Let λ(Α) denote the eigenvalues of a positive semidefinite matrix A. Then, we say x is more correlated than y, or the covariance matrix Rx is more correlated than Ry, if λ(Rx) ≻ λ(Ry).

Note that comparing x and y (or equivalently Rx and Ry ) through the majorization ordering imposes an implicit constraint on Rx and Ry that requires ∑ni = 1λi(Rx) = ∑ni = 1λi(Ry), or equivalently, Tr(Rx) = Tr(Ry). This requirement is actually quite reasonable. If we consider E{|xi|2} as the “power” of the ith element of x, then Tr(Rx) = ∑ni = 1E{|xi|2} is the sum power of all elements of x. Therefore, the comparison is conducted in a fair sense that the sum power of the two vectors is equal. Nevertheless, Definition 16.2.2 can be generalized to the case where Tr(Rx) ≠ Tr(Ry) by using weak majorization.

From Example 16.1.1, the most uncorrelated covariance matrix has equal eigenvalues, whereas the most correlated covariance matrix has only one non-zero eigenvalue. In the next, we demonstrate through several examples how to use majorization theory along with Definition 16.2.2 to analyze the effect of correlation on communication systems.

Colored Noise in CDMA Systems

Consider the uplink of a single-cell synchronous CDMA system with K users and processing gain N similar to the one that has been considered in Section 16.2.1 but with colored noise. More exactly, with the received signal at the base station given by (16.8), the zero-mean noise n is now correlated with the covariance matrix Rn. In this case, the sum capacity of the CDMA system is given by [27]

(16.38) |

where S is the signature sequence matrix and P = diag {p1,… ,pK} contains the received power of each user. The maximum sum capacity is obtained as Copt = maxS∈S Csum, where S is defined in (16.13).

Denote the EVD of Rn Rn = UnΛnUHn with eigenvalues σ21,…,σ2N. Then, Copt can be characterized as follows.

Lemma 16.2.3. ([27, Lemma 2.2]) The maximum sum capacity of the synchronous CDMA system with colored noise is given by

(16.39) |

Proposition 16.2.1. Copt obtained in (16.39) is Schur-convex in σ2 ≜ (σ21,…,σ2N).

Proof: Let ϕ(σ2) = 12∑Ni = 1log(1 + λi/σ2i). Since g(xi) = log (1 + λi/xi) is a convex function and f(x) = 12∑Ni = 1xi is increasing and Schur-convex, it follows from Proposition 16.1.2 that ϕ(σ2) = f(g(σ21),…,g(σ2N)) is Schur-convex. Therefore, given σ2a≺σ2b, we have

Copt(σ2a) = maxS∈Sϕ(σ2a)≤maxS∈Sϕ(σ2b) = Copt(σ2b).

Proposition 16.2.1 indicates that the more correlated (according to Definition 16.2.2) the noise is, the higher the sum capacity could be. Intuitively, if one of the noise variances, say σ2N, is much larger than the rest, the users can avoid using signals in the direction of Rn corresponding to σ2N and benefit from a reduced average noise variance (since the sum of all variances keeps unchanged). Apparently, white noise with equal σ2i = σ2, i = 1,…, N, is one of the worst cases that lead to the minimum Copt.

Spatial Correlation in MISO Channels

A multiple-input single-output (MISO) channel usually arises in using multiple transmit antennas and a single receive antenna in a wireless link. Consider a block-flat-fading15 MISO channel with N transmit antennas. The channel model is given by

(16.40) |

where x ∈ ℂN is the transmitted signal, y ∈ ℂ is the received signal, the complex Gaussian noise n has zero mean and variance σ2, and the channel h ∈ ℂN is a circular symmetric Gaussian random vector with zero-mean and covariance matrix Rh, i.e., h ~ CN(0, Rh).

In MISO (as well as MIMO) channels, the transmit strategy is determined by the transmit covariance matrix Q = E {xxH}. Denote the EVD of Q by Q = UqΛqUHq with the diagonal matrix Λq = diag{p1,… ,pN}. Then, the eigenvectors of Q, i.e., the columns of Uq, can be regarded as the transmit directions, and the eigenvalue pi represents the power allocated to the ith data stream or eigenmode. Assuming that the receiver knows the channel perfectly and the transmitter uses a Gaussian codebook with zero mean and covariance matrix Q, the maximum average mutual information, also termed the ergodic capacity, of the MISO channel is given by [16]

(16.41) |

where γ is the signal-to-noise ratio, Q≜{Q:Q≥0,Tr(Q) = 1} represents the normalized transmit power constraint, and the expectation is taken over h.

The ergodic capacity depends on what kind of channel state information at the transmitter (CSIT) is available. In the following, we consider three types of CSIT:

• No CSIT. Neither h nor its statistics are known by the transmitter, and it is usually assumed that h ~ CN(0, I), i.e., Rh = I (see Section 16.2.5).

• Perfect CSIT. That is, h is perfectly known by the transmitter.

• Imperfect CSIT with covariance feedback. In this case, it is assumed that h ~ CN(0, Rh) with Rh known by the transmitter.

Denote the EVD of Rh Rh = UhΛhUHh with eigenvalues μ1 ≥ ⋯ ≥ μΝ sorted w.l.o.g. in decreasing order, and let w1,…,wN be standard exponentially iid random variables. In the case of no CSIT, the optimal transmit covariance matrix is given by Q = 1NI [16], which results in

(16.42) |

With perfect CSIT, the optimal Q is given by Q = hhH/ ‖h‖2 [16], leading to

(16.43) |

For imperfect CSIT with covariance feedback (i.e., Rh known), the optimal Q is given in the form Q = UhΛqUHh [28], so the ergodic capacity is obtained by

(16.44) |

where P ≜ {p : p ≥ 0, 1Tp = 1} is the power constraint set. The channel capacities of the three types of CSIT all depend on the eigenvalues of Rh, i.e., μ, which is exactly characterized by the following result.

Theorem 16.2.4. ( [29]) While CnoCIST(μ) and CpCSIT(μ) are both Schur-concave in μ, CcfCSIT(μ) is Schur-convex in μ.

Proof: The Schur-concavity of CnoCIST(μ) and CpCSIT(μ) follows readily from Corollary 16.1.6, since f(x) = log(1 + a∑Ni = 1xi) is a symmetric and concave function for a > 0 and xi > 0. The proof of the Schur-convexity of CcfCSIT(μ) is based on Theorem 16.1.2 but quite involved. We refer the interested reader to [29] for more details.

□

Theorem 16.2.4 completely characterizes the impact of correlation on the ergodic capacity of a MISO channel. To see this, assume that Tr(Rh) = N (for a fair comparison under Definition 16.2.2) and the correlation vector μ2 majorizes μ1, i.e., μ1 ≺ μ2. We define the fully correlated vector ψ = (N, 0,…, 0) that majorizes all other vectors, and the least correlated vector χ = (1, 1,…, 1) that is majorized by all other vectors. Then, according to Theorem 16.2.4, the impact of different types of CSIT and different levels of correlation on the MISO capacity is provided in the following inequality chain [29]:

CnoCSIT(ψ)≤CnoCSIT(μ2)≤CnoCSIT(μ1)≤CnoCSIT(χ) = CcfCSIT(χ)≤CcfCSIT(μ1)≤CcfCSIT(μ2)≤CcfCSIT(ψ) = CpCSIT(ψ)≤CpCSIT(μ2)≤CpCSIT(μ1)≤CpCSIT(χ). |

(16.45) |

Simply speaking, correlation helps in the covariance feedback case, but degrades the channel capacity when there is either perfect or no CSIT. Nevertheless, the more amount of CSIT is available, the better the performance could be.

The performance of MIMO communication systems depends, to a substantial extent, on the channel state information (CSI) available at both ends of the communication link. While CSI at the receiver (CSIR) is usually assumed to be perfect, CSI at the transmitter (CSIT) is often imperfect due to many practical issues. Therefore, when devising MIMO transmit strategies, the imperfectness of CSIT has to be considered, leading to the so-called robust designs. A common philosophy of robust designs is to achieve worst-case robustness, i.e., to guarantee the system performance in the worst channel [30]. In this section, we use majorization theory to prove that the uniform power allocation is the worst-case robust solution for two kinds of imperfect CSIT.

Deterministic Imperfect CSIT

Consider the MIMO channel model in (16.15), where the transmit strategy is given by the transmit covariance matrix Q. Indeed, assuming the transmit signal x is a Gaussian random vector with zero mean and covariance matrix Q, i.e., x ~ CN(0, Q), the mutual information is given by [16]

(16.46) |

If H is perfectly known by the transmitter, i.e., perfect CSIT, the channel capacity can be achieved by maximizing Ψ(Q, H) under the power constraint Q∈Q≜{Q:Q≥0, Tr(Q) = P}.

In practice, however, the accurate channel value is usually not available, but belongs to a known set of possible values, often called an uncertainty region. Since Ψ(Q, H) depends on H through RH = HHH, we can conveniently define an uncertainty region H as

(16.47) |

where the set RH could, for example, contain any kind of spectral (eigenvalue) constraints as

(16.48) |

where ℒRH denotes arbitrary eigenvalue constraints. Note that H defined in (16.47) and (16.48) is an isotropic set in the sense that for each H ∈ H we have HU ∈ H for any unitary matrix U.

Following the philosophy of worst-case robustness, the robust transmit strategy is obtained by optimizing Ψ(Q, H) in the worst channel within the uncertainty region H, thus resulting in a maximin problem

(16.49) |

The optimal value of this maximin problem is referred to as the compound capacity [31]. In the following, we show that the compound capacity is achieved by the uniform power allocation.

Theorem 16.2.5. ( [32, Theorem 1]) The optimal solution to (16.49) is Q* = PNI and the optimal value is

C(ℋ) = minH∈ℋlog det(I + PNHHH).

Proof: Denote the eigenvalues of Q by p1 ≥ •⋯ ≥ pN w.l.o.g. in decreasing order. From [31, Lemma 1], the optimal Q depends only on {pi} and thus the inner minimization of (16.49) is equivalent to

minimize{λi(RH)}∈ℒRHN∑i = 1log(1 + piλi(RH))

with λ1(RH) ≤ ⋯ ≤ λN(RH) in increasing order. Consider the function f(x) = ∑Ni = 1gi(xi) = ∑Ni = 1log(1 + aixi) with {ai} in increasing order. It is easy to verify that g′i(x) ≤ g′i+1(y) whenever x ≥ y. Thus, from Theorem 16.1.1, f (x) is a Schurconcave function, whose maximum is achieved by a uniform vector x. Under the power constraint ∑Ni = 1pi = P, it follows that

min{λi(RH)}∈ℒRHN∑i = 1log(1 + piλi(RH))≤min{λi(RH)}∈ℒRHN∑i = 1log(1 + PNλi(RH))

where the equality holds for the uniform power allocation.

□

The optimality of the uniform power allocation is actually not very surprising. Due to the symmetry of the problem, if the transmitter does not uniformly distribute power over the eigenvalues of Q, then the worst channel will align its highest singular value (or eigenvalue of RH ) to the lowest eigenvalue of Q. Therefore, to avoid such a situation and achieve the best performance in the worst channel, an appropriate way is to use equal power on all eigenvalues of Q, which is formally proved in Theorem 16.2.5.

Stochastic Imperfect CSIT

Tracking the instantaneous channel value may be difficult when the channel varies rapidly. The stochastic imperfect CSIT model assumes that the channel is a random quantity with its statistics such as mean or/and covariance known by the transmitter. Sometimes, even the channel statistics may not be perfectly known. The interests of this model would be on optimizing the average system performance using the channel statistics.

For simplicity, we consider the MISO channel in (16.40), where the channel h is a circular symmetric Gaussian random vector with zero-mean and covariance matrix Rh, i.e., h ~ CN(0, Rh). Mathematically, the channel can be expressed as

(16.50) |

where z ~ CN(0, I). Different from the covariance feedback case where Rh is assumed to be known by the transmitter (see Section 16.2.4), here we consider an extreme case where the transmitter does not even know exactly Rh. Instead, we assume that Rh ∈ Rh with

(16.51) |

where ℒRh denotes arbitrary constraints on the eigenvalues of Rh. In the case of no information on Rh, we have ℒRh = ℝN + . To combat with the possible bad channels, the robust transmit strategy should take into account the worst channel covariance, thus leading to the following maximin problem

(16.52) |

where Q≜{Q:Q≥0,Tr(Q) = P} and z ~ CN(0, I). The following result indicates that the uniform power allocation is again the robust solution.

Theorem 16.2.6. The optimal solution to (16.52) is Q* = PNI and the optimal value is

C(ℛh)minRh∈ℛhE[log(1 + PNN∑i = 1λi(Rh)wi)]

where w1, … ,WN are standard exponentially iid random variables.

Proof: Denote the eigenvalues of Q by p1 ≥ ⋯ ≥ pN w.l.o.g. in decreasing order. Considering that Q and Rh impose no constraint on the eigenvectors of Q and Rh, respectively, and that Uz has the same distribution as z for any unitary matrix U, the optimal Q should be a diagonal matrix depending on the eigenvalues {pi} (see, e.g., [28]) and thus (16.52) is equivalent to

(16.53) |

with wi = |zi|2, where zi is the ith element of z.

Given {pi} in decreasing order, the minimum of the inner minimization of (16.53) must be achieved with {λi(Rh)} in increasing order, otherwise a smaller objective value can be obtained by changing the order of {λi(Rh)}. Then, following the similar steps in the proof of Theorem 16.2.5, one can show that E{log(1 + ∑Ni = 1piλi(Rh)wi)} is a Schur-concave function with respect to (pi)Ni = 1. Hence, the maximum of (16.53) is achieved by a uniform power vector that is majorized by all other power vectors under the constraint ∑Ni = 1pi = P.

Another interesting problem is to investigate the worst channel correlation for all possible transmit strategies, which is given by the solution to the following minimax problem:

(16.54) |

Through the similar steps in the proof of Theorem 16.2.6, one can find that the solution to (16.54) is proportional to an identity matrix, i.e., Rh = αI with α > 0. This provides a robust explanation for the assumption that h ~ CN(0, I) in the case of no CSIT (see Section 16.2.4): Rh = I is the worst correlation among Rh = {Rh : Tr(Rh) = N} [29].

16.3 Conclusions and Further Readings

This chapter introduced majorization as a partial order relationship for real-valued vectors and described its main properties. This chapter also presented applications of majorization theory in proving inequalities and solving various optimization problems in the fields of signal processing and wireless communications. For a more comprehensive treatment of majorization theory and its applications, the readers are directed to Marshall and Olkins book [1]. Applications of majorization theory to signal processing and wireless communications are also described in the tutorials [6] and [7] .

Exercise 16.4.1. Schur-convexity of sums of functions.

a. Let ϕ(x) = ∑ni = 1gi(xi), where each gi is differentiable. Show that ϕ is Schur-convex on Dn if and only if

g′i(a)≥g′i + 1(b) whenever a≥b, i = 1,…,n − 1.

b. Let ϕ(x) = ∑ni = 1aig(xi), where g (x) is decreasing and convex, and 0 ≤ a1 ≤ ⋯ ≤ an. Show that ϕ is Schur-convex on Dn.

Exercise 16.4.2. Schur-convexity of products of functions.

a. Let g : I → ℝ+ be continuous on the interval I ⊆ ℝ. Show that ϕ(x) = ∏ni = 1g(xi) is (strictly) Schur-convex on In if and only if log g is (strictly) convex on I.

b. Show that ϕ(x) = ∏ni = 1Γ(xi), where Γ(x) = ∫∞0ux − 1e − udu denotes the Gamma function, is strictly Schur-convex on ℝn + + .

Exercise 16.4.3. Linear MIMO Transceiver.

a. Prove Corollary 16.2.1, which shows that when the cost function f0 is either Schur-concave or Schur-convex, the optimal linear MIMO transceiver admits an analytical structure.

b. Show that the following problem formulations can be rewritten as minimizing a Schur-concave cost function of MSEs:

• Minimizing f({MSEi}) = ∑Li = 1αiMSEi.16

• Minimizing f({MSEi}) = ∏Li = 1MSEαii.

• Maximizing f({SINRi}) = ∑Li = 1αiSINRi.

• Maximizing f({SINRi}) = ∏Li = 1SINRαii.

• Minimizing f({BERi}) = ∏Li = 1BERi.

c. Show that the following problem formulations can be rewritten as minimizing a Schur-convex cost function of MSEs:

• Minimizing f({MSEi}) = maxi{MSEi}.

• Maximizing f({SINRi}) = (∏Li = 1SINR − 1i) − 1.

• Maximizing f({SINRii}) = mini{SINRi}.

• Minimizing f({BERi}) = ∑Li = 1BERi.

• Minimizing f({BERi}) = maxi{BERi}.

Exercise 16.4.4. Nonlinear MIMO Transceiver.

a. Prove Corollary 16.2.2, which shows that the optimal nonlinear DF MIMO transceiver can also be analytically characterized if the composite cost function f0 ∘ exp is either Schur-concave or Schur-convex.

b. Show that the following problem formulations can be rewritten as minimizing a Schur-concave f0 ∘ exp of MSEs:

• Minimizing f({MSEi}) = ∏Li = 1MSEαii.

• Maximizing f({SINRi}) = ∑Li = 1αiSINRi.

Show that, in addition to all problem formulations in Exercise 16.4.3.c, the following ones can also be rewritten as minimizing a Schur-convex f0 ∘ exp of MSEs:

• Minimizing f({MSEi}) = ∑Li = 1MSEi.

• Minimizing f({MSEi}) = ∏Li = 1MSEi.

• Maximizing f({SINRi}) = ∏Li = 1SINRi.

[1] A. W. Marshall and I. Olkin, Inequalities: Theory of Majorization and Its Applications. New York: Academic Press, 1979.

[2] G. H. Hardy, J. E. Littlewood, and G. Pólya, Inequalities, 2nd ed. London and New York: Cambridge University Press, 1952.

[3] R. Bhatia, Matrix Analysis. New York: Springer-Verlag, 1997.

[4] R. A. Horn and C. R. Johnson, Matrix Analysis. New York: Cambridge University Press, 1985.

[5] T. W. Anderson, An Introduction to Multivariate Statistical Analysis, 3rd ed. Hoboken, NJ: Wiley, 2003.

[6] D. P. Palomar and Y. Jiang, “MIMO transceiver design via majorization theory,” Foundations and Trends in Communications and Information Theory, vol. 3, no. 4–5, pp. 331–551, 2006.

[7] E. A. Jorswieck and H. Boche, “Majorization and matrix-monotone funcstions in wireless communications,” Foundations and Trends in Communications and Information Theory, vol. 3, no. 6, pp. 553–701, Jul. 2006.

[8] P. Viswanath and V. Anantharam, “Optimal sequences and sum capacity of synchronous CDMA systems,” IEEE Trans. Inform. Theory, vol. 45, no. 6, pp. 1984–1993, Sep. 1999.

[9] D. P. Palomar, J. M. Cioffi, and M. A. Lagunas, “Joint Tx-Rx beamforming design for multicarrier MIMO channels: A unified framework for convex optimization,” IEEE Trans. Signal Process., vol. 51, no. 9, pp. 2381–2401, Sep. 2003.

[10] C. T. Mullis and R. A. Roberts, “Synthesis of minimum roundoff noise fixed point digital filters,” IEEE Trans. on Circuits and Systems, vol. CAS-23, no. 9, pp. 551–562, Sept. 1976.

[11] Y. Jiang, W. Hager, and J. Li, “The generalized triangular decomposition,” Mathematics of Computation, Nov. 2006.

[12] E. A. Jorswieck and H. Boche, “Outage probability in multiple antenna systems,” European Transactions on Telecommunications, vol. 18, no. 3, pp. 217–233, Apr. 2007.

[13] S. Verdú, Multiuser Detection. New York, NY: Cambridge University Press, 1998.

[14] S. Ulukus and R. D. Yates, “Iterative construction of optimum signature sequence sets in synchronous CDMA systems,” IEEE Trans. Inform. Theory, vol. 47, no. 5, pp. 1989–1998, Jul. 2001.

[15] C. Rose, S. Ulukus, and R. D. Yates, “Wireless systems and interference avoidance,” IEEE Trans. Wireless Commun., vol. 1, no. 3, pp. 415–428, Jul. 2002.

[16] I. E. Telatar, “Capacity of multi-antenna Gaussian channels,” European Trans. Telecommun., vol. 10, no. 6, pp. 585–595, Nov.-Dec. 1999.

[17] D. P. Palomar, M. A. Lagunas, and J. M. Cioffi, “Optimum linear joint transmit-receive processing for MIMO channels with QoS constraints,” IEEE Trans. Signal Process., vol. 52, no. 5, pp. 1179–1197, May 2004.

[18] L. Zheng and D. N. C. Tse, “Diversity and multiplexing: A fundamental tradeoff in multiple-antenna channels,” IEEE Trans. Inform. Theory, vol. 49, no. 5, pp. 1073–1096, May 2003.

[19] S. M. Kay, Fundamentals of Statistical Signal Processing: Estimation Theory. Englewood Cliffs, NJ, USA: Prentice-Hall, 1993.

[20] S. Boyd and L. Vandenberghe, Convex Optimization. Cambridge, U.K.: Cambridge University Press, 2004.

[21] Y. Jiang, W. Hager, and J. Li, “The geometric mean decomposition,” Linear Algebra and Its Applications, vol. 396, pp. 373–384, Feb. 2005.

[22] Y. Jiang, W. Hager, and J. Li, “Tunable channel decomposition for MIMO communications using channel state information,” IEEE Trans. Signal Process., vol. 54, no. 11, pp. 4405–4418, Nov. 2006.

[23] F. Xu, T. N. Davidson, J. K. Zhang, and K. M. Wong, “Design of block transceivers with decision feedback detection,” IEEE Trans. Signal Process., vol. 54, no. 3, pp. 965–978, Mar. 2006.

[24] A. A. D’Amico, “Tomlinson-Harashima precoding in MIMO systems: A unified approach to transceiver optimization based on multiplicative schurconvexity,” IEEE Trans. Signal Process., vol. 56, no. 8, pp. 3662–3677, Aug. 2008.

[25] B. Hassibi, “A fast square-root implementation for BLAST,” in Proc. of Thirty-Fourth Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, Nov. 2000.

[26] P. P. Vaidyanathan, S.-M. Phoong, and Y.-P. Lin, Signal Processing and Optimization for Transceiver Systems. New York, NY: Cambridge University Press, 2010.

[27] P. Viswanath and V. Anantharam, “Optimal sequences for CDMA under colored noise: A Schur-saddle function property,” IEEE Trans. Inform. Theory, vol. 48, no. 6, pp. 1295–1318, Jun. 2002.

[28] S. A. Jafar and A. Goldsmith, “Transmitter optimization and optimality of beamforming for multiple antenna systems,” IEEE Trans. Wireless Commun., vol. 3, no. 4, pp. 1165–1175, Jul. 2004.

[29] E. A. Jorswieck and H. Boche, “Optimal transmission strategies and impact of correlation in multiantenna systems with different types of channel state information,” IEEE Trans. Signal Process., vol. 52, no. 12, pp. 3440–3453, Dec. 2004.

[30] J. Wang and D. P. Palomar, “Worst-case robust MIMO transmission with imperfect channel knowledge,” IEEE Trans. Signal Process., vol. 57, no. 8, pp. 3086–3100, Aug. 2009.

[31] A. Lapidoth and P. Narayan, “Reliable communication under channel uncertainty,” IEEE Trans. Inform. Theory, vol. 44, no. 6, pp. 2148–2177, Oct. 1998.

[32] D. P. Palomar, J. M. Cioffi, and M. A. Lagunas, “Uniform power allocation in MIMO channels: A game-theoretic approach,” IEEE Trans. Inform. Theory, vol. 49, no. 7, pp. 1707–1727, Jul. 2003.

1The majorization ordering given in Definition 16.1.2 is also called additive majorization, to distinguish it from multiplicative majorization (or log-majorization) introduced in Section 16.1.4.

2A square matrix P is said to be stochastic if either its rows or columns are probability vectors, i.e., if its elements are all nonnegative and either the rows or the columns sums are one. If both the rows and columns are probability vectors, then the matrix is called doubly stochastic. Stochastic matrices can be considered representations of the transition probabilities of a finite Markov chain.

3The permutation matrices are doubly stochastic and, in fact, the convex hull of the permutation matrices coincides with the set of doubly stochastic matrices [1, 2].

4A function is said to be symmetric if its arguments can be arbitrarily permuted without changing the function value.

5A function f : χ → ℝ is convex if χ is a convex set and for any x,y ∈ χ and 0 ≤ α ≤ 1, f (αx + (1 − α) y) ≤ αf (x) + (1 − α) f (y). f is concave if −f is convex.

6A function f : χ → ℝ is quasi-convex if χ is a convex set and for any x,y ∈ χ and 0 ≤ α ≤ 1, f (αx + (1 − α)y) ≤ max{f (x), f (y)}. A convex function is also quasi-convex, but the converse is not true.

7Indeed, using the language of group theory, we say that the groups (ℝ, +) and (ℝ+, ×) are isomorphic since there is a bijection function exp : ℝ → ℝ+ such that exp(x+y) = exp(x) × exp(y) for ∀ x, y ∈ ℝ.

8X1 ,…,Xn are exchangeable random variables if the distribution of Χπ(1),…, Xπ(n) does not depend on the permutation π. In other words, the joint distribution of X1,…, Xn is invariant under permutations of its arguments. For example, independent and identically distributed random variables are exchangeable.

9If the noise is not white, say with covariance matrix Rn, one can always whiten it by y̅ = R − 1/2ny = ˉHx + ˉn, where ˉH = R − 1/2nH is the equivalent channel.

10This kind of improvement is often called the multiplexing gain [18].

11The increasingness of f is a mild and reasonable assumption: if the performance of one stream improves, the global performance should improve too.

12≻w denotes weak supermajorization (see Definition 16.1.3).

13In practice, most cost functions are minimized when the arguments are in a specific ordering (if not, one can always use instead the function f̃0(x) = minp∈Ƥ f0(Px), where Ƥ is the set of all permutation matrices) and, hence, the decreasing order can be taken without loss of generality.

14Error propagation means that if the detection is erroneous, it may cause more errors in the subsequent detections. By using powerful coding techniques, the influence of error propagation can be made negligible.

15Block flat-fading means that the channel keeps unchanged for a block of T symbols, and then the channel changes to an uncorrelated channel realization.

16Assume w.l.o.g. that 0 ≤ α1 ≤ ⋯ ≤ αL.