We have introduced a basic system for facial expression. If you are really interested in this topic, you may want to read this section for more guidance on how to improve the performance of the system. In this section, we will introduce you to compiling the opencv_contrib module, the Kaggle facial expression dataset, and the k-cross validation approach. We will also give you some suggestions on how to get better feature extraction.

In this section, we will introduce the process for compiling opencv_contrib in Linux-based systems. If you use Windows, you can use the Cmake GUI with the same options.

First, clone the opencv repository to your local machine:

git clone https://github.com/Itseez/opencv.git --depth=1

Second, clone the opencv_contrib repository to your local machine:

git clone https://github.com/Itseez/opencv_contrib --depth=1

Change directory to the opencv folder and make a build directory:

cd opencv mkdir build cd build

Build OpenCV from source with opencv_contrib support. You should change OPENCV_EXTRA_MODULES_PATH to the location of opencv_contrib on your machine:

cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/ .. make -j4 make install

Kaggle is a great community of data scientists. There are many competitions hosted by Kaggle. In 2013, there was a facial expression recognition challenge.

The dataset consists of 48x48 pixel grayscale images of faces. There are 28,709 training samples, 3,589 public test images and 3,589 images for final test. The dataset contains seven expressions (Anger, Disgust, Fear, Happiness, Sadness, Surprise and Neutral). The winner achieved a score of 69.769 %. This dataset is huge so we think that our basic system may not work out of the box. We believe that you should try to improve the performance of the system if you want to use this dataset.

In our facial expression system, we use face detection as a pre-processing step to extract the face region. However, face detection is prone to misalignment, hence, feature extraction may not be reliable. In recent years, one of the most common approaches has been the usage of facial landmarks. In this kind of method, the facial landmarks are detected and used to align the face region. Many researchers use facial landmarks to extract the facial components such as the eyes, mouth, and so on, and do feature extractions separately.

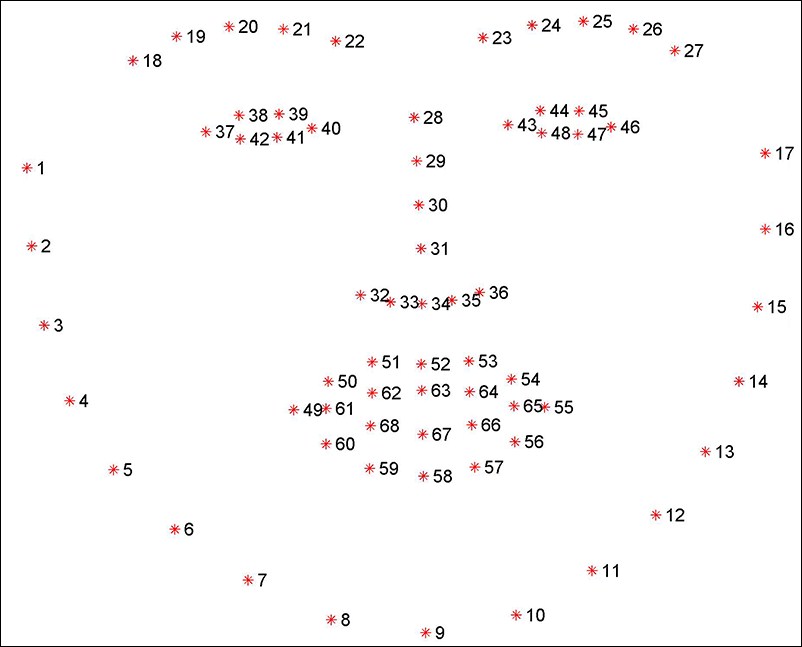

Facial landmarks are predefined locations of facial components. The figure below shows an example of a 68 points system from the iBUG group (http://ibug.doc.ic.ac.uk/resources/facial-point-annotations)

An example of a 68 landmarks points system from the iBUG group

There are several ways to detect facial landmarks in a face region. We will give you a few solutions so that you can start your project easily

- Active Shape Model: This is one of the most common approaches to this problem. You may find the following library useful:

- Face Alignment by Explicit Regression by Cao et al: This is one of the latest works on facial landmarks. This system is very efficient and highly accurate. You can find an open source implementation at the following hyperlink: https://github.com/soundsilence/FaceAlignment

You can use facial landmarks in many ways. We will give you some guides:

- You can use facial landmarks to align the face region to a common standard and extract the features vectors as in our basic facial expression system.

- You can extract features vectors in different facial components such as eyes and mouths separately and combine everything in one feature vector for classification.

- You can use the location of facial landmarks as a feature vector and ignore the texture in the image.

- You can build classification models for each facial component and combine the prediction in a weighted manner.

Feature extraction is one of the most important parts of facial expression. It is better to choose the right feature for your problem. In our implementation, we have only used a few features in OpenCV. We recommend that you try every possible feature in OpenCV. Here is the list of supported features in Open CV: BRIEF, BRISK, FREAK, ORB, SIFT, SURF, KAZE, AKAZE, FAST, MSER, and STAR.

There are other great features in the community that might be suitable for your problem, such as LBP, Gabor, HOG, and so on.

K-fold cross validation is a common technique for estimating the performance of a classifier. Given a training set, we will divide it into k partitions. For each fold i of k experiments, we will train the classifier using all the samples that do not belong to fold i and use the samples in fold i to test the classifier.

The advantage of k-fold cross validation is that all the examples in the dataset are eventually used for training and validation.

It is important to divide the original dataset into the training set and the testing set. Then, the training set will be used for k-fold cross validation and the testing set will be used for the final test.

Cross validation combines the prediction error of each experiment and derives a more accurate estimate of the model. It is very useful, especially in cases where we don't have much data for training. Despite a high computation time, using a complex feature is a great idea if you want to improve the overall performance of the system.