15 System Approach to Simulations for Training: Instruction, Technology, and Process Engineering

Sae Schatz, Denise Nicholson and Rhianon Dolletski

15.1 Introduction

Parallel and distributed simulation (PADS) emerged in the early 1980s, and over the past three decades, PADS systems have matured into vital technologies, particularly for military applications. This chapter provides a basic overview of one specific PADS application area: distributed real-time simulation-based training (SBT). The chapter opens with a brief description of distributed real-time SBT and its development. It then lists the recurrent challenges faced by such systems with respect to instructional best practices, technology, and use.

15.1.1 What Is Distributed Real-Time SBT?

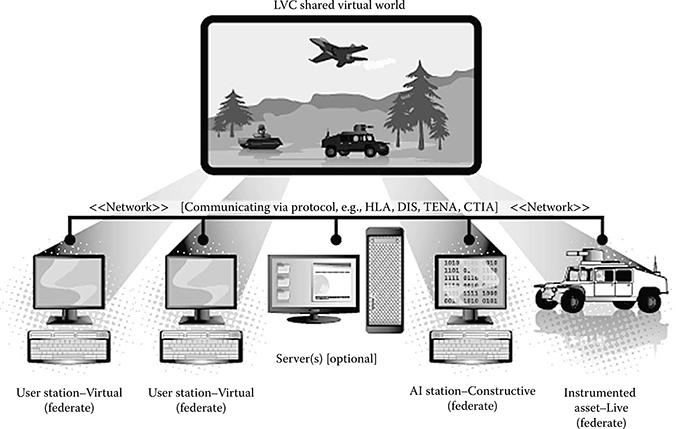

We begin by clarifying the phrase “distributed real-time SBT.” Consider each key word, in turn. First, distributed simulations are networks of geographically dispersed simulators (often called “federates”) that execute a single overall model, or more colloquially, that share a single “place.” Next, real-time simulations are those that advance at the same rate as actual time [1]. Finally, training simulations are instructional tools that employ training strategies (such as scenario-based training) and represent a problem-solving context to facilitate guided practice [2,3]. Thus, for this chapter, consider the following definition (see Figure 15.1 for an illustration):

Distributed real-time SBT involves two or more geographically distributed computer-based simulations that are interconnected through a network and track the passing of real time; they are used concurrently by multiple trainees, whose individual inputs affect the overall shared environment, and they include instructionally supported, sufficiently realistic problem-solving environments in which trainees can learn or practice their knowledge, skills, and attitudes.
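The phrase "track the passing of real time" can be made concrete with a small example of how a single federate might pace itself against the wall clock. This is a minimal sketch, not a description of any particular SBT system. It assumes a fixed simulation time step, and the names `run_real_time` and `step_fn` are hypothetical. The loop advances simulated time one step at a time. Whenever it gets ahead of real time, it sleeps. It counts the steps that overran their real-time budget.

```python
import time
from typing import Callable, Tuple


def run_real_time(step_fn: Callable[[float, float], None],
                  dt: float, steps: int) -> Tuple[float, float, int]:
    """Hypothetical pacing loop: advance a model in fixed steps of dt simulated
    seconds so that simulated time never runs ahead of the wall clock.

    Returns (simulated time reached, wall-clock seconds elapsed, overrun count).
    """
    start = time.monotonic()
    sim_time = 0.0
    overruns = 0
    for _ in range(steps):
        step_fn(sim_time, dt)            # advance the model by one step
        sim_time += dt
        # Wall-clock instant at which simulated time should equal sim_time.
        remaining = (start + sim_time) - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)        # ahead of real time: wait
        else:
            overruns += 1                # step took longer than dt of real time
    return sim_time, time.monotonic() - start, overruns
```

For example, `run_real_time(lambda t, dt: None, 0.01, 20)` should report about 0.2 s of simulated time and at least about 0.2 s of elapsed wall-clock time. A real federate would also need a policy for overruns, such as logging them or shedding work. Such a policy matters when many federates must stay in step with one another.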

Distributed real-time SBT is considered a primary enabling technology for the U.S. military. It supports Joint (i.e., across military branches) and interagency training and mission rehearsal, development and testing of new tactics and techniques, and assessment of personnel skills [4]. In turn, these capabilities reduce risks, save lives and money, and engender increased operational capabilities. Section 15.2 offers a sketch of the historic milestones that have led to the development of distributed real-time SBT and contributed to its widespread use within the Defense sector.

FIGURE 15.1 Illustration of a generic live, virtual, and constructive (LVC) distributed real-time simulation-based training system.

15.2 Brief History of Distributed SBT

Simulation has existed for millennia. As early as 2500 BC, ancient Egyptians used figurines to simulate warring factions. However, “modern” simulation only began around the turn of the past century with the invention of mechanical flight simulators. These started appearing around 1910 and are often considered the forerunners of contemporary simulation in general. The best-known early flight simulator, called the Link Trainer (or colloquially, the “Blue Box”), was patented in 1930 [5]. In the years that followed, thousands of Blue Boxes were put into service, particularly by the U.S. government in its effort to train military aviators during World War II.

In general, World War II served as a major catalyst for modern modeling and simulation (M&S). In the United States, the war encouraged the federal government to release unprecedented funding for research, a significant portion of which went to advancing computer development as well as early M&S. The war effort also created the need to analyze large-volume data sets and to perform real-time operations. These computational needs, coupled with the flood of federal money, created a frenzy of competition in computer, modeling, and simulation sciences [6].

The research led to a series of technology breakthroughs throughout the 1940s and 1950s [7], and use of simulation swelled. NASA and the U.S. military, in particular, developed large complex simulators for training purposes [8]. The growth of M&S continued throughout the 1960s, and, by the 1970s, the combination of less-expensive computing technology and its improved effectiveness ushered in an era of widespread simulation use—particularly within the Defense training community.

A new outlook on training also began to surface. Before the 1970s, SBT had been primarily applied to individual tasks and viewed as substitution training (i.e., a substitution for real-life training used for cost, safety, or other purely logistical reasons). However, during the 1970s, the training community began to value instructional simulation beyond mere substitution [9]. New efforts focused on collective training, the use of realistic and measurable training requirements, and the development of expertise.

Spurred, in part, by the demand for collective and improved training, military investigators began exploring distributed simulation technology. In 1983, the Defense Advanced Research Projects Agency (DARPA) started development of the futuristic Simulation Network (SIMNET). This effort, championed by Jack A. Thorpe, promised to provide affordable, networked SBT for U.S. warfighters. In the 1980s, the idea of networked simulators was viewed as high-risk research at best, or as an impossible pipedream at worst! However, history now reveals that SIMNET, and the notion of distributed simulation that it represented, were major leaps forward for SBT [10].

By the 1990s, SIMNET had given birth to the era of networked real-time SBT, and although still considered highly experimental, the military started sponsoring more distributed SBT efforts. In addition to SIMNET, the Aggregate-Level Simulation Protocol (ALSP) emerged as another early distributed SBT success [11], and around the same time, software developers released the Distributed Interactive Simulation (DIS), a more mature version of the original SIMNET design [12]. Both ALSP and DIS were intended to support flexible exchange of data among federated military simulation systems. However, these protocols failed to meet emerging requirements.

Therefore, to address a wider set of needs, military M&S leaders developed the High Level Architecture (HLA) protocol. Released in 1996, HLA combined the best features of ALSP and DIS, while also including support for the analysis and acquisition communities [13]. Yet, technologists began to “perceive HLA as a ‘jack of all trades, but master of none’ ” [12, p. 8], and various user communities started developing their own distinct protocols, including the Extensible Modeling and Simulation Framework (XMSF), Test and Training Enabling Architecture (TENA), Virtual Reality Transfer Protocol (VRTP), Common Training Instrumentation Architecture (CTIA), Distributed Worlds Transfer and Communication Protocol (DWTP), and Multi-user 3D Protocol (Mu3D) (for an overview of these middleware technologies, see the work by Richbourg and Lutz [12]).

Meanwhile, as the development community advanced the technological capabilities of distributed simulation, the training community was identifying new opportunities to improve collective training. Throughout the 1980s, behavioral scientists worked to systematically enhance SBT (in general). Their work included developing Instructional Systems Design (ISD) approaches to simulation content creation, methodically pairing simulation features with desired training outcomes, analyzing instructional strategies for simulation, creating performance assessment techniques, and designing feedback delivery mechanisms, to name just a few topics [14].

Naturally, these instructional advances were also applied to distributed SBT. In addition, distributed systems posed their own challenges for the training research community, such as how to build team cohesion among distributed personnel or deliver feedback to geographically distributed trainees.

As the technological and instructional R&D communities created leap-ahead advancements in distributed SBT throughout the 1980s and 1990s, the administrative communities debated and refined the employment of distributed systems. Notably, the Defense Modeling and Simulation Office (DMSO; later renamed the Modeling and Simulation Coordination Office [MSCO]) was established in late 1991 to solve coordination gaps among the Services. Before DMSO, military M&S efforts were described as “out of control” with “no identified POC (point of contact) for M&S nor any real focus to the dollars being spent in this area” [15, para. 4]. As Col (ret.) Fitzsimmons, the first DMSO Director, illustrates,

. . . I placed four toilet paper cardboard rolls on [the] table and said that if you look down each roll you see what the individual Services are doing . . . basically good stuff. However there is no way to work across the Services to coordinate activities (such as reduce development costs, share ideas etc). Duplication of efforts was obvious from even our short look into all this. I then pointed out that the main mission of the Services was to provide “interoperable” force packages . . . which meant there needed to be a way to do joint training, which also meant there needed to be interoperable training systems. Bottom line was that we felt there needed to be someone guiding development of M&S from the perspective of joint interoperable training and helping coordinate reuse. . .

Fitzsimmons [15, para. 7]

Thus, DMSO developed into a policy-level office focused on reuse and Joint interoperability for training. Early in DMSO’s existence, its leaders recognized the benefits of integrating live, virtual, and constructive (LVC) simulations through distributed technologies, and LVC became a cornerstone of DMSO efforts [15]. DMSO also championed distributed simulation verification, validation, and accreditation (VV&A) efforts, publishing standards and policies for the Defense community, as well as guiding M&S policy through return-on-investment analyses.

Looking back upon the emergence of distributed real-time SBT, it becomes clear that the military community pioneered this discipline. More specifically, military technologists, training scientists, and visionary policy leaders together made distributed SBT a functional, effective, and usable tool for the Defense community. Yet, despite widespread use of distributed SBT for military training, it remains a fairly nascent capability. In Section 15.3, we outline various issues commonly associated with distributed real-time SBT.

15.3 Persistent Challenges

Over the past 30 years, the pipedream of distributed real-time simulation has transformed into a fundamental training technology; still, challenges persist. These include long-standing technology issues, questions regarding how best to attain the instructional goals of distributed simulation, and discussions on how best to employ distributed real-time SBT.

15.3.1 Interoperability

Interoperability concerns the interlinking of the many diverse systems involved with distributed simulation. In other words, an interoperable simulation must be able to both pass and interpret data among its federates [16]. Naturally, interoperability was among the first issues distributed SBT developers confronted, and, although great advances have been made, significant technological gaps remain.

15.3.1.1 Software Protocols

Generally speaking, many interoperability gaps result from limitations found in the software protocols used to communicate data among interlinked simulations. Common protocol issues include the following:

Scalability limitations

Challenges regarding time synchronization

Lack of interoperability among different protocols

Lack of true plug-and-play capabilities

Lack of support for semantic interoperability

DIS and HLA remain the most common interoperability protocols; yet, they still only achieve moderate ratings of practical relevance: “(3.5 and 3.4 respectively [out of 5.0]), a value which is relatively high, but might be expected to be even higher considering that both standards have been on the market for more than 10 years (HLA) or 15 years (DIS)” [17]. Both DIS and HLA are most notably affected by scalability, plug-and-play, and semantic interoperability problems [12].

More recent protocols attempt to address the difficulties of DIS and HLA; however, the introduction of new protocols, ironically, contributes to another significant challenge—lack of interoperability among protocols. To use simulations that employ different protocols, developers must use special bridges or gateways, which introduce “increased risk, complexity, cost, level of effort, and preparation time into the event” [12, pp. 8–9]. Plus, the ability to reuse models and applications across different protocols is limited.

To help address these issues, the MSCO recently completed a large-scale effort to identify the best way forward for interoperability. The Live Virtual Constructive Architecture Roadmap (LVCAR) study examined technical architectures, business models, and standards and then considered various strategies for improving the state of simulation interoperability [18]. The study produced 13 documents, including an extensive main report and several companion papers that were delivered in 2008. Although formal next steps have not yet been announced, the results of this effort will undoubtedly shape MSCO policy in the years to come, directly affecting which protocols are developed and/or used.
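A small sketch can make concrete the bridges or gateways described in this subsection, and why they add complexity. The two message formats here (`EntityStateA`, `EntityStateB`) and the `Gateway` class are hypothetical. They are not actual DIS PDUs or HLA object classes. The point is that a gateway must reconcile both syntax (field names, types, identifiers) and semantics (units and conventions). Each of these mappings is a place where errors can creep in.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class EntityStateA:
    """Hypothetical protocol A: string IDs, position in metres, heading in degrees."""
    entity_id: str
    x_m: float
    y_m: float
    heading_deg: float


@dataclass
class EntityStateB:
    """Hypothetical protocol B: integer IDs, position in feet, heading in radians."""
    uid: int
    x_ft: float
    y_ft: float
    heading_rad: float


FEET_PER_METRE = 1 / 0.3048  # international foot is defined as 0.3048 m


class Gateway:
    """Hypothetical gateway translating protocol-A updates into protocol-B updates."""

    def __init__(self) -> None:
        self._id_map: Dict[str, int] = {}

    def _map_id(self, entity_id: str) -> int:
        # Give each A-side entity a stable integer ID on the B side.
        if entity_id not in self._id_map:
            self._id_map[entity_id] = len(self._id_map) + 1
        return self._id_map[entity_id]

    def a_to_b(self, msg: EntityStateA) -> EntityStateB:
        # Syntactic mapping (names, types, IDs) plus semantic mapping (units).
        return EntityStateB(
            uid=self._map_id(msg.entity_id),
            x_ft=msg.x_m * FEET_PER_METRE,
            y_ft=msg.y_m * FEET_PER_METRE,
            heading_rad=math.radians(msg.heading_deg),
        )
```

Suppose the unit conversion were omitted from `a_to_b`. The gateway would still emit well-formed protocol-B messages, but every entity would appear at the wrong position and heading. This gap between exchanging data and interpreting it consistently is what semantic interoperability refers to. A real gateway would also add latency and need its own maintenance and testing.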

15.3.1.2 Domain Architectures

At the component level, various domain architectures have been established to maximize the potential for interoperability of simulations. Such frameworks attempt to create common software platforms for use across the (mainly government) simulation community. For example, created in the early 1990s, the Joint Simulation System (JSIMS) and One Semi-Automated Forces (OneSAF) systems aim to provide common architectures for synthetic environments and computer generated forces, respectively.

New components are commonly added to government domain architectures, and as a result, these packages are routinely upgraded. Regrettably, many simulation practitioners fail to upgrade their simulations, and now a hodgepodge of versions exists across the user community. Moreover, different versions of an architecture do not necessarily support federation. In other words, the variety of versions actually creates a hindrance to interoperability [19].

No clear solution yet exists to this challenge. However, unifying agencies, such as the Simulation Interoperability Standards Organization (SISO), attempt to encourage more standardized architecture use throughout the M&S community. SISO, whose roots can be traced back to SIMNET, is an international organization that hosts regular meetings as well as annual Simulation Interoperability Workshops. SISO also maintains interoperability standards and common component packages, such as SEDRIS, an enabling technology that supports the representation of environmental data and the interchange of environmental data sets among distributed simulation systems [20].

Agencies such as SISO can help remove some barriers to interoperability and promote more homogeneous use of common domain architectures. However, for change to truly occur, user communities must participate in standards activities and commit to building a more cohesive sense of community among distributed SBT practitioners [18].

15.3.1.3 Fair Play

Finally, a range of other interoperability issues can be roughly grouped under the concept of “fair play.” Fair play means ensuring that no trainee has an unfair advantage because of technical issues outside of the training, such as improved graphics giving an undue visual advantage. Fair play issues often involve time synchronization or model composability.

Achieving optimal time synchronization (sometimes called “time coherence”) is a complicated, ongoing struggle for software developers. In brief, time synchronization concerns controlling the timing of simulation events so that they are reflected in the proper order at each participating federate [21]. As of the penning of this chapter, no clear mitigation strategy has been established to overcome distributed synchronization issues; however, investigators continue to search for a solution.
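A sketch of conservative synchronization, one classical family of techniques from the PADS literature, makes the problem of event ordering concrete. Optimistic approaches such as Time Warp are another family. The `ConservativeFederate` class is hypothetical. It assumes that each sender transmits messages in non-decreasing timestamp order over a reliable FIFO channel. A message without a payload acts as a "null message": it carries no event and only promises that no earlier-timestamped events will follow. This is a building block, not a resolution of the open problems noted above. For instance, a federate waiting on a silent sender simply stalls.

```python
import heapq


class ConservativeFederate:
    """Hypothetical receiver that delivers timestamped events in timestamp
    order, releasing an event only when no sender can still deliver an
    event with a smaller timestamp."""

    def __init__(self, senders):
        # Per-sender bound: that sender will send nothing timestamped earlier.
        self.clock = {s: 0.0 for s in senders}
        self.pending = []   # min-heap of (timestamp, seq, sender, payload)
        self._seq = 0       # tie-breaker: equal timestamps keep arrival order

    def receive(self, sender, timestamp, payload=None):
        if timestamp < self.clock[sender]:
            raise ValueError("sender violated non-decreasing timestamp order")
        self.clock[sender] = timestamp
        if payload is not None:  # payload None means a null message
            heapq.heappush(self.pending, (timestamp, self._seq, sender, payload))
            self._seq += 1

    def safe_time(self):
        # Smallest bound across all senders.
        return min(self.clock.values())

    def deliver(self):
        """Pop and return every buffered event that is now safe to process."""
        out = []
        bound = self.safe_time()
        while self.pending and self.pending[0][0] <= bound:
            ts, _, sender, payload = heapq.heappop(self.pending)
            out.append((ts, sender, payload))
        return out
```

In a short trace with senders `"A"` and `"B"`, an event from A at time 5.0 is held back until B's clock also reaches at least 5.0. B's event at 3.0 is therefore delivered first, even though it arrived later. The waiting is what guarantees correct ordering. It is also why conservative schemes can run slowly when some federates send messages infrequently.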

Model composability, another as-yet unsolved technological conundrum, is concerned with using the data models of a simulation to effectively represent the common virtual environment in which the distributed participants interact [22]. When simply stated, this challenge seems manageable, but the complexity becomes apparent once one considers the array of intricate, heterogeneous systems—often LVC—that must be capable of expressing the same environment, agents, and behaviors. As with synchronization challenges, no clear resolution has yet emerged, and researchers continue to publish extensively, seeking a more optimal solution.

15.3.2 Fidelity

Since the early days of simulation, the M&S community has debated the necessary degree of fidelity. Originally, many practitioners believed that the more physical fidelity a simulator offered, the better its learning environment became. Toward that end, early SBT efforts often focused on perfecting the realism of the simulator, so as to mimic the real world as closely as possible. Consider this quotation describing (but not necessarily condoning) early views on simulation:

The more like the real-world counterpart, the greater is the confidence that performance in the simulator will be equivalent to operational performance and, in the case of training, the greater is the assurance that the simulator will be capable of supporting the learning of the relevant skills . . . Designing a simulator to realistically and comprehensively duplicate a real-world item of equipment or system is a matter of achieving physical and functional correspondence. The characteristics of the human participant can be largely ignored.

National Research Council [23, p. 27]

The idea of selected fidelity was articulated in the late 1980s. It suggests that individual stimuli within a simulation can be more or less realistic (depending on the specific task) and still support effective training [24]. Later research led to the notion that psychological fidelity was more important than physical fidelity. With psychological fidelity, simulations could be successful without high physical fidelity, so long as the overall fidelity configuration supported the educational goals of the system (e.g., [2,23,25,26]).

Today, effective simulators come in an array of different fidelity levels, and social scientists better understand (although not completely) how to use more limited degrees of fidelity appropriately. Still, the idea that “more fidelity is better” remains entrenched among many practitioners and simulation users, as a recent U.S. Army Research Institute survey discovered [27].

15.3.2.1 Expanding Fidelity Into New Domains

In addition to meeting basic fidelity constraints, new requirements call for M&S capabilities in novel application domains. For instance, the U.S. Marine Corps has recently embarked on an effort to deliver pilot-quality SBT to infantry personnel:

Today, there are cockpit trainers that are so immersive—for both pilot training and evaluation—that the Services and the Federal Aviation Administration (FAA) allow their substitution for much of the actual flying syllabus. Unfortunately, this level of maturity has not been reached for immersive small unit infantry training which necessarily includes an almost limitless variety of localities, environments, and threats.

NRAC [28, p. 58]

Other Defense simulation goals are concerned with replicating the stress, cognitive load, and emotional fatigue found in real-life combat situations. Still others aim to refine human social, cultural, and behavioral models to the point where agents act realistically, down to their body language [29]. These new requirements challenge developers, who must extend the supporting technologies. Even more, they challenge instructional and behavioral scientists, who must define the features of each new domain and determine what levels of fidelity support their instruction.

The Defense sector has apportioned substantial resources to meet these challenges, from both the technological and the behavioral science perspectives. For example, U.S. Joint Forces Command (USJFCOM) recently began the Future Immersive Training Environment (FITE) effort, which seeks to deliver highly realistic infantry training to ensure that the first combat action of personnel is no more difficult or stressful than their last simulated mission. As of mid-2010, FITE investigators had successfully demonstrated a wearable, dismounted immersive simulation system (see Figure 15.2). The body-worn system provides interconnectivity among users, who interact with a virtual environment through synchronized visual, auditory, olfactory, and even haptic events. If shot, the participant even feels a slight electrical charge so that they know they are “injured” or “dead” [30].

Other military programs seek to more faithfully model humans. For instance, the Human Social Culture Behavior (HSCB) initiative is a Defense-wide effort to “develop a military science base and field technologies that support socio-cultural understanding and human terrain forecasting in intelligence analysis, operations analysis/planning, training, and Joint experimentation” [29, p. 4]. Under this venture, numerous projects are improving the fidelity of human models, cognitive/behavioral models, and the interaction among the behaviors of agents. By increasing the fidelity of such components, next-generation simulations will be able to support unique “nonkinetic” (i.e., noncombat) training, such as the acquisition of cultural competence, which is not presently well supported in SBT.

FIGURE 15.2 Camp Lejeune, North Carolina (February 24, 2010). Marines from the 2d Battalion, 8th Marine Regiment, train with the Future Immersive Training Environment (FITE) Joint Capabilities Technology Demonstration (JCTD) virtual reality system in the simulation center at Camp Lejeune, North Carolina. Sponsored by the U.S. Joint Forces Command, with technical management provided by the Office of Naval Research, the FITE JCTD allows an individual wearing a self-contained virtual reality system, with no external tethers and a small joystick mounted on the weapon, to operate in a realistic virtual world displayed in a helmet mounted display. (U.S. Navy photo by John F. Williams/Released.)

These are just two of many Defense efforts designed to expand the range of high-fidelity SBT, and they exemplify a broader trend in SBT fidelity endeavors. Specifically, much of the “low-hanging fruit” has been plucked. What remains are requirements for nuanced capability improvements (such as precision tuning of character models), expansion into new content domains (such as cultural training), and expansion into new delivery modalities (such as wearable, immersive systems).

15.3.3 Instructional Strategies

As discussed in Section 15.2, a number of factors converged through the 1970s to reshape the general approach of practitioners to SBT. The cognitive revolution had finally taken hold in the United States, and constructivist theories were gaining attention. Instructional designers were beginning to apply ISD approaches to simulation curricula, and behavioral researchers were finally invited to help address the negative training potential of simulations.

These factors contributed to the development and widespread use of simulation-specific instructional strategies such as the event-based [31] or scenario-based training approach [3]. Proponents of such event-based strategies first debunked “the widely held belief that just more practice automatically leads to better skills” by demonstrating that unstructured simulation-based practice engenders “rote behaviors and an inflexibility to recognize errors” [32, p. 15]. They then called for the scenarios to be systematically organized around predictable training objectives and employ guided-practice principles [31].

Thanks to these efforts, contemporary SBT is more effective. However, three areas of concern remain with respect to instructional strategies. First, while current practice provides some general recommendations for instructional design, few, if any, specific instructional strategies have been identified that span the entire simulation-based learning process (from design to execution, feedback, and remediation). Second, instructional strategies for unique problem domains, such as improving infantry personnel’s perceptual abilities or more rapidly imbuing novice trainees with sophisticated higher-order cognitive abilities have not been well developed. Third, the impact of many uncommon and/or combined strategies has not yet been well documented [33]. Consequently, SBT instructors are often left to choose their own instructional approach, which contributes to wide variations in training effectiveness and puts a greater task burden on SBT facilitators [34].

The military has funded several projects intended to identify theoretical frameworks for improving the pedagogy (or more accurately, “andragogy”) of its SBT. For example, the Office of Naval Research (ONR) recently sponsored the Next-generation Expeditionary Warfare Intelligent Training (NEW-IT) initiative. NEW-IT seeks to improve SBT instructional effectiveness, in part, by including automated instructional strategies within distributed SBT software. It is scheduled for completion in 2011; however, its investigators already report training performance gains of 26–50% in their empirical field testing [35]. Another ONR effort, called Algorithms Physiologically Derived to Promote Learning Efficiency (APPLE), aims to systematically inform the selection of instructional strategies across a wide range of domains, including distributed SBT [36]. The APPLE effort has just begun, but it has the potential to greatly improve our collective understanding of optimum instructional methods. Programs such as NEW-IT and APPLE promise to improve the state of instructional strategy use within the military SBT community. Still, many more investigations into methods for improving the effectiveness of SBT are required before a full understanding of SBT instructional best practices is achieved.

15.3.4 Instructor Workload

To be effective, SBT systems rely on significant involvement from expert instructors, who often have all-encompassing duties. The workload of instructors becomes even more pronounced in distributed training contexts, where additional human effort is required to configure and initialize system setup, monitor distributed trainees and LVC entities during the exercise, and manage the delivery of distributed postexercise feedback (e.g., [37,38]). This has led some to argue that a good instructor is the primary determinant of the effectiveness of SBT (e.g., [26,23]) or that “simulators without instructors are virtually useless for training” [39, p. 5]. For distributed real-time SBT, these workload dilemmas become especially pronounced during After Action Review (AAR).

15.3.4.1 Human Effort Required for Distributed AAR

AAR became a formalized military training technique in the 1940s, and it is intended to provide a structured, nonpunitive approach to feedback delivery. Naturally, once the capacity existed, the objective data generated by simulations began to inform AARs, and as simulators have grown in complexity, greater and greater amounts of objective data have become available. With the introduction of SIMNET in the 1980s, the amount of information that could be utilized in the AAR increased dramatically, and so too did the workload on instructors [40].

Typically, an instructor must attend to each distributed training “cell.” This means that the instructors, like the trainees, are geographically dispersed and cannot readily respond to all trainees or interact with one another. During a training exercise, instructors are responsible for observing actions of trainees to identify critical details not objectively captured by the simulation. These details may include radio communications that occur among trainees (outside of the simulation itself) or strategic behaviors too subtle for the simulation to analyze [41]. After observing an exercise, instructors typically facilitate the AAR delivery; they assemble the AAR material, find and discuss key points, explain performance outcomes, and lead talks among the trainees [42].

Heavy reliance on instructors in distributed AAR is not merely an issue of individuals’ tasking. It affects the cost of deployment and, depending on the quantity and ability of the facilitators, can limit training effectiveness. An apparent mitigation for these issues involves supporting AAR through improved automated performance capture, analysis, and debrief. Such systems could also serve as collaborative tools for the distributed instructors.

The Services have been working toward such solutions for decades. For instance, the Army Research Institute funded development of the Dismounted Infantry Virtual After Action Review System (DIVAARS), which supports “DVD-like” replay of simulation and provides some data analysis support. A similarly focused effort, called Debriefing Distributed Simulation-Based Exercises (DDSBE), was sponsored by ONR.

Other, more specialized AAR systems also exist. They range from AAR support for analyzing the physical positioning of infantrymen on a live range (e.g., BASE-IT, sponsored by ONR [43]) to interpretation of trainees’ cognitive states through neurophysiological sensors (e.g., AITE, sponsored by ONR [44]).

A number of other AAR tools, both general and specifically targeted, can be readily discovered. On the one hand, the variety of tools gives practitioners a range of options; however, on the other hand, many of these tools operate within narrow domains and software specifications, have been mainly demonstrated in carefully scripted use-case settings, and fail to interoperate with one another. In practice, current AAR technologies rarely provide adequate support for trainers and fail to provide deep diagnosis of performance [41]. Nonetheless, ongoing efforts continue to attempt to remedy these problems.

15.3.5 Lack of Effectiveness Assessment

In contrast to the AAR challenge (upon which many researchers and developers are concentrating), few investigators have addressed the question of effectiveness assessment. A recent Army Research Institute report explains:

More often than not, the simulators are acquired without knowing their training effectiveness, because no empirical research has been done. The vendors, who manufacture and integrate these devices, do not conduct such research because they are in the business of selling simulators, not research. Occasionally training effectiveness research is conducted after the simulators have been acquired and integrated, but this is narrowly focused on these specific simulators, training specific tasks, in this specific training environment. The research tends to produce no general guidance to the training developer, because of its narrow focus, and because it is conducted on a noninterference basis, making experimental control difficult if not impossible.

Stewart, Johnson, and Howse [27, p. 3]

Effectiveness assessments fall roughly into two categories. They may consider specific instances or approaches, as the preceding quotation suggests, or they may be carried out at the enterprise level (i.e., strategy-wide impact assessment). Such strategic impact assessments involve identifying future consequences of a course of action, such as the expected return-on-investment from pursuing a large-scale SBT curriculum.

Assessing the impact and value of distributed SBT, at either the project or the enterprise level, is challenging and costly. Many benefits of distributed SBT, such as the avoidance of future errors, are difficult to measure, and simulation analysts lack the ability to conduct upfront impact assessments or to communicate their results [45]. In addition, developers and sponsors are often reluctant to spend resources verifying the effectiveness of a system that they already expect (or at least very much hope) will work.

Although Defense organizations encourage researchers and developers to conduct effectiveness evaluations, such efforts are carried out intermittently. Fortunately, MSCO is leading attempts to increase such testing. MSCO publishes standards for project-level VV&A evaluation, and the office recently completed a strategy-level evaluation of return-on-investment for Defense M&S [46]. Nonetheless, many more researchers will have to follow the example set by MSCO before this gap is mitigated.

15.3.6 Lack of Use Outside of the Defense Sector

The roots of distributed simulation can be traced primarily to the Defense sector [47], and, unfortunately, its use has remained largely confined to that community [46,48]. Around 2002, academic papers began routinely asking why commercial industry had yet to embrace distributed simulation (e.g., Ref. [49]). This publishing trend continues today, and the obstacles to adoption outside the military sector include the following (for more details, see Refs. [48] and [50]):

Insufficient integration with commercial off-the-shelf (COTS) simulation packages

Technical difficulty (or perceived technical difficulty) of federating systems

Inefficiency of synchronization algorithms

Bugs and lack of verification in distributed models

Overly complex runtime management

Perceived lack of practical return-on-investment

Too much functionality in existing distributed packages that is not relevant to industry

The dearth of effectiveness testing also contributes to the reluctance of some to embrace distributed SBT. As Randall Gibson explains:

I would have to conclude that the simulation community is not doing an adequate job of selling simulation successes. Too often I see management deciding not to commit to simulation for projects where it could be a significant benefit.

Gibson et al. [45, p. 2027]

In short, money is the major driver in business, and most businesses do not see a sufficient return-on-investment from distributed SBT. Additionally, most commercial practitioners say they are interested in low-cost, throwaway COTS tools rather than more robust enterprise systems. However, current COTS simulation tools do not adequately support distributed simulation applications, and most industry practitioners are unwilling to accept a cost increase of much more than 10% to upgrade their current technologies [48].

Recently, several pilot projects investigated the benefits of distributed SBT in industries such as car manufacturing (see the work by Boer [51]). These studies help advance the case for distributed SBT in business; however, more use cases and return-on-investment analyses are required. A 2008 survey of simulation practitioners also suggests that more “success stories” are needed to overcome industry representatives’ “psychological barriers” to accepting distributed SBT. Other responses in this survey indicate that “ready and robust solutions” and “technological advances” are also needed before the commercial sector fully embraces distributed SBT [17].

15.4 Conclusion

Distributed real-time SBT fills a vital training gap, and various organizations actively use it. However, as this chapter has illustrated, employing distributed real-time SBT still involves technological, instructional, and logistical challenges. Investigators continue to address these gaps; in the meantime, practitioners must make trade-offs to work within these limitations.

This chapter was intended to provide readers with a general overview of the history and challenges of distributed real-time SBT. It does not contain a comprehensive listing of all gaps or related projects. Instead, we attempted to identify a general set of challenges that are unique to, or exacerbated by, distributed systems, as well as some formal initiatives attempting to address these issues. We hope that this chapter has provided a broad perspective on the field and that readers have noticed the integrated nature of the distributed SBT challenges discussed herein. Distributed real-time SBT involves technology, people, and policies; thus, by its very nature, this field is a systems engineering challenge. As innovations continue in this domain, it is critical that technologists, researchers, and policy leaders maintain a broad perspective, carefully balancing the resources and outcomes of individual efforts against their impact on the overall system.

Acknowledgments

This work is supported by the Office of Naval Research Grant N0001408C0186, the Next-generation Expeditionary Warfare Intelligent Training (NEW-IT) program. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the ONR or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation hereon.

References

1. MSCO. 2010. DoD Modeling and Simulation (M&S) Glossary. Alexandria, VA: Modeling and Simulation Coordination Office (MSCO).

2. Salas, E., L. Rhodenizer, and C. A. Bowers. 2000. “The Design and Delivery of Crew Resource Management Training: Exploiting Available Resources.” Human Factors 42 (3): 490–511.

3. Oser, R. L., J. A. Cannon-Bowers, D. J. Dwyer, and H. Miller. 1997. An Event Based Approach for Training: Enhancing the Utility of Joint Service Simulations. Paper Presented at the 65th Military Operations Research Society Symposium, Quantico, VA, June 1997.

4. Joint Staff/J-7. 2008. The Joint Training System: A Primer for Senior Leaders (CJCS Guide 3501). Washington, DC: Department of Defense.

5. Link, E. A., Jr. 1930. U.S. Patent 1,825,462, filed 1930.

6. Owens, L. 1986. “Vannevar Bush and the Differential Analyzer: The Text and Context of an Early Computer.” Technology and Culture 27 (1): 63–95.

7. Dutton, J. M. 1978. “Information Systems for Transfer of Simulation Technology.” In Proceedings of the 10th Conference on Winter Simulation, 995–99. New York: ACM.

8. Nance, R. E. 1996. “A History of Discrete Event Simulation Programming Languages.” In History of Programming Languages, vol. 2, edited by T. J. Bergin and R. G. Gibson, 369–427. New York: ACM.

9. Miller, D. C., and J. A. Thorpe. 1995. “SIMNET: The Advent of Simulator Networking.” Proceedings of the IEEE 83 (8): 1114–23.

10. Cosby, L. N. 1999. “SIMNET: An Insider’s Perspective.” Simulation Technology 2 (1).

11. Miller, G., A. Adams, and D. Seidel. 1993. Aggregate Level Simulation Protocol (ALSP) (1993 Confederation Annual Report; Contract No. DAAB07-94-C-H601). McLean, VA: MITRE Corporation.

12. Richbourg, R., and R. Lutz. 2008. Live Virtual Constructive Architecture Roadmap (LVCAR) Comparative Analysis of the Middlewares (Interim Report Appendix D; Case No. 09-S-2412 / M&S CO Project No. 06OC-TR-001). Alexandria, VA: Institute for Defense Analyses.

13. DMSO. 1998. High-Level Architecture (HLA) Transition Report (ADA402321). Alexandria, VA: Defense Modeling and Simulation Office.

14. Stout, R. J., C. Bowers, and D. Nicholson. 2008. “Guidelines for Using Simulations to Train Higher Level Cognitive and Teamwork Skills.” In The PSI Handbook of Virtual Environments for Training and Education, edited by D. Nicholson, D. Schmorrow, and J. Cohn, 270–96. Westport, CT: PSI.

15. Fitzsimmons, E. 2000. “The Defense Modeling and Simulation Office: How It Started.” Simulation Technology Magazine 2 (2a). http://www.stanford.edu/dept/HPS/TimLenoir/MilitaryEntertainment/Simnet/SISO%20News%20-%20Preview%20Article.htm, accessed April 10, 2011.

16. Searle, J., and J. Brennan. 2006. “General Interoperability Concepts.” In Integration of Modelling and Simulation, Educational Notes (RTO-EN-MSG-043, Paper 3), 3-1–3-8. Neuilly-sur-Seine, France: RTO. http://www.dtic.mil/cgi-bin/GetTRDoc?AD=ADA470923

17. Straßburger, S., T. Schulze, and R. Fujimoto. 2008. Future Trends in Distributed Simulation and Distributed Virtual Environments: Peer Study Final Report, Version 1.0. Ilmenau, Magdeburg, Atlanta: Ilmenau University of Technology, University of Magdeburg, Georgia Institute of Technology.

18. Henninger, A. E., D. Cutts, M. Loper, R. Lutz, R. Richbourg, R. Saunders, and S. Swenson. 2008. Live Virtual Constructive Architecture Roadmap (LVCAR) Final Report (Case No. 09-S-2412 / M&S CO Project No. 06OC-TR-001). Alexandria, VA: Institute for Defense Analyses.

19. Hassaine, F., N. Abdellaoui, A. Yavas, P. Hubbard, and A. L. Vallerand. 2006. “Effectiveness of JSAF as an Open Architecture, Open Source Synthetic Environment in Defence Experimentation.” In Transforming Training and Experimentation through Modelling and Simulation (Meeting Proceedings RTO-MP-MSG-045, Paper 11), 11-1–11-6. Neuilly-sur-Seine, France: RTO.

20. SISO. 2009. “A Brief Overview of SISO.” www.sisostds.org, accessed April 1, 2011.

21. Fujimoto, R. M. 2001. “Parallel and Distributed Simulation Systems.” In Proceedings of the 33rd Conference on Winter Simulation, edited by B. A. Peters, J. S. Smith, D. J. Medeiros, and M. W. Rohrer, 147–57. New York: ACM.

22. Sarjoughian, H. S. 2006. “Model Composability.” In Proceedings of the 38th Conference on Winter Simulation, edited by L. F. Perrone, F. P. Wieland, J. Liu, B. G. Lawson, D. M. Nicol, and R. M. Fujimoto, 149–58. New York: ACM.

23. National Research Council, Committee on Modeling and Simulation for Defense Transformation. 2006. Defense Modeling, Simulation, and Analysis: Meeting the Challenge. Washington, DC: The National Academies Press.

24. Andrews, D. A., L. A. Carroll, and H. H. Bell. 1995. The Future of Selective Fidelity in Training Devices (AL/HR-TR-1995-0195). Mesa, AZ: Human Resources Directorate Aircrew Training Research Division.

25. Beaubien, J. M., and D. P. Baker. 2004. “The Use of Simulation for Training Teamwork Skills in Health Care: How Low Can You Go?” Quality and Safety in Health Care 13 (Suppl 1): i51–i56.

26. Ross, K. G., J. K. Phillips, G. Klein, and J. Cohn. 2005. “Creating Expertise: A Framework to Guide Simulation-Based Training.” In Proceedings of I/ITSEC. Orlando, FL: NTSA.

27. Stewart, J. E., D. M. Johnson, and W. R. Howse. 2008. Fidelity Requirements for Army Aviation Training Devices: Issues and Answers (Research Report 1887). Arlington, VA: U.S. Army Research Institute.

28. NRAC. 2009. Immersive Simulation for Marine Corps Small Unit Training (ADA523942). Arlington, VA: Naval Research Advisory Committee.

29. Biggerstaff, S. 2007. Human Social Culture Behavior Modeling (HSCB) Program. Paper Presented at the 2007 Disruptive Technologies Conference, Washington, DC, September 2007.

30. Lawlor, M. 2010. “Infusing FITE into Simulations.” SIGNAL Magazine 64 (9): 45–50.

31. Fowlkes, J., D. J. Dwyer, R. J. Oser, and E. Salas. 1998. “Event-Based Approach to Training (EBAT).” The International Journal of Aviation Psychology 8 (3): 209–221.

32. van der Bosch, K., and J. B. J. Riemersma. 2004. “Reflections on Scenario-Based Training in Tactical Command.” In Scaled Worlds: Development, Validation, and Applications, edited by S. Schiflett, L. R. Elliott, E. Salas, and M. D. Coovert, 22–36. Burlington, VT: Ashgate.

33. Vogel-Walcutt, J. J., T. Marino-Carper, C. A. Bowers, and D. Nicholson. 2010. “Increasing Efficiency in Military Learning: Theoretical Considerations and Practical Applications.” Military Psychology 22 (3): 311–39.

34. Schatz, S., C. A. Bowers, and D. Nicholson. 2009. “Advanced Situated Tutors: Design, Philosophy, and a Review of Existing Systems.” In Proceedings of the 53rd Annual Conference of the Human Factors and Ergonomics Society, 1944–48. Santa Monica, CA: Human Factors and Ergonomics Society.

35. Vogel-Walcutt, J. J., R. Marshall, J. Fowlkes, S. Schatz, R. Dolletski-Lazar, and D. Nicholson. 2011. “Effects of Instructional Strategies within an Instructional Support System to Improve Knowledge Acquisition and Application.” In Proceedings of the 55th Annual Meeting of the Human Factors and Ergonomics Society, Las Vegas, NV, September 19–23, 2011. Santa Monica, CA: Human Factors and Ergonomics Society.

36. Nicholson, D., and J. J. Vogel-Walcutt. 2010. Algorithms Physiologically Derived to Promote Learning Efficiency (APPLE) 6.3. http://active.ist.ucf.edu.

37. Ross, P. 2008. “Machine Readable Enumerations for Improved Distributed Simulation Initialisation Interoperability.” In Proceedings of the SimTect 2008 Simulation Conference. http://hdl.handle.net/1947/9101, accessed April 5, 2011.

38. Weinstock, C. B., and J. B. Goodenough. 2006. On System Scalability (Technical Note, CMU/SEI-2006-TN-012). Pittsburgh, PA: Carnegie Mellon University.

39. Stottler, R. H., R. Jensen, B. Pike, and R. Bingham. 2006. Adding an Intelligent Tutoring System to an Existing Training Simulation (ADA454493). San Mateo, CA: Stottler Henke Associates Inc.

40. Morrison, J. E., and L. L. Meliza. 1999. Foundations of the After Action Review Process (ADA368651). Alexandria, VA: Institute for Defense Analyses.

41. Wiese, E., J. Freeman, W. Salter, E. Stelzer, and C. Jackson. 2008. “Distributed After Action Review for Simulation-Based Training.” In Human Factors in Simulation and Training, edited by D. A. Vincenzi, J. A. Wise, M. Mouloua, and P. A. Hancock. Boca Raton, FL: CRC Press.

42. Freeman, J., W. J. Salter, and S. Hoch. 2004. “The Users and Functions of Debriefing in Distributed, Simulation-Based Training.” In Proceedings of the Human Factors and Ergonomics Society 48th Annual Meeting, 2577–81. Santa Monica, CA: Human Factors and Ergonomics Society.

43. Rowe, N. C., J. P. Houde, M. N. Kolsch, C. J. Darken, E. R. Heine, A. Sadagic, C. Basu, and F. Han. 2010. Automated Assessment of Physical-Motion Tasks for Military Integrative Training. Paper Presented at the 2nd International Conference on Computer Supported Education (CSEDU 2010), Noordwijkerhout, The Netherlands, May 2010.

44. Vogel-Walcutt, J. J., D. Nicholson, and C. Bowers. 2009. Translating Learning Theories into Physiological Hypotheses. Paper Presented at the Human Computer Interaction Conference, San Diego, CA, July 19–24, 2009.

45. Gibson, R., D. J. Medeiros, A. Sudar, B. Waite, and M. W. Rohrer. 2003. “Increasing Return on Investment from Simulation (Panel).” In Proceedings of the 35th Conference on Winter Simulation, edited by S. Chick, P. J. Sánchez, D. Ferrin, and D. J. Morrice, 2027–32. New York: ACM.

46. MSCO. 2009. Metrics for M&S Investment (REPORT No. TJ-042608-RP013). Alexandria, VA: Modeling and Simulation Coordination Office (MSCO).

47. Fujimoto, R. M. 2003. “Distributed Simulation Systems.” In Proceedings of the 35th Conference on Winter Simulation, edited by S. Chick, P. J. Sánchez, D. Ferrin, and D. J. Morrice, 2027–32. New York: ACM.

48. Boer, C. A., A. de Bruin, and A. Verbraeck. 2009. “A Survey on Distributed Simulation in Industry.” Journal of Simulation 3: 3–16.

49. Taylor, S. J. E., A. Bruzzone, R. Fujimoto, B. P. Gan, S. Straßburger, and R. J. Paul. 2002. “Distributed Simulation and Industry: Potentials and Pitfalls.” In Proceedings of the 34th Conference on Winter Simulation, edited by E. Yücesan, C.-H. Chen, J. L. Snowdon, and J. M. Charnes, 688–94. New York: ACM.

50. Lendermann, P., M. U. Heinicke, L. F. McGinnis, C. McLean, S. Straßburger, and S. J. E. Taylor. 2007. “Panel: Distributed Simulation in Industry—A Real-World Necessity or Ivory Tower Fancy?” In Proceedings of the 39th Conference on Winter Simulation, edited by S. G. Henderson, B. Biller, M.-H. Hsieh, J. Shortle, J. D. Tew, and R. R. Barton, 1053–62. New York: ACM.

51. Boer, C. A. 2005. Distributed Simulation in Industry. Rotterdam, The Netherlands: Erasmus University Rotterdam.