Chapter 39. Running SQL Server on Hyper-V

Virtualization is a popular term covering several technologies. In the server space, virtualization is beneficial for several reasons:

- Disaster recovery is simple. You merely copy a small number of files from your normal production environment to your disaster recovery environment. Your disaster recovery hardware doesn’t have to match your production hardware.

- Provisioning a virtual machine is simpler and faster than provisioning a physical machine. Virtualization tools make it simple to clone a production server and run it on different hardware. Development and test servers can be provisioned quickly, which can boost the efficiency of regression testing.

- With fewer physical servers needed, less rack space, cooling, and electricity are consumed, making for a greener and more affordable infrastructure.

- Microsoft offers savings on licenses. A single copy of Windows Server 2008 Standard Edition on a physical machine allows you to also run one virtual copy of Windows Server 2008 Standard Edition at no additional licensing cost. With Windows Server 2008 Enterprise Edition, up to four virtual copies of Windows Server 2008 can be run at no additional cost. One copy of Windows Server 2008 Datacenter Edition allows an unlimited number of Windows Server virtual machines to be run on the same physical machine, all covered by the one Datacenter license. Licensing can be complicated by assignment and downgrade rights, which are explained at http://blogs.technet.com/mattmcspirit/archive/2008/11/13/licensing-windows-server-in-a-virtual-environment.aspx.
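The per-edition allowances in the licensing bullet can be captured in a small lookup. The following Python sketch is illustrative only: the function name `covered_by_one_license` is hypothetical, and it deliberately ignores the assignment and downgrade rights mentioned above.

```python
import math

# Virtual instances covered by one physical license, as stated in the text.
# Assignment and downgrade rights are NOT modeled here.
INCLUDED_VIRTUAL_INSTANCES = {
    "standard": 1,
    "enterprise": 4,
    "datacenter": math.inf,  # unlimited on the same physical machine
}


def covered_by_one_license(edition, vm_count):
    """Return True if one host license of `edition` covers `vm_count` guests."""
    return vm_count <= INCLUDED_VIRTUAL_INSTANCES[edition.lower()]


print(covered_by_one_license("Standard", 2))     # one guest too many
print(covered_by_one_license("Enterprise", 4))   # exactly at the limit
print(covered_by_one_license("Datacenter", 50))  # no limit
```

Treat the result as a first approximation and confirm the details against the licensing terms that apply to your agreement.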

In this chapter, we will begin with an overview of virtualization architecture before exploring a number of key issues such as configuration, clock drift, and backups.

Virtualization architecture

Virtualization technologies can be organized by technology type, as you can see in table 1.

Table 1. Types and examples of virtualization technologies

| Technology type | Examples |
|---|---|
| Server hardware virtualization (hypervisor) | Microsoft Hyper-V, VMware ESX, Xen Hypervisor |
| Server software virtualization | Microsoft Virtual Server 2005 R2, VMware Server (formerly GSX) |
| Presentation virtualization | Terminal Services, Citrix XenApp |
| Application virtualization | Microsoft App-V, VMware ThinApp, Citrix XenApp streaming |
| Desktop virtualization | Microsoft Virtual PC and MED-V, VMware Workstation, Parallels Desktop |

A hypervisor is a small software layer installed directly on physical hardware. It allows multiple and disparate operating systems to be installed on the hypervisor layer. Hypervisors introduce little overhead, allowing the performance of the virtual machines to be close to the performance of a physical machine. They are currently the enterprise standard in virtualization because they offer better performance and higher capacity than server virtualization applications such as Microsoft Virtual Server 2005 R2 Service Pack 1 (SP1) and VMware Server.

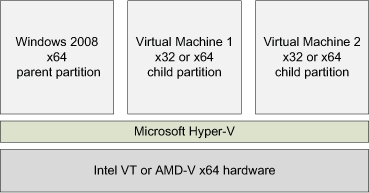

When the Hyper-V role is enabled on Windows Server 2008, the original Windows Server 2008 operating system is transformed into a virtual machine, which is called the parent partition. The other virtual machines are called child partitions. Each partition is isolated from the others. Figure 1 highlights this relationship.

Figure 1. A virtual machine running on Hyper-V only passes through a thin hypervisor layer to access the physical hardware.

For supported operating systems, additional software may be installed into a virtual machine to facilitate interaction with the physical hardware devices. For Hyper-V, this software is called Integration Services. It provides special device drivers called synthetic drivers, which are optimized for the virtual world and which are necessary for achieving near-native performance. With synthetic drivers, the overhead of hardware emulation is avoided.

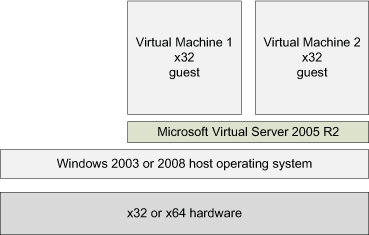

Server software virtualization products such as Microsoft Virtual Server 2005 R2 SP1, as shown in figure 2, incur more overhead than hypervisors and are slower. Virtual Server 2005 R2 SP1 is installed as an application running on the physical machine’s operating system. Virtual operating systems are installed into Virtual Server 2005 R2 SP1. The virtual operating system must pass all of its hardware calls to the virtualization application, which in turn passes them to the host operating system. For example, if Windows Server 2003 is installed as a guest operating system in Virtual Server 2005 R2 SP1, which is installed on Windows Server 2008, operating system calls in Windows Server 2003 are passed through Virtual Server 2005 R2 SP1 to Windows Server 2008 to finally be executed. The path is less direct than that of a hypervisor, and this adversely impacts performance.

Figure 2. A virtual machine running on Virtual Server 2005 R2 passes its operating system calls to the host operating system for execution, which results in a longer, slower path to the hardware.

Even with the inherent overhead of software virtualization, small volume SQL Servers with limited demands on the hardware can perform acceptably using server software virtualization such as Virtual Server 2005 R2. For more demanding database applications, hypervisor-based server hardware virtualization is needed. Hyper-V offers significantly improved disk I/O performance when compared to Microsoft Virtual Server 2005 R2.

Benefits of isolation

When a virtual machine crashes, it doesn’t affect the other virtual machines or the physical machine because each virtual machine is isolated from the others and the physical machine. Processes in one virtual machine (VM) can’t read, corrupt, or access processes running in other virtual machines. Because of this complete isolation, it is possible to have multiple default instances of SQL Server running on a single physical server by having each default instance in its own virtual machine. This is particularly important when trying to consolidate third-party applications which require default instances of SQL Server. Each application can run in its own virtual machine with each virtual machine running a default instance of SQL Server.

Configuring virtual machines

To obtain the best possible results from a virtual machine, it must be configured properly. The configuration of virtual disks, virtual processors, and virtual network adapters affects performance.

Configuring disks

Microsoft Hyper-V offers the following choices for its virtual disks:

- Passthrough disks

- Virtual hard disks (VHDs):

  - Dynamically expanding

  - Fixed size

  - Differencing

Passthrough disks are physical disks directly accessed by the virtual machine. Because they offer the most direct path, they provide the best performance and are well suited for large data volumes. They lack flexibility and portability.

A virtual hard disk is a file that resides on the parent partition’s file system or storage area network (SAN).

Dynamically expanding VHDs are best suited for development and test environments because they conserve disk space. Dynamically expanding VHDs grow as needed, which minimizes space usage but tends to cause fragmentation. Because the virtual machine’s operating system is isolated from the physical machine, it has no knowledge of the amount of physical disk space available. The virtual machine only knows the maximum allowable size of the dynamic VHD. This can create a disparity between what the virtual machine sees as free space and the reality of free space on the physical machine.

In figure 3, the virtual machine running in the window on the right shows 117 GB of free space in its VHD. But because this dynamically expanding VHD resides on physical disk D, which has no free space left, the 117 GB of free space doesn’t actually exist. Hyper-V places the virtual machine into a paused state because it has no physical space to continue operating. SQL Server can be configured to provide you with low free disk space alerts, but if you are using dynamically expanding VHDs, you may not get the alert. The virtual machine doesn’t know when there isn’t room for a dynamically expanding disk to grow.

Figure 3. A dynamically expanding virtual hard disk is seen by the virtual machine as having 117 GB of free space when there is actually no disk space left on the physical file system. This forces the virtual machine into a paused state.
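The disparity between guest-visible and physical free space can be expressed as a simple calculation: a dynamically expanding VHD can only grow into the smaller of the two values. The sketch below is a minimal illustration, not a monitoring tool; the function names are hypothetical, and `host_free_gb` would have to run in the parent partition against the volume that holds the VHD file.

```python
import shutil

GIB = 1024 ** 3


def host_free_gb(path):
    """Free space, in GB, on the physical volume that contains `path`."""
    return shutil.disk_usage(path).free / GIB


def usable_free_gb(guest_free_gb, physical_free_gb):
    """Space a dynamically expanding VHD can really grow into.

    The guest only knows the VHD's maximum size, so the usable space is
    capped by whatever is left on the physical volume.
    """
    return max(0.0, min(guest_free_gb, physical_free_gb))


# The situation in figure 3: the guest reports 117 GB free,
# but the physical disk has nothing left.
print(usable_free_gb(117, 0))
```

An alert based on the physical value, evaluated on the host, catches the condition that a low-disk-space alert inside the virtual machine cannot see.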

Fixed-size VHDs perform better than dynamically expanding VHDs because all of their space is preallocated, although the performance difference has been lessened with the R2 release of Hyper-V. When a fixed VHD is created and its size specified, it takes all of its space from the physical machine. If there isn’t enough space, an error occurs and it is not created.

Differencing VHDs also grow as needed, but they are linked to another VHD in a parent-child relationship. When a differencing VHD is used, all changes are written to the differencing VHD instead of the parent VHD. Although this causes a slight overall decrease in disk performance, it allows changes to be made without altering the parent VHD. Differencing VHDs are useful in test environments because extensive changes to a virtual machine can be made without changing the original VHD. When differencing VHDs are used, any change to the parent VHD breaks the parent-child relationship, rendering the child differencing VHDs nonfunctional. To prevent this from happening accidentally, it is advisable to set a parent VHD to read-only. You can create more than one differencing VHD from the same parent VHD. Doing this allows you to create different branches sharing a common ancestor. For example, you might have a parent VHD with a client application that accesses SQL Server. You could use two different differencing VHDs for testing two independent and different enhancements to the client application.
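The parent-child behavior is easier to see in a toy model. The Python sketch below is not how Hyper-V implements VHDs; the `DifferencingDisk` class and the block contents are invented purely to illustrate that reads fall through to a read-only parent while writes stay in the child.

```python
from types import MappingProxyType


class DifferencingDisk:
    """Toy copy-on-write disk: reads fall through to the parent,
    writes are kept in the child and never touch the parent."""

    def __init__(self, parent):
        self._parent = parent  # read-only mapping: block number -> data
        self._changes = {}     # blocks written since the child was created

    def read(self, block):
        if block in self._changes:
            return self._changes[block]
        return self._parent.get(block)

    def write(self, block, data):
        self._changes[block] = data


# A read-only parent shared by two independent branches.
parent = MappingProxyType({0: "operating system", 1: "client app v1"})
branch_a = DifferencingDisk(parent)
branch_b = DifferencingDisk(parent)

branch_a.write(1, "client app v1 + enhancement A")
branch_b.write(1, "client app v1 + enhancement B")

print(branch_a.read(1))  # branch A sees its own change
print(branch_b.read(0))  # unchanged blocks still come from the parent
print(parent[1])         # the parent itself is untouched
```

Wrapping the parent in `MappingProxyType` plays the role of marking the parent VHD read-only: an accidental write to it raises an error instead of silently invalidating both branches.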

Hyper-V allows you to take a snapshot of a running virtual machine. After creating a snapshot, all changes to the virtual machine are written to a separate virtual disk file. This is similar to a differencing disk in that performance is reduced slightly because of the additional overhead of a file depending on another file. Snapshots are particularly useful in testing service packs. Before applying a service pack, create a snapshot. After testing the service pack you can create another snapshot, revert to your snapshot made before the service pack was applied, or merge the snapshot’s changes to the initial VHD. Although snapshots allow recovery of a virtual machine to a particular prior state, they are not substitutes for backup and should not be considered as such.

Although Windows Server 2008 is generally considered a server operating system, many developers and people who demonstrate software use it so that they can run Hyper-V. For these use cases, performance is usually less of an issue than minimizing physical disk space usage; therefore, dynamically expanding virtual hard disks are a good compromise between minimizing space and maintaining good performance.

Virtual hard disks can be attached to either IDE or SCSI buses. Hyper-V virtual machines must boot from a VHD attached to an IDE bus. A virtual SCSI bus supports more devices than a virtual IDE bus. If you need more than four VHDs, you’ll have to use a virtual SCSI bus.
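As a rough illustration of these bus rules, the following sketch assigns an ordered list of VHDs to buses. It assumes the first VHD in the list is the boot disk and uses the limit of four IDE-attached VHDs stated above; the function name and disk names are hypothetical.

```python
IDE_SLOTS = 4  # limit for IDE-attached VHDs, as stated in the text


def assign_buses(vhd_names):
    """Map each VHD to a bus. The first VHD is treated as the boot disk
    and therefore always lands on IDE; VHDs beyond the IDE limit
    overflow to SCSI."""
    return {
        name: ("IDE" if index < IDE_SLOTS else "SCSI")
        for index, name in enumerate(vhd_names)
    }


layout = assign_buses(["boot", "data", "log", "tempdb", "backup"])
for name, bus in layout.items():
    print(name, bus)
```

This only demonstrates the capacity constraint. In practice you may prefer to place data disks on the SCSI bus from the start rather than filling the IDE bus first.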

Virtual machines and physical machines are more alike than not. The same principles of maximizing disk performance that apply to physical machines also apply to virtual machines. When there is concurrent disk access, separate spindles or logical unit numbers (LUNs) should be used to avoid disk contention. On a physical machine, to maximize performance by minimizing contention, you might put tempdb on a spindle or LUN by itself. Translating this to the virtual world, tempdb would exist by itself in its own VHD. This VHD containing tempdb would in turn be placed on its own spindle or LUN to avoid I/O contention. If other VHDs were stored on the same physical device, I/O contention could occur.
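A placement plan can be checked for this kind of contention before any VHDs are created. The sketch below assumes you maintain a simple mapping of VHD file to LUN; the file names and LUN labels are made up for the example.

```python
from collections import defaultdict


def shared_luns(placement):
    """Given a mapping of VHD name -> LUN (or spindle), return the LUNs
    that hold more than one VHD, which are candidates for I/O contention."""
    by_lun = defaultdict(list)
    for vhd, lun in placement.items():
        by_lun[lun].append(vhd)
    return {lun: sorted(vhds) for lun, vhds in by_lun.items() if len(vhds) > 1}


# Hypothetical plan: tempdb was meant to have a LUN to itself.
plan = {
    "system.vhd": "LUN0",
    "data.vhd": "LUN1",
    "tempdb.vhd": "LUN2",
    "other_vm_data.vhd": "LUN2",
}
print(shared_luns(plan))
```

Here the check reports that `tempdb.vhd` shares LUN2 with another virtual machine's data disk, which defeats the purpose of isolating tempdb.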

CPU configuration

Hyper-V allows up to four processor cores to be allocated to a virtual machine and refers to them as logical processors. If your SQL Server workload requires more than four processors, it is not a suitable candidate for virtualization with Hyper-V.

If you have two SQL Server instances running on identical hardware, you might want to consolidate them onto one physical machine by migrating both of them into virtual machines. If they typically run at under 40 percent of the available CPU resources on the physical machines, having two of these running as virtual machines on the same hardware will not overtax the available physical resources. If a third SQL Server instance is added to the physical machine, and it uses as much CPU as the other virtual machines, the total CPU demand from all three virtual machines exceeds the physical CPU available. In this example, with three virtual machines each needing 40 percent of the available CPU, they will all perform suboptimally because there isn’t enough physical resource available.
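The arithmetic behind this consolidation example is simple enough to script. The sketch below assumes, as the example does, that each virtual machine's demand is expressed as a percentage of the host's total CPU and that the source machines are identical to the host; the function name is hypothetical.

```python
def cpu_headroom(demands_percent):
    """Return the physical CPU left over, in percent of the host.
    A negative result means the host is oversubscribed."""
    return 100 - sum(demands_percent)


print(cpu_headroom([40, 40]))      # two instances: headroom remains
print(cpu_headroom([40, 40, 40]))  # three instances: demand exceeds supply
```

With two instances at 40 percent each, 20 percent of the host remains. Adding a third pushes total demand to 120 percent, so all three virtual machines compete for CPU they cannot get.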

Conventional approaches to performance monitoring are not adequate for identifying all performance problems in virtualized SQL Server instances. Task Manager shows the CPU usage within the virtual machine in isolation. An individual SQL Server may show only minimal CPU usage but actually be starved for CPU. If the running virtual machines collectively are overtaxing the physical machine, all virtual machines will suffer.

In figure 4, Task Manager in the child partition (shown on the right side of the screen capture) gives the appearance of ample CPU resource availability, which is clearly not the case. The child partition has no visibility of the load in other partitions. As you can see, the parent partition is using 83 percent of the available CPU resources. If a process running in the child increases to take 40 percent of the available CPU, competition for the overtaxed CPU resources occurs. The child takes resources from the parent, reducing the resources for the parent. There isn’t an overall Task Manager for the entire physical machine that shows the sum of all of the individual Task Managers.

Hyper-V provides its own set of Perfmon counters to provide a view of both what is happening at the overall physical server level as well as within the Hyper-V environment. These Hyper-V counters are exposed in the parent partition (that is, physical machine). You may choose from many different categories of Hyper-V counters. Refer to the Hyper-V documentation and product team blogs to determine which counters are most useful to you. Tony Voellm’s blog at http://blogs.msdn.com/tvoellm/ is an excellent source of in-depth information on Hyper-V performance monitoring. Use the Hyper-V counters to make sure that the total physical CPU resources on your Hyper-V server are adequate to service all of your running virtual machines.

Figure 4. Task Manager results are local to the partition in which it runs.
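One way to sample a host-level counter from a script is to call the Windows `typeperf` utility from the parent partition. The sketch below is an assumption-laden example rather than a recommended monitoring setup: the choice of the `Hyper-V Hypervisor Logical Processor(_Total)\% Total Run Time` counter is ours, not the chapter's, and you should confirm in Perfmon that it exists on your host before relying on it. The helper names are hypothetical.

```python
import subprocess

# Assumed counter for overall physical CPU use across all partitions.
# Verify the exact name in Perfmon on your Hyper-V host.
HOST_CPU_COUNTER = r"\Hyper-V Hypervisor Logical Processor(_Total)\% Total Run Time"


def build_typeperf_command(counter=HOST_CPU_COUNTER, samples=5, interval_s=1):
    """Build a typeperf command line: -sc is the number of samples,
    -si is the sampling interval in seconds."""
    return ["typeperf", counter, "-sc", str(samples), "-si", str(interval_s)]


def sample_host_cpu(**kwargs):
    """Run typeperf in the parent partition and return its raw CSV output.
    This only works on a Windows host with the Hyper-V role enabled."""
    result = subprocess.run(
        build_typeperf_command(**kwargs),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


print(build_typeperf_command(samples=3))
```

Unlike Task Manager inside a child partition, a counter sampled this way reflects the load from every partition on the physical machine.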

Configuring networking

Hyper-V offers both legacy network adapters and synthetic network adapters. Legacy network adapters are emulated devices and as such offer lower performance than synthetic adapters, but offer greater compatibility with older operating systems. Synthetic adapters provide near-native performance and require that Integration Services be installed in the virtual machine, which is not possible in all cases. When Integration Services is installed, it installs drivers that enable synthetic (virtual) devices to work. A legacy network driver is slower because it emulates a physical device instead of being an outright substitute like a synthetic device. Integration Services cannot be installed in Windows Server operating systems prior to Windows Server 2003 R2 Service Pack 2. For example, a SQL Server 6.5 instance running in an NT 4 virtual machine must use a legacy network adapter.

The minimum number of recommended physical network adapters for a Hyper-V physical machine is two (although it is possible to run with a single network adapter). One should be reserved for use only for administering the physical machine. The other is used for the virtual machines. If there is substantial network traffic, it may be necessary to have one or more physical network adapters for each virtual machine. To ensure optimal network performance, check with your hardware vendor to ensure that you have all of the latest drivers. Network-intensive workloads use more CPU in a virtual machine than on a physical machine. For deeper technical information and best practices, see Microsoft’s whitepaper Running SQL Server 2008 in a Hyper-V Environment, downloadable from http://download.microsoft.com/download/d/9/4/d948f981-926e-40fa-a026-5bfcf076d9b9/SQL2008inHyperV2008.docx.

Memory configuration

Hyper-V does not allow memory to be overallocated. If 2 GB of memory is allocated to a virtual machine, but only 1 GB is ever actually used, the 1 GB of unused RAM is not available for use by other virtual machines. This imposes a limit on how many virtual machines can be running at any given time. The sum of the memory allocation for all running virtual machines should be 1-2 GB less than the server’s physical memory. Enough memory has to be left available so that the hypervisor itself (and any antivirus software running in the parent partition) has enough RAM to avoid paging.
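Because allocations are fixed, you can check whether a set of virtual machines fits on a host with a single comparison. The sketch below uses the upper end of the 1-2 GB guideline as its default reserve; the function name and the sample sizes are hypothetical.

```python
def fits_in_memory(vm_allocations_gb, physical_gb, reserve_gb=2.0):
    """Return True if the sum of the VM allocations leaves at least
    `reserve_gb` of physical RAM for the hypervisor and parent partition."""
    return sum(vm_allocations_gb) <= physical_gb - reserve_gb


# A 16 GB host: 14 GB of allocations just fits, 16 GB does not.
print(fits_in_memory([2, 4, 8], physical_gb=16))
print(fits_in_memory([2, 4, 8, 2], physical_gb=16))
```

Note that the check uses allocated memory, not memory actually in use, because unused RAM inside one virtual machine cannot be lent to another.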

Addressing clock drift issues

It is common for database applications to need accurate timestamps for data. Operating systems employ various techniques to minimize clock drift, where the time on the computer diverges from the actual time. Multiprocessor virtual machines can introduce additional clock drift because of how processor resources are shared. By default, Hyper-V Integration Services synchronize the virtual machines with the physical machine. If you want to treat the virtual machine more like a physical machine, you can disable the time synchronization in Integration Services and instead use synchronization with an external time source or a primary domain controller (PDC) emulator.
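Whichever synchronization method you choose, it helps to quantify drift rather than guess at it. The sketch below compares a timestamp read in the virtual machine with one from a trusted reference; how you obtain the two readings is left open, and the one-second tolerance is an arbitrary example value, not a recommendation.

```python
from datetime import datetime, timedelta, timezone


def clock_drift(guest_time, reference_time):
    """Return guest minus reference; positive means the guest runs ahead."""
    return guest_time - reference_time


def exceeds_tolerance(guest_time, reference_time, tolerance=timedelta(seconds=1)):
    """Return True if the absolute drift is larger than `tolerance`."""
    return abs(clock_drift(guest_time, reference_time)) > tolerance


# Example readings taken at (nominally) the same instant.
reference = datetime(2009, 6, 1, 12, 0, 0, tzinfo=timezone.utc)
guest = reference + timedelta(seconds=3)

print(clock_drift(guest, reference))
print(exceeds_tolerance(guest, reference))
```

Logging the drift at regular intervals shows whether the synchronization you have configured is keeping the virtual machine within the accuracy your application needs.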

Backup considerations

SQL Server backup strategies have to consider both data loss and catastrophic loss of the server. The Hyper-V Volume Shadow Copy Service (VSS) Writer Integration Component enables virtual machines to be backed up so that VSS-aware applications running in virtual machines are backed up in an application-consistent manner. When Integration Services are installed, VSS on the physical machine coordinates with VSS in the virtual machine. Having backups of virtual hard disk files and configuration files allows a virtual machine to be restored to a different physical machine.

When planning a data backup strategy, it is best to think of a virtual SQL Server instance as being like a physical SQL Server instance. The real value of SQL Server backups is the ability to do point-in-time recovery. This requires true SQL Server backup tools and strategies. Running SQL Server inside a virtual machine doesn’t change that. Backups of the Hyper-V server do not eliminate the need for traditional SQL Server backups.

Advantages of physical to virtual migration

Existing physical machines can be converted to virtual machines and continue operating just as they did when they were physical machines. This also opens up new possibilities. When a physical or virtual machine isn’t functioning properly, a virtual copy can be made. The problematic machine can be fixed or replaced and brought back online while preserving the problem condition in the virtual copy. The virtual machine can be debugged at length without disturbing the original environment from which it came.

System Center Virtual Machine Manager (SCVMM) 2008 has a tool for converting physical machines to virtual machines, which is known as P2V. It can also do virtual-to-virtual or V2V conversions of Microsoft Virtual Server or VMware virtual machines. You may also use third-party conversion tools that can be purchased or even obtained for free.

SCVMM conversion can be valuable in troubleshooting difficult-to-reproduce problems. Instead of telling your end user that a problem can’t be reproduced, you can use SCVMM 2008 to do a P2V of the problem machine. You can then provide the virtual machine to support personnel, who can analyze the problem at length without disrupting or inconveniencing the user.

Test environments and virtualization

Virtual machines are well suited for creating test environments. To obtain the maximum value of a test environment, it must be valid and complete. Although a successful P2V provides a valid representation of a physical machine, it may not by itself comprise a complete test environment. For example, to test a global deployment of a SQL Server distributed database application, you would probably need a complete environment consisting of many virtual machines. Multiple virtual SQL Servers would be utilized. Each would be configured to the time zones used at corporate datacenters around the world. End user client experience testing requires multiple client virtual machines. Regional and language settings would be different in the various client VMs to match the corporate environment.

Test environments are generally much more dynamic than production environments. To enable agility, templates and prebuilt building blocks such as virtual hard disks and ISO files are needed. ISO files are image copies of physical optical media such as DVDs and CDs. Although Hyper-V supports installing from physical optical media, ISO image files are a better choice because they are read at the speed of the hard drive they reside on. Optical drives are much slower than hard drives. SCVMM 2008 provides an organized framework for managing virtual building blocks and assets such as ISO files and virtual machine templates. Visual Studio Team System 2010 has the Microsoft Test and Lab Manager which builds upon SCVMM 2008 and provides a comprehensive test environment.

Summary

Although this is the end of our discussion of running SQL Server in a virtual machine, it is also the beginning of planning an effective virtualization strategy. Remember that a virtual machine is still a machine. The same design patterns for physical machines apply to virtual machines, and effective management policies are as applicable as (perhaps more so than) in a physical environment. Extend your design patterns by taking into consideration the unique characteristics of virtual machines to ensure success.

About the author

John Paul Cook is a SQL Server application DBA based in Houston, Texas. In addition to writing about database application development and virtualization, he speaks about SQL Server at conferences and user group meetings. John is particularly interested in using version control and virtualization to bring agility and reliability to the systems development lifecycle. His interests also include database performance tuning and deployment. You can read his blog at http://sqlblog.com/blogs/john_paul_cook/default.aspx.