Hour 22 Power Scripting with awk and Perl

The evolutionary path of a good system administrator usually goes from commands to pipes to aliases to shell scripts to something bigger. The “something bigger” step is usually C, C++, or Perl, but many system administrators opt to take the intermediate step of learning how to use the powerful awk language along the way.

Throughout this book, we’ve explored sophisticated Unix interaction, and the last hour (or perhaps hour and a half!) was focused on learning how to get the most out of shell scripts, including a number of programming-like structures and examples.

Sooner or later in your long journey as a sysadmin, you’ll find that shell scripts are too confining. Maybe you’ll be doing complex math, and all the calls to expr make your head swim. Maybe you’ll be working with a data file with many columns, and repeated calls to cut no longer, ahem, cut it. At this point, you’ve outgrown shell scripts, and you’re ready to move to awk or Perl.

In this hour we’ll have a brief overview of awk, the original pattern-matching programming language in the Unix environment, then spend some time looking at how the popular Perl language can help with many common system administration tasks. Needless to say, it’s impossible to convey the depth and sophistication of either language in this brief space, so we’ll also have some pointers to other works, online and off, to continue the journey.

In this hour, you will learn about

• The oft-forgotten awk language

• How to write basic Perl programs

• Advanced Perl programming tips and tricks

The Elegant awk Language

Named after its inventors Aho, Weinberger, and Kernighan, awk has been part of the Unix environment since the late 1970s, and it is included with every current Unix and Linux distribution.

awk is ideally suited to the analysis of text and log files, using its “match and act” method of processing. An awk program is basically a series of conditions, each with a set of actions to perform when that condition is met (for example, when a particular string is found). Think of the -exec clause in the find command, combined with the searching capabilities of grep. That’s the power of awk.

Task 22.1: An Overview of awk

Conditions in awk are most often regular expressions, similar to those available with the grep command, although, as you’ll see later in this task, they can also be logical expressions. The statements to perform upon matching the condition are enclosed in curly braces.

1. Let’s say we want to scan through /etc/passwd to find user accounts that don’t have a login shell defined. To do this, we look for lines that end with a colon. In awk, for all lines that end with a colon, print that line:

$ awk '/:$/ {print $0}' /etc/passwd
bin:x:1:1:bin:/bin:
daemon:x:2:2:daemon:/sbin:
adm:x:3:4:adm:/var/adm:
lp:x:4:7:lp:/var/spool/lpd:
mail:x:8:12:mail:/var/spool/mail:

The pattern to match is a regular expression, surrounded by slashes. In this instance, the pattern /:$/ should be read as “match a colon followed by the end of the line” (the $ anchors the match to the end of the line). The action for the pattern follows immediately, in curly braces: {print $0}. Prior examples in this book have shown that you can print a specific field by specifying its field number (for example, $3 prints the third field in each line), but here you can see that $0 is shorthand for the entire input line.

2. Printing the matching line is sufficiently common that it is the default action. If your pattern doesn’t have an action following it, awk assumes that you want {print $0}. We can shorten up our program like so:

$ awk '/:$/' /etc/passwd
bin:x:1:1:bin:/bin:
daemon:x:2:2:daemon:/sbin:
adm:x:3:4:adm:/var/adm:
lp:x:4:7:lp:/var/spool/lpd:
mail:x:8:12:mail:/var/spool/mail:

At this point, our awk program acts exactly like a simple call to grep (grep -E ':$' /etc/passwd).
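If you’d like to convince yourself of that equivalence, here’s a small sketch that runs both commands against the same file and compares the results. The function name same_as_grep is hypothetical; any text file will do as input.

```shell
#!/bin/sh
# Hypothetical helper: run awk's default-print form and grep against the
# same file, and succeed only if they select exactly the same lines.
same_as_grep() {
    file=$1
    a=$(awk '/:$/' "$file")
    g=$(grep ':$' "$file")
    [ "$a" = "$g" ]
}
```

For example, same_as_grep /etc/passwd && echo identical should print identical on any system.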

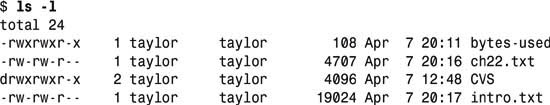

3. Much of the text processing you’ll be faced with in Unix is field- or column-based. awk does its best to make handling this input as easy as possible by automatically creating special variables for the fields of a line. For example, take the output from ls -l:

Given such a listing as input, awk sees each line as a series of fields, separated by runs of whitespace (spaces or tabs). The first line, total 24, has two fields, and the other four each have nine fields.

As awk reads each line of its input, it splits the line into the special variables $1, $2, $3, and so on, one for each field. $0 holds the entire current line, and NF holds the number of fields on the current line. Notice that NF has no dollar sign: in awk, $ selects a field by number, so NF is the count itself, while $NF is the last field on the line.

It’s simple, then, to calculate the total number of bytes used by the files in the directory. Sum the values of the fifth column:

$ ls -l | awk '( NF >= 9 && $1 !~ /^d/ ) { total += $5 } END { print total }'
33310
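To watch awk’s field splitting at work, the following sketch prints NF, the first field, and the last field ($NF) for each line it reads. The function name fieldinfo is hypothetical, and the sample input in the usage note is made up to resemble ls -l output.

```shell
#!/bin/sh
# Hypothetical helper: for each input line, print the number of fields (NF),
# the first field ($1), and the last field ($NF).
fieldinfo() {
    awk '{ print NF, $1, $NF }' "$@"
}
```

Feeding it a total line plus one file line, as in printf 'total 24\n-rw-r--r-- 1 taylor staff 1024 Apr 19 04:30 notes.txt\n' | fieldinfo, prints 2 total 24 for the first line and 9 -rw-r--r-- notes.txt for the second, which is exactly why the byte-counting pattern tests for NF >= 9.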

4. A valuable improvement to this is to drop the awk commands into a separate .awk file:

$ cat bytes.awk
( NF >= 9 && $1 !~ /^d/ ) { total += $5 }
END { print total " bytes in this directory." }

This can then be easily used as:

$ ls -l | awk -f bytes.awk
33310 bytes in this directory.

A further refinement is to exploit the fact that an executable script in Unix can name its preferred command interpreter on its first line, using the #! prefix. Make sure you have the right path for your awk interpreter by using which awk (or locate):

$ cat bytes-used
#!/usr/bin/awk -f
( NF >= 9 && $1 !~ /^d/ ) { total += $5 }
END { print total " bytes used by files" }
$ chmod +x bytes-used
$ ls -l | ./bytes-used
33310 bytes used by files

In this more sophisticated awk program, the pattern isn’t just a regular expression, but a logical expression. To match, awk checks to ensure that there are at least nine fields on the line (NF >= 9), so that we skip that first total line in the ls output. The second half of the pattern checks to ensure that the first field doesn’t begin with d, to skip directories in the ls output.

If the condition is true, then the value of the fifth field, the number of bytes used, is added to our total variable.

Finally, after all the input has been read, awk runs the special block of code labeled END and prints the value of total. Naturally, awk also supports a corresponding BEGIN block that runs before any input is read, as you’ll see in the following example.
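One wrinkle: if no lines match, print total prints an empty line, because an awk variable that was never assigned is an empty string. Here’s a sketch of a variant that initializes its counters in BEGIN and reports with printf "%d", so it always prints a number. The function name bytes_used and the extra file count are illustrative additions, not part of the original script.

```shell
#!/bin/sh
# Hypothetical variant of bytes-used: BEGIN initializes the counters,
# the pattern/action pair accumulates, and END reports the totals.
# printf "%d" guarantees numeric output (0) even when nothing matched.
bytes_used() {
    awk '
        BEGIN { total = 0; files = 0 }
        ( NF >= 9 && $1 !~ /^d/ ) { total += $5; files++ }
        END { printf "%d bytes in %d files\n", total, files }
    ' "$@"
}
```

Strictly speaking the BEGIN line is optional (the %d conversion alone fixes the empty output), but it makes the starting state explicit. Use it as ls -l | bytes_used.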

5. You’re not limited to fields separated by whitespace. awk’s special FS (field separator) variable lets you use any character, or even a regular expression, as the field separator. Let’s improve our earlier example of finding entries in /etc/passwd that don’t have a login shell specified. The new version is called noshell:

$ cat noshell
#!/usr/bin/awk -f
BEGIN { FS = ":" }
( $7 == "" ) { print $1 }

The code in the BEGIN block, executed before any input is read, sets FS to a colon. Then, as each line is read, if the seventh field is blank or nonexistent, awk prints the first field (the account name):

$ ./noshell /etc/passwd
bin
daemon
adm
lp
mail
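For quick one-off jobs you don’t even need a script file: awk’s -F option sets FS before any input is read, just as the BEGIN block does. Here’s a sketch of noshell as a one-liner wrapped in a shell function; the function name noshell_oneliner is hypothetical.

```shell
#!/bin/sh
# Hypothetical one-line equivalent of the noshell script:
# -F: sets FS to a colon, like BEGIN { FS = ":" } in the script file.
noshell_oneliner() {
    awk -F: '$7 == "" { print $1 }' "$@"
}
```

A line with only six fields (no seventh field at all) also matches, because a nonexistent field compares equal to the empty string.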

There’s quite a bit more you can do with awk, and there are even a few books on how to get the most out of this powerful scripting and pattern processing language. A Web site worth exploring for more awk tips is IBM’s developerWorks site (www.ibm.com/developerworks/), where there’s a very good tutorial on advanced awk programming at www.ibm.com/developerworks/linux/library/l-awk1.html.

Basic Perl Programming

Designed as a hybrid programming environment that offered the best of shell scripting, awk, and lots of bits and pieces stolen from other development environments, Perl has quickly grown to be the language of choice in the system administration community.

There are a couple of reasons for this, but the greatest reason for using Perl is that it’s incredibly powerful, while reasonably easy to learn.

Task 22.2: Basic Perl

Perl takes the power of awk and expands it even further. Perl’s abilities include the most powerful regular expressions available, sophisticated string handling, easy array manipulation, lookup tables with hashes, tight integration with SQL databases—and those are just the basics!

1. Unless you use its -n or -p command-line switches, Perl doesn’t automatically iterate through a file the way awk does, but it’s not much more difficult. Here’s our noshell program in Perl:

$ cat noshell.pl
#!/usr/bin/perl -w
use strict;
while (<>) {
    chomp;
    my @fields = split /:/;
    print $fields[0], "\n" unless $fields[6];
}
$ ./noshell.pl /etc/passwd
bin
daemon
adm
lp
mail

The program steps through either standard input or the files specified on the command line. Each line has its trailing newline removed with chomp, and then split breaks the line into individual fields, using a colon as the field delimiter (the /:/ argument to split), and pours those fields into the @fields array. The my declaration limits @fields to the body of the while loop, so a fresh array is created for each line.

Finally, we print the first field (like many programming languages, Perl indexes starting at zero, not one, so $fields[0] is the equivalent of the awk $1 variable) if the seventh field doesn’t exist or is blank.

Note that the variable @fields starts with an @ sign, yet we refer to the 0th element of fields with a dollar-sign prefix: $fields[0]. This is one of those quirks of Perl that constantly trips up beginners. Just remember to use the @ when referring to an entire array, and the $ when referring to a single element.

2. Perl uses a special variable called $_ as the default argument for many functions and operators. Think of $_ as meaning “it,” where “it” is whatever the current block of Perl code is working on at the moment.

To be explicit, the preceding program can be written as:

#!/usr/bin/perl -w
use strict;
while ( $_ = <> ) {
    chomp $_;
    my @fields = split /:/, $_;
    print $fields[0], "\n" unless $fields[6];
}

You can also use your own variables instead of using $_ if you find that to be more readable.

#!/usr/bin/perl -w
use strict;
while ( my $line = <> ) {
    chomp $line;
    my @fields = split /:/, $line;
    print $fields[0], "\n" unless $fields[6];
}

Which style you use depends on your needs and how you conceptualize programming in Perl. In general, the larger the program or block of code, the more likely you are to use your own variables instead of the implicit $_.

3. Perl was built on Unix systems, and is specifically geared to many of the common system administration tasks you’ll face. A typical problem might be checking to ensure that none of the users have a .rhosts file in their home directory with inappropriate permissions (a common security hole on multiuser systems).

Here’s how we’d solve this problem in Perl:

$ cat rhosts-check
#!/usr/bin/perl -w
use strict;
while ( my ($uid,$dir) = (getpwent())[2,7] ) {
    next unless $uid >= 100;
    my $filename = "$dir/.rhosts";
    if ( -e $filename ) {
        my $permissions = (stat($filename))[2] & 0777;
        if ( $permissions != 0700 ) {
            printf( "%lo %s\n", $permissions, $filename );
            chmod( 0700, $filename );
        } # if
    } # if file exists
} # while walking thru /etc/passwd

This script introduces quite a few Perl mechanisms that we haven’t seen yet. The main while loop makes repeated calls to the Perl function getpwent(), which returns successive entries from the system’s password database (normally /etc/passwd). This is safer and easier than parsing /etc/passwd yourself, and it keeps the code shorter and simpler.

The getpwent() function returns a list of information about each user (name, password, UID, GID, and so on), but we’re only interested in the third and eighth elements, the UID and the home directory. So we take a list slice of elements 2 and 7 (remember that indexing starts with zero, not one) and assign those to $uid and $dir, respectively, in one easy operation.

The next line skips to the next iteration of the loop if $uid isn’t at least 100. This sort of “do this unless that” way of expressing logic is one of the ways in which Perl allows flexible code. This specific line lets us skip checking system and daemon accounts, which on many systems have UIDs below 100. Ordinary user accounts are given higher UIDs, although many Linux distributions start them at 500 or 1000, so adjust the threshold to suit your system.

After that, we build a filename from the user’s home directory and .rhosts, and check whether the file exists with the -e operator, exactly as we’d use it with test in shell scripts. (That’s no coincidence: Perl contains many best practices of this nature.) If it does, we extract the file’s permission bits using the stat() function and make sure that they’re set to 0700 as desired. Finally, if the permissions aren’t up to snuff, we print a message so we can see whose files we’ve modified, and call Perl’s chmod() function to set the permissions for us.

Running this script (as root, since only root can change the permissions of other users’ files) produces:

$ rhosts-check
711 /home/shelley/.rhosts
777 /home/diggle/.rhosts

This just scratches the surface of the system administration capabilities of Perl within the Unix environment. Depending on how you look at it, the depth and sophistication of Perl is either a great boon, or an intimidating iceberg looming dead ahead.

Like any other development environment, however, Perl is malleable—but if you choose to create obscure, cryptic and ultra-compact code, you’ll doubtless have everyone else baffled and confused.

Take your time and learn how to program through the many excellent examples available online and you’ll soon begin to appreciate the elegance of the language.

Advanced Perl Examples

Perl’s regular expressions make searching for patterns in text simple, and hashes enable us to easily create lookup tables, named numeric accumulators, and more.

Task 22.3: Advanced Perl Capabilities

There are many directions in which you can expand your Perl knowledge once you get the basics. Let’s look at a few.

1. We’ll combine searching and a hash-based lookup table in the following example to analyze the Apache Web server’s access_log file.

#!/usr/bin/perl -w
use strict;
use Socket;
use constant NDOMAINS => 20;

my %domains;

while (<>) {
    /^(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}) / or do { warn "No IP found"; next };
    ++$domains{ domain($1) };
}

my @domains = reverse sort { $domains{$a} <=> $domains{$b} } keys %domains;
@domains = @domains[0..NDOMAINS - 1] if @domains > NDOMAINS;

for my $key ( @domains ) {
    printf( "%6d %s\n", $domains{$key}, $key );
}

our %domain_cache;

sub domain {
    my $ip = shift;
    if ( !defined $domain_cache{$ip} ) {
        my @quads = split( /\./, $ip );
        my $domain = gethostbyaddr( pack('C4', @quads), AF_INET );
        if ( $domain ) {
            my @segments = split( /\./, $domain );
            $domain_cache{$ip} = join( ".", @segments[-2,-1] );
        } else {
            $domain_cache{$ip} = $ip;
        }
    }
    return $domain_cache{$ip};
}

When run, the output is quite interesting:

$ topdomains access_log
   781 aol.com
   585 cox.net
   465 attbi.com
   456 pacbell.net
   434 net.sg
   409 rr.com
   286 209.232.0.86
   267 Level3.net
   265 funknetz.at
   260 194.170.1.132
   243 takas.lt
   200 com.np
   196 mindspring.com
   186 209.158.97.3
   160 uu.net
   160 ca.us
   155 202.54.26.98
   148 att.net
   146 ops.org
   145 ena.net

We’re interested in domain names here, not individual hostnames. Many ISPs, such as AOL, have dozens of different hosts (say, proxy-02.ca.aol.com) that generate hits, so 500 hits might be spread across 50 different hosts. Counting by domain gives a more accurate view.

2. The Apache access_log file has a line for each request on the server, and the IP address is the first field on each line:

$ head -1 /var/log/httpd/access_log
24.154.127.122 - - [19/Apr/2002:04:30:31 -0700] "GET /house/ HTTP/1.1" 200 769 "-" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; .NET CLR 1.0.3705)"

The while loop in the preceding Perl code walks through whatever file or files are specified on the command line. Specifying files on the command line instead of piping them through standard input is a good idea, because we can send many files at once.

The regular expression line checks whether there’s an IP address at the start of the line that was just read: four groups of one to three digits, separated by periods and followed by a space. The parentheses capture the address in $1. If the match fails, the program outputs a warning to standard error and skips to the next input line.

Once we have the IP address, captured in $1, we call the domain() subroutine, described later, to do a reverse lookup from IP address to domain name. The resulting domain name is used as a key into the %domains hash, and the ++ operator either increments the existing count or, for a domain we haven’t seen yet, starts it at 1.

Once the while loop has exhausted its input, it’s time to find the top 20 domains. The keys function returns the list of keys in the hash. We then sort that list, but not by domain name: we’re interested in sorting by the hash value (the hit count) that corresponds to each domain, and the code block after sort specifies this. Finally, the sorted list is reversed, so that the domains with the most hits are at the top.

Now that we have a list of domains, we extract and loop over the top 20 domains. (Of course, we could have made it 10, 100, or 47 domains by changing the NDOMAINS constant at the top of the program.) Just as in shell scripts, Perl enables us to loop through the values of an array. Inside our loop, we use the printf() function to neatly format a line showing the number of hits and the domain name.

3. What about the translation from IP address to domain name? It’s complex enough that it makes sense to wrap it in a subroutine, and the calling program doesn’t need to know about the sneakiness we pull off to speed things up, as you’ll see later.

The first thing we do is check whether we’ve already found a value for this IP address by looking it up in the hash %domain_cache. If a value exists, there’s no need to look it up again, so we return the cached value. If there isn’t one, we do a reverse DNS lookup by breaking apart the IP address, packing it into a special binary format, and using Perl’s gethostbyaddr() function. If gethostbyaddr fails, we fall back to using the IP address; otherwise we use the last two segments of the hostname. Finally, the calculated domain name is stored in the %domain_cache hash for the next time this function is called.

4. Even given that sophisticated example, Perl’s greatest strength might not lie in the language itself, but in its huge community and base of contributed code. The Comprehensive Perl Archive Network, or CPAN (www.cpan.org), is a collection of modules that have been contributed, refined, and perfected over the years. Many of the most common modules are shipped with Perl, and are probably installed on your system.

Here’s a script that uses the Net::FTP module to automatically fetch the file of recent uploads from the CPAN:

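#!/usr/local/bin/perl -w
use strict;
use Net::FTP;
my $ftp = Net::FTP->new('ftp.cpan.org')
    or die "Cannot connect to ftp.cpan.org: $@";
# Anonymous FTP: the password is traditionally an e-mail address;
# '-anonymous@' is the placeholder used in the Net::FTP documentation.
$ftp->login('anonymous', '-anonymous@')
    or die "Cannot log in: ", $ftp->message;
$ftp->cwd('/pub/CPAN/')
    or die "Cannot change directory: ", $ftp->message;
$ftp->get('RECENT')
    or die "Cannot fetch RECENT: ", $ftp->message;
$ftp->quit;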

The $ftp variable is actually an object that encapsulates all the dirty work of FTP connections, allowing you to concern yourself only with the specifics of your task at hand.

Like many of the hours in this book, this one is the tip of an iceberg, and there’s plenty more you can learn about the powerful Perl programming language. Certainly having the right tool at the right time can turn a major headache into a delightful victory. After all, who’s the boss? You, or your Unix system?

Summary

This hour has offered a very brief discussion of some of the most powerful capabilities of both the awk and Perl programming environments. It leads to the question: Which should you use, and when? My answer:

• Use shells for very simple manipulation, or when running many different programs.

• Use awk for automatically iterating through lines of input text.

• Use Perl for extensive use of arrays and hashes, or when you need the power of CPAN.

Q&A

Q So which has more coolness factor, Perl or awk?

A No question: Perl. Just pop over to somewhere like BN.com and search for Perl, then search for awk. You’ll see what I mean.

Q What alternatives are there to Perl, awk, and shell scripting for a Unix system administrator?

A There are some other choices, including Tcl and Python, and you can always pop into C, C++, or another formal, compiled language. Remember, though, the right tool for the job makes everything progress more smoothly.

Workshop

Quiz

1. Is it true that everything you can do in awk you can also accomplish in Perl?

2. What’s one of the more frustrating differences between Perl and awk (think arrays)?

3. In awk, positional variables are referenced starting with a special character, but nonpositional variables, like the number of fields in a line, don’t have this special character prefix. What character is it?

4. What’s the two-character prefix you can use in scripts to specify which program should interpret the contents?

5. What is the greatest advantage to using -w and use strict in Perl?

6. What’s the difference between a variable referenced as $list and @list?

Answers

1. It is indeed. Something to think about when you pick which language to learn.

2. Perl indexes starting at zero, but awk uses one as its index base, so $1 is the first value in awk, but $list[1] is the second value in Perl.

3. The special character is the dollar sign ($).

4. The magic two-character prefix is #! and it only works if the file is set to be executable, too.

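5. Many typographical errors are caught by the Perl interpreter before you start relying on your program: use strict refuses to compile code that uses undeclared (often misspelled) variable names, and -w warns about suspicious constructs such as the use of uninitialized values.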

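6. A variable referenced with an @, such as @list, is an entire array of values, whereas $list is a separate scalar variable that holds a single value and has nothing to do with @list. To reach a single element of the array, use the $ prefix with a subscript, as in $list[0]. Note that the same array can be referenced both ways depending on what you’re trying to accomplish!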

The last two hours of the book explore the important Apache Web server included with just about every version of Unix shipped. The next hour explores basic configuration and setup, and the last hour will delve into virtual hosting.