CHAPTER 11

ELICITING CRITICAL THINKING SKILLS FOR SCENARIO-BASED e-LEARNING

When doing a task analysis, have you ever asked a subject-matter expert questions such as “Why did you do that?” or “What made you think of that?” and gotten responses such as “Well, you just know,” “It’s kind of intuitive,” or “You can’t really teach that; it’s just something you have to get a feeling for”? Your expert is not trying to be obtuse. He or she simply has a lot of tacit knowledge: knowledge that cannot be readily articulated. Experts literally can’t tell you what they know. Your challenge, especially when planning courses based on ill-structured problems, is to use special elicitation techniques that help experts reveal what they know.

As you’ve seen throughout the book, designing and developing scenario-based e-learning can be resource-intensive, especially compared to receptive or even directive designs. However, the resources you invest in preparing scenario-based e-learning lessons or courses will be largely wasted unless the knowledge and skills you incorporate are valid. By valid I mean that the specific behaviors illustrated and behavioral responses elicited in your scenarios mirror best practices linked to operational goals. Of course, this is true for any form of organizational training. However, scenario-based e-learning is more likely than traditional training to focus on critical thinking skills—knowledge and skills that cannot be readily observed by you or described by your subject-matter experts.

Scenario-based e-learning has the potential to accelerate expertise so that the actions and decisions of your most senior and effective performers can be emulated by more junior staff. But unless time is devoted to eliciting those behaviors and the knowledge that underpins them, you may end up with a multimedia training package that is engaging and popular but only gives an illusion of building expertise.

WHAT IS KNOWLEDGE ELICITATION?

The basic premise of knowledge elicitation is that tacit knowledge and skills must be drawn out indirectly from expert experience. When you directly ask experts how or why they resolve particular situations, they can often provide only limited information. To get the real story, you need to dig into their brains by using inductive task analysis methods. Rather than ask them to describe the principles, rationale, or methods they use, you will obtain better data by having them talk about what they are doing—either while they are doing it or by describing past real situations. By carefully recording, probing, and analyzing what several experts say and do in a target problem situation, you can define their actions and also identify the behind-the-scenes knowledge that led to those actions.

WHAT DO YOU THINK?

As you consider the what, when, and how of knowledge elicitation for your projects, check the options below that you think are true. You will find the answers by reading through this chapter, or skip to the end, where I summarize my thoughts.

THREE APPROACHES TO KNOWLEDGE ELICITATION

In this chapter I describe three approaches to knowledge elicitation that you can adapt to your own context. All three techniques involve a two-to-three-hour recorded interview of an expert, a transcription of the interview, and an analysis of the transcription to identify critical thinking skills. The three approaches are (1) concurrent verbalization, (2) after-the-fact stories, and (3) reflection on recorded behaviors. In Table 11.1, I summarize each of these approaches. Keep in mind that they are not mutually exclusive and you might benefit from combinations of the three.

TABLE 11.1. Three Approaches to Knowledge Elicitation

| Approach | Description |
| --- | --- |
| Concurrent Verbalization | The performer verbalizes her thoughts aloud as she resolves a problem or performs a task related to the training goal. |
| After-the-Fact Stories | The performer thinks back on tasks performed or problems solved in the past that are relevant to the training goals. |
| Reflection on Recorded Behaviors | The performer verbalizes her thoughts aloud as she reviews a recording of her performance. Recordings could include video, audio, keystroke, or eye tracking. |

Concurrent Verbalization

In concurrent verbalization, you ask the expert to perform a task or solve a problem aligned to the class of problems that is the focus of your lessons. The problem may be a “real” work task the expert performs on the job or a simulated problem. As she works on the problem, the expert verbalizes aloud all of her thoughts. In Figure 11.1 you can review typical directions asking experts to verbalize while problem solving. The actions and verbalizations are recorded for later analysis. Occasionally, an expert might become absorbed in the problem and forget to talk aloud. Whenever the expert is silent for more than three to five seconds, the interviewer gives her a gentle reminder to continue talking.

FIGURE 11.1. Typical Directions for Concurrent Verbalization.

You can probably immediately think of some work contexts that would not lend themselves to concurrent verbalization. First, if the work involves interpersonal communication, such as overcoming objections as part of a sales cycle or conducting a hiring interview, the expert cannot verbalize her thoughts while she is also talking to the other party. Therefore, concurrent verbalization applies only to problems that do not require talking during performance. Second, some problems will not lend themselves to this method because of privacy concerns (e.g., financial consulting), hazardous situations (e.g., military operations), or scarce problem occurrences (e.g., emergency responses to unusual events).

Sometimes you can set up a simulation that is close enough to the real-world situation that you could use concurrent verbalization. For example, for troubleshooting infrequent failures in specific equipment, the failure could be replicated in samples of the equipment, which could then be used as a model problem for experts to resolve. Likewise, in some settings, realistic simulators are already in place that could be programmed to emulate the types of problems that are the focus of your training. For example, realistic simulators are common training devices in medical settings, computer networking, military operations, aircraft piloting, and power plant control operations, to name a few.

After-the-Fact Stories

If concurrent verbalization is not feasible, consider harvesting expert tacit knowledge and skills through stories of past experiences. The stories should be descriptions of actual problems faced and solved by expert performers. There are several approaches to finding useful stories and I will summarize two.

Crandall, Klein, and Hoffman (2006) describe in detail the “critical decision method” for eliciting and extracting knowledge from expert descriptions of past experience. The interview process involves five main phases: (1) helping the expert to identify a past experience that will incorporate the knowledge and skills relevant to the instructional goal, (2) eliciting a high-level overview of the problem and how it was resolved, (3) recording and verifying a timeline or sequence of events of the problem solution, (4) reviewing the story in depth with frequent probing and amplifying questions from the interviewer, and (5) making a final pass with “what-if” questions. In total, the interview can require one or two hours and, as with all knowledge elicitation, it must be recorded and transcribed for later analysis. In Figure 11.2 I summarize some typical directions given to experts at the start of the interview. Later in this chapter I include some examples from a critical decision method interview.

FIGURE 11.2. Typical Directions to Initiate the Critical Decision Method.

A second approach to getting after-the-fact stories, described by Van Gog, Sluijsmans, Joosten-ten Brinke, and Prins (2010), involves prework assignments to a small team of three to six experts, followed by synchronous collaborative team discussions of the completed assignments. First, ask each team member to work alone and write down three incidents that he or she faced in the problem domain. Ask the experts to write about a situation that was relatively simple to resolve, a second situation of moderate difficulty, and a third that was quite challenging. In Figure 11.3 I include directions for team members that you could adapt for your context. Ask each expert to bring a typed copy of the three situations to a meeting of approximately two or three hours. Begin the group discussion with the problems that each expert classified as relatively simple. Ask each expert to read his description in turn. Alternatively, make copies of each scenario and ask experts to read them. After hearing or reading each sample, ask the team for consensus that the problem indeed qualifies as relatively simple (or moderately challenging or very challenging). Then, using all the scenario samples of a given level as a resource, ask the team to identify the key features that characterize situations and solutions at that level. Use a wall chart, whiteboard, or projected slides to record features in three columns: simple, moderate, and complex.

FIGURE 11.3. Sample Directions for Identifying Past Scenarios.

Several aspects of this method appeal to me. First, it offers a useful approach to identifying the features that distinguish easier and more difficult scenarios, as well as a repository of actual scenarios to be adapted for the training. Since scenario-based e-learning courses should incorporate a range of complexity among a given class of problems, the features that distinguish simple from complex will inform your problem progression. In addition, basing the analysis on problems derived from actual experiences will be more valid than simply asking experts: “What makes a problem more complex?”

Second, the prework assignment will save time. Third, the collaboration of a team of experts will yield a less idiosyncratic knowledge base than stories from a single expert. Fourth, from the common guidelines derived from the various solutions, you can define the outcome deliverables of your scenarios. On the downside, this method may not yield the depth of knowledge you would obtain with the critical decision method. However, you could follow up this initial round of work with individual interviews that dive into greater depth on the stories that seem most appropriate for training purposes.

Reflection on Recorded Behaviors

One potential disadvantage of after-the-fact stories is their reliance on memory. As experts think back on past experiences, especially experiences they will share with a group of peers, they may omit or edit important details or events, especially mistakes. Alternatively, they may embellish elements that, upon reflection, seem more important. Finally, they may distort details simply due to memory failure. One approach to making reflections more accurate and detailed involves asking an expert to comment on recorded episodes of problem solutions. For example, a teacher might make video recordings of his classroom activities. Similarly, customer service representatives are routinely audio-recorded during customer interactions. Later, you can review the recordings with the expert and use probing questions to elicit details, such as why he took a particular action or made a specific statement.

Naturally, this method relies on the feasibility of recording events. A video recording would be useful for situations that involve movement; engagement with artifacts, such as troubleshooting a vehicle failure; and interpersonal exchanges, such as those involved in teaching, sales, giving presentations, and so forth. For tasks that can be completed on a computer, you could record on-screen actions as well as focus of attention via eye-tracking methodologies. On replay, the eye-tracking data can be superimposed on the recorded screen changes, with or without a record of keystrokes. If you are unable to record real-world events, you might be able to set up model problem situations realistic enough to elicit authentic behaviors from expert performers. For example, in a pharmaceutical setting, doctor consultants could engage in recorded role plays with experienced account agents. Or equipment failures could be re-created for experts to identify and repair.

WHICH ELICITATION METHOD SHOULD YOU USE?

First, you need to decide whether and where to use these resource-intensive knowledge elicitation methods. I recommend blending one or more of these approaches with a traditional job analysis. Using traditional job analysis methods, identify the more critical tasks that will be the focus of problem-centered learning: the tasks that make a difference to operational outcomes. Second, isolate the tasks or task segments that involve knowledge and skills likely to reside as tacit knowledge in expert memory. For example, if during a task analysis interview you hear comments like “Well, it’s just intuitive” or descriptions of actions that are very generalized or vague, you might bookmark that task as a candidate. Third, consider the volatility of the tasks. If the critical thinking skills underlying given problems are likely to change substantially and relatively quickly, you have to decide whether the investment required to elicit detailed knowledge and skills is worth it.

Once you have identified some tasks or classes of problems that are good candidates for knowledge elicitation, decide which method is best. Often, your context will dictate that decision for you. As I mentioned previously, in many situations concurrent verbalization won’t be a viable option. If not, consider whether there is a way to record problem-solving episodes in a real-world or simulated situation and ask experts to view the recordings and reflect on them. If reflection on recorded behaviors is not practical, then you will have to rely on after-the-fact stories by adapting one of the methods I described previously.

Evidence on Knowledge Elicitation Methods

Van Gog, Paas, van Merrienboer, and Witte (2005) compared the effectiveness of the three methods I’ve discussed in this chapter. In their experiment, they asked twenty-six college students to solve troubleshooting problems in computer-simulated electrical circuits. Each individual solved two different problems using each of the three methods. For example, an individual would solve a problem and voice his thoughts as he worked (concurrent verbalization). Next, the same individual would solve a different problem silently and, after completing it, describe what he did and why (after-the-fact stories). Finally, the same individual would solve a third problem in silence while his keystrokes and eye fixations were recorded. These records were played back while the individual described what he did and why (reflection on recorded behaviors).

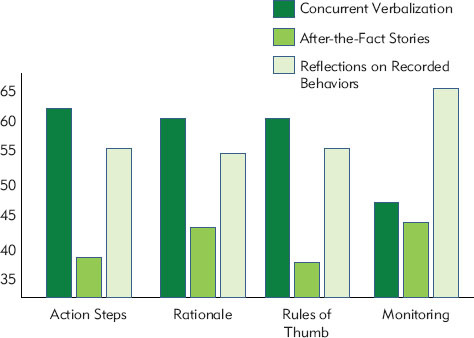

The team reviewed all of the verbalizations, classifying them into four types of information: actions taken, rationale for actions, rules of thumb, and monitoring thoughts (more on monitoring later in this chapter). In Figure 11.4 you can see the results of their comparison. Overall, concurrent verbalization yielded the most information, and after-the-fact stories yielded the least. However, as the research team pointed out, in the after-the-fact condition the interviewer did not ask additional probing questions, which may have limited the amount of information that would normally surface with this method. In addition, in the recorded condition, the problem solver viewed the recording in real time and could not pause or replay episodes. Future research should give us more detailed guidance on when each method yields the best results. Some methods might prove better suited to particular types of problems, contexts, and knowledge.

FIGURE 11.4. Amount of Knowledge Elicited by Three Methods.

Adapted from Van Gog, Paas, Van Merrienboer, and Witte (2005).

TYPES OF KNOWLEDGE AND SKILL TO ELICIT

As you decide if and how to conduct knowledge elicitation and as you analyze the transcripts of your interviews, you need to define the categories of knowledge and skills most important to your instructional goals. In Table 11.2 I summarize six common general categories of knowledge to consider and illustrate each category with an example from an interview with an emergency room physician. The six types are (1) actions taken, (2) decisions made, (3) cues used, (4) rationale, (5) rules of thumb, and (6) monitoring.

TABLE 11.2. Six Types of Knowledge to Elicit

| Knowledge Type | Description | Example from ER Physician Interview |
| --- | --- | --- |
| Actions Taken | Observable behaviors exhibited during problem solution | In two minutes’ time we did several things simultaneously: we put him on the bed, started oxygen, and called the respiratory therapist. |
| Decisions Made | Mental choices that drove actions | Before we intubated him, I spoke with his wife because I needed to be sure that he would want to be put on a ventilator. |
| Cues Used | Physical signs that led to a decision or an action | He was cyanotic. He was breathing, but the area above his collar bones was pulling in. |
| Rationale | Underlying principles or reasoning for a decision or action | The critical part was determining the cause of his respiratory distress. He had a history of emphysema and asthma and he was near a wood stove, which in the past had kicked off an attack. |
| Rules of Thumb | Underlying heuristics commonly used by expert performers to resolve specific classes of problems | When pulmonary edema causes respiratory distress, the breathing usually sounds wet. His respirations did not sound gurgling. |
| Monitoring | Monitoring and assessment of a situation leading to potential adjustments in actions or decisions | I was pretty certain of my diagnosis and I wanted an X-ray to confirm it. I was not going to wait for the X-ray to decide. I was going to treat him and review the X-ray to confirm. |

Thanks to Gary Klein for data from the emergency room physician interview.

Actions and Decisions

In just about all knowledge elicitation involving problem solving, you will want to identify a combination of actions and decisions. In some situations, such as the ER physician example shown in Table 11.2, there are many actions and you will need to probe for the decisions underlying those actions. In other cases, there is less observable behavior involved. More of the action is going on in the head. For example, a news analyst spends time searching sources on the computer, reviewing hits, refining searches, saving some documents, reviewing documents, and abstracting key information for a desired end-product such as a report. Lengthy periods of inactivity may occur while the analyst is reviewing hits, reading relevant returns, and thinking about how to write the story. In this situation, you will want to identify the thoughts and decisions that underlie the few actions that do occur.

Cues

Cues are an important component of just about all problem solving, and it’s important to identify those cues in order to help others see through the eyes of an expert. Cues involve sights, sounds, feelings—perhaps even smells—all sensory information used by the expert to assess a situation, make a decision or take action, and monitor responses to her actions. Cues are often the basis for an expert response of “Well—it’s intuitive” or “You just know.” It will be important to identify specific cues relevant to the problem solution and to reproduce those cues in your training scenarios.

Rationale and Rules of Thumb

Rationale and rules of thumb are closely related, and you may want to collapse them into a single category. Rationale refers to the reasons behind decisions made and actions taken. Rationale is often based on an interpretation of cues in light of prior experience and domain-specific knowledge. Rationale may involve knowledge components such as facts, concepts, processes, or other domain knowledge.

Rules of thumb are commonly held guidelines or principles that experts use when making decisions or taking actions. Rules of thumb, also called heuristics, tend to be cause-and-effect relationships and are often based on past experience. To identify rules of thumb, look for statements such as “Well—when I see XYZ I generally consider ABC first” or “The fact that XYZ happened tells me that most likely ABC would follow if we did not do D because . . .” or “More often than not, when faced with XYZ, we consider either ABC or FWQ.”

Monitoring

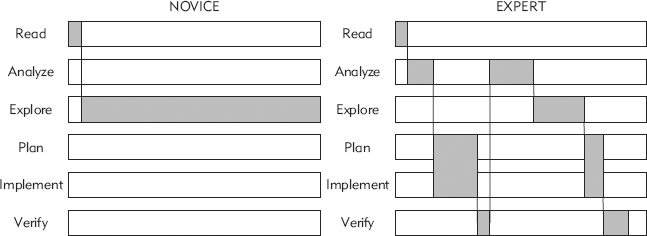

Monitoring involves mental activity used to (1) assess a given situation (based on cues, experience, and knowledge), (2) set goals for optimal outcomes, (3) note situation changes, and (4) adjust activities or decisions based on scenario progress. Psychologists refer to these skills as metacognition, and these types of skills often distinguish expert from junior performance. For example, Schoenfeld (1987) asked graduate mathematics students and expert mathematicians to talk aloud as they solved a challenging problem. He analyzed their monitoring or metacognitive statements and created the graph shown in Figure 11.5. The novices tended to start down a solution path and stick with it no matter what. In contrast, the expert moved more frequently among the problem-solving stages, a pattern that reflects greater monitoring skill. Based on this analysis, Schoenfeld emphasized metacognitive thinking in his practice sessions. For example, as student teams worked on problems, he encouraged metacognitive thinking by asking: “What are you doing now? Why are you doing that? What other course of action have you considered? How is your current course of action working?”

FIGURE 11.5. A Comparison of Problem-Solving Patterns Between Novices and Expert Mathematicians.

From Schoenfeld (1987).

Questions to Elicit Needed Knowledge During the Interview

Having specific categories of knowledge and skills in mind will help you ask productive probing questions during the interview and identify specific examples of those categories when you code the transcript. In Table 11.3 I list some common questions that will help elicit each type of information. Use probing questions such as these only during after-the-fact stories or reflection on recorded behaviors; you do not want to interrupt the expert with questions during concurrent verbalization.

TABLE 11.3. Sample Questions to Elicit Knowledge Types

| Knowledge Type | Sample Questions |
| --- | --- |
| Actions Taken | What did you do? What other alternatives did you consider? What might someone with less experience have done differently? |
| Decisions Made | What decisions did you make before taking the action? What part of this decision led to a desired outcome? What might someone with less experience have decided? Why did you make that decision at this point? |
| Cues Used | What did you hear, see, smell, or feel that indicated this situation was challenging? What did you hear, see, smell, or feel that led you to make this decision or take that action? What would have looked, sounded, smelled, or felt different that would have led to a different action or decision? What did you hear, see, smell, or feel that told you that your action or decision was leading to a desired outcome? What did you hear, see, smell, or feel that someone with less expertise might have missed? |
| Rationale | Why did you take that action or make that decision? What was your rationale? What concepts or facts did you apply at this stage? What did you consider that someone with less experience might not have known or thought of? |
| Rules of Thumb | What general rules of thumb do you use in these situations? What general principles did you apply? What specific techniques made a difference to the outcomes? What would other experts like yourself consider when faced with this type of problem? In what ways is this situation similar to or different from others you have solved? |
| Monitoring | What were your initial goals? What did you look for after you took action X? Describe what you looked for that told you the situation was headed in the desired direction. What alternative actions did you consider? On what basis did you rule them out? What might have caused you to change your path of action? What did you notice as the situation played out that a more novice individual might have missed? |

INCORPORATING CRITICAL THINKING SKILLS INTO YOUR LESSONS

Now that you have elicited and identified some key critical thinking skills, how will you incorporate them into your design model? In Table 11.4 I summarize some suggestions and will illustrate with some examples to follow.

TABLE 11.4. How to Use Knowledge Types in Scenario-Based e-Learning

| Knowledge Type | Some Ways to Apply to Design Model |
| --- | --- |
| Actions Taken | Integrate into the task deliverable as options for actions to take or avoid during problem solving. Actions are the basis for response options (links, buttons, etc.) that learners select to research and resolve the problem. In addition, actions taken can be the basis for guidance (advisors, examples, etc.) as well as feedback. |
| Decisions Made | Integrate into the task deliverable to provide decision options to take or avoid. In branched-scenario designs, include expert decision options as well as potential suboptimal decisions that a more junior performer might make as alternatives. Provide scaffolding for decisions, such as worked examples or worksheets. |
| Cues Used | Incorporate important cues in the trigger event, in case data, and in intrinsic feedback to actions selected or decisions made. Cues can also be presented in guidance and feedback. If actions or decisions rely heavily on cue identification, incorporate realistic renderings of those cues into the interface. |
| Rationale | Make the reasoning behind actions and decisions explicit in worked examples of expert solutions, as well as in the feedback to actions taken or decisions made. Add response options in the interface that require learners to explicitly identify rationale behind decisions made or actions taken. |
| Rules of Thumb | Make the rules of thumb that support actions and decisions explicit in guidance, such as worked examples of expert solutions or virtual advisors. Design interactions that ask learners to identify rules of thumb as they progress through a scenario. Use instructional feedback to make rules of thumb explicit. |
| Monitoring | Identify junctures in the scenario when monitoring events occurred. Include explicit examples of expert monitoring questions and responses in worked examples. Incorporate monitoring reminders or choices in learner response options. Have virtual experts and advisors model monitoring events, and prompt monitoring during the reflection phases. |

Use of Actions Taken

The actions taken should reveal the workflow, that is, the basic paths experts take to progress through a scenario. You will use the workflow to specify response options aligned to the task-deliverable stage of your design plan, as well as in guidance resources, feedback, and reflection. For example, in a branched-scenario design such as the cat anesthetic lesson (Figure 1.5), learners select action steps. In a menu-driven interface, you may use the main workflow stages as menu tabs, as in the bank loan analysis scenario (Figure 5.1). Create not only correct response options but also the erroneous or less efficient actions that reflect misconceptions common to the target audience.

Use of Decisions Made

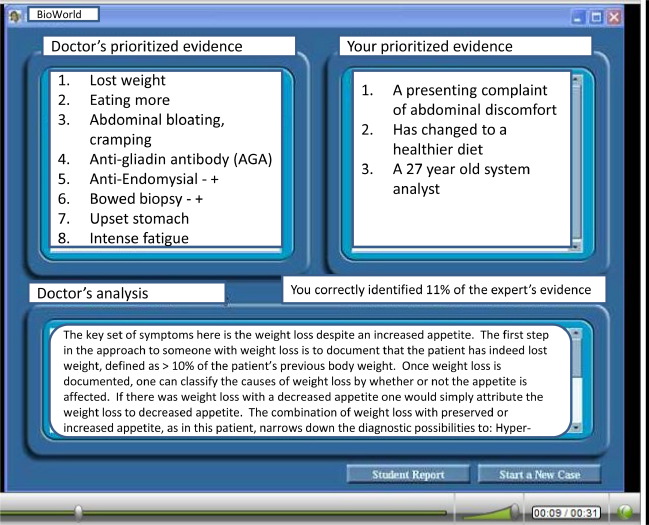

If task success relies heavily on the decisions behind actions, incorporate mechanisms for learners to make decisions explicit during problem solving as well as an emphasis on decisions in guidance resources, feedback, and reflection. For example, when selecting a given action in a branched scenario, the learner could be asked to also select a rationale or principle supporting the choice. As you can see in Figure 11.6, after prioritizing the evidence supporting a diagnosis, the learner can compare her priorities with those of an expert and type in lessons learned or additional questions.

FIGURE 11.6. Learners Compare Their Prioritized Evidence (Right) with Expert Priorities (Left).

With permission of Susanne Lajoie, McGill University.

Use of Cues Identified

Important cues can guide your trigger event, the responses to learner actions or decisions during the scenario, the scenario data, guidance, and intrinsic as well as instructional feedback. In other words, all of the major design elements may incorporate critical cues. In the anesthetics lesson (Figure 1.5), vital signs are critical cues and are summarized in the left-hand chart. In a dynamic simulation for treatment of blood loss in dogs (Figure 1.4), an EKG provides ongoing cues that fluctuate with treatment decisions. In Bioworld, the learner is required to select specific relevant patient signs and symptoms that are stored in the evidence table. In the automotive troubleshooting scenario, when the learner tries an incorrect action, the symptom persists, an example of cues used as intrinsic feedback (see Figure 3.3). Overall, cue recognition and interpretation are key distinguishing features of expert performance, and the integration of important cues throughout your scenario is a hallmark of effective scenario-based e-learning.

Also use the type and salience of cues to select your scenario display modes. If decisions rely heavily on interpretation of visual and auditory cues, realistic visuals may be essential to an authentic representation of the scenario.

Use of Rationale and Rules of Thumb

Rationale and rules of thumb may be some of the most important elements of your training. You will want to illustrate these in your guidance resources, including problem-solution demonstrations. You may also ask learners to make them explicit during problem solution. As the learner gathers data in Bioworld, she selects a hypothesis and prioritizes evidence to support her hypothesis, eventually comparing her priorities with those of an expert. In this way, not only the solution (the diagnosis) but also the rationale for the solution is made explicit. In the automotive troubleshooting lesson, the only test that can be selected initially is “verify the problem.” All other test options are inactive. This constraint is a form of guidance that ensures application of the rule of thumb that every failure should be verified as a first step.

Teaching Monitoring Skills in Your Scenario-Based e-Learning

Problems offer a great opportunity to illustrate and to ask learners to practice monitoring skills. As shown in Figure 7.4, during a demonstration, the learner hears the expert respond to the doctor, but also sees a thought bubble that illustrates a situation-monitoring statement. Adding thought bubbles to demonstrations is a powerful technique to make expert rationale and monitoring explicit. Periodically during problem resolution, learners can be asked to monitor their progress by responding to questions asking them to assess whether they are moving toward their goal and what other actions they might consider. During reflection, the learners can describe what they might have done differently.

GUIDELINES FOR SUCCESS

If you have decided to conduct some form of knowledge elicitation, here are a few caveats:

1. Take Care in Selecting Experts

Since the experts you are interviewing will provide the basis for knowledge and skills potentially disseminated to hundreds of staff, take care in selection of those experts. In some situations, I’ve had specific experts assigned to my project because their absence would not adversely affect productivity. A different individual would have provided better expertise, but he or she was already overcommitted.

Second, consider the level of expertise you need. If your course is for more junior staff, journeyman-level experts might articulate more appropriate scenarios or solutions than would those at the highest level of expertise.

Third, I always feel more comfortable when I can draw on several experts. A single expert may have some idiosyncratic approaches to resolving problems. Comparing the approaches of several experts to a similar scenario will help you define common knowledge elements shared by the community of practice. Also look for balance in your experts. In one project I conducted, my organizational liaison was an Army staffer. Even though the organization included civilians and four different military branches, 90 percent of my experts were Army. As I collected data, I felt certain that the data were not representative of the work of the broader community of practice. In retrospect, I should have requested a cross-functional committee to nominate individuals with expertise for given classes of problems.

2. Don’t Overextend

Any of the knowledge-elicitation methods I’ve mentioned will be resource-intensive. The time of experts, interviewers, transcribers, and analysts can add up quickly. Be realistic about what you can accomplish with your resources. Narrow your focus to problems or problem phases that rely heavily on tacit knowledge that cannot be readily identified in more direct ways.

3. Work in a Team

Not only do you need balance in expertise, but it is also helpful to have a diverse team conducting interviews and helping with the analysis. Your analysis team should include subject-matter experts who can ask probing technical questions and interpret responses.

WHAT DO YOU THINK? REVISITED

Now that we have reviewed knowledge-elicitation techniques, let’s revisit the questions. Keep in mind that your context may justify a different answer than mine.

I consider Option A to be false. In some situations you can identify much of the important content through traditional job analysis methods, including work observations and traditional interviews. Some scenario domains, such as tradeoffs, or situations that involve highly structured problems can often be built from documentation, interviews, and observations.

Option B is both true and false. In their research, Van Gog, Paas, van Merrienboer, and Witte (2005) obtained the best data from concurrent verbalization. However, they constrained the other methods, which might otherwise have yielded more complete data. In addition, as discussed in this chapter, many situations will not lend themselves to verbalization during problem solving.

I consider Option C false. Knowledge elicitation is resource-intensive and should be reserved for important tasks whose knowledge and skills cannot be validly identified in more efficient ways.

Option D is true. The ability to identify, act on, and interpret cues such as sounds, visuals, or patterns found in text documentation often distinguishes expert problem solving from problem solving by less experienced staff. Cues are often stored in memory as tacit knowledge, and experts may not readily articulate them in traditional interviews.

COMING NEXT

Throughout the first eleven chapters of this book, I have summarized the what, when, why, and how of scenario-based e-learning. However, even the best possible design will fall short in a poorly executed project. In the final chapter, I review some guidelines unique to scenario-based e-learning projects to help you sell, plan, develop, and implement your course.

ADDITIONAL RESOURCES

Crandall, B., Klein, G., & Hoffman, R.R. (2006). Working minds: A practitioner’s guide to cognitive task analysis. Cambridge, MA: MIT Press.

I find this book very practical, with real-world examples, and it’s one of my training Bibles.

Van Gog, T., Paas, F., van Merrienboer, J.J.G., & Witte, P. (2005). Uncovering the problem-solving process: Cued retrospective reporting versus concurrent and retrospective reporting. Journal of Experimental Psychology: Applied, 11(4), 237–244.

This is the technical research article that I summarized in this chapter. I think it’s a good example of the type of research that will be helpful to practitioners who want to take an evidence-based approach to cognitive task analysis.