CHAPTER 10

DOES SCENARIO-BASED e-LEARNING WORK?

Like most professional fields, workforce learning is no stranger to fads and fables. Take learning styles, for example. Millions of dollars have been spent on various learning style inventories, classes, books, and other resources. Yet there is no evidence for the validity of audio, visual, or kinesthetic learning styles (Clark, 2010). Before you make a significant investment in any new training method, product, or design, there are several questions worth asking. In this chapter I’ll summarize the evidence on several key questions regarding scenario-based e-learning.

DOES IT WORK?

The first question is: Does it work? This question can be parsed into several related questions. First, does it work better than a different method or approach? To answer this question, researchers randomly assign one group of students to a lesson version using scenario-based e-learning and a second group to an alternative lesson that teaches the same content with a different design, such as a traditional lecture or a directive approach. A second related question is: Does it yield unique outcomes not readily achieved by other approaches? For example, can scenario-based e-learning accelerate learning, or does it result in better transfer of learning than alternative designs do? Finally, we could ask: For what kinds of outcomes and for what kinds of learners is scenario-based e-learning most effective? For example, is it effective for learning procedures or for learning strategic skills? Is it most effective for novice learners or for learners with some background experience? To answer these questions, researchers may compare learning from scenario-based e-learning among learners of different backgrounds or assess learning with tests that measure different knowledge and skills.

IS IT EFFICIENT?

Even if an instructional method works, we must also consider the cost. Does scenario-based e-learning take more time and resources to design and develop than traditional multimedia training? More importantly, will it require a longer learning period? The biggest cost of training in commercial organizations is the time that staff invest in the learning environment. Because most research comparisons allocate the same amount of time to each experimental lesson version to maintain equivalence, we have less evidence about efficiency than about some of the other questions. However, one recent experiment measured both learning and learning efficiency from a game and from a slide presentation covering the same technical content. The game version resulted in equivalent or poorer learning than the slide version and took more than twice as long to complete (Adams, Mayer, MacNamara, Koenig, & Wainess, 2012). I’m hoping to see more of this type of data in the future.

DOES IT MOTIVATE?

If a given instructional method or program is equally effective for learning outcomes, but overall learners like it better, it might be a worthwhile investment. To answer questions about motivation, researchers ask learners to rate a scenario-based e-learning version and a traditional version of the same content and compare results. In addition, researchers can compare course enrollments and completion rates in instructional settings where learners have alternative courses to select and completion rates are tracked.

WHAT FEATURES MAKE A DIFFERENCE?

For an instructional approach with as many variations as scenario-based e-learning, we might ask which components enhance learning and which have no effect or could even be harmful. For example, is learning better when a scenario is presented with video compared to text? Are some elements of the design, such as guidance, feedback with reflection, or visuals, more important than others? To answer these questions, researchers design different versions of a scenario-based e-learning lesson and compare their effects. For example, an unguided version of a simulation might rely on the learner to discover the underlying principles, while an alternative version of the same simulation would provide structured assignments to guide its use. This approach to research is what Mayer calls “value added” (Mayer, 2011). In other words, what features of scenario-based learning designs add learning value and what features either have no effect or even hurt learning?

WHAT DO YOU THINK?

Put a check by the statements that you think are true:

I’ll review these questions and more as I summarize the research on the following pages. If you want to see the answers now, turn to the end of the chapter.

LIMITS OF RESEARCH

Practitioners need much more research evidence than is often available. Hence, we need realistic expectations regarding how much wisdom we can mine from research, especially research on newer instructional methods or technology. Two major limitations of the research on various forms of scenario-based e-learning are the relatively small number of experiments conducted to date and inconsistent terminology and implementation of scenario-based e-learning.

The Evolution from Single Experiments to Meta-Analysis

Evidence on any new technology or instructional strategy will lag behind its implementation by practitioners. Only after a new approach has been around for a while and has been salient enough to stimulate research might we see an accumulation of evidence. At first there will be a few reports of individual experiments. Eventually, after a significant number of studies accumulate, a synthesis called a meta-analysis is published. The conclusions of a meta-analysis give us more confidence than individual studies because they summarize the results from many experiments. A meta-analysis reports a combined effect size across the studies, which gives us some idea of the practical significance of the results. Effect sizes greater than .5 indicate sufficient practical potential to consider implementing a given method.
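To make “effect size” concrete, here is a minimal sketch of one common measure, Cohen’s d: the difference between two group means divided by their pooled standard deviation. This is an assumption for illustration; the chapter does not say which effect size measure the meta-analyses used. The sketch computes the effect size for a single comparison; a meta-analysis then combines many such values, typically giving larger studies more weight. The test scores and the helper name `has_practical_potential` are hypothetical.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Cohen's d: difference in group means divided by the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    # Pool the two sample variances, weighting each by its degrees of freedom (n - 1).
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


def has_practical_potential(d, threshold=0.5):
    """Hypothetical helper applying the rule of thumb that effect sizes above .5 merit consideration."""
    return d > threshold


# Hypothetical test scores for a guided lesson and a comparison lesson.
guided = [80, 85, 90]
comparison = [70, 75, 80]
d = cohens_d(guided, comparison)
print(d, has_practical_potential(d))
```

With these invented scores, the two groups differ by 10 points and each has a standard deviation of 5, so d is 2.0, a very large effect. Real instructional comparisons usually produce much smaller values.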

By coding different features in different studies, the meta-analysis can also suggest which learning domains or audiences benefit most or, alternatively, which techniques used in an instructional implementation might be more effective. In this chapter I summarize results of a meta-analysis that synthesized results from more than five hundred comparisons of learning from discovery lessons with either guided discovery or traditional lecture-based versions of the same content.

What’s in a Name?

Instructional practitioners and researchers are challenged to define what is meant by a specific instructional method or design. It’s common to label quite different implementations of a given method with the same name. Likewise, we often see similar programs given different names. In professional practice as well as in the research literature, we see a range of terms for various guided discovery approaches, including problem-based learning, scenario-based learning, whole-task learning, project-based learning, case-based learning, immersive learning, inquiry learning, and so on.

It follows, then, that a given approach such as problem-based learning (PBL) may show conflicting results among different research reports because it is implemented differently in various settings. Hung (2011) has summarized a number of ways that the reality of PBL in practice often differs from the ideal definition and vision of what PBL should be. For example, PBL implementations may actually consist of traditional lecture-based instruction supplemented with problems. Alternatively, PBL instructors may play quite different roles during the group sessions. Some facilitators may impose more structure and guidance regarding content, whereas others may primarily model problem-solving processes. In short, the label of PBL may in fact represent quite different instructional approaches. The result is research that compares apples with oranges and, not surprisingly, inconsistent outcomes and conclusions. In a review of problem-based learning, Albanese (2010) concludes: “Research on the effectiveness of PBL has been somewhat disappointing to those who expected PBL to be a radical improvement in medical education. Several reviews of PBL over the past twenty years have not shown the gains in performance that many had hoped for” (p. 42).

In spite of these limits, research on guided-discovery environments offers the following lessons, which I review in this chapter:

DISCOVERY LEARNING DOES NOT WORK

Perhaps one of the most important lessons from years of instructional research is that pure discovery learning is not effective. In a review of discovery learning experiments conducted over the past forty years, Mayer (2004) concludes that “Guidance, structure, and focused goals should not be ignored. This is the consistent and clear lesson of decade after decade of research on the effects of discovery methods” (p. 17). Mayer’s conclusion is empirically supported by a recent meta-analysis that compared discovery approaches with other forms of instruction (Alfieri, Brooks, Aldrich, & Tenenbaum, 2011). The research team synthesized 580 research comparisons of discovery learning to other forms of instruction, including direct instruction and guided-discovery approaches. Discovery learning was the loser, with more guided forms of instruction showing a positive effect size of .67.

Lessons that included guidance in the form of worked examples and feedback showed the greatest advantage over discovery learning. A critical feature of any successful scenario-based e-learning will be sufficient structure and guidance to minimize what I call the “flounder factor.” Instructional psychologists use the term “scaffolding” to refer to the structure and guidance that distinguish discovery learning from guided discovery. In Chapter 6 I described nine common strategies you can adapt to build structure and guidance into your scenario-based e-learning lessons.

GUIDED DISCOVERY CAN HAVE LEARNING ADVANTAGES OVER “TRADITIONAL” INSTRUCTION

In part 2 of the meta-analysis summarized in the previous section, the research team evaluated 360 research studies that compared guided discovery with other forms of instruction, including direct teaching methods. Overall, the guided-discovery methods came out ahead, with an average effect size of .35, which is positive but not large (Alfieri, Brooks, Aldrich, & Tenenbaum, 2011).

The Handbook of Research on Learning and Instruction (Mayer & Alexander, 2011) includes several chapters that summarize research on various forms of guided discovery compared to alternative teaching methods. For example, de Jong (2011) reviews results from instruction based on computer simulations. He summarizes the advantages of simulations as (1) opportunities to learn and practice in situations that cannot readily or safely be learned on the job and (2) the opportunity to work with multiple representations such as graphs, tables, and animations, which stimulate deeper understanding. He concludes that “large-scale evaluations of carefully designed simulation-based learning environments show advantages of simulations over traditional forms of expository learning and over laboratory classes” (p. 458). One main theme of his chapter is that “Guided simulations lead to better performance than open simulations” (p. 451).

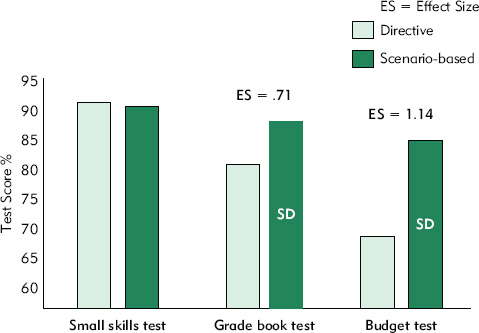

Learning Excel in Scenario-Based Versus Directive Lessons

One of the defining features of scenario-based e-learning is learning in the context of solving problems or completing tasks. Lim, Reiser, and Olina (2009) compared two sixty-minute classroom lessons that taught student teachers how to prepare a grade book using Microsoft Excel. One version used a traditional step-by-step directive approach in which twenty-two basic skills such as entering data, merging cells, and inserting a chart were demonstrated. At the end of the second session of the directive version, learners practiced completing a grade book.

In contrast, in the scenario-based version, learners completed two grade books during Session 1 and two during Session 2. In these classes, the instructor demonstrated how to create the grade book and students then created the same grade book just demonstrated. Next, students created a second similar grade book with a different set of data. The two grade books in Session 1 were simple and those completed in Session 2 were more complex. In summary, the directive class taught a number of small skills and concluded with one grade book practice, while the scenario-based version taught all of the skills in the context of setting up four grade books. All lessons were conducted in an instructor-led environment.

At the end of the two classes, all learners completed three tests. One test required learners to perform sixteen separate small skills such as entering data, merging cells, etc. The second task-based test asked learners to prepare a grade book using different data than that used in class. A third test measured transfer by providing a set of data and asking students to use Excel to prepare a budget. None of the lessons had demonstrated or practiced preparing a budget, so this third test measured the learners’ ability to adapt what they had learned to a different task. In other words, this test measured ability to transfer skills to a different context. Take a look at the test results in Figure 10.1. Which of the following interpretations do you think best fits the data (select all that apply):

FIGURE 10.1. Learning from Directive Versus Scenario-Based Versions of an Excel Class.

Based on data from Lim, Reiser, and Olina (2009).

Scenario-based training was better for:

As you can see, there were no major learning differences on the small-skills test. The scenario group did better on the grade-book test, but because they had four opportunities to practice grade books compared to one opportunity in the directive group, this is not surprising. Perhaps the budget transfer test offers the most interesting results. Here we see that the scenario-based group was much better able to transfer what they had learned to a new task. The correct answers to the question above are Options B and C. A take-away from this research is that transfer of learning may be enhanced when learners practice new skills in several real-world contexts rather than as separate smaller component skills.

Similar results are reported by Merrill (2012), who compared learning from a multimedia scenario-based version of Excel training based on five progressively more complex spreadsheet tasks with an off-the-shelf multimedia version that used a topic-based approach. The test consisted of three spreadsheet problems not used during the training. The scenario-based group scored an average of 89 percent, while the topic-centered group scored 68 percent. The time to complete the test averaged twenty-nine minutes for the scenario-based group and forty-nine minutes for the topic-centered group.

We need more research comparisons of scenario-based with directive lesson designs in different problem domains and with different learners to make more precise recommendations regarding when and for whom scenario-based e-learning is the more effective approach.

LEARNER SCAFFOLDING IS ESSENTIAL FOR SUCCESS

As I mentioned, one of the critical success factors for guided-discovery learning, including scenario-based e-learning, is guidance and structure. In Chapters 6 and 7, I outlined a number of techniques you can adapt to your projects. Scaffolding is an active area of research, so we don’t yet have sufficient studies to make comprehensive recommendations. However, in the following paragraphs I summarize a few recent research studies that compared learning from two or more versions of guided discovery that incorporated different types of structure and support.

Should Domain Information Come Before or After Problem Solving?

Whether relevant domain information in the form of explanations or examples should be provided before or after problem-solving attempts is a current debate among researchers. I’ll review evidence on both sides of the question, ending with some guidelines that reflect both perspectives.

Assign Problems First—Then Provide Explanations

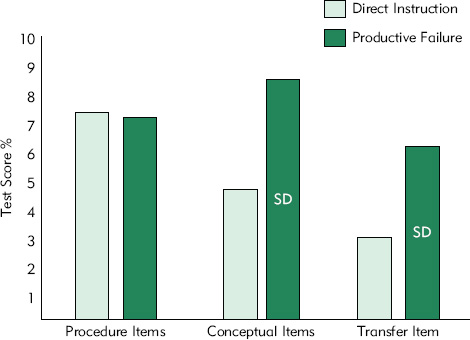

If the instructional goal is to learn to solve problems, is it better to start by telling and showing a solution procedure (direct instruction) or by letting learners try to solve problems even if they don’t derive a correct solution? Kapur (2012) compared the benefits of direct instruction followed by problem-solving practice versus problem-solving sessions followed by direct instruction. He refers to sequencing problem solving first, followed by directive instruction, as productive failure.

He taught the concept of and procedure for calculating standard deviation in one of two ways. The productive failure group worked in teams of three to solve a problem related to standard deviation, such as how to identify the most consistent soccer player among three candidates based on the number of goals each scored over a twenty-year period. No instructional help was provided during the problem-solving sessions. After the problem-solving sessions, the instructor compared and contrasted the student solutions and then demonstrated the formula for standard deviation and assigned additional practice problems.

In contrast, the direct instruction group received a traditional lesson, including an explanation of the formula to calculate standard deviation, some sample demonstration problems, and practice problems. All students then took a test that included questions to assess (1) application of the formula to a set of numbers (procedural items), (2) conceptual understanding questions, and (3) transfer problems requiring development of a formula to calculate a normalized score (not taught in class). In Figure 10.2 you can compare the test scores between the two groups for the three types of items.

FIGURE 10.2. Learning from Direct Instruction First Versus Productive Failure First.

Based on data from Kapur (2012).

Which of the following interpretations do you think best fits the data? (Select all that apply.)

Compared to direct instruction, a design that starts with unguided problem solving (productive failure) leads to better:

Kapur (2012) concludes that “Performance failure students significantly outperformed their direct instruction counterparts on conceptual understanding and transfer without compromising procedural fluency” (p. 663). Based on his data, options B and C are correct. This research suggests that designing problem activities—even activities that learners can’t resolve—in the initial learning phase will benefit longer-term learning, as long as learners are provided with appropriate knowledge and skills afterward. The argument is not whether unguided solution activity is better or worse than direct instruction, but rather how they can complement one another.

This research focused on mathematical problems with a defined solution. We will need more evidence to see how productive failure may or may not apply to more ill-defined problems as well as the conditions under which it is best used. Applied to scenario-based learning, the results suggest the benefits of allowing learners to tackle a relevant problem (even if they don’t fully or correctly resolve it), followed by direct instruction in the form of examples, explanations, and guided practice.
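The soccer problem that the productive failure teams tackled can be made concrete with a short sketch. The goal counts below are invented for illustration, and the sketch uses the population standard deviation (`statistics.pstdev`); the source does not say which form of the formula Kapur’s lesson taught, though with equal numbers of seasons either form ranks the players the same way. All three hypothetical players average 11 goals, which shows why the mean alone cannot identify the most consistent player.

```python
from statistics import mean, pstdev

# Hypothetical goals per season for three players (invented for illustration).
# All three average 11 goals, so the mean alone cannot pick the most consistent player.
goals = {
    "Player A": [10, 12, 11, 9, 13],
    "Player B": [5, 20, 8, 14, 8],
    "Player C": [11, 11, 12, 10, 11],
}


def most_consistent(records):
    """Return the player whose scores spread least around their mean (lowest standard deviation)."""
    return min(records, key=lambda name: pstdev(records[name]))


for name, scores in goals.items():
    print(f"{name}: mean={mean(scores)}, sd={pstdev(scores):.2f}")
print("Most consistent:", most_consistent(goals))
```

Learners who lack the formula might instead compare ranges or count how often each player scored near the average. Those are the kinds of partial, prior-knowledge strategies that productive failure invites before the formula is taught.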

Provide Explanations Before and During Learning

In contrast to the experiment described in the preceding paragraphs, two recent studies revealed better learning when domain information was presented prior to and/or during learning. Lazonder, Hagemans, and de Jong (2010) measured learning from three versions of a business simulation in which learners had to maximize sales in a shoe store by selecting the best combination of five variables, such as type of background music, location of the stockroom, and so forth. The simulation allowed learners to manipulate the value of each variable and then run an experiment to see the effect on sales. A guide included the key information about the variables and was available (1) only before working with the simulation, (2) before and during the simulation, or (3) not at all. Better learning occurred when domain information was available, with best results from learners having access both before and during the simulation.

Similar results were reported in a comparison of learning from a game with or without a written summary of the principles to be applied during the game (Fiorella & Mayer, 2012). Learners having access to a written summary of game principles before and during game play learned more and reported less mental effort. From these experiments, we learn that providing key information before and during a simulation or game leads to better learning.

Perhaps the type of instructional environment and the background knowledge of the learners determine the optimal sequence of problem solving and direct instruction. Both studies that showed benefits of receiving information first involved a simulation or game: relatively unstructured environments that required learners to try different actions and draw conclusions from the responses. In contrast, the research showing better conceptual and transfer learning from unguided problem solving followed by direct instruction involved a more structured topic (deriving and applying the formula for standard deviation). In addition, solving problems first requires learners to have a sufficient knowledge base to draw upon as they attempt new problems. Solution attempts among the productive failure groups, although not successful, did reflect an attempt to apply prior mathematical knowledge, such as mean, median, graphic methods, and frequency-counting methods.

Until we have more data, unguided problem solving as an initial activity is perhaps best for learners with background knowledge relevant to the problem domain and for highly structured problems that have defined solutions. Alternatively, for more novice learners working in a more open instructional interface such as a simulation, a directive initial approach might be best. We need more research on the sequencing of problem solving and direct instruction.

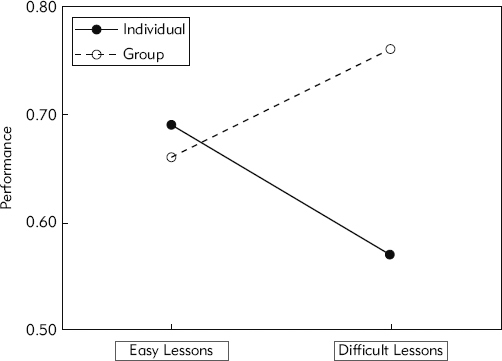

Use Collaboration for More Challenging Scenarios

One potential source of support during problem solving is some form of collaboration, either face-to-face in a classroom environment or online via social media. Do we have evidence that learning from scenario-based e-learning is better when learners work in teams? Kirschner, Paas, Kirschner, and Janssen (2011) created four lesson versions teaching how to solve genetics inheritance problems. Easier lesson versions demonstrated how to solve problems with worked examples, while harder versions assigned problems instead. A large body of research has shown the learning benefits of providing worked-out examples in place of some problem solving (Clark & Mayer, 2011). Each of the two versions (easy and hard) was taken either by individual learners or by teams of three. You can see the results in Figure 10.3.

FIGURE 10.3. Collaboration Improves Learning from More Difficult Problems.

Based on data from Kirschner, Paas, Kirschner, and Janssen (2011).

The authors suggest that collaborative learning can help manage mental load because the workload is distributed across brains. At the same time, collaborative learning requires a mental investment in communicating and coordinating with others in the team. The experiment showed that collaborative work led to better learning, but only in the more demanding lessons. When assignments are more challenging, the benefits of teamwork offset the mental costs of coordination among team members. However, for easier lessons, individual problem solving was better. The authors suggest that: “It is better for practitioners NOT to make an exclusive choice for individual or collaborative learning, but rather vary their approach depending on the complexity of the learning tasks to be carried out” (p. 597).

A second experiment on productive failure, which, like the Kapur (2012) study summarized earlier in this chapter, focused on standard deviation, found that learning in productive failure designs was best in collaborative groups of three that mixed higher- and lower-ability students, rather than in groups of all high-ability or all low-ability students (Wiedmann, Leach, Rummel, & Wiley, 2012).

Taken together, the studies to date indicate that collaboration is beneficial for more challenging learning goals such as problem solving prior to instruction—especially when the teams include a mix of background experience among the learners.

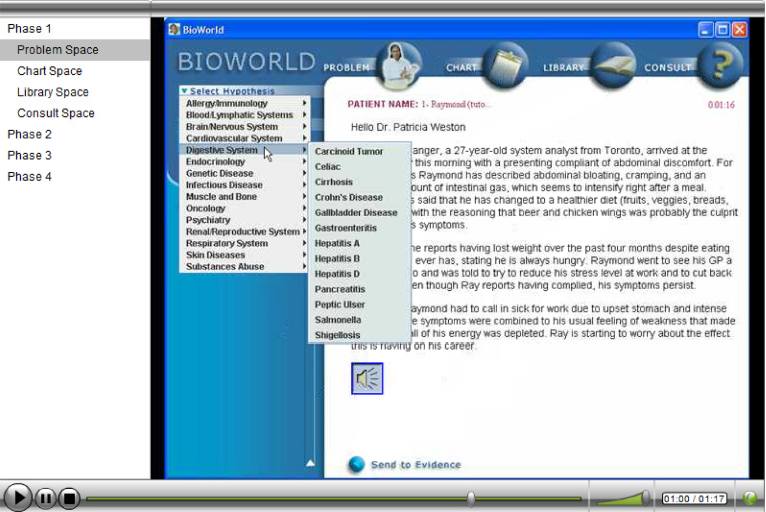

Make the Instructional Interface Easy to Use

In some scenario-based e-learning lessons, learners are required to type in responses such as hypotheses or explanations of examples. In other versions, the interface offers a pull-down menu from which learners select specific hypotheses. Selecting preformatted responses imposes less mental load than constructing a response from scratch and has been found to lead to better learning. For example, Mayer and Johnson (2010) found that a game designed to teach how circuits work benefitted from self-explanation questions when the learner could select a self-explanation from a menu rather than construct one. In Bioworld, shown in Figure 10.4, the learner can select a hypothesis from a pull-down menu rather than type one in.

FIGURE 10.4. The Bioworld Interface Uses a Pull-Down Menu to Offer Hypotheses Options.

With permission of Susanne Lajoie, McGill University.

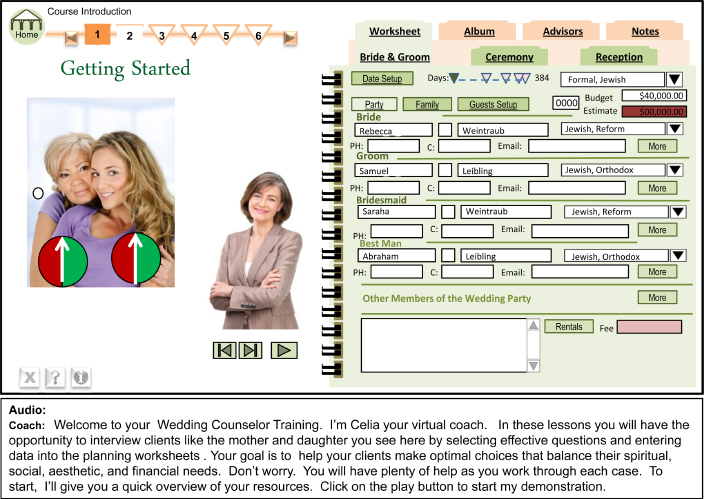

In addition to developing an easy-to-use interface, also consider providing instruction on how to use the interface. Holzinger, Kickmeier-Rust, Wassertheurer, and Hessinger (2009) compared learning of arterial blood flow principles from a simulation with learning from a traditional text description of the concepts. Group 1 studied the text description. Group 2 used the simulation unaided, while Group 3 used the simulation preceded by a thirty-second video explaining its use and the main parameters involved. Learning was much better from the simulation, but only when it was preceded by the explanatory video. The research team concluded that “It is essential to provide additional help and guidance on the proper use of a simulation before beginning to learn with the simulation” (p. 300). A colleague reported that orientation to a scenario-based e-lesson is critical to its success. For example, Figure 10.5 shows a screen taken from the orientation for the Bridezilla lesson. Here the coach introduces herself, summarizes the goal of the lesson, and leads into a demonstration of support resources accessible in the interface.

FIGURE 10.5. The Coach Orients the Learner to the Bridezilla Scenario.

GUIDED DISCOVERY CAN BE MORE MOTIVATING THAN “TRADITIONAL” INSTRUCTION

With only a few exceptions, evaluation reviews of problem-based learning (PBL) have found that medical students in the PBL curriculum are more satisfied with their learning experience compared to students in a traditional science-based program. Loyens and Rikers (2011) reported higher graduation and retention rates among students studying in a PBL program than in a traditional curriculum. My guess is that most medical students find learning in the context of a patient case more relevant than learning science knowledge such as anatomy and physiology out of context. Because of relevance and multiple opportunities for overt engagement, it is possible that scenario-based e-learning will prove more motivational to your workforce learners as well. If your scenario-based lessons result in higher student ratings and greater course completions, the investment might pay off. We need more research comparing not only learning but completions and satisfaction ratings from scenario-based e-learning environments.

FEEDBACK AND REFLECTION PROMOTE LEARNING

For any kind of instruction, feedback has been shown to be one of the most powerful instructional methods you can use to improve learning (Hattie, 2009; Hattie & Gan, 2011). In Chapter 8 I discussed some alternatives to consider in the design of feedback and reflection for scenario-based e-learning. Here I review a couple of guidelines based on recent evidence on these important methods.

Provide Detailed Instructional Feedback

In some online learning environments, after making a response the learner is simply told whether it is right or wrong. This approach is called knowledge of results or corrective feedback. Alternatively, in addition to being told right or wrong, a more detailed explanation could be given. Writing detailed feedback takes more time, so is it worth the investment? Moreno and Mayer (2005) found that detailed feedback—what they called explanatory feedback—in a botany game resulted in better learning than knowledge of results alone.

Provide Opportunities to Compare Answers with Expert Solutions

Gadgil, Nokes-Malach, and Chi (2012) identified subjects who held a flawed mental model of how blood circulates through the heart. Two different approaches were used to help them gain an accurate understanding of circulation. One group was shown a diagram of the correct blood flow and asked to explain the diagram. A second group was shown an incorrect diagram that reflected the student’s flawed mental model along with the correct diagram. Students in this group were asked to contrast and compare the two diagrams and in so doing explicitly confront their misconceptions. Both groups then studied a text explanation of correct blood flow and were tested. One of the test items asked learners to sketch the correct flow of blood through the heart. Ninety percent of those asked to compare the incorrect and correct diagrams drew a correct model, compared with 64 percent of those who only explained the correct diagram. The research team concluded that instruction that included compare and contrast of an incorrect and correct model was more effective than an approach that asked learners to explain correct information. Asking learners to compare their own misconceptions with a correct representation proved to be a powerful technique.

From this study, we can infer that a useful feedback technique is to require learners to compare and contrast their solutions, especially solutions that contain misconceptions, with an expert solution. Therefore, include two elements in your design. First, save the learner’s solution and present it alongside an expert solution on the same screen to allow easy comparison. Second, to encourage active comparison, add reflection questions that require the learner to carefully compare the solutions and draw conclusions about the differences. In Figure 8.2 you can review how I adapted this technique to the Bridezilla lesson.
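For developers building such a feedback screen, the two design elements above (a saved learner solution displayed beside an expert solution, followed by reflection questions) can be sketched as a small data structure. This is a minimal, hypothetical sketch: the class name `ComparisonFeedback`, the decision-point names, and the answers are invented for illustration, and the plain-text `render` method merely stands in for whatever layout your authoring tool provides.

```python
from dataclasses import dataclass, field

NO_ANSWER = "(no answer)"


@dataclass
class ComparisonFeedback:
    """Hypothetical data behind a feedback screen: the learner's saved solution,
    an expert solution, and reflection questions shown after the comparison."""
    learner_solution: dict
    expert_solution: dict
    reflection_questions: list = field(default_factory=list)

    def _steps(self):
        # Every decision point that appears in either solution, in a stable order.
        return sorted(set(self.learner_solution) | set(self.expert_solution))

    def differences(self):
        """List (step, learner choice, expert choice) wherever the two disagree."""
        result = []
        for step in self._steps():
            mine = self.learner_solution.get(step, NO_ANSWER)
            expert = self.expert_solution.get(step, NO_ANSWER)
            if mine != expert:
                result.append((step, mine, expert))
        return result

    def render(self):
        """Plain-text stand-in for a screen showing both solutions side by side."""
        rows = [f"{'Decision':<12}{'Your choice':<14}{'Expert choice':<14}"]
        for step in self._steps():
            mine = self.learner_solution.get(step, NO_ANSWER)
            expert = self.expert_solution.get(step, NO_ANSWER)
            flag = "" if mine == expert else "  <- differs"
            rows.append(f"{step:<12}{mine:<14}{expert:<14}{flag}")
        rows.append("")
        # Reflection prompts push the learner to explain the differences, not just see them.
        rows.extend(f"Reflect: {q}" for q in self.reflection_questions)
        return "\n".join(rows)


feedback = ComparisonFeedback(
    learner_solution={"step_1": "B", "step_2": "C"},
    expert_solution={"step_1": "B", "step_2": "A"},
    reflection_questions=["Why might the expert have chosen A at step_2?"],
)
print(feedback.render())
```

Flagging the differing rows directs attention to where the learner’s reasoning diverged. The reflection question then asks the learner to explain that divergence rather than simply notice it.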

VISUAL REPRESENTATIONS SHOULD BE CONGRUENT WITH YOUR LEARNING GOALS

A wealth of research shows the benefits of adding relevant visuals to instructional text (Clark & Lyons, 2011). How can we best leverage visuals in scenario-based e-learning? Does the type of visual, for example, animation or still graphics, make a difference? We are just starting to accumulate evidence on how best to use different types of visuals for different learning goals. Here I will summarize research that can be applied to the visual design of scenario-based e-learning.

Use Visual Representations Rather Than Text Alone When Visual Discrimination Is Important

In a comparison of learning from examples of classroom teaching techniques presented in text narrative descriptions, in video, and in computer animation, both visual versions (video and animations) were more effective than text (Moreno & Ortegano-Layne, 2008). The research team suggested that computer animations might be more effective than video because extraneous visual information can be “grayed-out” as a cueing technique that helps the learner focus on the important visual elements. From a practical perspective, it is generally faster to update an animated version than to update a video. In this research, the goal was to teach student teachers classroom management—a skill set that relies on complex visual discrimination and implementation of verbal and physical actions. Since the visual representations offered more relevant detail related to the skill, it is not surprising that learning from visual examples was better than learning from a text narrative example.

We need much more evidence on the optimal mode (text, video, animation, or still images) for representing scenario-based e-learning cases. For skills that depend on discriminating auditory and visual cues, such as teaching or medical diagnosis, visual representations via computer animation or video have proven more effective than text. In some situations, animations may be easier to update than video and better suited to drawing attention to the most relevant features of the visual display. In other cases, the greater emotional impact of video, as seen, for example, in Gator 6 and Beyond the Front, may make it more engaging to learners.

CAN SCENARIO-BASED e-LEARNING ACCELERATE EXPERTISE?

It seems logical that opportunities to practice tasks, especially tasks that would not be safe or convenient to practice on the job, would accelerate expertise. After all, expertise grows from experience. From chess to typing to musical and athletic performance, research on top performers finds that they have invested many hours, and often years, in focused practice (Ericsson, 2006, 2009). Chess masters, for example, have typically devoted more than ten years to sustained practice, resulting in over 50,000 chess play patterns stored in their memories. Many businesses, however, don’t have ten years to build expertise. Perhaps scenario-based e-learning can provide at least a partial substitute for the years of experience that serve as the bedrock of accomplished performance.

Accelerating Orthopedic Expertise

Kumta, Tsang, Hung, and Cheng (2003) evaluated the benefits of scenario-based Internet tutorials for senior-year medical students completing a three-week rotation in orthopedics. One hundred and sixty-three medical students were randomly assigned either to the traditional three-week program, consisting of formal lectures, bedside tutorials, and outpatient clinics, or to a test program that included the traditional program plus eight computer-based clinical case simulations. The scenarios required learners to comment on radiographs, interpret clinical and diagnostic test results, and provide logical reasoning to justify their clinical decisions. The test group met with facilitators three times a week to discuss their responses to the simulations.

At the end of the three weeks, students were assessed with a multiple-choice computer-based test, a structured clinical examination test, and a clinical examination with ward patients. The students in the test group scored significantly higher than those in the control group. The research team concluded that well-designed web-based tutorials may foster better clinical and critical thinking skills in medical students. “Such multimedia-rich pedagogically sound environments may allow physicians to engage in complex problem solving tasks without endangering patient safety” (p. 273).

Note that, in this research, the test group received the same lectures, bedside tutorials, and outpatient clinics as the control group. In addition, they received eight online cases to analyze, coupled with faculty debriefs. It is therefore not surprising that these additional, carefully selected learning opportunities led to more learning.

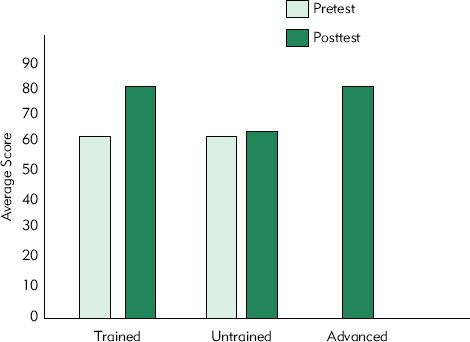

Accelerating Electronic Troubleshooting Expertise

Lesgold and associates (1992, 1993) tested a scenario-based troubleshooting simulation called Sherlock, designed to build expertise in electronic troubleshooting of the F-15 test station. Sherlock presents learners with simulated test station failures to resolve, along with tutorial help. Thirty-two airmen with some background in electronics were divided into two groups of sixteen. One group completed twenty-five hours of training in the Sherlock simulated environment, while the other served as a comparison group. All participants were evaluated through pre- and post-tests that required them to diagnose simulated test station problems that differed from those included in the training. In addition to the thirty-two apprentice airmen, technicians with more than ten years of experience were tested as a second comparison group. As you can see in Figure 10.6, the average skill level of the trained group was equivalent to that of the experienced technicians. The 1993 report concluded that “the bottom line is that twenty to twenty-five hours of Sherlock practice time produced average improvements that were, in many respects, equivalent to the effects of ten years on the job” (p. 54).

FIGURE 10.6. Acceleration of Expertise Using Sherlock Troubleshooting Simulation Trainer.

Based on data from Lesgold, Eggan, Katz, and Rao (1993).

In this experiment, expertise was accelerated by a relatively short period of practice in a troubleshooting simulation coupled with online coaching. Note, however, that the learners already had some background experience. Also note that the Sherlock-trained group was compared with a group that received no special training. It would be interesting to compare a Sherlock-trained group with an equivalent group that received twenty-five hours of traditional tutorial training.

The research I have reviewed here offers promise that scenario-based learning can accelerate expertise. However, we need more studies that include comparison groups receiving the same number of hours of traditional training before we can conclude that scenario-based designs accelerate expertise more effectively than traditional designs do.

RESEARCH ON SCENARIO-BASED e-LEARNING—THE BOTTOM LINE

In summary, I make the following generalizations based on evidence to date on scenario-based e-learning and related guided discovery learning environments:

WHAT DO YOU THINK? REVISITED

Previously in the chapter you evaluated several statements about scenario-based e-learning. Here are my thoughts.

Based on my review of the evidence accumulated to date, I would vote for Option C as having the most research support. A meta-analysis of more than five hundred studies showed that learning was better from either traditional directive lessons or guided-discovery lessons than from unguided discovery versions.

Regarding Option A, there is limited evidence, mostly from studies of learning Excel, that a scenario-based approach can enable learners to apply Excel to problems different from those presented during training.

Option B may be true when (1) visual cues are important to analyzing and solving the case or (2) the emotional qualities of a medium such as video prompt learner engagement. We need more evidence on how best to represent scenarios.

Regarding Option D, I don’t see sufficient evidence to show that scenario-based e-learning can accelerate expertise more effectively than traditional training, although it makes sense that for tasks that cannot be practiced in a real-world environment, a form of guided discovery, whether an online simulation or a scenario-based lesson, would offer unique opportunities to compress expertise.

As to motivation (Option E), medical students do rate problem-based learning as more motivating. However, medical students are a unique population, and you will need to evaluate how your own learners might respond.

COMING NEXT

As you have seen, the design and development of guided-discovery learning environments can be resource-intensive. When your focus is on thinking skills, it is critical that you define expert heuristics in specific and behavioral terms during your job analysis. In some situations knowledge and skills are tacit and thus cannot be readily articulated by your subject-matter experts. In the next chapter I review cognitive task analysis techniques you can use to surface and validate the knowledge and skills that underpin expertise.

ADDITIONAL RESOURCES

Alfieri, L., Brooks, P.J., Aldrich, N.J., & Tenenbaum, H.R. (2011). Does discovery-based instruction enhance learning? Journal of Educational Psychology, 103, 1–18.

A meta-analysis with a great deal of data. Worth reading on an important issue if you can invest the time.

Clark, R.C., & Lyons, C. (2011). Graphics for learning. San Francisco: Pfeiffer.

Our book that summarizes evidence and best design practices for effective visuals in various media.

Kapur, M., & Rummel, N. (2012). Productive failure in learning from generation and invention activities. Instructional Science, 40, 645–650.

This special issue of Instructional Science includes several papers reporting research on problem solving first, followed by directive instruction. This article provides an overview of the issue.

Loyens, S.M.M., & Rikers, R.M.J.P. (2011). Instruction based on inquiry. In R.E. Mayer & P.A. Alexander (Eds.), Handbook of research on learning and instruction. New York: Routledge.

A useful review of research that focuses on different types of inquiry learning.

Mayer, R.E. (2004). Should there be a three-strikes rule against pure discovery learning? The case for guided methods of instruction. American Psychologist, 59, 14–19.

A classic article that should be in every advanced practitioner’s file.