APPENDIX C

RELIABILITY AND VALIDITY FOR LEARNING MEASURES FOR SCENARIO-BASED e-LEARNING

In Chapter 9 I review different paths to evaluate the outcomes of your scenario-based e-learning project. If you plan to evaluate learning outcomes, you will need to construct tests that are reliable and valid. This appendix reviews the basics of reliability and validity. Read it if you plan to measure learning outcomes and if you need a refresher. For detailed information, I recommend Criterion-Referenced Test Development by Shrock and Coscarelli (2007).

ENSURE TEST RELIABILITY

A test will never be valid unless it’s reliable. One of your first tasks is to prepare enough test items to support reliability. Basically a reliable test is one that gives consistent results. There are three forms of reliability, any of which you might need to evaluate learning from scenario-based e-learning. These are equivalence, test-retest, and inter-rater reliability.

Equivalence Reliability

Equivalence among different forms of a test means that test Version A will give more or less the same results as test Version B. Equivalence would be important if you plan a pretest-posttest comparison of learning. It would also be important if learners must reach a set criterion on the test to demonstrate competency. For those who fail the test on their first attempt, after some remediation, they will take a second test, which must be equivalent to the first but should not be identical to the first. As an example of reliability, Test A with twenty items and Test B with twenty different items would yield similar scores when taken by the same individuals (assuming no changes in those individuals). A person who scores 60 percent on Version A, will score close to 60 percent on Version B of an equivalent test.

Test-Retest Reliability

A second form of reliability is called test-retest reliability. Test-retest reliability means that the same person who took the test today and the same test tomorrow, without any additional learning, would score more or less the same both times. Test-retest reliability is a measure of the consistency of any given test over time.

Inter-Rater Reliability

Inter-rater reliability is the third type to consider. You may need to include open-ended test questions such as respond in a role play or draw a circuit to evaluate some of the strategic or critical thinking outcomes of scenario-based e-learning. Open-ended tests such as a role play will typically have multiple correct solutions. Therefore, you will need raters to evaluate responses, and it will be important that the raters be consistent in their scoring. If you use a single rater, it will be important for that individual to score each product in a consistent manner. If you use multiple raters, it will be additionally important that the group of raters be consistent in how they assess the same product.

For example, if a test involves a role play or requires the learner to create a product such as a drawing to illustrate a deeper understanding of principles, a human must review and “score” the product or performance. Consistency among your raters is called inter-rater reliability. As an aside, using raters on a large scale to evaluate outcomes is a labor-intensive activity. Therefore, you may want to use raters during pilot stages of scenario-based e-learning evaluation or in high-stakes situations in which individual performance is critical enough to warrant the investment and when other more efficient testing methods such as multiple-choice questions would lack sufficient sensitivity or validity to assess knowledge and skill competency.

Build Reliable Tests

How do you ensure your test is reliable? In general, longer tests are more reliable, although the exact length will depend on several factors, including knowledge and skill scope and task criticality. How broad is the span of knowledge and skills that you are measuring? A reliable test for an entire course or unit must include more items than a reliable test for a single lesson. How critical are the skills being assessed? More critical skills warrant longer tests and higher reliability correlations. There are no absolute answers but, in general, your test should include from five to twenty-five questions, depending on span and criticality.

There are statistical equations to calculate the reliability of your test. A perfect correlation between the scores of two forms of a test or two raters, for example, would be 1.00. Two tests with items that are completely unrelated would have a correlation of 0. You should obtain professional guidance on an acceptable correlation, but as a general figure it should be above +.85 for critical competencies and above +.70 for important competencies (Shrock & Coscarelli, 2007).

ENSURE TEST VALIDITY

A valid test is one that measures what it is supposed to measure. Remember your driving test? Most likely you completed a written test that evaluated your knowledge of the facts and concepts associated with driving. A high score on a written test, however, would not be a valid indicator of a competent driver. For that, you needed to take the dreaded behind-the-wheel examination—a performance test in which the examiner (hopefully) used a rating scale to assess driving skill and asked you to perform a reasonable sampling of common driving maneuvers.

Items to Measure Knowledge Topics

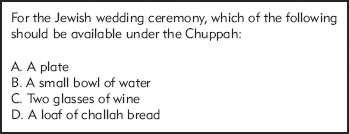

As illustrated in Figure C.1, test validity rests on a solid link from the job analysis to the learning objectives and, finally, to the test items. That’s why learning objectives are as important in scenario-based e-learning as in any form of training. Many scenario-based e-learning lessons may include learning objectives that focus on knowledge. For example, the Bridezilla course may include an objective requiring learners to identify key terms and practices of different religious or ethnic marriage traditions. For facts and concepts you can use multiple-choice test items such as the one in Figure C.2.

FIGURE C.1. A Valid Test Links Test Questions to Job Knowledge and Skills via Learning Objectives.

FIGURE C.2. A Sample Multiple-Choice Item to Measure Facts or Concepts.

Items to Measure Problem-Solving Skills

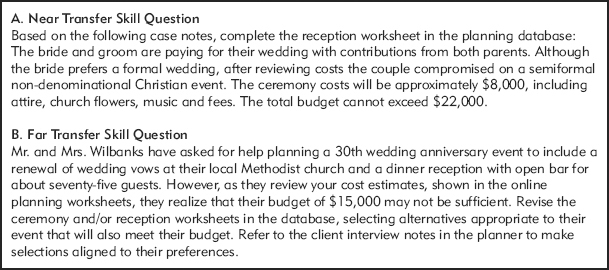

In addition to facts and concepts, scenario-based e-learning typically has some form of problem-solving objective. To evaluate problem-solving skills, your test will require learners to solve problems similar to those faced in the workplace and practiced in the training. While you could evaluate problem-solving ability on the job, it may not be easy to assess worker competence in the job setting. For many reasons, such as safety, expense, and time, some skills cannot easily be evaluated on the job. Instead, construct tests that come as close to real-world problem solving as practical. A simulation or scenario-driven test might be your best option. Your test scenarios may be quite similar to those presented during the training. These types of tests are called near transfer because the skill demonstrated is quite similar to the skills practiced in the training.

To evaluate learning transfer, you might include items that require the learner to apply new skills to somewhat different problems. These types of items are called far transfer because the learners will need to adapt what they learned to different situations than those practiced in the training. For example, in Figure C.3 are two potential simulation test questions for the Bridezilla course. The first item is quite similar to the kinds of scenarios included in the training. The second question focuses on an anniversary celebration with a number of potential differences from a wedding ceremony. Since none of the lesson scenarios focused on an anniversary, learners will need to adapt what they learned to a new situation.

FIGURE C.3. A Knowledge Question for the Bridezilla Course.

Items to Measure Open-Ended Outcomes

In some cases, your learning objective will require the learner to produce a product or demonstrate performance that is sufficiently nuanced and open-ended that you will need human raters to assess the accuracy or quality. A role play and design of a website that incorporates both functional and aesthetic features are two examples. Of course, using raters to assess performance or products of each learner is resource-intensive so you may want to do so only when there is no other practical way to evaluate competency and when the criticality of the skill warrants the investment. The behind-the-wheel driving test is one good example.

It is important to conduct formal validation of any test that will be used for any learning assessment that will be recorded or reported for individual learners. For example, a competency test may be required for certification, which in turn leads to promotions or specific work assignment eligibility. High-stakes evaluations such as these require a formal validation process. You can take a couple of approaches for formal validation, and I recommend you collaborate with your legal department and/or psychometrician to decide whether you need a formal validation and how best to go about it. For more details, refer to Shrock and Coscarelli (2007).