Imagine that one day you have to set up a distributed cluster using Solr because the amount of data is just too much for a single server to handle. Of course, you can just set up a second server or go for another master server with another set of data. But before Solr 4.0, you would have to take care of the data distribution yourself. In addition to this, you would also have to take care of setting up replication, data duplication, and so on. With SolrCloud, you don't have to do any of this: you can just set up a new cluster, and this recipe will show you how to do that.

Before continuing further, I advise you to read the Installing ZooKeeper for SolrCloud recipe from Chapter 1, Apache Solr Configuration. It shows you how to set up a ZooKeeper cluster that is ready for production use. However, if you already have ZooKeeper running, you can skip that recipe.

Let's assume that we want to create a cluster that will have four Solr servers. We would like our data to be divided in such a way that the original data is split between two machines and, in addition to this, a copy of each shard is available in case something happens to one of the Solr instances. I also assume that we already have our ZooKeeper cluster set up, ready, and available at the address 192.168.1.10 on port 9983. For this recipe, we will set up four SolrCloud nodes on the same physical machine:

- We will start by running an empty Solr server (without any configuration) on port

8983. We do this by running the following command (for Solr 4.x):java -DzkHost=192.168.1.10:9983 -jar start.jar - For Solr 5, we will run the following command:

bin/solr -c -z 192.168.1.10:9983 - Now we start another three nodes, each on a different port (note that different Solr instances can run on the same port, but they should be installed on different machines). We do this by running one command for each installed Solr server (for Solr 4.x):

java -Djetty.port=6983 -DzkHost=192.168.1.10:9983 -jar start.jar java -Djetty.port=4983 -DzkHost=192.168.1.10:9983 -jar start.jar java -Djetty.port=2983 -DzkHost=192.168.1.10:9983 -jar start.jar

- For Solr 5, the commands will be as follows:

bin/solr -c -p 6983 -z 192.168.1.10:9983 bin/solr -c -p 4983 -z 192.168.1.10:9983 bin/solr -c -p 2983 -z 192.168.1.10:9983

- Now we need to upload our collection configuration to ZooKeeper. Assuming that we have our configuration in

/home/conf/solrconfiguration/conf, we will run the following command from thehomedirectory of the Solr server that runs first (thezkcli.shscript can be found in the Solr deployment example in thescripts/cloud-scriptsdirectory):./zkcli.sh -cmd upconfig -zkhost 192.168.1.10:9983 -confdir /home/conf/solrconfiguration/conf/ -confname collection1 - Now we can create our collection using the following command:

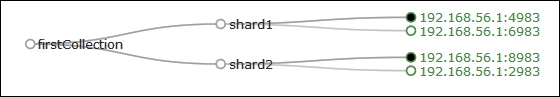

curl 'localhost:8983/solr/admin/collections?action=CREATE&name=firstCollection&numShards=2&replicationFactor=2&collection.configName=collection1' - If we now go to

http://localhost:8983/solr/#/~cloud, we will see the following cluster view:

As we can see, Solr has created a new collection with a proper deployment. Let's now see how it works.

We assume that we already have ZooKeeper installed. It is empty and doesn't hold information about any collection, because we haven't created one yet.

For Solr 4.x, we started by running Solr and telling it that we want it to run in SolrCloud mode. We did that by specifying the -DzkHost property and setting its value to the IP address of our ZooKeeper instance. Of course, in the production environment, you would point Solr to a cluster of ZooKeeper nodes—this is done using the same property, but the IP addresses are separated using the comma character.
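To illustrate the comma-separated form, here is a minimal sketch that joins several ZooKeeper addresses into a single -DzkHost value. The three addresses and the join_zk_hosts helper are hypothetical and only serve the example. The start command is printed rather than executed.

```shell
# Join ZooKeeper host:port pairs with commas, the form -DzkHost expects.
# The function body runs in a subshell, so its variables stay local.
join_zk_hosts() (
  result=""
  for host in "$@"; do
    if [ -z "$result" ]; then
      result="$host"
    else
      result="$result,$host"
    fi
  done
  printf '%s\n' "$result"
)

# Hypothetical three-node ZooKeeper ensemble.
ZK_HOSTS=$(join_zk_hosts 192.168.1.10:9983 192.168.1.11:9983 192.168.1.12:9983)

# Dry run: show the Solr 4.x start command without executing it.
echo "java -DzkHost=$ZK_HOSTS -jar start.jar"
```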

For Solr 5, we used the solr script provided in the bin directory. By adding the -c switch, we told Solr that we want it to run in the SolrCloud mode. The -z switch works exactly the same as the -DzkHost property for Solr 4.x—it allows you to specify the ZooKeeper host that should be used.

Of course, the other three Solr nodes are started in exactly the same manner. For Solr 4.x, we add the -DzkHost property that points Solr to our ZooKeeper. Because we are running all four nodes on the same physical machine, we also need to specify the -Djetty.port property, because only a single Solr server can listen on a given port. For Solr 5, we use the -z switch of the bin/solr script and the -p switch to specify the port on which Solr should start.
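Since the four start commands differ only in the port, they can be generated in a loop. The following is a rough sketch for Solr 5 that only prints the commands. The solr5_start_cmd helper is a hypothetical name, and the sketch passes -p 8983 explicitly for the first node, where the recipe relied on the default port.

```shell
ZK_HOST="192.168.1.10:9983"

# Print (without executing) the Solr 5 start command for one node.
solr5_start_cmd() {
  printf 'bin/solr -c -p %s -z %s\n' "$1" "$ZK_HOST"
}

# One command per node; every node needs its own port on a shared machine.
for port in 8983 6983 4983 2983; do
  solr5_start_cmd "$port"
done
```

To actually start the nodes, each printed line would be run from the corresponding Solr installation directory.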

The next step is to upload the collection configuration to ZooKeeper. We do this because Solr will fetch this configuration from ZooKeeper when you request the collection creation. To upload the configuration, we use the zkcli.sh script provided with the Solr distribution. We use the upconfig command (the -cmd switch), which means that we want to upload the configuration. We specify the ZooKeeper host using the -zkhost switch. After that, we say in which directory our configuration is stored (the -confdir switch). The directory should contain all the needed configuration files such as schema.xml, solrconfig.xml, and so on. Finally, we specify the name under which we want to store our configuration using the -confname switch.
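Before uploading, it can help to confirm that the configuration directory really contains the files mentioned above. The sketch below is one possible pre-flight check. The check_confdir helper is hypothetical, and it only looks for schema.xml and solrconfig.xml, so a real configuration may need additional files.

```shell
# Return 0 if the directory holds the two files named in the text;
# otherwise report what is missing on stderr and return 1.
check_confdir() {
  status=0
  for f in schema.xml solrconfig.xml; do
    if [ ! -f "$1/$f" ]; then
      echo "missing: $1/$f" >&2
      status=1
    fi
  done
  return "$status"
}

CONF_DIR="/home/conf/solrconfiguration/conf"
if check_confdir "$CONF_DIR"; then
  # Dry run: show the upload command from the recipe.
  echo "./zkcli.sh -cmd upconfig -zkhost 192.168.1.10:9983 -confdir $CONF_DIR -confname collection1"
else
  echo "fix the configuration directory before uploading"
fi
```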

After we have our configuration in ZooKeeper, we can create the collection. We do this by sending a request to the Collections API, which is available at the /admin/collections endpoint. First, we tell Solr that we want to create the collection (action=CREATE) and that we want our collection to be named firstCollection (name=firstCollection). Remember that collection names are case sensitive, so firstCollection and firstcollection are two different collections. We specify that we want our collection to be built of two primary shards (numShards=2) and we want each shard to be present in two copies (replicationFactor=2). This means that each shard will have a primary copy and a single replica. Finally, we specify which configuration should be used to create the collection by specifying the collection.configName property.
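The arithmetic behind these parameters is easy to check: the number of cores Solr has to place is numShards multiplied by replicationFactor. The sketch below computes that number and rebuilds the request URL from the same values. The variable names are chosen only for this example, and the curl command is printed rather than executed.

```shell
NAME="firstCollection"
NUM_SHARDS=2
REPLICATION_FACTOR=2
CONFIG_NAME="collection1"

# Each shard exists in REPLICATION_FACTOR copies, so this is the total
# number of cores that Solr has to spread across the nodes.
TOTAL_CORES=$((NUM_SHARDS * REPLICATION_FACTOR))
echo "cores to place: $TOTAL_CORES"   # prints "cores to place: 4"

# Dry run: assemble the Collections API request used in the recipe.
CREATE_URL="localhost:8983/solr/admin/collections?action=CREATE&name=$NAME&numShards=$NUM_SHARDS&replicationFactor=$REPLICATION_FACTOR&collection.configName=$CONFIG_NAME"
echo "curl '$CREATE_URL'"
```

With two shards and two copies of each, the result is four cores, which is one per node in our four-node cluster.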

As we can see in the cloud view, our collection has been created and spread across all the nodes.

There are two more things that I would like to mention: the possibility of running a ZooKeeper server embedded into Apache Solr and specifying the Solr server name.

You can also start the embedded ZooKeeper server shipped with Solr for your test environment. In order to do this, you should pass the -DzkRun parameter instead of -DzkHost=192.168.1.10:9983, but only in the command that starts the first Solr node. So the final command for Solr 4.x should look similar to this:

java -DzkRun -jar start.jar

In Solr 5.0, the same command will be as follows:

bin/solr start -c

By default, the embedded ZooKeeper will start on a port that is 1,000 higher than the one Solr is started on. So if you are running your Solr instance on port 8983, ZooKeeper will be available on port 9983.
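The port rule is simple enough to express as a one-line helper, which can be handy when scripting a test setup. The embedded_zk_port function name is hypothetical.

```shell
# The embedded ZooKeeper listens on the Solr port plus 1000.
embedded_zk_port() {
  echo $(( $1 + 1000 ))
}

embedded_zk_port 8983   # prints 9983
```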

The thing to remember is that the embedded ZooKeeper should only be used for development purposes and only one node should start it.

Solr needs each node in a SolrCloud cluster to have a name. By default, that name is built from the IP address or the hostname, followed by the port the Solr instance is running on and the _solr suffix. For example, if our node is running on 192.168.56.1 and port 8983, it will be called 192.168.56.1:8983_solr. Of course, Solr allows you to change that behavior by specifying the hostname. To do this, start Solr with the -Dhost property or add the host property to solr.xml.

For example, if we would like one of our nodes to have the name of server1, we can run the following command to start Solr:

java -DzkHost=192.168.1.10:9983 -Dhost=server1 -jar start.jar

In Solr 5.0, the same command would be:

bin/solr start -c -z 192.168.1.10:9983 -h server1
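As a small illustration of the naming pattern described above, the following sketch assembles a node name from a host and a port. The node_name helper is hypothetical. It only mirrors the pattern from the text and does not query Solr.

```shell
# Build a node name as host, colon, port, and the _solr suffix.
node_name() {
  printf '%s:%s_solr\n' "$1" "$2"
}

node_name 192.168.56.1 8983   # prints 192.168.56.1:8983_solr
node_name server1 8983        # prints server1:8983_solr
```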