18

Technique and audiovisual counterpoint in the Estuaries series

Introduction

An estuary is the outlet of a river where it meets the tide. Fresh water meets salt water in complex dynamics, varying with the flow of the river and forces of the tide. Continuity, fragmentation, instabilities and transformations intertwine.

We find a continuum, here, between the distinct streams, the mixing, and their ultimate combining. Consider a poetic comparison between this intertwining stage and musical counterpoint. Individuality and interdependence co-exist: multiple identifiable musical lines intertwine. The vertical (harmonic) and linear (melodic) define each other. Contrary motion and consonance “serve the morphological task of unification and reconciliation amidst the contrapuntal drive for independence” (Levarie and Levy 1983: 125).

Extending the metaphors further, my Estuaries series of audiovisual compositions have served as an intertwining of several streams of exploration. Specifically, through Estuaries 1 (2016), Estuaries 2 (2017), and Estuaries 3 (2018),1 I aimed to achieve the following:

- Develop and apply a new abstract-animation technique based on the visualisation of direct-search optimisation processes.

- Explore and refine further potentials of variable-coupled map networks (VCMN) – embodied in my Nodewebba software – as a generative-music tool.

- Assess the potential for VCMN to help generate coherent audiovisual counterpoint.

- Gain further insights into the formation of “fluid audiovisual counterpoint”.

These are discussed below in this order. Thus, the initial discussion is technical, describing some innovative tools and approaches to sound and image generation. Attention then shifts towards the aesthetic. This culminates in a detailed consideration of a selection from Estuaries 3, showing how technological means and aesthetic viewpoints worked together to establish elements of a fluid audiovisual counterpoint.

I suggest on one hand that controlling sound and image simultaneously with VCMN – an event-based generative process that is not perceptually informed – has limited capacity to consistently generate convincing audiovisual counterpoint, fluid or otherwise. On the other hand, I demonstrate that such an approach can effectively generate some viable solutions in this vast domain, thereby serving to stimulate creative solutions and new insights into potential audiovisual relationships.

Visual technique: the OptiNelder filter

Introduction

The OptiNelder filter is a custom image-processing algorithm developed for the Estuaries series. It visualises the search paths of direct-search optimisation agents applied to an input image.

Mathematical optimisation refers broadly to finding best solutions to mathematical problems. The goal is to find the input values that will return the minimum or maximum output for a given function. Ideally, one can optimise through solving equations, but sometimes this is not possible. In these cases, other means must be used. One class of approaches uses iterative processes whereby a trial solution is tested and the results inform a new potential solution. These are called direct-search methods (Lewis et al. 2000; Kolda et al. 2003).

From an artistic perspective, we might find it clarifying to conceive of the potential outputs of a mathematical function as forming a terrain – a contoured landscape. Optimisation, then, entails finding the inputs to the function that will return the lowest or highest point in that landscape. A direct search will typically do so by exploring that terrain step-by-step, using what is discovered at any one step to infer a likely best direction (uphill or downhill) to search in the next step. This way, ideally, the process will eventually find its way to the top of the highest peak or to the bottom of the lowest valley.

The core hypothesis driving the development of the OptiNelder filter was that visualising aspects of a direct-search process could generate artistically useful images. This might occur through optimising a mathematical function or by using an actual image or video input as the search terrain. Artistic interest might be found in several aspects: (i) in the temporal behaviour exhibited by a direct searcher as it iterates, (ii) in the compound figures that would arise through the accumulation of shapes in the path of a searcher, (iii) in the changes of the search behaviour if/when the search terrain itself was altered, and (iv) in the combined effect of visualising many search agents simultaneously. Thus, the interest was not in quality optimisation per se. Instead, the intent was to visualise direct-search processes to provide a gamut of artistically distinctive and malleable shape-vocabularies, textures and behaviours.

Optimisation, visualisation, and artistic applications

Unsurprisingly, published research to date on visualisation of direct-search processes is aimed at understanding, describing and refining the performance of such processes, rather than artistic visualisation. Generally, the intent has been to reveal the search path of the algorithm on the problem terrain to support analysis (for example Stanimirović et al. 2009). Another key issue is 2D or 3D visualisations of higher-dimensional problems (such as in Dos Santos and Brodlie 2004).

However, some precedents for the artistic application proposed here exist in the literature on swarm-based artistic visualisation. For example, Greenfield and Machado (2015) provide an overview of ant-colony-inspired generative art. Swarm-based multi-agent systems have been used to create non-photorealistic rendering based on multiple source images (Love et al. 2011; Machado and Pereira 2012). Bornhofen et al. use biological metaphors such as an energy model with food chasing and ingesting to create abstract images, plant-like structures, and processing of input images (2012). Choi and Ahn combine the classic BOIDS algorithm with a genetic algorithm to create stylised rendering of photos (2017).

The OptiNelder technique takes this multi-agent approach from swarm processing, with each agent being an instance of a classic direct-search algorithm – the Nelder-Mead method. One or more Nelder-Mead agents search an input image for the darkest or brightest points, displaying their search paths in the process. The colours extracted from the images at the points of the search process are used when drawing the search path. (However, since the Nelder-Mead agents do not share state information with each other, OptiNelder cannot be considered an example of swarm processing in the normal sense of the term.)

The Nelder-Mead simplex method

The Nelder-Mead simplex method is a classic direct-search approach (Nelder and Mead 1965). Since its introduction, it has developed and retained strong popularity and can be found in many numerical analysis tools (Lewis et al. 2000). Nelder-Mead seemed a strong choice for this project, in part due to this technical ubiquity: code implementations and documentation are readily available. Further, given the shape vocabulary used in its search process, it seemed likely that it could provide visually novel complex accumulations of simple shapes.

In short, the method involves addressing a problem of n dimensions (variables) by first creating an initial simplex shape of n + 1 points. For example, for a two-variable problem, this simplex will have three points, forming a triangle. Given what the function values at these points imply about the downward direction in the problem terrain (if minimisation is the goal), a new simplex is specified. This process is iterated until exit criteria are met, it is hoped – though not necessarily – by converging on a solution.

At each step, the new simplex is formed by either a reflection, expansion, contraction or shrinkage of the evaluated simplex. These operations “probe” around the simplex with simple rules to determine the points of the next simplex. Coefficients control this behaviour, referred to in the original paper as ρ for reflection, χ for expansion, γ for contraction, and σ for shrinkage. Formal definitions of the algorithm are readily available, such as from Wright (1996). I have developed a somewhat simplified description aimed at helping artists and non-specialists understand its behaviour and the effect of the coefficients, available at http://BatHatMedia.com/Research/neldermead.html
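To make the four operations concrete, the following is a minimal Python sketch of a Nelder-Mead minimiser that records every simplex it visits, which is the kind of data a path visualisation needs. It is not the plugin's Objective-C code. The function name `nelder_mead_path`, the default coefficient values (1, 2, 0.5, 0.5), the acceptance rules (which follow the commonly published form of the method) and the fixed iteration count in place of a convergence test are all assumptions made for illustration.

```python
def nelder_mead_path(f, simplex, rho=1.0, chi=2.0, gamma=0.5, sigma=0.5, max_iter=50):
    """Minimise f and return every simplex visited, in order."""
    simplex = [tuple(p) for p in simplex]
    path = [list(simplex)]
    for _ in range(max_iter):
        simplex.sort(key=f)                       # best point first, worst last
        best, second_worst, worst = simplex[0], simplex[-2], simplex[-1]
        others = simplex[:-1]
        centroid = tuple(sum(axis) / len(others) for axis in zip(*others))

        def probe(t):
            # Point at centroid + t * (centroid - worst); negative t stays inside.
            return tuple(c + t * (c - w) for c, w in zip(centroid, worst))

        reflected = probe(rho)
        if f(reflected) < f(best):
            expanded = probe(rho * chi)           # try going further downhill
            simplex[-1] = expanded if f(expanded) < f(reflected) else reflected
        elif f(reflected) < f(second_worst):
            simplex[-1] = reflected               # plain reflection
        else:
            if f(reflected) < f(worst):
                contracted = probe(rho * gamma)   # outside contraction
                accepted = f(contracted) <= f(reflected)
            else:
                contracted = probe(-gamma)        # inside contraction
                accepted = f(contracted) < f(worst)
            if accepted:
                simplex[-1] = contracted
            else:                                 # shrink towards the best point
                simplex = [best] + [
                    tuple(b + sigma * (x - b) for b, x in zip(best, p))
                    for p in simplex[1:]
                ]
        path.append(list(simplex))
    return path


# Hypothetical test terrain: a smooth bowl whose lowest point is at (3, -1).
bowl = lambda p: (p[0] - 3.0) ** 2 + (p[1] + 1.0) ** 2
path = nelder_mead_path(bowl, [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)], max_iter=200)
end_point = min(path[-1], key=bowl)
```

Each entry in `path` is one triangle, so drawing the entries in order would trace the agent's route across the terrain.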

Implementation

The OptiNelder filter currently uses an input image as the “terrain” on which Nelder-Mead agents seek maxima or minima. In the implementations thus far, this has entailed searching for brightest or darkest points in an RGB image. This is a two-dimensional problem: a point x, y in the image returns a brightness value. In our terrain metaphor, bright points are high in the landscape and dark points low. As the agents search, the simplex points and/or the simplexes themselves are drawn, using colours extracted from the input image at the simplex points.
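The terrain idea can be sketched in a few lines of Python. This is an illustration only: the brightness measure used here (the mean of the three channels), the nearest-pixel sampling, the clamping to the image bounds and the function names are all assumptions, and the plugin may compute these differently.

```python
def brightness(pixel):
    """Mean of the R, G and B channels (an assumed brightness measure)."""
    r, g, b = pixel
    return (r + g + b) / 3.0


def sample_terrain(image, x, y):
    """Return (height, colour) at the pixel nearest to (x, y), clamped to the image."""
    rows, cols = len(image), len(image[0])
    col = min(max(int(round(x)), 0), cols - 1)
    row = min(max(int(round(y)), 0), rows - 1)
    colour = image[row][col]
    return brightness(colour), colour


def cost(image, point, seek_brightest=True):
    """Value for a minimiser: negate the height when seeking bright points."""
    height, _ = sample_terrain(image, point[0], point[1])
    return -height if seek_brightest else height


# A hypothetical 2 x 2 image, stored as rows of (R, G, B) tuples.
image = [[(0, 0, 0), (255, 255, 255)],
         [(30, 60, 90), (10, 20, 30)]]
height, colour = sample_terrain(image, 1.2, -0.3)
```

Returning the colour alongside the height reflects the description above: the same sample that guides the search also supplies the colour used when drawing.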

OptiNelder is a custom plugin filter for Apple’s Motion 5.3 video-effects, animation and compositing tool. The code is Objective-C++, using Apple’s FxPlug 3.1 SDK. This approach provides the advantage of combining refined time, editing and rendering controls of a modern visual-effects tool with custom-developed, OpenGL-accelerated effects, and easy manipulation of stills or movies to provide the visual input to the filter.

The plugin was built around the author’s Objective-C conversion of a C implementation of the Nelder-Mead algorithm by Michael Hutt (2011). The Hutt code implements constraints, which are needed to keep the search within the bounds of the image. It also creates a scalable equilateral simplex as the initial state, with sides one unit long. This scaling is a necessary feature for this image-processing application: one needs to scale the size upward to provide an appropriate start condition for the terrain, since one pixel in the source image is one unit.
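As an illustration of a scalable equilateral starting simplex, the sketch below uses one standard construction of a regular simplex, specialised to two dimensions. Whether this matches the Hutt code line for line is an assumption, and the function name `equilateral_simplex` and the example scale of 40 pixels are hypothetical.

```python
import math


def equilateral_simplex(start, scale=1.0):
    """Three points forming an equilateral triangle with side length `scale`.

    The first vertex sits on the start point; the other two are offset by
    (p, q) and (q, p), a standard regular-simplex construction for n = 2.
    """
    n = 2
    p = scale * (math.sqrt(n + 1) + n - 1) / (n * math.sqrt(2))
    q = scale * (math.sqrt(n + 1) - 1) / (n * math.sqrt(2))
    x, y = start
    return [(x, y), (x + p, y + q), (x + q, y + p)]


# With one pixel as one unit, a scale of 40 gives a triangle 40 pixels on a side.
triangle = equilateral_simplex((100.0, 80.0), scale=40.0)
```

At the default scale the triangle is a single pixel across, which is why the scale needs to be raised before the first probes can reach meaningfully different parts of an image.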

The control parameters for the plugin fall into four main categories: placement and behaviour control, point control, triangle control and spline control. For the sake of brevity, only a key subset of these parameters is described next.

Placement and behaviour control

The user determines the starting points of the search agents on the input image either through a grid (with the user specifying the number of rows and columns) or with positions determined through seeded randomness (with the user specifying total agent count). In addition to the standard Nelder-Mead setup and the initial scaling factor mentioned earlier, the plugin also provides the capacity to rotate the initial simplex around the starting point. Variations in this starting angle can change the search path, ranging from subtle shifts in detail to an output that finds radically different end points. Users can also control the reflection, expansion, and contraction coefficients for the search process and set a maximum number of iterations before an agent stops searching.

Finally, a parameter can rotate the complete set of all simplexes in the search path around the starting point. This has nothing to do with the optimisation process, but it can serve aesthetic ends. It provides a way, for example, to gradually pull apart a coherent image formed of completed search paths – or gradually put it back together again.
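The placement and rotation options described in this section can be sketched as follows. This is a hedged illustration, not the plugin's code: placing grid agents at cell centres, drawing random positions uniformly, and the names `grid_starts`, `random_starts` and `rotate_about` are all assumptions.

```python
import math
import random


def grid_starts(width, height, rows, cols):
    """Start points at the centres of a rows x cols grid over the image."""
    return [((c + 0.5) * width / cols, (r + 0.5) * height / rows)
            for r in range(rows) for c in range(cols)]


def random_starts(width, height, count, seed):
    """Seeded random start points: the same seed always gives the same layout."""
    rng = random.Random(seed)
    return [(rng.uniform(0, width), rng.uniform(0, height)) for _ in range(count)]


def rotate_about(points, centre, degrees):
    """Rotate points around a centre; usable for one simplex or a whole path."""
    a = math.radians(degrees)
    cx, cy = centre
    return [(cx + (x - cx) * math.cos(a) - (y - cy) * math.sin(a),
             cy + (x - cx) * math.sin(a) + (y - cy) * math.cos(a))
            for x, y in points]


starts = grid_starts(1920, 1080, rows=1, cols=8)       # a single row of agents
simplex = [(0.0, 0.0), (40.0, 0.0), (20.0, 34.64)]     # hypothetical triangle
turned = rotate_about(simplex, simplex[0], 15.0)       # new starting angle
```

Rotating the initial simplex before the search changes the path the agent takes, whereas applying the same helper to every simplex of a finished path only changes how that path is displayed.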

Point control

The point size and transparency can be specified separately for the agent start-point, the simplex corner points, and for the end-point of the search. The point sizes can be automatically scaled by their relative brightness. This latter feature can help provide variation in the points for a less mechanical-seeming visual result.

Triangle control

One can enable colour fills for the simplexes and set the alpha (transparency) value. The colour fill of the triangle is created with an OpenGL interpolated fill between the colours of the three corner points.
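An interpolated fill of this kind can be understood as barycentric blending of the three corner colours. The Python sketch below computes that blend on the CPU for a single point. It illustrates the principle only and assumes a flat 2D triangle; the function names are hypothetical and the plugin itself leaves this work to OpenGL.

```python
def barycentric(p, a, b, c):
    """Weights (wa, wb, wc) of point p with respect to triangle a, b, c."""
    (x, y), (x1, y1), (x2, y2), (x3, y3) = p, a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    wa = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    wb = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    return wa, wb, 1.0 - wa - wb


def fill_colour(p, corners, colours):
    """Blend the three corner colours according to where p lies in the triangle."""
    weights = barycentric(p, *corners)
    return tuple(sum(w * col[i] for w, col in zip(weights, colours))
                 for i in range(3))


corners = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
colours = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
centre_colour = fill_colour((1.0, 1.0), corners, colours)   # equal mix of all three
```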

Spline control

The user can activate a spline curve that passes through the points of the simplexes. In particular, the filter uses a custom Objective-C implementation of Kochanek-Bartels (KB) splines.2 KB splines were originally created as a means for interpolating between animation keyframes (Kochanek and Bartels 1984). This means that they also serve in situations where one wishes to cast a smooth spline that is guaranteed to pass through a given ordered set of points. In addition, KB splines include controls for tension, continuity, and bias to provide results ranging from straight lines to very loose curves (see ibid. for details).

In the OptiNelder implementation, the user can determine whether the spline will be drawn and whether it will pass through points 1, 2 and/or 3 of each simplex in a search path. A “join splines” option determines whether a separate spline set is drawn for each Nelder-Mead agent path or a single spline is cast through the full set of paths of all active agents in succession. Finally, this KB-spline implementation also allows for colour interpolation along the spline, so colours change gradually between those specified at the simplex points.
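For readers unfamiliar with KB splines, the sketch below evaluates one spline segment in Python using the tension, continuity and bias tangent weights as they are commonly stated, combined with cubic Hermite blending. It is an illustration under those assumptions rather than the filter's Objective-C implementation, and the function name `kb_point` is hypothetical.

```python
def kb_point(p0, p1, p2, p3, s, tension=0.0, continuity=0.0, bias=0.0):
    """Point at parameter s (0..1) on the KB segment running from p1 to p2."""
    t, c, b = tension, continuity, bias
    # Weights for the outgoing tangent at p1 and the incoming tangent at p2.
    out_a = (1 - t) * (1 + b) * (1 + c) / 2
    out_b = (1 - t) * (1 - b) * (1 - c) / 2
    in_a = (1 - t) * (1 + b) * (1 - c) / 2
    in_b = (1 - t) * (1 - b) * (1 + c) / 2
    # Cubic Hermite basis functions.
    h00 = 2 * s**3 - 3 * s**2 + 1
    h10 = s**3 - 2 * s**2 + s
    h01 = -2 * s**3 + 3 * s**2
    h11 = s**3 - s**2
    point = []
    for a0, a1, a2, a3 in zip(p0, p1, p2, p3):
        d1 = out_a * (a1 - a0) + out_b * (a2 - a1)    # tangent leaving p1
        d2 = in_a * (a2 - a1) + in_b * (a3 - a2)      # tangent arriving at p2
        point.append(h00 * a1 + h10 * d1 + h01 * a2 + h11 * d2)
    return tuple(point)


# Four hypothetical simplex points; the segment drawn runs from the 2nd to the 3rd.
pts = [(0.0, 0.0), (1.0, 2.0), (3.0, 1.0), (4.0, 4.0)]
loose = kb_point(*pts, 0.5)                  # default settings: a gentle curve
straight = kb_point(*pts, 0.5, tension=1.0)  # full tension: a straight line
```

Because the function works component by component, passing RGB tuples in place of positions would blend colours along the same curve, which is one plausible way to obtain the gradual colour change described above.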

Example

As the first piece in the series, Estuaries 1 provides some of the simplest and clearest demonstrations of OptiNelder concepts. The primary input image is a Motion-generated circular gradient – from blue outside to white inside – with agents seeking the brightest points in that image. Throughout the work, agents are arranged using the grid-placement option.

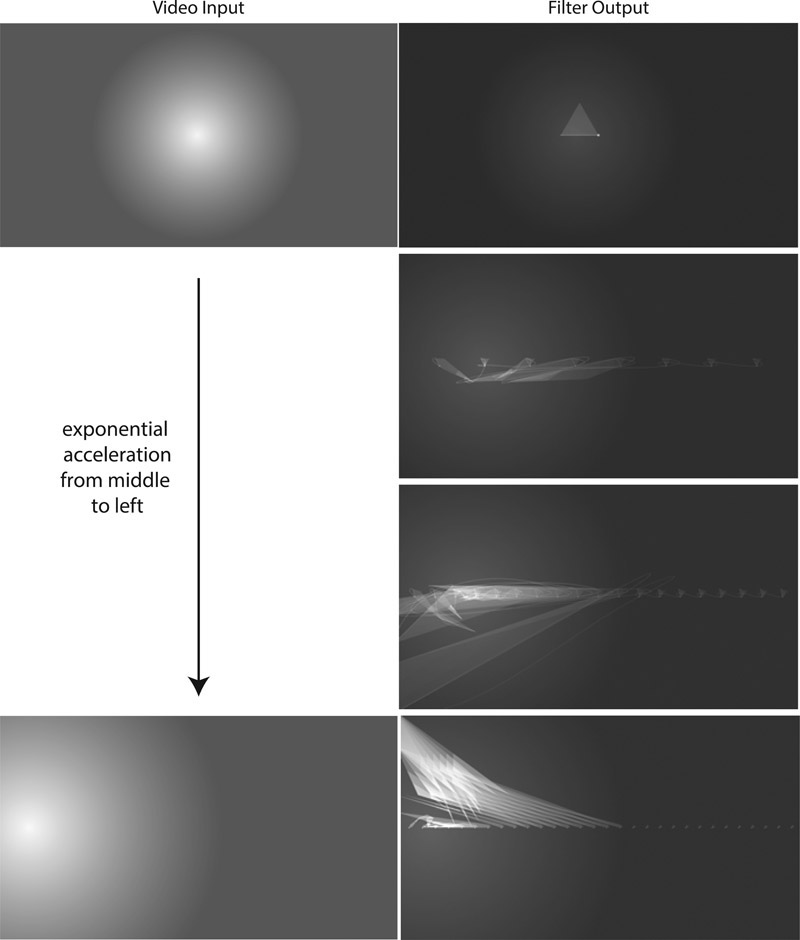

In the 15-second gesture at the beginning of the work, the input is a radial gradient in the centre of the image (see Figure 18.1). OptiNelder begins with a single agent placed in the centre of the image, limited to one iteration – with the initial simplex gradually scaled up to reveal a single triangle. The triangle starts to rotate as the gradient moves left. This leftward motion of the gradient is an exponentially accelerating motion curve. During this leftward gesture, more agents are gradually added to the row and the Nelder-Mead iteration limit is increased to lengthen the search paths, which are tending to “reach out” towards the centre of the gradient. Thus, all of these elements work together to create a “throwing” gesture to the left side of the screen.

Figure 18.1 Four frames from the beginning of Estuaries 1. A radial gradient moving left over 15 seconds provides the source image. The number of agents in the filter increases gradually, arranged in a row. Other parameters are modulated concurrently to provide an overall transformation that matches an underlying musical gesture.

Watching this example in video form also reveals that the agent search-paths can be unstable and jump suddenly and unpredictably frame-to-frame as the source image undergoes gradual changes. This contributes to an essential aesthetic quality of Estuaries 1: the evocation of an unstable and awkward audiovisual semi-stasis that fractures and re-stabilises, seeking resolution.


Discussion

The Estuaries series demonstrates a wide range of animated textures through the OptiNelder technique applied to both still and moving images. The diversity of possible behaviours, driven by an input image, proved amenable to supporting my ongoing interest in sculpting visual change in time in ways analogous to and interlinking with musical gesture and texture – such as the gesture described earlier.

The technique clearly functions in alignment with some of the strategies I have previously proposed for the generative artist (Battey 2016a):

- It provides a disciplined means to relinquish control to generate new ideas.

- It provides fruitful unpredictability if the artist is willing to dialog with the system to discover its potentials and limits.

- It can readily provide a perceptual complexity that lies between extremes of redundancy and randomness.

- It is arguably an example of creative “misuse” of a mathematical process to create unexpected and serendipitous visual outcomes.

As seen with the Estuaries 1 example, one challenge of working with the OptiNelder filter is the difficulty of creating smooth, gradual transformation of the output image if any aspect of the search parameters or the search terrain is modulated. In Estuaries 2, with its focus on dense, canvas-filling textures driven by noisy image sources (water surfaces), the unpredictability of the paths simply becomes part of the noisy texture. In all of the Estuaries pieces, however, I struggled at times with this frame-to-frame nonlinearity. One solution, when this characteristic detracted from the aesthetic ends I was after, was to smooth the abrupt changes by applying extreme motion-blur effects after OptiNelder. In this case, the results of many different frames can blend together to create even more complex composites.

Musical technique

With the Estuaries series, I have continued to develop my variable-coupled map networks (VCMN) approach to generate emergent behavioural patterns for music. In particular, I have been refining my Nodewebba software and gaining a greater sense of its potentials.

VCMN and Nodewebba overview

In short, VCMN entails the linking together of iterative functions to create emergent behaviours. Each function (or “node”) comprises Lehmer’s Linear Congruence Formula (LLC) and a timing function to determine when it will next emit its state and iterate to a new state. LLC is a formula normally used to create pseudo-random numbers. However, when its variables are de-optimised, it can become a useful pattern generator, demonstrating behaviours ranging from stasis, decay/increase, simple iteration, compound iteration, and unpredictable self-similarity. By using the output of one node to set variables or timing of another node or nodes, more complex behaviours can emerge, including potentially perceptually interesting relationships between the nodes. Once feedback connections are considered, where nodes drive themselves or start paths of influence that ultimately come back to themselves, a wide range of possibilities emerge. The VCMN approach has been described in detail elsewhere (Battey 2004).
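The pattern-generating behaviour of a single node, and the effect of coupling two nodes, can be sketched in Python as below. The sketch assumes the usual linear congruence form x → (a·x + c) mod m, applied here to floating-point values in the range 0 to 1. It omits the timing function entirely, and the parameter values, the coupling scheme and the function names are illustrative assumptions rather than Nodewebba's actual settings.

```python
def llc_step(x, a, c, m):
    """One iteration of the linear congruence x -> (a * x + c) mod m."""
    return (a * x + c) % m


def llc_sequence(x0, a, c, m, count):
    """The first `count` states of a single node, starting from x0."""
    states = [x0]
    for _ in range(count - 1):
        states.append(llc_step(states[-1], a, c, m))
    return states


def coupled_pair(count, m=1.0):
    """Two nodes: the current state of node A sets the offset c of node B."""
    xa, xb = 0.1, 0.0
    out_a, out_b = [], []
    for _ in range(count):
        out_a.append(xa)
        out_b.append(xb)
        xb = llc_step(xb, 1.0, xa, m)      # B's increment follows A's state
        xa = llc_step(xa, 1.0, 0.3, m)     # A on its own: simple iteration
    return out_a, out_b


stasis = llc_sequence(0.5, 1.0, 0.0, 1.0, 4)    # the state never changes
cycle = llc_sequence(0.0, 1.0, 0.25, 1.0, 5)    # a rising four-step loop
node_a, node_b = coupled_pair(12)
```

In the coupled case node B no longer steps by a fixed amount, so its pattern is shaped by node A's cycle; feeding B's output back into A would close a feedback loop of the kind described above.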

Nodewebba is software I developed to provide a GUI-based system for composing with a six-node VCMN system.3 It ties all of the nodes to a metronome, with the timing of each node set as multiples of a small durational value (e.g. multiples of a 16th-note duration). A 2D matrix control allows for easy connection of node outputs and inputs. Node outputs can be mapped to various pitch-modes and instrumental ranges. The output can be synchronised to an external sequencer to record the MIDI output. Created in Max/MSP, Nodewebba can either run as stand-alone software or can be extended through interaction with other code developed in Max, with a set of global variables providing output and input to the Nodewebba system. Details can be found elsewhere (Battey 2016b).

Example: music in Estuaries 3

A few specific points about the musical approach, with emphasis on Estuaries 3, are worth mentioning to frame the following discussion of audiovisual counterpoint.

The primary timbre approach in the Estuaries series entails driving samples of orchestral instruments via MIDI, but convolving these with tamboura samples (though piano sounds, not convolved, often provide a foreground function). Besides creating an extended decay tail on the samples, this convolution approach creates complex interaction between the pitches performed by an instrument and their alignment with the overtones of the tamboura. This is made more complex by having a different tamboura fundamental for each instrument. For example, in Estuaries 1, pizzicato violin is convolved with a tamboura pitched at F, while plate bells are convolved with a tamboura pitched at E. This creates spectral richness and complexity of temporal behaviour that transcends the original orchestral sources. Thus, the distance between the note events generated by the VCMN system and the perceptual result is significantly magnified.

All of the Estuaries works are unified in part through using a given set of 13 Messiaen-inspired chords as the core pitch material. However, each piece uses a different generative approach to create details and large-scale structure from those chords. In the case of Estuaries 3, each chord controls one portion of the piece. For each of these “chord-sections”, the chord was turned into a pitch-mode definition that was loaded into the nodes in Nodewebba. (Note that a “chord section” does not necessarily correspond perceptually to what we might consider a large-scale section of the piece. As we shall see later, for example, the first counterpoint “section” of Estuaries 3 comprises multiple chord-sections.)

Each chord section has a manual specification of overall duration, which nodes would be active, the pitch range for each node, and how many notes would form a “phrase” (after which a node would pause). A phasor running between zero and one across the duration of each section was mapped to control phrase length and rhythmic range, generally to provide higher density and activity towards the end of a section – often creating a sense of forward impetus.
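The phasor mapping can be illustrated with a short Python sketch. The section timings below are taken from the Estuaries 3 excerpt discussed later (4:53 to 5:20, that is, 293 to 320 seconds), but the linear mapping, the phrase-length range of 3 to 12 notes and the function names are hypothetical choices made only for this example.

```python
def phasor(time, start, duration):
    """Ramp from 0 to 1 across a section, held at the ends outside it."""
    phase = (time - start) / duration
    return min(max(phase, 0.0), 1.0)


def map_range(phase, low, high):
    """Scale a 0..1 phase linearly onto the range low..high."""
    return low + phase * (high - low)


def phrase_length(time, start, duration, shortest=3, longest=12):
    """Notes per phrase: longer phrases, and so more activity, late in a section."""
    return round(map_range(phasor(time, start, duration), shortest, longest))


section_start, section_length = 293.0, 27.0      # seconds
lengths = [phrase_length(t, section_start, section_length)
           for t in (293.0, 300.0, 310.0, 320.0)]
```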

Compressed Feedback Synthesis (CFS) is a sound synthesis approach based on software-based feedback loops controlled by automatic gain-control (Battey 2011). Estuaries 3 includes CFS to provide a more continuum-based, rather than event-based, sound element. Nodewebba controlled aspects of the ongoing CFS process, including its fundamental feedback frequency (based on the first note of the chord for each section), the pitch-shift ratios, and frequency of ring filters. As with the convolution approach, the independence and unpredictability of the CFS process created behaviours that belie the simplicity of the VCMN-generated note-events that are shaping them.

Ultimately, these generative approaches provided initial materials, which were captured in a DAW and then subject to editing. Given this generative foundation for the music, could it also aid in the control of visual elements to create a complex but coherent audiovisual counterpoint?

Audiovisual counterpoint, fluid and otherwise

Starting with my work on the Luna Series of audiovisual compositions (2007–2009), I have been slowly developing a concept of a “fluid audiovisual counterpoint”. The term points to an artistic intuition about which I hope to gradually gain analytical clarity. My most extensive published exploration of the idea to date is my article “Towards a Fluid Audiovisual Counterpoint” (2015). Starting with the traditional music pedagogical framework of species counterpoint, the article considers whether and to what extent this could be a legitimate metaphor to apply to discussion of audiovisual counterpoint. From there it explores the idea of a counterpoint of gestures, moving beyond discrete note and visual events. It then considers the idea of fluid audiovisual counterpoint:

The Estuaries series explores this idea with the aid of “audiovisualisation” of VCMN activity. The audiovisual artist Ryo Ikeshiro proposes the word audiovisualisation to refer to the simultaneous sonification and visualisation of the same source of data.

Audiovisualisation can be both a fascinating and problematic prospect – arguably something likely to prove difficult to achieve to a convincing standard, given the differences between visual and musical perception. How and to what degree and depth can music and image cohere if arising from a single underlying abstraction that is neither musical nor visual in its essence, nor perceptually informed?

My hypothesis was that VCMN could provide a way to explore the vastly multivariate problem-space of audiovisual relationships, including generation of solutions that I might not have conceived of directly. Thus, it might generate some aspects of convincing solutions to audiovisual counterpoint, helping to create effective solutions in the short run which could also inform a theory of audiovisual counterpoint in the long run. This is to say that it was not expected that convincing counterpoint would be achieved fully through VCMN control. Indeed, as we shall see, a great deal of editing of the audiovisualisation results was required to achieve results that I found perceptually convincing. Thus, this cannot be called audiovisualisation, per se. Instead we might call it audiovisualisation-assisted composing.

The clearest examples of VCMN-assisted counterpoint can be seen in the two counterpoint sections of Estuaries 3, which serve as the climax (4:00–5:55) and ambiguous close (7:30–8:30) of the work. The relative counterpoint clarity of these sections, including discrete visual objects within the image, provides a clear dramatic contrast to the more textural approach of the other sections. We will now consider this in more detail.

Estuaries 3 example

Technically, the approach involved exporting node data from Nodewebba to set keyframes in Motion to control OptiNelder and the visual elements fed to it.4 There was an immediate tension, here. The quality of fluidity requires emphasis on continuums and gestures, while VCMN, MIDI and the core sound-design approach of the series are naturally oriented towards discrete event triggers. I sought to overcome this event bias to some degree in some portions of the Estuaries series. Musically, as already noted, the use of convolution and the inclusion of the CFS instrument in Estuaries 3 were also aimed at providing more continuous sonic elements. Further, when using Nodewebba to set visual keyframes, I used varying types of transitions between those keyframes to shape time between those events.

In the two previously mentioned counterpoint sections in Estuaries 3, for example, two thin, green vertical bars use primarily exponentially shaped motion between Nodewebba-determined keyframes. This creates a very strong point of arrival at the key-frame – a clear accelerating gesture landing on and highlighting a discrete event-point.

In contrast, orange and white membranes in the background (provided by the “Membrane” generator in Motion) have smoother motion curves through key-frames. In this latter case, the keyframes become articulation points (in the sense used in the quotation about fluid counterpoint), rather than events. They provide moments of relative stability in a continuous flow of motion.
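The difference between these two kinds of keyframe transition can be sketched numerically. The Python below contrasts an exponentially accelerating curve with a smooth ease-in/ease-out curve. Both formulas, the steepness value `k` and the function names are assumptions chosen for illustration; the curve shapes that Motion applies may well differ.

```python
import math


def exponential_ease(u, k=5.0):
    """Accelerating curve on 0..1: slow departure, fast arrival."""
    return (math.exp(k * u) - 1.0) / (math.exp(k) - 1.0)


def smooth_ease(u):
    """Smoothstep curve: gentle at both ends, so a keyframe reads as a brief rest."""
    return u * u * (3.0 - 2.0 * u)


def between(key_a, key_b, time, ease):
    """Value at `time` between two (time, value) keyframes, shaped by `ease`."""
    (t0, v0), (t1, v1) = key_a, key_b
    u = min(max((time - t0) / (t1 - t0), 0.0), 1.0)
    return v0 + (v1 - v0) * ease(u)


# A hypothetical bar moving from x = 100 to x = 300 over two seconds.
bar_halfway = between((0.0, 100.0), (2.0, 300.0), 1.0, exponential_ease)
membrane_halfway = between((0.0, 100.0), (2.0, 300.0), 1.0, smooth_ease)
```

Halfway through the move the exponentially eased bar has covered well under a tenth of the distance, so most of its travel is packed into the final moments before the keyframe. That concentration of motion is what makes the arrival read as an event, whereas the smooth curve is at its slowest near the keyframe itself.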

Similarly, the simplex starting-angle of the OptiNelder filter is often modulated with smooth motion curves, providing a fluid, detailed paralleling of, or dialog with, the other musical and visual elements. Arguably, the interplay between the various continuum-based motions and the clear event/arrival motions provides some of the fascination and complex coherence in these sections of Estuaries 3.

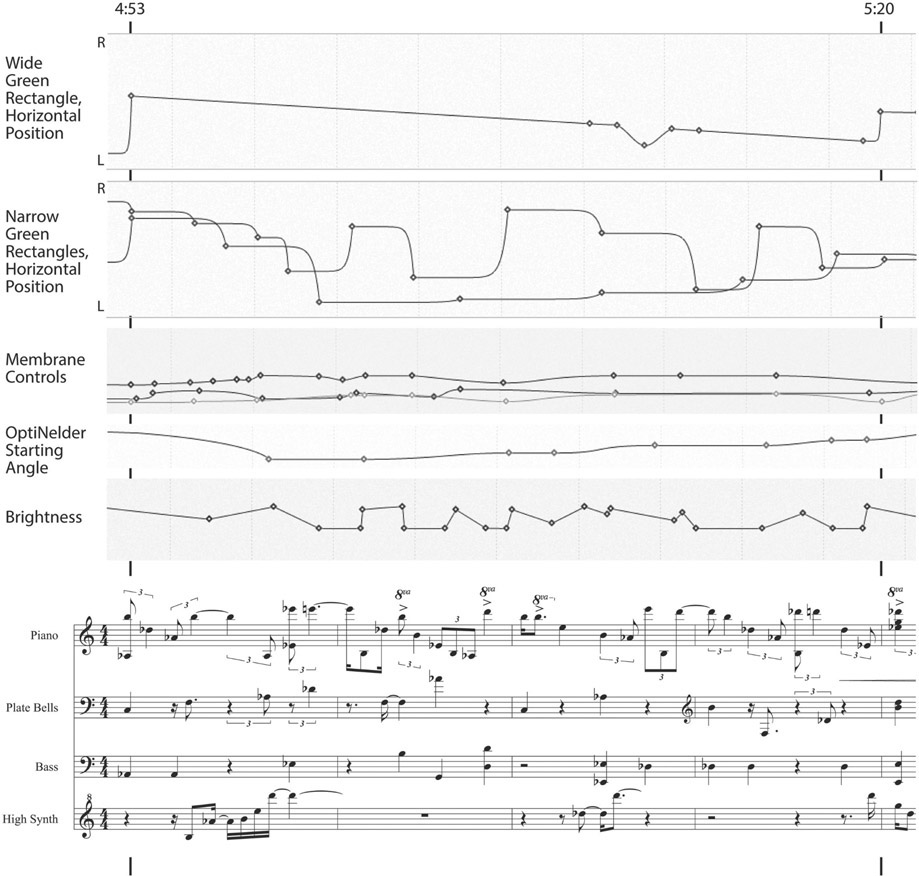

We can look at 4:51 to 5:56 in Estuaries 3 for some examples of how these musical and audiovisual elements work together hierarchically to create elements of gestural and fluid counterpoint. To aid in this discussion, a video excerpt of this section of Estuaries 3, with time code superimposed, can be found at https://vimeo.com/356157209. Figures 18.2a and 18.2b provide a notation-reduction of key aspects of the musical texture and the keyframe controls of some of the primary elements in the visual texture.5


Figure 18.2a and 18.2b. Estuaries 3 excerpt: Music elements and visual control data.

Note: The musical notation at the bottom provides a simplified pitch and rhythm notation of prominent musical elements. Above that, the figure displays Motion keyframes and time controls for prominent visual objects and processes.

The span shown in Figure 18.2 covers two chord sections. The first runs from 4:53 to 5:20, and the second from 5:20 to the resolution of the whole counterpoint section at 5:42. Thus 4:53, 5:20, and 5:42 are the highest-level structural points of this excerpt. The Max phasor associated with each chord section was mapped to the horizontal position of the wide green vertical bar. As a result, it gradually moves from right to left over the time span of a chord section. It moves quickly with exponentially accelerated motion back to a rightward position at the start of each new chord section, providing a heavily emphasised arrival point. Note that this jump to the right is of lesser magnitude at 5:20 than at 5:42. That is, the larger leap is reserved to demark the strongest point of arrival: the resolution of the whole section.

From 5:10–5:12, there is one deviation from this, where the wide green bar smoothly and momentarily accelerates to the left and then back to the original linear trajectory. This was actually an error in the data transfer from Max to Motion, but I felt that it provided an effective fluid response to the “high synth” gesture occurring at this point, and also prepared the perceptual downbeat at the start of measure four. I perceive a passing of gestural energy between elements here: brightness, OptiNelder starting angle and membrane controls modulate together in parallel with the high-synth gesture. Hierarchically, these elements provide shifts in the details of the visual texture rather than changes in the higher-level organisational elements (such as positions of the rectangles), thus paralleling the decorative or ancillary-gesture nature of the high-synth element. These visual modulations stabilise on the high note of that gesture. That high note is also the starting point of the left-and-return deviation-gesture of the wide-green rectangle. This gesture in turn is “answered” by a narrow-green line accelerating to the left and joining the wide-green line, with the sounding of a plate bell.

The keyframes for the narrow green rectangles were derived from the data that also generated the plate bells and bass notes. In this case, the exponentially accelerated horizontal motion of a green rectangle culminates in a note, providing a kind of visual anticipation of the note event. However, I often heavily edited the actual horizontal-location value of the keyframe to create horizontal alignments of these rectangles with other graphical elements, particularly in ways that might aid in the balance or cohesion of the image or provide emphasis, such as by having two elements landing in the same location.

As examples of the former, at 4:53 the left-most thin-green line aligns with the right-most edge of the orange membrane; at 5:04, the left-most thin-green line aligns with the third fold (from the left) of the orange membrane. As an example of both balance and emphasis considerations working together, at 5:06 the right-most thin-green line has leapt a considerable distance to a position right on the edge of the orange membrane, corresponding to a musical point of relative emphasis – the accented note shown at the start of measure three. As an example of horizontal positions corresponding to create emphasis, at the chord-section change at 5:20, the wide-green line leaps to the position of the left-most thin-green line.

Compositionally, from a high-level structural perspective, the details of each chord-section should contribute on the whole to the culmination of that chord-section and to preparing the transition to the next. We can see how smooth articulations of a continuum can contribute to this by looking at the membrane behaviour in the build of tension to 5:42. At 5:31, I manipulated the membrane keyframes to result in a horizontal line appearing mid-screen in the white membrane. This provides a strong, momentary sense of visual stability corresponding to the accented note in measure six. This note initiates a period of higher note density and considerably higher pitch ambitus building to 5:42. During this passage, the membrane oscillates up and down in an accelerating fashion. This culminates at 5:36–5:45, where the membranes for the first time fold up on top of themselves in many layers, evoking a sense of previously pent-up energy expressing itself in an almost spring-like build of tension and release – paralleling the musical energetic profile building to the peak note of the section (5:42). This can also be seen in the keyframe data in Figure 18.2, where the relatively large upward change in one of the membrane controls begins to pull back just before the final rightward-leap of the wide-green rectangle.

The elements described in these examples all entail the interlinking of detail, mid- and high-level organisation through coordination of both event-points and continuums. Audio and visual events, gestures, and fluidity work together in interdependence to shape our experience of time. This passage originated in audiovisualised relationships established from a VCMN origin. I then interceded, sometimes significantly, to clarify the counterpoint, create or refine gestural linkages between the mediums, establish visual alignments for emphasis, and shape tension contributing to mid-scale audiovisual structures.

Conclusion

The Estuaries series, and Estuaries 3 in particular, have served my intent to help develop and clarify the idea and practice of gestural and fluid counterpoint. VCMN and Nodewebba helped in this process. However, as expected, using VCMN for audiovisualisation did not generate useful audiovisual counterpoints in these works so much as serve as a starting point and source of surprise from which I could work heuristically to create counterpoint solutions that I found perceptually satisfying.

The OptiNelder filter, besides demonstrably providing a wide range of visual potentials, also served the search for a fluid counterpoint. First, the creation of the input to the filter (choice of video or structuring of images from basic elements like rectangles and membranes) could readily be used to determine high- to mid-level structural features. OptiNelder processing would then contribute more towards detail elements of the structure, as shown in the Estuaries 3 example.

At the same time, OptiNelder supported a dialogue between event/object focus and continuum focus. In the Estuaries 3 example, an initial scene comprising discrete objects (rectangles and membranes) was “blurred” through OptiNelder processing – intermingling the boundaries of discrete objects and providing overall textural features that could be expressively modulated.

The fluidity of some materials in the Estuaries 3 example (textures, membranes, and their articulations) may function in the counterpoint in part due to the clarity provided by their interplay with relatively discrete points of arrival (notes, objects, exponential-movement preparing/anticipating events) in other materials. This raises questions for further consideration: to what degree can one move towards purely fluid materials and maintain enough clarity in articulation to have a sense of ordered relations? How “light” can articulation of a continuum be and still provide sufficient perceptual impact to establish counterpoint?

In the Estuaries 3 passage examined, there are many note-to-motion correspondences. These help glue the mediums together perceptually but are of the simplest kind of relationship that could be established. The question of when image and sound events/gestures can be timed independently – or to what degree there can be independent audio or visual “voices” (in the counterpoint metaphor) – is still difficult to assess. In the Estuaries 3 example, independence among parts could be high as long as careful attention was applied to structural hierarchy and the relative perceptual weight of events: more important temporal events were reinforced by alignment of multiple parts, and could be emphasised by a wider leap in approach (be that a musical leap or a visual leap). Between such events, relationships could be freer. This is not a surprise; it is a basic principle of traditional Western musical counterpoint.

The range of artistic possibilities in audiovisual counterpoint remains immense – and perhaps ultimately resistant to a comprehensive formal explanation. From that perspective, audiovisualisation-assisted composition provides one valuable means for exploring this range and generating creative outcomes. In this case, sensitive artistic perception remains essential for guiding selection and adaptation of algorithmically generated materials to achieve the delicate task of balancing unification and independence of materials.

Bibliography

- Battey, B. (2004) Musical Pattern Generation with Variable-Coupled Iterated Map Networks. Organised Sound. 9(2). Cambridge University Press. pp. 137–150.

- Battey, B. (2011) Sound Synthesis and Composition with Compression-Controlled Feedback. Proceedings of the International Computer Music Conference, Huddersfield, UK.

- Battey, B. (2015) Towards a Fluid Audiovisual Counterpoint. Ideas Sónicas. 17(4). Centro Mexicano para la Música y las Artes Sonoras. pp. 26–32.

- Battey, B. (2016a) Creative Computing and the Generative Artist. International Journal of Creative Computing. 1(2–4). Inderscience Publishers. pp. 154–173.

- Battey, B. (2016b) Nodewebba: Software for Composing with Networked Iterated Maps. Proceedings of the International Computer Music Conference, Utrecht, Holland.

- Bornhofen, S.; Gardeux, V.; Machizaud, A. (2012) From Swarm Art toward Ecosystem Art. International Journal of Swarm Intelligence Research. 3(3). IGI Global. pp. 1–18.

- Choi, T.J.; Ahn, C.W. (2017) A Swarm Art Based on Evolvable Boids with Genetic Programming. Journal of Advances in Information Technology. 8(1). Engineering and Technology Publishing. pp. 23–28.

- Dos Santos, S.; Brodlie, K. (2004) Gaining Understanding of Multivariate and Multidimensional Data through Visualization. Computers & Graphics. 28(3). Elsevier. pp. 311–325.

- Greenfield, G.; Machado, P. (2015) Ant- and Ant-Colony-Inspired ALife Visual Art. Artificial Life. 21(3). MIT Press. pp. 293–306.

- Hutt, M. (2011) Nelder-Mead Simplex Method [C-code]. Version 1 March. Available online: https://github.com/huttmf/nelder-mead [Last Accessed 18/09/27].

- Ikeshiro, R. (2013) Live Audiovisualisation Using Emergent Generative Systems. PhD Thesis, University of London, Goldsmiths.

- Kochanek, D.H.U.; Bartels, R.H. (1984) Interpolating Splines with Local Tension, Continuity, and Bias Control. Computer Graphics. 18(3). ACM SIGGRAPH. pp. 33–41.

- Kolda, T.G.; Lewis, R.M.; Torczon, V. (2003) Optimisation by Direct Search: New Perspectives on Some Classical and Modern Methods. SIAM Review. 45(3). SIAM. pp. 385–482.

- Levarie, S.; Levy, E. (1983) Musical Morphology: A Discourse and a Dictionary. Kent, OH: The Kent State University Press.

- Lewis, R.M.; Torczon, V.; Trosset, M.W. (2000) Direct Search Methods: Then and Now. Journal of Computational and Applied Mathematics. 124(1–2). Elsevier. pp. 191–207.

- Love, J.; Pasquier, P.; Wyvill, B.; Gibson, S.; Tzanetakis, G. (2011) Aesthetic Agents: Swarm-Based Non-Photorealistic Rendering using Multiple Images. Proceedings of the International Symposium on Computational Aesthetics in Graphics, Visualization, and Imaging. 1. pp. 47–54.

- Machado, P.; Pereira, L. (2012) Photogrowth: Non-Photorealistic Rendering through Ant Paintings. Proceedings of the Fourteenth International Conference of Genetic Evolutionary Computing.

- Nelder, J.A.; Mead, R. (1965) A Simplex Method for Function Minimization. The Computer Journal. 7(4). Oxford Academic. pp. 308–313.

- Stanimirović, P.; Petković, M.; Zlatanović, M. (2009) Visualization in Optimisation with Mathematica. Filomat. 2. Faculty of Sciences and Mathematics, University of Nis, Serbia. pp. 68–81.

- Wright, M.H. (1996) Direct Search Methods: Once Scorned, Now Respectable. In: Pitman Research Notes in Mathematics Series: Numerical Analysis 1995. Harlow, UK: Addison Wesley Longman. pp. 191–208.