How Do Scientists Think?

You have lost your keys again. You just had them, and you needed to be out the door five minutes ago. The first thing you do is try to remember where you saw them last. Next to the door? You go to the door and then to the kitchen counter, but no luck. Pockets in the last coat you remember wearing? Nope. No one is home but you; they have to be somewhere.

Finally, you retrace your steps all the way out to the garage and find them sitting right where you left them: up on the roof of the car. You must have put them there when you got the last package from the back seat! If you are short, you might never have found them until someone taller came into the garage and asked what your keys were doing on the car roof.

A scientist is someone who looks at the facts and behavior of the world in an orderly way, then uses that knowledge to solve a problem or to predict something. (There are niceties here about what part of this is science versus engineering, but more on that later.) In the lost keys example, you are taking a scientific approach. You have a list in your head of the places you normally put your keys. You also have a set of assumptions and knowledge about the world that you rarely have to spell out for yourself or anyone else—until a problem arises. Then you slow down and start to recite your assumptions and habits: “I know I opened the door, so I had them then. I put down the groceries, and I had on the green coat.” You decided to check those places and, when there was no success, concluded that because keys do not move on their own, if you were to revisit all the places you had been since you last saw your keys, you would find them.

Scientists call this process the scientific method. First, you collect data. Here the data would be all the times you’ve observed yourself, without thinking about it, putting your keys somewhere when you come into the house. Next, you develop a hypothesis—a best prediction given the data you have (my keys are missing but are probably by the door). Finally, you test the hypothesis (by looking at the door) and reject it (because the keys aren’t there) and continue to develop new hypotheses until such time as the facts fit the new hypothesis (you find your keys). Once a hypothesis proves to a reasonable degree to fit the way the world works, it becomes a theory. We could develop any hypothesis we wanted to about where our keys have gotten to. Testing our hypothesis, though, and elevating it to a theory, requires observing how the world actually works. For example, you presumably have never dug a hole in the yard, dropped your keys in, and covered them up. Thus, there is no need to look in the yard (but there might be, if you share your space with intrepid small children who just discovered the concept of buried treasure).
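For readers who like to see a process written out, here is a minimal sketch of the key hunt as a loop of hypothesis, test, and rejection. It is an illustration only: the function name `find_keys`, the list of places, and the made-up "world" in which the keys sit on the car roof are all assumptions invented for this example.

```python
# Hypothetical illustration: each hypothesis is a place the keys might be,
# ordered from most to least likely based on past habits (the "data").
def find_keys(hypotheses, look_in):
    """Test hypotheses in order; return (place, rejected) once one fits."""
    rejected = []
    for place in hypotheses:
        if look_in(place):          # the test: go and observe the world
            return place, rejected  # this hypothesis fits the facts
        rejected.append(place)      # hypothesis rejected; try the next one
    return None, rejected           # nothing fit; new hypotheses are needed


# Made-up world for the example: the keys are really on the car roof.
actual_location = "car roof"
guesses = ["by the door", "kitchen counter", "coat pocket", "car roof"]

found, rejected = find_keys(guesses, lambda place: place == actual_location)
print("Found the keys:", found)
print("Hypotheses rejected along the way:", rejected)
```

Notice that the yard never appears in the list of guesses. Deciding which hypotheses are worth testing at all is where knowledge of how the world actually works comes in.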

Most of us stick to a tightly controlled, rational approach when we need an answer quickly, but rarely analyze this process of developing a theory. So, if we are all scientists, why does science seem so alien to most of us? Part of it is because scientists need to know about a lot of specialized stuff. In other words, to make progress, scientists have to learn what others have discovered already about how certain parts of the world work. If you do not know about existing antibiotics and the best theories about how they kill bacteria, it’s hard to figure out how to search for a new one. If you do not know the physics of how an airplane works, you have to recreate more than 100 years of experiments on your own.

Another alien thing is the language. Often scientists invent new words for things to simplify the way they talk to others in their field. We all do it every day. Ten years ago, many of the items we are looking at in this book did not exist (and even now take some explanation—think about how many things you complain about every day, such as pop-up ads and home movie streaming speeds, that would have been mumbo-jumbo to you a few years ago).

Scientists have this problem in spades. Their fields change rapidly, and so most fields develop a whole language to describe ideas and help organize facts to make them easier to remember and understand. The trouble, too, is that if instead of referring to Twitter by its name, you said, “That web service with 140 characters where people send each other what they had for lunch,” you would be terminally uncool. Scientists, being people, also do not like looking uncool to their peers. Therefore, they learn to use their field’s jargon, and it’s hard for them to turn it off when they talk to nonscientists. Imagine how it would be for you if someone from 1945 landed in your living room and you had to explain the Internet or, even worse, Internet cloud services to him or her. It would be frustrating not to use any of the normal names for things, wouldn’t it? More fundamentally, though, in daily life we cannot question our assumptions as much as a scientist must. For instance, we get up in the morning, walk across the room presuming that gravity will keep us on the floor, and so on. Most of us do not spend our days in games of “what if” but pretty much believe what we see. We do not ask: What if gravity stopped working for five minutes? What would happen? What new things could I learn while the gravity was off? We believe what we see, and for many purposes this serves us well.

In this chapter, we give some examples of how scientists go about trying to observe and understand the universe. You will recognize many of the same skills you probably have been using to get the projects described in earlier chapters to work. Joan will be the tour guide through most of this chapter about how scientists think, but a lot of it is the same process hackers use to learn—with some twists. Later on, Rich weighs in on that part.

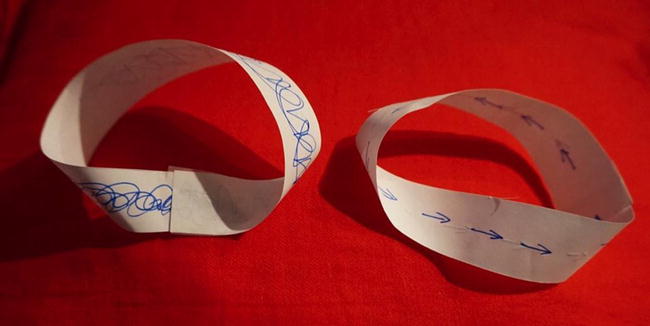

We See Only What We Believe

Often, we see only what we believe, a trait that scientists have to work to suppress. Try the following experiment: Cut yourself a strip of ordinary paper, say 11 inches long by about two inches wide. Put a half-twist in it and tape the two ends together as shown in Figure 13-1. You will now have a weird-looking ring known as a Möbius strip. Now, paper has two sides to it, right? Try drawing a line of arrows down the center of your strip. The arrows go all the way around (covering the entire strip, crossing over from what used to be one side to the other) with the arrows continuing to point the same way. You have created a piece of paper that has only one side! Is that possible? How can paper only have one side? But there it is—you have it in your hands. If you color one side before you tape them up, you can see that nothing magic happened—the scribbled-on side just meets the other side, as you would expect. Try coloring one edge. What happens? Now try cutting it in thirds. What happens? Is that possible? A scientist would find this exciting and would play with it for a while.

Figure 13-1. Möbius strips

This experience (presuming you have not seen a Möbius strip before) is similar to what happens when scientists see a piece of data that does not fit any existing theory. How can they broaden their theory to take in this new data? Do they have to come up with a new theory? Did you like the feeling of discovering what a Möbius strip could do, or did it make you vaguely uneasy? By the way, this phenomenon was discovered in 1858 by August Ferdinand Möbius, a German mathematician, and, at nearly the same time, by Johann Benedict Listing. It led to a burst of new mathematics that continues to be important to theoretical physics today.

Different Ways of Doing Science

Scientists can be split into three very broad types: observers, experimentalists, and theoreticians. In addition, some scientists curate collections or protect rare resources, as you will see in a later section. In practice, most are a bit of each type. As the names would imply, observers look at the world around them without trying to change anything. Experimentalists try to change something about the world in a controlled way to see what happens. Theoreticians, on the other hand, look at the data collected by observers and experimentalists and (usually using math and computers) try to decide what is going on and to predict what will happen in the future (if a particular experiment were to be performed, for instance).

In the example about finding your keys, you were a theoretician when you stopped to think about where your keys might be and why. Then you defined some observations and experiments that needed to be done to find them. All types of scientists have to be careful observers of the world. They must be very conscious of their assumptions so that if they make a mistake, they do not assume right away that they’ve discovered something new. (Well, OK, they might get excited for a while, but then they calm down and start paying attention again.) It can be pretty embarrassing if a scientist announces a “new discovery” to the world, only to find out it was all a mistake. Scientists hate it when that happens.

How do scientists check that they have not made mistakes? They first double-check how they made each observation—was anything unusual going on that day? Could anything have affected their measurements? If you buy shoes on a hot day, and your feet tend to swell, you know you should keep that in mind when choosing a size. In the same way, scientists also have to allow for outside effects and check their experimental process, conditions during the experiment, and whether they might have missed something.

If you are balancing your checkbook and the amount of money you think you have does not match what the bank says you have, a few things could have gone wrong. You may have added or subtracted wrong, you may have written down the amount of a check wrong, or you may have forgotten to write in a check altogether. As you go through figuring out what happened, typically you first check your addition and subtraction. If they are not the problem (or if fixing your math made the problem different or worse), you go back to the original receipts for the month to see what was going on. So, first you check the process you used to get to your result (your bank balance), and then you start to check your errors of omission—the things you left out or wrote down wrong. Eventually, you get everything to balance out (hopefully, since even banks make mistakes).
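The two-stage check described above (redo the arithmetic first, then hunt for items left out or written down wrong) can be sketched in a few lines of Python. The function name `reconcile`, the check numbers, and every amount here are made up for illustration.

```python
from decimal import Decimal


def reconcile(opening, ledger, bank_transactions):
    """Compare a hand-kept ledger with the bank's record of transactions."""
    # Step 1: check the process by redoing the addition and subtraction.
    my_balance = opening + sum(ledger.values(), Decimal("0"))
    bank_balance = opening + sum(bank_transactions.values(), Decimal("0"))
    # Step 2a: errors of omission (the bank has it, the ledger does not).
    missing = sorted(set(bank_transactions) - set(ledger))
    # Step 2b: amounts written down wrong (same item, different amount).
    mismatched = sorted(
        item for item in ledger
        if item in bank_transactions and ledger[item] != bank_transactions[item]
    )
    return my_balance, bank_balance, missing, mismatched


# Hypothetical month: check 102 was copied down wrong, check 103 was forgotten.
ledger = {
    "check 101": Decimal("-40.00"),
    "check 102": Decimal("-25.50"),
    "deposit": Decimal("200.00"),
}
bank = {
    "check 101": Decimal("-40.00"),
    "check 102": Decimal("-52.50"),
    "check 103": Decimal("-15.00"),
    "deposit": Decimal("200.00"),
}

my_balance, bank_balance, missing, mismatched = reconcile(
    Decimal("500.00"), ledger, bank
)
print("My balance:", my_balance, "Bank balance:", bank_balance)
print("Never written down:", missing)
print("Written down wrong:", mismatched)
```

The sketch quietly assumes the bank's record is the correct one. As noted above, that is not guaranteed, which is why the original receipts still matter.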

Scientists do not get a handy statement from the universe each month telling them what their answers should be; instead, they check each other—a process called peer review. When a scientist is pretty sure he has a good observation or experiment, he writes a paper about it. Then he sends that paper out to a scientific journal. The editor of that journal then has other scientists review it (often anonymously). These reviewers look carefully both at how the experiment or observation was done (like checking addition in our checkbook example) and whether the authors forgot anything. Then, if the conclusion about how the world works is different than people thought it was before, the reviewers think hard about what else may have gone wrong. Reviewers will make suggestions to the editor (including, sometimes, having the author re-do the experiment, analyze data in alternative ways, or do other additional work). If everyone agrees that the experiment was done correctly and it does seem that something new was discovered, the paper is published in a science journal. This process is not perfect, and, people being people, sometimes an innovative idea does not make it into print, or a bad one does. By and large, it serves science well as a way of questioning assumptions and getting more than one pair of eyes on a particular problem.

Note This continuous questioning also leads to a culture that, broadly speaking, tends to encourage some degree of nonconformity and doubts about authority. If you visit any science department at a major university, you are highly unlikely to see a suit or tie. This is true, of course, for laboratory scientists who wear lab coats (or more esoteric protective gear). However, many scientists just use a computer, whiteboard, and paper all day long and so have a tendency toward jeans and sweatshirts. More significantly, if you try to tell scientists what to do, most of the time they will decide whether you’re right, and, if not, will ignore you. Scientists expect this from each other, and when someone with different norms and expectations attempts to collaborate with them, oftentimes misunderstandings occur. But perhaps scientists are not so different from the rest of us. What would your response have been if early in your search for your keys your spouse had asked, “Looked on the top of the car, honey?” As you might imagine, this is another thing that scientists share with the hacker culture.

Observing

In many ways, the oldest activity in science is observing how the world works and then developing a story about why it works that way. Yet scientists, like the short person who could not have seen the keys on the car roof, sometimes cannot solve a problem with existing instruments in their own fields. They have to wait until they figure out how to invent a new instrument, or until someone in a different field happens to turn something built for a different purpose into a potential solution to their problem. (Or they wait until a new hypothesis is put forth that explains a set of observations that did not make sense before.) This is why scientists want to know a lot about how precise a measurement is that a particular instrument makes. If you want to see how many fleas are on a dog, a picture of a dog a mile away showing the whole dog as a dot is not very useful. It’s true that you counted zero fleas on the dog in the picture; it’s also meaningless to say so.

Some scientists have to deal with observing things that happened a long time in the past, or reconcile observations taken with instruments of varying quality, such as rainfall measurements taken in 1600, with ones made today. Climate change researchers need the longest possible record of weather to find out how much warmer the world is now than in the distant past. However, thermometers have only been around for 400 years or so, and good ones for far less time than that. Some people have it even worse. Astronomers must always view their quarry at unthinkable distances and come up with ways to predict behavior that might take millions or billions of years to occur. How do they make progress in the face of such literally astronomical odds? Astronomer Stephen Unwin guides engineers developing telescopes that will fly in space to look for planets around other stars. New discoveries these days usually require large and complex telescopes to see the subtle effects, for example, of a small planet orbiting a big, distant star.

Astronomers as a community have to argue and compete to figure out what equipment should be built, what astronomy is best done in space, what is best done on the ground, and so on. Because very few new, professional-grade telescopes are built each year, either on Earth or in space, scientists have to know what kinds of experiments they want to do long before anyone starts polishing mirrors. It’s like deciding what kind of new car your family will buy: some people might want a two-seater to zip around in, but you might need to haul things and so you get a truck instead. No matter what sorts of telescopes are ultimately built, astronomers cannot alter the sky—they can only observe it. Or as Unwin puts it, “I have to take what sky gives me.”

Astronomy is largely an observational science. This means that astronomers cannot change anything in the sky and see what happens to validate their theories about how the universe works. What they have to do instead is figure out ways to make the effect they are looking for at any given time stand out clearly. Once they have all that figured out, astronomers still have to do a lot of thinking to interpret what they are seeing and pick the right things to look at in the first place. For example, Unwin explains that if you were looking at stars at all different distances and trying to figure out how bright they were, the distance would distort this measurement because closer stars (like one flashlight closer to you than another) would appear brighter. Instead, you would use your knowledge of the sky to find a cluster of stars that are all more or less the same distance from the Earth. Then you can look at all the stars and see whether there are more bright ones than dim ones among stars all at the same distance. “You learn how to read them,” Unwin says.
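The flashlight effect Unwin describes follows the inverse-square law: light from a source spreads over an ever larger sphere, so the brightness we measure falls off with the square of the distance. The short sketch below puts numbers on that. The luminosities and distances are arbitrary, made-up values, and the function name is just for illustration.

```python
import math


def apparent_brightness(luminosity, distance):
    """Inverse-square law: light spreads over a sphere of area 4*pi*d^2."""
    return luminosity / (4 * math.pi * distance ** 2)


# Two identical stars (same true luminosity), one twice as far away.
near = apparent_brightness(1.0, 10.0)
far = apparent_brightness(1.0, 20.0)
print("Near star looks brighter by a factor of about", round(near / far, 2))

# Two different stars in one cluster, at the same distance: now the ratio of
# measured brightness matches the ratio of true luminosity.
cluster_ratio = apparent_brightness(3.0, 50.0) / apparent_brightness(1.0, 50.0)
print("Within the cluster, measured ratio is about", round(cluster_ratio, 2))
```

Doubling the distance makes an identical star look about four times dimmer. Within a cluster the distance cancels out, which is why comparing stars at the same distance tells you something about the stars themselves.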

One of the cornerstones of science is the ability to do an experiment more than once and get the same result, a trait scientists call reproducibility. If an experiment can be reproduced, the outcome is less likely to have been a freak result due to some weird circumstance or something measured incorrectly, which can happen in science just like anyplace else. Because astronomers cannot start the universe over and make the exact same measurement twice, how can they know their data is reproducible? They make measurements multiple times; of course, if the sky is changing, astronomers have to do the best they can with data from other, similar objects in the sky. For that reason, astronomers, perhaps more so than other scientists, can’t take any one piece of data by itself but must view all the known data in context. That context itself changes continuously as more data is collected. This raises the question of what happens if a piece of data is measured incorrectly, due to equipment failure or human error. Does it matter?

Unwin says, “Because you can’t actively do experiments, it’s very hard in astronomy to build a coherent picture based on a single event or single piece of data. So, testing of a theory requires collecting more data, which will either verify or contradict that theory.” In other words, it’s back out to the telescope dome for the observers to see whether they’ve discovered something new or just made a mistake. A balance has to be struck between the possibility that an observation that does not fit established theory is a new discovery and the chance that the data is just plain wrong. “Bad data is worse than no data” is a truism in astronomy (in all science, really) because bad data can waste your time and raise your hopes of a new theory when you may just have recorded some unfortunately timed interference.

Curating and Protecting

Science careers can be all-consuming. As a result, a scientist may find himself explaining to his wife why he needs to make a late-night foray to count rare toads splashing their way down a rain-lashed road. Some avoid these explanations (or, more likely, lengthen them enormously) by marrying another scientist. Husband and wife Bob Cook and Mary Hake, currently working at Cape Cod National Seashore in Massachusetts (shown in Figure 13-2), have made studying and advocating for endangered species their collective life’s work.

Figure 13-2. Cape Cod National Seashore

Cook and Hake work for the National Park Service. They live and have brought up two daughters far out on the Cape, where just a couple of miles of land separate the Atlantic from Cape Cod Bay. The rhythm of Hake’s life is driven by the migrations of the piping plover, a small, endangered shorebird that nests on undeveloped beaches. The birds depart in late August or September and return to Cape Cod from their winter migration around St. Patrick’s Day. During the six months the plovers are around, Hake is as frantic as she would be during a visit by a picky houseguest. The plovers lay their camouflaged eggs on the sand, vulnerable to being accidentally crunched by a visitor’s foot (or a dog’s paw). So, the first step is to fence off the beach each year with what Hake calls symbolic fencing—just a line of string intended to warn people off, but not actually to stop them. Once the plovers start laying eggs, “there are never enough hours in the day,” Hake says, because the birds need protecting along 20 miles of shoreline.

Once the eggs are laid, she places wire boxes over each nest, open on the bottom and held down by stakes pounded into the sand. These predator exclosures have openings large enough for an adult plover to come and go, but they are too small for foxes or larger predatory birds. No subtle, expensive instruments here: Hake’s tools are a shovel, chicken wire, stakes, and a sledgehammer, which she wields with impressive gusto.

Just as important as the aerobic part of her job is her presence on the beach in a National Park Service uniform, explaining to people why they need to keep their dogs away from the plover nesting area. She realizes that she typically has only one to three minutes before people lose interest and wander off. If the time of year is right, she shows them a plover chick: “If someone has an off-leash dog, I show them how vulnerable that chick is.” Once they see an actual cotton ball of a chick, she says, their dog usually goes on a leash thereafter. “We’ll get an injured gull on the beach, and 20 people will gather around it,” she says, but because the plovers are out of sight, a chick killed by a dog doesn’t get the same sympathy.

Hake says that it’s important not just to inform people about rules, but also to empower them so that they know they can make a difference. She says her greatest frustration is the small number of visitors who resist her attempts to protect nesting plovers. (The extreme version of this attitude manifests itself on local cars sporting bumper stickers that read “Piping Plover: Tastes Like Chicken.”) Educating the public is important to her, but she realizes that if a person is 50 or 60 years old, their attitudes are harder to change. “But that doesn’t stop me from trying,” she says. “I do change some, and it’s so rewarding,” as when local kids excitedly tell her about how they are watching out for plovers. “That’s what makes our salary priceless.”

Her husband Bob Cook is a PhD scientist who develops reports and statistics about the Cape’s fauna, with stints before that having taken him as far away as American Samoa. His is far from a desk job, though: it can involve going out on rainy nights to close one of the park’s secondary roads to protect the spadefoot toad, which is listed as threatened by the state of Massachusetts. Rainy nights are a particularly good time to collect information on the number, sizes, ages, and sexes of the toads, which are brought out by what they apparently regard as fine travel weather. “What I end up doing,” Cook says, “is collecting pilot data on subjects of interest that may have bearing on management issues.” This data can be used to entice graduate students from universities to come and do a more thorough project for their thesis, and spend more time than he can realistically on any one issue.

How do Hake and Cook think about the need to save species? They do not really think of it in those terms. Cook says that in the national parks, “We’re trying to preserve not necessarily individual populations or individual species but the ecosystem and ecological processes that go with it.” Cape Cod, for instance, has various rare environments, such as its deep freshwater kettle ponds, salt marshes, and the like. The reality, though, is that as soon as you build roads and parking lots in a national park, you have impacted natural processes within the park. Even when the environmental judgments do not involve people, difficult management issues can arise. For example, Cook says, “We have a habitat that was altered 100 years ago that one group of people are trying to restore, but the complicating factor is that some state-listed rare species are making use of that habitat in its present condition.”

To decide what to do in cases like that, he would consider how abundant the two different competing species are within the park. He might expand his analysis beyond the boundaries of the park to a regional and state level, taking into account lots of factors. “The truth is, with landscape management, any decision you make to do something, even if the decision is to not do anything, is going to affect some population.” If you have a species that builds nests in fields, you have to keep in mind that, over time, wild fields will become shrubs and then woodlands. As the land goes through succession, it is occupied by one group of species and then by another. You may have to decide to sacrifice the field species to save woodlands, and your decision would depend on other fields and forest and how much of each remains available to any rare species.

Are all species created equal, then? For wildlife biologists like Cook and Hake, they are. However, for the general public, big, furry animals that can’t be missed when they stand in the middle of the road command more attention than, say, bugs. (Animals with a cuddly factor advantage are referred to as charismatic megafauna by biologists, with a bit of tongue in cheek.) Cook points out that most animals are small and spend most of their lives hiding because otherwise they would be easy pickings for predators. For an extreme example, imagine the lot of the endangered bug. Cape Cod, for instance, has an endangered species of beach beetle, which occupies the same habitat as the piping plover. It can be a hard sell to convince people who want to use vehicles on the land that a beetle and a small bird need saving as part of preserving the larger ecosystem.

Why are Hake and Cook spending their lives working to keep these creatures alive? Why not let endangered species die out? Cook says he wants to prevent extinctions caused by the impacts of industrialized human beings. There may be pragmatic reasons for this—sometimes a plant or animal has medicinal or other immediately obvious economic value, and extinction cannot be reversed. Beyond the pragmatic, though, Cook says that for many people, there is a spiritual and inspirational reward in being able to see animals and plants in their natural habitats rather than in a zoo. “Ultimately, the long-term survival of wildlife is not going to be based on squeezing the last animal out of a piece of land. It’s going to be based on educating people about the effects of their lifestyles on the natural world.” He hopes that people will become more aware of population growth’s effects so that they will make wise decisions about housing and transportation that are harmonious with the land. Perhaps the couple’s work will someday result in a world in which, as he puts it, “wildlife isn’t relegated to something that’s only in preserves, but wildlife is part of the world wherever one lives.”

Science Philosophy

As with every other human achievement, someone had to invent an orderly process of observing and explaining the world. The critical pieces of this process are the ability to question existing knowledge and to develop new explanations as inconsistencies are discovered. Someone somewhere had to dream up a system of keeping track of which facts could be used to draw which types of conclusions. In that respect, philosophers probably developed the very earliest pieces of the puzzle.

Suppose I say, “If I use an expensive shampoo, my hair will be gorgeous.” Does that mean that if my hair looks great, I must have used an expensive shampoo? Actually, no: advertising aside, I might just be lucky and have good hair days all the time. The statement did not imply that the only way my hair might look great was to use an expensive shampoo. Keeping track of what one can actually infer from observations underlies a lot of what scientists do. The example here has the form of what logicians call a hypothetical syllogism: I start with a hypothesis (if I use an expensive shampoo, my hair will be gorgeous), make an observation (my hair looks great), and draw a conclusion (therefore, I must have really splashed out on shampoo). However, as mentioned, I actually can’t draw that conclusion from the hypothesis and facts given. This particular version is a logical fallacy, usually called affirming the consequent: reasoning that might lead me to an incorrect conclusion.
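The shampoo argument can be checked mechanically by trying every combination of true and false and seeing whether the conclusion ever fails while the starting statements hold. The Python sketch below does exactly that. The helper names `implies` and `is_valid` are invented for this illustration. Logicians usually call the flawed pattern affirming the consequent and the sound pattern modus ponens.

```python
from itertools import product


def implies(p, q):
    """'If p then q' is false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion):
    """Valid means: no case makes every premise true and the conclusion false."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return False  # found a counterexample
    return True


# p: "I used an expensive shampoo"    q: "my hair is gorgeous"
rule = lambda p, q: implies(p, q)

# The shampoo argument: from (if p then q) and q, conclude p.
affirming_consequent = is_valid([rule, lambda p, q: q], lambda p, q: p)

# The sound direction: from (if p then q) and p, conclude q.
modus_ponens = is_valid([rule, lambda p, q: p], lambda p, q: q)

print("Great hair, therefore expensive shampoo:", affirming_consequent)
print("Expensive shampoo, therefore great hair:", modus_ponens)
```

The counterexample the loop finds is the lucky good hair day: the hair is gorgeous, the rule still holds, and yet no expensive shampoo was involved.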

These ways of looking carefully at cause and effect are thought to have first been written down by Aristotle about 2,300 years ago, in Classical Greece. It’s very easy to develop all sorts of cause-and-effect relationships if you’re a casual observer of nature—but scientists make mistakes if they’re not very careful about what has to be the cause of an observed effect and what might be coincidence. People have probably been doing experiments of one sort or another since well back into prehistory. Developing experiments that follow what we today understand as the scientific method, however, was first clearly articulated in print in Western society by Francis Bacon in the 1600s, and shortly thereafter by members of the Royal Society of London.

Officially founded on November 28, 1660, the Royal Society was developed for “natural philosophers” (forerunners of today’s scientists) to get together once a week and witness science experiments. Early members included Christopher Wren, Robert Hooke, Robert Boyle, and, later, Isaac Newton. Some credit Boyle’s vacuum pump as one of the first modern pieces of experimental equipment, and his discovery of what is now called Boyle’s law (the relationship between the pressure and volume of a gas at constant temperature) as one of the first experimental discoveries.

For centuries before the founding of the Royal Society, the ideas of ancient philosophers and religious texts were accepted without question, and it was considered heretical to actually observe nature and draw conclusions about causes of natural phenomena. Once it became acceptable to observe and measure the world directly and publish the results, science began to advance at a rapid rate.

Indirect Measurement

Sometimes scientists cannot measure what they want to measure directly, either because an event happened in the past and was not recorded at the time or because the thing they really want to know is hard to measure. Imagine that you have a black metal box with a powered light bulb inside. You cannot tell from looking at the box whether the light bulb is on, but if you put your hand on the box, you might well be burned if the light is on. However, if the box is hot, you cannot tell whether the light has just been turned off, and the box is still hot because the light was on for an hour. And if the box is cool to the touch, you have no way of telling whether the light was just turned on (and thus the box has not had time to heat up yet). Therefore, the temperature of the black metal box is not a perfect indicator of whether the light is on, but it is a generally accurate indicator.

If you could measure instead whether any current is flowing into the box, then you would know with far more certainty whether the light is on, because there is no appreciable time lag between the current flowing or not and the light turning on or off. Therefore, measuring current would be a better surrogate measure of whether the light bulb is on or off than feeling the temperature of the box. Using a surrogate measure means that you have to thoroughly understand the process that ties the surrogate to what you want to measure, or you might wind up with misleading data. Coming up with good surrogates is one of the key creative skills of a scientist and is critical in many fields. Clinical medicine uses surrogate measurements all the time. An electrocardiogram (ECG) measures different electrical voltages on your skin and then creates a squiggly line that is interpreted to see whether your heart is functioning well; ditto for electroencephalograms (EEGs) that measure brain function. Doctors take a long time to learn how subtle differences in these surrogate measures mean particular diagnoses for their patients.
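The black-box example can be sketched as a toy simulation. Everything numeric here is made up for illustration (the heating rate, the cooling rate, the 40-degree "feels hot" threshold), and the function names are hypothetical. The point is only to show how a surrogate with a time lag gives wrong answers right after the bulb is switched, while an idealized current reading does not.

```python
def simulate_box(bulb_schedule, heat_rate=2.0, cool_rate=0.05, ambient=20.0):
    """Return the box temperature (deg C) after each one-minute step.

    bulb_schedule: list of booleans, True when the bulb is on.
    The box gains heat_rate degrees per minute while the bulb is on and
    always loses cool_rate * (temp - ambient) to the room.
    All constants are invented for this sketch.
    """
    temp = ambient
    temps = []
    for bulb_on in bulb_schedule:
        heating = heat_rate if bulb_on else 0.0
        temp += heating - cool_rate * (temp - ambient)
        temps.append(temp)
    return temps


def guess_from_temperature(temps, threshold=40.0):
    """Surrogate 1: call the bulb 'on' whenever the box feels hot."""
    return [t > threshold for t in temps]


def guess_from_current(bulb_schedule):
    """Surrogate 2: current flows exactly when the bulb is on (idealized)."""
    return list(bulb_schedule)


def error_count(guesses, truth):
    """Count the minutes in which a guess disagrees with the real bulb state."""
    return sum(g != t for g, t in zip(guesses, truth))


# Off for 10 minutes, on for 60, then off for 30.
schedule = [False] * 10 + [True] * 60 + [False] * 30
temps = simulate_box(schedule)

temperature_errors = error_count(guess_from_temperature(temps), schedule)
current_errors = error_count(guess_from_current(schedule), schedule)

print("wrong minutes using temperature:", temperature_errors)
print("wrong minutes using current:", current_errors)
```

In this toy, all of the temperature-based mistakes cluster in the minutes just after the bulb is switched on or off, which is exactly the lag described above.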

Beyond What We Can See

Another way to make observations is by using measuring tools that can see what we normally cannot with our eyes. Anyone who has ever wondered how on Earth a dentist can tell that a dark blob on an X-ray is a cavity knows how hard it can be to learn to read a type of image that isn’t a normal photograph. For one thing, images like X-rays are not really pictures per se; they’re transmissive, which means things that block X-rays (like bones) appear bright, and things that let the X-rays through (like skin) appear dark. A shadow is the simplest type of transmissive image, although its brightness is the reverse of an X-ray’s: where light passes through, it’s bright; where light is blocked (by your hands making a dragon, for example), there is a dark shadow. A problem with transmissive images is that if, for instance, one bone is in front of another, the X-ray will show just one bright pair of crossed bones, which is not very useful if you are trying to see whether there is a crack at that very spot.

Other kinds of light we cannot see with our eyes let scientists gather data, too. A rainbow contains all the colors our eyes can see, from violet through blue, to green, yellow, and then red. If you keep going off the red side of the rainbow, you get to infrared light. Infrared energy is given off by just about everything—you cannot see it, but you can feel it as heat if it is strong enough (another surrogate measure). Infrared cameras show things that are warm as bright and things that are cold as dark. This allows scientists to measure the temperatures of objects they cannot easily check with thermometers, such as the tops of clouds. Infrared images of the Earth show clouds with cold, high tops in contrast to the warm ground or sea, allowing for good cloud images even at night. (By and large, higher cloud tops are colder, and probably imply bigger storms—more surrogate measures.) Infrared images of hurricanes and other weather systems allow meteorologists to make predictions that would have been impossible before the development of weather satellites.

Designing Good Experiments

Suppose you are lucky enough to be in a field in which you can do good experiments to determine how the world works. The word experiment brings up visions of bubbling beakers (and, in Hollywood, often maniacal laughter from a “mad scientist”). In real life, though, scientists have limited budgets, and often experiments are expensive to perform. Thus, it is pretty rare for someone in a lab to randomly try things to see what will happen. An experiment can involve months if not years of planning, not to mention a great deal of time finding someone to pay for it.

Normally, scientists will spend a long time figuring out what question(s) their experiments will answer. So how do scientists figure out which experiments they should conduct? A large part of science is posing questions. Very often, a good experiment will open more questions than it answers—and, to scientists, that’s a good thing. To design that great, field-opening, Nobel-prize-winning (well, OK, science-fair-honorable-mention-winning) experiment, you have to figure out what you are trying to learn and why.

The question you are asking (“Why did it snow more this year than last?”) has to be posed in a way that can be answered concretely. It is also important that others can repeat the experiment and get the same result. Different types of scientists have different problems trying to measure things when they do an experiment. For example, some types of physics experiments are very easy to do because our ability to measure relevant things is very mature. It is very easy to measure how much someone weighs at a given moment because accurate scales are widely available.

However, pity the poor medical researcher trying to figure out whether a new diet helps people lose weight. Not only are there a lot of things that affect how much a person weighs, but the test subjects will probably fib about what they actually ate, might exercise more than usual because they will know they are going to be weighed in front of other people, and so on. In other words, it is difficult in many areas of science (including medicine) to conduct what physicists would consider a controlled experiment. A controlled experiment is one in which only one thing changes at a time (the variable), and the variable varies in a way that is understood as well as possible—hopefully, in a way that can be measured accurately.

If you’ve ever tried to get a robot with a lot of components to work, you can appreciate that it’s hard to figure out why something that is supposed to be moving is not moving if you change several things every time you try a new fix. Better to leave all but one knob alone and try moving that, then go on to another, then maybe move a cable, and then turn the power on and off and start over.
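The one-knob-at-a-time idea can be written down as a short sketch. The robot arm, its settings, and the `arm_moves` rule below are all hypothetical stand-ins; the part that matters is that every trial starts again from the same baseline and changes exactly one setting.

```python
def one_change_at_a_time(baseline, candidates, works):
    """Try each candidate change alone, starting from the same baseline.

    baseline:   dict of settings for the current (non-working) setup.
    candidates: dict mapping a setting name to the new value to try.
    works:      function that takes a settings dict and returns True/False.
    Returns the names of the single changes that make the setup work.
    """
    fixes = []
    for name, new_value in candidates.items():
        trial = dict(baseline)      # fresh copy, so changes never pile up
        trial[name] = new_value     # change exactly one thing
        if works(trial):
            fixes.append(name)
    return fixes


# Hypothetical robot arm: it moves only if the power is on AND the cable
# is plugged into port "B". The gain knob turns out not to matter.
def arm_moves(settings):
    return settings["power"] and settings["cable_port"] == "B"


baseline = {"power": True, "cable_port": "A", "gain": 5}
candidates = {"gain": 9, "cable_port": "B", "power": False}

print(one_change_at_a_time(baseline, candidates, arm_moves))
# prints ['cable_port']
```

Had the gain, the cable, and the power all been changed at once, the arm would still not move, and there would be no way to tell which change helped and which one hurt.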

Scientists try to do the same thing, but sometimes turning only parts of the universe on and off is hard. Biologist Charles Mobbs is a researcher at Mount Sinai Medical School in New York City. Mobbs studies the link between being skinny and living longer by studying mice, which get old and fat in ways similar to humans (although a lot faster) and thus are good models to use for studies like this. Because many factors affect how long mice live, scientists working in fields like this learn to craft their experiments very carefully.

Mobbs says a good outcome is one from which you learn something, even if it’s not what you had in mind. Mobbs emphasizes carefully thinking through all the possible outcomes before the experiment starts. For example, he wanted to test a drug that might make mice lose weight (and, in the process, get some insight into how the drug works). First, he made some mice fat by feeding them a high-fat diet (doubtless they became the envy of their cousins, gnawing out a living on the other side of their Manhattan laboratory walls). Then he injected the drug into half of the obese mice. The other half were injected instead with a chemical that he knows does not make mice lose weight. (This removes the possibility that just giving them the injection is what makes them lose the weight.) Mobbs asks, “But you might say, why do we have to do that? Why not just inject the drug into obese mice and see if they lose weight?” Many unlikely things could go wrong, though—the caretakers could forget to feed the mice, for example, which would make them lose weight.

“Always do controls to pick up the things you didn’t think of,” he cautions. This type of comparison group is called a negative control. It helps analyze the cases in which the drug did work and makes it reasonably clear that the result is not just some sort of accident that would have happened anyway. If the drug failed to work, though, you might not have enough insight to know why. Did the drug not get to the part of the body where it needed to go, for example?

Therefore, it’s a good idea to have a positive control too. Maybe the drug should have worked, but something went wrong. In order to rule that out, you would divide the mice into three groups and inject one-third with the drug under study, one-third with something you know does not make mice lose weight, and one-third with something you know does make mice lose weight. No matter what happens, then, you would learn something—Mobbs’s definition of a good experiment. He concludes: “Controlled experiments are built into the way that a scientist thinks. I couldn’t do an experiment without a control any more than I could go to bed without brushing my teeth.”
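As a rough sketch of the three-group design, the snippet below deals a list of subjects into a test group, a negative control, and a positive control. The text does not say how Mobbs chooses which mouse goes where; shuffling the list first (random assignment) is a common practice and is an assumption here, as are the mouse labels, the group names, and the `assign_groups` helper.

```python
import random


def assign_groups(subject_ids, group_names, seed=0):
    """Shuffle the subjects, then deal them out evenly into the groups."""
    rng = random.Random(seed)        # fixed seed so the split is repeatable
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for position, subject in enumerate(shuffled):
        name = group_names[position % len(group_names)]
        groups[name].append(subject)
    return groups


mice = [f"mouse_{n:02d}" for n in range(1, 31)]   # 30 hypothetical mice
arms = ["test_drug", "negative_control", "positive_control"]

groups = assign_groups(mice, arms)
for name, members in groups.items():
    print(name, len(members))
```

Each mouse lands in exactly one group, and the groups come out the same size, so whatever happens to all three groups alike (a forgotten feeding, say) can be told apart from what the drug itself does.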

Rich’s perspective: Learning to do controlled experiments also enhances the learning process. The abundance of kits, tutorials, and example code available today enables you to start making things by only making one change and seeing what happens, rather than needing to learn everything about a subject before you get started. My generation is often derided for needing instant gratification, but I think there’s real value in being able to quickly see the results of what you’ve done, whether it works as intended or not, and act on that feedback. In this case, your hypothesis is that you have correctly understood how to do X. Being able to test that hypothesis as quickly as possible, by changing one thing in an otherwise working program, circuit, or mechanical assembly, allows you to either move on to the next topic or see where you have misunderstood and try again to understand.

For example, I tried to teach myself the C programming language in my first year of high school. I carried around books that were thicker than my arms, reading whenever I had the time to do so. These books failed to teach their subject because all the computers I used were Macintosh computers, in the days before Mac OS X, when the Mac operating system did not include a way to run basic text-based programs directly. The barrier to entry for creating a program on a Mac was high because in order to run a program, it had to have a graphical interface, which is a more advanced concept than what I was trying to learn. I could write some code and try to compile it, but in order to create a program that I could actually run, I would have to build up so much foundation that if it failed, it would be difficult to understand why.

In contrast, before that I had created web pages using the HTML language, and had found a few snippets of code that could make a page do interesting things in response to the user. These snippets, which were embedded in the HTML but looked and acted differently, turned out to be little bits of JavaScript. JavaScript is a language that looks and behaves similarly to C, but can function within the context of a web page in such small snippets that it’s possible not to recognize them as a language independent from the HTML that they’re embedded in. These bits of code are able to make changes to what is displayed on the page. I was able to start with a bit of JavaScript copied from another page, modify that code, and then see if it worked as expected just by reloading the page. With Mac OS X and the Arduino, I was eventually able to use C in an environment where I could start simply and build up.

Sometimes, though, doing orderly experiments is not possible. Referring to his earlier discussion about well-thought-out controls, Mobbs says, “In reality, though, you can’t actually do a lot of science that way.” Science can’t really proceed without what he characterizes as “fishing expeditions”—casting a wide net to make some real progress without knowing how to do a thoroughly controlled experiment in a new research area. Because the experiments are not controlled very well, if you find nothing, you may not learn a lot. But if you do discover something during a fishing expedition, you can push a field forward in a hurry. However, you still will need controlled experiments to verify any potential findings.

The classic fishing expedition example Mobbs offers is the discovery of the antibiotic streptomycin. A specialist in the biology of soil-dwelling microscopic life, Selman Waksman got the idea that because mold lives in soil in successful competition with faster-spreading bacteria, mold must be producing a chemical that kills off competing bacteria. He sent his students (including Albert Schatz, whose contribution was later controversial as noted in the next paragraph) off to various places to find mold that produced materials poisonous to bacteria. Finally, one day the group discovered one mold that killed off the bacteria that caused tuberculosis.

This was important because the other antibiotics available at the time could kill only certain kinds of bacteria (called gram-positive), and the bacterium that causes tuberculosis did not respond to them, so there was no defense against it. These fishing expeditions landed a big catch indeed and saved many lives. In a somewhat controversial honor, Waksman was awarded the Nobel Prize for the discovery, but many thought others who helped troll for the fish, notably Schatz, should have gotten some of the recognition as well.

Developing a Theory

The word theory has developed a somewhat different common meaning than the one scientists mean when they use it. For most people, theory and guess mean the same thing. However, to a scientist, theory is as high a level of certainty as is possible. Scientists are trained never to think of anything as absolutely correct (or not). They are trained to think of any body of knowledge as “the best information we have now.” After all, it is their job to question and to move the line between what we do and do not know.

Therefore, at any given time, there are the best theories that constitute biology, physics, and so on. There is nothing else. Scientists cannot magically check the back of the universe’s book to see the answers. Therefore, they continually question their theories to see how they can prove various parts wrong or incomplete and thereby move the frontier forward. This is the point that is alien to most of us, but fundamental to science, and is the point at which our looking-for-lost-keys example begins to fail us.

Scientists never feel they know an absolute, true-for-all-time answer about how some part of the world works. If they did, they would be out of a job. However, the best theory in a field has been questioned and tested and turned this way and that by the smartest people around. That means it is most likely right. Guesses, newly invented theories, and other completely new ways of explaining the world need to be tested in the same ways as the currently accepted theory, and any new hypothesis must be shown to explain the world better than the existing theory.

Even better, a good theory has predictive power: we can say that if our theory is true, we can go out and look at thus and such and it should be a certain way. If that works out, our theory is stronger (and the new theory will supplant the old). Note that this method requires a culture of openness, sharing of data, and disclosure of limitations of any observation to work. It also means that no one has the ability or right to declare something true—the data and the observable world have to support the theory.

Somehow we have to guide experiments and all that data-taking. We can take all the data we like, but the exciting part comes when we can predict what the next data should be. Will it be a warm or dry summer? If we measure the number of birds along a stretch of coastline after wetlands have been restored, exactly how many more should there be ten years from now, and why? If we turn the biggest telescope ever developed on a particular area of sky, how many stars will we see? Scientists develop a hypothesis to explain things they have seen and to predict what they should see when they do new experiments and make new observations.

A hypothesis, loosely speaking, is a scientist’s best estimate about how things work, but it probably has not been tested yet by seeing whether it fits data that was not available when the hypothesis was developed. Scientists develop experiments or plan new observations to test and refine a hypothesis into a theory. Some fields—like seismology, the study of earthquakes—have particular difficulties in testing their hypotheses. Because earthquakes are (fortunately) few and far between, it takes a long time for any given hypothesis to be proven correct (or not). Some scientists can use an animal to model a human being. Just like an architect creates a little model of a house before building one, scientists have a variety of ways to test theories when it is not feasible to experiment directly on their ultimate subject. For example, medical researchers like Mobbs use mice to test the effects of drugs in order to avoid exposing humans to possible toxic effects. But even experiments on mice are usually approved by an ethics panel at the university or hospital in question, because no one wants to sacrifice any living thing without good reason.

The other way to test a hypothesis is to develop computer models, or simulations. Meteorologists take data all over the world and feed it into big computer programs that have been developed to model the physics, chemistry, and history of weather all over the globe. Data is fed into these programs all the time, and periodically new theories about how the oceans and atmosphere work (or bigger and faster computers) lead the models to be tweaked to be more accurate as time goes by. However, to build a computer model, the underlying science must be understood pretty well. Forecasting weather depends on computational fluid dynamics (CFD) models. We understand fairly well what happens if we heat a little cube of air, add moisture to it, and so on; that kind of physics is easy to measure and analyze. What gets complicated is trying to understand what happens when you have a gazillion little cubes of air piled next to one another. The air is also being heated, cooled, and humidified as it interacts with water, lakes, trees, desert sand, and so on. What happens when one little cube of air has some sun heating it and another does not? Computer models divide the Earth’s atmosphere (or a part of it) into many, many, tiny cubes of air. Then, one cube at a time, these giant computer programs mix “real” data (from weather balloons, ground stations, and so on) with predictions to forecast how warm or cold, wet or dry, each of those cubes is going to be and whether wind and clouds will flow through them. Over time, steady improvements in computers (these weather models take a lot of memory and processing), as well as more robot measuring stations in more places, have improved these models (and therefore improved forecasts).
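To make the "little cubes of air" picture concrete, here is a deliberately tiny sketch: a single row of nine cells that swap heat with their neighbors, with the sun warming only the middle one. This is nowhere near a real CFD weather model. The `step` function, the mixing fraction, and the heating amount are all invented for illustration, and real models also track moisture, wind, pressure, and much more.

```python
def step(temps, sunlit, mixing=0.2, sun_heating=0.5):
    """Advance a row of air cells by one time step.

    temps:   list of cell temperatures (deg C).
    sunlit:  set of cell indexes currently warmed by the sun.
    mixing:  fraction of the temperature difference exchanged with each
             neighbor per step (kept below 0.5 so the toy stays stable).
    """
    n = len(temps)
    new_temps = []
    for i in range(n):
        # Closed ends: an edge cell has no neighbor outside the row,
        # so no heat flows across that boundary.
        left = temps[i - 1] if i > 0 else temps[i]
        right = temps[i + 1] if i < n - 1 else temps[i]
        change = mixing * (left - temps[i]) + mixing * (right - temps[i])
        if i in sunlit:
            change += sun_heating
        new_temps.append(temps[i] + change)
    return new_temps


cells = [15.0] * 9          # nine cells, all starting at 15 deg C
sunlit = {4}                # only the middle cell gets sun

for _ in range(20):
    cells = step(cells, sunlit)

print([round(t, 2) for t in cells])
```

Even in this toy, the warmth from the one sunlit cell spreads outward to its neighbors over time. A real forecast model does the same kind of bookkeeping, one cell at a time, but in three dimensions and for an enormous number of cells, which is why it needs so much memory and processing.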

There are always a lot of assumptions, though, as well as things that have to be averaged and estimated to make it plausible to run these programs even on the biggest computers. Professional meteorologists usually run several programs (with different underlying assumptions, strengths, and weaknesses) and then use their experience to combine the results into a forecast. Once you have a weather computer model you believe, you can also use it as the basis of experiments. You can start out with a weather model and add more effects to it so that it can make more accurate predictions. These models, however, are only as good as the data and assumptions in them, and for something that models the entire Earth, deciding what to include accurately and what to estimate requires some deep knowledge of physics and other fields.
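One very simple stand-in for "combining the results" of several models is a weighted average, sketched below. Real forecasters rely on experience and on far more sophisticated methods; the model names, the forecast values, and the trust weights here are all made up, and `combine_forecasts` is a hypothetical helper rather than anything from an actual forecasting package.

```python
def combine_forecasts(forecasts, weights=None):
    """Blend several model forecasts into one weighted average.

    forecasts: dict mapping a model name to its predicted value.
    weights:   dict mapping a model name to how much the forecaster
               trusts it; equal trust is assumed if omitted.
    """
    if weights is None:
        weights = {name: 1.0 for name in forecasts}
    total_weight = sum(weights[name] for name in forecasts)
    blended = sum(forecasts[name] * weights[name] for name in forecasts)
    return blended / total_weight


# Made-up high-temperature forecasts (deg C) from three hypothetical models.
forecasts = {"model_a": 24.0, "model_b": 27.0, "model_c": 30.0}
trust = {"model_a": 3.0, "model_b": 1.0, "model_c": 1.0}

equal_blend = combine_forecasts(forecasts)            # every model counts the same
trusted_blend = combine_forecasts(forecasts, trust)   # leaning on model_a

print(equal_blend, trusted_blend)
```

Giving more weight to the coolest model pulls the blended forecast toward its answer, which is a crude version of a meteorologist deciding that one program tends to handle a particular kind of weather better than the others.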

Scientists can and do disagree about what is important to include in computer models—disagreements that ideally result in experiments to measure the quantities in question, observe how they vary, and see the resulting effects on weather and climate when they do. Scientists have to spend a lot of time prioritizing what to go out and measure in these complicated situations. For many sciences, however, we are still learning so many basics that we really can’t yet develop a computer model, and we have to ask mice or yeast cells to stand in for us for a while longer. In all cases, though, a model is never perfect; but each model and experiment gets a little better than the last one, and gradually we learn more and more about how the world (or the universe) actually works.

Science at All Ages

I am often asked to be a science fair judge. One of the things I find most intriguing about this is seeing how students go about learning the scientific method. Because of the limited science and mathematics young students typically know, projects tend to be oriented mostly toward experimental science rather than theory. All too often, science fair projects come off as something more appropriate for a cooking show: The process takes precedence over asking new questions. Yes, you can measure some quantity with great care—but you need to know why you are measuring it. Nothing is more frustrating to a scientist judging a science fair than a well-executed project that appears to be an exercise in mixing chemicals with no obvious question answered by the student. Good scientists spend a lot of time posing questions (and then spend more time trying to see whether anyone else has already posed and answered these questions, referred to by scientists as “keeping up with the literature”). I always get excited when a student has asked a good question, even if it is imperfectly answered. I get even more excited if I discover that the student knows she has an imperfect answer! Learning to ask good questions and develop experiments to answer those questions is a key skill that is taught far too rarely. I try to start my young friends off as early as possible—all you have to do is let them ask questions, which is the essence of the hacker mentality, too.

Note: With the Internet, it is easier than ever to find materials to answer novel questions, as Rich has noted many times in this book. I will give the caveat here, though, that one has to consider the source carefully, because a lot of the science material online is flawed, particularly for controversial topics, and there are no hard-and-fast rules on how to tell whether a source is authoritative. The best bet is to take a look at the author’s qualifications and ask, just as you would in real life, “Who is this guy?” (An academic scientist? Does the source claim to be peer-reviewed?) There are now many open-access journals (like the ones at www.plos.org) that are both free and peer reviewed, and these can be a good place to start. Consider the source: a scientist from a well-known research institution is probably giving good information; someone who just acted in a film about the topic, perhaps not so much.

Summary

In this chapter we have explored the different ways that scientists think about their work and compared them with the hacker’s learn-by-doing ethos. We have seen that questioning assumptions and trying to see the world in as hands-on a way as possible are tendencies both groups share. However, there are also caveats about finding good background information for those cases when you cannot get all your own data, which scientists work hard to keep in mind and which hopefully inform hacker-style learning as well. In the next chapter, we move on to seeing what a sampling of scientists and other technologists actually do all day.