The onslaught of new cameras and technology can be daunting. If we are to survive this tumult and tell compelling stories, we must accept to some extent the steady bombardment of new formats, cameras, sensors, and all the rest. We’ve chosen this medium for our livelihood (or serious avocation), and so we must remember the business of video storytelling should be fun even in the face of unrelenting madness. At times, I feel like Slim Pickens in Dr. Strangelove1 cackling atop the A-bomb hurtling to earth. At least we should enjoy the ride to our mutually assured destruction!

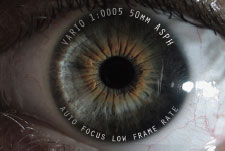

FIGURE 4.1

Go ahead! Enjoy the ride! You should relish the power of today’s cameras beneath you!

There’s a California restaurant chain called In-N-Out Burger. It is enormously successful despite the fact (or due to the fact) that its posted menu features only three options: you get a plain hamburger with a medium soda and fries, a cheeseburger with a medium soda and fries, or a double hamburger with a medium soda and fries. That’s it. No anguished equivocation, no soul-sapping handwringing, no paralysis at the drive-up window. When it comes to burgers, I say yes to despotism and lack of choice. 2

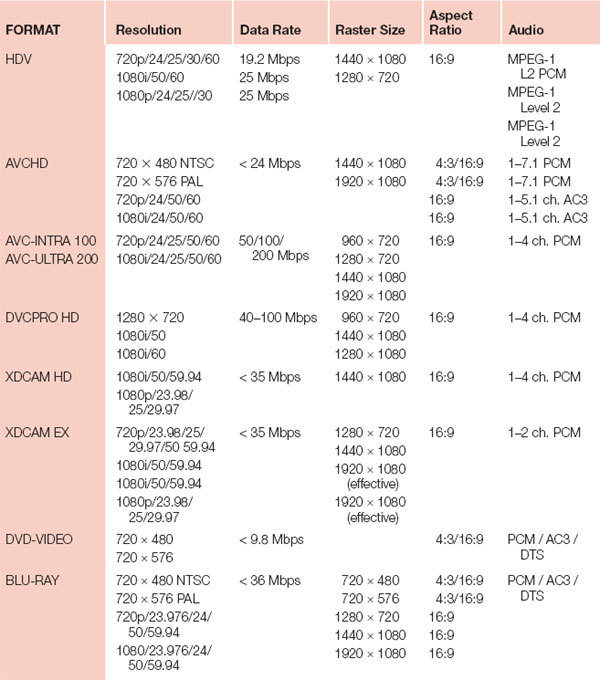

Now compare the In-N-Out experience with the mayhem that is HD. At last count, there were at least 43 different types and formats of HD video at various frame rates and resolutions. Throw in a dozen or more compression types, scan modes with or without pull-down, segmented frames, MXF, MPEG-2, MPEG-4, DNx, umpteen aspect ratios, and pretty soon we’re talking about some real choices. Yikes! When I think about this book, I should have included a motion discomfort bag!

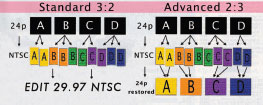

FIGURE 4.2

You know it and I know it: Human beings are happiest with fewer choices.

FIGURE 4.3

Nauseating. Really.

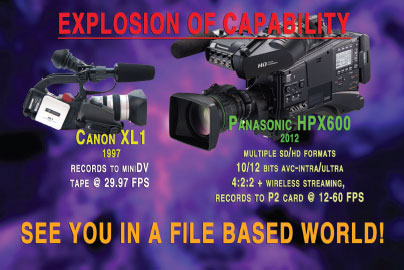

Still, one can say with regard to camera technology at least that newer is usually better. This means we can assume that the Sony HDCAM introduced in 1997 is not going to perform as well or as efficiently as a Panasonic AVC-Ultra model introduced in 2013. Similarly, in consumer camcorders, we see much better performance in new AVCHD models than in older HDV units grappling with the rigors of MPEG-2. 3

Tape-based cameras were inherently fragile, sensitive to moisture, and needed periodic and costly maintenance. The spinning heads and tape transport operated at a constant 60 frames (or fields) per second. The Canon XL1 in 1997 epitomized the tape reality. Recording to a single format (DV) at a single frame rate (29.97 FPS), the XL1 offered ruggedness and usability but little versatility.

Zoom ahead 15 years. The solid-state Panasonic HPX370 records 24p in more than 30 different ways, at 1080i, 1080p, 720p, and a multitude of standard definition resolutions. The camera can shoot DV, DVCPRO, DVCPRO HD, and 10-bit AVC-Intra, plus single-frame animation, and various frame rates from 12 FPS to 60 FPS, a far cry from the hobbled tape-based cameras of only a few years ago. Table 4.1 provides a vague assessment of the profound discomfort we’re facing.

FIGURE 4.4

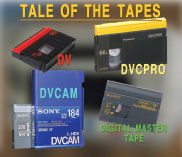

Don’t like the weather? Just wait a minute. The world has seen more than a few video formats blow in and out over the years.

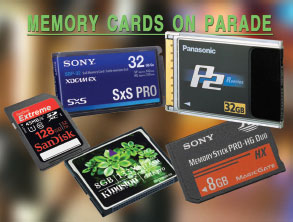

FIGURE 4.5

Go ahead. Pick one.

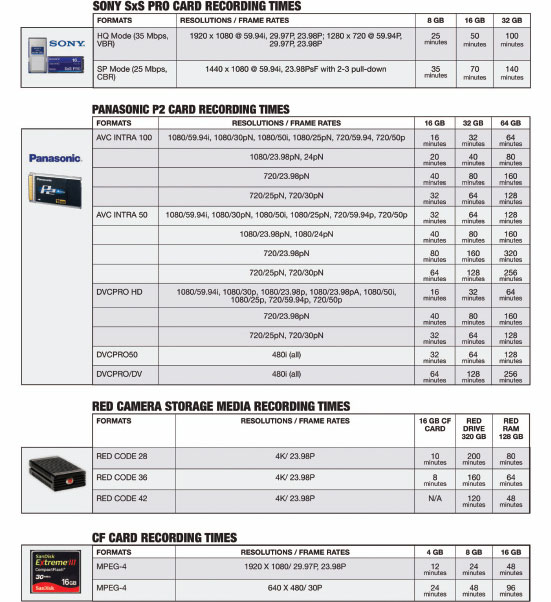

FIGURE 4.6

The recording times indicated are approximate.

Setting aside the 2K- and 4K-resolution options, the shooter faces a multitude of HD choices at 1080p, 1080i, and 720p. Reflecting industry practice, we specify the frame’s vertical dimension, so 1920 × 1080 or 1440 × 1080 is referred to as 1080, whereas 1280 × 720 or 960 × 720 is referred to as 720. Images may be captured progressively, like film, in a single scan, or in an interlaced manner by scanning every frame twice, the odd and even fields merging to produce a complete frame.

There are advantages to each approach. Progressive frames eliminate the temporal (1/50th or 1/60th second) aliasing that can occur between fields, thus contributing to the improved perceived resolution at 24p. 4 Many professionals citing The Kell Factor 5 insist that progressive images at 720 deliver higher resolution than interlaced 1080 images, owing to the absence of aliasing artifacts.

Progressive capture has many advantages including more efficient compression in-camera, the ability to shoot at various frame rates, and simpler frame-based keying and color correction in post. There is also inherent compatibility with progressive playback devices such as DVD and Blu-ray players, which owing to the demands of the entertainment industry are 24p devices.

Progressive frames don’t always make for superior images, however. When shooting sports or panning rapidly an interlaced frame may provide a smoother, more faithful representation than the same scene recorded at 24p or even 30p.

ABOUT STANDARDS

The “standard” is what everyone ignores.

What everyone actually observes is called “industry practice.”

This chart assesses the relative quality of SD and HD formats on a scale of 1 to 10, uncompressed HD = 10, rough and ready VHS = 1. You may want to take these rankings with a grain of oxide or cobalt binder to go with your In-N-Out burger.

| Format | Relative quality |
| --- | --- |
| Uncompressed HD | 10.0 |
| Panasonic AVC-Ultra 200 444 | 9.8 |
| Sony HDCAM SR | 9.4 |
| Panasonic D-5 | 9.3 |
| Panasonic AVC-Intra 100 | 9.2 |
| Sony XDCAM HD 422 | 8.6 |
| Panasonic DVCPRO HD | 8.3 |
| Panasonic AVC-Intra 50 | 8.2 |
| Sony XDCAM EX | 7.9 |
| Sony XDCAM HD 420 | 7.5 |
| Canon MPEG-2 50 Mbps | 7.4 |
| Blu-ray (H.264) | 7.2 |
| Sony DigiBeta | 6.4 |
| DVCPRO 50 | 5.8 |
| Sony Betacam SP | 4.6 |
| HDV | 4.3 |
| Sony Betacam | 4.0 |
| Sony DVCAM | 3.8 |
| DV (multiple manufacturers) | 3.6 |
| DVD-Video | 2.9 |
| MPEG-1 Video | 1.2 |
| VHS | 1.0 |
| Fisher-Price Pixelvision | 0.05 |
| Hand shadows on wall | 0.00001 |

Progressive frames captured at 24 FPS are subject to strobing, a phenomenon whereby viewers perceive the individual frame samples instead of continuous motion. Although the interlaced frame contains a blurring of fields displaced slightly in time, the progressive shooter finds no such comfort so the motion blur must be added in some other way to reduce the strobing risk. For this reason, when shooting 24p it is advisable to increase the camera’s shutter angle from 180° to 210°, the wider angle and longer shutter time increasing the amount of motion blur inside the progressive frame. Although this sacrifices a bit of sharpness, the slower shutter/longer exposure time produces smoother motion while also improving a camera’s low-light capability by about 20%.6
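The shutter-angle arithmetic can be checked with a short sketch. It assumes the usual film convention that exposure time equals the shutter angle divided by 360, divided again by the frame rate; the function names are illustrative only and do not correspond to any camera's menu.

```python
def exposure_time(shutter_angle_deg: float, fps: float) -> float:
    """Exposure time in seconds for a given shutter angle and frame rate."""
    return (shutter_angle_deg / 360.0) / fps


def light_gain(old_angle_deg: float, new_angle_deg: float) -> float:
    """Fractional increase in exposure when the shutter angle is widened."""
    return new_angle_deg / old_angle_deg - 1.0


if __name__ == "__main__":
    for angle in (180, 210):
        t = exposure_time(angle, 24)
        print(f"{angle} degrees at 24 FPS: 1/{1 / t:.1f} s")
    print(f"Extra light, 180 to 210 degrees: {light_gain(180, 210):.1%}")
```

By this arithmetic the wider angle lengthens the exposure from 1/48 second to roughly 1/41 second, a gain of about 17%, which is in the neighborhood of the rounded figure quoted above.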

SAFE TRACKING AND PANNING SPEEDS

At 24 FPS, strobing may occur in consecutive frames when the displacement of a scene is more than half its width while panning. High-contrast subjects with strong vertical elements such as a white picket fence or a spinning wagon wheel are more likely to strobe. If feasible, it is advisable to shoot at a frame rate greater than 24 FPS and to maintain the higher rate, say, 30 FPS, from image capture through postproduction and output of the edited master.

In many cases, the client or network dictates the shooting format, frame rate, and delivery requirements. If you’re shooting for ESPN or ABC, you’ll likely be shooting 720p, which is the standard for these networks. If you’re shooting for CBS, CNN, SKY, or any number of other broadcasters, you’ll probably originate in 1080i. Keep in mind that deriving 1080i from 720p is straightforward and mostly pain-free, but the reverse is not the case. In other words if you’re shooting 720p you can uprez to 1080i with little ill effect, but downrezzing from 1080i to 720p is another matter with substantial risk for image degradation.

Note that a 1920 × 1080 frame contains 2.25 times as many pixels as a 1280 × 720 frame (and 1.5 times as many lines). If you need the larger frame for output to digital cinema, then 1080 (preferably, 1080p) is the clear choice. Many cameras can shoot 1080p24, which is ideal for narrative projects destined for the big screen, DVD, or Blu-ray. If you’re shooting a documentary or other nonfiction program then 720p is a good option, allowing for easy upconversion later to 1080i, if required.
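A quick check of the frame-size arithmetic, using the full-raster dimensions quoted earlier in this chapter (1920 × 1080 and 1280 × 720). The figure of 2.25 is the ratio of total pixels per frame; the ratio of line counts alone is 1.5. The helper name is only illustrative.

```python
def frame_ratio(a: tuple[int, int], b: tuple[int, int]) -> tuple[float, float]:
    """Return (line ratio, pixel ratio) of frame a relative to frame b.

    Each frame is given as (width, height) in pixels.
    """
    (aw, ah), (bw, bh) = a, b
    return ah / bh, (aw * ah) / (bw * bh)


lines, pixels = frame_ratio((1920, 1080), (1280, 720))
print(f"lines: {lines:.2f}x, pixels: {pixels:.2f}x")  # lines: 1.50x, pixels: 2.25x
```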

In general, a camera performs best at its native resolution frame size. Thus, the Panasonic HPX2700 with a 1280 × 720 3-CCD sensor performs best at 720, whereas the Sony EX3 with a native resolution of 1920 × 1080 performs optimally at 1080. As in all matters, the story you choose dictates the right resolution and frame rate. And, oh yes, your client’s requirements may play a small role as well.

FIGURE 4.7

Although 1080i has more lines and higher “resolution,” the suppression of aliasing artifacts contributes to 720p’s sharper look. Error correction applied to the de-interlaced frame in a progressive display may produce an inaccurate result. (Images courtesy of NASA.)

FIGURE 4.8

When panning across an interlaced frame, the telephone pole is displaced leading to a “combing” effect when the odd and even fields are merged. Shooting in progressive mode eliminates the aliasing seen in interlaced images.

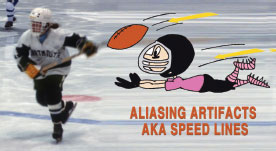

FIGURE 4.9

Interlaced frames capture fast-moving objects more effectively owing to the slight blurring between fields. Progressive frames composed of a single field must rely on motion blur alone to capture smooth action. Cartoon speed lines are drawn intentionally to mimic the artifacts associated with interlaced images.

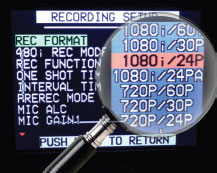

FIGURE 4.10

Recent camcorder models offer a range of recording options, including 1080p at 24 FPS. The setting is ideal for output to film, digital cinema, DVD and Blu-ray.

1080i24p?? How can a format be both interlaced and progressive? The “1080i” system setting refers to the output to a monitor, that is, what a monitor “sees” when plugged into the camera. The second reference is the frame rate and scan mode of the imager, in this case, 24 frames per second progressive (24p).

The advent of cameras such as the Sony F55, ARRI Alexa, and the RED has raised the specter of Higher Resolution Folly. It’s an ongoing threat, which we ought to resist, given the implications on our budget, workflow, and efficiency. Still we have to wonder: Is there any benefit to shooting at higher than HD resolution?

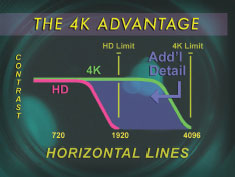

FIGURE 4.11

Two curves plot the contrast of HD and 4K-resolution images versus detail fineness. At 1920, HD’s fine detail is maximized, but the lowering of contrast reduces the perceived sharpness. 4K’s advantage is apparent owing to the higher contrast retained at the 1920 HD cutoff. Shooting at 2K resolution offers a similar albeit somewhat less dramatic improvement in contrast when output to HD.

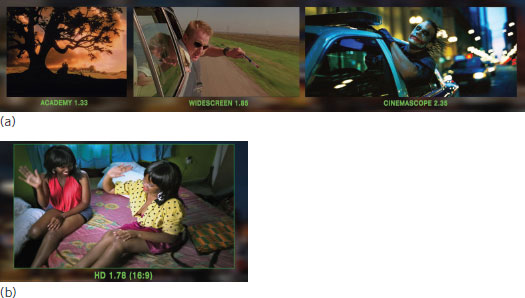

FIGURE 4.12

Shooting for the cinema (a) at 2K, 4K, or higher resolution allows for greater aspect ratio flexibility, which is based on traditional film sizing. Compare to (b) HD 1.78:1.

For digital cinema applications, the answer is yes. Although 1920 × 1080 provides more than ample contrast and sharpness for most nontheatrical programming given a good camera, lighting, and the appropriate optics, there is another factor to consider: Just as originating in 35mm yielded a more professional look when outputting to VHS than shooting VHS in the first place, so, too, does shooting 2K or 4K yield a more polished look when output to HD, DVD, or Blu-ray.

The fact is 2K and 4K origination makes our HD images look better! For some shooters, this is reason enough to forgo HD origination in favor of higher resolution capture. There are practical tradeoffs of course, but the additional fineness and contrast are evident in the downrezzed HD frame.

In stories that are otherwise riveting, the shooter need not be overly concerned with an occasional picture anomaly, as a minor defect, such as a hue shift, is not likely to threaten the integrity of the storytelling. Four decades ago, I suspect the U.S. Army recognized The Sound of Color not for its engineering marvel (which was dubious at best) but for its engaging storytelling. The judges appreciated the feeling and whimsy of the color and sound interplay. And if the army’s humorless engineers can respond in such a way, there must be hope for the rest of us with similar unsmiling dispositions.

Engineers tell us that only 109% of a video signal can be captured before clipping and loss of detail occurs. But what does this mean in the context of telling a compelling story? Do we not shoot an emotional, gut-wrenching scene because the waveform is peaking at 110%? Is someone going to track us down like rabid beasts and clobber us over the head with our panhandles?

Some of us have engineer friends, and so we know they can be a fabulous lot at parties and when drinking a lot of beer. Years ago, I recall a tech who complained bitterly to the director about my flagrant disregard for sacred technical scripture. He claimed, and he was right, that I was exceeding 109% on his waveform! OMG! Was it true? Was I guilty of such a thing?

I admit it. I did it. But isn’t that what an artist is supposed to do? Push the envelope and then push it some more? Fail most of the time but also succeed once in a while by defying convention, exceeding 109%, and pushing one’s craft to the brink?

And so that is our challenge: to wed our knowledge of the technical with the demands of our creativity and the visual story. Yes, we need the tools—camera, tripod, lights, and all the rest—and we should have a decent technical understanding of them. But let’s not forget our real goal is to connect with our audience in a unique and compelling way.

To be clear, my intent is not to transform you into a troglodyte 7 or to launch a pogrom against guileless engineers. Rather, I wish to offer you, the dear inspired shooter, a measure of insight into a universe that is inherently full of compromises.

We discussed how story is the conduit through which all creative and technical decisions flow. Although story, story, story is our mantra, it isn’t the whole story. Just as a painter needs an understanding of his brushes and paints, the video shooter needs an understanding of his tools—the camera, lenses, and the many accessories that make up his working kit. You don’t have to go nuts in the technical arena. You just need to know what you need to know.

Truth is that the shooter’s craft can compensate for many if not most technical shortcomings. After all, when audiences are engaged they don’t care if you shot your movie with a 100-man crew on 35mm or single-handedly on your iPhone. It’s your ability to tell a compelling story that matters, not which camera has a larger imager, more pixels, or better signal-to-noise ratio. The goal of this chapter is to address the technical issues but only so much as they have an impact on the quality of images and the effectiveness of your storytelling.

Consider The Blair Witch Project8 shot on a hodgepodge of film and video formats. Given the movie’s success, it is clear that audiences will tolerate a cornucopia of technical shortcomings in stories that captivate. But present a tale that is stagnant, boring, or uninvolving, and you had better watch out. Every poorly lit scene, bit of video noise, or picture defect will be duly noted and mercilessly criticized.

FIGURE 4.13

The success of THE BLAIR WITCH PROJECT (1999) underscores the potential of low- and no-budget filmmakers with a strong sense of craft. The movie’s ragged images were a part of the story, a lesson to shooters looking to leverage low-cost video’s flexibility.

Every shooter understands that audiences have a breaking point. Often this point is hard to recognize, because viewers are not able to articulate even the most obvious technical flaws or craft failings, such as an actor’s face illogically draped in shadow beside a lit candle.

This doesn’t mean that these flaws don’t have an impact. They certainly do! Illogical lighting, poor framing, and unmotivated camera gyrations all take their toll because the audience feels every technical and craft-related defect you throw at it. The issue is whether these glitches in total are enough to propel the viewer out of the story.

FIGURE 4.14

Overexposed. Out of focus. Poor color. Maybe this is your story!

FIGURE 4.15

Stay in control! Illogical lighting and technical flaws can undermine your storytelling.

THE TECHNICAL NATURE OF THE WORLD

Sometimes while sitting in the endless snarl of Los Angeles’ 405 freeway, I ponder the nature of the world: Is this an analog mess we live in, or a digital one?

At first glance, there is evidence to support the analog perspective. After all, as I sit idly on the 405, I can look around and see the sun rise and set, the sky brightening and darkening in a smooth continuous way. That seems pretty analog. And 99% of folks would agree that the world is an analog place.

But wait. Consider for a moment my ninth-grade science teacher in his horn-rimmed glasses explaining how the eye works. So now I’m thinking this freeway experience is flashing at me at the rate of 15 snapshots per second 9 upside down on the back of my retinas. Why then am I not seeing the cars and road-raging drivers like images in a flipbook, inverted, and creeping along with an obvious stutter?

The brain as a digital processor flips the images and smooths the motion by filling in the missing snapshots (or samples) through a process of interpolation. In math, we call this phenomenon the fusion frequency; in science, we call it persistence of vision; and in video, we call it error correction. However you describe it, the world we know and love may only seem like an analog place. It could be a digital mess after all, or some combination of the two.

FIGURE 4.16

Is this an analog or digital mess?

FIGURE 4.17

Each day the sun breaks the horizon and the sky brightens in a continuous way. Yup. The world seems like an analog place.

FIGURE 4.18

Auto everything. Great in low light. Pretty slow frame rate.

LET’S HAVE AN ANALOG EXPERIENCE

Attach a dimmer to an incandescent table lamp. Over the course of 1 second, raise and lower the light’s intensity from 0% to 100% to 0%. Plotting this profound experience on a graph we see:

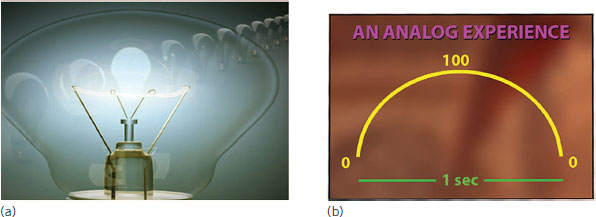

FIGURE 4.19 a,b

The output of an incandescent lamp (a) is smooth, continuous, and analog. The best digital recording closely approximates the analog curve (b), which is how through our conditioning we perceive and experience the world.

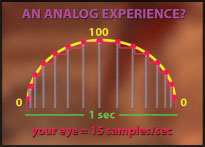

FIGURE 4.19c

Sampling the world at only fifteen ‘snapshots’ per second we need a LOT of error correction to get through our day!

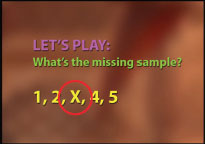

FIGURE 4.20

Let’s play Guess the Missing Sample! If you say “3” you will receive credit on a standard test and be deemed ‘intelligent’. But must “3” be the correct response? The x can be any value, but “3” seems correct because we assume the world is an analog place where information flows smoothly. Thus, the brain applying error correction draws a continuous curve from 2 to 4 through the missing sample “3.”

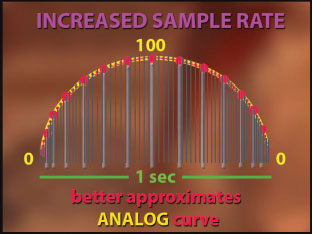

IMPROVING OUR DIGITAL RECORDINGS

Our brain’s digital processor interpolates missing samples based on analog assumptions of the world. Compressed recordings with samples deliberately omitted to reduce file size are prone to inaccuracies when reconstructing the original media file. To reduce the error correction required during playback we can better approximate the original analog curve by increasing the number of sample snapshots per second.

FIGURE 4.21

Increasing the sample rate produces a more accurate representation of the smooth analog curve—and a better digital recording.
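The effect of sample rate on reconstruction accuracy can be sketched numerically. The example below is an illustration under stated assumptions, not a model of any camera or codec: it assumes the dimmed lamp follows a smooth sine-shaped curve over one second, and it assumes the missing values are filled in by drawing straight lines between neighboring samples, as in the Guess the Missing Sample game.

```python
import math


def lamp(t: float) -> float:
    """Assumed smooth dimmer curve: 0% -> 100% -> 0% over one second."""
    return 100.0 * math.sin(math.pi * t)


def max_interpolation_error(samples_per_second: int, checks: int = 1000) -> float:
    """Worst-case gap between the true curve and a straight-line
    reconstruction drawn through evenly spaced samples."""
    n = samples_per_second
    points = [lamp(i / n) for i in range(n + 1)]
    worst = 0.0
    for k in range(checks + 1):
        t = k / checks
        i = min(int(t * n), n - 1)      # index of the sample to the left of t
        frac = t * n - i                # position between the two samples
        estimate = points[i] + frac * (points[i + 1] - points[i])
        worst = max(worst, abs(lamp(t) - estimate))
    return worst


if __name__ == "__main__":
    for rate in (4, 15, 60):
        error = max_interpolation_error(rate)
        print(f"{rate:>2} samples/s: worst-case error {error:.3f} points")
```

Under these assumptions the worst-case error shrinks steadily as the number of samples per second rises, which is the point of the figure: the more snapshots, the less guessing.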

Increased bit-depth is the second major way to improve the fidelity of digital recordings. Whereas a higher sample rate reduces the step size and produces a smoother, more analog curve, a greater bit-depth more faithfully places a sample along the curve, thus improving the color precision.
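To see how bit-depth affects where a sample lands on the curve, consider this minimal sketch. It assumes a signal normalized to the range 0.0 to 1.0 and evenly spaced levels; real cameras apply gamma curves and reserve headroom, so the numbers are indicative only, and the function names are hypothetical.

```python
def quantize(value: float, bits: int) -> float:
    """Snap a value in the range 0.0-1.0 to the nearest of 2**bits levels."""
    steps = 2 ** bits - 1
    return round(value * steps) / steps


def worst_case_error(bits: int) -> float:
    """Largest possible rounding error: half the gap between adjacent levels."""
    return 0.5 / (2 ** bits - 1)


if __name__ == "__main__":
    true_value = 0.4  # an arbitrary mid-grey chosen for illustration
    for bits in (1, 2, 8, 10):
        stored = quantize(true_value, bits)
        print(f"{bits:>2}-bit: stored {stored:.5f}, "
              f"worst-case error {worst_case_error(bits):.5f}")
```

At 1 bit the mid-grey is stored as pure black; each added bit roughly halves the largest possible placement error.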

I often ask my students, “What is the most common element on Earth?” I usually receive a range of responses: Someone will suggest iron, which would be correct if we lived on Mars the Red Planet. Somebody else might say water, which isn’t an element but a compound. Still other folks may volunteer carbon, hydrogen, carbon dioxide, or any number of other possibilities.

Although nitrogen comprises three quarters of the earth’s atmosphere, silicon is, after oxygen, the most abundant element in the earth’s crust and is the principal component of sand. Essentially free for the taking, silicon belongs to a group of elements known as semiconductors because they might or might not conduct electricity depending on the presence of an electron bond.1 If the bond is present, silicon assumes the qualities of a conductor like any metal. But if the bond is not present (that is, the electrons have gone off to do some work, say, run a Game Boy or charge a battery), the silicon that’s left is essentially a nonmetal, a nonconductor.

The two states of silicon form the basis of all computers and digital devices. Engineers assign a value of one to a silicon bit that is conductive, or zero if it is not. Your MacBook Pro, iPad, or PlayStation executes untold complex calculations every second according to the conductive states of trillions of these silicon bits.

The CCD11 imager in a camera is linked to an analog-to-digital converter, which samples the electron stream emanating from the sensor. If the camera processor were composed of only a single bit, it would be rather ineffective given that only two possible values—conductor or nonconductor, one or zero—could be assigned to each sample. Of course, the world is more complex and exhibits a range of grey tones that can’t be adequately represented by a one-bit processor that knows only black or white.

Adding a second bit improves the digital representation as the processor may assign one of four possible values: 0–0, 0–1, 1–0, and 1–1. In other words, both bits could be conductive, neither bit could be conductive, one or the other could be conductive, or vice versa. In 1986, Fisher-Price introduced the Pixelvision camera, a children’s toy utilizing a 2-bit processor that recorded to an ordinary audiocassette. The camera captured crude images that inspired a cult following and an annual film festival in Venice, California. 12

Most modern CCD cameras utilize a 14-bit analog-to-digital converter (ADC). Because greater bit-depth enables more accurate sampling, a camera employing a 14-bit ADC produces markedly better, more detailed images than an older-generation 8- or 10-bit model. The Panasonic HPX170 with a 14-bit ADC can select from a staggering 16,384 possible values for every sample, compared to only 256 discrete values in a 1990s-era 8-bit Canon XL1. Given the range of values available to a 14-bit processor, it’s likely that one will be a fairly accurate representation of reality!
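The value counts quoted above follow directly from powers of two: each added bit doubles the number of values a sample can take. A small sketch (the helper name is illustrative):

```python
def levels(bits: int) -> int:
    """Number of distinct values a sample of the given bit depth can take."""
    return 2 ** bits


for bits in (1, 2, 8, 10, 14):
    print(f"{bits:>2}-bit: {levels(bits):>6,} values")
print(f"14-bit vs 8-bit: {levels(14) // levels(8)}x as many values")
```

This gives 2, 4, 256, 1,024, and 16,384 values respectively, so a 14-bit converter has 64 times as many values to choose from as an 8-bit one.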

FIGURE 4.22

The element silicon contained in sand forms the basis of every digital device. A silicon “bit” may assume one of two states: conductor or nonconductor, zero or one, black or white. You get the idea.

FIGURE 4.23

A 1-bit processor can assign only one of two possible values to each sample: pure black or pure white. A 2-bit processor increases to four the number of assignable values to each sample. The result is a dramatic improvement in grey scale in the captured image. An 8-bit processor produces near-continuous gray and color scales. NTSC, PAL, and most HD formats, including Blu-ray are 8-bit systems.

FIGURE 4.24

The 1986 Pixelvision featured a crude 2-bit processor and recorded to a common audiocassette. The camera’s cryptic images have gained an artsy following.

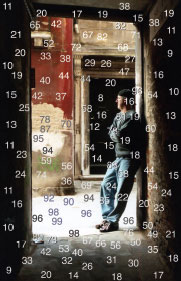

FIGURE 4.25

The world’s passageways feature a delicate interplay of light and shadow. To capture the subtlety and nuance, the shooter must have an understanding of analog and digital processes. The luminance values in this Venice doorway are indicated on an analog scale from 7.5 to 100 (NTSC).

The value of 10-bit recording cannot be overstated. Given the vast crop of 8-bit formats on the market, 10-bit capture to HDCAM SR, AVC-Intra, or Apple ProRes enables four times more precise sampling of color and luminance, eliminating or greatly reducing the contour ridges and jagged edges often seen in 8-bit images. Beyond smoother gradients, 10-bit capture also enables more efficient color correction and keying, especially for green screen applications.

FIGURE 4.26

Images captured at 8 bits may exhibit uneven gradients such as the bands seen across a monochromatic blue sky.

FIGURE 4.27

The Panasonic HPX250 camcorder records in 10 bits to a P2 memory card. Ten-bit cameras capture very smooth gradients and four times more accurate color sampling than traditional 8-bit systems such as XDCAM, AVCHD, or HDV.

IS YOUR CAMERA A DOG?

FIGURE 4.28

I consider two main areas when assessing a camera’s worthiness: its performance and its operation, which can be just as important.

• Does the camera feel comfortable in your hand?

Are its shape and bulk ergonomically agreeable? Is the camera well centered and balanced? Does the camera permit easy operation from a variety of positions? Does the camera have a blind side? Can you see approaching objects over the top of the camera?

• Are the controls well placed?

Are focus and zoom easy to access, with bright witness marks in the useful shooting range from 6 to 8 feet (about 1.8–2.4 m)? Are buttons and controls robust and appropriately sized for your hands? Is the zoom action smooth and capable of glitch-free takeoffs and landings? Are audio meters visible from afar and not obscured by the operator? Are external controls (or WiFi) provided for setting routine parameters like time code, frame rate, and shutter speed?

• Is the camera versatile? Can it operate worldwide?

At 24, 25, 30, and 60 FPS? Are both PAL and NTSC outputs supported? Is a 1/100th second or 172.8° shutter selectable for shooting 24p in 50-Hz countries? Or a 150° shutter for shooting 25p in 60-Hz countries?

• Does the camera feature the output options you need?

USB, FireWire, SDI, HDMI. Is the HDMI output supported by your monitor at full frame without cropping?13 Are the plugs and jacks most subject to stress firmly anchored to the camera chassis and not soldered directly to a PC board?

• Is the camera rugged enough for the intended application?

Shooting news? Action sports? Wildlife? Is the camera adequately protected against moisture and dirt? Can it operate reliably in a range of temperatures and conditions? Can the lens housing support an external matte box? Does the mounting surface feature both 3/8 × 16 and 1/4 × 20 threads?

• Is the swing-out viewing screen practical for use in bright daylight?

Does the electronic viewfinder (EVF) allow easy focus? Are focus assists provided, and are they available when recording? Is the focus readout in the viewfinder clear and intuitive?

• Is the camera insulated from handling and operating noise, for example, from the zoom motor? Is the on-camera mic properly directional and placed sufficiently forward? Are XLR inputs provided and placed to the rear of the camera so as not to entangle the operator?

• Is the power consumption reasonable?

Can the camera run for 4 to 5 hours continuously? Does the camera provide onboard power to a monitor or light?

• Does the camera feature built-in neutral density 14 filters?

Does the camera offer a sufficient range for effective control of exposure?

• Does the camera feature an interchangeable lens? Is the locking mechanism secure? Is a range of lenses available? Can backfocus be set quickly? Does the camera maintain backfocus through the shooting day? (See Chapter 6.)
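The shutter angles in the worldwide-operation item of the checklist above can be derived rather than memorized. The sketch below assumes the standard relationship between shutter angle, exposure time, and frame rate, and it assumes that flicker is avoided when the exposure spans a whole number of light pulses (lamps on 50-Hz mains pulse 100 times per second; on 60-Hz mains, 120 times). The function name is illustrative.

```python
def shutter_angle(exposure_time_s: float, fps: float) -> float:
    """Shutter angle in degrees that yields the given exposure time."""
    return 360.0 * exposure_time_s * fps


# 24p under 50-Hz lighting: expose for 1/50 s (two pulses) or 1/100 s (one pulse)
print(round(shutter_angle(1 / 50, 24), 1))    # 172.8
print(round(shutter_angle(1 / 100, 24), 1))   # 86.4

# 25p under 60-Hz lighting: expose for 1/60 s (two pulses)
print(round(shutter_angle(1 / 60, 25), 1))    # 150.0
```

The same function can be used to look up an angle for any other pairing of frame rate and mains frequency before traveling.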

FIGURE 4.29

Compare the Aaton (left), a masterpiece of handheld design to the boxy RED model (right).

FIGURE 4.30

Many of today’s camcorders are poorly balanced. This model is weighted substantially to one side making handheld operation inconvenient and wearying over time.

FIGURE 4.31

A badly designed latch makes access to the camera’s memory cards unnecessarily awkward especially in winter with gloved hands.

FIGURE 4.32

Larger camcorders may block the operator’s view of potentially dangerous objects approaching from the blind side. This camcorder features a low profile and excellent operator visibility.

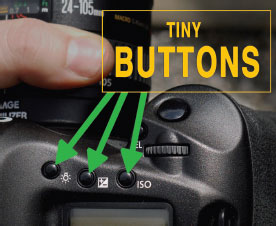

FIGURE 4.33

The micro buttons are inconvenient for shooters with Bart Simpson–sized hands.

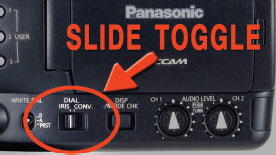

FIGURE 4.34

The lack of real estate in compact camcorders requires significant compromises. This slide switch inconveniently controls iris and convergence in a 3D camera.

FIGURE 4.35

Cable connectors are subject to stress and must be anchored securely to the camera chassis.

FIGURE 4.36

The viewfinder must be large enough to see critical focus and the frame edges. Most camcorders have viewfinders that are too small and lack sufficient resolution.

FIGURE 4.37

The top handle should feature ample mounting points for a light, a microphone, and a monitor.

FIGURE 4.38

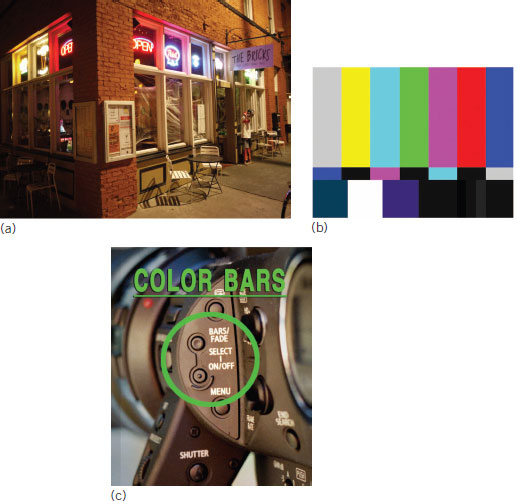

Frequenting the bars (a)? Record 30 seconds of bars and tone before rolling on a program. The bars (b) capture the state of the camera at the time of recording and help ensure consistent audio and video levels throughout a production. Color bars are usually enabled (c) via a button at the side of a camera. Drilling down through multiple menus to select one’s bars is a waste of a life.

DOES YOUR CAMERA PASS THE 10-MINUTE RULE?

Some cinematographers believe that deciphering the controls of an unfamiliar camera should not take more than 10 minutes. When a camera is well designed, it does not impose an operational burden, so the shooter’s attention can be focused properly on his craft.

The ARRI Alexa is a tour de force of camera design with simple functions and menus in an easy-to-read display. The camera’s display panel glows red when recording so the status is immediately obvious from across a set. The major buttons and controls are conveniently laid out and backlit for low-light operation. The camera is well balanced with I/O ports and plugs placed for easy access. Every detail is considered to help a crew move swiftly and efficiently. A great camera is not just about performance!

FIGURE 4.39

Owing to a simple design and layout the ARRI Alexa allows shooters to focus more on what really matters!

DOES YOUR CAMERA PURR LIKE A KITTEN?

FIGURE 4.40

So you’ve found a camera that passes the ten-minute rule, the buttons and controls are in the right place, and you feel good about the world and the operational efficiency of your machine. So what about its performance?

FIGURE 4.41

• Consider optics first. And last.

Given the high resolution of today’s cameras, the quality of optics most determines the quality of images. To achieve good optical performance one need not invest in pricey exotic lenses. Camera makers now routinely apply sophisticated digital correction to the mediocre optics built into low- and mid-price camcorders. Thus, the camera (usually under $10,000) with a noninterchangeable lens may perform significantly better than a comparable model with a swappable lens; the latter arrangement is best avoided in this price range, unless you have a $10,000-plus lens to go with it.

• Processing sophistication.

Modern camcorders employ sophisticated processing to help produce artifact-free images. In cameras like the RED that capture RAW data the complex task of producing a viable image is off-loaded to an external device.

• Color space

Cameras recording 4:2:2 sample the blue and red channels at 50% resolution; cameras recording 4:2:0 (AVCHD, HDV, XDCAM, and so on) also sample the blue and red channels at 50% but skip every other red/blue line. The green luminance channel in digital video is seldom compressed. (Read more about color sampling and color space later in this chapter.)

• Shutter type

Traditional CCD (analog) cameras utilize a global shutter, which like a film camera exposes the entire frame at the same instant. Most CMOS (digital) sensors scan pixel by pixel line by line, and so introduce a time differential, which when panning or tracking, can lead to a sloping of vertical lines, aka rolling shutter or jello-cam.

• Performance-related camera features

1. Variable Frame Rates (VFR)

This feature reduces or eliminates stutter or strobing while capturing fast-moving objects. VFR is used creatively for fast- and slow-motion effects, to render wildlife captured at high magnification at normal screen speed, to add weight to an actor’s performance, and/or to capture scenes in very low light.

2. Shutter control

A camera’s coarse shutter settings of 1/50th, 1/60th, 1/100th second, and so on may be used to increase or decrease motion blur or to eliminate flicker from out-of-sync light sources such as neon or fluorescents when shooting abroad. A camera’s fine shutter, also known as clear-scan or synchro-scan, can prevent rolling in CRT computer screens and nonconforming TVs and other displays.

3. Gamma, matrix, and knee settings

These image control adjustments help communicate the mood and genre of your story. See Chapter 9 for detailed discussion.

4. Detail control

The detail or edging placed around objects can detract from your camera’s perceived performance. News and nonfiction programs may benefit from a higher detail setting whereas dramas usually fare better and appear more organic with reduced detail.
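The flicker rule behind the shutter control item above can be sketched in a few lines. This is a minimal sketch under a stated assumption: a lamp on AC mains pulses at twice the mains frequency, so an exposure that spans a whole number of pulses gathers the same light in every frame. The function name is hypothetical, and real fixtures vary.

```python
from fractions import Fraction

def is_flicker_safe(shutter_denominator, mains_hz):
    """True if a 1/shutter_denominator second exposure spans a whole number
    of light pulses. Assumes lamps pulse at twice the mains frequency."""
    exposure = Fraction(1, shutter_denominator)
    pulse_period = Fraction(1, 2 * mains_hz)
    return (exposure / pulse_period).denominator == 1

# Compare the coarse shutter settings on 50 Hz and 60 Hz mains.
for mains in (50, 60):
    safe = [f"1/{d}" for d in (50, 60, 100, 120) if is_flicker_safe(d, mains)]
    print(f"{mains} Hz mains: {', '.join(safe)}")
```

Under this assumption a 1/60th shutter is the odd one out on 50-Hz mains, which is why a shooter working abroad reaches for 1/50th or 1/100th.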

The CCD15 is an analog system; the more light striking the CCD, the more electrons that are smoothly and continuously displaced, sampled, and digitally processed. The charging of a CCD mimics traditional photographic emulsion, 16 a global-type shutter exposing the sensor’s entire raster in the same instant.

In most modern cameras equipped with high-resolution CMOS imagers, shooters face a new and potentially more serious disruption to the visual story. CMOS sensors are digital devices composed of rows of pixels that in most cameras are scanned in a progressive or interlaced fashion. The rolling scan of the sensor surface containing millions of pixels can take a while, and so we can often see a disconcerting skewed effect when panning or tracking in scenes with strong vertical elements.

Although digital CMOS or MOS 17 cameras are subject to rolling shutter artifacts, not all shooters will experience the jello effect. It really depends on the nature of the material and the frame. You won’t see the vertical skewing in a static interview with the CEO or in the still life of a fruit bowl on a set at NAB. But the risk is real when shooting action sports or aerial shots from a helicopter, so the savvy digital shooter must be well aware.
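To get a feel for when the skew becomes visible, here is a rough sketch. It assumes the sensor reads its rows top to bottom at a steady rate, so a vertical line leans by the distance the image travels during one full readout. The readout time and pan speeds are hypothetical illustration values, not the specifications of any camera.

```python
import math

def rolling_shutter_skew(pan_px_per_s, readout_s, frame_height_px):
    """Return (offset, angle): the horizontal offset in pixels between the top
    and bottom of a vertical line, and its lean from vertical in degrees."""
    offset = pan_px_per_s * readout_s
    angle = math.degrees(math.atan2(offset, frame_height_px))
    return offset, angle

# Hypothetical values: 1080-line frame, full readout taking 1/60 second.
for label, speed in (("static interview", 0), ("fast pan", 1920)):
    offset, angle = rolling_shutter_skew(speed, 1 / 60, 1080)
    print(f"{label}: {offset:.1f} px offset, {angle:.2f} degrees of lean")
```

The static shot yields no offset at all, which matches the experience described above: the artifact depends on the material, not just the sensor.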

FIGURE 4.42

High-resolution CMOS sensors consume only 20% of the power of a comparable-sized CCD. The MOS imager, developed by Panasonic, is said to feature improved brightness and smoothness comparable to a CCD.

FIGURE 4.43

The shutter in some CMOS type cameras produces an objectionable skewing effect when tracking or panning across scenes with pronounced vertical elements. CCD-type cameras do not exhibit such artifacts.

FIGURE 4.44

The rolling shutter interacting with a photographer’s strobe light may create a truly bizarre effect. A portion of the frame may contain the discharging flash while another section may not. Many cameras now contain a flash band corrector that overwrites the offending frame with parts of the preceding or following frame. In the future, viewers may be more accepting of such anomalies!

FIGURE 4.45

A rolling shutter may be quite apparent in DSLRs equipped with large CMOS sensors. This model features a 22.3-megapixel (5760 × 3840) sensor, the equivalent of almost 6K resolution in video parlance. DSLRs describe imager resolution in total pixels whereas video cameras only refer to horizontal resolution. This arrangement better accommodates the various frame heights associated with film and video: 2.35:1, 1.85:1, 16:9, 4:3, and so on.

FIGURE 4.46

CMOS sensors eliminate the CCD vertical smear often seen in urban night scenes.

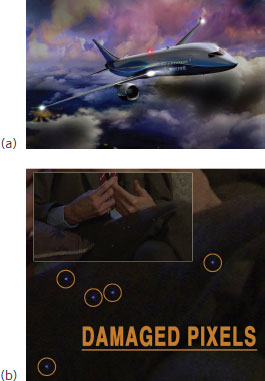

FIGURE 4.47

A CCD camera aboard an aircraft at high latitudes may incur cosmic ray damage, which appears later as immovable white or violet dots on screen. In some cameras, the damaged pixels may be masked during auto-black balance (ABB). CMOS sensors are much less susceptible to cosmic ray damage during long international flights.

FIGURE 4.48

Although the performance of digital sensors has improved, many top cameras continue to employ lower noise CCD type (analog) imagers. The Sony F35 utilizes a Super 35mm CCD.

FIGURE 4.49

With improved processing and scanning strategies, today’s HD cameras fitted with CMOS sensors exhibit fewer serious artifacts such as rolling shutter.

In stories that truly captivate, the size and type of a camera sensor should not be a major concern. Still, at a given resolution, the larger imager with more surface area allows correspondingly larger pixels, which translates (usually) into better dynamic range and improved low-light sensitivity. It also enables a shallow depth of field, a look fashionable among shooters these days. The longer focal-length lens required to cover the large sensor makes it easier to establish a clearly defined focal plane, which is central to many current shooters’ storytelling prowess.

Although a larger sensor offers advantages, the trade-offs may not always be worth it. When shooting wildlife with telephoto lenses, the shallow depth of field can be frustrating and lead to soft unfocused images. Cameras equipped with sensors larger than two thirds of an inch may be more difficult to transport and hold, owing to the increased weight and bulk of the body and lenses.

Cameras featuring large sensors with a huge number of pixels may also require high compression 18 and/or a dramatically higher data load, which can be crippling from operational, workflow, and data storage perspectives.
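The link between sensor size and pixel size is simple arithmetic, sketched below. The sensor widths are approximate assumptions (roughly 9.6 mm for a 2/3-inch 16:9 imager and roughly 24.9 mm for Super 35; actual widths vary by camera), and the function names are hypothetical.

```python
def pixel_pitch_um(sensor_width_mm, horizontal_pixels):
    """Center-to-center pixel spacing in micrometers."""
    return sensor_width_mm * 1000 / horizontal_pixels

def relative_pixel_area(pitch_a_um, pitch_b_um):
    """How many times more surface area pixel A offers than pixel B."""
    return (pitch_a_um / pitch_b_um) ** 2

# Assumed approximate widths; both sensors at 1920 horizontal pixels.
small = pixel_pitch_um(9.6, 1920)
large = pixel_pitch_um(24.9, 1920)
print(f"2/3-inch: {small:.1f} um, Super 35: {large:.1f} um, "
      f"area ratio {relative_pixel_area(large, small):.1f}x")
```

At the same resolution, the larger imager's pixels collect light over several times the area, which is the usual source of its sensitivity and dynamic range advantage.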

Today’s camera sensors range in size from 1/6 of an inch to 1 3/8 inches (35 mm) or larger. Like most everything else in this business, the numbers are not what they seem: When referring to a 2/3-inch imager the actual diameter is only 11 mm, less than half an inch, whereas a 1/2-inch sensor has a diagonal of 8 mm, less than a third of an inch. The discrepancy dates back to the era of tube cameras, when the outside diameter of a 2/3-inch sensor inclusive of its mount really did measure two thirds of an inch.
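The gap between the name and the real dimension is easy to check. The short sketch below uses only the two diagonals quoted above and converts them to inches; the dictionary and function names are hypothetical.

```python
MM_PER_INCH = 25.4

# Nominal size in inches and actual diagonal in mm, as quoted in the text.
SENSORS = {"2/3-inch": (2 / 3, 11.0), "1/2-inch": (1 / 2, 8.0)}

def actual_fraction(nominal_inches, diagonal_mm):
    """Actual diagonal as a fraction of the nominal (tube-era) size."""
    return diagonal_mm / (nominal_inches * MM_PER_INCH)

for name, (nominal, diagonal) in SENSORS.items():
    print(f"{name}: {diagonal / MM_PER_INCH:.2f} in actual, "
          f"{actual_fraction(nominal, diagonal):.0%} of nominal")
```

For both sizes the real diagonal works out to roughly two thirds of the advertised figure, so the tube-era naming at least errs consistently.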

FIGURE 4.50

Audiences don’t study resolution charts and neither should you! The Canon shoots high definition at 1920 × 1080. The RED Epic shoots 5K at 5120 × 2700 resolution. Which camera is “better”? Which camera is right for the job?

For many shooters, the illogical numbers game rules the roost, because cameras are frequently judged these days not on performance or suitability for a project but by which offers the largest imager with the most pixels. American cars and trucks were once sold this way: The vehicle with the largest engine was deemed invariably the most desirable.

We discussed how feature films and other programs destined for the cinema must display enough picture detail to fill the large canvas; soft, lackluster images with poor dynamic range and contrast will alienate the viewer by conveying an amateur feeling. Remember your implied message as a shooter: If you don’t value the story enough to deliver compelling images, why should your audience value the story enough to invest its time and attention?

Viewers’ perception of resolution is determined by many factors, a camera sensor’s native resolution being only one. Of greater importance is the exercise of good craft, especially how well we capture and maintain satisfactory contrast. High-end lenses low in flare and chromatic aberration19 produce dramatically better images regardless of a camera’s manufacturer or sensor type.

FIGURE 4.51

The converging lines in the reference chart suggest how resolution and contrast work together. With improved contrast, the converging lines appear more distinct and higher resolution.

FIGURE 4.52

Similar to fine-grained film, HD sensors with diminished pixel size also sacrifice some low-light sensitivity in exchange for increased resolution. Larger sensors with larger pixels tend to have better dynamic range.

FIGURE 4.53

Clipping, compression anomalies, lens defects. At high resolutions, the viewer sees it all—the good, the bad, and the ugly!

The proper use of accessories such as a matte box or sunshade is also critical to prevent off-axis light from obliquely striking the front of the lens and inducing flare. Simple strategies like utilizing a polarizing filter when shooting exteriors can substantially improve contrast and therefore perceived resolution.

HUMAN LIMITS TO PERCEPTION OF RESOLUTION

HD in some form or GOP length 20 is here. But why did it take so long? Clearly one problem was defining HD. Is it 720p, 1080i, or 1080p? Is it 16:9, 15:9, or even 4:3? Many viewers with widescreen TVs simply assume they are watching HD. This is true especially for folks experiencing the brilliance of plasma TV for the first time. If it looks like HD, it must be HD, right?

The popular demand for larger sensors and higher resolution is fundamentally flawed. Indeed, most viewers are hard-pressed to tell the difference between standard-definition and high-definition images at a ‘normal’ viewing distance. In a typical U.S. home, the viewing distance to the screen is about 10 feet (3 m). At this range, the average viewer would require a minimum 96-inch display (fixed pixel plasma or LCD) before perceiving an increase in resolution over standard definition. 21 In Japan where living rooms are smaller, HDTV’s higher resolution is more apparent, and so, unsurprisingly, HD gained much faster acceptance there.

VIVA LA RESOLUTION

Can you read this? Sure you can. Now move this page 10 feet away. The text is still high resolution at 300 dpi or more, only now the print is too small and too far away to be readable. So there is a practical upper limit to resolution and how much is actually discernible and worthwhile. Do we need the increased detail of HD? Yes! But how much resolution is ideal, and how much resolution is enough?

FIGURE 4.54

The short viewing distance in stores helps sell the superior resolution of HDTV. Viewers at a more typical viewing distance at home can usually perceive little improvement in definition until they buy a bigger TV or move closer to the screen!

The camera sensor in terms of resolution may be approaching its practical limit. Our primary viewing areas, in front of a computer, mobile device, or home TV, aren’t getting larger, nor are the screen dimensions at the local multiplex. Fundamentally, there is only so much resolution that we can discern on small screens and mobile devices.

Most shooters will acknowledge the need for a 4K-resolution camera sensor for digital cinema applications. Although an 8K-resolution sensor may be required to produce a 4K-resolution image free of artifacts, shooters and manufacturers seem inclined finally to halt the current madness and not go much beyond 4K resolution for the original image capture. In the future, we will likely turn to increased bit-depth or a higher frame rate for enhancement of our images.

FIGURE 4.55

For most applications, including digital cinema, this 4K model may represent the practical upper limit for camera resolution.

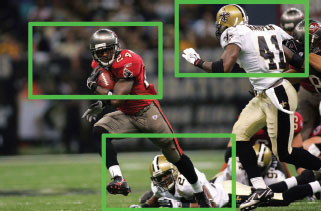

FIGURE 4.56

Intraframe switching? For broadcast coverage of sports, a 4K camera provides the ability to extract multiple 1920 × 1080 frames.
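How much room does a 4K frame leave for extraction? The sketch below assumes "4K" here means a 3840 × 2160 raster (a 4096 × 2160 frame gives the same tile count) and counts both the non-overlapping HD tiles and the possible positions for a single HD crop window. Function names are hypothetical.

```python
def non_overlapping_tiles(src_w, src_h, tile_w=1920, tile_h=1080):
    """Number of full HD tiles that fit side by side without overlap."""
    return (src_w // tile_w) * (src_h // tile_h)

def crop_positions(src_w, src_h, tile_w=1920, tile_h=1080):
    """Number of distinct top-left pixel positions for one crop window."""
    return (src_w - tile_w + 1) * (src_h - tile_h + 1)

print(non_overlapping_tiles(3840, 2160), "full HD tiles")
print(crop_positions(3840, 2160), "possible crop positions")
```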

We’ve all heard the endless discussions of film versus video looks. A video sensor resolves focus and edge contrast differently than does film. Film allows a smooth transition from in-focus to out-of-focus objects due to the randomness of the film grain and the more complete coverage that allows. By comparison, the regular pixel pattern of a sensor is interspersed with gaps, so the transition between sharp and not sharp is abrupt and images look more video-like without a shooter’s thoughtful intervention.

FIGURE 4.57

Camera sensor pixel arrangement in rows.

FIGURE 4.58

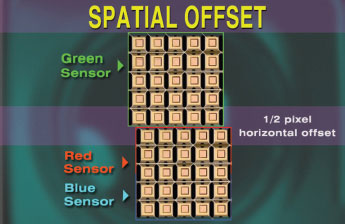

In a three-chip camera the green sensor is moved one-half pixel out of alignment relative to the blue and red sensors. The offset is intended to capture detail that would otherwise fall inside the grid, that is, between the regularly spaced pixels.

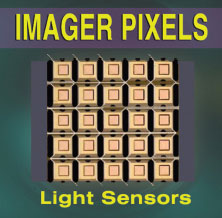

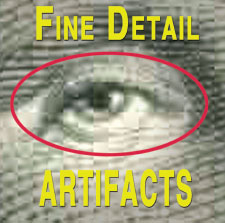

Engineers have long recognized the shortcomings of a discontinuous grid pattern. Although pixels arranged in rows ensure low-cost manufacturing, the regular arrangement thwarts the sampling of detail falling between the rows. This relative inability to capture high-frequency detail may produce a bevy of objectionable artifacts, including a pronounced, chiseled effect through sharply defined vertical lines.

To suppress such defects in single sensor cameras, engineers employ an optical low pass diffusion filter. The slight blurring attenuates the fine detail in high-resolution scenes, which has the counterintuitive effect of increasing apparent sharpness and contrast.
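A one-dimensional toy model suggests why blurring before sampling can help. This is only a sketch under loud assumptions: the "sensor" point-samples the scene at a fixed pitch (real pixels integrate light over an area), and the "filter" is a crude two-sample average standing in for the optical low-pass filter.

```python
def box_blur(signal, width):
    """Average each sample with the width-1 samples after it (wraps around)."""
    n = len(signal)
    return [sum(signal[(i + k) % n] for k in range(width)) / width
            for i in range(n)]

def sample(signal, pitch):
    """Point-sample the scene at every pitch-th position."""
    return signal[::pitch]

# Fine stripes alternating dark (0) and light (1) at every position.
stripes = [i % 2 for i in range(24)]

aliased = sample(stripes, 3)                # no filter in front of the grid
filtered = sample(box_blur(stripes, 2), 3)  # blurred before sampling
print("unfiltered:", aliased)
print("filtered:  ", filtered)
```

Without the blur, the grid reports coarse stripes that do not exist in the scene, a false pattern of the kind described above. With the blur, the detail too fine for the grid to resolve settles into an even gray.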

FIGURE 4.59

In scenes that contain mostly green tones (as well as in scenes that contain no green at all), a large portion of the image is not offset, increasing the likelihood of artifacts appearing in the finer details.

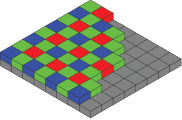

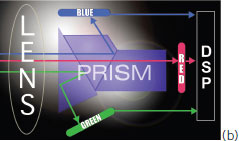

The three-chip camera utilizes a beam splitter or prism behind the lens to divert the green, red, and blue (RGB) image components to the respective sensors. The result is three discrete color channels that can be precisely tweaked according to the taste and vigor of the camera manufacturer, the compression engineer, or the savvy video shooter.

FIGURE 4.60

FIGURE 4.61

Dominating the professional broadcast world for years, three-chip cameras employ a prism behind the lens to achieve color separation. The strategy allows for maximum control of the individual RGB channels at the price of increased complexity.

FIGURE 4.62

Single sensor cameras do not utilize spatial offset. An optical low-pass diffusion filter (OLPF) is used instead to suppress high-frequency artifacts. Many DSLRs do not use an OLPF and are therefore subject to severe image defects in areas of fine detail.

FIGURE 4.63

The traditional single sensor camera utilizes an overlying filter called a Bayer mask to achieve the red–green–blue separation.

FIGURE 4.64

Single sensor video cameras and some DSLRs utilize an OLPF to reduce high-frequency artifacts. Use caution when removing dust from the OLPF. A 10X loupe and hurricane air bulb may facilitate the job. Never use Dust Off—the propellant will ruin the filter’s delicate crystal surface!

The three-sensor approach has notable shortcomings. Due to differences in energy levels, the red, green, and blue color components navigate the dense prism glass at different speeds. The camera’s processor then must compensate and recombine the component colors as if they had never been split—a task of considerable complexity. Single-chip models without the prism are simpler and cheaper to manufacture, but require an overlying Bayer mask 22 or other technology to achieve the necessary RGB color separation.

We saw how more samples per second produce a smoother, more accurate representation of the analog curve that defines our perceived experience on Earth. Of course, if we had our druthers, we’d shoot and record all programs with as many bits as possible, and why not? An uncompressed recording with all its samples intact obviates the need for guesswork during playback, thus averting the defects and picture anomalies that inevitably arise when attempting to reconstruct the abbreviated frames.

We often work with uncompressed audio, as most modern cameras can record two to eight channels of 48-kHz 16-bit PCM. 23 Uncompressed audio may be identified by its file extension. If you’re on a PC you’re most likely working with .wav files; if you’re on a Mac you’re probably handling .aif files. There isn’t much practical difference. It’s mostly a matter of how the samples are arranged and described in the data stream.

Bear in mind that uncompressed audio (or video) does not necessarily mean a superior recording. At a low sample rate and shallow bit-depth, a telephone recording may well be uncompressed, but it can hardly be considered good audio.

CHART: UNCOMPRESSED AUDIO SAMPLE RATES

Telephone = < 11,025 samples per second

Web (typical)/AM Radio = 22,050 samples per second

FM Radio/ some HDV/DV audio = 32,000 samples per second

CD Audio = 44,100 samples per second

ProVideo/DV/DVD = 48,000 samples per second

DVD-Audio/SACD/feature film production = 96,000 samples per second
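The storage cost of these sample rates follows directly from the arithmetic: samples per second, times bytes per sample, times channels. The sketch below assumes 16-bit stereo for every row, which is an illustration choice and not true of all the formats listed; the function name is hypothetical.

```python
def pcm_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size in bytes of an uncompressed PCM recording."""
    return sample_rate_hz * (bit_depth // 8) * channels * seconds

# Assumed 16-bit stereo throughout, one minute of audio per row.
for label, rate in (("AM Radio", 22_050), ("CD Audio", 44_100),
                    ("ProVideo", 48_000), ("DVD-Audio", 96_000)):
    megabytes = pcm_bytes(rate, 16, 2, 60) / 1e6
    print(f"{label}: {megabytes:.2f} MB per minute")
```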

Let’s say that in a moment of unexplained weakness, we want to release the 92-minute classic Romy & Michele’s High School Reunion (1997),24 uncompressed on single-sided single-layer DVDs. The capacity of a disc is 4.7GB. The uncompressed stereo audio track 25 would fill about one fourth of the disc, approximately 1.1GB. It’s a big file but doable. Fine.

Now let’s look at the big kahuna. Uncompressed standard definition video requires 270 Mbps. At that rate given the movie’s run time of 5,520 seconds, we could fit less than 135 seconds of the glorious work on one DVD. So for the entire movie, we’re talking about a boxed set of approximately 41 discs for the standard-definition release!

Venturing further into the macabre, let’s consider the same title transferred to DVD as uncompressed HD. At the staggering rate of 1,600 Mbps, each disc would run a mere 22 seconds, and the size of the collector’s boxed set would swell to a whopping 253 discs! Loading and unloading that many discs every half a minute is tantamount to serious calisthenics. Clearly it makes sense to reduce the movie’s file size to something more manageable and marketable.
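The boxed-set arithmetic can be reproduced as a back-of-envelope sketch. It assumes the full nominal 4.7GB (decimal) is usable and ignores the audio track and disc overhead, so the results fall in the same neighborhood as the figures above without matching them exactly; disc counts shift with how much of the nominal capacity is treated as usable. Names are hypothetical.

```python
import math

DVD_BYTES = 4.7e9  # assumption: nominal capacity in decimal gigabytes

def seconds_per_disc(bitrate_mbps, disc_bytes=DVD_BYTES):
    """Running time that fits on one disc at a constant bit rate."""
    return disc_bytes * 8 / (bitrate_mbps * 1e6)

def discs_needed(runtime_s, bitrate_mbps, disc_bytes=DVD_BYTES):
    """Whole discs required for the full running time."""
    return math.ceil(runtime_s * bitrate_mbps * 1e6 / 8 / disc_bytes)

RUNTIME_S = 92 * 60  # the 92-minute feature
for label, mbps in (("uncompressed SD", 270), ("uncompressed HD", 1600)):
    print(f"{label}: {seconds_per_disc(mbps):.0f} s per disc, "
          f"{discs_needed(RUNTIME_S, mbps)} discs")
```

Whichever capacity figure is used, the conclusion stands: dozens of discs for standard definition and hundreds for HD.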

What do compressors want? What are they looking for? The goal of every compressor is to reduce the movie file size in such a way that the viewer is unaware that some samples (indeed most samples, about 98% for a DVD!) have been deleted.

Compressors work by identifying redundancy in the media file. A veteran shooter may recall holding up a strip of film to check for a scratch or to read an edge number. He may have noticed that in a given section, one frame seemed hardly different from the next. In other words, there seemed to be redundancy from frame to frame.

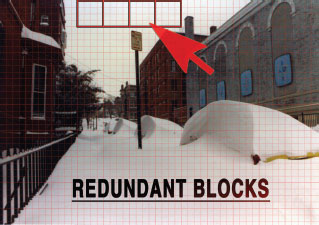

Video engineers also recognized that redundancy occurred within the frame. Consider a winter landscape in New England, a group of kids playing in the snow under a slate gray sky. An HD camcorder framing the scene divides the idyllic tableau into blocks as small as 4 × 4 pixels, the camera compressor evaluating each block across the monochromatic sky and sensing little difference from block to block.

So the data and bits from the redundant blocks are deleted, and a message is placed in a descriptor file instructing the playback device when reconstructing the frame to repeat the information from the first block in subsequent blocks. To the shooter, this gimmickry is dismaying because the sky is not in fact uniform, but actually contains subtle variations in color and texture. So I’m wondering as a shooter with a modicum of integrity: Hey! What happened to my detail?
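The block-and-repeat idea can be mimicked with a toy encoder. To be clear about the assumptions: real codecs rely on transforms and prediction, not this simple comparison of block averages, and the tolerance value and function names are hypothetical. The sketch only illustrates how a subtly varying sky can be flattened.

```python
def split_blocks(frame, size=4):
    """Cut a frame (list of rows) into size x size blocks, left to right."""
    blocks = []
    for top in range(0, len(frame), size):
        for left in range(0, len(frame[0]), size):
            blocks.append([row[left:left + size]
                           for row in frame[top:top + size]])
    return blocks

def mean(block):
    values = [v for row in block for v in row]
    return sum(values) / len(values)

def encode(frame, tolerance=2.0, size=4):
    """Keep a block's data only if it differs enough from the last kept block;
    otherwise leave a 'repeat' instruction for the player."""
    encoded, last_kept = [], None
    for block in split_blocks(frame, size):
        if last_kept is not None and abs(mean(block) - mean(last_kept)) <= tolerance:
            encoded.append(("repeat", None))
        else:
            encoded.append(("data", block))
            last_kept = block
    return encoded

# An 8 x 8 patch of sky: the right half is one level brighter than the left.
sky = [[128 + (x // 4) for x in range(8)] for y in range(8)]
result = encode(sky)
print([kind for kind, _ in result])
```

Only the first block survives as data. The one-level difference in the right half of the sky is exactly the kind of detail that goes missing.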

FIGURE 4.65

In a strip of film, one frame appears hardly different from the next or previous frames. Compression schemes take advantage of this apparent redundancy to reduce file size.

FIGURE 4.66

The HD compressor divides the frame into blocks as small as 4 × 4 pixels. The blocks in the sky are deemed “redundant”; their pixel data is replaced with a mathematical instruction to the player to repeat the values from a previous block when reconstructing the frame.

FIGURE 4.67

Compression can be ruthless as samples inside a block are subject to quantization, a profiling process by which pixels of roughly the same value are rounded off, declared “redundant,” and then discarded. Such shenanigans can lead to the loss of detail and artifacts when playing back a compressed file. (Images courtesy of JVC.)
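Quantization in its simplest form is just rounding to a coarser scale. The sketch below is an illustration only: the step size and pixel values are hypothetical, and real encoders quantize transformed coefficients, not raw pixel values.

```python
def quantize(values, step):
    """Round each integer value to the nearest multiple of step."""
    return [(v + step // 2) // step * step for v in values]

pixels = [101, 102, 103, 105, 107]  # five subtly different shades
print(quantize(pixels, 8))          # coarse step: the shades collapse
print(quantize(pixels, 2))          # finer step: most variation survives
```

Once neighboring values round to the same number, the encoder can treat them as redundant, and the original shading cannot be recovered on playback.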

YOU DON’T MISS WHAT YOU CAN’T SEE

The imperative to reduce file size leads engineers into the realms of human physiology and perception. The eye contains a collection of rods and cones. The rods measure the brightness or luminance of objects; the cones measure the color or chrominance. We have twice as many rods as cones, so when we enter a dark room, we can discern the outline of the intruder looming with a knife but not the color of dress she is wearing.

Engineers understand that humans are unable to discern fine detail in dim light. Because we can’t see the detail anyway, they say, we won’t notice or care if the detail is discarded. Thus, the engineers look to the shadows primarily to achieve their compression goals, which is why deep, impenetrable blacks are such a problem for shooters working in highly compressed formats such as HDV.

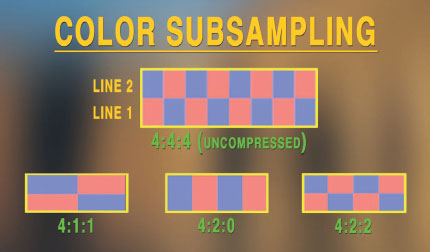

When considering the notion of color space, engineers mimic the ratio of rods and cones in the human eye. Accordingly, your camera shooting 4:2:2 26 samples a pixel’s luminance (“4”) at twice the rate of its chrominance as measured in the blue and red channels. The 4:2:0 color space used in formats such as AVCHD and XDCAM further suppresses the red and the blue sampling (because you’ll never notice, right?) in order to achieve a greater reduction in file size.
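The savings can be counted directly. The sketch below follows the common reading of the J:a:b notation, taken here as an assumption: in a region J pixels wide and two rows high, a is the number of chroma samples in the first row and b the number in the second, with two chroma channels. Function names are hypothetical.

```python
def samples_per_region(j, a, b):
    """Total samples in a region j pixels wide and 2 rows high:
    luma at every pixel plus two chroma channels of (a + b) samples each."""
    luma = 2 * j
    chroma = 2 * (a + b)
    return luma + chroma

def relative_size(scheme):
    """Data volume relative to full 4:4:4 sampling, before any compression."""
    return samples_per_region(*scheme) / samples_per_region(4, 4, 4)

for scheme in ((4, 4, 4), (4, 2, 2), (4, 2, 0)):
    print(":".join(map(str, scheme)), f"{relative_size(scheme):.0%}")
```

Before the compressor has done anything at all, 4:2:2 has already shed a third of the data and 4:2:0 half of it.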

FIGURE 4.68

Owing to the eye’s relative insensitivity to red and blue we need not sample for most applications at full-resolution RGB. Although the luminance bearing the green component is invariably sampled at full resolution because the eye is most sensitive to mid-spectrum wavelengths, the blue and red channels are sampled at only one-half resolution, that is, 4:2:2. When recording 4:2:0, the red and blue channels are alternately sampled at 50%, skipping every other vertical line.

FIGURE 4.69

In a dark room, the color information in the dress is difficult to perceive. It is therefore deemed “irrelevant” and may be discarded. Or so the thinking goes.

INTERFRAME VERSUS INTRAFRAME COMPRESSION

In formats like DV, DVCPROHD, and AVC-Intra, compression is applied intraframe only, that is, within the frame. This makes sense since the capturing of discrete frames is consistent with the downstream workflow that requires the editing, processing, and pretty much everything else including color correction, at a frame level.

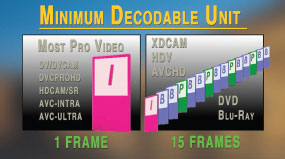

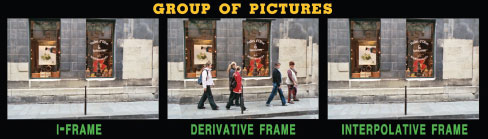

To further reduce file size and storage requirements, AVCHD, HDV, and DVD-Video utilize interframe as well as intraframe compression. In these more compressed formats, the minimum decodable unit is not the frame (as in film and most professional formats) but the group of pictures (GOP), composed usually of 15 frames reduced by hook and by crook to a single reference intra or I-frame. Within the GOP, the fields, frames, and fragments of frames deemed redundant or irrelevant are discarded, and no data is written. Instead, a mathematical message is left in the GOP header instructing the player how to resurrect the missing frames or bits of frames based on embedded cues.

Bidirectional and predictive frames enable long-GOP formats to anticipate and complete action inside the group of pictures. This scheme of I, B, and P frames seeks to identify and fill in events by reference: the P-frame is predicted from the preceding I- or P-frame, while the B-frame looks both forward and back to track changes inside the GOP interval.
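The layout of a 15-frame GOP can be sketched as a string of frame types. The spacing of one P-frame every third frame is a common arrangement but an assumption here; the text does not specify it, and encoders differ. The function name is hypothetical, and frames are shown in display order.

```python
def gop_pattern(length=15, anchor_spacing=3):
    """Frame types for one GOP in display order: an I-frame first, a P-frame
    at every anchor_spacing-th position, B-frames in between."""
    frames = []
    for i in range(length):
        if i == 0:
            frames.append("I")
        elif i % anchor_spacing == 0:
            frames.append("P")
        else:
            frames.append("B")
    return "".join(frames)

pattern = gop_pattern()
print(pattern)
print({kind: pattern.count(kind) for kind in "IPB"})
print(f"One complete frame every {15 / 29.97:.2f} seconds at 29.97 FPS")
```

Only one frame in the group is stored whole; the other fourteen exist only as instructions for rebuilding them.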

H.264, also known as AVC (Advanced Video Coding27), utilizes a system of bidirectional prediction that looks ahead or back over an entire video stream, substantially increasing the processing and encoding times. H.264 may be implemented as either long GOP, as in AVCHD cameras, or intraframe, as found notably in Panasonic AVC-Intra models.

FIGURE 4.70

Although the frame is the minimum decodable unit for most pro video, the GOP is the basic unit for HDV, AVCHD, and XDCAM cameras that utilize interframe compression. A GOP size of 15 frames is typical—retaining only one complete frame about every half second!

FIGURE 4.71

As pedestrians enter and leave the GOP, the background building is revealed and must be interpolated. Clues recorded during encoding enable a player to reconstruct the missing portions of frames by referencing the initial I-frame—the only complete frame in a GOP. AVCHD cameras utilize long-GOP H.264 to achieve a dramatic reduction in file size.

FIGURE 4.72

A computer’s minimum decodable unit is the byte, the 8-bit sequence that defines each stroke or key on a 256-character keyboard. In pro video, the minimum decodable unit is usually the frame. In formats such as XDCAM HD/EX, HDV, and DVD-Video, the minimum unit is the GOP. Most NLEs must decompress the long-GOP formats in real time to enable editing at the frame level. A fast computer and an intermediate format such as Apple ProRes or Avid DNxHD are normally used to facilitate this.

FIGURE 4.73

In low light, owing to the uneven compression, some cameras attenuate green wavelengths more than red, so shadow areas acquire a warm cast. Hue shifts are more likely to occur in Caucasian skin due to the predominant red tones.

FIGURE 4.74

Compression anomalies like macroblocking are more noticeable in the shadows so the shooter should look there first for trouble. Cameras displaying such artifacts, especially in the facial shadows, should be rejected.

Beyond the requirements of a network or client, the choice of frame rate is a function of the story. For news or sports captured at 30 or 60 FPS, the higher frame rate reflects the feeling of immediacy in a world of breaking stories, city council meetings, and endless police chases. The 30-FPS look is ideal for reality TV, corporate programs, and documentaries, when a clean, contemporary feel is desired. 28 Thirty frames per second allow easy down-conversion from HD to NTSC 29 and the Web, where video streaming at 15 FPS is the norm.

For dramatic applications, 24p offers the shooter a more filmic look rooted firmly in the past tense. Reflected in the actors’ slightly truncated movements, 24p communicates the sense of the classic storyteller seated by a campfire captivating his audience. In a show’s first images the 24p flicker across a screen says, “Oh, let me tell you a story …”

Beyond the feel and story implications of 24p, the frame rate provides ideal compatibility with display platforms such as DVD and Blu-ray. Given the near-universal support for 24p in postproduction, the shooter-storyteller can capture, edit, and output 24p without incurring the wrath of NTSC artifacts or encoder snafus stemming from the 2:3 pulldown30 inserted to produce a 29.97 FPS video stream.

WHY THE GOOFY FRAME RATES—OR WHY 24P ISN’T 24P?

In video as in much of the modern world there seems to be a paucity of honesty. So when we say 30p, we really mean 29.97 progressive frames per second; when we say 24p, we usually mean 23.976 progressive frames per second; and when we say 60i, we really mean 59.94 interlaced fields per second. This odd frame rate business is the legacy of 1950s’ NTSC, which sadly instituted a 29.97 frame rate and not a more cogent and logical 30.00 FPS.
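The rounded figures all come from one ratio: each nominal rate is slowed by a factor of 1000/1001. A short sketch using exact fractions shows where the decimals originate; the constant and function names are hypothetical.

```python
from fractions import Fraction

NTSC_FACTOR = Fraction(1000, 1001)  # the slight slowdown applied to every rate

def ntsc_rate(nominal):
    """Exact NTSC-family rate for a nominal rate such as 24, 30, or 60."""
    return nominal * NTSC_FACTOR

for nominal, label in ((30, "30p"), (24, "24p"), (60, "60i")):
    exact = ntsc_rate(nominal)
    print(f"{label}: {exact} = {float(exact):.5f} per second")
```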

You may recall how we faded a light up and down for one second and we all shared and marveled at the seeming analog experience. We understood our eye actually witnessed the event as a series of 15 (or so) snapshots per second, but the signal processor in our brains applied error correction, interpolated the “missing” frames, and produced the perception of smooth, continuous analog motion.

The error correction we apply is evident in a child’s flipbook. Flip through the book too slowly and we see the individual snapshots. But flip through a bit faster and our brains are suddenly able to fuse the motion by fabricating the missing samples.

In the early days of cinema in the nickelodeons of 1900, silent films were normally projected at 16 to 18 FPS, which is above the threshold for most of us to see continuous motion but is not quite fast enough to eliminate the flicker, a residual perception of the individual frame samples. Audiences in 1900 found the flicker so distracting that moviegoers more than a century later still refer to this past trauma as going to see a flick.

Seeking a solution engineers could have simply increased the frame rate of the camera and projector and thereby delivered more samples per second to the viewer. But this would have entailed more film and greater expense. As it was, the increase in frame rate to 24 FPS did not occur until the advent of sound in 1928, when talking pictures required the higher frame rate in order to achieve satisfactory audio fidelity.

When film runs through a projector, each frame is drawn into the gate and stops. The spinning shutter opens and the frame is projected onto the screen. The shutter continues around and closes again, and a new frame is pulled down. The process is repeated in rapid succession, which our brains fuse into a continuous motion.

To reduce flicker, engineers doubled the rotational speed of the shutter so that each frame was projected twice. This meant that viewers at 16 FPS actually experienced the visual story at 32 samples per second; compare this to the viewers of today’s 24 FPS movies who see 48 samples per second projected at the local multiplex.

The imperative to reduce flicker continued into the television era. Given the 60-Hz mains frequency in North America and the need for studios, cameras, and television receivers to all synchronize to it, it would have been logical to adopt a 60 FPS standard, except, that is, for the need to sample every frame twice to reduce flicker. 31

So engineers divided the frame into odd and even fields producing an interlaced pattern of 60 fields or the equivalent of 30 frames per second. Fine. Thirty frames per second is a nice round number. But then what happened?

With the advent of color in the 1950s, engineers were desperate to maintain compatibility with the exploding number of black-and-white TVs entering the market. The interweaving of color information in the black-and-white signal produced the desired compatibility but with an undesirable consequence: Engineers noted a disturbing audio interference with the color subcarrier, which they deduced could be remediated by the slight slowing of the video to 29.97 FPS.

This seemingly innocuous adjustment is today the ongoing cause of much angst and woe. When things go wrong as they tend to—bad synchronization, dropped frames, an inability to input a file into the DVD encoder—we always first suspect a frame rate or a time code snafu related to the 29.97/30 FPS quagmire. Even as we embrace HD, the need persists to down-convert programs to NTSC or PAL for broadcast or DVD. Thus, we continue to struggle mightily with the legacy of NTSC’s goofy frame rate, particularly as it pertains to timecode. I discuss the NTSC’s dueling timecode systems in Chapter 8.

So herein lies the rub: Given NTSC’s 29.97 FPS standard, it makes sense then to shoot 24p at 23.976 FPS, which keeps the six-frame boost to standard definition straightforward and avoids the mathematical convolutions that degrade image quality. Alas, NTSC’s odd frame goofiness lives on!

For shooters with aspirations in feature films and dramatic productions the advent of 24p was a significant milestone with improved resolution and a more cinematic look. Before 2002, cost-conscious filmmakers engaged in various skullduggery like shooting 25 FPS PAL 32 to achieve the desired progressive look and feel. That changed with the introduction of the Panasonic DVX100 that offered 24p for the first time in a camcorder recording to DV tape.

SHOOTING 24P FOR DVD AND BLU-RAY

FIGURE 4.75

Every DVD and Blu-ray player is inherently a 24p device. This is a compelling reason for many shooters to originate in 24p.

Since DVD’s introduction in 1996, movie studios originating on film have encoded their movies at 24 FPS for native playback on a DVD player. Relying on the player to perform the required conversion to standard definition at 29.97 FPS, the shooter can pursue an all-24p workflow and avert NTSC’s most serious shortcomings, while also reducing the program file size by 20%, which is not a small amount in an era when producers are jamming everything and the kitchen sink on a DVD or Blu-ray disc.

During the last decade, camera manufacturers have devised various schemes to record 24 FPS. The Panasonic DVX100 recording to tape offered two 24p recording modes, which continue to be relevant in solid-state P2 and AVCCAM models. In standard mode, images are captured progressively at 24 frames per second (actually 23.976) then converted to 60 interlaced fields (actually 59.94) using a conventional 2:3 pulldown. After recording to tape or flash memory and capturing into the NLE, 33 the editing process proceeds on a traditional 60i timeline.
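The standard-mode cadence can be sketched in a few lines of Python. This is a minimal illustration, not any camera's actual processing: the function name `pulldown_2_3` and the frame labels A through D are hypothetical, and the sketch ignores field order (upper versus lower).

```python
def pulldown_2_3(frames):
    """Spread progressive frames across interlaced fields in a 2:3 cadence.

    Frames are expected in groups of four; each group yields five
    interlaced video frames (ten fields).
    """
    fields = []
    for index, frame in enumerate(frames):
        # Alternate between holding a frame for two fields and for three.
        copies = 2 if index % 2 == 0 else 3
        fields.extend([frame] * copies)
    # Pair consecutive fields into interlaced video frames.
    return [tuple(fields[i:i + 2]) for i in range(0, len(fields), 2)]


video = pulldown_2_3("ABCD")
print(video)
# [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'D'), ('D', 'D')]
```

Note that two of the five resulting video frames mix fields from different source frames. Those mixed frames are why standard-mode footage is simply treated as 60i in the edit.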

In 24pA (advanced) mode, the 24-frame progressive workflow is maintained from image capture through output to DVD, Blu-ray, or hard drive. The camera scans progressively at 24 frames per second, but the 60i conversion to tape or flash memory is handled differently. In this case, the 24p cadence is restored in the NLE upon ingestion by removing the extra frames inserted temporarily during recording to facilitate capture and monitoring at 60i.

For shooters reviewing takes with their directors, it is important to note that playback of 24pA footage displays a pronounced stutter due to the temporarily inserted frames. This is completely normal and is not to be construed as just another cameraman screw-up!
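The advanced-mode idea of inserting a disposable frame and then discarding it on ingest can be sketched as follows. The 2:3:3:2 cadence used here is the pattern commonly associated with 24pA and is an assumption on my part, since the text above does not name it; the function names and frame labels are likewise hypothetical, and field order is ignored.

```python
# Assumption: advanced mode uses a 2:3:3:2 field cadence.
ADVANCED_CADENCE = (2, 3, 3, 2)


def pulldown_2_3_3_2(frames):
    """Spread progressive frames across fields using the 2:3:3:2 cadence."""
    fields = []
    for index, frame in enumerate(frames):
        fields.extend([frame] * ADVANCED_CADENCE[index % 4])
    # Pair consecutive fields into interlaced video frames.
    return [tuple(fields[i:i + 2]) for i in range(0, len(fields), 2)]


def remove_pulldown(video_frames):
    """Discard any video frame whose two fields come from different sources."""
    return [first for first, second in video_frames if first == second]


recorded = pulldown_2_3_3_2("ABCD")
print(recorded)
# [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'C'), ('D', 'D')]

restored = remove_pulldown(recorded)
print(restored)
# ['A', 'B', 'C', 'D']
```

In this sketch only one video frame in five is mixed, so dropping it whole recovers the original four progressive frames. Played back without removal, that extra frame is what produces the visible stutter.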

FIGURE 4.76

Every manufacturer handles 24p differently. Canon’s inscrutable “24F” format derives a progressive scan from an interlaced imager, a complex task! Some Sony 24p cameras output “24PsF,” composed of two segmented fields per frame, both drawn from the same progressive image. Although 24p playback with pulldown appears smoother, the 24PsF video may seem sharper.

FIGURE 4.77

In 24p standard mode, progressive images are captured at 23.976 FPS and then are converted to 29.97 FPS (60i) by adding 2:3 pull-down. The process merges every second or third field and contributes to 24p’s slightly stuttered film look. Footage recorded in 24p standard mode is treated like any other 60i asset in the NLE timeline. Images captured in advanced mode are also scanned progressively at a nominal 24 FPS, but in this case, entire frames are inserted to make up the 29.97 time differential; these extra frames are then subsequently removed during ingestion into the NLE. In this way, the shooter can maintain a favorable 24p workflow and avert the NTSC interlacing artifacts when encoding to DVD, Blu-ray, or digital cinema.

FIGURE 4.78

Freed from the shackles of videotape and a mechanical transport, solid-state camcorders are able to record individual frames just like a film camera. Recording only the desired “native” frames reduces by up to 60% the required storage when recording 24p to flash memory.
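The savings figures quoted in this section follow from simple frame counting. The sketch below assumes every frame costs the same number of bits and that the alternative is a stream padded out to 30 or 60 frames per second; the helper name `frames_saved` is hypothetical.

```python
def frames_saved(native_fps: int, container_fps: int) -> float:
    """Return the fraction of frames avoided by recording only native frames.

    Assumption: every frame occupies the same storage, so the fraction of
    frames saved equals the fraction of storage saved.
    """
    return 1 - native_fps / container_fps


# 24p carried natively instead of in a 30-frame stream (the DVD case).
print(f"{frames_saved(24, 30):.0%}")

# 24p recorded natively instead of in a 60-frame stream.
print(f"{frames_saved(24, 60):.0%}")
```

Under those assumptions the first case works out to the 20% cited for an all-24p DVD, and the second to the 60% ceiling cited for native recording to flash memory.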

It doesn’t make you a bad person. Maybe HD just isn’t your thing. Or maybe you’re leery of HDV’s high compression, the 6 times greater bandwidth, or AVCHD’s long-GOP construction that can instill convulsions in the NLE timeline. Maybe the tapeless workflow seems too daunting, or you’re shooting a cop show late at night and you need the low-light capability that only your old SD camcorder can deliver—about two stops better sensitivity on average than a comparable-class HD or HDV model.

FIGURE 4.79

Standard definition still rules the roost in many parts of the world. The adoption of HD for broadcast in countries in Africa is a decade away—at least.

Until recently, when our stories originated invariably on videotape, we felt a comfort in the tangible nature of the medium. We knew tape well, even with its foibles, and had a mature relationship with it, like a 30-year marriage. At the end of the day, we might have griped and sniped at our long-term partner, but then it was off to bed after supper and television and all was well with the world.

FIGURE 4.80 a,b,c

We could see it, feel it, watch the innards turning. The mechanical world of videotape was comforting, until, uh—it wasn’t.

FIGURE 4.80d

I think we’ve had quite enough of you, thank you.

FIGURE 4.81

Who has the tapes? Production folks nervously pose this question after every shooting day. With tapeless cameras, the manner of handling and safeguarding original camera footage is critical.

In an era of blue-laser recording and flash memory, the dragging of a strip of acetate and cobalt grains across an electromagnet seems crude and fraught with peril. Condensation can form and shut a camera down. A roller or a pincher arm can go out of alignment and cause the tape to tear or crease, or produce a spate of tracking and recording anomalies. And there is the dust and debris that can migrate from the edges onto the tape surface and produce ruinous dropouts.

Today’s tape shooters no longer face quite the same perils. Although clogged heads and the occasional mechanical snafu still affect some recordings, the frequent dropouts from edge debris are no longer a serious risk if we use master-quality media. 34

TALE OF DV TAPES

Apart from the physical cassette and robustness of the media, there is no quality difference among DV, DVCAM, and DVCPRO formats. It is not advisable to mix brands of tape as the lubricants used by different manufacturers may interact and cause head clogs or corrosion leading to dropouts. Be loyal to a single brand of tape if you can!

FIGURE 4.82

We should always exercise care when handling videotape. Carelessly tossing loose tapes into the bottom of a filthy knapsack is asking for trouble. Shooting in unclean locations such as a factory or along a beach amid blowing sand can also be conducive to dropouts as grit can penetrate the storage box, the cassette shell, and the tape transport itself.

Dirt and other contaminants can contribute substantially to tape deterioration over time. The short shelf life of MiniDV tapes (as little as 18 months) should be of concern to producers who routinely delete original camera files from their drives, and rely solely on the integrity of the camera tapes for backup and archiving.

FIGURE 4.83

No swallowed tapes in this puppy! And this camcorder has a 5-year warranty!

FIGURE 4.84

EDUCATOR’S CORNER: REVIEW TOPICS

1. Discuss the relative merits of shooting progressive versus interlaced. For what applications might shooting 50i or 60i be appropriate? Live TV? Broadcast?

2. Consider the factors that have an impact on perceived resolution. Is greater resolution always better? Under what conditions might less resolution be desirable?

3. How does camera sensor size impact workflow and the images you present to the world? Explore the notion of more or less depth of field. Consider the aesthetic and practical aspects of shooting with a narrow focus.

4. Explain the two (2) major ways to improve digital recordings. Why does more compression increase the risk of noise and objectionable artifacts?

5. Explain 4:2:2 and 4:2:0 color sampling. How does a 10- or 12-bit workflow substantially improve the quality of images?

6. Consider your next camera. Identify eight (8) features that are important to you in terms of performance and operation.

7. Salespeople have been saying this for years: Digital is better than analog. Do you agree?

8. Discuss the advantages, if any, of long-GOP formats like XDCAM EX and HDV compared to intraframe-only compressed formats like ProRes and AVC-Intra. Why do camera makers continue to promote 8-bit formats when 10-bit recording systems are superior in performance and workflow?

9. Why is 24p not 24 FPS? Why is 30p not 30 FPS? Why is 60p not 60 FPS? What’s going on? Explain this calmly without histrionics or vitriol.

10. Please list ten camera and/or postproduction codecs with which you are familiar. Indicate the associated bit rates for each. Are the formats long GOP or I-frame only? Consider the rudiments of compression. What do encoders look for when determining what pixel information to discard?

11. Compression is bad. Do you agree?

12. Finally, is the world an analog mess or a digital mess? Cite three (3) examples to support each perspective.

1 Kubrick, S. (Producer & Director), Lyndon, V. (Producer), & Minoff, L. (Producer). (1964). Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb [Motion picture]. USA: Columbia Pictures.

2 I’m told that it’s possible to order items off the menu at In-N-Out, but you need to know a secret handshake or code word or something. It’s worth investigating.

3 AVCHD utilizing H.264 compression is much more efficient than HDV (MPEG-2) at roughly the same bitrate (21–24 Mbps).

4 A progressive scan is indicated by the letter p; when referring to interlaced frame rates, we specify fields per second followed by the letter i. So 24p refers to 24 progressive frames per second whereas 60i refers to 30 interlaced frames per second.

5 The Kell Factor specifies a 30% reduction in resolution for interlaced frames due to blurring between fields compared to progressive frames.

6 Variable shutter is expressed in fractions of a second or in degrees of the shutter opening as in a traditional film camera. The default in most cameras is 180° or 1/48th of a second at 24 FPS.

7 A troglodyte is a subspecies of Morlocks created by H. G. Wells for the novel The Time Machine (London, England: Heinemann, 1895). The dim-witted folks dwelt in the underground English countryside of 802,000 AD.

8 Cowie, R. (Producer), Eick, B. (Producer), Foxe, K. J. (Producer), Hale, G. (Producer), Monello, M. (Producer), Myrick, D. (Director), & Sánchez, E. (Director). (1999). The Blair Witch Project [Motion picture]. USA: Haxan Films.

9 Although many scientists acknowledge the sample rate of the eye at 15 FPS, some engineers consider the interpolated frame rate of about 60 FPS as potentially more relevant.

10 Yeah, I know it’s more complicated than this, and we should really be talking about N- and P-type silicon, multi-electron impurities, and covalent bonding. See www.playhookey.com/semiconductors/basic_structure.html for a more in-depth discussion of semiconductor theory.

11 Charge-Coupled Devices (CCDs) are fundamentally analog devices. Complementary Metal-Oxide Semiconductor (CMOS) sensors perform the analog-to-digital conversion on the imager surface itself.

12 The PXL THIS festival has been held each year in Venice CA since 1991.

13 See Chapter 8 for discussion of FireWire, Serial Digital Interface (SDI), and High Definition Multimedia Interface (HDMI).

14 Neutral density filter used for exposure and depth of field control. See Chapter 9.

15 A CCD sensor moves an electrical charge to an area where the charge can be processed and converted into a digital value.

16 Back to the future! One can argue that film is the only true digital technology. A grain of silver is either exposed or not exposed, similar to a bit that is either a conductor or not a conductor, a 0 or a 1. The binary nature of film emulsion seems as digital as digital can be!

17 Many Panasonic cameras now feature MOS type imagers designed to suppress the uneven color and brightness of conventional CMOS sensors. CCD imagers do not exhibit such variations.

18 See the discussion of Compression fundamentals later in this chapter.

19 See Chapter 5 for in-depth discussion of lens flare and chromatic aberration.

20 GOP refers to Group of Pictures. Read on.

21 Schubin, M. (2004, October). HDTV: High & Why. Videography. Retrieved from http://www.bluesky-web.com/high-and-why.html.

22 A Bayer mask contains a filter pattern that is 50% green, 25% red and 25% blue, mimicking the ratio of rods and cones in the human eye. It is named after its inventor, Eastman Kodak’s Bryce E. Bayer.

23 Pulse Code Modulation (PCM) is frequently listed in camera specs to denote uncompressed audio recording capability. PCM 48 kHz 16-bit stereo requires approximately 1.5 Mbps (48,000 samples × 16 bits × 2 channels = 1.536 Mbps). Many HDV and low-cost HD camcorders record compressed (MPEG, Dolby Digital, or AAC) audio. Recording compressed audio or PCM at sample rates less than 48 kHz is not advisable for video production.

24 Kemp, B. (Producer), Mark, L. (Producer), Rothschild, R. L. (Producer), Schiff, R. (Producer), & Mirkin, D. (Director). (1997). Romy and Michele’s High School Reunion [Motion picture]. USA: Touchstone Pictures.

25 PCM 48-kHz 16-bit stereo audio at approximately 1.5 Mbps.

26 The “4” in 4:2:2 indicates the full resolution luminance value of a pixel sampled four times per cycle: 4 × 13.5 million times per second in SD; 4 × 74.25 million times per second in HD.

27 A compressor/decompressor, or “codec,” is a strategy for encoding and decoding a digital stream.

28 For general broadcast, 30 frames per second provides optimal compatibility with 60 Hz facilities. In 50 Hz countries, 25 FPS is the broadcast standard for standard- and high-definition TV.

29 The National Television System Committee (NTSC) met in 1941 to establish the frame rate and resolution for broadcast television. Although a 525 line/60 field standard was adopted, one member, Philco, championed an 800-line standard at 24 FPS. NTSC is often humorously referred to as Never Twice the Same Color. It is used most notably in the United States, Canada, and Mexico.

30 The term pulldown refers to the mechanical process of “pulling” film down through a projector. A 2:3 pulldown pattern enables playback of 24p video at 30 FPS (actually 29.97 FPS) on a NTSC television. The blending of frames and fields leads to a loss of sharpness when encoding to DVD and Blu-ray.

31 In audio, this dual-sample requirement is known as Nyquist’s Law. When CD audio was being devised in the 1970s, engineers looked to a young child with unspoiled hearing. Determining a maximum perceived frequency of 22050 Hz, engineers doubled the value to avoid aliasing and established the 44100 Hz sample rate standard for CD audio.