In a sense, we’ve always shot 3D. Since the dawn of photography, the challenge to shooters has been how to best represent the 3D world in a 2D medium. Because the world we live in has depth and dimension, our filmic universe is usually expected to reflect this quality, by presenting a lifelike setting within which our screen characters can live and breathe and operate most transparently.

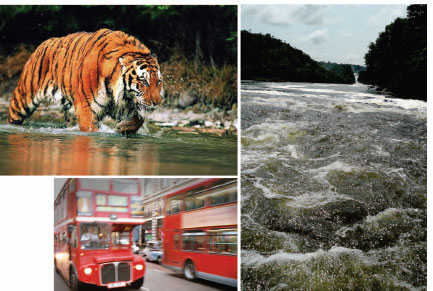

FIGURE 7.1

Knowingly or not, 2D shooters regularly integrate a range of depth cues to sell the 3D illusion. The highlights in the billiard balls and the soft focus background are suggestive of a scene that has dimension, that is real, that exists.

FIGURE 7.2

The converging lines at the horizon contribute to this scene’s strong 3D quality.

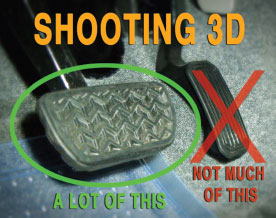

FIGURE 7.3

Shooters in 2D and 3D usually seek to maximize the texture apparent in a scene. Texture is a powerful depth cue because only objects with dimension exhibit it.

FIGURE 7.4

In 3D, the texture in the face is emphasized so care must be taken to suppress any unwanted detail. Sometimes the detail in the face is the story!

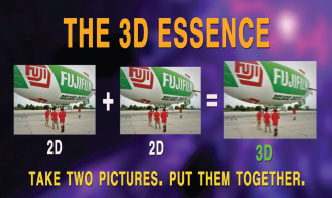

We take two pictures, one for each eye, and put them together in the same frame. Think of it as a multicamera shoot with two cameras’ images appearing simultaneously on one screen.

And therein lies the difference between a cinematographer and a stereographer. The 2D shooter is concerned foremost with the sanctity of the frame; the cinematographer uses focus, composition, and lighting to properly direct the viewer’s eye while defending the frame’s edges from elements that can detract from or contradict the intended visual story.

FIGURE 7.5

The 3D shooter places the left and right images atop each other and hopes viewers will accommodate the fusing of the two into a single stereo image.

FIGURE 7.6

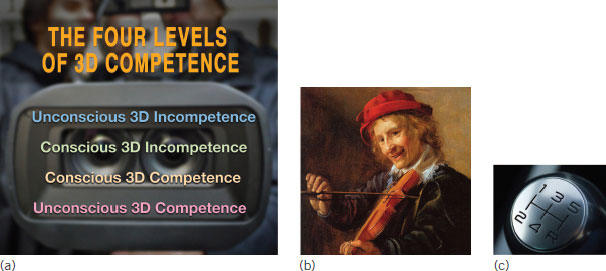

Picking up a camera for the first time, the new 3D shooter feels reassured by its familiar size and shape and proceeds to shoot unwatchable rubbish. Sobered by the experience, the shooter becomes aware of how much he or she doesn’t know. With practice, the shooter gains competence but must consciously apply the requisite skills until, after many years and 10,000 experiences, the craft becomes automatic. The same stages of learning apply to other skills, such as playing a violin or operating a standard-transmission car. (b) Violin Player (c. 1640) by Dutch Golden Age painter Jan Miense Molenaer.

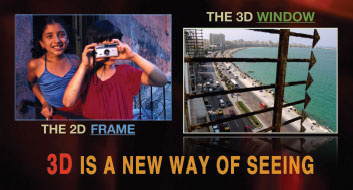

The stereographer is primarily concerned with the window. Unlike in 2D, the 3D viewer has the option of exploring objects at various planes behind the window; at the window, also known as the screen plane; or in front of the window, in what may be regarded as the viewer’s personal space.

Managing this extra dimension requires a new way of seeing and capturing the world, so for those of us accomplished in the 2D realm, it is truly disheartening to have to unlearn 90% of what we know. Forget the over-the-shoulder shots and the bread-and-butter close-ups. The 3D medium is a different beast that requires a vastly different set of skills.

Keep in mind we are not really shooting 3D; we are shooting stereo. In real life, 3D is immersive, like walking down the aisle of a 7-Eleven and being enveloped by the trail mixes and Little Debbie cakes. We are transported by the allure of the merchandise and the magic of the place that sweeps over and around us.

The format we call 3D is not nearly so immersive, but it can still create intimacy as audiences experience characters in front of the screen and feel the personal interaction.

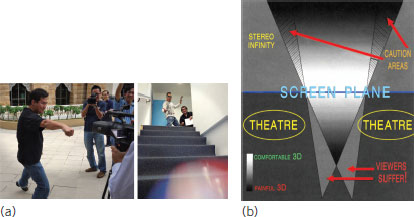

FIGURE 7.7

The 2D shooter places a frame around the world and instructs the viewer to “Look at this! This frame and everything in it are important.” The 3D shooter establishes a window through which the audience may experience multiple scenes simultaneously in front of, at, or behind the screen plane.

FIGURE 7.8

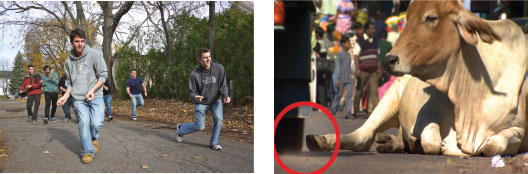

Shooting 3D requires a new way of seeing and capturing the world. It’s easy to assume we know more than we do.

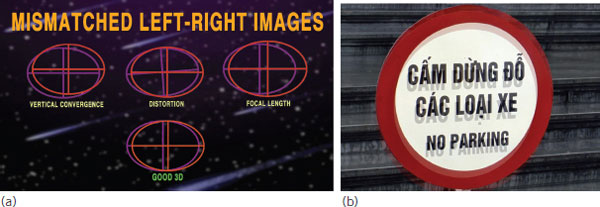

There’s nothing real about it. Audiences create the stereo image in their brains by fusing the two images presented on screen. Most go along with the ruse, but here’s the rub: The front of the brain understands it’s only a movie and there’s nothing to fear. But the back of the brain, the primitive part responsible for our survival and physical well-being, isn’t so sure. The primitive brain reacts with fear to fellow humans who appear with their heads or body parts chopped off, who are out of focus, or who are wrapped in red; these conditions, hardly notable in 2D, provoke an involuntary, automatic response in 3D. The two parts of the brain in conflict can and do induce headaches, nausea, and even epileptic fits. No 2D filmmaker beyond Kevin Smith has the potential to wreak such wrenching pain on viewers.

Because 3D toys with the animal portion of the brain, technical snafus can have a serious impact on the 3D story. Left and right images that are vertically misaligned or rotated off axis are especially difficult for the primitive brain to reconcile. The one-piece camcorders from Panasonic use a bevy of built-in servomotors to control these errors, a major advantage over the complex mirror rigs commonly used on high-end productions.

FIGURE 7.9

The stereo shooter must understand the physiological implications of the medium. About 12% of audience members are unable or unwilling to form the 3D image.

FIGURE 7.10

The injection of red into a 3D scene provokes instant fear and elevates the blood pressure of an audience. As a shooter, you cannot alter this response because on a primitive, unconscious level, the threat is real. The animal brain associates red with fire, blood, and gore, and impels us to run like hell.

Understanding the physical layout of the world protects us from the perils of daily life. Whether we’re crossing a busy street or ducking a 100-mph fastball, it’s fortunate for us that this portion of our brains operates on autopilot; the threats to our survival require an instantaneous response unslowed by a conscious thought process.

FIGURE 7.11

Facing the menace of marauding college students or a speeding rickshaw, we constantly assess the depth cues in the world around us for imminent approaching danger.

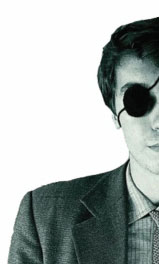

FIGURE 7.12

FIGURE 7.13

Folks with one eye can still have good depth perception and operate a motor vehicle, despite some loss of peripheral vision and ability to navigate in tight spaces.

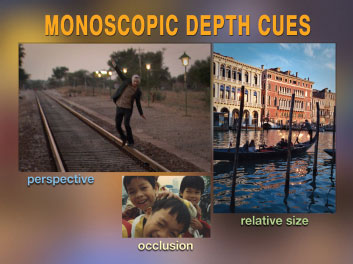

Monoscopic cues like linear and aerial perspective contribute significantly to our perception of a third dimension. The direction and character of shadows and the relative size of objects are also strong communicators of depth. We know, for example, that the actor in Figure 7.15 is much smaller than the train in the distance. If the actor appears larger in frame, it must be because the train is very far away. Such sizing cues communicate a strong sense of depth.

In Figure 7.16, one actor partially blocks the other. We know, from the way the world works, that the more distant actor is likely still in one piece; the unseen part of his body hasn’t disappeared but is occluded by the closer actor. Occlusion cues are monoscopic and so do not require the muscular effort of fusing two separate images, an effort that over time may contribute to viewer fatigue.

FIGURE 7.14

FIGURE 7.15

The relative size of the train and actor effectively communicates a third dimension.

FIGURE 7.16

An object partially blocking another object conveys depth. No physical muscular effort required.

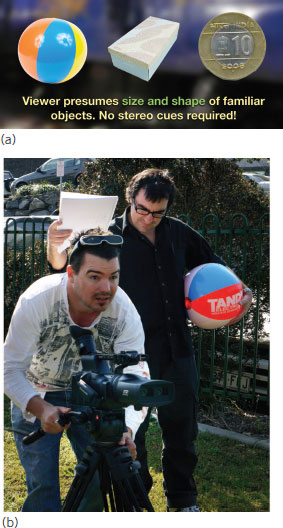

Increasing the number of monoscopic depth cues can help produce a more comfortable viewing experience. We can place objects of a familiar size and shape around the set, or move the camera laterally along a slider rail. A tracking camera produces an abundance of motion depth cues, which help an audience acclimate to the strange and unnatural stereo environment.

FIGURE 7.17

The viewer assumes that the stones at the top and bottom of the frame are the same size. If the stones don’t appear that way in the scene, it must be because they are receding into the distance.

FIGURE 7.18

The perception of depth is influenced by our knowledge of the world. We know the beach ball is round, so we don’t need a stereo view to understand its shape. We know the configuration of the shoebox and the coin without seeing around the objects. As part of my Brisbane workshop (b) in 2010, my students considered ways to integrate a familiar monoscopic depth cue, the beach ball, into a scene.

FIGURE 7.19

We see the interplay of highlights and shadows and understand this object is real. Only 3D objects exhibit such texture.

FIGURE 7.20

Motion cues are powerful communicators of depth. The aircraft flying at hundreds of kilometers per hour appears stationary in the sky. How can this be? Your audience understands how fast planes really fly. It can only mean that the aircraft is very far away.
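The aircraft example can be put in numbers. The Python sketch below uses the small-angle relation ω ≈ v/d for an object crossing perpendicular to the line of sight; the 900 km/h speed and the two viewing distances are illustrative assumptions, not figures from the text.

```python
import math

def angular_speed_deg_per_s(speed_kmh: float, distance_m: float) -> float:
    """Approximate angular speed of an object crossing perpendicular to the
    line of sight, using the small-angle relation omega = v / d."""
    speed_m_per_s = speed_kmh / 3.6               # convert km/h to m/s
    omega_rad_per_s = speed_m_per_s / distance_m  # radians per second
    return math.degrees(omega_rad_per_s)

# Illustrative values: an airliner at 900 km/h seen 10 km away,
# versus the same speed only 50 m away.
far = angular_speed_deg_per_s(900, 10_000)
near = angular_speed_deg_per_s(900, 50)
print(f"10 km away: {far:.2f} deg/s")   # 10 km away: 1.43 deg/s
print(f"50 m away:  {near:.1f} deg/s")  # 50 m away:  286.5 deg/s
```

At a distance of kilometers, even a fast jet crawls across the sky at barely a degree or so per second, and the viewer’s brain reads that slowness as great distance.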

Whereas monoscopic cues are sufficient to protect us from threats beyond 15 meters (50 ft), our ability to process stereoscopic depth cues is essential to shield us from more immediate dangers. In other words, there is a limit to the depth information that a single eye can provide. Beyond about 15 meters, stereoscopic cues become negligible because our eyes are not separated sufficiently to see around objects at such distances.

FIGURE 7.21

A moving camera multiplies the number of easy-to-read depth cues. A slider rail or a Steadicam works well in 3D!

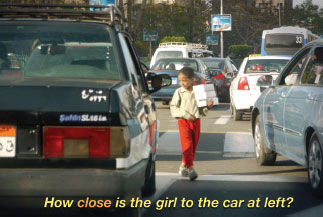

FIGURE 7.22

This girl selling tissues in the streets of Cairo is very close to the car at left. But how close? The stereo view sees slightly around the car and reveals her true peril.

Stereoscopic cues are powerful because they provide input that cannot be gleaned from a single eye’s perspective alone. The successful 3D shooter understands how to moderate the use of stereo revelations by using monoscopic cues wherever possible to communicate depth.

Keep in mind that shooting 3D well is mostly a matter of applying the brake, of holding back the depth in a scene before giving it a little gas and rarely a lot of gas. With 3D, you have the power to entertain and to create the most amazing stories, or you can make people retch. Whatever you want. It’s your choice. What kind of 3D shooter are you?

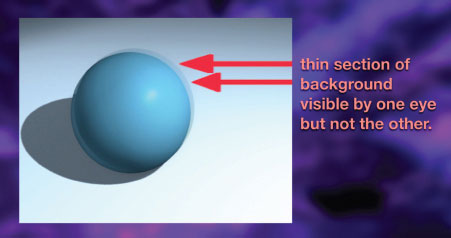

FIGURE 7.23

FIGURE 7.24

The detail revealed at the sides of objects cannot be re-created in postproduction. This is why 3D shooters have to get it right: The amount of depth in a scene is fixed at the time of image capture.

FIGURE 7.25

Shooting 3D is mostly brake—with a little gas. Continuously hotfooting it will leave your audience writhing in pain.

Let’s keep it straight: Interocular distance refers to the separation of the eyes; interaxial distance refers to the separation of the camera lenses. A 3D camera’s interaxial distance has a significant effect on the perceived shape and size of objects. A narrow interaxial allows objects to approach the camera more comfortably and enables closer perspectives, but the reduced stereo effect may require shorter focal length lenses to restore the proper roundness. Conversely, a wide interaxial enhances the 3D at greater distances, but a telephoto lens is required to flatten the field slightly and mitigate the unnatural stereo revelations.

Cameras fitted with a 60 mm interaxial are said to be orthostereoscopic because they closely approximate the human perspective at 3 to 15 meters (10–50 ft). For shooters accustomed to working at a more typical 1.5 to 2.5 meters (5–8 ft), a narrower interaxial of 25 to 45 mm is usually preferred in combination with a shorter focal length lens.

FIGURE 7.26

Interocular? Interaxial? Although some shooters use the terms interchangeably, your stature as a 3D shooter and craftsman will be enhanced by using the appropriate term.

FIGURE 7.27

Which interaxial makes sense for you? 58 mm? 42 mm? 28 mm? The implications for the 3D story can be profound.

FIGURE 7.28

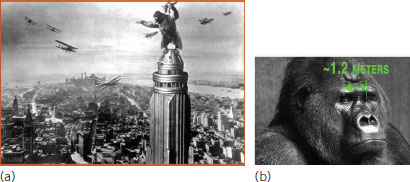

Human beings cannot perceive much stereo beyond 15 meters (50 ft), so expansive scenes such as cityscapes can be captured just as well with a 2D camera for 3D presentations.

FIGURE 7.29

Whose point of view are we in? An audience peering through King Kong’s eyes perceives the city like a toy model because Kong’s wide interocular distance allows him to see around buildings that humans normally cannot.1 If a human is able to see around a building 2 miles away, the brain assumes the object must be a miniature.

FIGURE 7.30

3D producers often demand that every shot exhibit depth, so sports, such as football/soccer played over a large field, fare poorly in stereo as distant players look like little toy dolls! Honestly, take a look around. Gaze into the heavens. Some things in 3D, as in life, ought not to show depth.

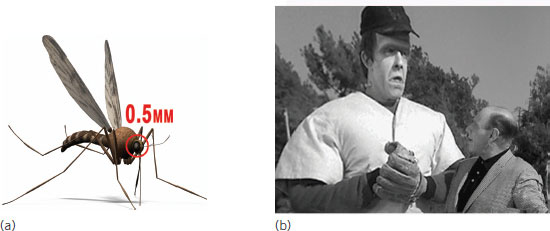

FIGURE 7.31

An audience seeing through a mosquito’s eyes cannot see around objects it would normally expect to see around, so objects appear huge! The fella to the right of Herman Munster (Fred Gwynne) is the great Brooklyn Dodgers manager Leo Durocher. Mr. Durocher’s eyes are not sufficiently separated to see around Mr. Munster, so Mr. Munster looks massive, which he is!
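A common rule of thumb, an approximation that assumes the audience views the result at roughly natural scale, treats the apparent size of a stereo scene as the ratio of human interocular distance to camera interaxial. The Python sketch below applies that rule; the 6.5 mm and 650 mm interaxials are hypothetical stand-ins for the mosquito’s and King Kong’s points of view.

```python
HUMAN_INTEROCULAR_MM = 65.0

def apparent_scale(interaxial_mm: float,
                   interocular_mm: float = HUMAN_INTEROCULAR_MM) -> float:
    """Rule-of-thumb apparent scale of a stereo scene.
    > 1: objects look gigantic (narrow interaxial, hypostereo)
    < 1: objects look miniaturized (wide interaxial, hyperstereo)"""
    return interocular_mm / interaxial_mm

def describe(scale: float) -> str:
    if scale > 1:
        return "gigantic"
    if scale < 1:
        return "miniature"
    return "life size"

for label, interaxial in [("mosquito", 6.5), ("human", 65.0), ("King Kong", 650.0)]:
    s = apparent_scale(interaxial)
    print(f"{label:>9}: {interaxial:6.1f} mm interaxial -> x{s:g} ({describe(s)})")
```

The ratio is only a heuristic; screen size and viewing distance also shift the perceived scale.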

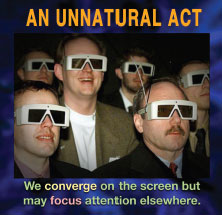

James Cameron, the 3D filmmaker and King of the World, puts it simply: You converge on the money. If Tom Cruise is in your scene, you converge on Tom Cruise. If you’re shooting a Mercedes commercial, you converge on the Mercedes. In 3D, the viewer’s eyes go to the screen plane first, the plane of convergence, so it makes sense that the bulk of your storytelling should be focused there.

So audiences converge their eyes on the screen and then hunt around for other objects in front of or behind the screen. This seeking and fusing of images across multiple planes occurs across every cut in a 3D movie, presenting a potentially significant disruption to the flow of the finished stereo program.

For this reason, shooters try to avoid large shifts in the position of objects relative to the screen. One way to achieve this is to feather the amount of depth in and out within a scene during original photography. The strategy can ease the bump across 3D transitions and obviate the need to perform substantial horizontal image translation (HIT)2 in postproduction.

Most 3D plug-ins have a HIT capability to adjust the overlap of the left and right images. Note that repositioning the screen plane via HIT does not alter the actual depth, because the occlusion revelations in a scene are baked in at capture and can’t be changed.
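Why HIT moves the screen plane without changing the depth can be seen in a toy model. The Python sketch below assumes each object’s parallax is a single pixel value (positive behind the screen, negative in front, zero at the screen plane); HIT adds the same offset to every object, so the spread between nearest and farthest, the depth baked in at capture, is unchanged. Object names and values are hypothetical.

```python
def apply_hit(parallax_px: dict[str, float], shift_px: float) -> dict[str, float]:
    """Horizontal image translation: every object's parallax moves by the same amount."""
    return {name: p + shift_px for name, p in parallax_px.items()}

def depth_budget(parallax_px: dict[str, float]) -> float:
    """Difference between the farthest and nearest parallax in the shot."""
    return max(parallax_px.values()) - min(parallax_px.values())

# Positive = behind the screen, negative = in front (audience space).
shot = {"actor": 12.0, "table": -8.0, "mountains": 30.0}

# Converge on the money: shift so the actor lands on the screen plane.
reconverged = apply_hit(shot, -shot["actor"])

print(reconverged)                                    # {'actor': 0.0, 'table': -20.0, 'mountains': 18.0}
print(depth_budget(shot), depth_budget(reconverged))  # 38.0 38.0
```

Note that the table moves further into audience space: HIT trades positive parallax for negative, but it neither adds nor removes depth.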

Also, in cameras that originate in 1920 × 1080, there is no ability to perform HIT without blowing up the frame slightly to accommodate the shift in pixels. This is why 2K-resolution (or greater) cameras are preferred for 3D production: the 2048-pixel horizontal resolution provides 128 spare pixels, roughly 6.7% of the output width, for horizontal translation without loss of resolution when outputting to 1920 × 1080, the preferred display format for most 3D movies and programs.
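The headroom a wider frame provides is simple arithmetic. This Python sketch assumes the 1920-pixel output is an unscaled crop of the wider frame and that, without spare pixels, shifting one eye by s pixels leaves 1920 − s usable columns that must be enlarged to refill the frame; the function names are illustrative, not taken from any plug-in.

```python
def hit_headroom(source_width_px: int, output_width_px: int = 1920) -> tuple[int, float]:
    """Spare columns available for horizontal image translation, in pixels
    and as a percentage of the output width, when cropping without scaling."""
    spare = source_width_px - output_width_px
    return spare, 100 * spare / output_width_px

def blowup_for_shift(shift_px: float, width_px: int = 1920) -> float:
    """Scale factor needed to refill the frame after shifting one eye by shift_px
    when the source has no spare columns (e.g. a camera originating in 1920 x 1080)."""
    return width_px / (width_px - shift_px)

spare, pct = hit_headroom(2048)
print(f"2K source: {spare} px of headroom ({pct:.1f}% of 1920)")  # 2K source: 128 px of headroom (6.7% of 1920)
print(f"1080 source, 40 px shift: enlarge by {blowup_for_shift(40):.3f}x")
```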

FIGURE 7.32

NLE platforms usually require a plug-in for stereo editing. Left–right separation, vertical gap, rotation, and magnification can be adjusted in the plug-in settings.

FIGURE 7.33

Viewing 3D, the eyes converge first on the screen then explore objects lurking in other planes. Objects in front of the screen in the audience (negative) space may have humorous implications, which might not be desirable in a drama.

FIGURE 7.34

Just as in life, converging on objects too close to the eye or camera can induce pain and fatigue.

FIGURE 7.35

3D takes advantage of a loophole in our physiology that allows us to separate focus from convergence. For some folks, this takes practice.

FIGURE 7.36

The 3D shooter’s first order of business: Where is the screen plane? Where do I converge?

FIGURE 7.37

The audience’s eyes go first to the screen plane across every cut.

THE THREE TENETS OF STEREOGRAPHY

1. Focus is not convergence.

2. Focus is not convergence.

3. Focus is not convergence.

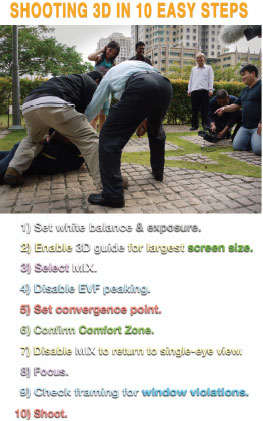

The essential functions are familiar: white balance, exposure, shutter, and focus. With respect to scanning mode, it is best to avoid interlaced formats, which may complicate color correction and postprocessing; because the two fields of each interlaced frame are captured at different instants, the temporal separation can also impede the viewer’s merging of the left and right images. Although shooting in progressive mode at 24, 25, 30, or 60 FPS is preferable, it may not always be possible for broadcast applications.

With the release of The Hobbit: An Unexpected Journey (2012)3 at 48 FPS, increased attention is now being paid to shooting 3D at frame rates higher than the normal 24 FPS. Although resolution is important, it is not nearly as critical as frame rate to realizing a compelling viewer experience. The impact of 3D HFR (high frame rate) is felt notably in the smoothness of motion and the ease and willingness of audiences to merge the left and right images. The film has raised significant issues, however, with respect to genre and viewer perceptions. I discuss some of these at the conclusion of this chapter.

3D requires matching photography in each eye. This is automatically done for us in one-piece 3D camcorders—but not always. Vertical misalignment can still be problematic especially with longer focal length lenses. Most 3D camcorders have a manual adjustment to correct the error.

FIGURE 7.38

Integrated 3D camcorders feature an intricate series of servomotors that lock the left and right imaging systems together. The matching is a delicate balancing act especially given the complexity of a zoom lens!

FIGURE 7.39

When white balancing a 3D camera, be sure the white reference covers both lenses! Interestingly, when superimposing a pair of images this far out of whack, the brain selects the properly balanced left eye and disregards the right!

FIGURE 7.40

The vertical gap apparent in (b) is among the most objectionable of stereo defects. Vertical misalignment can be manually corrected in some cameras.

On one hand, it is vital to see as much 3D as we can to develop a proficiency at spotting defects like vertical registration errors or excessive parallax. On the other hand, we know that shooting 3D is a lot like using drugs. Over time, we need more to feel the same effect, as we become acclimated to the 3D gimmick and are able to fuse increasingly higher levels of stereo. Keep in mind that our viewers are not nearly as addicted or as proficient at viewing 3D.

When operating a 3D camera, it is important to see our work in stereo from time to time to test our assumptions and confirm all is well, but we should not be making critical imaging decisions based on a small monitor’s stereo image. A monitor’s view can be misleading and should not be referenced by the shooter or client as an accurate representation of the final work. Seeing a compelling image on a small monitor only means the stereo image looks good on that size monitor. It has no bearing on the viability of the stereo image on larger (or smaller) screens.

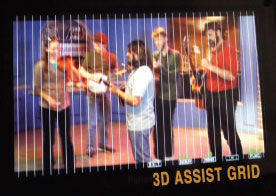

The camera finder or monitor should be set to mixed mode to ascertain the position of the screen plane, where the overlapping left and right eyes appear as a single image. It is important to disable peaking and EVF 4 to see more clearly the outline of the overlapping left and right channels. Switch to the base view (left eye only) to frame, focus, and follow action. Don’t forget to focus. That’s important.
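What the eye does in mixed mode, finding the offset at which left and right line up, can be sketched numerically. The Python example below works on a single synthetic row of brightness values, blends the two eyes 50/50 roughly as a mix display would, and estimates parallax by brute-force search for the shift with the smallest mean absolute difference. It is a simplified illustration, not how any particular camera or monitor measures parallax.

```python
def mixed_view(left_row: list[float], right_row: list[float]) -> list[float]:
    """50/50 blend of the two eyes; features at the screen plane coincide."""
    return [(l + r) / 2 for l, r in zip(left_row, right_row)]

def estimate_parallax(left_row: list[float], right_row: list[float],
                      max_shift: int = 10) -> int:
    """Shift (px) that best aligns right_row with left_row.
    Positive: the right-eye image sits to the right of the left-eye image,
    i.e. the feature appears behind the screen plane."""
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(left_row[i], right_row[i + s])
                 for i in range(len(left_row)) if 0 <= i + s < len(right_row)]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift

# Synthetic scanline: a bright bar at columns 8-10 in the left eye, 11-13 in the right.
left = [0.0] * 20
right = [0.0] * 20
for x in range(8, 11):
    left[x] = 255.0
    right[x + 3] = 255.0

print(estimate_parallax(left, right))  # 3 (behind the screen)
print(mixed_view(left, right)[8:14])   # double image: six half-bright columns
```

In the mixed view, a feature at the screen plane shows as one crisp outline; the doubled bar here is what positive parallax looks like on the finder.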

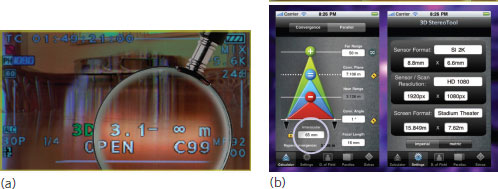

FIGURE 7.41

Shooters must learn to assess proper parallax from the mixed image—not the 3D view.

FIGURE 7.42

The 3D Assist in some monitors provides a grid to check for excessive parallax.

FIGURE 7.43

Do not rely on a 3D monitor for shooting. It only reflects how the stereo will look on that size screen!

FIGURE 7.44

Proper parallax is assessed while monitoring the mixed left and right images.

The human adult’s eyes are approximately 65 mm apart. This means that, to avoid the pain and discomfort of splaying the eyes outward, the separation of the left and right images, regardless of screen size, cannot exceed 65 mm (2.5 in.). On a tablet or mobile device, 65 mm represents a relatively large percentage of the screen width, which means small-screen viewers are able to enjoy greater depth than large-screen viewers in a cinema, where 65 mm represents less than 1% of the projected image width.
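The screen-size dependence follows from dividing the fixed 65 mm by the screen width. The Python sketch below does that and converts the result to pixels on a 1920-pixel-wide image; the three screen widths are illustrative assumptions.

```python
EYE_SEPARATION_M = 0.065  # approximate adult interocular distance (65 mm)

def max_background_parallax(screen_width_m: float,
                            image_width_px: int = 1920) -> tuple[float, float]:
    """Largest positive (behind-screen) parallax that keeps the eyes from diverging,
    as a percentage of image width and in pixels."""
    fraction = EYE_SEPARATION_M / screen_width_m
    return 100 * fraction, fraction * image_width_px

# Illustrative screen widths in metres.
for name, width in [("tablet", 0.22), ("77-inch TV", 1.70), ("cinema", 20.0)]:
    pct, px = max_background_parallax(width)
    print(f"{name:>10}: {pct:5.2f}% of width, about {px:.0f} px")
```

On a 20-meter cinema screen the divergence ceiling is only about 6 pixels of a 1920-pixel image; the tablet figure is merely a ceiling, and other comfort limits apply well before it.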

For this reason, 3D shooters must know and take into account the largest anticipated screen size, even at the risk of losing depth on smaller displays. In order to preserve a consistent look across multiple platforms, James Cameron was said to have prepared 34 different versions of Avatar (2009)5 for different screen sizes, distribution formats, and display devices.

If in doubt about the ultimate display venue, it’s always better to err in favor of the larger screen. The conservative approach may also help preserve one’s broadcast options. SKY TV currently restricts 3D programming to 2% separation in positive space and only 1% in negative space. That’s fewer than 20 pixels of parallax in front of the screen!
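Percentage limits like these translate directly into pixels. The sketch below assumes a 1920-pixel-wide frame, the 2% positive and 1% negative figures cited for SKY TV, and positive parallax meaning behind the screen; the helper names are hypothetical.

```python
def budget_px(percent: float, image_width_px: int = 1920) -> float:
    """Convert a parallax limit expressed as % of image width into pixels."""
    return image_width_px * percent / 100

def within_budget(parallax_px: float, positive_pct: float = 2.0,
                  negative_pct: float = 1.0, image_width_px: int = 1920) -> bool:
    """True if a measured parallax respects separate limits for positive
    (behind-screen) and negative (in-front-of-screen) space."""
    if parallax_px >= 0:
        return parallax_px <= budget_px(positive_pct, image_width_px)
    return -parallax_px <= budget_px(negative_pct, image_width_px)

print(budget_px(2.0), budget_px(1.0))  # 38.4 19.2
print(within_budget(-25))              # False: too far into audience space
```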

FIGURE 7.45

Most audiences have limited proficiency when viewing 3D. A conservative approach to setting parallax can help smooth the public’s transition into this alien universe.

FIGURE 7.46

Be considerate. Regardless of screen size, 65 mm is the maximum amount of left–right separation that we can comfortably handle.

We have various tools to help determine the proper separation of the left and right images. The integrated 3D camera features a convergence control that sets the relative position of the screen plane and amount of parallax. In Panasonic cameras, the convergence control is linked to a 3D Guide, a numerical readout that indicates the distance range of objects that lie safely within the audience’s comfort zone. The comfort zone is calculated mathematically and varies according to screen size, interaxial distance, lens focal distance, and focal length. At longer focal lengths, the comfort zone may be reduced to a few centimeters!
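A comfort range of this kind can be sketched with a textbook approximation. The Python example below assumes a parallel-axis camera whose convergence is set by horizontal shift, so an object at distance Z has parallax, as a fraction of image width, of (f / sensor width) · b · (1/C − 1/Z). It is not Panasonic’s published algorithm, and the camera values (60 mm interaxial, 4.8 mm lens on a 4.8 mm-wide sensor, convergence at 3 m) are hypothetical.

```python
import math

def comfort_zone(interaxial_mm: float, focal_mm: float, sensor_width_mm: float,
                 convergence_m: float, max_pos_frac: float,
                 max_neg_frac: float) -> tuple[float, float]:
    """Near and far distances (m) whose parallax stays within the given limits,
    expressed as fractions of image width.

    Assumed model: parallel optical axes, convergence by horizontal shift, so
        p(Z) = (focal / sensor_width) * b * (1/C - 1/Z)
    with b (interaxial) and C (convergence distance) in metres."""
    k = (focal_mm / sensor_width_mm) * (interaxial_mm / 1000)  # metres
    inv_c = 1 / convergence_m
    near = 1 / (inv_c + max_neg_frac / k)   # limit in front of the screen
    far_inv = inv_c - max_pos_frac / k      # limit behind the screen
    far = math.inf if far_inv <= 0 else 1 / far_inv
    return near, far

# Positive limit from the 65 mm divergence rule; negative limit 1% of width.
for screen_w in (4.43, 1.70):  # roughly 200-inch and 77-inch 16:9 widths
    near, far = comfort_zone(60, 4.8, 4.8, 3.0,
                             max_pos_frac=0.065 / screen_w, max_neg_frac=0.01)
    print(f"{screen_w} m screen: {near:.1f} m to {far:.1f} m")

# Zooming in tenfold narrows the range dramatically.
print(comfort_zone(60, 48, 4.8, 3.0, 0.065 / 4.43, 0.01))
```

In this model the smaller screen allows objects out to infinity, while the tenfold zoom squeezes the usable range to roughly 2.86 to 3.24 m, consistent with the point that long focal lengths shrink the comfort zone.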

FIGURE 7.47

Like focus, the convergence may also need to be adjusted mid-scene to ensure actors do not venture to the wrong side of the screen!

FIGURE 7.48

(a) A 3D shooter must be cognizant at all times of the viewer’s comfort. Unfused objects (UFOs), such as fists and beach balls that exit the comfort zone and race toward the viewer, are time sensitive and should not linger in front of the screen. (b) A comparable level of discomfort may also be caused by hyperdiverged objects behind the screen.

FIGURE 7.49

The 3D Guide (a) in Panasonic cameras features settings for 77- and 200-inch screens. This display indicates a comfort range for objects situated from 3.1 meters to infinity. Smartphone apps like this one (b) support a wide range of formats, sensor sizes, interaxials, and display venues.
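Screen sizes such as 77 and 200 inches are diagonals, whereas parallax limits depend on width. This Python sketch assumes a 16:9 screen and shows how the 65 mm divergence ceiling shrinks, as a share of the picture, as the screen grows.

```python
import math

INCH_M = 0.0254
EYE_SEPARATION_M = 0.065

def width_from_diagonal_m(diagonal_in: float, aspect_w: float = 16,
                          aspect_h: float = 9) -> float:
    """Screen width in metres from a diagonal in inches and an aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h) * INCH_M

for diag in (77, 200):
    w = width_from_diagonal_m(diag)
    share = 100 * EYE_SEPARATION_M / w
    print(f"{diag}-inch 16:9 screen: {w:.2f} m wide; 65 mm = {share:.1f}% of width")
```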

FIGURE 7.50

FIGURE 7.51

Allow actors to breathe! Placing the convergence point too close to an actor may lead him to fall out of the screen!

A window or edge violation occurs when a person or another object appears in front of the stereo window and is cut off by the window’s edges. It’s illogical that the screen window located behind an object is somehow able to obscure it. A window violation that truncates a human body is especially disturbing, because the primitive brain doesn’t react well to detached heads and torsos hanging willy-nilly in space.
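The geometric condition for a window violation, in front of the screen and touching a frame edge, can be written as a simple check. The Python sketch below assumes hypothetical per-object bounding boxes and parallax values (for example, from some depth-analysis tool) on a 1920 × 1080 frame, with negative parallax meaning audience space. It flags candidates only; how objectionable each violation is still depends on screen size.

```python
from dataclasses import dataclass

@dataclass
class StereoObject:
    name: str
    x_min: int          # bounding box in pixels (hypothetical detector output)
    x_max: int
    y_min: int
    y_max: int
    parallax_px: float  # negative = in front of the screen (audience space)

def window_violations(objects: list[StereoObject], width: int = 1920,
                      height: int = 1080) -> list[str]:
    """Names of objects that sit in front of the screen plane yet are cut off
    by a frame edge, i.e. candidate window (edge) violations."""
    flagged = []
    for obj in objects:
        touches_edge = (obj.x_min <= 0 or obj.y_min <= 0 or
                        obj.x_max >= width - 1 or obj.y_max >= height - 1)
        if obj.parallax_px < 0 and touches_edge:
            flagged.append(obj.name)
    return flagged

shot = [
    StereoObject("actor", 0, 600, 100, 1079, parallax_px=-15),         # in front, cut by edges
    StereoObject("doorway", 1500, 1919, 0, 900, parallax_px=10),       # behind screen: fine
    StereoObject("beach ball", 800, 1000, 400, 600, parallax_px=-20),  # in front, fully inside
]
print(window_violations(shot))  # ['actor']
```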

The seriousness of window violations is highly dependent on screen size. Audiences in front of a 20-meter cinema screen are more forgiving because they are less aware of the window edges. With the move to mobile devices and smaller screens, the impact and disturbing nature of window violations are much greater.

FIGURE 7.52

Not all window violations require remediation. An audience in a large cinema, not seeing the edges of the 3D volume, can be very forgiving.

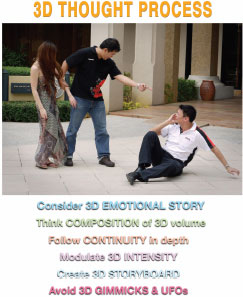

If 3D is to flourish and become more than a gimmick, it is critical that the third dimension contribute to the emotional stakes of the story. Similar to how color may be used to track the emotional journey of a character, so, too, ought a character’s three-dimensionality or roundness reflect in some way his filmic journey.

When planning a 3D production, the depth in each scene should be thoroughly explored from the points of view of the characters. The storyboard should consider the relative placement of objects and players in positive and negative space, and continuity should be carefully monitored on multiple planes, with the understanding that the viewer’s attention may not be limited exclusively to the screen plane.

Careful blocking of the actors allows the shooter to modulate the 3D intensity during the course of a production, restraining the amount of stereo when it is not needed, for example, in a dialogue scene, and letting go with greater abandon when it is needed, in a chase or action scene. This level of control elevates the impact of the work and creates a more comfortable and satisfying audience experience.

FIGURE 7.53

The 3D storyboard details the usable depth in each scene and helps ensure a consistent position of the screen plane.

FIGURE 7.54

When blocking 3D scenes, we integrate as many monoscopic depth cues as we can to facilitate more comfortable stereo viewing.

FIGURE 7.55

Forcing viewers to fuse excessively diverging images behind the screen is exhausting and will lead to cries of anguish.

The 3D shooter, like shooters in general, understands the critical importance of treating actors well. When our actors, bride and groom, president of the republic, or CEO look warped or distorted, we as shooters suffer. We lose clients, we lose opportunities, and we lose income.

Preserving proper roundness is the 3D shooter’s primary responsibility. In 2D, a long telephoto lens flattens the facial features of actors. The extreme wide-angle lens conversely produces excessive roundness in close-ups, conveying the not-so-flattering look of an ogre.

FIGURE 7.56

The head and face of your 3D subjects must exhibit proper shape and volume. Grotesque distortion of a leading lady’s visage is not a smart career move!

Sometimes the monster look is intentional, and the story demands such a treatment. In 3D, the distortion of the head and face is especially disturbing. To primeval men and women, the sight of an alien with a medicine ball for a skull spells danger. It means “Run! Get away!” The unfamiliar being from another world could be a serious predator.

3D camcorders with humanlike interaxials do a good job at preserving the proper roundness in the face. Utilizing a telephoto lens with these cameras reduces the roundness in the human form and produces the effect of a die-cut Colorforms 6 figure.

It happens all the time. I see shooters in my workshops crouching low for a ground-level shot or forcing the perspective of a handrail or staircase. I see them zooming in for a choker close-up,7 shooting over the shoulder, or going handheld.

These strategies do not work because 3D engages a portion of the brain that is primitive, fearful, and literal. The shakycam of 1980s’ MTV is problematic in the stereo world—and this is why: Look around wherever you are, in the college library, airport loo, or the aisle of a local bookstore where you’re not going to buy this book anyway. What do you see?

Well, one thing, unless you’re drunk, high on drugs, or live in Southern California, is that the walls aren’t moving. To the primitive brain, a rocking and rolling room is powerful motivation to seek more secure shelter immediately.

FIGURE 7.57

Same deal with focus. In 2D scenes, soft focus can be an effective tool. It says to an audience, “Don’t look at this. It’s not important to the story.” In 3D, the opposite applies. Wherever we are in the world, most everyone and everything we look at is in focus. If someone or something is not in focus, we perceive a potential threat and scrutinize the object more closely to decipher what the heck it is. The 3D audience, fearing for its safety, can’t keep its eyes off out-of-focus objects!

The same can be said of areas in a scene that lack clear depth cues. Significant over- or underexposed areas are difficult for the primitive brain to process and let go of. Likewise, expansive surfaces such as a wall or a table that lack texture can be difficult to reconcile, as well as reflections in mirrors and glass and repeating patterns in waves and puddles.

FIGURE 7.58

Reverting to old 2D habits? Consider having someone spank you.

FIGURE 7.59a

The approaching walkway at ground level is coming out of the comfort zone. In 3D, the forcing of perspective in this way will induce severe pain.

FIGURE 7.59b

The repeating patterns in puddles make it difficult to assess the location of individual elements. Without clear depth cues, the viewer places these areas at the screen plane, which may not be logical.

FIGURE 7.59c

Out-of-focus 3D is usually not a good option. For this reason, small-sensor 3D cameras are preferred owing to their inherently greater depth of field. James Cameron’s Avatar was photographed with cameras fitted with 2/3-inch sensors!

FIGURE 7.59d

Large areas of over- or underexposure lack clear depth cues and should be ameliorated.

FIGURE 7.59e

Handheld 3D can be effective for action scenes and barroom brawls because the individual shots are brief and the viewer is not inclined to try to fuse the left and right images.

FIGURE 7.59f

The careless cropping of the head and other body parts in front of the screen screams, “Danger! Run for the exits!”

FIGURE 7.60

In 3D, the flat cutout of Mr. Edison looks like a flat cutout. Objects that are supposed to be 3D must actually be 3D!

Audiences do not like wearing glasses to view a 3D movie. Aside from the inconvenience and the bad fashion statement, the notion of wearing dark glasses in a dark theatre strikes many folks as counterintuitive. Indeed, second to the discomfort of the glasses themselves, audiences regularly cite the lack of screen brightness as the other serious impediment to enjoying 3D movies in a cinema.

3D glasses provide the left and right eye separation required to view stereo. Active glasses utilize an electronic shutter that synchronizes to a transmitter in the projector or monitor. For home use, active glasses are usually preferred, as they offer better off-axis viewing, a consideration for folks watching 3D while folding the laundry or preparing a spaghetti and meatball dinner.

Active glasses offer brighter viewing than the passive type because viewers receive the full 1080p image in each eye. The active system also does not require a special screen, so images can be projected on any white wall in a gallery, classroom, or apartment.

FIGURE 7.61

Akin to polarized sunglasses, passive 3D eyewear relies on polarizing filters with left- and right-eye orientations that reduce brightness and resolution by 50% in each eye. Passive glasses without electronics are much cheaper to manufacture and require no battery or charging, and so are favored in commercial cinemas and public venues. A silver screen or monitor that preserves polarization for each eye is required to view 3D with passive glasses.

When no better system is available, the old anaglyph system provides an easy, low-cost way to view stereo content. Anaglyph 3D encodes the left and right images using complementary colors, usually red and cyan. When played back on a 2D laptop or TV screen, anaglyph 3D provides a simple way to check assumptions regarding parallax, position of the screen plane, and smoothness of transitions. Many editors operating without a stereo monitor go this route to occasionally check their work.
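The basic red/cyan encoding is easy to express. This Python sketch builds a simple color anaglyph from two same-sized images given as nested lists of RGB tuples: the red channel comes from the left eye, green and blue from the right. It assumes glasses with the red filter over the left eye; other anaglyph methods (grayscale, optimized color matrices) trade color fidelity against ghosting differently.

```python
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]

def anaglyph_pixel(left: Pixel, right: Pixel) -> Pixel:
    """Red from the left eye; green and blue (cyan) from the right eye."""
    return (left[0], right[1], right[2])

def anaglyph(left_img: Image, right_img: Image) -> Image:
    """Combine two same-sized RGB images into a red/cyan anaglyph."""
    return [[anaglyph_pixel(l, r) for l, r in zip(left_row, right_row)]
            for left_row, right_row in zip(left_img, right_img)]

left = [[(200, 10, 20), (0, 0, 0)]]
right = [[(30, 40, 50), (255, 255, 255)]]
print(anaglyph(left, right))  # [[(200, 40, 50), (0, 255, 255)]]
```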

Eventually, glasses-free viewing will become the norm as large cinema screens give way to mobile devices as the primary means for displaying 3D content. Handheld displays grow brighter each year and can be fitted with a lenticular⁸ lens to achieve the required left–right separation, effectively placing the 3D glasses on the device rather than the viewer. Low-cost autostereo (glasses-free) TVs with a wide viewing angle are still at least several years away.
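The interlacing described in note 8 can be pictured with a simplified two-view model. The sketch below assumes a hypothetical panel whose lenses steer even pixel columns to the left eye and odd columns to the right eye of a viewer seated in the sweet spot. Real autostereo displays frequently use more views, slanted lenses, and subpixel-level mapping, so treat this as an illustration of the principle rather than any manufacturer's method.

```python
from typing import List, TypeVar

T = TypeVar("T")

def column_interleave(left: List[List[T]], right: List[List[T]]) -> List[List[T]]:
    """Build a two-view, column-interleaved frame for a hypothetical lenticular panel.

    Even pixel columns carry the left-eye view and odd columns the right-eye view,
    so each eye sees only half of the panel's horizontal resolution.
    """
    if len(left) != len(right):
        raise ValueError("views must have the same number of rows")
    out = []
    for lrow, rrow in zip(left, right):
        if len(lrow) != len(rrow):
            raise ValueError("views must have the same width")
        out.append([lrow[x] if x % 2 == 0 else rrow[x] for x in range(len(lrow))])
    return out

# Usage: labels make it easy to see which eye owns each column
left_view = [["L0", "L1", "L2", "L3"]]
right_view = [["R0", "R1", "R2", "R3"]]
print(column_interleave(left_view, right_view))  # [['L0', 'R1', 'L2', 'R3']]
```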

FIGURE 7.62

Incompatible eyewear among manufacturers has contributed to the public’s disillusionment with all things 3D. These newly minted shooters in Brisbane don’t seem to mind their flashy new accoutrements.

DON’T EVEN THINK ABOUT ENJOYING A 3D MOVIE!

If you’re a shooter learning the ropes and are out seeing a 3D movie with a date, don’t just enjoy the movie or the date! It’s time to get educated! Raise and lower your glasses frequently to check the parallax on-screen. When scenes work, note the separation, and when scenes don’t work, note that too. Especially note that.

3D VIEWING ON A BIG SCREEN WITHOUT GLASSES?

This Magic Eye® stereogram conceals a three-dimensional scene behind a repeating pattern of roses. Are you able to decouple convergence from focus to reveal the hidden image? A few of my students can do it from across the room, without glasses!

FIGURE 7.63

In a 2012 poll, four out of five Americans expressed a negative or mostly negative view of 3D movies and TV.⁹ By mid-2012, just 2% of the 330 million TVs in the United States could show 3D programs. Avatar was supposed to spur 3D’s grand entrée into homes around the world. It didn’t work out that way. Indeed, any way you look at it, passively or actively, the stereo picture doesn’t look good … or does it?

FIGURE 7.64

This camera’s humanlike interaxial makes sense for small-screen displays that support greater parallax.

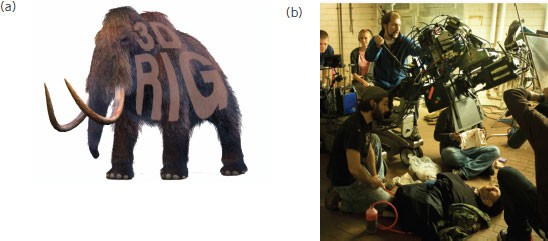

FIGURE 7.65

It’s essential to learn all we can now about the $250,000 3D behemoth rigs because in a few years they will be gone.

FIGURE 7.66

Less is more. It’s true in lighting. It’s true in life. And it’s true in 3D cameras.

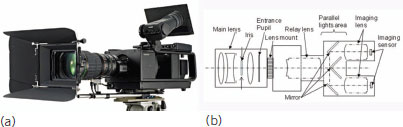

FIGURE 7.67

3D cameras with dual lenses employ complex technology to ensure a common optical axis, image size, and focus. This single-lens Sony prototype uses mirrors in place of shutters to create the left and right images. The simpler approach ensures better registration and precision at frame rates up to 240 FPS.

The digital editing of stereo programs is accomplished using familiar tools. Final Cut Pro, Adobe Premiere, and Avid allow for simple editing of the base view (left eye), with a plug-in automatically conforming the right eye for length, color correction, geometry, and so on.

Editors new to 3D must learn that the pacing of stereo programs is slower than in 2D to accommodate the moment of disorientation across every cut, as audiences must each time locate the screen and any objects lurking behind or in front of it. The confusion across 3D transitions may be mitigated by adjusting the position of the screen plane while shooting or in post via a plug-in such as Dashwood Stereo3D. Its HIT function may also help address serious window violations by pushing the offending objects back into or behind the screen. As mentioned previously, in cameras that shoot 1920 × 1080 there is no room to adjust the left–right eye overlap without enlarging one or both images and suffering some resolution loss.
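Why HIT costs resolution is easy to see with a bit of arithmetic. In this sketch the function names are hypothetical and the sign convention is an assumption: positive parallax places a point behind the screen, negative in front. Pulling the two eyes apart by a total number of pixels adds that amount to every point’s parallax, leaves fewer columns common to both eyes, and forces an enlargement to refill the 1920-pixel frame, which scales every parallax value along with it.

```python
def hit_scale_factor(frame_width_px: int, total_shift_px: int) -> float:
    """Enlargement needed to refill the frame after horizontal image translation.

    After shifting the eyes by a combined total_shift_px, only
    frame_width_px - total_shift_px columns are common to both images.
    """
    if not 0 <= total_shift_px < frame_width_px:
        raise ValueError("shift must be non-negative and smaller than the frame width")
    return frame_width_px / (frame_width_px - total_shift_px)

def parallax_after_hit(parallax_px: float, frame_width_px: int, total_shift_px: int) -> float:
    """Parallax of a point after HIT plus enlargement (assumed sign convention:
    positive = behind the screen, negative = in front of it)."""
    return (parallax_px + total_shift_px) * hit_scale_factor(frame_width_px, total_shift_px)

# Usage: push a window-violating object (30 px in front of the screen) back by 40 px
print(round(hit_scale_factor(1920, 40), 4))          # 1.0213
print(1920 - 40)                                     # 1880 real columns stretched to 1920
print(round(parallax_after_hit(-30, 1920, 40), 3))   # 10.213, now behind the screen
```

The 1880 columns of genuine detail stretched across a 1920-pixel frame are the resolution loss the text warns about.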

FIGURE 7.68

Use caution when editing to a stereo monitor. Besides developing a tolerance for overly aggressive 3D images, the pacing, parallax, and depth utilization indicated by the small screen may not be applicable to larger venues.
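The small-screen trap comes down to simple proportion: the same pixel parallax grows with the physical width of the screen. The sketch below assumes a typical adult eye separation of about 65 mm and hypothetical screen widths of 0.5 m for a desktop monitor and 12 m for a cinema screen; the function names are illustrative. Positive parallax larger than the eye separation forces the viewer’s eyes to diverge, which they are not built to do comfortably.

```python
INTEROCULAR_MM = 65.0  # assumed typical adult eye separation

def physical_parallax_mm(parallax_px: float, image_width_px: int, screen_width_mm: float) -> float:
    """Convert on-image parallax in pixels to physical separation on a given screen."""
    return parallax_px / image_width_px * screen_width_mm

def forces_divergence(parallax_px: float, image_width_px: int, screen_width_mm: float) -> bool:
    """True if positive (behind-screen) parallax exceeds the assumed eye separation."""
    return physical_parallax_mm(parallax_px, image_width_px, screen_width_mm) > INTEROCULAR_MM

def max_parallax_px(image_width_px: int, screen_width_mm: float) -> float:
    """Largest positive pixel parallax that stays within the eye separation."""
    return INTEROCULAR_MM / screen_width_mm * image_width_px

# Usage: 20 px of parallax in a 1920-wide image on two assumed screen sizes
for venue, width_mm in [("desktop monitor", 500.0), ("cinema screen", 12000.0)]:
    mm = physical_parallax_mm(20, 1920, width_mm)
    print(f"{venue}: {mm:.1f} mm, diverges={forces_divergence(20, 1920, width_mm)}")
print(f"cinema budget: {max_parallax_px(1920, 12000.0):.1f} px")
```

At these assumed sizes, 20 pixels of parallax that looks mild on the editing monitor (about 5 mm) becomes 125 mm on the big screen, nearly twice the eye separation; the cinema budget in this example is only about 10 pixels.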

FIGURE 7.69

Digital editing is simple and straightforward—a major reason why 3D is going to stick around this time.

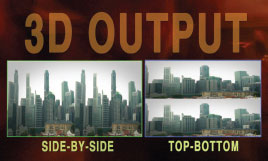

FIGURE 7.70a

Whereas SbS sacrifices 50% of the horizontal resolution in each eye, top-and-bottom (T&B) foregoes 50% of the vertical resolution, which may be preferable for viewing sports with lots of lateral motion. Three-dimensional commercial discs utilize a frame packing strategy that stacks the left and right images in order to retain full resolution in each eye. A special encoder not widely available is required to prepare full resolution 3D discs.

FIGURE 7.70b

The SbS format is usually preferred for distributing one-off discs for screeners, demo reels, weddings, and small corporate projects.

FIGURE 7.70c

Adobe Encore allows for limited BD authoring as well as encoding and burning of SbS and T&B discs on a Mac or PC. Burning a Blu-ray volume to recordable DVD media is possible, but not all BD players will recognize the disc unless encoded to an H.264 long-GOP “consumer” format.

Despite the 50% loss of horizontal resolution, side-by-side (SbS) is the most common format for delivering 3D content for broadcast, Web, and Blu-ray. Note that 3D Blu-ray (3DBD) is a different format that delivers full 1080p resolution to each eye. 3DBD requires its own player and a special encoder that is very expensive and not widely available. SbS and top-and-bottom (T&B) consume no more bandwidth than 2D HD, making SbS attractive to broadcasters and others working within narrow bandwidth constraints.
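The bandwidth argument follows from the packing itself: each view gives up half its columns (SbS) or half its rows (T&B), so the packed frame is exactly the size of one 2D frame. This is a naive sketch with hypothetical function names, assuming even image dimensions and images stored as lists of rows. It simply drops alternate samples, whereas a real encoder would typically filter before decimating to avoid aliasing.

```python
from typing import List, TypeVar

T = TypeVar("T")

def _check_pair(left: List[List[T]], right: List[List[T]]) -> None:
    """Reject mismatched or odd-sized views (this sketch assumes even dimensions)."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right views must have identical dimensions")
    if len(left) % 2 or any(len(row) % 2 for row in left):
        raise ValueError("this sketch assumes even width and height")

def pack_side_by_side(left: List[List[T]], right: List[List[T]]) -> List[List[T]]:
    """Keep every other column of each view and place left and right halves side by side."""
    _check_pair(left, right)
    return [lrow[::2] + rrow[::2] for lrow, rrow in zip(left, right)]

def pack_top_and_bottom(left: List[List[T]], right: List[List[T]]) -> List[List[T]]:
    """Keep every other row of each view and stack left above right."""
    _check_pair(left, right)
    return left[::2] + right[::2]

# Usage: two 2 x 4 views; lowercase = left eye, uppercase = right eye
left = [["a", "b", "c", "d"], ["e", "f", "g", "h"]]
right = [["A", "B", "C", "D"], ["E", "F", "G", "H"]]
print(pack_side_by_side(left, right))    # [['a', 'c', 'A', 'C'], ['e', 'g', 'E', 'G']]
print(pack_top_and_bottom(left, right))  # [['a', 'b', 'c', 'd'], ['A', 'B', 'C', 'D']]
```

Either way the packed frame is still 2 × 4, the same size as one view, which is why SbS and T&B ride on an ordinary HD channel.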

This time we’re not talking about theatrical 3D. That ship has sailed, and by almost all accounts, it’s teetering and taking on water. If current trends continue and the major studios adhere to current plans, 3D feature films will account for no more than 10% of industry releases by 2015. Compare that to more than half of all studio product in 2011, which means any way you slice it, converge it, or horizontally translate it, 3D for the big screen is becoming a nonissue.

Notwithstanding the loss of public appetite for 3D movies and TV, the next 3D go-around will be much more virulent and far-reaching. The impending wave will have an impact on a broad swath of nontheatrical users, from corporate, industrial, and educational concerns to weddings and events, tourism, and short-form entertainment vehicles such as music videos and eventually episodic television. The catalyst for this transformation is the imminent introduction of 3D tablets and smartphones, that is, a 3D iPad, with potentially millions of portable stereo viewers entering the market virtually overnight.

Understand that I have no direct knowledge of Apple intending to release a 3D iPad, iPhone, or anything else. If I did, Apple would hunt me down, tie a pair of leaden Lisa computers to my feet, and toss me in the Sacramento River. I would die there and nobody would care. Still, the stereo writing is on the wall. This time, 3D is for real and you can take that to the bank. Just be sure to leave your 3D glasses at home. You won’t be needing them.

FIGURE 7.71

A flood of new 3D mobile devices will create robust demand for nontheatrical applications, from corporate and industrial training to remote learning and entertainment. This is the future, baby!

Is it possible to shoot 2D and convert to convincing stereo later? It depends. Although good 2D-to-3D conversion is expensive and generally limited to big-budget productions, it is possible to achieve acceptable real-time results in some contexts, such as American football, owing to the relative ease of deciphering the spatial relationships among the players, the field, and the frame edges. Unsurprisingly, close-ups are the most difficult scenes to convert on the fly, owing to the lack of the occlusion revelations required for producing compelling stereo.
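The sketch below is not the method of any particular converter, such as the one in Figure 7.72. It illustrates one widely used family of techniques, depth-image-based rendering (DIBR), which synthesizes the missing eye by shifting each pixel horizontally according to an estimated depth. This minimal single-row version assumes a disparity in pixels has already been estimated for every pixel (larger meaning nearer to camera) and uses a hypothetical function name. Its empty slots are the occlusion revelations that a single 2D camera never recorded.

```python
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")

def synthesize_row(row: Sequence[T], disparity: Sequence[int]) -> List[Optional[T]]:
    """Build one row of a second view by shifting pixels left by their disparity.

    Pixels are painted from farthest (smallest disparity) to nearest, so nearer
    pixels overwrite farther ones where they collide. Positions no source pixel
    lands on stay None: these are holes a real converter must fill by guessing.
    """
    if len(row) != len(disparity):
        raise ValueError("row and disparity must have the same length")
    out: List[Optional[T]] = [None] * len(row)
    for x in sorted(range(len(row)), key=lambda i: disparity[i]):
        target = x - disparity[x]
        if 0 <= target < len(row):
            out[target] = row[x]
    return out

# Usage: a foreground object ("fg") two pixels nearer than the background ("bg")
row = ["bg", "bg", "fg", "fg", "bg", "bg"]
disparity = [0, 0, 2, 2, 0, 0]
print(synthesize_row(row, disparity))  # ['fg', 'fg', None, None, 'bg', 'bg']
```

The two `None` slots are background the original camera never saw; the larger the foreground object and its disparity, as in a close-up, the more picture the converter must invent.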

FIGURE 7.72

This real-time $40,000 converter exploits the monoscopic depth cues within a scene to produce stereo-like images.

The Hobbit: An Unexpected Journey challenged the validity of many heretofore presumed stereo notions. Its high frame rate of 48 FPS at first appears unnatural and stilted, like watching a rehearsal tape for a high school play. But after 10 minutes or so, audiences become acclimated to the new hyperreal look and can then appreciate the 3D story and the clear, smooth images.

The movie challenged the long-term conditioning of viewers for whom a frame rate greater than 24 FPS communicates the look and feel of television, not cinema. As The Hobbit demonstrated, there is no magic formula for shooting 3D. The out-of-focus backgrounds so vilified by 3D trainers such as myself were really not so bothersome on the big screen. Ditto for the moderate-to-fast cutting tempo, which audiences didn’t seem to mind either.

So let’s put it this way. 3D defects fall into two main categories: the aesthetic, such as soft focus and fast cutting, whose acceptance is evolving as the animal brain in us becomes more acclimated; and the technical, such as grossly mismatched left and right images, vertical disparities, or inadequate brightness in a projector or monitor. These latter defects are clearly problematic for audiences and really do inflict pain, especially when several of the most serious defects are combined!

FIGURE 7.73

The Hobbit: An Unexpected Journey challenged many aspects of the 3D craft from frame rate and soft focus to use of texture and pace of cutting. The wisdom of such techniques will continue to be debated as audiences are exposed increasingly to 3D content.

SHOW ME A WORLD I HAVEN’T SEEN BEFORE

The 3D perspective supports our greater mission to represent the world in a unique and captivating way. With tens of millions of mobile devices about to enter the market, the opportunities for 3D shooters will grow exponentially in the years ahead.

FIGURE 7.74

Be bold. Embrace 3D’s alien universe with all the gusto within you.

FIGURE 7.75

Seek a new 3D horizon.

FIGURE 7.76

EDUCATOR’S CORNER: REVIEW TOPICS

1. Please explain the statement “We’ve always shot 3D.”

2. Describe three (3) differences between a videographer and a stereographer. How does each type of shooter approach his or her craft differently?

3. List five (5) depth cues used commonly by 2D shooters. Do the same monoscopic cues work more or less effectively in a 3D environment?

4. Identify three (3) factors that influence the placement of the screen plane.

5. Why is the ability to unlink convergence from focus so critical to 3D viewing and storytelling?

6. 3D is a technical trick that impacts the primitive brain. Identify three (3) conditions on screen that could trigger an automatic fearful response in an audience.

7. What might be the storytelling rationale for placing objects in negative space, that is, in front of the screen?

8. “3D is antithetical to effective storytelling. It constantly reminds us we are watching a screen and completely prevents emotional involvement.” Do you agree? Please discuss.

9. Consider the roundness of objects and characters in a 3D story. From a camera perspective, list three (3) factors that impact the size and shape of an actor’s head and face in a scene.

10. Is it ever appropriate to blame the viewer for a bad 3D experience?

1 I have no idea what King Kong’s interocular distance might be. The 1.2 meters I’ve suggested is speculative.

2 Horizontal Image Translation is a process of shifting the left- and right-eye images to improve the apparent position of the screen for more comfortable viewing. HIT can also alleviate excessive parallax in original camera footage.

3 Blackwood, C. (Producer), Boyens, P. (Producer), Cunningham, C. (Producer), Dravitzki, M. (Producer), Emmerich, T. (Producer), Horn, A. (Producer), … Jackson, P. (Producer & Director). (2012). The Hobbit: An Unexpected Journey [Motion picture]. USA: New Line Cinema.

4 The electronic viewfinder (EVF) peaking in most cameras may be disabled via an external switch or control.

5 Cameron, J. (Producer & Director), Breton, B. (Producer), Kalogridis, L. (Producer), Landau, J. (Producer), McLaglen, J. (Producer), Tashjian, J. (Producer), … Wilson, C. (Producer). (2009). Avatar [Motion picture]. USA: 20th Century Fox Film Corporation.

6 Colorforms, invented in 1951 by Harry and Patricia Kislevitz, were part of a children’s play set consisting of thin patches of vinyl that could be applied to a plastic laminated board to create imaginative scenarios.

7 A choker close-up is just what it sounds like—a tight framing that cuts through an actor’s forehead and chin.

8 A lenticular screen projects interlaced left and right images through tiny lenses embedded in an overlying film. Lenticular displays require precise viewer placement to ensure good 3D effect. Future lenticular TVs may track the viewer’s position in front of the screen and adjust the dual images accordingly.

9 Who’s Watching? 3-D TV Is No Hit With Viewers; USA Today. (2012, September 29). Durham, NH: Leichtman Research Group.