Foundations of Audio for Image

Overview

The concepts presented in this chapter are intended to develop conceptual common ground and a working vocabulary that facilitates communication between the filmmaker, sound designer, and composer. Where possible, these concepts are presented in the context of the narrative film.

Perception of Sound

Sound

There are three basic requirements for sound to exist in the physical world. First, there must be a sound source, such as a gunshot, which generates acoustic energy. The acoustic energy must then be transmitted through a medium such as air. Finally, a receiver, such as a listener’s ears, must perceive and interpret this acoustic energy as sound. In animated film, the animator creates the first two conditions, the sound designer represents these conditions with sound, and the audience processes the sound to derive meaning. Sound can also be experienced as a part of our thoughts, in a psychological process known as audiation. As you silently read this book, the words sound in your head. Just as animators visualize their creations, composers and sound designers conceptualize elements of the soundtrack through the process of audiation. First-person voice-over, which lets us hear the interior thoughts of a character, is an example of scripted audiation.

Audio without image is called radio, video without audio is called surveillance.

When acoustic energy arrives at our ears, it excites the hearing apparatus and causes a physiological sensation, interpreted by the brain as sound. This physiological process is called hearing. However, if we are to derive meaning from sound, we must first perceive and respond to the sound through active listening. Audiences can actively listen to only a limited number of the sounds present in the soundtrack. Fortunately, they can also filter out extraneous sounds while focusing on selected sounds; this phenomenon is known as the cocktail party effect. One shared goal of sound design and mixing is to focus the audience’s attention on specific sounds critical to the narrative.

To listen is an effort, and just to hear is no merit. A duck hears also.

Localization

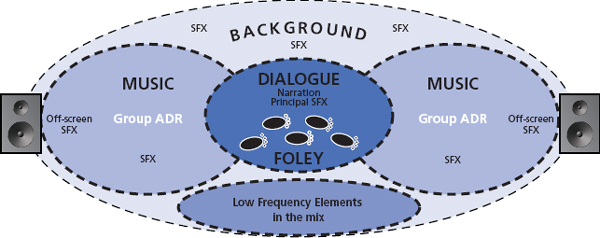

In most theaters (and an increasing number of homes), sound extends beyond the screen to include the sides and back of the room. The physical space implied by this speaker configuration is referred to as the sound field. Our ability to perceive specific sound placements within this field is known as localization (Figure 1.1).

Figure 1.1 The Soundtrack in the Sound Field

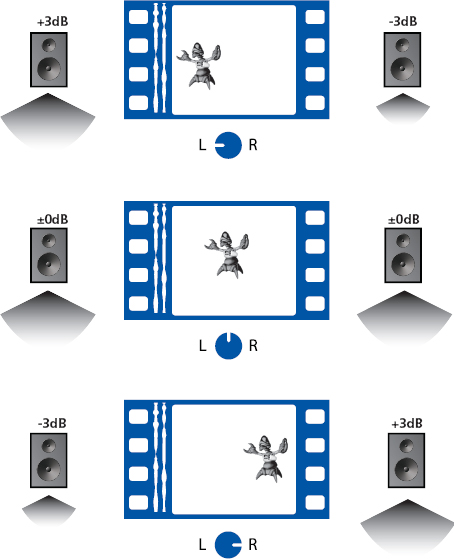

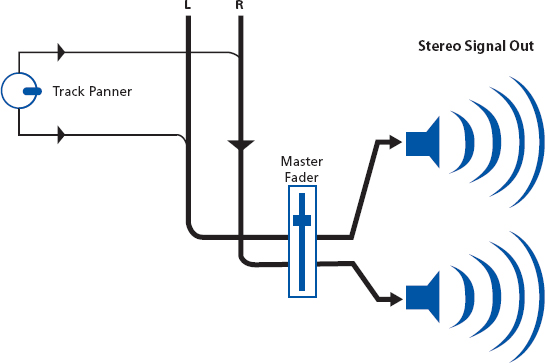

The panners (pan pots) on a mixing board facilitate the movement of sound from left to right by adjusting the relative levels presented in each speaker. Using this approach, individual sounds can be placed (panned) within the sound field to accurately match on-screen visuals even as they move. Off-screen action can be implied by panning sound to the far left or right in the stereo field (Figure 1.2).
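The way a pan pot trades level between the two speakers can be sketched in a few lines. This is a minimal sketch that assumes a sine/cosine "constant-power" pan law, which is one common choice. Real consoles and DAWs may use different laws. The function names are hypothetical. At center, each side sits at about 0.707 (roughly -3 dB), so the sound does not seem to swell as it passes through the middle of the field.

```python
import math


def constant_power_pan(position):
    """Return (left_gain, right_gain) for a pan position.

    position: -1.0 = hard left, 0.0 = center, +1.0 = hard right.
    Uses a sine/cosine (constant-power) pan law; real consoles and DAWs
    may use other laws.
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be between -1.0 and 1.0")
    # Map the pan position onto a quarter circle: 0 rad (left) to pi/2 rad (right).
    angle = (position + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


def pan_mono(samples, position):
    """Place a mono sound in the stereo field; returns (left, right) sample lists."""
    left_gain, right_gain = constant_power_pan(position)
    return [s * left_gain for s in samples], [s * right_gain for s in samples]


# Sweep a sound from hard left to hard right. The gains trade off, but the
# summed power (left**2 + right**2) stays at 1.0, so loudness stays roughly even.
for pos in (-1.0, -0.5, 0.0, 0.5, 1.0):
    l, r = constant_power_pan(pos)
    print(f"pos={pos:+.1f}  L={l:.3f}  R={r:.3f}  power={l * l + r * r:.3f}")
```

Automating `position` over time is what lets a panned sound follow an object across the screen.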

Independent film submission requirements typically call for a stereo mix, but multi-channel mixes are increasingly accepted. Multi-channel sound extends the sound field behind the audience through speakers known as surrounds. The surrounds have been used primarily to deliver ambient sounds, but this role is expanding with the popularity of stereoscopic (3D) films. Walt Disney pioneered multi-channel mixing with the Fantasound presentations of “Fantasia” in 1940, adding height perspective for the sixtieth anniversary screening.

Acoustics is a term associated with the characteristics of sound interacting in a given space. Film mixers apply reverb and delay to the soundtrack to establish and reinforce the space implied onscreen. The controls or parameters of these processors can be used to establish the size and physical properties of a space as well as relative distances between objects. One of the most basic presets on a reverb plug-in is the reverb type or room (Figure 1.3).

Figure 1.3 Reverb Room Presets

Dry and wet are informal terms denoting direct (unprocessed) and reflected (processed) sound. Re-recording mixers frequently adjust the reverb settings to support transitions from environment to environment.
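A wet/dry control blends the direct sound with a processed copy. This is a minimal sketch that substitutes a single feedback delay, a repeating echo, for a real reverb. Actual reverb plug-ins build far denser patterns of reflections. The point here is only the mix parameter. The function names are hypothetical.

```python
def feedback_delay(dry, delay_samples, feedback):
    """Return the 'wet' signal: decaying echoes of the input.

    A single feedback delay line is a crude stand-in for a reverb; it only
    illustrates the idea of reflected sound arriving after the direct sound.
    """
    wet = [0.0] * len(dry)
    for n in range(delay_samples, len(dry)):
        # Each output is the delayed input plus a scaled copy of the earlier
        # echo (the feedback path), so repeats get quieter each time.
        wet[n] = dry[n - delay_samples] + feedback * wet[n - delay_samples]
    return wet


def wet_dry_mix(dry, wet, mix):
    """Blend direct and reflected sound: mix=0.0 is all dry, 1.0 is all wet."""
    if not 0.0 <= mix <= 1.0:
        raise ValueError("mix must be between 0.0 and 1.0")
    return [(1.0 - mix) * d + mix * w for d, w in zip(dry, wet)]


impulse = [1.0] + [0.0] * 9             # a single click followed by silence
wet = feedback_delay(impulse, delay_samples=3, feedback=0.5)
print(wet)                              # echoes at samples 3, 6, and 9, each half as loud
print(wet_dry_mix(impulse, wet, 0.25))  # mostly dry, with a little of the echo
```

Raising `mix` as a character walks from a closet into a cathedral is a simple version of the transition work described above.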

Rhythm and Tempo

Rhythm is the identifiable pattern of sound and silence. The speed of these patterns is referred to as the tempo. Tempo can remain constant to provide continuity, or accelerate/decelerate to match the visual timings of on-screen images. Footsteps, clocks, and heartbeats are all examples of sound objects that typically have recognizable rhythm and tempo. The rhythm and tempo of vehicles, weapons, and dialogue, by contrast, tend to vary. Many sounds such as footsteps or individual lines of dialogue derive additional meaning from the rhythm and tempo of their delivery. This is an important point to consider when editing sound to picture. Composers often seek to identify the rhythm or pacing of a scene when developing the rhythmic character of their cues.
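Matching tempo to picture often comes down to converting between beats per minute (BPM) and frames. This is a minimal sketch that assumes 24 frames per second. One beat lasts 60 / BPM seconds, or 60 × fps / BPM frames. The helper names are hypothetical.

```python
FPS = 24  # assumed frame rate (24 frames per second)


def frames_per_beat(bpm, fps=FPS):
    """Length of one beat in frames: (seconds per beat) * (frames per second)."""
    return 60.0 / bpm * fps


def bpm_for_frames(frames, fps=FPS):
    """Tempo that lands one beat every `frames` frames (e.g. an animated walk cycle)."""
    return 60.0 * fps / frames


def beat_frames(bpm, beats, fps=FPS):
    """Frame numbers, rounded to the nearest frame, where each beat falls."""
    step = frames_per_beat(bpm, fps)
    return [round(i * step) for i in range(beats)]


print(frames_per_beat(120))   # 12.0 frames per beat
print(bpm_for_frames(8))      # a footstep every 8 frames -> 180.0 BPM
print(beat_frames(100, 5))    # at 100 BPM, beats fall between whole frames
```

Tempos that divide evenly into the frame rate, such as 120 BPM at 24 fps, keep every beat on a whole frame. Other tempos force some hits to be rounded to the nearest frame.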

Noise and Silence

The aesthetic definition of noise includes any unwanted sound found in the soundtrack. Noise always exists to some degree, and sound editors and mixers have many tools and techniques to minimize it. Backgrounds are sometimes mistakenly referred to as noise. However, backgrounds are carefully constructed to add depth to a scene, whereas noise is carefully managed so as not to detract from the narrative. Silence is perhaps the least understood component of sound design. Silence can be an effective means of creating tension, release, or contrast. However, complete silence is unnatural and can pull the audience out of the narrative. Silence before an explosion creates contrast, effectively making the explosion perceptually louder.

It’s the space between the notes that give them meaning.

The Physics of Sound

Sound Waves

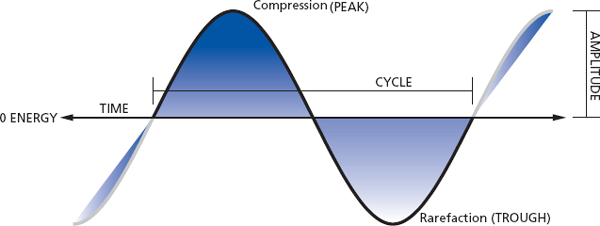

The sine wave is the most basic component of sound. The horizontal line shown in Figure 1.4 represents the null or zero point, the point at which no energy exists. The space above the line represents high pressure (compression) that pushes inward on our hearing mechanisms. The higher the wave ascends, the greater the sound pressure and the more volume we perceive. The highest point in the excursion above the line is the peak. The space below the line represents low pressure (rarefaction). As the wave descends, the air pressure drops below its resting level, pulling outward on our hearing mechanism. The lowest point in the downward excursion is the trough. A single, 360° excursion of a wave (over time) is a cycle.
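The anatomy of a cycle can be checked numerically. This is a minimal sketch, assuming an amplitude normalized to 1.0 and one sample per degree. It samples one 360° cycle and locates the peak and the trough. The function name is hypothetical.

```python
import math


def sine_cycle(points=360, amplitude=1.0):
    """Sample one full 360-degree cycle of a sine wave (one point per degree by default).

    Positive values stand for compression (pressure above the zero line),
    negative values for rarefaction (pressure below it).
    """
    return [amplitude * math.sin(2 * math.pi * i / points) for i in range(points)]


cycle = sine_cycle()
peak_degree = max(range(len(cycle)), key=lambda d: cycle[d])    # highest point
trough_degree = min(range(len(cycle)), key=lambda d: cycle[d])  # lowest point
print(f"value at 0 degrees (zero line): {cycle[0]:.1f}")
print(f"peak at {peak_degree} degrees, trough at {trough_degree} degrees")
```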

Frequency

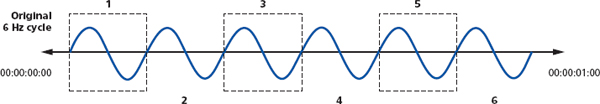

Frequency, determined by counting the number of cycles per second, is expressed in units called hertz (Hz); one cycle per second is equivalent to 1 hertz (Figure 1.5).

Figure 1.5 Six Cycles Per Second
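Counting cycles per second can be automated by counting upward zero crossings, where the wave passes from below the line to above it. This is a minimal sketch that reproduces the six cycles of Figure 1.5. It assumes a sampling rate of 1000 samples per second and starts the wave a quarter cycle early, so that every upward crossing falls inside the one-second window. The function names are hypothetical.

```python
import math


def make_sine(freq_hz, duration_s, sample_rate, phase=0.0):
    """Sample a sine wave of the given frequency for the given duration."""
    n_samples = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate + phase) for n in range(n_samples)]


def count_cycles(samples):
    """Count upward (negative-to-positive) zero crossings; each one starts a new cycle."""
    return sum(1 for prev, cur in zip(samples, samples[1:]) if prev < 0.0 <= cur)


# Start a quarter cycle early (phase of -90 degrees) so the wave begins at its
# trough and each upward crossing lands inside the one-second window.
signal = make_sine(6, 1.0, 1000, phase=-math.pi / 2)
cycles = count_cycles(signal)
print(f"{cycles} cycles in 1 second = {cycles} Hz")
```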

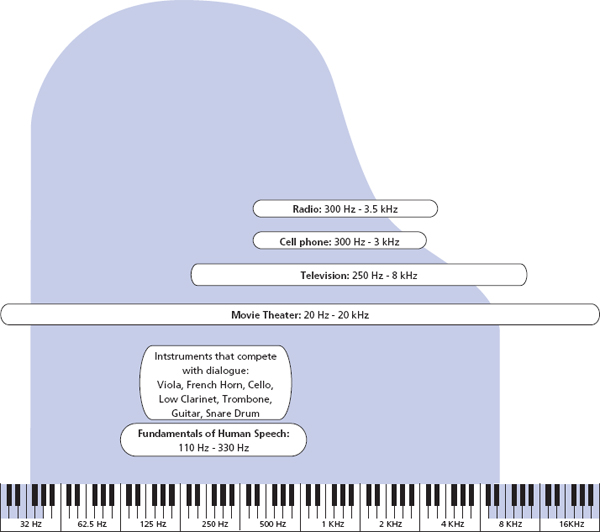

Pitch is our subjective interpretation of frequency. For example, the note an orchestra tunes to, A, has a frequency of 440 Hz (A=440). The frequency range of human hearing extends, on average, from 20 Hz up to about 20,000 Hz (20 kHz). Frequency response refers to the range of frequencies that a device can capture or reproduce. Frequency response is a critical factor in the selection of microphones, recording devices, headphones, speakers, and commercial SFX and music. It is also an important qualitative feature of audio compression codecs such as MP3 and AAC. In musical terms, the frequency range of human hearing spans about 10 octaves, just over seven of which are covered by the fundamentals of a grand piano (Figure 1.6).

Figure 1.6 Frequency in Context
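Because each octave doubles the frequency, the number of octaves between two frequencies is the base-2 logarithm of their ratio. This is a minimal sketch that assumes a standard 88-key piano in equal temperament tuned to A4 = 440 Hz. It counts only fundamentals and ignores harmonics. The helper names are hypothetical.

```python
import math


def octaves_between(low_hz, high_hz):
    """Each octave doubles the frequency, so the count is log2 of the ratio."""
    return math.log2(high_hz / low_hz)


def piano_key_frequency(key, a4=440.0):
    """Equal-tempered frequency of piano key 1-88 (key 49 is A4)."""
    return a4 * 2 ** ((key - 49) / 12)


lowest = piano_key_frequency(1)     # A0
highest = piano_key_frequency(88)   # C8
print(f"Human hearing: {octaves_between(20, 20_000):.2f} octaves")
print(f"Piano: {lowest:.2f} Hz to {highest:.2f} Hz, {octaves_between(lowest, highest):.2f} octaves")
```

The 20 Hz to 20 kHz range works out to just under 10 octaves, and the piano's fundamentals run from 27.5 Hz to about 4186 Hz, roughly 7.25 octaves.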

Frequency can be used to reinforce many narrative principles such as age, size, gender, and speed. SFX are often pitched up or down to create contrast or to work harmoniously with the score. There are physiological relationships between frequency and human perception that can be used to enhance the cinematic experience. For example, low frequencies travel up our bodies through our feet, creating a vertical sensation. Consequently, low-frequency content can be brought up or down in the mix to enhance visuals that move vertically.
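The simplest way to pitch an SFX up or down is to change its playback speed, as with tape. This is a minimal sketch of that "varispeed" approach using linear interpolation. In equal temperament, a shift of n semitones corresponds to a speed ratio of 2^(n/12). This method changes duration along with pitch. Dedicated pitch-shifting plug-ins can change one without the other, but they are not shown here. The function names are hypothetical.

```python
def semitone_ratio(semitones):
    """Playback-speed (and frequency) ratio for a shift of `semitones` (12 per octave)."""
    return 2 ** (semitones / 12)


def varispeed(samples, ratio):
    """Resample by stepping through the sound `ratio` samples at a time.

    Like speeding up or slowing down tape, this changes pitch AND duration
    by the same factor. Values between samples use linear interpolation.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    out = []
    pos = 0.0
    while pos <= len(samples) - 1:
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += ratio
    return out


ramp = [float(x) for x in range(8)]           # a simple stand-in for a recorded sound
up = varispeed(ramp, semitone_ratio(12))      # one octave up (ratio 2.0)
down = varispeed(ramp, semitone_ratio(-12))   # one octave down (ratio 0.5)
print(up)          # [0.0, 2.0, 4.0, 6.0]: half as many samples, so half as long
print(len(down))   # 15: roughly twice as long
print(f"a fifth up: ratio {semitone_ratio(7):.4f}")
```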

Amplitude

When acoustic energy is converted into an electrical or digital representation, the resultant wave is referred to as a signal. Amplitude describes the amount of energy (voltage) present in the signal (Figure 1.7).

The strength of the sound waves emanating from the speakers as shown in Figure 1.6 is measured in a unit called dB SPL (decibel sound pressure level). Volume or perceived loudness is our subjective interpretation of SPL. Audiences perceive volume in terms of relative change more than specific dB levels. The continuum beginning from the softest perceptible sound to the loudest perceptible sound is referred to as the dynamic range. Dialogue, if present, is the most important reference used by audiences to judge the volume levels for playback. Re-recording mixers use amplitude or level to create intensity, provide emphasis, promote scale or size, and establish proximity of sound objects. An audience’s perception of volume is also linked with frequency. Human hearing is most sensitive in the mid-range frequencies. More amplitude is required for low and high frequencies to match the apparent loudness of the mid-range frequencies. This perceptual curve is known as the equal loudness curve. This curve influences how we mix and why we seek to match the playback levels on the mix stage with the playback levels in theaters (reference level).
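Decibels express a ratio on a logarithmic scale, which suits an audience that hears relative change more readily than absolute level. This is a minimal sketch using the standard reference pressure of 20 micropascals for 0 dB SPL. It assumes the input is an RMS pressure in pascals. The function names are hypothetical.

```python
import math

P_REF = 20e-6  # reference pressure in pascals for 0 dB SPL (roughly the threshold of hearing)


def db_spl(pressure_pa):
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    return 20 * math.log10(pressure_pa / P_REF)


def level_change_db(ratio):
    """Change in level (dB) when pressure or signal amplitude is multiplied by `ratio`."""
    return 20 * math.log10(ratio)


print(f"{db_spl(20e-6):.1f} dB SPL")   # 0.0: the reference pressure itself
print(f"{db_spl(1.0):.1f} dB SPL")     # 94.0: one pascal, a common calibrator level
print(f"{level_change_db(2):.2f} dB")  # 6.02: doubling the amplitude
```

Because the scale is relative, a fader move of +6 dB doubles the signal amplitude no matter where the level started.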

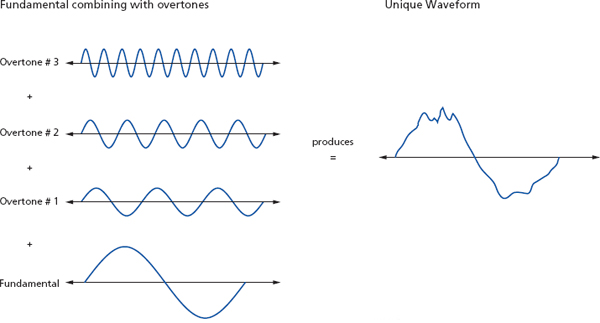

A fundamental frequency and its associated harmonics combine to create a unique aural signature that we refer to as timbre or tone quality. We can identify objects or characters solely by timbre. Composers select instruments to play specific components of a cue based largely on timbre. Timbre plays an important role in voice casting, the selection of props for Foley, and nearly every SFX developed for the soundtrack (Figure 1.8).
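Timbre can be illustrated with additive synthesis, which sums a fundamental and its harmonics (whole-number multiples of the fundamental). This is a minimal sketch in which two tones share the same 200 Hz fundamental and therefore the same pitch. Their different harmonic weights give them different wave shapes, and so different timbres. The weights are made up for illustration and are not measurements of real instruments. The function name is hypothetical.

```python
import math


def additive_tone(fundamental_hz, harmonic_amps, sample_rate=48_000, n_samples=480):
    """Sum a fundamental and its harmonics.

    harmonic_amps[0] is the fundamental's amplitude, harmonic_amps[1] the
    2nd harmonic's (twice the frequency), and so on.
    """
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        out.append(sum(a * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
                       for k, a in enumerate(harmonic_amps)))
    return out


mellow = additive_tone(200, [1.0, 0.1, 0.05])            # mostly fundamental
bright = additive_tone(200, [1.0, 0.7, 0.5, 0.4, 0.3])   # strong upper harmonics

period = 48_000 // 200   # 240 samples per cycle at 200 Hz
# Same pitch: the bright tone repeats exactly every 240 samples ...
print(max(abs(bright[i] - bright[i + period]) for i in range(period)))
# ... different timbre: the two waveforms have clearly different shapes.
print(max(abs(m - b) for m, b in zip(mellow, bright)))
```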

Wavelength

Wavelength is the horizontal measurement of the complete cycle of a wave. Wavelength is inversely proportional to frequency, and low frequencies can have wavelengths of up to 56 feet. The average ear span of an adult is approximately 7 inches. Waves of varied length interact uniquely with this fixed ear span. Low-frequency waves are so large that they wrap around our ears and reduce our ability to determine the direction (localization) of the sound source. The closer the length of a wave is to our ear span (approximately 2 kHz), the easier it is for us to localize. One popular application of this concept is the use of low-frequency sound effects to rouse our “fight or flight” response. When audiences are presented with visual and sonic stimuli determined to be dangerous, the body instinctively prepares to fight or escape. If the audience cannot determine the origin or direction of a sound because it is low in frequency, their sense of fear and vulnerability is heightened.
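The figures in this paragraph follow from one relationship: wavelength equals the speed of sound divided by frequency. This is a minimal sketch using the 1130 feet per second quoted in the next section for air at about 70°F. It shows why 20 Hz waves are about 56 feet long and why a wave near 2 kHz is about the width of a 7-inch ear span. The function name is hypothetical.

```python
SPEED_OF_SOUND_FT_S = 1130.0   # feet per second in air at about 70 degrees F


def wavelength_ft(freq_hz, speed=SPEED_OF_SOUND_FT_S):
    """Wavelength = speed of sound / frequency, so doubling frequency halves wavelength."""
    return speed / freq_hz


for f in (20, 100, 2_000, 20_000):
    ft = wavelength_ft(f)
    print(f"{f:>6} Hz: {ft:7.3f} ft  ({ft * 12:6.2f} in)")
```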

Speed of Sound

Sound travels at roughly 1130 feet per second through air at a temperature of 70°F, and it travels faster through denser, stiffer media (steel versus air). In air, the speed of sound is essentially the same at all frequencies. In reality, light travels significantly faster than sound, so we see an action or object before we hear it. However, the cinematic practice for sound editing is to sync the audio on or slightly after the action; this is referred to as hard sync.
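To see how far hard sync departs from physics, you can convert a source's distance into the number of frames by which its sound would trail the image. This is a minimal sketch that assumes 1130 feet per second and 24 frames per second, and treats light as arriving instantly. The function name is hypothetical.

```python
SPEED_OF_SOUND_FT_S = 1130.0   # air at about 70 degrees F
FPS = 24                       # assumed frame rate


def acoustic_delay_frames(distance_ft, fps=FPS, speed=SPEED_OF_SOUND_FT_S):
    """Frames by which real-world sound would arrive after the light at this distance."""
    return distance_ft / speed * fps


for d in (10, 100, 1130, 5650):
    print(f"{d:>5} ft: sound arrives {acoustic_delay_frames(d):5.1f} frames after the light")
```

A source 1130 feet away would be a full second (24 frames) late. Hard sync sets this physical delay aside in favor of placing the sound on, or just after, the visible action.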

Digital Audio

Digitizing Audio

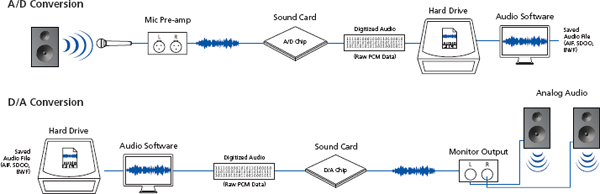

Digital audio has impacted nearly every aspect of soundtrack production. When handled properly, digital audio can be copied and manipulated with minimal degradation to the original sound file. Digital audio is not without its drawbacks, however, and it is important to understand its basic characteristics in order to preserve the quality of the signal. The conversion of acoustic energy to digital audio is most commonly achieved in a process known as LPCM, or Linear Pulse Code Modulation. An analog signal is digitized using specialized computer chips known as analog-to-digital (A/D) converters. A/D converters measure the amplitude of the signal at regular intervals (the sampling rate) and store each measurement as one of a fixed set of discrete values (the bit depth). Together, these settings determine the resolution of the digital audio. D/A converters reverse this process to deliver analog signals for playback. The quality of A/D converters varies from device to device and is often a factor in the cost of higher-end audio equipment (Figure 1.9).
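The A/D and D/A round trip can be sketched in software. This minimal sketch stands in for what converter chips do in hardware. It assumes 16-bit LPCM at 48 kHz and a 1 kHz tone as the "analog" source. The function names are hypothetical. "Linear" means every quantization step is the same size, so the round-trip error never exceeds half a step.

```python
import math

BITS = 16
MAX_CODE = 2 ** (BITS - 1) - 1   # 32767, the largest positive 16-bit value


def adc(analog, sample_rate, duration_s):
    """Sketch of A/D conversion: sample an analog function of time at even
    intervals and round each measurement to an integer code (linear PCM)."""
    codes = []
    for n in range(int(sample_rate * duration_s)):
        x = analog(n / sample_rate)          # measure the amplitude at time n / sample_rate
        x = max(-1.0, min(1.0, x))           # clip anything beyond full scale
        codes.append(round(x * MAX_CODE))    # "linear": every step is the same size
    return codes


def dac(codes):
    """Sketch of D/A conversion: map integer codes back to amplitudes."""
    return [c / MAX_CODE for c in codes]


def tone(t):
    """Stand-in for an analog source: a 1 kHz sine at half of full scale."""
    return 0.5 * math.sin(2 * math.pi * 1000 * t)


codes = adc(tone, 48_000, 0.01)              # 10 ms of audio at 48 kHz
restored = dac(codes)
error = max(abs(r - tone(n / 48_000)) for n, r in enumerate(restored))
print(len(codes), "samples; largest round-trip error:", error)
```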

Sampling Rates

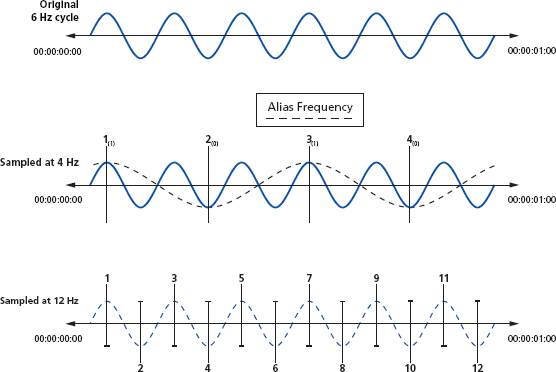

The visual component of animation is represented by a minimum of 24 frames per second. As the frame rate dips below this visual threshold, the image begins to flicker (persistence of vision). Similar thresholds exist for digital audio as well. To capture a frequency digitally, the sampling rate must be more than twice the highest frequency present. Half of a given sampling rate, which is the highest frequency that rate can represent, is referred to as the Nyquist frequency (Figure 1.10).

If the sampling rate falls below twice the highest frequency present, the resultant audio will become filled with frequencies that were not present in the original (aliasing). The minimum sampling rate for film and television is 48 kHz, with high definition video sample rates extending up to 192 kHz. The sampling rates used for web delivery are often lower than the 48 kHz film and television standard. The process of converting from a higher sample rate to a lower sample rate is referred to as downsampling.
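Aliasing can be demonstrated directly. A tone above the Nyquist frequency "folds" back into the audible range, and its samples become indistinguishable from those of a lower tone. This is a minimal sketch that assumes pure tones and a 48 kHz sampling rate. The function name is hypothetical.

```python
import math


def alias_frequency(freq_hz, sample_rate):
    """Frequency at which a pure tone appears after sampling (folding about Nyquist)."""
    nyquist = sample_rate / 2
    f = freq_hz % sample_rate            # sampling cannot tell f apart from f +/- sample_rate
    return sample_rate - f if f > nyquist else f


sample_rate = 48_000
print(alias_frequency(18_000, sample_rate))   # below Nyquist (24 kHz): unchanged
print(alias_frequency(30_000, sample_rate))   # above Nyquist: folds down to 18 kHz

# The sampled values of a 30 kHz tone and an 18 kHz tone are the same.
a = [math.cos(2 * math.pi * 30_000 * n / sample_rate) for n in range(8)]
b = [math.cos(2 * math.pi * 18_000 * n / sample_rate) for n in range(8)]
print(max(abs(x - y) for x, y in zip(a, b)))  # effectively zero
```

This is why A/D converters filter out content above the Nyquist frequency before sampling, and why downsampling must filter before it discards samples.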

Bit-Depths

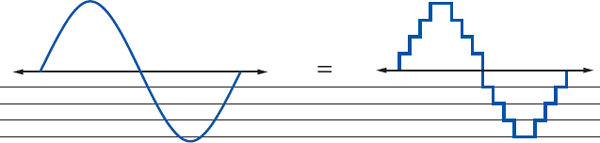

The amplitude of a wave is captured by sampling the energy of a wave at various points over time and assigning each point a value in terms of voltage. Bit-depth refers to the number of increments, or resolution, used to describe amplitude. At a bit-depth of two, each sample can take only one of four (2²) equally spaced values (Figure 1.11).

Figure 1.11 Low Bit-Depth Resolution

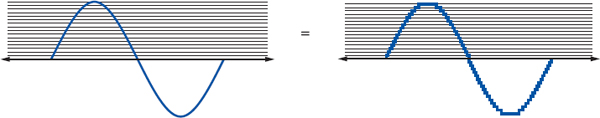

Notice that all portions of the wave between the sampling increments are rounded up or down (quantized) to the nearest value. Quantization produces errors (noise) that are the sonic equivalent of visual pixelation. As the bit-depth is increased, the resolution improves and the resulting signal looks and sounds more like the original (Figure 1.12).

Figure 1.12 High Bit-Depth Resolution
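Quantization can be sketched as rounding each sample to the nearest available integer code. This minimal sketch assumes signed (two's complement) codes, as in LPCM, and a made-up eight-sample waveform. The function name is hypothetical. At 2 bits, only four values survive. At 16 bits, the worst rounding error shrinks to half of one 1/32768 step.

```python
def quantize(x, bits):
    """Quantize an amplitude in [-1.0, 1.0] to a signed integer code, as in LPCM.

    Returns (code, reconstructed_amplitude). With `bits` bits there are
    2**bits possible codes, from -2**(bits-1) to 2**(bits-1) - 1.
    """
    scale = 2 ** (bits - 1)
    code = round(x * scale)                      # round to the nearest step
    code = max(-scale, min(scale - 1, code))     # stay inside the available codes
    return code, code / scale


samples = [0.0, 0.3, 0.7, 0.95, 0.2, -0.4, -0.8, -0.6]   # a made-up waveform
results = {}
for bits in (2, 16):
    restored = [quantize(s, bits)[1] for s in samples]
    worst = max(abs(s - r) for s, r in zip(samples, restored))
    results[bits] = (restored, worst)
    print(f"{bits:>2}-bit: {2 ** bits} possible codes, worst rounding error {worst:.6f}")

print(sorted(set(results[2][0])))   # the only four values a 2-bit signal can take
```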

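In theoretical terms, each added bit increases the dynamic range by about 6.02 dB (16 bit ≈ 96 dB). The professional delivery standard for film and television soundtracks is currently 16 to 24 bit. However, sound editors often work at bit depths up to 32 bit when performing amplitude-based signal processing. Going from a higher bit depth to a lower one requires requantizing the audio, which introduces new quantization errors. Dither, a small amount of low-level noise added before the reduction, randomizes these errors so that they are heard as a steady, low noise floor rather than as distortion.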
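Two ideas from this paragraph can be checked numerically: the roughly 6.02 dB gained per bit (20 × log10(2)), and the effect of dither when reducing bit depth. This is a minimal sketch. It assumes TPDF (triangular) dither, which is one common choice, and uses a hypothetical test tone only 0.4 of a step tall at 8 bits. The function names are hypothetical. It is not a mastering-grade implementation.

```python
import math
import random


def dynamic_range_db(bits):
    """Theoretical dynamic range of linear PCM: 20*log10(2**bits), about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bits)


def reduce_bit_depth(samples, bits, dither=True, seed=0):
    """Requantize float samples (-1.0 to 1.0) to `bits`, optionally dithering first.

    TPDF dither: the sum of two independent uniform random values of
    +/- half a step each, added before rounding.
    """
    rng = random.Random(seed)                 # seeded so the sketch is repeatable
    scale = 2 ** (bits - 1)
    out = []
    for x in samples:
        v = x * scale                         # amplitude measured in steps
        if dither:
            v += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        code = max(-scale, min(scale - 1, round(v)))
        out.append(code / scale)
    return out


for b in (16, 24):
    print(f"{b}-bit: {dynamic_range_db(b):.1f} dB")

bits = 8
step = 1 / 2 ** (bits - 1)
quiet = [0.4 * step * math.sin(2 * math.pi * 440 * n / 48_000) for n in range(48_000)]
plain = reduce_bit_depth(quiet, bits, dither=False)
dithered = reduce_bit_depth(quiet, bits, dither=True)
print("undithered output contains any signal:", any(plain))   # the tone rounds away
# Project the dithered output onto the original tone: a value near 1.0 means
# the tone survived the reduction, now sitting under a low noise floor.
recovered = sum(d * q for d, q in zip(dithered, quiet)) / sum(q * q for q in quiet)
print(f"tone recovered after dithering: {recovered:.2f}")
```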

Audio Compression

PCM (pulse code modulation) is a non-compressed, or full-resolution, digital audio format. Non-compressed audio represents the highest quality available for media production. File formats such as AIFF, WAV, and BWF (Broadcast Wave Format) wrap PCM data so that computers can read it. In a perfect world, all audio for media would be full resolution. However, due to file storage and transfer limitations for Internet, packaged media, and broadcast formats, audio compression continues to be a practical reality. Audio codecs (compression/decompression) have been developed to meet the delivery requirements of various release formats. Of these, the most common are MP3, AAC, AC-3, and DTS. The available settings on a codec have a direct impact on the frequency response of the resultant files. For example, higher bit rates preserve more of the high-frequency content in the original file. Therefore, it is important to know the allowable bit rate and file size limitations for specific release formats when encoding the audio. For Internet delivery, streaming bit rates are typically lower than those for downloads. Though the differences between high- and low-resolution audio may not be readily heard on consumer monitors or headphones, these differences are dramatic when played through high-quality speakers like those found in theaters. Therefore, it is important to understand what type of monitors your potential audience will be listening on and to compress accordingly.
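The storage pressure that drives compression is easy to quantify. The data rate of uncompressed PCM is sample rate × bit depth × channels. This minimal sketch compares that figure with a 320 kbps encode, an example bit rate chosen as an assumption. It then uses Python's standard `wave` module to wrap one second of 16-bit PCM in a WAV container. The function name and test tone are hypothetical. The ratio shown is only a data reduction and says nothing about perceived quality.

```python
import io
import math
import struct
import wave


def pcm_bitrate_kbps(sample_rate, bit_depth, channels):
    """Data rate of uncompressed PCM in kilobits per second."""
    return sample_rate * bit_depth * channels / 1000


full_res = pcm_bitrate_kbps(48_000, 24, 2)    # 24-bit stereo at 48 kHz
cd_style = pcm_bitrate_kbps(44_100, 16, 2)    # 16-bit stereo at 44.1 kHz
print(full_res, "kbps and", cd_style, "kbps")
print(f"vs. a 320 kbps encode: {full_res / 320:.1f}:1 data reduction")

# One second of a 1 kHz tone as 16-bit mono PCM (little-endian signed integers).
sample_rate = 48_000
frames = b"".join(
    struct.pack("<h", int(32767 * 0.5 * math.sin(2 * math.pi * 1000 * n / sample_rate)))
    for n in range(sample_rate)
)
buf = io.BytesIO()
with wave.open(buf, "wb") as wav:   # the WAV container adds a header around the PCM data
    wav.setnchannels(1)
    wav.setsampwidth(2)             # 2 bytes per sample = 16-bit
    wav.setframerate(sample_rate)
    wav.writeframes(frames)
print(len(buf.getvalue()) - len(frames), "header bytes around", len(frames), "bytes of PCM")
```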