Sound Design Theory

Overview

The pairing of audio and visual media is part of our everyday experiences. Armed with an iPod and earbuds, we intuitively underscore our daily commutes and recreation time through carefully selected song lists. We visualize while listening to the radio and we audiate while reading. In the absence of either sound or picture, audiences will create that which is not present, potentially redefining the original intention of any given work. Therefore, in narrative animation, we must carefully select the content used in this pairing as we guide the audience through the story. To accomplish this, we must first understand the unique relationship of sound paired with image. Since the release of Steamboat Willie in 1928, directors, editors, and composers have explored this relationship as they developed the aesthetic for creative sound design.

No theory is good except on condition that one use it to go beyond.

Sound Classifications

Chion Classifications

Michel Chion (a noted sound theorist) classifies listening in three modes: causal, semantic, and reduced. Chion’s typology provides a useful design framework for discussing sound’s function in a soundtrack. Causal sound is aptly named, as it reinforces cause and effect (see a cow, hear a cow). Sound editors often refer to these as hard effects, especially when they are used in a literal fashion. The saying “we don’t have to see everything we hear, but we need to hear most of what we see” describes the practical role that causal sounds play in the soundtrack. The term semantic is used to categorize sound in which literal meaning is the primary emphasis. Speech, whether in a native or foreign tongue, is a form of semantic sound. Morse code is also semantic and, like foreign languages, requires a mechanism for translation (e.g. subtitles) when used to deliver story points. Even the beeps placed over curse words imply a semantic message. The term reduced refers to sounds that are broken down, or reduced, to their fundamental elements and paired with new objects to create new meanings. Animation provides many opportunities for this non-literal use of sound.

Diegetic and Non-Diegetic Sound

The term diegetic denotes sound that is perceived by the characters in the film. Though unbeknownst to the characters, diegetic sound is also perceived by the audience. This use of sound establishes a voyeuristic relationship between the characters and their audience. Non-diegetic sound is heard exclusively by the audience, often providing them with more information than is provided to the characters. When discussing music cues, the terms source and underscore are used in place of diegetic and non-diegetic. Diegetic sound promotes the implied reality of a scene. Principal dialogue, hard effects, and source music are all examples of diegetic sound. In contrast, non-diegetic components of a soundtrack promote a sense of fantasy. Examples of non-diegetic sound include narration, laugh tracks, and underscore. The cinematic boundaries of diegesis are often broken or combined to meet the narrative intent at any given moment.

They forgot to turn off the ambience again!

Narrative Functions

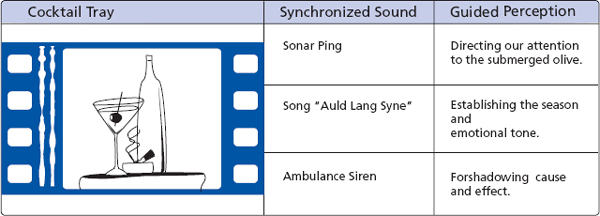

Guided Perception

The images presented within a scene are often ambiguous in nature, inviting the audience to search for non-visual clues as they attempt to clarify both meaning and intensity. Within these shots are creative opportunities to guide the audience perceptually from frame to frame. The editors and composers for the early Warner and MGM shorts understood this and became masters at guiding the audience’s perception. They accomplished this by using non-literal sound for subjective scenes. Disney also uses this design approach when producing films for younger audiences. For example, in Beauty and the Beast, the final conflict between the villagers and the castle’s servants is underscored with a playful rendition of “Be Our Guest.” Here, the score guides the audience to a non-literal interpretation of the visuals, promoting a “softer” perception of the content, thus making the film appropriate for a wider audience.

If that squeak sounds kind of sad and you never meant it to sound sad, it will throw it that way, so you can’t ignore it.

Drawing the Audience into the Narrative

The title sequence is typically the first opportunity for soundtrack to contribute to the storytelling process. Like a hypnotist, the sound design team and composer develop elements for the title sequence to draw the audience out of their present reality and into the cinematic experience. Sound designers and re-recording mixers take great care to keep the audience in the narrative. At the conclusion of the film, the soundtrack can provide closure while transitioning the audience back to their own reality.

Directing the Eye

Re-recording mixers create sonic foregrounds, mid-grounds, and backgrounds using volume, panning, delay, and reverb. They choose what the audience hears and the perspective from which it is heard. Subtle visuals can be brought into focus with carefully placed audio that directs the eye (Figure 2.2).

Establishing or Clarifying Point of View

In a scene from Ratatouille (2007), Remy and Emile (rats) are being chased by an elderly French woman in her kitchen. When we experience the scene through their perspective, the principal dialogue is spoken in English. However, when the perspective shifts to the elderly woman, their dialogue is reduced to rat-like squeaks. This is an example of dialogue effectively transitioning with the point of view (POV) within a scene. In Toy Story (1995), Gary Rydstrom (sound designer) and Randy Newman (composer) contrast diegetic sound and underscore in a street-crossing scene. The world as heard from the perspective of the toys is primarily musical. In contrast, distance shots of the toys are covered with hard effects and BGs. Cinema has borrowed a technique from opera where specific thematic music is assigned to individual characters. This approach to scoring is referred to as leitmotif and is often used to establish or clarify the POV of a scene, especially where more than one character is present. Sound designers often pitch shift or harmonize props in an attempt to create a sonic distinction between characters.

Clarifying the Subtext

During the silent film era, actors were prone to exaggerated expressions or gestures to convey the emotion of a scene. With the arrival of synchronized sound, the actor was liberated from exaggerated acting techniques. When a character is animated with a neutral or ambiguous expression, an opportunity is created for the soundtrack to reveal the character’s inner thoughts or feelings. When a music cue is written specifically to clarify the emotion of the scene, it is referred to as subtext scoring. Subtext scoring is more immediate and specific than dialogue at conveying the emotion. The subtext can also be clarified through specific design elements or BGs.

You have to find some way of saying it without saying it.

Contrasting Reality and Subjectivity

Though animation is subjective by nature, there exists an implied reality that can be contrasted with subjective moments such as slow motion scenes, montage sequences, and dream sequences. Subjective moments are often reinforced sonically by means of contrast. One common design approach to subjective moments is to minimize or remove all diegetic sound. This approach is often used in montage sequences where music is often the exclusive sound driving the sequence. In slow motion sequences, diegetic sound is typically slowed down and lowered in pitch to simulate analog tape effects. Though clichéd in approach, dream sequences are often reinforced with additional reverb to distance the sound from reality.
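The tape-style slow-motion effect described above can be approximated numerically. The sketch below is a minimal illustration, not a production tool: the function names are hypothetical, the resampler uses plain linear interpolation, and it assumes a mono clip stored as a list of floats. Playing material at a fraction of its original speed lengthens it and lowers its pitch by 12 × log2(speed ratio) semitones, which is the behavior analog tape exhibits when slowed.

```python
import math


def varispeed_pitch_shift(speed_ratio):
    """Pitch change in semitones when playback speed is scaled by speed_ratio."""
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    return 12.0 * math.log2(speed_ratio)


def varispeed(samples, speed_ratio):
    """Resample a mono clip so it plays at speed_ratio times the original speed.

    A ratio below 1.0 slows the clip down (longer and lower in pitch),
    the way slowing an analog tape would. Linear interpolation is used
    for brevity; it is an assumption, not a recommended resampling method.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    n_out = int(len(samples) / speed_ratio)
    out = []
    for i in range(n_out):
        pos = i * speed_ratio            # fractional read position in the source
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


# Half speed: twice as long, one octave (12 semitones) lower.
slowed = varispeed([0.0, 1.0, 2.0, 3.0], 0.5)
octave_drop = varispeed_pitch_shift(0.5)
```

Because duration and pitch are tied together in this approach, it suits the "analog tape" character the text describes; independent control of time and pitch would need a different technique.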

Extending the Field of Vision

There is a saying that a picture is worth a thousand words. In radio (theater of the mind), sound is worth a thousand pictures. When objects producing sound are visible, the sound is referred to as sync sound or on-screen. Off-screen sound can introduce and follow objects as they move on and off-screen, thereby reinforcing the visual line established through a series of shots. Our field of vision is limited to roughly 180°, requiring visual cuts and camera movements to overcome this limitation. Multi-channel soundtracks, on the other hand, are omni-directional and can be used to extend the boundaries of a shot beyond the reach of the eye. The classic camera shake used by Hanna-Barbera is a visual approach to storytelling where off-screen sound is used to imply that which is not shown. This approach serves to minimize the perceived intensity of the impacts while avoiding costly and time-consuming animation. Multi-channel mixing has the potential to mirror front-to-back movements while extending the depth of a scene to include the space behind the audience. With the growing use of stereoscopic projection, there is increased potential for multi-channel mixing to mirror the movement of three-dimensional images.

The ears are the guardians of our sleep.

Tension and Release

Tension and release is a primary force in all art forms. Film composers have many means of creating tension, such as the shock chords (dissonant clusters of notes) featured prominently in the early animations of the Warner Bros. and MGM studios. Another approach common to this period was the harmonization of a melody with minor 2nds. The suspension or resolution of a tonal chord progression or the lack of predictability in atonal harmonic motion can produce wide ranges of tension and release. A build is a cue that combines harmonic tension and volume to create an expectation for a given event. Some sounds have inherent qualities that generate tension or release and are often used metaphorically. For example, air-raid sirens, crying babies, emergency vehicles, growling animals, and snake rattles all evoke tension. Conversely, the sounds of crickets, lapping waves, and gentle rain produce a calming effect. Either type of sound can be used directly or blended (sweetened) with literal sounds to create a subliminal effect on the audience.

Continuity

Continuity has always been an important aesthetic in filmmaking, regardless of length. In a narrative animation, continuity is not a given; it must be constructed. For this reason, directors hire a supervising sound editor to ensure that the dialogue and SFX are also cohesive. In Disney’s Treasure Planet (2002), director Ron Clements developed a 70/30 rule to guide the design for the production. The result was a visual look that featured a blending of eighteenth-century elements (70 percent) with modern elements (30 percent). Dane Davis, sound designer for Treasure Planet, applied this rule when designing SFX and dialogue to produce an “antique future” for this soundtrack. Continuity is not limited to the physical and temporal realms; emotional continuity is equally important. The score is often the key to emotional continuity. Perhaps this is why few scores are written by multiple composers. It takes great insight to get to the core of a character’s feelings and motivations and even greater talent to express those aspects across the many cuts and scenes that make up a film. When developing cues, continuity can be promoted through instrumentation. For example, in The Many Adventures of Winnie the Pooh (1977), composer Buddy Baker assigned specific instruments to each of the characters.

I was looking for a special sound and it was kind of keyed into the design of the movie.

Promoting Character Development

An effective soundtrack helps us identify with the characters and their story. Animators create characters, not actors. Much of the acting occurs in the dialogue while SFX reinforce the character’s physical traits and movements. The score is perhaps the most direct means of infusing a character with emotional qualities. Character development is not limited to people. In animation, virtually any object can be personified (anthropomorphism) through dialogue, music, and effects. Conversely, humans are often caricatured in animation. For example, the plodding footsteps of Fred Flintstone are often covered with a tuba or bassoon to emphasize his oversized physique and personality.

Theoretical Concepts Specific to Dialogue

Dialogue is processed in a different manner than SFX or music in that audiences are primarily focused on deriving meaning, both overt and concealed. Consequently, dialogue tracks do not require literal panning and are most often panned to the center. One notable exception to this practice can be heard in the 1995 film Casper, where the dialogue of the ghost characters pans around the room as only ghosts can. Narration, like underscore, is non-diegetic, promoting the narrative fantasy and requiring a greater willing suspension of disbelief. Narration is sometimes processed with additional reverb and panned in stereo, placing it in a different narrative space than principal dialogue. When Fred Flintstone speaks directly to the camera, he is breaking the sonic boundary (diegesis) that normally exists between the characters and the audience. This style of delivery is referred to as breaking the fourth wall. One clever application of both concepts is the introduction of principal dialogue off-screen, suggesting narration, and then through a series of shots, revealing the character speaking directly to camera. This allows dialogue to deliver story points while also minimizing animation for speech.

Theoretical Concepts Specific to Score

In addition to establishing the POV of a scene, an established leitmotif can allow audiences to experience the presence of a specific character, even when that character is off-screen. The abstract nature of animation often calls for a metaphoric sound treatment. For example, in A Bug’s Life (1998), the back and forth movement of a bowed violin is used to exaggerate the scratching motion of a bug in a drunken state. The use of musical cues to mimic movement is known as isomorphism. Ascending/descending scales and pitch bending add directionality to isomorphism. The use of musical instruments as a substitution for hard effects gained favor in the early years of film as it facilitated coverage of complex visual action without requiring cumbersome sound editing. Percussion instruments in particular became a fast and effective means of representing complex rhythmic visuals. The substitution of musical intervals for Foley footsteps (sneak steps) began as early as 1929 with Disney’s The Skeleton Dance. The melodic intervals and synchronized rhythm help to exaggerate the movement while specifying the emotional feel. Anempathetic cues are deliberately scored to contrast the implied emotion of a scene. This technique is typically used to increase an audience’s empathy for the protagonists or to mock a character. Misdirection is a classic technique where the score builds toward a false conclusion. This technique is often used for dream sequences or as a setup for visual gags. Acoustic or synthetic instrumentation can be used to contrast organic and synthetic characters or environments. The size of instrumentation or orchestration establishes the scale of the film or the intimacy of a scene: a small ensemble can suggest intimacy, whereas a full orchestra can give a scene an epic feel. Score can function transitionally as well. For example, a cue can morph from source-to-underscore as a scene transitions to a more subjective nature.

You’re trying to create a sonic landscape that these drawings can sit on . . . and these characters can live within.

Theoretical Concepts Specific to SFX

Sound effects are an effective means of establishing narrative elements such as time period, location, and character development. For example, seagulls imply the ocean, traffic implies urban settings, and machinery implies industry. World War II air-raid sirens and steam-powered trains are historical icons that help to clarify the time period. Characters are often developed through associated props, such as a typewriter (journalist) or a whistle blast (traffic cop). Many sounds elicit emotional responses from an audience due to their associative nature. Sounds have the potential of revealing or clarifying the underlying meaning or subtext of a scene. For example, the pairing of a gavel with a cash register (sweetening) implies that justice is for sale. Visual similarities between two objects such as a ceiling fan and a helicopter blade can be reinforced by morphing their respective sounds as the images transition; this is referred to as a form edit (Table 2.1). Sound effects are often used to de-emphasize the intensity of the visuals, especially when used to represent off-screen events and objects.

Woodpecker (country) | Jackhammer (urban)

Flock of geese (organized activity) | Traffic jam (breakdown)

Alarm clock (internal) | Garbage truck backing up (external)

Telegraph (antique) | Fax machine (modern)

Typewriter (documentation) | Gunshot (flashback to event)

Overview

In the early years of film, picture editors handled many of the tasks now handled by sound editors. They intuitively understood how the picture editor directed the flow of images, established a rhythm, maintained continuity, and facilitated the narrative. They were also sensitive to the implications of camera angles, camera movement, and framing. As a result, their sound edits were visually informed. As sound design evolves into a more specialized field, it is important for sound editors to maintain this perspective. This can be a challenging proposition, for when properly executed, picture editing is nearly transparent to the audience. Sound designers and composers must develop an awareness of visual cues and their implications for sound design. The purpose of this section is to establish a common language for both picture and sound editors. There is a danger when discussing this broad a topic in a shortened format. While reading the following, be mindful that the craft and creativity of editing cannot be reduced to a set of rigid rules. As you work on each film, allow that film to evolve by never allowing methodology to be a substitute for personal logic and feelings. No filmmaker ever hoped his audience would leave the theater saying “Boy, that was a well-edited film.”

In Hollywood there are no rules, but break them at your own peril.

Shots

The most basic element of the film is the frame. A series of uninterrupted frames constitutes a shot or, in editor language, a clip. A sequence of related shots is assembled to create a scene. Some shots are cut and digitally spliced while others are given a transitional treatment such as a wipe or dissolve. In most cases, the goal of picture and sound editing is to move from shot to shot with the greatest transparency. Storyboards, shot lists, and spotting sessions are all important means of communicating the vision of the film and the nuance associated with each frame, shot, and edit.

Framing

During the storyboard stage, the animator designs a series of shots to develop a scene. There is a multitude of approaches to individual shots and a wide range of verbiage to describe each. One aspect of the shot is framing, which establishes the distance of the audience from the subject. From close-up to extreme long shot, framing has its counterpart in the foreground and background of the soundtrack. For example, close-up shots (CU) isolate an action or expression, eliminating peripheral distractions. Therefore the soundtrack supports this perspective by minimizing off-screen sounds. Long shots, on the other hand, provide a more global perspective, and are better suited for sonic treatments of a more ambient nature. When designing sound for animation, it is important to consider individual shots in the larger context of a sequence. For example, in an action sequence, it is common to frame the same subject from many different perspectives. As a result, the subject appears to move faster or slower depending on the framing. To promote continuity, this type of sequence is often scored at a continuous tempo, creating a unified sense of pacing when played through the scene.

Camera Placement

Camera placements offer a variety of ways to portray a character. The most common camera placement is at eye level. This placement implies a neutral or equal relationship with the subject. When the camera angle points up to a character, the intent is often to indicate a position of power. The reverse might also be true but it is important to clarify intent before designing sound based on camera placement alone. The over-the-shoulder shot blocks our view of the character’s mouth, creating an opportunity to add or alter lines of dialogue in post-production. This placement also shifts the perspective to another character, often revealing that character’s reaction to what is being said.

Camera Movement

Camera movements can be horizontal (pan), vertical (tilt), or move inward and outward (zoom). Both pan and tilt shots are often motivated by the need to reveal more information in an extended environment. Pan shots are often underscored and/or designed with static backgrounds that provide continuity for the camera move. Tilt and zoom shots are more common in animation as they often promote an exaggerated feel. Isomorphic cues are commonly used to score these types of camera movements. A pull back shot gradually moves away from a subject to reveal a new environment. This type of shot can be supported by morphing from one set of BGs to another or by opening up the sound field in the mix. Though not a camera move, the shift of camera focus from the foreground to the background, known as pulling focus, is a natural transition within a scene. The re-recording mixer can mirror this transition by shifting elements of the soundtrack from the sonic background to the sonic foreground.

Movement of Objects

Fly-bys and fly-throughs refer to objects moving in relationship to a fixed camera position to create the illusion that an object is passing left to right or front to back. Fly-bys are reinforced by panning sound left or right as indicated by the on-screen movement. Fly-throughs can be realized by panning to and from the screen channels and the surround channels as indicated by the on-screen movement. When panning bys of either type, it is still important to observe the line. Processing the sound object with Doppler and/or adding whoosh effects can provide additional motion and energy to the visual by. Doppler is the dynamic change of pitch and volume of an object as it approaches and passes by the audience.
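The Doppler behavior described above follows from standard acoustics and can be sketched in a few lines. The example below is an illustration under stated assumptions rather than a description of any particular plug-in: the function names are hypothetical, the listener is stationary, the object travels in a straight line at constant speed, the speed of sound is taken as 343 m/s, and loudness simply falls off with the inverse of distance.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed)


def doppler_frequency(source_hz, approach_speed):
    """Frequency heard by a stationary listener.

    approach_speed is in m/s: positive while the source is approaching,
    negative while it is moving away.
    """
    if abs(approach_speed) >= SPEED_OF_SOUND:
        raise ValueError("approach_speed must be below the speed of sound")
    return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND - approach_speed)


def flyby(source_hz, speed, closest_distance, t):
    """Heard frequency and relative gain for a straight-line fly-by.

    The object passes the listener at t = 0 with closest_distance metres
    between them. Gain is 1.0 at the closest point and falls off as 1/r.
    """
    if closest_distance <= 0:
        raise ValueError("closest_distance must be positive")
    x = speed * t                        # position along the path
    r = math.hypot(x, closest_distance)  # distance to the listener
    approach_speed = -speed * x / r      # rate at which the distance shrinks
    gain = closest_distance / r
    return doppler_frequency(source_hz, approach_speed), gain


# A 440 Hz source passing 5 m away at 30 m/s, sampled before, at, and after the pass.
before = flyby(440.0, 30.0, 5.0, -1.0)
at_pass = flyby(440.0, 30.0, 5.0, 0.0)
after = flyby(440.0, 30.0, 5.0, 1.0)
```

The pitch sits above the source frequency on approach, matches it at the closest point, and drops below it afterward, while the gain peaks at the pass. Those are the two cues the paragraph above attributes to Doppler.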

Perspective Shot (POV)

In a perspective (POV) shot we experience the action subjectively through the eyes of a specific character. Just as we are seeing with their eyes, so too are we hearing through their ears. A good example of POV can be heard in The Ant Bully (2006), where the audience views the action through the mask of a bug exterminator. This perspective was achieved by contrasting the exterminator’s breathing (interior) with the muffled sound of the environment (exterior).

Insert Shots and Cutaways

An insert shot cuts from a shot framed at a greater distance to a close-up shot. Insert shots are used to reveal detailed information like the time on a watch or a message written on a note. The insert shot can be further exaggerated by briefly suspending the time of the reveal by freezing the frame. One effective design approach to freeze-frames is to cut the SFX and dialogue (diegetic elements) but play the underscore (non-diegetic and linear). A cutaway shot moves to a framing of greater distance, providing information from larger objects like a grandfather clock or a billboard sign. Sound elements that hit with a reveal are typically timed a few frames late to suggest the character’s reaction time. If the soundtrack does not hit when the information is revealed, it is said to play through the scene. In the early years of animation, music cues hit on much of the action, a technique referred to as Mickey Mousing. This technique is used more sparingly today and many directors view its use in a negative context. However, in the hands of a skilled composer, cues can hit a significant portion of the action without calling attention to the technique.
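The practice of placing a hit a few frames after a reveal comes down to simple frame arithmetic. The sketch below is only an illustration: the function names are hypothetical, and both the 24 fps frame rate and the three-frame delay are assumed values, since the text specifies neither.

```python
def frames_to_ms(frames, fps=24.0):
    """Convert a frame count to milliseconds at the given frame rate."""
    return frames * 1000.0 / fps


def delayed_hit_time(reveal_frame, delay_frames, fps=24.0):
    """Time in seconds at which a sound should hit when it is placed
    delay_frames after the frame where the information is revealed."""
    return (reveal_frame + delay_frames) / fps


# A reveal on frame 240 with the hit placed 3 frames late (assumed values).
offset_ms = frames_to_ms(3)             # 125.0 ms of "reaction time"
hit_seconds = delayed_hit_time(240, 3)  # 10.125 s into the shot
```

At 24 fps each frame lasts about 42 ms, so a delay of a few frames stays short enough to read as a reaction rather than a sync error.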

Cuts

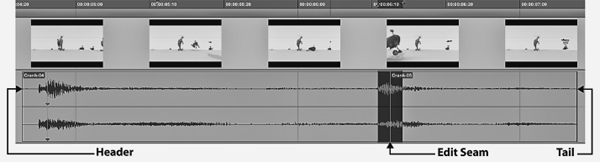

A cut is the most common type of edit and consists of two shots joined without any transitional treatment. In Walter Murch’s book In the Blink of an Eye (2001), Murch discusses the cut as a cinematic experience unique to visual media and dreams. As part of our “willing suspension of disbelief,” audiences have come to accept cuts as a normal part of the film experience. Sound editors often use the terms audio clip and audio region interchangeably. When discussing sound edits, they refer to the beginning of a clip as the header, the end of the clip as the tail, and a specific point within the clip as a sync point. The point where two clips are joined is called the edit seam (Figure 2.5).

Figure 2.5 Audio Clip Header, Edit Seam, and Tail

The question of where to cut involves timing and pacing. The question of why to cut involves form and function. When a visual edit interrupts a linear motion such as a walk cycle, the edit jumps out at the audience. Jump cuts present a unique challenge for the sound editor in that any attempt to hard sync sound to the linear motion will further advertise the edit. If sound is not required for that specific motion, it is better to leave it out altogether. However, if sound is essential, the sound editor must find a way to cheat the sound in an effort to minimize continuity issues. This is a situation where linear music can be used effectively to mask or smooth the visual edit.

Transitions

Dissolves

A visual dissolve is a gradual scene transition in which the outgoing and incoming shots overlap. Dissolves are used to indicate the passage of time or a change in location. The sonic equivalent of a visual dissolve is an audio cross-fade, a technique commonly used in connection with visual dissolves. In Antz (1998), as the male character Z waits excitedly for his love interest Princess Bala to join him on a date, the instrumentation of the underscore gradually thins through the many dissolves, finally ending on a solo instrument to play his isolation and disappointment at being stood up.
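An audio cross-fade of the kind paired with dissolves can be sketched as two overlapping gain ramps. The example below is a minimal illustration with hypothetical function names. It assumes an equal-power (sine/cosine) curve, which is one common choice rather than something the text prescribes, and it treats the overlapping regions as equal-length lists of mono samples.

```python
import math


def crossfade_gains(t):
    """Equal-power gains at fade position t, where 0.0 is the start
    (outgoing only) and 1.0 is the end (incoming only)."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0.0 and 1.0")
    angle = t * math.pi / 2.0
    return math.cos(angle), math.sin(angle)  # (outgoing gain, incoming gain)


def equal_power_crossfade(outgoing, incoming):
    """Mix the overlapping regions of two clips with an equal-power curve."""
    if len(outgoing) != len(incoming):
        raise ValueError("overlap regions must be the same length")
    n = len(outgoing)
    mixed = []
    for i in range(n):
        t = i / (n - 1) if n > 1 else 1.0
        g_out, g_in = crossfade_gains(t)
        mixed.append(outgoing[i] * g_out + incoming[i] * g_in)
    return mixed


# Fade a steady outgoing signal into silence over five samples.
tail = equal_power_crossfade([1.0] * 5, [0.0] * 5)
```

The squared gains always sum to one, so uncorrelated material such as two different BGs keeps roughly constant perceived level through the overlap. A linear curve would dip in the middle for that kind of material.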

Wipes

A wipe is a transitional device that involves pushing one shot off and pulling the next shot into place. Unlike the dissolve, there is no overlap in a wipe. Blue Sky uses wipes cleverly in the Ice Age films, integrating seasonal objects like leaves and snow to reinforce the passage of time. This approach lends itself to hard effects, BGs, and score. In The Flintstones television series, wipes are frequently used and often scored with cues timed specifically to the transition.

Fades

A fade uses black to transition the audience in and out of scenes. Fade-outs indicate closure and are timed to allow the audience time to process what has recently transpired. Closure can be supported through music cues that harmonically resolve or cadence. In many episodes of The Flintstones, Hoyt Curtin’s music cues would half cadence prior to the first commercial break and fully cadence prior to the second commercial break, indicating the resolution of the first act. Fade-ins invite the audience into a new scene. BGs, underscore, and dialogue are often introduced off-screen as the film fades in from black. The mixer typically smooths fade transitions by gradually increasing or decreasing levels in the soundtrack.

Sound Transitions

Not all sound edits are motivated by the literal interpretation of picture edits. As discussed earlier, pre- and post-lap techniques are useful transitional devices. Pre-lapping sound or score to a subsequent scene can be an effective smoothing element when moving between shots, whereas a hard cut of a SFX or BG can advertise the transition and quickly place the audience in a new space. Post-laps allow the audience to continue processing the narrative content or emotion of the preceding scene. In many cases, audio simply overlaps from cut to cut. This technique is used for dialogue, SFX, and source music. A ring-out on a line of dialogue, music cue, or SFX can be a very subtle way to overlap visual edits. Ring-outs are created by adding reverb at the end of an audio clip, causing the sound to sustain well after the clip has played. This editing technique will be covered more extensively in Chapter 6. Dialogue is sometimes used to bridge edits. For example, in Finding Nemo (2003), the backstory is delivered through a series of characters handing off the dialogue in mid-sentence from cut to cut. With each of these transitional treatments, there are no hard-and-fast rules to guide the editor. This is why editing is both an art and a craft.
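The idea behind a ring-out, a decaying tail that sustains past the end of the clip, can be sketched with a single feedback delay line. This is a deliberately crude stand-in for reverb, not how a studio reverb works: the function name and all parameter values are hypothetical, and the clip is assumed to be a list of mono samples.

```python
def ring_out(clip, delay_samples, feedback, tail_samples):
    """Extend a clip with a decaying tail using one feedback delay line.

    Each output sample is the input plus a scaled copy of the output from
    delay_samples earlier, so energy keeps recirculating and fading after
    the clip itself has ended. tail_samples of silence are appended to
    give the tail room to ring.
    """
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0.0, 1.0) so the tail decays")
    padded = list(clip) + [0.0] * tail_samples
    out = []
    for n, x in enumerate(padded):
        echo = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + feedback * echo)
    return out


# A single-sample click rings on at half the level every two samples.
ringing = ring_out([1.0], delay_samples=2, feedback=0.5, tail_samples=4)
```

In practice the tail would come from a proper reverb, but the sketch shows why the region must be lengthened: without the appended silence there is nowhere for the sustain to play across the picture cut.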

Scenes

Parallel Edit

Parallel editing or cross cutting is designed to present two separate but related characters or actions through a series of alternating shots. This editing approach often implies that the actions are occurring simultaneously. Though the audience is made aware of this relationship, the characters are often unaware; this is an important design consideration. When a music cue plays through the scene, it typically indicates that characters on both sides of the edit are aware of each other and the presenting conflict. If the sound design and score are made to contrast with each cut, this typically indicates that the characters are unaware of their shared experience.

Montage Sequence

A montage sequence consists of rapid visual edits designed to compress the narrative. Montage sequences typically feature songs or thematic score with little or no dialogue or SFX. The songs used in montage sequences often contain lyrics that relate to the narrative (narrative lyrics). Songs or thematic score contain melodic lines that create linear motion across the non-linear video edits. In a scene from Bee Movie (2007), Barry B. Benson falls asleep in the pool of honey. During the dream sequence that follows, Barry’s relationship with love interest Vanessa Bloome develops through montage and is scored with the song “Sugar, Sugar,” a clever play on words.

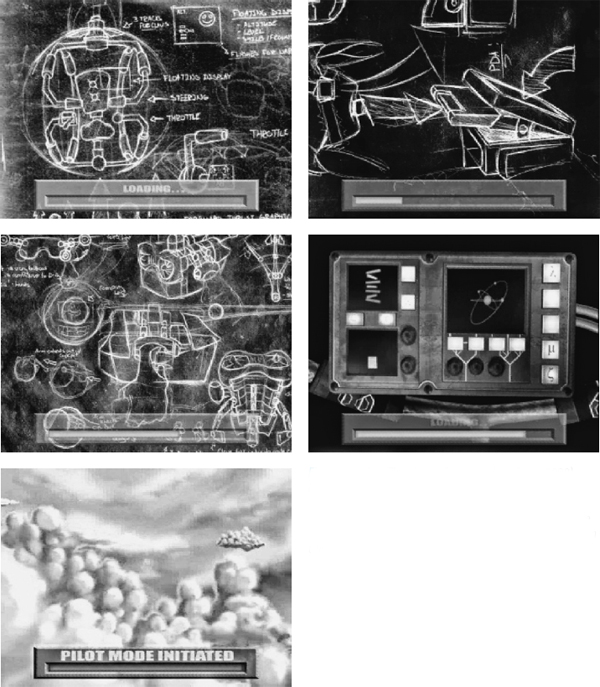

Time-Lapse and Flashback Sequences

Short form animation often seeks to compress the narrative through the use of temporal devices such as time-lapse and flashback sequences. Time-lapse is an effective means of compressing the narrative. It differs from the montage largely in the way individual shots are transitioned and in its use of diegetic sound. If the sequence does not feature diegetic elements, then underscore is an effective means of promoting continuity. When diegetic elements are the focus of each transition (e.g. a television or radio), then sound edits are often deliberately apparent and designed to exaggerate the passage of time. Flashback sequences are an effective means of delivering backstory. Typically, a flashback sequence has a more subjective look and feel than scenes in the present time. This contrast can be achieved by adding more reverb to the mix or by allowing the underscore to drive the scene (Figure 2.6).

The term sound design implies the thought process that begins at the storyboarding stage and continues through the final mix. It is an inclusive approach to soundtrack development that facilitates both technical and creative uses. In film, sound and image are symbiotic. Consequently, sound designers and composers must integrate visual thought into their creative process as animators must integrate aural thought into theirs. The ability to design and execute a film from this mutual perspective will be seen, heard, and, most importantly, felt by the audience.

If something works when it “shouldn’t” that’s when we have to pay attention and try to figure out why. And that’s when real learning about editing results.