2Dynamic fuzzy autonomic learning subspace algorithm

This chapter is divided into four sections. Section 2.1 analyses the research status of Autonomic Learning. In Section 2.2, we present the theoretical system of autonomic learning subspace based on DFL. In Section 2.3, we provide learning algorithm of Autonomic Learning Subspace based on DFL. The summary of this chapter is in Section 2.4.

2.1Research status of autonomic learning

Autonomic Learning (AL), from the aspect of learning theory, is a kind of self-directed learning mechanism composed of a learner’s attitude, ability, and learning strategies. For example, it can refer to the ability of individuals to guide and control their learning, set learning goals, choose different learning methods and learning activities according to different tasks, monitor the learning process, evaluate the learning results, and help others according to the situation. AL usually refers to active, conscious, and independent learning, as opposed to passive, mechanical, and receptive learning. It is the basis of individual lifelong learning and lifelong development. AL has always been an important issue in education and psychology, and it is also a hot topic in the field of machine learning [1].

Alearningsystemmustbeabletodetect,plan,experiment,adapt,anddiscover[2]. These activities, in an integrated way, set a framework for an autonomous system to learn and discover from the environment: LIVE. LIVE consists of three modules: a prediction sequence generator (model application), model construction/modification, and implementation/perception (environmental interface). Prediction is used as the evaluation criterion for model construction; model construction (modification) provides a tool to improve the predictive ability; problem solving using the approximation model detects where and when to improve the model; the creation of a new term provides more components for creating, predicting, and problem solving. This study shows that it is an effective way to discover the process of merging activity from the environment. LIVE has three main advantages: (1) it defines the problem of learning from the environment as that of constructing an environment model in the context of problem solving. This definition distinguishes the internal actions of the individual from the results of the action on the environment. The approximation model of the environment is extracted from the “rough” space determined by the internal ability and a priori knowledge of the individual. The extraction process is guided by the information gathered during the interaction with the environment. (2) The framework provides an integrated approach for coordinating multiple activities such as perception, behaviour, inquiry, experimentation, problem solving, learning, discovering, and creating new terms. LIVE is an execution system that combines these activities. (3) The key to identifying and integrating the framework is the concept of the prediction sequence and learning methods using complementary discrimination. The prediction sequence provides a joint window for planning, exploration, and experimentation. However, there are some shortcomings and deficiencies in the framework. For example, the framework cannot handle uncertainty, meaning that a single noise prediction failure can cause the model to be completely modified. It does not react in real time, and all actions are produced by careful consideration. Thus, it takes a lot of time to make decisions. The biggest limitation of LIVE is the exhaustive search method. The new terminology is too time-consuming and depends on two kinds of biases: (1) the terminology must be set in advance given useful mental relations and functions and (2) behaviour-dependent terminology methods are limited to considering only the features and objects that are relevant to the current conditions and behaviours.

In [3], an autonomous machine learning model based on rough set theory is proposed. In this paper, the problem of uncertainty in decision tables and decision rules is studied and the process of machine learning is controlled by the law that knowledge uncertainty should not change during machine learning. Thus, autonomic machine learning proposes an AL algorithm for default rule knowledge acquisition and the pre-pruning of the decision tree. Rough set theory can solve the fuzzy problem, but the result of solving the dynamic problem is not satisfactory.

Real-world problems can be described in terms of learning spaces. Learning problems can be mapped to multiple learning spaces, and a learning space can be used to describe multiple learning problems [4]. According to the degree of autonomy of the learning individual, the study space is further divided into autonomous and non-autonomous learning spaces. In the actual learning situation, completely independent and completely involuntary learning is rare, with most of the learning occurring somewhere between these two poles. Therefore, instead of dividing the learning space into autonomous or involuntary regions, it is better to consider the degree of autonomy of learning and then distinguish what learning is autonomous and what is not autonomous. This is more conducive to independent learning toward a target, in order to achieve better completion of learning tasks [5].

Thus, the AL system is a dynamic fuzzy system. Therefore, it is necessary to choose a theoretical tool that can solve the problem of dynamic fuzziness. To deal with these complex, dynamic, ambiguous, and unstructured properties in application systems, DFL has been introduced into autonomous machine learning methods, and some basic concepts of fuzzy logic have been proposed [6–8].

This chapter focuses on the following aspects of self-learning:

(1)Learning is a process of reasoning.

As knowledge increases, learning becomes the process of exploring and acquiring information in the knowledge space to satisfy the purpose of learning. It is a continuous process involving all kinds of reasoning and memorizing methods. During each iteration, the system extracts new knowledge or expressions from the input information and basic knowledge. If the acquired knowledge meets the learning goal, it is stored in the knowledge base and becomes part of the basic knowledge. The input information can be observed data, facts, concrete concepts, abstract concepts, knowledge structures, and information about the authenticity of knowledge.

Therefore, learning can be seen as a reasoning process. The process can be summarized as follows: autonomous machine learning based on DFL = independent reasoning based on DFL + memory.

(2)Learning is a transformation operator.

Learning is a process of exploring the knowledge space. This search is done by a series of knowledge transformation operators. The learning ability of a system is determined by the type and complexity of the transformation. According to different types of operations, knowledge transformation can be divided into knowledge generation transformation and knowledge processing transformation. Knowledge generation transformation modifies the content of knowledge by basic logic transformations such as substitution, addition, deletion, expansion, combination, decomposition, and so on, as well as the logical reasoning methods of extension, specialization, abstraction, concretization, analogy, and inverse analogy. Knowledge processing transformation concerns the original change in organizational structure, physical location, and other operations but does not change the content of knowledge. Thus, a learning process can be defined as follows:

Given: input knowledge (I), learning objectives (G), basic knowledge (BK);

Determine: output knowledge (O) to meet G by determining the appropriate transformation T to apply to I and BK.

(3) Learning goals are variable.

The goal is the desired achievement and result. The goal is also variable. From a psychological point of view, the need is the starting point of human behaviour and the source of the target. When a goal is achieved, it means that people have met their material or psychological needs. However, new needs will inevitably arise, becoming new goals. The variability of goals is also manifested in a seemingly unattainable goal, often through a cleverly achieved target workaround.

In the existing control structure, the goal is usually set to be constant and have some limitations. In the existing control structure, taking into account the variability of the control target, a closed-loop control structure diagram illustrates the appropriate transformation: the impact of the target disturbance. In addition to noise disturbance, some degree of target disturbance may exist. The former must be filtered and smoothed out, and the latter must be retained and processed.

(4) Learning environment is variable.

At present, computer science mainly considers the environment through human-machine interface design. However, the human-machine interface cannot adapt the demands of the user to the computer’s ability. To break through this limitation, Brooks presented an artificial intelligence system in the mid-1980s, and Minsky proposed a multi-agent system, which Picard later employed for emotional computing. These problems involve the representation and processing of the environment. In fact, the consideration of environmental representations in adaptive computation is completely different from that in traditional control theory. In traditional control theory, the environment is often characterized by a mathematical description. In fact, the most realistic description of the environment is its own, and the use of other tools to characterize it is only an approximation of the environment itself. Presently, in adaptive computing, the representation of the environment emphasizes the use of true and direct representations.

The direct representation of the natural environment is simple, whereas the direct representation of the social environment must consider the observed behaviour of members of society. Besides the direct expression of the human environment, there is almost no other means of expression; thus, we can only adopt a direct expression similar to the social environment.

In this chapter, the representation of the environment and its transformation will be represented by a dynamic fuzzy model. The basic concepts of DFL are given in Appendix 8.3.

2.2Theoretical system of autonomous learning subspace based on DFL

2.2.1Characteristics of AL

1. Auto-knowledge

An autonomic learner can only be highly autonomous if he or she is at a high level of auto-awareness. Therefore, auto-knowledge is the basis for AL. The learner must have “auto-knowledge” to determine whether the current behaviour is compatible with the learning environment. New conditions and a new environment will give rise to new behaviour; therefore, auto-knowledge should be constantly updated and evolve according to the environment. In other words, auto-knowledge is the internal knowledge base of the AL system [9]. With such auto-knowledge, the learner’s self-learning ability will become stronger.

2. Auto-monitoring

Self-directed learning is a feedback cycle. AL individuals can monitor the effectiveness of their learning methods or strategies and adjust their learning activities based on this feedback. In the process of learning, auto-monitoring refers to the process of self-learning individuals assessing their learning progress using certain criteria. Auto-monitoring is a key process of AL. Self-directed learning is only possible when learners are self-monitoring [8]. Planning can be used to monitor the problem-solving process. Auto-monitoring strengthens the learner’s self-learning ability.

3. Auto-evaluating

The auto-evaluating process is the most important component of AL because it influences not only the ability judgment, task evaluation, goal setting, and expectation of learners but also the individual auto-monitoring of the learning process and the learning result of the self-strengthening [8].

Existing research suggests that auto-evaluating includes self-summary, self-assessment, self-attribution, self-strengthening, and other sub-processes. Self-assessment involves comparing the learning results with the established learning objectives, to confirm which goals have been completed, which goals have not yet been achieved, and then to judge the merits of self-learning. Self-attribution refers to a self-assessment based on the results of learning success or failure, reflecting the reasons for the follow-up learning providing experience or lessons. Self-reinforcement is the process of rewarding or stimulating oneself based on the results of self-assessment and self-attribution, and it often motivates subsequent learning [5].

Behavioural evaluation is a way of simulating the behaviour of AL individuals. When solving a problem, two simple evaluation methods can be selected: the first is to choose the best behaviour to perform, that is, in the current state, that which acts most toward the direction of the best move; the second is to choose a better behaviour, that is, choose an act that offers the superior direction.

When learning an action, the learner autonomously evaluates his or her behaviour using the current evaluation function. The evaluation function is described as follows.

The original objective function value of the learner’s self-learning is that a new situation is obtained after the execution of an action. The corresponding new objective function is denoted by [1, 2, ..., n], where n is the number of objective functions.

The historical evaluation function is adjusted as follows:

where VYi denotes the historical evaluation function, VYi denotes the new evaluation function, and α denotes the weighting coefficient.

Auto-evaluation enhances the AL ability of the learner.

2.2.2Axiom system of AL subspace [7]

The various problems in the real world can be described by learning space. That is mapping learning problems into learning space. A learning problem can be mapped into multiple learning spaces, and a learning space can be used to describe multiple learning problems [4].

Definition 2.1 Learning space: The space used to describe the learning process is called the learning space. It consists of five parts: {learning sample, learning algorithm, input data, output data, representation theory}, which can be expressed as S = {EX, ER, X, Y, ET}.

Definition 2.2 Learning subspace: A spatial description of a specific learning problem, that is, a subset of the learning space, is called the learning subspace.

The axiom system of the AL subspace consists of two parts: (1) a set of initial formulas, namely axioms, and (2) a number of deformation rules, namely deduction rules.

First, on the basis of DFL, we define AL as follows:

Definition 2.3 Autonomic learning: refers to the mapping of an input dataset to an output dataset in an AL space, which can be expressed as According to the process can be divided into 1:1 AL, n:1 AL, 1: n AL, and m: n AL.

AL can be divided into the following modes according to the knowledge stored in the knowledge base (KB): When the KB is the empty set, there is no a priori knowledge, only 1:1 or n:1 AL. As the learning process continues, the KB grows and updates itself, enabling 1: n or m: n AL.

Definition 2.4 Autonomic learning space: The space used to describe the learning process is composed of all AL elements, called the AL Space. It consists of five elements, which can be expressed as where represents the AL space, denotes the AL samples, ER is an AL algorithm, are the input and output data, respectively, and ET is the learning theory.

Definition 2.5 Autonomic learning subspace (ALSS): According to some standard, we divide the AL space into n subspaces:

ALSS is a learning subspace with the highest and lowest limits, namely, In the algorithm, it can be regarded as the subspace of algorithm optimization. In this subspace, some AL individuals are distributed, and the corresponding search ability of the individuals is given, so that they can carry out AL in the corresponding environment.

The relationship between the AL space and ALSS is depicted graphically in Fig. 2.1.

The partition given in Definition 2.5 is complete, and the axioms can be derived as follows:

Axiom 2.1

Axiom 2.2

Axiom 2.3 If

Axiom 2.4 If

2.3Algorithm of ALSS based on DFL

ALSS is a learning subspace with the highest and lowest limits, which can be regarded as the subspace for optimization in the algorithm. Some AL individuals are scattered about this subspace, and as the AL progresses, their AL ability will become stronger and stronger.

2.3.1Preparation of algorithm

AL individuals in the ALSS can be seen as vectors.

1. Definition of variables

The state of an AL individual can be expressed by the vector where is the variable to be optimized; the objective function value represents the learner’s current object of interest, Learner_consistence; represents the distance between AL individuals; Visual represents the perceived distance of AL individuals; Step represents the maximum number of steps moved by AL individuals; and δ represents the congestion degree of AL individuals.

The neighbourhood of AL organization is defined as follows:

2. Description of behaviour

(1)Setting target behaviour

Let the current state of AL individual Learner be and choose a state at random in the corresponding sensing range. If Yi is less than Yj, go forward a step in that direction; otherwise, choose another state at random to judge whether or not the forward condition is satisfied. If the forward conditions are not met after a specified number of attempts, then perform some other behaviour (such as moving a step at random).

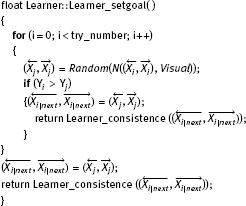

The pseudocode description of this process is shown in Algorithm 2.1, where Random Visual)) represents a randomly selected neighbour in the neighbourhood of the visual distance of

Algorithm 2.1 Setting target behaviour

let be the current state of AL individual Learner, and let n be the number of learning partners while exploring the current neighbourhood (which is dij < Visible). If nm / N (0 < 1), the learning partner centre has more learning objects but is not crowded. Under the condition Yi < Yc, move a step toward the centre of the learning partner; otherwise, perform other behaviours (such as setting the target behaviour).

The pseudocode description of this process is shown in Algorithm 2.2:

Algorithm 2.2 Aggregation behaviour

(3)Imitating behaviour

Let be the current state of AL individual Learner, and explore the neighbour with the best state in the current neighbourhood.

Let n be the number of learning partners of the neighbour If then there is high Learner_consistence near the learning partner without being crowded. Thus, the Learner moves a step toward the learning partner otherwise, some target setting behaviour is performed.

The pseudocode description of this process is shown in Algorithm 2.3.

Algorithm 2.3 Imitating behaviour

The realization of random behaviour is relatively simple. A state in a smaller neighbourhood is selected at random, and Learner moves in that direction. This ensures that the AL object will not be far away from the better ALSS in the blind moving process. Other autonomic learners who did not find the learning goal would conduct stochastic learning behaviour in their neighbourhood D.

In fact, the random learning behaviour is the default target setting behaviour.

(5)Auto-evaluating behaviour

After learning an action, the learner autonomously evaluates his or her behaviour using the current evaluation function. The evaluation function is described as follows.

Let Yi be the original objective function value of Learner, and consider a new situation obtained after performing an action. The corresponding new objective function value is and n denotes the number of objective functions.

The historical evaluation function is adjusted as follows:

where VYi denotes the historical evaluation function, VYi denotes the new evaluation function, and denotes the weighting coefficient.

This auto-evaluation process strengthens the AL ability of Learner. The pseudocode description of this process is shown in Algorithm 2.4.

Algorithm 2.4 Auto-evaluating behaviour

2.3.2Algorithm of ALSS based on DFL

In the process of learning, the changing environment and lack of complete information make it difficult to predict the exact model of the environment. Traditional supervised learning methods cannot perform behaviour learning in an unknown environment, so Learner must exhibit intelligent behaviour. Intensive learning, as an atypical learning method, provides agents with the ability to select the optimal action in the Markov environment, and obviates the need to establish the environment model. This approach has been widely used in the field of machine learning with a certain degree of success. However, intensive learning has some limitations: (1) single-step learning is inefficient and can only accept discrete state inputs and produce discrete actions, but the environment in which machine learning is usually spatially continuous. (2) Discretization of continuous state space and motion space often leads to information loss and dimension disaster. (3) The computational complexity increases exponentially with the increase of the state-action pairs. Trial-and-error interactions with the environment can bring about losses in the system.

In view of the above limitations of reinforcement learning, this section proposes an AL mechanism based on DFL. According to the learner’s AL performance, the learning mechanism is decomposed into multi-step local learning processes. The state space is divided into a finite number of categories, which reduces the number of state-action pairs. After receiving an input state vector, the learner chooses an action to execute through a dynamic fuzzy reasoning system, and continuously updates the weight of the result in the rule base until convergence. Thus, a complete rule base can be obtained, which provides prior information for the learner’s behaviour.

1. Reasoning model of DF

The reasoning process of DFL attempts to solve the problem of dynamic fuzziness. The model can be expressed as:

where DF(A), DF(A1), DF(A2), ..., DF(An) are the prerequisites or conditions of dynamic fuzzy reasoning, DF(C) is the conclusion or posterior of dynamic fuzzy reasoning, and DF(R) is the dynamic fuzzy relation between the premise and the conclusion satisfied by DF(R) ∈ [0, 1] × [←,→]. The premises and conclusions are facts represented by DFL.

The rule base for a dynamic fuzzy inference system is generally composed of n rules in the following form:

where Rj represents the j th rule.

The goal of Q-learning is to learn how to choose the suboptimal or optimal action according to the external evaluation signal in the dynamic environment. The essence is of a learning process of dynamic decisions. However, the relevant environmental information may be lost when the learner does not have complete knowledge of the environment. Thus, a trial-and-error method must be used, and so the efficiency of the algorithm is not high. Sometimes, learning in an unknown environment can also involve some risks. One way to reduce these risks is to use an environmental model. The environmental model can be built using the experience gained by performing the relevant tasks before. Using environmental models can facilitate the results of the action without risking losses.

The environment model is a function from the state and action (St+1, a) to the next state and the enhanced value (St+1, r). The model is established in the following two ways: first, the agent builds the model offline using the data provided in the initial stage of the learning; second, the agent establishes or perfects the environment model online in the process of interacting with the environment. We consider the second method in AL.

In this chapter, we add the function E: S × A → R, which represents the knowledge of experience. This function influences the learner’s choice of action in the process of AL, thus accelerating the convergence speed and learning speed of the algorithm.

Definition 2.6 Experience: Experience is represented by a four-tuple indicating that an action at is performed at state generating a new state and obtaining an enhanced signal rt at the same time.

The empirical function in the AL algorithm records the relevant empirical information about performing a in state The most important problem when adding an empirical function to an algorithm is how to obtain empirical knowledge in the initial stage of learning, that is, how to define the empirical function The answer mainly depends on the specific field to which the algorithm is applied. For example, in the case of agent-based routing optimization, when the agent collides with the wall, the corresponding knowledge is acquired. That is, the agent obtains empirical knowledge about the environmental model online in the process of interacting with the environment.

We assume that the rule base described in the previous section has been established. This rule base can be used by the AL individual to select an action. According to the output value and empirical function of the dynamic fuzzy reasoning system for action selection, the environment goes into the next state and returns a certain value. According to the return value of this action, the result weights are updated in the KB.

The pseudocode of the autonomic subspace learning algorithm based on DFL is shown in Algorithm 2.5.

The demand for initial values in the algorithm is not high: usually, initializing a random distribution of Learners in the variable domain using ::Learner_init(); the algorithm can be terminated according to the actual situation, such as whether the variance between the values of successive results is less than the expected error.

The characteristics of our autonomic subspace learning algorithm based on DFL can be summarized as follows:

(1)The autonomic subspace learning algorithm based on DFL takes the learning objective as the objective function of the algorithm in the learning process (equivalent to the optimization process). The algorithm only compares the objective function values and does not consider other specific problems of the traditional optimization algorithm (such as derivative information). The nature of the objective function is not computationally complex. Therefore, the ALSS algorithm based on DFL can be considered a type of “black box” algorithm.

(2)Without high demands on the initial value, the algorithm only needs to initialize a number of AL individuals at random in the learning subspace to generate the initial value of the algorithm.

(3)In the process of optimization, there are relatively few parameter settings, mainly related to giving the behaviour process of the individuals a larger allowable range.

(4)The autonomic subspace learning algorithm based on DFL is very robust, which means that the algorithm gives similar results to the same problem under different run conditions.

2.3.3Case analysis

1. Experiment description

Using Java as a development tool, the autonomic subspace learning algorithm was applied in a 10 × 10 grid environment. The experimental environment is shown in Fig. 2.2. Each square represents a state of the Learner. Box 2 is the Learner’s initial position, Box 3 represents the Learner’s target position, and Region 1 is the obstacle. The obstacles and targets in the environment are static. For the Learner, the environment (obstacles, boundaries, and the location of the target) is unknown. In the Learner-centred two-dimensional space, the four directions of motion are equally distributed. The Learner’s four directions of movement, East, West, South, and North, represent four optional actions. If the Learner encounters obstacles or boundaries, then he must return to the original state.

The parameters of the standard Q-learning algorithm and autonomic subspace learning algorithm based on DFL were set to a = 0.1, r{100, 100, 0}, and the corresponding condition was {hit obstacles, reach the target, other}.

2. Experiment results

Using the autonomic subspace learning algorithm based on DFL (denoted as Method I) and Q-learning algorithm (denoted Method II), we compared the time cost and average number of search steps (L) required for the Learner to reach the target. The experimental results are shown in Figs. 2.3 and 2.4. The Episodes in Reinforcement Learning denote the learning cycle, i.e. the process in which the Learner moves from the initial position to the target position. Steps represents the number of steps taken by the Learner in moving from the initial position to the target position in each learning cycle.

In Fig. 2.3, it can be seen that, with the increase in the number of experiments, the autonomic subspace learning algorithm based on DFL required much less time than the Q-learning method.

Figure 2.4 shows that, with the increase in the number of experiments, the average number of search steps in method I is much smaller than that in method II.

In summary, the experiment shows that, in the 10 × 10 grid, the autonomic subspace learning algorithm based on DFL achieves a faster learning rate and better convergence ability than the ordinary Q-learning method.

2.4Summary

In this chapter, we introduced a subspace learning algorithm for AL. The contribution of this chapter has two aspects. (1) The axiom system of ALSS uses DFL. The relevant axiom for an AL system was established, and a full axiom system and related content were derived. This provides the theoretical basis for solving the problem of machine learning activity and dynamic fuzziness in the system. (2) An autonomic subspace learning algorithm based on DFL was proposed.

References

[1]Pang WG. Self-regulated learning. Shanghai, China, East China Normal University Edition, 2003.

[2]Shen WM. Discovery as autonomous learning from the environment. Machine Learning, 1993, 12(8): 143–165.

[3]Wang GY, He X. Initiative machine learning based on rough set. Computer Science, 2002, 29(9): 24–26.

[4]Chen F, Li FZ. A Preliminary study on the theory and algorithm of learning subspace orbital generation for lie group machine learning. Journal of Soochow University (Nature Science Edition), 2007, 23(1): 61–66.

[5]Wang J. Research and application on learning algorithm of autonomic learning subspace based on DFL. Master’s thesis, Soochow University, Suzhou, China, 2008.

[6]Wang J, Li FZ. Axiomatic system of autonomic learning subspace based on DFL. Computer Science, 2008, 35(12): 146–148.

[7]Wang J, Li FZ. Autonomic learning model and algorithm based on DFL. In Proceedings of 2007 IEEE International Conference on Granular Computing, Silicon Valley, USA, 2007, 259–265.

[8]Cofino T, Doganata Y, Drissi Y, et al. Towards knowledge management in autonomic systems. In Proceedings of the Eighth IEEE International Symposium on Computers and Communication, 2003, 2: 789–794.

[9]Li FZ. Dynamic fuzzy logic and its applications. New York, USA, Nova Science Publishers, 2008.