CHAPTER 24. Overview of the TCP/IP Protocol Suite

SOME OF THE MAIN TOPICS IN THIS CHAPTER ARE

TCP/IP and the OSI Reference Model 360

The Internet Protocol (IP) 364

The Address Resolution Protocol—Resolving IP Addresses to Hardware Addresses 380

The Transmission Control Protocol (TCP) 386

The User Datagram Protocol (UDP) 395

Ports, Services, and Applications 397

The Internet Control Message Protocol (ICMP) 398

TCP/IP is the primary network protocol used on the Internet. Unlike many earlier network protocols—such as ARCnet and DECnet—TCP/IP was not developed by a single vendor as a proprietary solution. TCP/IP was created to provide a network link between computer hardware and software platforms from various vendors (such as IBM and Digital Equipment Corporation at the high end, as well as personal computers at the low end). By standardizing on a single set of protocols, each of which serves a specific function, TCP/IP can be used to create a network, no matter what underlying hardware is used. During the early years of TCP/IP, universities, businesses, and government organizations were able to exchange information on the ARPANET—the Internet’s predecessor—because TCP/IP could be implemented on just about any kind of computer. It is easy to implement TCP/IP on a wide variety of operating systems because TCP/IP was developed with a layered approach, which means that network functionality was compartmentalized into layers instead of the traditional approach of writing network drivers as single programs tied to specific hardware.

Using this layered approach means that a vendor need only write a low-level driver for their hardware to work with the upper layers of the TCP/IP code (which provides a standard interface). By freeing the development of the protocol(s) from the hands of particular manufacturers, TCP/IP has been developed to satisfy the needs of the many, instead of the needs of a single vendor’s proprietary hardware. TCP/IP has evolved over time, using a process in which many individuals have had the opportunity to supply input into its development. The Request for Comments (RFCs) documents that you hear about all through this book are the documents that allow suggestions for protocol enhancements and new protocols to be reviewed by a diverse group of individuals who specialize in the particular topic at hand. Although many projects created by a committee turn out to be unwieldy, cumbersome works, this is not the case with TCP/IP. Instead, the RFC process allows for a great deal of input when creating standards, often resulting in a higher quality standard after scrutiny by experts in the field.

Note

Request for Comments documents can be useful when you are learning new technology. Over the years newer documents have superceded older standards documents as TCP/IP (and other related protocols used on the Internet) has matured. If you have difficulty understanding how a protocol works, or why it was developed the way it was, you can read the documents online at www.rfc-editor.org. This site contains all the RFC documents—both new and those that have been replaced. Some of these documents are difficult to read at first but can prove valuable guides for readers who want to understand the minute details of any particular protocol.

In this chapter, we will look at all the major protocols that make up the TCP/IP suite and show how they work together. In addition to the protocols you will read about here, the TCP/IP suite includes some standard applications, such as FTP and Telnet. These are discussed in Chapter 25, “Basic TCP/IP Services and Applications.” Finally, in Chapter 27, “Troubleshooting Tools for TCP/IP Networks,” you will find useful information about programs that were written to help diagnose problems when this complex suite of protocols and applications doesn’t appear to be working as it should.

To begin, it is important to understand the basic protocols on which the entire TCP/IP suite is built.

TCP/IP and the OSI Reference Model

As discussed earlier, TCP/IP was built using a layered approach. You may have heard about the OSI (Open Systems Interconnect or Open Systems Interconnection) Reference model that is used mostly as a framework around which a discussion of network protocols can be discussed. Developed in 1984 by the International Organization for Standardization (ISO), this model defines a protocol stack in a modular fashion, specifying what functions are performed by each module.

![]() For further discussion of the OSI reference model, see Appendix A, “Overview of the OSI Seven-Layer Networking Reference Model.”

For further discussion of the OSI reference model, see Appendix A, “Overview of the OSI Seven-Layer Networking Reference Model.”

For the purposes of this chapter, it should be noted that development of TCP/IP began long before the OSI model, and, as can be expected, TCP/IP protocols don’t always neatly match up to the seven layers of the OSI model.

Note

There is one bit of Internet trivia that is perpetuated about the ISO “acronym” that you might find interesting. You’ll find that many writers say that ISO stands for the International Standards Organization. Sounds right, doesn’t it? Well, it’s not true. In the first place, ISO is not an acronym, it’s a name. And it’s not the International Standards Organization, it’s the International Organization for Standardization. The name ISO was chosen for a very specific reason. “ISO” is derived from the Greek word isos, which can be translated as “equal.” In the English language you’ll find the prefix iso-quite frequently with this meaning; for example, the word “isometric.” Established in 1947, the ISO wanted a name that could be used worldwide, without having to take into account translations of their name, which would result in different acronyms depending on the language or translation. Thus, OSI is an acronym, but ISO is a name and is used to refer to the International Standards Organization. You can find out more about the wide range of standards promulgated by this organization at its website: www.iso.org.

The ISO used this model to develop a set of open network protocols, but these were never widely adopted. This was due to several factors. First, at that time many computer vendors held market share by keeping customers locked into proprietary hardware/software solutions. Second, the OSI protocols required a considerable amount of system resources, so it was impractical to try to implement them on smaller computers, such as minicomputers, much less the now-standard PC. However, the OSI networking model is still used today when discussing network protocols, and it is a good idea to become familiar with it if you will be working in this field. TCP/IP was developed based on a similar, though less modular, reference model, the DOD (Department of Defense) or DARPA model.

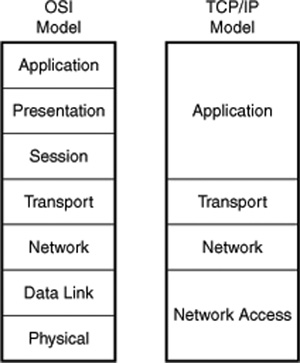

In Figure 24.1, you can see the four layers that make up the TCP/IP-DOD model, and how each layer relates to the OSI model.

Figure 24.1. Comparison of the TCP/IP and OSI networking models.

As you can see, TCP/IP doesn’t exactly fit into the OSI model, but it is still possible to refer to the model when discussing certain aspects of the protocols and services that TCP/IP provides.

TCP/IP Is a Collection of Protocols, Services, and Applications

The acronym TCP/IP stands for Transmission Control Protocol/Internet Protocol. In addition to these two important protocols, many other related protocols and utilities are commonly grouped together and called the TCP/IP protocol suite. This “suite” of protocols includes such things as the User Datagram Protocol (UDP) and the Internet Control Message Protocol (ICMP), and others discussed in this chapter and in several other chapters in this book.

Note

The terms protocol stack and protocol suite often are used to mean the same thing. Although it is convenient to think of TCP/IP as a single software entity, that is not the case. The protocols discussed in this chapter are called a “suite” because they work together, some providing services to others. For example, IP is the transport protocol that TCP uses when it wants to send data on the network. UDP likewise uses IP when it communicates on the network. At the bottom of the stack, ARP functions to associate hard-coded network card addresses with IP addresses. And when you get to the physical layer of any protocol, many methods can be used to transmit bits of information from one place to another. For LANs the most prevalent “wire” protocol is Ethernet. You may also encounter Token-Ring networks, though this protocol commands only a very small portion of the marketplace today.

Thus, when we talk about TCP/IP protocol suite (or stack), we are talking about a group of protocols, applications, and services.

TCP/IP, IP, and UDP

The main workhorses of this protocol suite are IP, TCP, and UDP:

![]() IP—The Internet Protocol is an unreliable, connectionless protocol that provides the means to get a datagram from one computer or device to another and for internetwork addressing.

IP—The Internet Protocol is an unreliable, connectionless protocol that provides the means to get a datagram from one computer or device to another and for internetwork addressing.

![]() TCP—The Transmission Control Protocol uses IP but provides a higher-level functionality that checks to be sure that the packets that IP manages actually get to and from their intended destinations. TCP is a reliable, connection-oriented protocol, requiring that a session be established to manage communications between two points in the network so that errors can be detected and, if possible, corrected.

TCP—The Transmission Control Protocol uses IP but provides a higher-level functionality that checks to be sure that the packets that IP manages actually get to and from their intended destinations. TCP is a reliable, connection-oriented protocol, requiring that a session be established to manage communications between two points in the network so that errors can be detected and, if possible, corrected.

![]() UDP—The User Datagram Protocol also uses IP to move data through a network. Whereas TCP uses an acknowledgment mechanism to ensure reliable delivery, UDP does not. UDP is intended for use in applications that don’t necessarily need the guaranteed delivery service provided by TCP. The Domain Name System (DNS) service is an example of an application that uses UDP. Applications that make use of UDP are responsible for taking on the functions of checking for reliable delivery that is provided by TCP.

UDP—The User Datagram Protocol also uses IP to move data through a network. Whereas TCP uses an acknowledgment mechanism to ensure reliable delivery, UDP does not. UDP is intended for use in applications that don’t necessarily need the guaranteed delivery service provided by TCP. The Domain Name System (DNS) service is an example of an application that uses UDP. Applications that make use of UDP are responsible for taking on the functions of checking for reliable delivery that is provided by TCP.

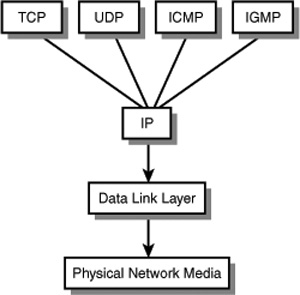

As you can see in Figure 24.2, IP is the basic protocol used in the TCP/IP suite to get datagrams delivered.

Figure 24.2. IP is used by many other protocols as the mechanism by which their data is routed and delivered through the network.

This figure shows that TCP/IP and its related protocols work above the physical components of the network. Therefore, it is easy to adapt TCP/IP to different types of networks, such as Ethernet and Token-Ring. When you talk about using TCP/IP on the network, what it all boils down to is that you’re packaging your data into an IP packet that is passed down to the actual network hardware for delivery. Because IP is the common denominator of the TCP/IP suite, this chapter covers it first, and after that shows how the remaining protocols build on the functions provided by IP.

Note

The terms datagram, packet, and frame are often misunderstood and used interchangeably. Starting with the TCP protocol, the data to be sent is actually called a segment. TCP passes segments to IP, which creates packets (or datagrams if the data comes from UDP) from these segments. IP passes the data farther down the protocol stack, and when it reaches the wire, it’s called a frame. For all practical purposes, however, you can consider a packet and a datagram to be the same thing.

Other Miscellaneous Protocols

In addition to TCP and IP, many other protocols are part of the TCP/IP suite. Back in Figure 24.2 you can see that the IGMP and ICMP protocols are included. IGMP is the Internet Group Management Protocol, which is used to manage groups of systems that are members of multicast groups. Multicasting is a technique that allows a datagram to be delivered to more than one destination. Figure 24.2 also shows the Internet Control Message Protocol (ICMP), which performs many functions to help control traffic on a network. In addition to these protocols, which are discussed later in this chapter, other protocols usually considered as part of or associated with the TCP/IP protocol suite include the following:

![]() ARP—The Address Resolution Protocol. ARP is used by a computer to determine what hardware address is associated with an IP address. This is necessary because IP addresses are used to route data between networks, while communications on the local network segment are done using the burned-in hardware address of the network cards.

ARP—The Address Resolution Protocol. ARP is used by a computer to determine what hardware address is associated with an IP address. This is necessary because IP addresses are used to route data between networks, while communications on the local network segment are done using the burned-in hardware address of the network cards.

![]() RARP—The Reverse Address Resolution Protocol is similar to ARP but works in reverse. This is an older protocol that was developed to allow a computer to find out what IP address it should use, based on a table stored on a device such as a router. This functionality has generally been replaced by other protocols, such as BOOTP and DHCP. However, you can still find this protocol in use on many networks that contain older legacy equipment that has yet to reach the end of its useful life, such as diskless X-Windows terminals.

RARP—The Reverse Address Resolution Protocol is similar to ARP but works in reverse. This is an older protocol that was developed to allow a computer to find out what IP address it should use, based on a table stored on a device such as a router. This functionality has generally been replaced by other protocols, such as BOOTP and DHCP. However, you can still find this protocol in use on many networks that contain older legacy equipment that has yet to reach the end of its useful life, such as diskless X-Windows terminals.

![]() DNS—The Domain Name System is the hierarchical naming system used by the Internet and most TCP/IP networks. For example, when you type

DNS—The Domain Name System is the hierarchical naming system used by the Internet and most TCP/IP networks. For example, when you type http://www.quepublishing.com into a browser, your TCP/IP stack sends a request to a DNS server to find out the IP address associated with that name. From then on, the browser can use the IP address to send requests to the Web site. More information about DNS can be found in Chapter 29, “Network Name Resolution.”

![]() BOOTP—The Bootstrap Protocol is also an older protocol that has generally been replaced by DHCP. In fact, most DHCP servers can act as BOOTP servers as well. BOOTP was created to allow a diskless workstation to download configuration information, such as an IP address and the name of a server that can be used to download an operating system. Because the diskless workstation has no local storage (other than memory), it can’t store this information itself between boots.

BOOTP—The Bootstrap Protocol is also an older protocol that has generally been replaced by DHCP. In fact, most DHCP servers can act as BOOTP servers as well. BOOTP was created to allow a diskless workstation to download configuration information, such as an IP address and the name of a server that can be used to download an operating system. Because the diskless workstation has no local storage (other than memory), it can’t store this information itself between boots.

![]() DHCP—The Dynamic Host Configuration Protocol relieves the network administrator of the task of having to manually configure each computer on the network with static addressing and other information. Chapter 28, “BOOTP and Dynamic Host Configuration Protocol (DHCP),” covers this topic in great detail.

DHCP—The Dynamic Host Configuration Protocol relieves the network administrator of the task of having to manually configure each computer on the network with static addressing and other information. Chapter 28, “BOOTP and Dynamic Host Configuration Protocol (DHCP),” covers this topic in great detail.

![]() SNMP—The Simple Network Management Protocol was developed to make managing network devices and computers from a central location easier. You can find out more about SNMP in Chapter 49, “Network Testing and Analysis Tools.”

SNMP—The Simple Network Management Protocol was developed to make managing network devices and computers from a central location easier. You can find out more about SNMP in Chapter 49, “Network Testing and Analysis Tools.”

![]() RMON—The Remote Monitoring protocol was developed to further enhance the administrator’s ability to manage computers and network devices remotely. This protocol is also covered in greater detail in Chapter 49.

RMON—The Remote Monitoring protocol was developed to further enhance the administrator’s ability to manage computers and network devices remotely. This protocol is also covered in greater detail in Chapter 49.

![]() SMTP—The Simple Mail Transfer Protocol is the protocol that gets your email from here to there. Chapter 26, “Internet Mail Protocols: POP3, SMTP, and IMAP,” can give you more information about how this protocol functions, along with other email protocols.

SMTP—The Simple Mail Transfer Protocol is the protocol that gets your email from here to there. Chapter 26, “Internet Mail Protocols: POP3, SMTP, and IMAP,” can give you more information about how this protocol functions, along with other email protocols.

The Internet Protocol (IP)

Although the Internet protocol is the second component of the TCP/IP acronym, it is perhaps the more important of the two. IP is the basic protocol in the suite that provides the information used for getting packets from one place to another. IP provides a connectionless, unacknowledged network service, and also provides the addressing mechanism used by TCP/IP. The following main features distinguish IP from other protocols:

![]() IP is a connectionless protocol. No setup is required for IP to send a packet of information to another computer. Each IP packet is a complete entity and, as far as the network is concerned, has no relation with any other IP packet that traverses the network.

IP is a connectionless protocol. No setup is required for IP to send a packet of information to another computer. Each IP packet is a complete entity and, as far as the network is concerned, has no relation with any other IP packet that traverses the network.

![]() IP is an unacknowledged protocol. IP doesn’t check to see that a datagram or packet actually arrives intact at its destination. However, the Internet Control Message Protocol does assist IP so that some conditions can be corrected. For example, although IP doesn’t receive an acknowledgment from the destination of an IP packet, it will receive ICMP messages telling it to slow down if it is sending packets faster than the destination can process them.

IP is an unacknowledged protocol. IP doesn’t check to see that a datagram or packet actually arrives intact at its destination. However, the Internet Control Message Protocol does assist IP so that some conditions can be corrected. For example, although IP doesn’t receive an acknowledgment from the destination of an IP packet, it will receive ICMP messages telling it to slow down if it is sending packets faster than the destination can process them.

![]() IP is unreliable. This is easy to see based on the first two items in this list. IP lacks a mechanism for determining whether packets are delivered, and thus packets can be dropped by routers or other network devices. This can happen, for example, when the network traffic exceeds the bandwidth that a network device can handle.

IP is unreliable. This is easy to see based on the first two items in this list. IP lacks a mechanism for determining whether packets are delivered, and thus packets can be dropped by routers or other network devices. This can happen, for example, when the network traffic exceeds the bandwidth that a network device can handle.

![]() IP provides the address space for TCP/IP. This is perhaps the most important feature of the IP protocol. The hierarchical nature of IP addressing is what makes it possible to connect millions of computers on the Internet without requiring each computer to know all the addresses on the network.

IP provides the address space for TCP/IP. This is perhaps the most important feature of the IP protocol. The hierarchical nature of IP addressing is what makes it possible to connect millions of computers on the Internet without requiring each computer to know all the addresses on the network.

IP Is a Connectionless Transport Protocol

IP is connectionless—each packet is separate from the others. From the IP standpoint, each packet is unrelated to any other packet. IP does not contact the destination computer or network device and set up a route that will be used to send a stream of data. Instead, it just accepts data from a higher-level protocol, such as TCP or UDP, formats a package that contains addressing information, and sends the packet on its way using the underlying physical network architecture. The information found in the IP datagram header is used on a hop-by-hop basis to route the datagram to its destination. When a higher-level protocol uses IP to deliver a series of information packets, there is no guarantee that each packet created by the IP layer will take the same route to get to the eventual destination. It is quite possible for a series of packets created by a higher-level protocol to reach the destination in a sequence out of order from how they were transmitted. IP doesn’t even care whether packets arrive at their destination. That function is left to the protocol that uses IP for delivery. This doesn’t mean that IP is a useless protocol, however—it just means that the higher-level protocols (such as TCP) that use IP need to provide for some kind of error checking, packet sequencing, and acknowledgment. You’ll learn more about this subject in the section “The Transmission Control Protocol (TCP),” later in the chapter, when we talk about how TCP sets up a connection and acknowledges sent and received packets.

IP Is an Unacknowledged Protocol

IP does not check to see whether the datagrams it sends out ever make it to their destination. It just formats the information into a packet and sends it out on the wire. Thus, it is considered to be an unacknowledged protocol. The overhead involved in acknowledging receipt of a datagram can be significant. Leaving out an acknowledgment mechanism enables IP to be used by other protocols and applications that do not require this functionality, and thus eliminates the overhead associated with acknowledgments. Applications and protocols that do need to know that a datagram has been successfully delivered will not use the IP protocol. Instead, they can implement the acknowledgment mechanism found in the TCP protocol.

One way of thinking about this relationship between IP and upper-level protocols is to consider how the postal service works. If you send a letter through the mail, you have no way to know when—or even whether—the letter was correctly delivered. Unless you pay the extra money to get a signed receipt of delivery returned to you, you can’t be sure that the letter ever reached its destination.

IP Is an Unreliable Protocol

Because IP is connectionless and because it does not check to see whether packets arrive at their destination, and because packets may arrive out of order, IP is considered an unreliable protocol. Or, to put it another way, it’s a best-effort delivery service. IP doesn’t perform routing functions (that task is left up to routers and routing protocols), and IP can’t guarantee what route a datagram will take through the network. Another reason it is considered unreliable is that IP implements a Time to Live (TTL) value that limits the number of network routers or host computers through which a datagram can travel. When this limit is reached, the datagram is simply discarded. Because no acknowledgment mechanism is built into IP, it is unaware of this kind of situation. The reason for this is to solve problems associated with routing. For example, it’s quite possible for an administrator to configure a router incorrectly, causing an endless loop to be created in a network. If it were not for the TTL value, the packet could continue to pass from one router to another, and another, forever. The TTL value is used mainly to prevent just this type of situation from occurring.

IP Provides the Address Space for the Network

Addressing is one of the most important functions implemented in the IP layer. In earlier chapters you learned that network adapter cards use a burned-in address, usually called a Media Access Control (MAC) address. These addresses are determined by the manufacturer of the network card, and the address space created is considered to be a “flat” address space. That is, there is no organization provided by MAC addresses that can be used to efficiently route datagrams from one system or network to another. On an Ethernet card, for example, a MAC address is composed of two parts. The first part of the MAC address identifies the manufacturer of the network card. The remaining octets (or bytes) are assigned, usually in a serial fashion, to the cards the manufacturer produces. The MAC address assigned to each adapter is unique and is made up of a 6-byte address (48 bits), which is usually expressed in hexadecimal notation to make it easier to write. For example, 00-80-C8-EA-AA-7E is much easier to write than trying to express the same address in binary, which would be a string of zeros and ones 48 bits long (in this example, 000000001000000011001000111010101010101001110011).

IP addresses are also made up of two components: a network address and a host address. By allowing a network address, it is possible to create a hierarchy that allows for an efficient routing mechanism when sending data to other networks. Whereas a particular network might consist of systems that have network adapters from multiple vendors, and thus have MAC addresses that are seemingly random numbers, IP addresses are organized into networks. Because of this, routers don’t have to keep hundreds of millions of MAC addresses in a memory cache to deliver datagrams. Instead, they just need a table of addresses that tells them how to best route a datagram to the network on which the host system resides.

Just What Does IP Do?

IP takes the data from the Host-to-Host layer (as shown previously in Figure 24.1) and fragments the data into smaller packets (or datagrams) that can be transferred through the network. On the receiving end, IP then reassembles these packets and passes them up the protocol stack to the higher-level protocol that is using IP. To get each packet delivered, IP places the source and destination IP addresses into the packet headers. IP also performs a checksum calculation on the header information to ensure its validity. Note, however, that IP does not perform this function on the data portion of the packet.

Note

The term checksum is used to refer to a mathematical calculation performed at the source and destination of a collection of bits to ensure that the information arrives uncorrupted. For example, the cyclic redundancy check (CRC) method, which is used by many network protocols, uses a polynomial calculation for this purpose. Some error detection methods work better than others. CRC not only can detect that an error has occurred during transmission, but can, to some degree, determine which bits are in error and fix the problem.

As already noted, TCP/IP allows for networks made up of different underlying technologies to interoperate. While one network might use the Ethernet 802 frame format, another might use FDDI. Each of these lower-level frames has its own particular header that contains information needed by that technology to send frames through the physical network media. At this lower level in the protocol stack, the IP packet rides in the data portion of the frame. After IP adds its header information to the message it receives from a higher-level protocol, and creates a packet of the appropriate size, it passes the packet to the Network Access layer, which wraps the IP packet into an Ethernet frame, for example. At the receiving end the Ethernet frame header information is stripped off, and the IP datagram is passed up the stack to be handled by the IP protocol. Similarly, the IP header information is stripped off by the higher-level protocols that use IP, such as TCP or UDP.

Examining IP Datagram Header Information

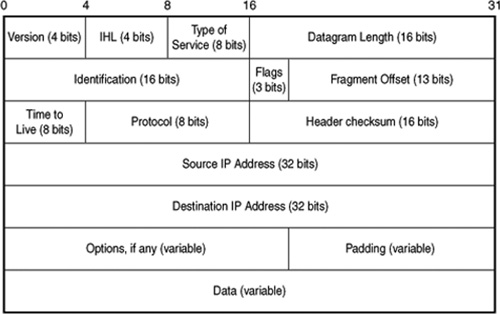

In Figure 24.3 you can see the format of an IP packet. In the IP header you will find the addressing information that is used by routers and other network devices to deliver the packet to its eventual destination.

Figure 24.3. The IP header contains information concerning addressing and routing the packet.

These are the header fields of the IP packet:

![]() Version—IP comes in different versions. This 4-bit field is used to store the version of the packet. Currently, IP version 4 is the most widely used version of IP. The “next generation” IP is called IPv6, which stands for version 6. Because different versions of IP use different formats for header information, if the IP layer on the receiving end is a lower version than that found in this field, it will reject the packet. Because most versions of IP at this time are version 4, this is a rare event. Don’t worry about this field until you upgrade your network to IPv6.

Version—IP comes in different versions. This 4-bit field is used to store the version of the packet. Currently, IP version 4 is the most widely used version of IP. The “next generation” IP is called IPv6, which stands for version 6. Because different versions of IP use different formats for header information, if the IP layer on the receiving end is a lower version than that found in this field, it will reject the packet. Because most versions of IP at this time are version 4, this is a rare event. Don’t worry about this field until you upgrade your network to IPv6.

![]() Internet Header Length (IHL)—This 4-bit field contains the length of the header for the packet and can be used by the IP layer to calculate where in the packet the data actually starts. The numerical value found in this field is the number of 32-bit words in the header, not the number of bits or bytes in the header.

Internet Header Length (IHL)—This 4-bit field contains the length of the header for the packet and can be used by the IP layer to calculate where in the packet the data actually starts. The numerical value found in this field is the number of 32-bit words in the header, not the number of bits or bytes in the header.

![]() Type of Service (TOS)—This 8-bit field is intended to implement a prioritization of IP packets. Until recently, however, no major implementation of IP version 4 has used the bits in this field, so these bits are usually set to zeros. With Gigabit Ethernet and 10 Gigabit Ethernet, this is changing. Because these faster versions of Ethernet can compete with other protocols such as ATM, which do provide a type of service function, you can expect to see this field used in faster versions of Ethernet. IPv6 also provides mechanisms that allow this functionality.

Type of Service (TOS)—This 8-bit field is intended to implement a prioritization of IP packets. Until recently, however, no major implementation of IP version 4 has used the bits in this field, so these bits are usually set to zeros. With Gigabit Ethernet and 10 Gigabit Ethernet, this is changing. Because these faster versions of Ethernet can compete with other protocols such as ATM, which do provide a type of service function, you can expect to see this field used in faster versions of Ethernet. IPv6 also provides mechanisms that allow this functionality.

![]() Datagram Length—This field is 16 bits long and is used to specify the length of the entire packet. It contains the number of 8-bit octets (or bytes). The largest value that can be stored in 16 bits is 65,535 bytes. Subtracting the IHL field from this value, IP will yield the length of the data portion of the packet.

Datagram Length—This field is 16 bits long and is used to specify the length of the entire packet. It contains the number of 8-bit octets (or bytes). The largest value that can be stored in 16 bits is 65,535 bytes. Subtracting the IHL field from this value, IP will yield the length of the data portion of the packet.

![]() Identification—IP often must break a message it receives from a higher-level protocol into smaller packets, depending on the maximum size of the frame supported by the underlying network technology. On the receiving end, these packets need to be reassembled. The sending computer places a unique number for each message fragment into this field, and each packet for a particular message will have the same value in this 16-bit field. Thus, the receiving computer can take all the parts and re-create the original message.

Identification—IP often must break a message it receives from a higher-level protocol into smaller packets, depending on the maximum size of the frame supported by the underlying network technology. On the receiving end, these packets need to be reassembled. The sending computer places a unique number for each message fragment into this field, and each packet for a particular message will have the same value in this 16-bit field. Thus, the receiving computer can take all the parts and re-create the original message.

![]() Flags—This field contains several flag bits. Bit 0 is reserved and should always have a value of zero. Bit 1 is the Don’t Fragment (DF) field (0 = fragmentation is allowed, 1 = fragmentation is not allowed). If a computer finds that it needs to fragment a packet to send it through the next hop in the physical network, and this DF field is set to 1, then it will discard the packet (remember that IP is an unreliable protocol). If this field is set to 0, it will divide the packet into multiple packets so that they can be sent onward in their journey. Bit 2 is the More Fragments (MF) flag and is used to indicate the fragmentation status of the packet. If this bit is set to 1, there are more fragments to come. The last fragment of the original message that was fragmented will have a value of zero in this field. These two fields (Identification and Flags), along with the next field, control the fragmentation process.

Flags—This field contains several flag bits. Bit 0 is reserved and should always have a value of zero. Bit 1 is the Don’t Fragment (DF) field (0 = fragmentation is allowed, 1 = fragmentation is not allowed). If a computer finds that it needs to fragment a packet to send it through the next hop in the physical network, and this DF field is set to 1, then it will discard the packet (remember that IP is an unreliable protocol). If this field is set to 0, it will divide the packet into multiple packets so that they can be sent onward in their journey. Bit 2 is the More Fragments (MF) flag and is used to indicate the fragmentation status of the packet. If this bit is set to 1, there are more fragments to come. The last fragment of the original message that was fragmented will have a value of zero in this field. These two fields (Identification and Flags), along with the next field, control the fragmentation process.

![]() Fragment Offset—When the MF flag is set to 1 (the message was fragmented), this field is used to indicate the position of this fragment in the original message so that it can be reassembled correctly. This field is 13 bits in length and expresses the offset value of this fragment in units of 8 bytes.

Fragment Offset—When the MF flag is set to 1 (the message was fragmented), this field is used to indicate the position of this fragment in the original message so that it can be reassembled correctly. This field is 13 bits in length and expresses the offset value of this fragment in units of 8 bytes.

![]() Time to Live (TTL)—Were it not for TTL, a packet could travel forever on the network because it is possible for loops to exist in the routing structure (due to an administrator’s error, or the failure of a routing protocol to update routing tables in a timely manner). The TTL value is used to prevent these endless loops. Each time a packet passes through a router, the value in this field is decremented by at least one. The value is supposed to represent seconds, and in some cases in which a router is processing packets slowly, this field can be decremented by more than one. It all depends on the vendor’s implementation. When the value of the TTL field reaches zero, the packet is discarded. Because IP is a best-effort, unreliable protocol, the higher-level protocol that is using IP must detect that the packet did not reach its destination and resend the packet.

Time to Live (TTL)—Were it not for TTL, a packet could travel forever on the network because it is possible for loops to exist in the routing structure (due to an administrator’s error, or the failure of a routing protocol to update routing tables in a timely manner). The TTL value is used to prevent these endless loops. Each time a packet passes through a router, the value in this field is decremented by at least one. The value is supposed to represent seconds, and in some cases in which a router is processing packets slowly, this field can be decremented by more than one. It all depends on the vendor’s implementation. When the value of the TTL field reaches zero, the packet is discarded. Because IP is a best-effort, unreliable protocol, the higher-level protocol that is using IP must detect that the packet did not reach its destination and resend the packet.

![]() Protocol—This field is 8 bits long and is used to specify a number that represents the network protocol for the data contained in this packet. The Internet Corporation for Assigned Names and Numbers (ICANN) decides the numbers used in this field to identify specific protocols. For example, a value of 6 is used to specify the TCP protocol.

Protocol—This field is 8 bits long and is used to specify a number that represents the network protocol for the data contained in this packet. The Internet Corporation for Assigned Names and Numbers (ICANN) decides the numbers used in this field to identify specific protocols. For example, a value of 6 is used to specify the TCP protocol.

![]() Header Checksum—This 16-bit field contains a computed value used to ensure the integrity of the header information of the packet. When information in the header is changed, this value is recalculated. Because the TTL value is decremented by each system that a packet passes through, this value is recalculated at each hop as the packet travels through the network.

Header Checksum—This 16-bit field contains a computed value used to ensure the integrity of the header information of the packet. When information in the header is changed, this value is recalculated. Because the TTL value is decremented by each system that a packet passes through, this value is recalculated at each hop as the packet travels through the network.

![]() Source IP Address—The IP address of the source of the packet. This is a 32-bit-long field. The format of IP addresses is discussed in greater detail later in this chapter.

Source IP Address—The IP address of the source of the packet. This is a 32-bit-long field. The format of IP addresses is discussed in greater detail later in this chapter.

![]() Destination IP Address—The IP address of the destination of the packet. This also is a 32-bitlong field.

Destination IP Address—The IP address of the destination of the packet. This also is a 32-bitlong field.

![]() Options—This is an optional variable-length field that can contain a list of options. The option classes include control, reserved, debugging, and measurement. Source routing can be implemented using this field and is of particular importance when configuring a firewall. Table 24.1 lists the option classes and option numbers that can be found in this field.

Options—This is an optional variable-length field that can contain a list of options. The option classes include control, reserved, debugging, and measurement. Source routing can be implemented using this field and is of particular importance when configuring a firewall. Table 24.1 lists the option classes and option numbers that can be found in this field.

![]() Padding—This field is used to pad the header so that it ends on a 32-bit boundary. The padding consists of zeros. Different machines and different operating systems work based on different sizes for bytes, words, quadwords (all of which are multiples of 8), and so on. Padding makes it easier to handle a known quantity of data (that is, to pad the header to a known length) than for the system to have to find some other method for determining where a data structure ends. For example, a router must operate quickly. It must perform calculations, look up information in the routing table, and so on. Extracting the header information from a packet can be implemented in hardware or software to make the router work faster by using known quantities of bits.

Padding—This field is used to pad the header so that it ends on a 32-bit boundary. The padding consists of zeros. Different machines and different operating systems work based on different sizes for bytes, words, quadwords (all of which are multiples of 8), and so on. Padding makes it easier to handle a known quantity of data (that is, to pad the header to a known length) than for the system to have to find some other method for determining where a data structure ends. For example, a router must operate quickly. It must perform calculations, look up information in the routing table, and so on. Extracting the header information from a packet can be implemented in hardware or software to make the router work faster by using known quantities of bits.

Table 24.1. Option Classes and Option Numbers

The Options Field and Source Routing

The Options field is optional. Source routing (which is discussed in Chapter 45, “Firewalls”), for example, can be implemented using this field. Although IP usually lets other protocols make routing decisions (that is, the path the packet takes through the network), in most cases it is possible to specify a list of devices for the route instead. As shown in Table 24.1, IP can use two options for routing purposes. These are loose source routing (option number 3) and strict source routing (option number 9).

Hackers can use source routing to force a packet to return to their computer, using a predefined route. Using source routing with TCP/IP should be discouraged. For more information, see Chapter 45.

Each of these techniques for source routing provides a list of addresses that the packet must pass through. Loose source routing uses this list but doesn’t necessarily use it in all cases—other routes can be used to get to each machine addressed in the list. When strict source routing is used, however, the list must be followed exactly; if it cannot, the packet will be discarded.

IP Addressing

Although most people think of IP as the transport protocol used by higher-level protocols, one of its more important functions is to provide the address space used by the TCP/IP suite. Earlier in this chapter we discussed the difficulty of having to create a routing table that consists of hundreds of millions of actual hardware addresses, providing for no built-in organization capability.

IP addresses are used for just this purpose: to provide a hierarchical address space for networks. Each network adapter has a hard-coded network address that is 48 bits long. When data packets are sent out on the wire of the local area network (LAN) segment, this MAC address is used for the source and destination addresses that are embedded in the Ethernet frame, which encapsulates the actual IP packet. After an IP packet reaches the destination network, the router sends the packet out onto the network segment that contains the destination. The MAC address is used from there on to deliver the data. On a LAN segment, MAC addresses can be used efficiently because most LAN segments consist of just a few hundred or a few thousand host computers. This number of addresses can easily be stored in network devices, such as bridges or switches.

IP Addresses Make Routing Possible

Because the IP address is composed of two components, the network address and the host computer address, it is a simple matter to construct routers that use the network portion of the address to route packets to their destination networks. After the packet has arrived at a router on the destination network, the host portion of the address is used to locate the destination computer. Without the capability to designate a network address, as well as a host address, the hierarchical address space could not exist, and routing would require routing tables that literally would have to store every address of every computer or device on the network. In such a scenario the IP address would have no advantage over the MAC address. As it stands, IP gets a packet to the destination network by limiting routing tables to storing only network addresses allowing routing to be a simple and more efficient process.

IP addresses allow you to organize a collection of networks in a logical hierarchical fashion. There are three kinds of IP addresses:

![]() Unicast—This kind of address is the most common type of IP address. It uniquely identifies a single host on the network.

Unicast—This kind of address is the most common type of IP address. It uniquely identifies a single host on the network.

![]() Broadcast—Not to be confused with an Ethernet frame broadcast, IP also provides this capability by setting aside a set of addresses that can be used for broadcasting to send data to every host system on a particular network.

Broadcast—Not to be confused with an Ethernet frame broadcast, IP also provides this capability by setting aside a set of addresses that can be used for broadcasting to send data to every host system on a particular network.

![]() Multicast—Similar to broadcast addresses, multicasting addresses send data to multiple destinations. The difference between a multicast address and a broadcast address is that a multicast address can send data to multiple networks to be received by hosts that are configured to receive the data instead of every host on the network.

Multicast—Similar to broadcast addresses, multicasting addresses send data to multiple destinations. The difference between a multicast address and a broadcast address is that a multicast address can send data to multiple networks to be received by hosts that are configured to receive the data instead of every host on the network.

![]() Anycast—When Ipv6 nodes need to transmit, each individual node can transmit data to a list (or group) of addresses. This type of transmission is called Anycast and it’s fully supported in IPv6.

Anycast—When Ipv6 nodes need to transmit, each individual node can transmit data to a list (or group) of addresses. This type of transmission is called Anycast and it’s fully supported in IPv6.

Additionally, there are address classes, which are used mainly to define the size of the network and host portions of the IP address.

IP Address Classes

The Internet is a collection of networks that are all joined together by routers to create a larger network. The name itself says it all. The Internet Protocol (IP) makes this possible because it allows for addressing each network that is attached to the Internet, as well as identifying the host computers that reside on each network. When packets are routed through the Internet (or through a private corporate network that uses TCP/IP—an intranet), the IP address is used to get the data to the destination network. When the data packet is delivered to a router on the destination network, the actual hardware address (MAC address) of the computer is used to deliver the packet to the correct computer. This is done by taking the host portion of the IP address and consulting a table that maps hardware addresses to IP host addresses for the local network. If no match is found, the Address Resolution Protocol (ARP) is used on the local wire to find out the hardware address, and it is added to the table.

The important factor here is that it’s possible to assign an address both to networks and to the individual hosts.

Note

IP Address classes were first defined in RFC 791. Although this class system has served its purpose for many years, routing on the Internet today actually is much more complicated than these simple address classes allow for. However, it is essential to understand address classes on a local LAN or a corporate network. For more information on how the IP address is used for routing purposes on the Internet, see Chapter 33, “Routing Protocols.”

An IP address is 4 bytes long (32 bits). Whereas MAC addresses usually are expressed in hexadecimal notation, IP addresses usually are written using dotted-decimal notation. Each byte of the entire address is converted to its decimal representation, and then the 4 bytes of the address are separated by periods to make it easier to remember. As you can see in Table 24.2, the decimal values are much easier to remember than their binary equivalents.

Table 24.2. IP Addresses Are Expressed in Decimal Notation

As you can see, it is much easier to write the address in dotted-decimal notation (150.204.200.27) than to use the binary equivalent (10010110110011001100100000011011).

Note

If you have problems converting between binary and decimal, or even hexadecimal and octal numbering systems, don’t worry. There’s a simple way to do this using the Windows Calculator accessory. When you bring up the calculator, select the View menu and then select Scientific. You’ll get a larger display for the calculator that allows you to enter a number in any of the supported numbering systems. You can then simply click on another numbering base system to automatically convert the value you entered to the value you want to see in another numerical base system. If you don’t use Windows, affordable calculators that will perform the same function are widely available. If all else fails, use a pencil and paper and think back to your high-school math class.

Because IP addresses are used to route a packet through a collection of separate networks, it is important to know what part of the IP address is used as the network address and what part is used for the host computer’s address.

IP addresses are divided into three major classes (A, B, and C) and two less familiar ones (D and E). Each class uses a different portion of the IP address bits to identify the network. There is a need for classifying networks because there is a need to be able to create networks of different sizes. Whereas a small LAN might have only a few computers or a few hundred, larger networks can have thousands or more networked computers. The class system of IP addresses is accomplished by using a different number of bits of the total address to identify the network and host portions of the IP address. Additionally, the first few bits of the binary address are used to indicate which class an IP address belongs to.

The total number of bits available for addressing is always the same: 32 bits. Because the number of bits used to identify the network varies from one class to another, it should be obvious that the number of bits remaining to use for the host computer part of the address will vary from one class to another also. This means that some classes will have the capability to identify more networks than others. Conversely, some will have the capability to identify more computers on each network.

The first 4 bits of the address tell you what class an address is a member of. In Table 24.3, you can see the IP address classes along with the bit values for the first 4 bits. The bit positions that are marked with an “x” in this table indicate that this value makes no difference in the determination of IP address class.

Table 24.3. The First 4 Bits of the IP Address Determine the Class of the Address

Class A Addresses

As shown in Table 24.3, any IP address that has a zero in the first bit position is a Class A address. The values for the remaining bits make no difference. Also, you can see that any address that has 10 for the first 2 bits of the address is a Class B address, and so on. Remember that these are bit values, and as such are expressed in binary. These are not the decimal values of the IP address when it is expressed in dotted-decimal notation.

Class A addresses range from all zeros (binary) to a binary value of 0 in the first position followed by seven 1 bits. Converting each byte of the address into decimal shows that Class A addresses range from 0.0.0.0 to 127.255.255.255, when expressed in the standard dotted-decimal notation.

Note

It is not possible to have an IP address expressed in dotted-decimal notation that exceeds 255 for any of the four values. The decimal value of a byte with all 1s (11111111) is 255. Take the address 140.176.123.256, for example. This address is not valid because the last byte is larger than 255 decimal. When planning how to distribute IP addresses for your network, keep this fact in mind. It is not possible to express a value larger than 255 in binary when using only 8 bits.

Keeping in mind that the class system for IP addresses uses a different number of bits for the network portion of the address, the Class A range of networks is the smallest. That is because Class A addresses use only the first byte of the address to identify the network. The rest of the address bits are used to identify a computer on a Class A network. Because the first bit of the first byte of the address is always zero, this leaves only 7 bits that can be used to create a network address. Because only 7 bits are available, there can be only 127 network addresses (binary 01111111 = 127 decimal) in a Class A network. It is not possible to have 128 network addresses in this class because, to express 128 in binary, the value would be 10000000, which would indicate a Class B address.

However, Class A networks can contain the largest number of host computers or devices on each network, because they use the remaining 3 bytes to create the host portion of the IP address. Three bytes can store a value, in decimal, of up to 16,777,215 (that’s 24 bits all set to 1 in binary). Counting zero as a possibility (0–16,777,215), this means that a total of 16,777,216 (2 to the 24th power) addresses can be expressed using 3 bytes.

To summarize, there can be a total of 127 Class A networks, and each network can have up to 16,777,216 unique addresses for computers on the network. The range of addresses for Class A networks is from 0.0.0.0 to 127.255.255.255. When you see an address that falls in this range, you can be sure that it is a Class A address.

Class B Addresses

The first 2 bits of an IP address need to be examined to determine whether it is a Class B address. If the first 2 bits of the address are set to 10, the address belongs in this class. Class B addresses range from 1 followed by 31 zeros to 10 followed by 30 ones. If you convert this to the standard dotted-decimal notation, this is 128.0.0.0 to 191.255.255.255. In binary, the decimal value of 128 decimal is 10000000. The decimal value of 191 translates to 10111111 in binary. Both of these values in binary have 10 as the first two digits, which places them in the Class B IP address space.

Because the first 2 bytes of the Class B address are used to address the network, only 2 remaining bytes can be used for host computer addresses. If you do the calculations, you’ll find that there can be up to 16,384 possible network addresses in this class, ranging from 128.0 to 191.255 in the first 2 bytes. There can be 65,536 (2 to the 16th power) individual computers on each Class B network.

You might wonder why the number of network addresses and the number of host addresses aren’t the same in the Class B address range, because they both use 2 bytes. It’s simple: Just remember that the network portion of the Class B address always has 1 for the first bit position and 0 for the second bit position. That zero in the second position is what keeps the number of network addresses less than the number of host computer addresses. In other words, the largest host address you can have in a Class B network, expressed in binary, is 10111111, which is 191 in decimal. Because there is no restriction on the value of the first two digits of the host portion of the address, it is possible to have the host portion set to all ones, giving a string of 16 ones, which would be 255.255 in dotted-decimal notation.

Class C Addresses

The Class C address range always has the first 3 bits set to 110. If you convert this to decimal, this means that a Class C network address can range from 192.0.0.0 to 223.255.255.255. In this class the first 3 bytes are used for the network part of the address, and only a single byte is left to create host addresses.

Again, doing the math (use that Windows calculator!), you can see that there can be up to 2,097,152 Class C networks. Each Class C network can have up to 256 host computers (0–255). This allows for a large number of Class C networks, each with only a small number of computers.

Other Address Classes

The first three address classes are those used for standard IP addresses. Class D and E addresses are used for different purposes. The Class D address range is reserved for multicast group use. Multicasting is the process of sending a network packet to more than one host computer. The Class D address range, in decimal, is from 224.0.0.0 to 239.255.255.255. No specific bytes in a Class D address are used to identify the network or host portion of the address. This means that a total of 268,435,456 possible unique Class D addresses can be created.

Finally, Class E addresses can be identified by looking at the first 4 bits of the IP address. If you see four 1s at the start of the address (in binary), you can be sure you have a Class E address. This class ranges from 240.0.0.0 to 255.255.255.255, which is the maximum value you can specify in binary when using only 32 bits. Class E addresses are reserved for future use and are not normally seen on most networks that interconnect through the Internet.

Note

It became apparent during the early 1990s that the IPv4 address space would become exhausted a lot sooner than had been previously thought. Actually, this forecast has proved to be a little overstated. Network Address Translation (NAT) can be used with routers so that you can use any address space on your internal network, while the router that connects to the Internet is assigned one or more actual registered addresses. The router, using NAT, can manipulate IP addresses and ports to act as a proxy for clients on the internal network when they communicate with the outside world.

Request For Comments 1918, “Address Allocation for Private Internets,” discusses using several IP address ranges for private networks that do not need to directly communicate on the Internet. These are the ranges:

10.0.0.0 to 10.255.255.255.255

172.16.0.0 to 172.31.255.255

192.168.0.0 to 192.168.255.255

Because these addresses now are not valid on the Internet, they can be used by more than one private network. To connect the private network to the Internet, you can use one or more proxy servers that use NAT. If you have a DSL or cable modem and want to use the broadband connection for more than one computer, you can purchase an inexpensive router (around $100, less with rebates) to perform this very function. See Chapter 45 for more about how this is done. Windows operating systems (2000, Millennium, and XP) also use the 192.168.0.0 address space when computers are configured to get addressing information from a DHCP server and no DHCP server is present. This procedure is known as Automatic Private IP Addressing (APIPA). You can learn more about how APIPA works by reading Chapter 28.

Up to this point we have identified the possible ranges that could be used to create IP addresses in the various IP address classes. There are, however, some exceptions that should be noted. As previously discussed, an address used to uniquely identify a computer on the Internet is known as a unicast address.

Several exceptions take away from the total number of addresses that are possible in any of the address classes. For example, any address that begins with 127 for the first byte is not a valid address outside the local host computer. The address 127.0.0.1 (which falls in the Class A address range) is commonly called a loopback address and is normally used for testing the local TCP/IP stack to determine whether it is configured and functioning correctly. If you use the ping command, for example, with this address, the packet never actually leaves the local network adapter to be transmitted on the network. The packet simply travels down through the protocol stack and back up again to verify that the local computer is properly configured.

You can use this address to test other programs. For example, you can Telnet to the loopback address to find out whether the Telnet program is working on your computer. This assumes that you have a Telnet server running on the computer.

Other exceptions include the values of 0 and 255. When used in the network portion of an address, zeros imply the current network. For example, the address 140.176.0 is the address of a Class B network, and the value of 193.120.111.0 is the address of a Class C address.

The number 255 is used in an address to specify a broadcast message. A broadcast message is sent out only once but doesn’t address a single host as the destination. Instead, such a packet can be received by more than one host, hence the name “broadcast.” Broadcasts can be used to send a packet to all computers on a particular network or subnet. The address 140.176.255.255 would be received by all hosts in the network defined by 140.176.0.

After subtracting these special cases, you can see in Table 24.4 the actual number of addresses for Classes A through C that are available for network addressing purposes.

Table 24.4. IP Addresses Available for Use

There is another exception to usable addresses that fall within the IP address space. This is not dictated by an RFC or enforced by TCP/IP software. Instead, it is a convention followed by many network administrators to make it easy to identify routers. Typically you will find that an IP address that has as its last octet the value of 254 is a router. When you stick to this convention, it is easy to remember the default gateway when you are setting up a computer. It’s the computer’s address, with 254 used as the last octet.

Subnetting Made Simple!

The IP address space, although large, is still limited when you think of the number of networked computers on the Internet today. For a business entity (or an Internet service provider) to create more than one network, it would appear that more than one range of addresses would be needed. A method of addressing called subnetting was devised that allows a single contiguous address space to be further divided into smaller units called subnets. If you take a Class B address, for example, you can have as many as 65,534 host computers on one network. That’s a lot of host computers! There aren’t many companies or other entities in the world today that need to have that many hosts on a single network.

Subnetting is a technique that can be used to divide a larger address space into several smaller networks called subnets. So far, you’ve learned about using part of the IP address to identify the network and using part of the address to identify a host computer. By applying what is called a subnet mask, it is possible to “borrow” bits from the host portion of the IP address and create subnets.

A subnet mask is also a 32-bit binary value, just like an IP address. However, it’s not an address, but instead is a string of bits used to identify which part of the total IP address is to be used to identify the network and the subnet.

The subnet mask is expressed in dotted-decimal format just like an IP address. Its purpose is to “mask out” the portion of the IP address that specifies the network and subnet parts of the address.

Note

The technique of using subnetting was first discussed in RFC 950, “Internet Standard Subnetting Procedure.”

Because subnet masks are now required for all IP addresses, the A, B, and C address classes that were just described all have a specific mask associated with them. The Class A address mask is 255.0.0.0. When expressed as a binary value, 255 is equal to a string of eight 1s. Thus, 255.0.0.0 would be 11111111000000000000000000000000. Using Boolean logic, this binary subnet mask can be used with the AND operator to mask out (or identify) the network and subnet portion of the IP address. Using the AND operator, the TRUE result will be obtained only when both arguments are TRUE.

If you use the number 1 to represent TRUE and use 0 to represent FALSE, it’s easy for a computer or a router to apply the mask to the IP address to obtain the network portion of the address. Table 24.5 shows how the final values are obtained.

Table 24.5. Boolean Logic Is Used for the Subnet Mask

A Class A address, as you can see, will have a subnet mask of 255.0.0.0. The only portion of the IP address that is used with this mask to be the network address is those bits contained in the first byte (11111111 in binary). Similarly, a subnet mask for a Class B address would be 255.255.0.0 (11111111111111110000000000000000 in binary), and for a Class C address it would be 255.255.255.0 (a lot of ones!).

Because we’ve already set aside certain values at the beginning of an IP address to identify what class the address belongs to, what value can be gained by using subnet masks? Each subnet mask just discussed blocks out only the portion of the IP address that the particular class has already set aside to be used as a network address.

The value comes by using part of the host component of the IP address to create a longer network address that consists of the classful network address plus a subnet address. By modifying the subnet mask value, we can mask out additional bits that make up part of the host portion of the address, and thus we can break a large address space into smaller components.

To put it simply, subnetting becomes useful when you use it to take a network address space and further divide it into separate subnets.

Note

One of the benefits of subnetting is that, before the advent of switches, it allowed you to take a large address space and divide it using routers. A large number of computers on a single subnet would create a large amount of traffic in an Ethernet environment. In this kind of situation, you would eventually get to a point where the broadcast traffic on the segment would result in too many collisions and network performance would slow to a crawl. By taking a large address space and subnetting it into smaller broadcast domains, and connecting them using a router, you can increase network performance dramatically.

If you use a subnet mask of 255.255.255.128, for example, and convert it to binary, you can see that a Class C address can be divided into two subnets. In binary, the decimal value of 128 is 10000000. This means that a single bit is used to create two distinct subnets. If you were to use this mask with a network address of 192.113.255, you would end up with one subnet with host addresses ranging from 192.113.255.1 to 192.113.255.128 and a second subnet with host addresses ranging from 192.113.255.129 to 192.113.255.254. (In this example, addresses that end in all zeros or all ones are not shown because those addresses are special cases and are generally not allowed as host addresses—192.113.255.0, for example.)

To take subnetting one step further, let’s use a mask of 255.255.255.192. If you take the decimal value of 192 and convert it to binary, you get 11000000. Applying this subnet mask to a Class C network address space yields four subnets. Each subnet using the remaining bits of the host address can have up to 62 host computers. The reason you have four subnets is that the first 2 bits of the last byte of the subnet mask are 11. Because the first 2 bits are ones, there are four possible subnet values you can express using these two digits (11 in binary equals 3—if you count zero, you have four values that can be expressed using 2 bits). When this mask is applied to a byte, there are only 6 bits remaining to be used for host addresses. Because you cannot use a host address of all ones or all zeros, this means that although the largest number you can store in 6 bits is 63, you must subtract 2 from this value. This leaves only 1–62 for host addresses on these subnets.

Note

If you don’t want to go through the trouble of calculating subnet values yourself, you’ll find a handy table on the inside front cover of this book. This discussion is intended to help you understand the mechanics working behind the scenes in routers and protocol stacks that make subnetting possible.

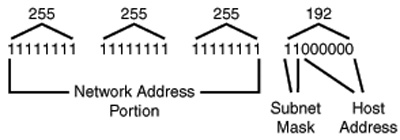

In Figure 24.4, you can see that the IP address now consists of three parts: the network address, the subnet address, and the host address.

Figure 24.4. A subnet mask can be used to identify the network address, subnet address, and host portions of the IP address.

The first thing you should do when preparing to subnet an address space is decide how many host addresses will be needed on each subnet. Then convert this number to its binary value. Looking at the binary value, you can see how many bits you will need for the host portion of the address space. If you then subtract that value from the number of bits available (which is 8 if you’re subnetting the last byte of a Class C address), you can calculate what the decimal equivalent would be for a binary number that contains that number of leftmost bits set to one.

Suppose you wanted to create subnets that would allow you to put up to 30 computers on each subnet. First, determine what 30 is when converted to binary: 11110. You can see that it takes 5 bits to represent the decimal value of 30 in binary. After you subtract this from 8, you have left only 3 bits that can be “borrowed” from the Class C host part of the address (8 – 5 = 3). In binary, this mask would be 11100000. If you convert this value to decimal, you get 224.

The next question to ask is how many subnets can you create using this mask? Because only 3 bits are left, just figure out the largest number you can express using 3 bits in binary. You’ll come up with a value of all 1s (111), which translates to 7 in decimal. Therefore, you can have seven possible subnets, or eight if you include zero as a possibility.

After you’ve calculated what your subnet mask needs to be, you’ll need to calculate what the actual host addresses must be for each subnet. The first subnet address would be 000. Because the IP address is expressed in dotted decimal notation, calculate how many addresses you can store in an 8-bit binary value that always begins with 000, and then translate that to decimal: 00000001 to 00011110, which is 1–30 in decimal.

Note

Remember that the addresses of 00000000 and 00011111 are not valid because they result in a host address of all zeros or all ones. If this mask were applied to a Class C network address of 192.113.255.0, hosts in the first subnet would range from 192.113.255.1 to 192.113.255.30.

Continuing the process, the second subnet address would be 001, and the third would be 011. The range of host addresses that could be created for a subnet value of 001 is 00100001 to 00111110, which is 33–62 in decimal.

The range of hosts on the second subnet would be from 192.113.255.33 to 192.113.255.62.

Simply continue this process and you’ll be able to figure out the correct subnet addresses, based on the mask you’ve chosen.

It’s possible to further divide the Class C address space by using up to 6 bits for the subnet mask, but this would leave only two usable host addresses and is not very practical. However, it can be done!

Note

In the examples given in this book for creating subnets, and in the charts you’ll find on the inside front cover, subnets consisting of all zeros or all ones are included. In the original RFC on subnetting (RFC 950), these values were specifically excluded from use. However, when this is done, a large number of subnets, and thus host addresses, are excluded. RFC 1812 allows for the use of all zeros or all ones in the subnet mask. However, you should check to be sure that the routers on your network support this before using these subnet addresses. Older routers most likely will not support them. Newer ones probably will require that you configure them to operate one way or the other.

Classless Interdomain Routing Notation and Supernetting

As we discussed earlier in this chapter, the system of classifying IP addresses (A, B, C) worked well when the Internet was much smaller. The class system and subnetting is still widely used on local network routers. However, on the Internet backbone routers, a system called Classless Interdomain Routing (CIDR) is the method used to determine where to route a packet. This technique is also referred to as supernetting. CIDR can be considered a technique that uses a subnet mask that ignores the traditional IP class categories.

Why is CIDR needed? When the IP address class system was introduced, it was simple for routers to use the first byte of the IP address to figure out the network number, and thus make routing an easy task. For example, for an IP address of 140.176.232.333, the router would recognize that 140 falls in the Class B address range, so the network number would be 140.176.0. A quick glance at the routing table was all that was necessary to determine the next hop to which a packet should be routed to get to its network.

As the Internet continued to grow (or explode, as some might say), the huge number of Class B and Class C networks that were being added meant that routing tables on Internet backbone routers were also growing at a fast rate. Eventually, there would come a point where it would be impossible to efficiently route packets if routing tables continued to grow.

CIDR allows for address aggregation. That is, a single entry in a routing table can represent many lower-level network addresses.

Another reason why CIDR was needed is that much of the classful address space is wasted. This happens at both ends of the spectrum. Consider a small network at the low end, with a total of 254 usable addresses in a Class C address block. If the owner of that address space has a network with only 50 or 100 computers, that means that more than half of the available host addresses are essentially lost to the Internet. At the high end, a Class A network has a total of 16,777,216 possible host addresses. How many organizations need 16 million host addresses?

By dropping the address class constraints, and instead using a subnet mask to specify any number of contiguous bits of the IP address as the network address, it is possible to carve up the total 32-bit address space into finer blocks that can be allocated more efficiently.

Note

CIDR was widely implemented on the Internet beginning in 1994. For specific details about CIDR, see RFCs 1517, “Applicability Statement for the Implementation of CIDR”; 1518, “An Architecture for IP Address Allocation with CIDR”; 1519, “CIDR: An Address Assignment and Aggregation Strategy”; and 1520, “Exchanging Routing Information Across Provider Boundaries in the CIDR Environment.”

CIDR uses a specific notation to indicate which part of the IP address is the network portion and which is the host portion. The CIDR notation syntax is the network address followed by /#, where # is a number indicating how many bits of the address represent the network address. This /# is commonly called the network prefix. Table 24.6 shows the network prefix values for A, B, and C network classes.

Table 24.6. CIDR Network Prefix Notation for A, B, and C IP Address Classes

However, because CIDR no longer recognizes classes, it’s quite possible to have a network address like 140.176.123.0/24. Thus, while 140 would indicate that only 16 bits are used as the network portion of the address when using classful addressing, the /24 notation would specify that the first 24 bits are used, and the remaining 8 bits would be used for host addressing. Using the /24 notation allows the former class B address space to be allocated in smaller blocks than the class system allows.

In Table 24.7 you can see how this system allows for networks that range in size from 32 hosts to more than 500,000 hosts. The middle column shows the equivalent of a Class C network address space that the CIDR prefix creates, and the last column shows the number of hosts that would exist in the network.

Table 24.7. Use of CIDR Network Prefix Notations

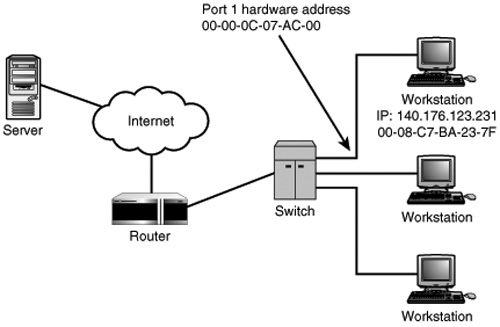

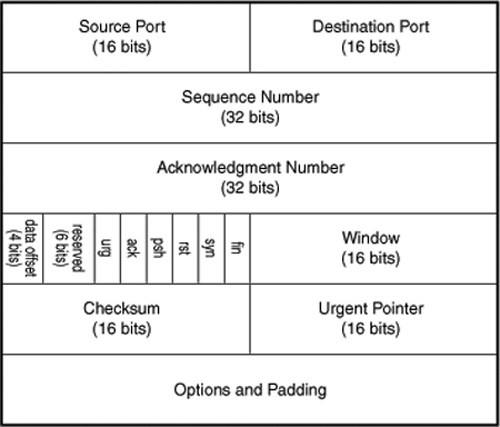

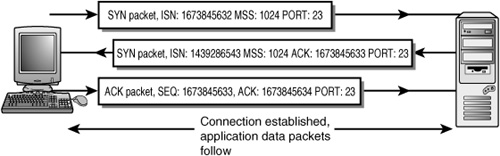

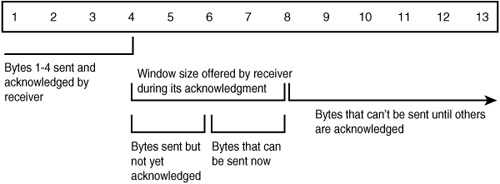

In Table 24.7, note that I’ve expressed the Class C equivalent networks that can be created. However, when using the /16 prefix, you get 256 Class C size networks, which is the same thing as a single Class B network. To continue this train of thought, a /15 prefix will allow you to create two Class B–sized networks, and so on.