The idea of controlling a sound transformation according to the musical scene it is displayed in may be as old as the idea of composition. The particular case of adaptive DAFX is when the sound to be transformed is also used as the source of the modification control parameter(s): some information about the sound is then collected and used to modify the sound with a coherent evolution. With such a perspective, adaptive DAFX can be considered as an extension of, or inspired by, composition processes similar to counterpoint. This process can also be compared to what happens in natural physical phenomena, such as the harmonic enrichment happening in brass instruments when the sound level increases. This chapter presents and formalizes the use of adaptive control in order to build new digital audio effects. It presents a general framework that encompasses existing DAFX and opens doors to the development of new and refined DAFX. It is aimed at presenting the adaptive control of effects, describing the steps to build an adaptive effect and explaining the necessary modifications of signal-processing techniques.

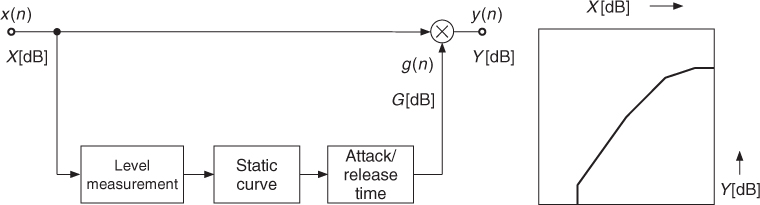

Adaptive DAFX can be developed and used with various goals in mind: to provide a high-level control for usual DAFX, to propose new production and/or creative tools by offering new DAFX, or to strengthen the relationship between the effect, its control, and perception. The control and mapping aspects of adaptive DAFX are important matters. In any DAFX, the control is sometimes part of the effect (e.g., compressor), sometimes part of the mapping (e.g., pitch-shift amount). Also, the gestural control mapping of DAFX is unfortunately often limited to a one-to-one mapping (one gestural parameter is directly mapped to one effect parameter), with the notable exception of the GRM Tools [Fav01, INA03], which allow for linear interpolation between presets of N parameters. In order to define a general framework, we will look at some famous simple examples of adaptive DAFX. As explained in Section 4.2, the compressor (see Figure 9.1) transforms the input sound level to the output sound level according to a gain control signal. The input sound level is mapped through a non-linear curve into the control signal, which is smoothed with an attack and a release time (inducing some hysteresis).
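The compressor's control path described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the design of Section 4.2: the function name `compressor_gain`, the one-pole level follower, the hard-knee static curve and all default parameter values are assumptions made for the example.

```python
import math

def compressor_gain(x, fs, threshold_db=-20.0, ratio=4.0,
                    attack_s=0.005, release_s=0.1):
    """Return one linear gain per input sample (hypothetical sketch)."""
    # One-pole smoothing coefficients for the attack and release times.
    a_att = math.exp(-1.0 / (attack_s * fs))
    a_rel = math.exp(-1.0 / (release_s * fs))
    level = 0.0
    gains = []
    for sample in x:
        mag = abs(sample)
        # Level follower: fast when the level rises, slow when it falls.
        coeff = a_att if mag > level else a_rel
        level = coeff * level + (1.0 - coeff) * mag
        level_db = 20.0 * math.log10(max(level, 1e-12))
        # Static non-linear curve: above the threshold, the overshoot
        # in dB is divided by the ratio; below it, the gain is 0 dB.
        over_db = level_db - threshold_db
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0.0 else 0.0
        gains.append(10.0 ** (gain_db / 20.0))
    return gains
```

The output signal would then be obtained by multiplying each input sample by its gain. The sound feature (level) and the effect (gain) both act on the same signal, which is what makes the compressor adaptive.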

Figure 9.1 Compressor/expander with input/output level relationship.

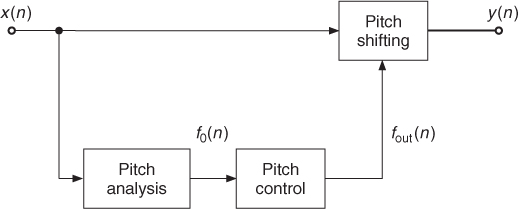

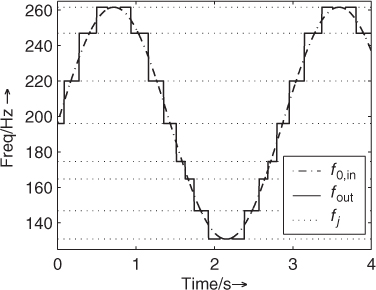

The principle of auto-tuning a musical sound consists in applying a time-varying transposition (or pitch shift), which is controlled by the fundamental frequency of the input signal so that the pitch becomes tuned to a given musical scale (see Figure 9.2). It is also called “pitch discretization to tempered scale” (see Chapter 10), even though it would work for any non-tempered scale too. The auto-tune effect modifies the pitch by applying an f0-dependent pitch shift, as depicted in Figure 9.2. The output fundamental frequency depends on: (i) the input fundamental frequency, (ii) a non-linear mapping law that applies a discretization of fundamental frequency to the tempered scale (see Figure 9.3), and (iii) a musical scale that may change from time to time depending on the key signature and chord changes.
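The non-linear mapping law in (ii) can be illustrated with a short Python sketch. It assumes a 12-tone equal-tempered scale referenced to A4 = 440 Hz and describes the musical scale in (iii) as a set of allowed pitch classes (0 = C, ..., 11 = B); the function name `quantize_f0` and its parameters are hypothetical, and estimating the fundamental frequency and applying the pitch shift are left out.

```python
import math

def quantize_f0(f0_hz, pitch_classes=tuple(range(12)), a4_hz=440.0):
    """Snap f0 to the nearest allowed note of an equal-tempered scale.

    Returns (target frequency in Hz, pitch-shift ratio to apply).
    Assumes f0_hz > 0 and a non-empty set of pitch classes.
    """
    # Continuous pitch on the MIDI note scale (69 = A4).
    midi = 69.0 + 12.0 * math.log2(f0_hz / a4_hz)
    base = math.floor(midi)
    # Candidate notes within one octave whose pitch class is allowed.
    candidates = [n for n in range(base - 12, base + 13)
                  if n % 12 in pitch_classes]
    target = min(candidates, key=lambda n: abs(n - midi))
    target_hz = a4_hz * 2.0 ** ((target - 69) / 12.0)
    return target_hz, target_hz / f0_hz

# Chromatic scale: 450 Hz is pulled down to A4.
print(quantize_f0(450.0))
# C major scale: 470 Hz lies near A#4, which is not allowed, so B4 is chosen.
print(quantize_f0(470.0, pitch_classes=(0, 2, 4, 5, 7, 9, 11)))
```

Changing `pitch_classes` over time corresponds to following key and chord changes. A practical auto-tune would also smooth the pitch-shift ratio over time rather than switching instantly between notes.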

Figure 9.2 Auto-tune.

Figure 9.3 Fundamental frequency of an auto-tuned FM sound.

Some adaptive DAFX have existed and been in use for a long time. They were designed and finely crafted with a specific musical or music production goal. Their properties and design are now used to generalize the adaptation principle, allowing one to define several forms of adaptive DAFX and to perform a more systematic investigation of sound-feature control. When extracting sound features, one performs some kind of indirect acquisition of the physical gesture used to produce the sound. Then, the musical gesture conveyed by the sound used to extract features is implicitly used to shape the sound transformation with meaning, because ‘the intelligence is (already) in the sound signal.’ Such a generalizing approach has been helpful in finding new and creative DAFX.

Definition of Adaptive DAFX

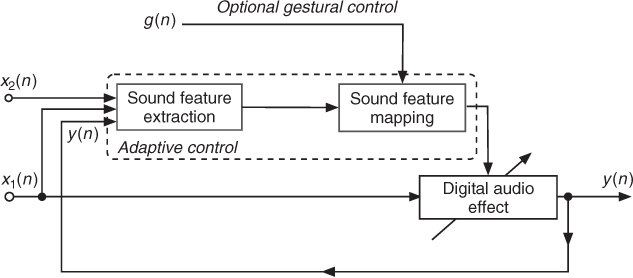

Adaptive DAFX are made by combining an audio effect with an adaptive control stage. More specifically, adaptive DAFX are defined as audio effects with a time-varying control derived from sound features transformed into valid control parameter values using specific mapping functions [VA01], as depicted in Figure 9.4. This definition generalizes previous observations of existing adaptive effects (compressor, auto-tune, cross-synthesis), but is also inspired by the combination of an amplitude/pitch follower with a voltage-controlled oscillator [Moo65].

Figure 9.4 Diagram of the adaptive effect. Sound features are extracted from an input signal x1(n) or x2(n), or from the output signal y(n). The mapping between sound features and the control parameters of the effect is modified by a gestural control. Figure reprinted with IEEE permission from [VZA06].
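The structure of Figure 9.4 (feature extraction, mapping modified by a gestural control, then the effect itself) can be sketched as a block-wise processing loop. The names `adaptive_effect`, `rms`, `level_to_gain` and `apply_gain` are hypothetical, the gestural control is reduced to a single scalar, and only the feed-forward case is shown (features taken from x1(n) or x2(n), not from y(n)).

```python
import math

def rms(block):
    """Sound feature: root-mean-square level of a block (0.0 if empty)."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))

def adaptive_effect(x1, block_size, extract, mapping, effect,
                    gesture=1.0, x2=None):
    """Process x1 block by block with a feature-derived control.

    Features come from x2 when it is given, otherwise from x1 itself.
    """
    source = x1 if x2 is None else x2
    y = []
    for start in range(0, len(x1), block_size):
        stop = start + block_size
        feature = extract(source[start:stop])   # sound-feature extraction
        control = mapping(feature, gesture)     # mapping, shaped by gesture
        y.extend(effect(x1[start:stop], control))
    return y

# Hypothetical mapping and effect: the louder the feature source,
# the lower the gain; the gesture scales the depth of the reduction.
def level_to_gain(feature, gesture):
    return max(0.0, 1.0 - gesture * feature)

def apply_gain(block, gain):
    return [gain * s for s in block]

y = adaptive_effect([0.5] * 8, 4, rms, level_to_gain, apply_gain)
```

Swapping `extract`, `mapping` or `effect` changes the adaptive effect without touching the loop, which mirrors the separation of stages in the diagram.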

Adaptive DAFX go by different names depending on the author, for instance ‘dynamic processing’ (even though ‘dynamic’ means ‘adaptive’ for some people and, more generally, ‘time-varying’ without adaptation for others), ‘intelligent effects’ [Arf98], ‘content-based transformations’ [ABL+03], etc. The corresponding acronym for adaptive DAFX is ADAFX. Note that the name of an ADAFX can be non-specific to the sound descriptor (for instance: cross-synthesis, adaptive spectral panning, adaptive time-scaling), but it can also be control-based (for instance: auto-tune, compressor). Such names are based on the perceptual effect produced by the signal-processing technique. Generally speaking, the control of ADAFX is not limited to the adaptive part; rather, it is two-fold:

- Adaptive control derived from sound features;

- Gestural control, used for real-time access through input devices.

The mapping laws to transform sound features into DAFX control parameters make use of non-linear warping curves, possibly hysteresis, as well as feature combination (see Section 9.3).

The various forms ADAFX may take depend on the input signal used for feature extraction:

- Auto-adaptive effects have their features extracted from the input signal x1(n). Common examples are compressor/expander, and auto-tune.

- Adaptive or external-adaptive effects have their features extracted from at least one other input signal x2(n). An example is cross-ducking, where the sound level of x1(n) is lowered when the sound level of x2(n) goes above a given threshold.

- Feedback adaptive effects have their features extracted from the output signal y(n); it follows that auto-adaptive and external-adaptive effects are feed-forward-adaptive effects.

- Cross-adaptive effects are a combination of at least two external-adaptive effects (not depicted in Figure 9.4); they use at least two input signals x1(n) and x2(n). Each signal is processed using the features of another signal as controls. Examples are cross-synthesis and automatic mixing (see Chapter 13).
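As an illustration of the external-adaptive form, the cross-ducking example mentioned above can be sketched as follows. The function name `cross_duck`, the one-pole smoothing of both the level of x2(n) and the gain, and the default values are assumptions made for this example only.

```python
import math

def cross_duck(x1, x2, fs, threshold=0.1, duck_gain=0.25, smooth_s=0.05):
    """Lower x1 while the smoothed level of x2 exceeds a threshold."""
    a = math.exp(-1.0 / (smooth_s * fs))  # one-pole smoothing coefficient
    level = 0.0   # smoothed level of the control signal x2
    gain = 1.0    # smoothed gain applied to x1
    y = []
    for s1, s2 in zip(x1, x2):
        # Sound feature extracted from the other input signal.
        level = a * level + (1.0 - a) * abs(s2)
        # Mapping: threshold decision, then smoothing to avoid clicks.
        target = duck_gain if level > threshold else 1.0
        gain = a * gain + (1.0 - a) * target
        y.append(gain * s1)
    return y
```

Applying the same function twice with the roles of the two signals exchanged, `cross_duck(x1, x2, fs)` and `cross_duck(x2, x1, fs)`, would give a simple cross-adaptive pair in the sense of the last item above.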

Note that cross-synthesis can be considered as both an external-adaptive effect and a cross-adaptive effect. Therefore, the four forms of ADAFX do not provide a good classification of ADAFX since they are not exclusive; they do, however, provide a way to better describe, directly in the effect name, where the sound descriptor comes from. The next two sections investigate sound-feature extraction (Section 9.2), and mapping strategies related to adaptive DAFX (Section 9.3).