9.1 INTRODUCTION

Some items on the balance sheets of banks need to be modelled by a behavioural approach. A “behavioural” model is generally one that goes beyond the standard rationality principles used to evaluate contracts (economic agents prefer more wealth to less wealth, and they prefer to receive cash sooner rather than later) and considers other factors as well, typically estimated by means of statistical analysis, which may produce effects that otherwise could not be explained. It should be stressed that, in any event, financial variables such as interest rates or credit spreads are the main drivers of customer or, more generally, counterparty behaviour.

There are three main phenomena that need behavioural modelling: prepayment of mortgages, evolution of the amount of sight and saving deposits, and withdrawals from credit lines. We will propose models for each, focusing mainly on what we think is a good solution for liquidity management, without trying to offer a complete picture of the entire range of available models developed in theory or in practice. As far as mortgage prepayments and withdrawals from credit lines are concerned, however, we introduce models that to our knowledge have never been proposed before, which aim at considering financial, credit and liquidity risk in a unified framework.

9.2 PREPAYMENT MODELLING

The prepayment of mortgages has to be properly taken into account in liquidity management, although many of the effects of the decision to pay back the residual amount of debt by the mortgagee are financial as they may cause losses to the financial institution. We will present a model to cope with the prepayment of fixed rate mortgages, because they combine both liquidity and financial effects.

9.2.1 Common approaches to modelling prepayments

There are two fundamental approaches to modelling prepayments:

- Empirical models (EMs): Prepayment is modelled as a function of some set of (non-model based) explanatory variables. Most of these models use either past prepayment rates or some other endogenous variables (such as burnout) or economic variables (such as GDP or interest rate levels) to explain current prepayment. Since they are just heuristic reduced-form representations for some true underlying process, it is not clear how they would perform in a different economic environment. Besides, no dynamic link between the prepayment rate and other explanatory variables has been established.

- Rational prepayment models (RPMs): They are based on contingent claims pricing theory and as such the prepayment behaviour depends on interest rate evolution. Prepayment is considered as an option to close the contract at par (by repaying the nominal value of the outstanding amount), which will be exercised if the market value of the mortgage is higher than the nominal residual value. Although these models consistently link valuation of the mortgage and prepayment, their prepayment predictions do not closely match observed prepayment behaviour since not all debtors are skilled enough to evaluate the convenience of exercising the options. One of the drawbacks of rational models is that in their basic forms they imply that there will either be no prepayment or all mortgages with similar features will suddenly prepay, because all mortgagees will exercise their options.

Empirical features commonly attributed to mortgage prepayment are the following:

- some mortgages are prepaid even when their coupon rate is below current mortgage rates;

- some mortgages are not prepaid even when their coupon rate is above current mortgage rates;

- prepayment appears to be dependent on a burnout factor.

Since basic and simple RPMs are unable to fully take into account these features, most banks adopt EMs in an attempt to accurately predict prepayment rates. The prediction is the so-called CPR, or constant prepayment rate, which is used to project expected cash flows and which can be expressed as a function of different variables. For example, a well-known EM is the Richard and Roll [105] model adopted by Goldman Sachs and the US Office of Thrift Supervision; this model can be written in very simple form as:

CPR = f(Refinance incentive)g(Seasoning)h(Month)l(Burnout factor)

So the CPR depends on four functions of four different factors, the most important of which happens to be the refinance incentive or, in other words, exercising the option when it is convenient to do so. The refinance incentive (RI) function f() is modelled as:

RI = 0.3124 − .020252 × arctan(8.157[−(C + S)/(P + F) + 1.20761])

where arctan is the arctangent function, C is the fixed rate of the coupon, S is the servicing rate of the pool,¹ P is the refinancing rate and F denotes the additional costs due to refinancing.

In general, EMs perform quite well when predicting expected cash flows; they are also used to set up portfolios with hedging instruments with the notional adjusted according to the CPR. Since most model vendors plug EMs into their ALM systems and most banks use them, we examine how hedging with EMs works in practice.

9.2.2 Hedging with an empirical model

The refinance incentive is the most important factor, particularly in market environments with extremely low rates. In some countries mortgagees are charged no prepayment penalties, so they are more eager to exploit the prepayment option. Moreover, some regulations allow mortgagees to transfer the mortgage to another bank at no cost: in this case competition amongst banks pushes the refinancing of mortgages with high contract rates when rates are low, thus increasing the “rationality” of prepayment. Even if the bank manages to keep the mortgagee, it is forced to refinance the mortgage at new market rates. In either case, in practical terms this is equivalent to a prepayment.

It goes without saying that, when the refinancing incentive is the major driver for prepayments, the bank suffers a loss that in very general terms can be set equal to the replacement cost of the prepaid contract.

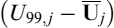

For this reason we introduce a very simplified EM, and we use a function of the kind:

CPR = α + β × C/P    (9.1)

where C and P are defined as above. The CPR in equation (9.1) is a constant α plus a proportion β of the ratio between the mortgage rate C and the current rate level P. The lower the current rate P, the higher the CPR.

Let us create a laboratory environment and calibrate model (9.1) to empirical data reproduced by a random number generator. We assume that P is representative of a “general” level of interest rates (e.g., the average of the 5, 10 and 20-year swap rates). Moreover, C is the average fixed rate of the portfolio of mortgages, which we set equal to 3.95% (in line with the market rates we consider below). For each level of rates we have an annual CPR generated by the equation:


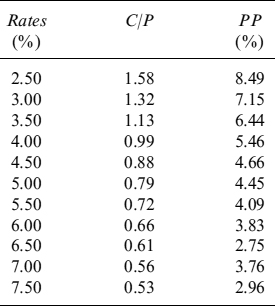

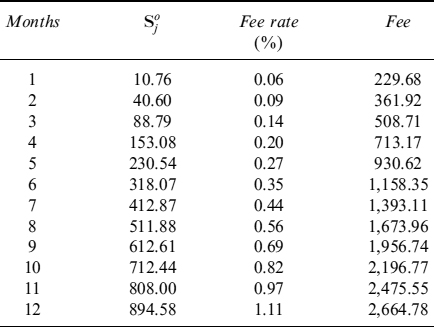

where the noise term is a random number drawn from a normally distributed variable. The data are shown in Table 9.1.

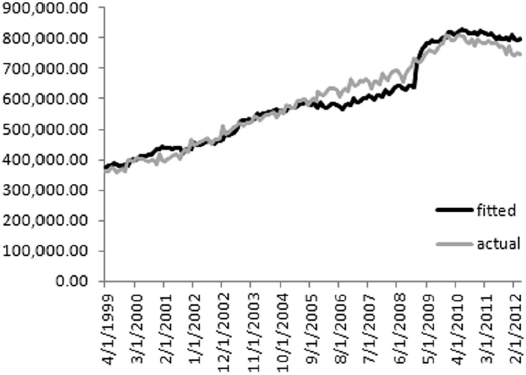

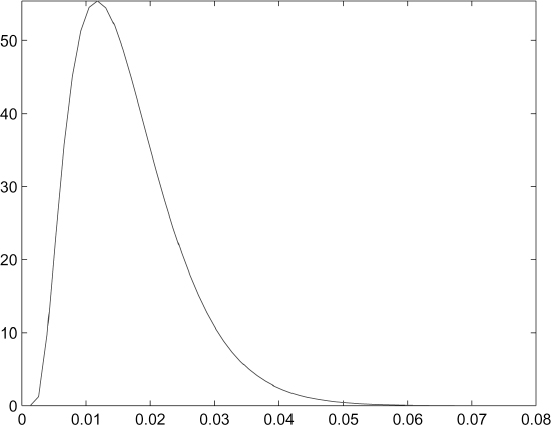

We carry out linear regression to estimate the parameters and get α = 0.02881267 and β = 0.029949414. A graphical representation of the fitting is given in Figure 9.1.
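The laboratory exercise described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rate grid, the "true" parameters (0.03 and 0.03) and the noise level are hypothetical choices, not the values behind Table 9.1, and the helper names `simulate_cpr` and `fit_cpr_model` are introduced here for the example.

```python
import random


def simulate_cpr(rates, coupon, alpha, beta, noise_sd, seed=0):
    """Generate synthetic annual CPRs: alpha + beta * C / P plus Gaussian noise."""
    rng = random.Random(seed)
    return [alpha + beta * coupon / p + rng.gauss(0.0, noise_sd) for p in rates]


def fit_cpr_model(rates, cprs, coupon):
    """Ordinary least squares fit of CPR = alpha + beta * (C / P)."""
    x = [coupon / p for p in rates]
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(cprs) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, cprs))
    beta = sxy / sxx
    alpha = y_bar - beta * x_bar
    return alpha, beta


coupon = 0.0395                                  # average mortgage rate C from the text
rates = [0.01 + 0.005 * i for i in range(15)]    # hypothetical rate levels P: 1.0% to 8.0%
cprs = simulate_cpr(rates, coupon, alpha=0.03, beta=0.03, noise_sd=0.002)
alpha_hat, beta_hat = fit_cpr_model(rates, cprs, coupon)
print(f"alpha = {alpha_hat:.5f}, beta = {beta_hat:.5f}")
```

With noisy data the estimates land near, but not exactly on, the parameters used by the generator, which is the same situation as in the regression reported above.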

The simple model above seems able to capture the relevant factors affecting prepayment activity, which is strongly dependent on the level of the current interest rate. Given this, we have the CPR to project expected cash flows and then to set up proper hedging strategies with other interest rate derivatives, typically IR swaps. The main problem with such an EM is that it is not dynamic and, unfortunately, does not allow for an effective hedge against both movements in interest rates and prepayment activity.

Table 9.1. Current level of rates P, ratio between fixed mortgage rate and current level of rates (C/P) and percentage of prepaid mortgages PP%

Figure 9.1. Linear regression estimation of prepayment data. The percentage of prepayments in one year is plotted against the current interest rate level

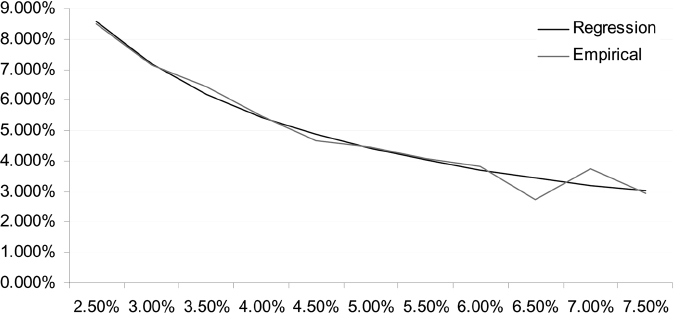

To see this, let us consider a mortgage that is representative of a bank's mortgage portfolio sold to clients at current market conditions. The mortgage expires in 10 years, it is linearly amortizing and its fair fixed rate, yearly paid, is 3.95%, given the 1Y Libor forward rates and associated discount factors shown in Table 9.2. In Table 9.3 the outstanding capital at the beginning of each year is shown. For simplicity's sake we also assume that no credit spread is applied, nor any markup to cover administrative costs, so that the mortgage rate is given only by Libor rates.

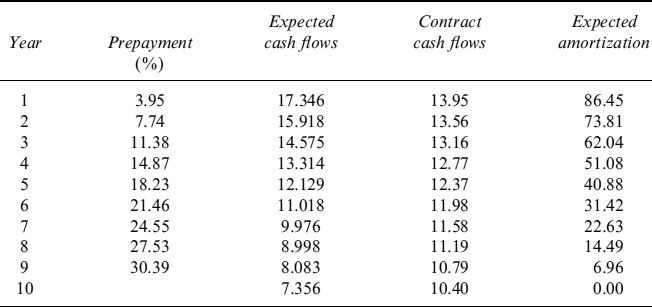

We can also compute expected cash flows given the prepayment activity forecast by the model we calibrated. If we assume that the current level of the interest rate is summarized in the 10Y rate, 5.5%, the model provides a CPR of 4.02% p.a. Expected amortization and contract and expected cash flows are easily computed (see Table 9.4). In computing expected cash flows we used the convention that the CPR is a continuous rate such that, for a given year T, the percentage of prepaid mortgages is 1 − e^(−CPR×T).

Table 9.2. 1Y Libor forward rates and discount factors for maturities from 1 to 10 years

Table 9.3. Outstanding capital at the beginning of each year for the representative mortgage

| Year | Outstanding capital |
|------|---------------------|
| 1 | 100 |
| 2 | 90 |
| 3 | 80 |
| 4 | 70 |
| 5 | 60 |
| 6 | 50 |
| 7 | 40 |
| 8 | 30 |
| 9 | 20 |
| 10 | 10 |
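The continuous-rate convention for the CPR can be turned into an expected amortization schedule with a short sketch. The contract schedule and the 4.02% CPR come from the text; the timing convention (capital at the start of year k + 1 is scaled by the fraction of mortgages still alive after k years) is an assumption made for this illustration and may differ in detail from the one behind Table 9.4.

```python
import math


def prepaid_fraction(cpr, years):
    """Cumulative fraction of mortgages prepaid after `years`: 1 - exp(-CPR * T)."""
    return 1.0 - math.exp(-cpr * years)


def expected_outstanding(contract_outstanding, cpr):
    """Scale contract capital at the start of each year by the surviving fraction.

    Element k of the input is the capital at the start of year k + 1,
    i.e. after k years have elapsed (assumed timing convention).
    """
    return [cap * math.exp(-cpr * k) for k, cap in enumerate(contract_outstanding)]


cpr = 0.0402                                   # CPR quoted in the text
contract = [100 - 10 * k for k in range(10)]   # Table 9.3: 100, 90, ..., 10
expected = expected_outstanding(contract, cpr)

for year, (c, e) in enumerate(zip(contract, expected), start=1):
    print(f"year {year:2d}: contract {c:6.2f}  expected {e:6.2f}")
```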

The mortgage rate computed on expected cash flows, keeping in mind the prepayment effects, is slightly lower and equal to 3.89%. This is easy to understand, since we are in a steep-curve environment and the prepayment entails a shorter (expected) maturity of the contract, thus making the fair rate lower. In a very competitive market, it is tempting for the bank to grant such a lower rate to mortgagees, because after taking account of the costs related to hedging the bank appears not to be actually giving away value to customers.²

In fact, the ALM of the bank typically finances the mortgage portfolio by rolling over short-term debt or a similar maturity debt, but at a floating rate. The reason is easily understood, since as a result of floating rate indexation the duration of the bank debt is very short, and hence the volatility of balance sheet liabilities is reduced as well. As a consequence, the bank transforms its fixed rate mortgage portfolio into a floating rate mortgage portfolio (so that asset duration matches liability duration),³ by taking expected cash flows instead of contract cash flows into account: in this way risk managers believe they have appropriately hedged prepayment risk as well, at least in average terms. The transformation, or hedge, is performed using liquid market instruments, usually swaps, by paying the fixed rate earned on the mortgage and receiving the Libor fixing (which is concurrently paid on financing).

In the example we are considering, the swap used for hedging purposes is not standard, but an amortizing swap with a decreasing notional equal to expected amortization as shown in the fourth column of Table 9.4, which reveals expected outstanding capital at the end of each year. Since we are not considering any credit spread on the mortgage, and assuming no credit issues in the swap market as well, we get the swap fair rate at inception as 3.89%, which is exactly the mortgage rate computed using expected (i.e., including the prepayment effect) cash flows, hence confirming that none of the hedging costs have been ignored when pricing the mortgage out of expected cash flows instead of contract cash flows.
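The effect of the expected amortization schedule on the fair rate can be illustrated with a small sketch. The forward curve below is a hypothetical upward-sloping curve, not the one in Table 9.2, so the resulting rates will not reproduce 3.95% and 3.89%; the point is only the direction of the effect. The function names are introduced for this example.

```python
import math


def discount_factors(forwards, tau=1.0):
    """Discount factors implied by simply compounded one-period forward rates."""
    factors, df = [], 1.0
    for f in forwards:
        df /= 1.0 + f * tau
        factors.append(df)
    return factors


def fair_swap_rate(notionals, forwards, tau=1.0):
    """Fixed rate equating fixed and floating legs of an amortizing swap.

    notionals[k] is the notional in force during period k + 1.
    """
    dfs = discount_factors(forwards, tau)
    floating = sum(n * f * tau * d for n, f, d in zip(notionals, forwards, dfs))
    annuity = sum(n * tau * d for n, d in zip(notionals, dfs))
    return floating / annuity


forwards = [0.020 + 0.005 * k for k in range(10)]   # hypothetical steep curve
contract = [100 - 10 * k for k in range(10)]        # contract schedule (Table 9.3)
cpr = 0.0402                                        # CPR quoted in the text
expected = [cap * math.exp(-cpr * k) for k, cap in enumerate(contract)]

contract_rate = fair_swap_rate(contract, forwards)
expected_rate = fair_swap_rate(expected, forwards)
print(f"contract schedule: {contract_rate:.4%}, expected schedule: {expected_rate:.4%}")
```

Because prepayments shift weight towards the earlier, lower forward rates, the rate on the expected schedule comes out below the rate on the contract schedule whenever the curve slopes upward; with a flat curve the two coincide.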

Table 9.4. Percentage of prepaid loans up to a given year, expected and contract cash flows and expected amortization

If the model is correctly predicting prepayment rates, then there would be no loss: at the end of each year the outstanding capital matches the expected capital (net of prepayments) and the hedging swap would still be effective in protecting against exposure to interest rate movements. The problem is that the very model we are using (which, we recall, is a simple version of the most common models included in the ALM applications of software vendors) actually links the level of the CPR to the level of interest rates. So, barring possible divergences due to normal statistical errors, variations in the CPR are also due to movements in the term structure of interest rates, although this cannot be dynamically included in risk management policies since the EM is static. If rates move, this means that swap hedging will no longer be effective and the bank will have to appropriately rebalance its notional quantities. But interest rates do move, so we can be sure that the hedge has to be rebalanced in the future, even if the estimated parameters of model (9.1) prove to be correct and do not change after a new recalibration.

Being pretty sure that the bank will change the notional of the hedging swap in the future, the problem is now to understand whether this rebalancing generates a loss or a profit (without considering transaction costs). Let us see what happens if the term structure of forward rates experiences a parallel shift downward or upward of 2% after 1 year. In this case, if the probabilities of prepayment are kept constant, the hedging swap portfolio would experience a positive, or negative, variation of its net present value (NPV), thus counterbalancing the negative, or positive, variation of the NPV suffered on the mortgage. Since we are assuming a parallel shift of the forward rates, the profit, or loss, can be approximated with very high accuracy by DV01 × Δr, where DV01 is the discounted annuity of fixed rate payments of the swap, and Δr is the variation of the swap fair rate due to the change in Libor forward rates.

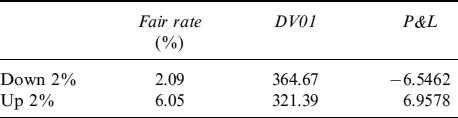

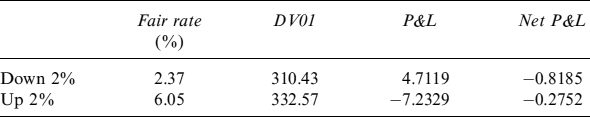

In Table 9.5 we show the profit and loss due to unwinding of the original hedging swap for the two scenarios of parallel shift (upward and downward) of the forward rate term structure. When rates fall, the hedging swap suffers a loss, since it is a (fixed rate) payer and the new fair rate for a similar swap structure is 2.09%; the loss is given by the DV01 indicated in the second column times the Δr = 2.09% − 3.89%. On the other hand, when forward interest rates move upward by 2%, the unwinding of the swap generates a profit, computed as before considering the new fair swap rate 6.05%. As expected, the profit has the same order of magnitude as the loss. Moreover, since the swap is mimicking the mortgage, variation of the NPV of the latter is a mirror image of the former. The reason we have to unwind the original hedging swap and open a new one will be clear in what follows.
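The DV01 × Δr approximation can be written down as a short sketch. The swap rates (3.89%, 2.09% and 6.05%) are those quoted in the text, while the DV01 of 300 is a hypothetical round number used only to show the mechanics and the signs; it is not the figure in Table 9.5.

```python
def swap_dv01(notionals, discount_factors, tau=1.0):
    """Discounted annuity of the fixed leg: sum of notional * accrual * discount factor."""
    return sum(n * tau * d for n, d in zip(notionals, discount_factors))


def payer_unwind_pnl(dv01, original_rate, new_fair_rate):
    """Approximate P&L from closing a fixed rate payer swap: DV01 * (new rate - old rate)."""
    return dv01 * (new_fair_rate - original_rate)


dv01 = 300.0            # hypothetical discounted annuity
original_rate = 0.0389  # fixed rate paid on the original hedging swap

pnl_down = payer_unwind_pnl(dv01, original_rate, 0.0209)  # rates fall by 2%
pnl_up = payer_unwind_pnl(dv01, original_rate, 0.0605)    # rates rise by 2%
print(f"rates down: {pnl_down:+.4f}, rates up: {pnl_up:+.4f}")
```

The payer position loses when the new fair rate is below the rate being paid and gains when it is above, which is the sign pattern described for the two scenarios.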

Table 9.5. Profit and loss due to closing of the original hedging swap position

Actually, if the probabilities of prepayment change according to (9.1), we have two consequences: first, the original swap no longer perfectly hedges variations in the value of the mortgage; second, rebalancing of the notional of the swap is needed at least to bring it back into line with the new expected amortization schedule of the mortgage. We then have to unwind the original position and open a new one with a new swap with a notional amount matching the expected amortization schedule of the mortgage, with a fixed rate equal to the mortgage rate based on current market rates.⁴

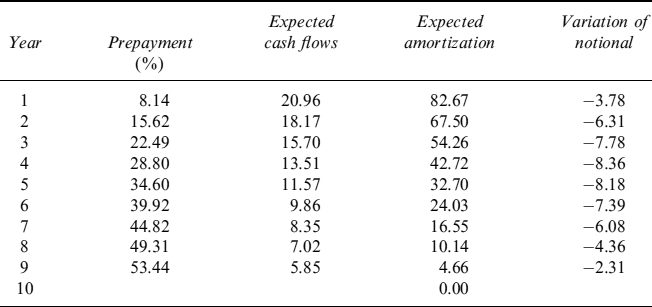

First, let us examine what happens to expected repayments in the future when rates move. Table 9.6 shows the new expected amortization schedule after a change in the CPR due to a movement in interest rates: with a new level of the 10Y rate⁵ at 3.5%, the CPR would be 8.49%. This means that the actual outstanding capital after one year will be less than that projected by the starting CPR (4.02%), and consequently future expected outstanding capital amounts will also be smaller (i.e., the expected amortization will be more accelerated).

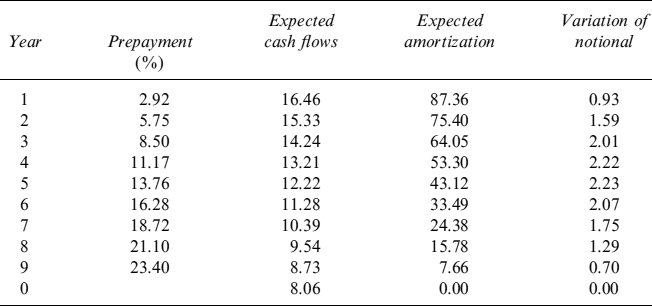

The same reasoning also applies to a scenario where the term structure of forward rates experiences a shift upward of 2%, as shown in Table 9.7. In this case, from (9.1), we know that the new CPR rate will be 2.96%, in correspondence with the 10Y forward rate of 7.50%. Hence the outstanding amount after one year will be higher than the one previously projected, and thus all expected future capital amounts will also be revised upward (i.e., amortization will be slower).

We now have to compute the profit or loss produced by opening a position in a swap with the same rate as the original one (which is also the mortgage rate that we need to match on interest payment dates), with a reference capital schedule mirroring the revised expected amortization of the mortgage at current market rate levels. Table 9.8 summarizes the results for both scenarios. When rates are down 2%, the new swap generates a profit of 4.7119: this is easy to understand, since the bank still pays 3.89% on this contract, although the new fair swap rate is 2.37% (the profit is computed as above by means of the DV01 in the second column). Nevertheless, keeping the loss incurred in mind when closing the original swap position, the bank suffers a total net loss of −0.8185 (shown in the fourth column).

Table 9.6. New expected amortization schedule after the term structure of forward rates drops 2%. The CPR moves from 4.02 to 8.49% according to model (9.1)

Table 9.7. New expected amortization schedule after a shift upward for the term structure of forward rates of 2%. The CPR moves from 4.02 to 2.96% according to model (9.1)

Table 9.8. Profits and losses due to the opening of new hedging swaps and the net result of closing the original position

Surprisingly enough, a net loss is also suffered by the bank when prepayment activity slows down as a result of higher rates in the upward parallel shift scenario. Actually, notwithstanding the profit gained when closing the original swap position, the loss suffered when opening the new swap is even higher.

At this point we can be pretty certain that, unfortunately, the hedging strategy of a mortgage, based on taking a position in swaps with a notional schedule mimicking the mortgage's expected amortization, is flawed and produces losses unless rates do not change and consequently the CPR is fixed too. In reality, this rarely happens since interest rates move and EMs predict changing CPRs. We need to investigate further where the losses come from, so that we can hopefully come up with a more effective hedging strategy.

9.2.3 Effective hedging strategies of prepayment risk

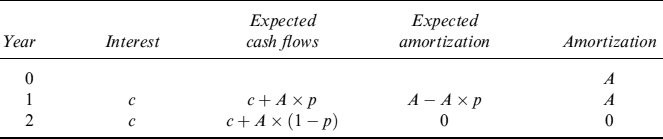

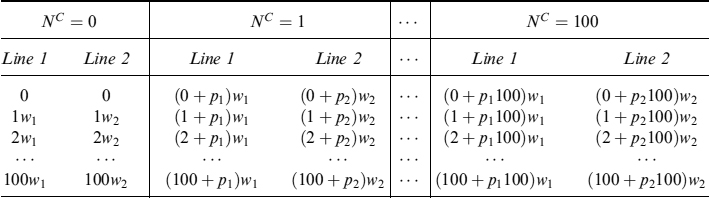

To better understand how losses are produced when hedging expected cash flows, we consider the following case: a bank has a bullet mortgage with a mortgagee of amount A, which expires in two years. At the end of the first year the mortgagee pays fixed rate interest c and at the end of the second year she repays interest c and capital A. At the end of the first year, she also has the option to prepay the entire outstanding amount plus the interest accrued up to then: we assume that this option is exercised with probability p. Table 9.9 shows expected cash flows and expected and contract amortization at the beginning of each year.

Table 9.9. A simple 2-year bullet mortgage

The bank enters into a (fixed rate) payer swap with 2-year expiry and varying notional amount equal each year to the expected amortization. This is not a standard swap traded in the interbank market, but it is not difficult to get a quote on it from a market maker. Let us indicate by Swp(n, m) a swap starting at time n and expiring at m. We can decompose the 2-year swap into two single-period swaps, so that the hedging swap portfolio P is comprised of:

- A × Swp(0, 1)

- (A − A × p) × Swp(1, 2)

It is very easy to check that the portfolio is:

P = A × Swp(0, 2) − p × A × Swp(1, 2)

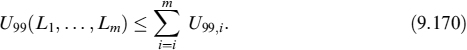

since Swp(0,1) + Swp(1,2) = Swp(0,2). The second component of the portfolio is a short position in a forward starting swap, whose notional is the mortgage notional amount weighted by the probability of prepayment at the end of the first year. The forward starting swap can be further decomposed, by means of put–call parity, as follows:

Swp(1, 2) = Pay(1, 2; c) − Rec(1, 2; c)

where Pay(n, m; K) (Rec(n, m; K)) is the value of a payer (receiver) swaption struck at K, expiring at n, written on a forward swap starting at n and maturing at m. So, collecting results, we have that the hedging portfolio is:

P = A × Swp(0, 2) − p × A × [Pay(1, 2; c) − Rec(1, 2; c)]    (9.2)

If the probability of prepayment p (the CPR approximately in practice) is invariant with the interest rate level,⁶ equation (9.2) is just an alternative way of expressing a position in a swap with maturity and amortizing notional equal to that of the mortgage. So, the following two strategies are exactly equivalent:

1. Entering into a (fixed rate) payer swap, with an amortizing schedule equal to the mortgage's expected amortizing schedule, and with the same expiry as the mortgage.

2. Entering into a (fixed rate) payer swap, with an amortizing schedule equal to the mortgage's contract amortizing schedule, and with the same expiry as the mortgage; selling a payer swaption expiring in one year and written on a one-year swap, struck at the mortgage rate level c; buying a receiver swaption, otherwise identical to the payer swaption. The amount of the swap underlying the swaptions follows the contract amortizing schedule, whereas the quantity of swaptions to buy, or sell, is equal to the prepayment probability.⁷

Any equivalence between strategies (1) and (2) vanishes if the probability of prepayment p is not independent of the interest rate level, as is also the case in the very simple EM we presented in the previous section and generally the case in reality. If this is the case, decomposition allows for a more precise and effective hedge, provided we design a model capable of encompassing all these aspects.

Returning to our numerical example in the section above, it is now quite simple to understand the factors causing losses. In fact, in the numerical example the bank has decided to hedge the mortgage by strategy (1), assuming there is constant probability of prepayment (CPR). But the behaviour of the mortgagee (modelled via EM (9.1)) implies a higher probability when rates go down and lower when they go up. We can make the following points:

- When interest rates fall, the mortgage's NPV increases, and this is compensated by the payer swap with the same maturity. The loss originates from the fact that the probability of prepayment is higher than that guessed at the contract's inception. When seen from the perspective of strategy (2), it is as if the bank had bought a receiver swaption whose quantity is the probability of prepayment assumed at the beginning of the contract, but this is not enough to cover the loss of the mortgage which implies being short a receiver swaption with a larger quantity equal to the new prepayment probability.

- When interest rates rise, the mortgage's NPV decreases, this being counterbalanced by an increase in the swap value. In this case the loss also originates from the fact that the short position in the payer swaption (equal to the starting probability of prepayment) turns out to be bigger than that needed by the lower prepayment probability concurrent with higher rates.

- A more general point relates to why a bank should also sell the payer swaption when hedging the mortgage. When the bank adopts strategy (1), it is implicitly replicating the short position in the payer swaption, with the even worse circumstance of doing so in a higher than needed quantity when its NPV is negative (i.e., when rates move upwards). Actually, if the bank adopts hedging strategy (2), it is able to disentangle the different instruments to be bought or sold, and then to decide which is worth trading or not.
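One way to see why the loss appears in both directions is to look at the residual position left by hedging with a fixed prepayment probability in the 2-year bullet example. In the sketch below the hedge was sized with the probability prevailing at inception, while the mortgage now implies a new probability; the difference is a position of (p_new − p_hedged) × A in the forward payer swap Swp(1, 2). The assumptions are all illustrative: the probability is taken from a function of the form (9.1) with rounded versions of the calibrated parameters, the CPR is read as a one-period probability, a single rate level stands in for the whole curve, and the forward swap is valued over one period only.

```python
def prepayment_probability(rate, coupon, alpha=0.0288, beta=0.0299):
    """Prepayment probability of the form (9.1): alpha + beta * C / P (rounded parameters)."""
    return alpha + beta * coupon / rate


def forward_payer_swap_value(forward, coupon):
    """Value per unit notional of a one-period payer swap struck at the coupon."""
    return (forward - coupon) / (1.0 + forward)


def hedge_residual(notional, coupon, base_rate, new_rate):
    """Value of the position left unhedged after the prepayment probability has moved."""
    p_hedged = prepayment_probability(base_rate, coupon)
    p_new = prepayment_probability(new_rate, coupon)
    return (p_new - p_hedged) * notional * forward_payer_swap_value(new_rate, coupon)


coupon = 0.0395
for shift in (-0.02, 0.0, 0.02):
    residual = hedge_residual(100.0, coupon, base_rate=coupon, new_rate=coupon + shift)
    print(f"shift {shift:+.2%}: residual value {residual:+.4f}")
```

When rates fall the probability rises while the payer swap is out of the money; when rates rise the probability falls while the payer swap is in the money. The product is negative in both cases, in line with the first two points above.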

The analysis in this section is very useful and in Appendix 9.A we break down the hedging portfolio for a generic mortgage with a given expiry and amortizing schedule. In summary, the hedging portfolio comprises:

- A payer swap with the same fixed rate and expiry as the mortgage, and with the same amortizing schedule as the mortgage's contract amortization schedule.

- A short position in a portfolio of payer swaptions, expiring on each repayment date and written on a swap with the same maturity date as the mortgage and with the same amortizing schedule as the mortgage's contract amortization schedule.

- A long position in a portfolio of receiver swaptions, expiring on each repayment date and written on a swap with the same maturity date as the mortgage and with the same amortizing schedule as the mortgage's contract amortization schedule.

Each swaption has a quantity equal to the probability that prepayment occurs between the expiry dates of two contiguous swaptions.

Important implications can be drawn from the hedging portfolio:

- To properly hedge prepayment risk, a dynamic model of prepayment probability has to be designed, so as to allow for an increase in prepayments when rates go down.

- Making the probability higher when rates are low increases sensitivity to the value of the receiver swaptions that the bank has to buy to hedge the exposure. This yields more reliable hedging ratios and allows appropriate hedging against the costs incurred when prepayment activity increases.

- Moreover, making the probability higher when rates are low increases the price of the receiver swaption portfolio needed to hedge the exposure. This means that prepayment options are priced more accurately and can be included in the final rate to apply in the mortgage's contract.

- Selling the payer swaption portfolio is not needed: doing so unnecessarily offsets a mirror image long-option position on the mortgage that can grant some profits. We have seen that the standard strategy and other commonly adopted strategies do indeed mimic the selling of this swaption portfolio, very likely in an excessive amount.

- When including the effects of prepayments in the pricing of the loan, the bank does not have to price the long position in the payer swaption portfolio, which reduces the final contract rate. In fact, this is an obscure optionality that cannot easily be priced by mortgagees, even in a very competitive environment with rather sophisticated players.

9.2.4 Conclusions on prepayment models

Some recipes can be provided for the design of a prepayment model:

- EMs can be useful, but they have to be integrated with an RPM: the decomposition shown above makes it clear that mortgagees are implicitly long a portfolio of receiver swaptions by a certain amount that has to be included in the pricing.

- Probabilities of prepayment must be dynamically linked to the level of interest rates.

- Since we need to include the valuation of options in the pricing, we need also to account for the volatility of interest rates, so that a prepayment model has to be designed jointly with an interest rate model.

- As a consequence we can hedge sensitivities not only to interest rates, but also to volatilities (i.e., Vega).

In Section 9.2.5, we develop a prepayment model⁸ to hedge the prepayment risk of fixed rate mortgages which considers all the points above. It provides the ALM with a valuable tool to embed the costs of implied optionalities in the pricing of new mortgages, and to effectively hedge the exposures of an aggregated mortgage portfolio.

Undertaking the computation is a formidable challenge, so we come up with an accurate and quick solution that avoids resorting to Monte Carlo valuations, which are rather unstable when computing sensitivities and not suitable for a portfolio of hundreds of thousands of contracts such as bank mortgage portfolios.

9.2.5 Modelling prepayment decisions

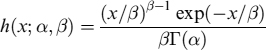

Assume that a bank enters into a number of fixed rate mortgage contracts with clients who have the possibility to prepay the outstanding debt balance in the future. Further assume that mortgagees decide whether to prepay their mortgage at random discrete intervals, usually coinciding with payment dates. The probability of a prepayment decision taken on the basis of the interest rate level is described by hazard function λ: the probability that the decision is made in a time interval of length dt is approximately λdt. Basically, this decision is taken when interest rates fall.

Besides prepayment (refinancing) for interest rate reasons, mortgagees may also prepay for exogenous reasons (e.g., job relocation or house sale). The probability of exogenous prepayment is described by hazard function ρ: this represents a baseline prepayment level (i.e., the expected prepayment level when no financially optimal (interest-driven) prepayment should occur).

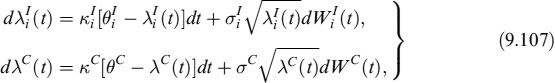

We model the interest rate based prepayment within a reduced-form approach. This allows us to include prepayments in the pricing, interest rate risk management (ALM) and liquidity management consistently. We adopt a stochastic intensity of prepayment λ, assumed to follow CIR dynamics:

$$d\lambda_t = \kappa(\theta - \lambda_t)\,dt + \nu\sqrt{\lambda_t}\,dZ_t$$

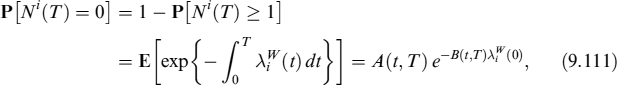

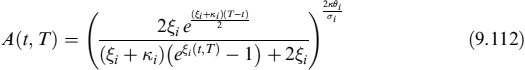

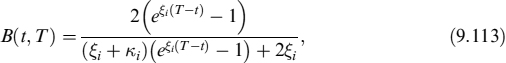

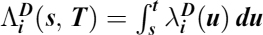

This intensity provides the probability of the mortgage rationally terminating over time. We further assume that the intensity is correlated to interest rates, so that when rates move to lower levels, more rational prepayments occur: this stochastic framework allows for a wide variety of prepayment behaviours. Survival probability (i.e., the probability that no rational decision is taken up to time T, evaluated at time t) is:

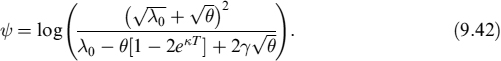

Functions A(t, T) and B(t, T) are given in equation (8.69). Parameter ψ is the market premium for prepayment risk and is assumed to be zero.⁹

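Exogenous prepayment is also modelled in reduced-form fashion by constant intensity ρ (which can actually be time dependent as well). In this case the survival probability, or the probability that no exogenous prepayment occurs up to time T, evaluated at time t, is:

$$SP_\rho(t,T) = e^{-\rho(T-t)}$$

Total survival probability (no prepayment for whatever reason occurs) is:

$$SP(t,T) = SP_\lambda(t,T)\,SP_\rho(t,T)$$

where $SP_\lambda(t,T)$ denotes the survival probability of the rational decision given above, whereas total prepayment probability is:

$$PP(t,T) = 1 - SP(t,T)$$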

Consider a mortgage with a coupon rate c expiring at time T. At each period, given current interest rates, the optimal prepayment strategy determines whether the mortgage holder should prepay and refinance at current rates. Loosely speaking, for a given coupon rate c, keeping transaction costs in mind, there is a critical interest rate level r* such that if rates are lower (rt < r*) then the mortgagee will optimally decide to prepay. If it is not optimal to refinance, any prepayment is for exogenous reasons; otherwise, the mortgagee may prepay either for interest rate related or for exogenous reasons.

In order to make the model more analytically tractable, we assume that both types of decisions¹⁰ may occur at any time, but the effects on prepayments by the rational decision are produced only when the rates are below critical levels. In other words, when a rational decision is taken and the rates are above critical level r*, no prepayment actually occurs and no cost is borne by the bank. For such a mortgage, the rational decision produces no effects and cannot be taken again in the future, since both rational and exogenous decisions may occur only once, and as soon as one of the two occurs the mortgage is prepaid.

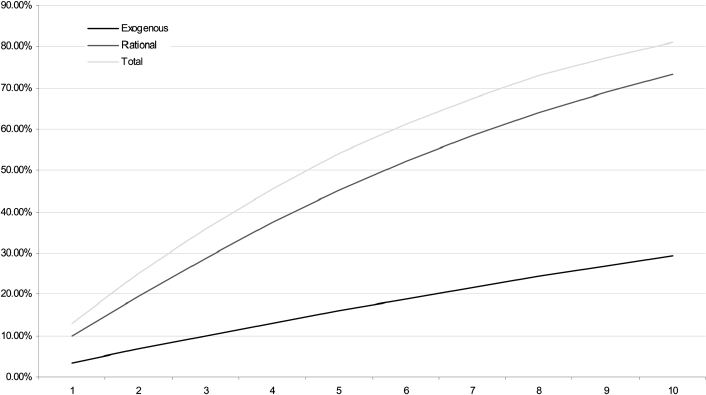

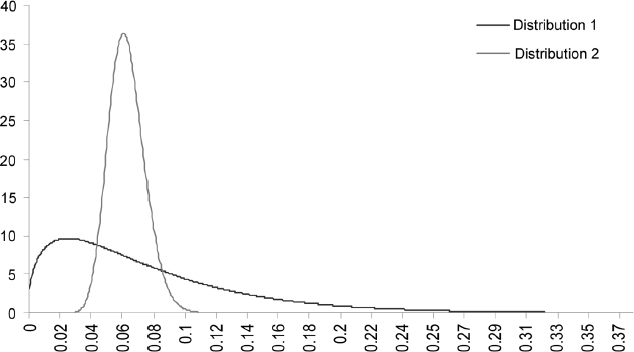

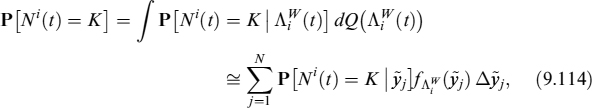

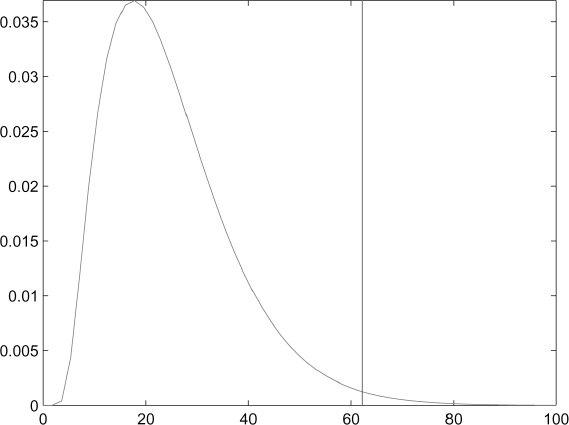

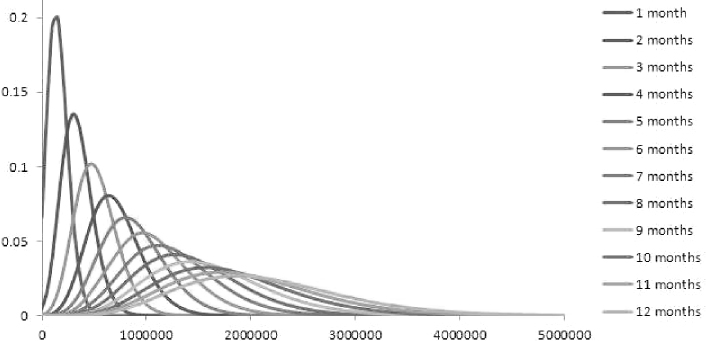

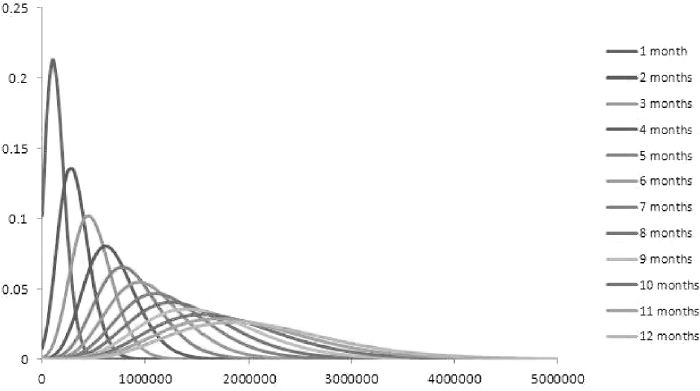

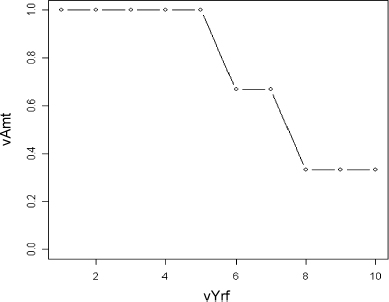

Figure 9.2. Rational and exogenous prepayment probabilities for a 10-year mortgage

Example 9.2.1. Figure 9.2 plots prepayment probabilities for different times up to (fixed rate) mortgage expiry, assumed to be in 10 years. The three curves refer to:

- exogenous prepayment, given by constant intensity ρ = 3.5%;

- rational (interest-driven) prepayment, produced assuming λ0 = 10.0%, κ = 27±%, θ = 50.0% and ν = 10.0%.

- total prepayment, when it is rational to prepay the mortgage (rt < r*).
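The three curves of Example 9.2.1 can be reproduced in outline with the short sketch below. It assumes that the rational intensity follows CIR dynamics with a zero risk premium, so that the survival probability has the usual affine closed form; the function names are illustrative, and the value used for κ is an assumption because the figure quoted in the example is not legible in this text.

```python
import math

def exogenous_survival(rho, tau):
    """Probability of no exogenous prepayment over tau years (constant intensity rho)."""
    return math.exp(-rho * tau)

def cir_survival(lam0, kappa, theta, nu, tau):
    """Probability of no rational prepayment decision over tau years,
    assuming CIR intensity dynamics and zero risk premium (affine formula)."""
    if tau == 0.0:
        return 1.0
    h = math.sqrt(kappa ** 2 + 2.0 * nu ** 2)
    e = math.exp(h * tau) - 1.0
    denom = 2.0 * h + (kappa + h) * e
    a = (2.0 * h * math.exp((kappa + h) * tau / 2.0) / denom) ** (2.0 * kappa * theta / nu ** 2)
    b = 2.0 * e / denom
    return a * math.exp(-b * lam0)

def total_prepayment_probability(rho, lam0, kappa, theta, nu, tau):
    """Total prepayment probability when it is rational to prepay: 1 - SP^R * SP^E."""
    return 1.0 - exogenous_survival(rho, tau) * cir_survival(lam0, kappa, theta, nu, tau)

# Parameters from Example 9.2.1; KAPPA is an assumed value (garbled in the source).
RHO, LAM0, THETA, NU = 0.035, 0.10, 0.50, 0.10
KAPPA = 0.27

for years in range(1, 11):
    pp_exo = 1.0 - exogenous_survival(RHO, years)
    pp_rat = 1.0 - cir_survival(LAM0, KAPPA, THETA, NU, years)
    pp_tot = total_prepayment_probability(RHO, LAM0, KAPPA, THETA, NU, years)
    print(years, round(pp_exo, 4), round(pp_rat, 4), round(pp_tot, 4))
```

The total curve lies above both individual curves at every horizon, since either event terminates the mortgage.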

9.2.6 Modelling the losses upon prepayment

Assume at time t0 the mortgage has the following contract terms:

- the mortgage notional is A0 = A and the mortgagee is not subject to credit risk;

- the mortgagee pays at predefined scheduled times tj, for j ∈ {0,1,…, b}, a fixed rate c computed on the outstanding residual capital at the beginning of the reference period Tj = tj − tj−1, denoted by Aj−1. The interest payment will then be cTjAj−1;

- on the same dates, besides interest the mortgagee also pays Ij, which is a portion of the outstanding capital, according to an amortization schedule;

- the expiry is time tb = T;

- the mortgagee has the option to end the contract by prepaying on payment dates tj the remaining residual capital Aj, together with the interest and capital payments as defined above. The decision to prepay, for whatever reason, can be taken at any time, although the actual prepayment occurs on scheduled payment dates.

The assumption that the interest, capital, and the prepayment dates are the same is easily relaxed.

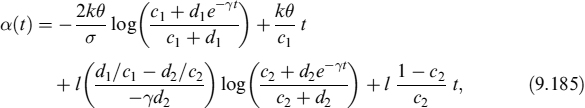

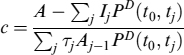

The fair coupon rate c can be computed by balancing the present value of future cash flows with the notional at time t0:

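$$A = \sum_{j=1}^{b} \left[ c\,T_j A_{j-1} + I_j \right] P^D(t_0, t_j)$$

which immediately leads to:

$$c = \frac{A - \sum_{j=1}^{b} I_j P^D(t_0, t_j)}{\sum_{j=1}^{b} T_j A_{j-1} P^D(t_0, t_j)} \tag{9.7}$$

where $P^D(t_0, t_j)$ is the discount factor at time t0 for date tj. It should be noted that the quantity $A - \sum_j I_j P^D(t_0, t_j)$ can be replaced by $\sum_j T_j A_{j-1} F_j(t_0) P^D(t_0, t_j)$,11 where $F_j(t_0)$ is the forward rate at time t0 starting at time tj.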
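As a minimal sketch of the fair coupon computation, the function below balances the notional against the present value of interest and capital payments. The function and argument names are illustrative; the flat 4% curve is an assumption chosen only because the result is then known in advance.

```python
def fair_coupon(notional, installments, year_fractions, discount_factors):
    """Fair fixed rate c such that the PV of interest and capital flows equals the notional.

    installments[j]     : capital repaid at the j-th payment date (I_j)
    year_fractions[j]   : accrual period ending at that date (T_j)
    discount_factors[j] : discount factor from t0 to that date
    """
    outstanding = notional          # A_{j-1}, capital at the start of the period
    pv_capital = 0.0                # sum of I_j * P(t0, t_j)
    annuity = 0.0                   # sum of T_j * A_{j-1} * P(t0, t_j)
    for inst, tau, df in zip(installments, year_fractions, discount_factors):
        annuity += tau * outstanding * df
        pv_capital += inst * df
        outstanding -= inst
    return (notional - pv_capital) / annuity

# Illustrative inputs: flat 4% annually compounded curve, 10 annual payments.
flat_rate = 0.04
dfs = [(1.0 + flat_rate) ** -j for j in range(1, 11)]
linear = [10.0] * 10                # linear amortization of a notional of 100
bullet = [0.0] * 9 + [100.0]        # capital repaid only at expiry

print(fair_coupon(100.0, linear, [1.0] * 10, dfs))
print(fair_coupon(100.0, bullet, [1.0] * 10, dfs))
```

On a flat, annually compounded curve both schedules return the curve rate itself, which is a convenient sanity check before moving to a market curve.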

Assume now that the mortgage is prepaid at a given time tk (for k ∈ {0,1,…, b}); its current value will be:

$$A^P = \sum_{j=k+1}^{b} \left[ c\,T_j A_{j-1} + I_j \right] P^D(t_k, t_j)$$

where AP will almost surely be different from the residual capital amount Ak−1, unless the forward rates implied in the term structure at time t0 actually occur in the market at time tk. The prepayment can be either rational or exogenous.

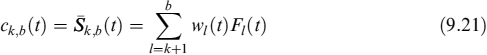

After prepayment, to hedge its liabilities the bank closes a new mortgage similar to the prepaid one, so that this new one replaces all previous capital payments and yields new interest rate payments as well. The fair rate ck,b(tk) of this new mortgage12 will be determined by market rates at time tk:

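$$c_{k,b}(t_k) = \frac{A_k - \sum_{j=k+1}^{b} I_j P^D(t_k, t_j)}{\sum_{j=k+1}^{b} T_j A_{j-1} P^D(t_k, t_j)}$$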

Hence, the bank will suffer a loss or earn a profit given by:

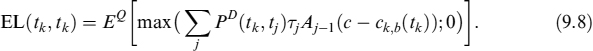

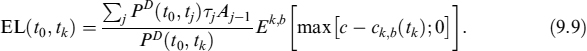

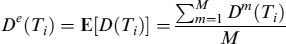

The bank is mainly interested in measuring (and managing) expected losses relating to the (rational) prepayment at times {tk}, which we indicate as expected loss (EL) evaluated at time t0:

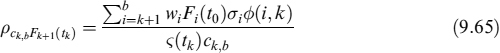

Equation (9.8) can be computed under the forward mortgage rate measure Qk,b (where Q is the real measure), associated with the rate ck,b(tk), as:

The numeraire under this measure is ∑jPD(tk, tj)TjAj−1.

EL is a function of the term structure of risk-free rates.13 We model risk-free rates in a market model framework:14 each forward rate is lognormally distributed, with a given volatility that can be estimated historically, or extracted from market quotes for caps and floors and swaptions:

$$dF_j(t) = \sigma_j F_j(t)\,dW_t$$

A prepayment that causes a loss for the bank can occur for either exogenous or rational reasons. Rational prepayment is described by the intensity λt, which we assume to be negatively correlated with the level of interest rates. There is also a contribution arising from exogenous prepayment decisions, which may occur under any market condition, thus generating either a loss or a profit for the bank. It is then possible to compute the expected loss on prepayment (ELoP), defined as the expected loss at time tk when the decision to prepay (for whatever reason) is taken between tk−1 and tk:

where 1T∈[tk−1, tk] is the indicator function equal to 1 when prepayment occurs within period of time [tk−1, tk]. Under the forward mortgage rate measure Qk,b we have

Valuation of the EL

From (9.8) the EL at time tk can easily be seen as the (undiscounted) value of a swaption written on a non-standard swap. A closed-form approximation for such contracts has been derived by Castagna et al. [49].

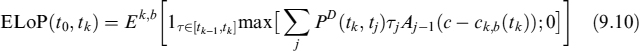

Let us start with a standard swaption (i.e., a swaption written on a standard swap). The fair swap rate at inception of the contract is:

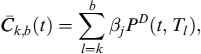

where τi is the year fraction between Ti−1 and Ti fixed rate payment dates, and PD(t, T) is the price of a zero-coupon bond at time t expiring at time T. The rate is derived by setting the value of the swap at the start of the contract at zero:

where Fi(t) is the forward risk-free rate

$$F_i(t) = \frac{1}{\tau_i} \left( \frac{P^D(t, T_{i-1})}{P^D(t, T_i)} - 1 \right)$$

We denote by Swpt(t, s, Tb, S(t; s, Tb), K,σs, Tb, ω) the value of a swaption at time t, expiring in s and struck at K, written on a forward swap rate S(t; s, Tb); this value is calculated by the standard market (Black) formula with implied volatility σs, Tb, and the last argument indicates whether the swaption is a payer (1) or a receiver (−1). The formula is:

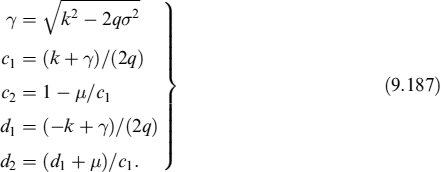

The formula can be found in Section 8.3.9. We have used the notation Ca,b(t) for an annuity that is equal to:

$$C_{a,b}(t) = \sum_{i=a+1}^{b} \tau_i P^D(t, T_i)$$

In the specific case of a non-amortizing mortgage of notional A, with fixed rate payments on dates {tk}, starting at tk and ending at Tb, the fair coupon rate can easily be shown to be equal to the fair swap rate, so ck, b(t0) = Sk,b(t0). This has to be compared with the original mortgage rate c (relating to a similar mortgage that started at t0, see equation (9.7)), so the expected loss on prepayment dates {tk} is:

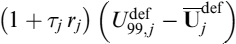
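The swaption valuation referred to above can be sketched with the standard Black formula. This is a minimal illustration, not the source's exact expression: the function names are assumptions, and the scaling by notional and annuity in the usage lines follows the general pattern "annuity times Black value" rather than a formula reproduced from the text. The loss arises when the new mortgage rate is below the contract rate, which corresponds to the receiver case (ω = −1) struck at c.

```python
import math

def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def black(forward, strike, vol, expiry, omega):
    """Undiscounted Black value; omega = +1 for a payer, -1 for a receiver."""
    std = vol * math.sqrt(expiry)
    if std <= 0.0:
        return max(omega * (forward - strike), 0.0)
    d1 = (math.log(forward / strike) + 0.5 * std ** 2) / std
    d2 = d1 - std
    return omega * (forward * norm_cdf(omega * d1) - strike * norm_cdf(omega * d2))

def swaption_value(annuity, forward, strike, vol, expiry, omega):
    """Swaption value as annuity times the Black value of the forward swap rate."""
    return annuity * black(forward, strike, vol, expiry, omega)

# Illustrative numbers (assumptions): forward mortgage rate 3.5%, contract rate 3.95%,
# volatility 20%, prepayment date in 3 years, annuity 5.0, notional 100.
notional = 100.0
el_sketch = notional * swaption_value(5.0, 0.035, 0.0395, 0.20, 3.0, -1)
print(el_sketch)
```

A payer minus a receiver with the same strike equals the annuity times (forward − strike), which gives a quick consistency check on any implementation.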

Typically mortgages are amortizing, so we need a formula to price non-standard swaptions. We use the term “meta-swap” for a swap with unit notional and a time-varying fixed rate that is equivalent to the contract fixed rate times the notional amount $A_{i-1}$ for each date (i.e., the one at the start of the calculation period).

Let us assume that the IRS floating leg pays at times Ta,…, Tb, where Ta is the first payment time after the EL time tk, and that the IRS fixed leg pays at times Tc1,…, TcJ, where c1 ≥ a and cJ = b (fixed leg times are assumed to be included in the set of floating leg times, and in reference to a mortgage they will be assumed to be the same for both legs).

The fixed rate payment at each payment date Tcj is:

where

and ![]() denotes the year fraction for the fixed leg.


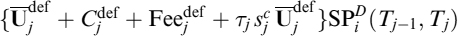

The floating leg will exchange the future risk-free (OIS) forward rate times αl, which is the year fraction times the notional $A_{l-1}$ at the beginning of the calculation period:

Note that despite the fact that the meta-swap has unit notional, both the total fixed rate and the fraction into which the year is divided contain the notional of the swap. Note also that the year fraction τi can be different for the floating and the fixed leg. When fixed rate mortgage c amortizes with an amortization schedule ![]() , the expected loss on prepayment dates tk can be calculated as follows:


where

is the DV01 of the forward (start date ti) meta-swap. In case ![]() , the EL can be approximated with the (positive) value of the underlying forward swap (mortgage rate).


Define:

We then have:

which is the forward swap rate of the meta-swap and the forward fair amortizing mortgage rate. In a standard swap the forward swap rate is the average of the OIS forward rates Fl weighted by a function of the discount factors. In the case of the meta-swap the average of the OIS forward rates is weighted by a function of the notional and discount factors. We assume that ck,b(tk) is lognormally distributed, with the mean equal to its forward value. The volatility of the meta-swap rate, or the amortizing mortgage rate, can be approximated by widely adopted “freezing” of the weights in (9.20), so that by setting ![]() we get:


which is the volatility of the forward rate of the meta-swap assuming that the volatility of OIS forward rates, σ, is constant through time and that φ(l, m) is the correlation between Fl(0) and Fm(0).
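The "freezing" approximation can be illustrated with the sketch below. It assumes that fixed and floating leg dates coincide (as the text assumes for a mortgage), that the weight of each forward is its notional-scaled year fraction times the discount factor divided by the meta-swap DV01, and that each forward has its own constant volatility. The function name and the exact weight definition are assumptions made for illustration, since the source equations are not reproduced here.

```python
import math

def meta_swap_rate_and_vol(forwards, vols, corr, alphas, betas, discount_factors):
    """Forward meta-swap rate and its approximate lognormal volatility.

    alphas[l] : floating-leg year fraction times notional at the start of period l
    betas[l]  : fixed-leg year fraction times notional at the start of period l
    corr      : correlation matrix phi(l, m) between forward rates
    Weights are frozen at their time-0 values.
    """
    dv01 = sum(b * p for b, p in zip(betas, discount_factors))
    weights = [a * p / dv01 for a, p in zip(alphas, discount_factors)]
    rate = sum(w * f for w, f in zip(weights, forwards))
    n = len(forwards)
    variance = 0.0
    for l in range(n):
        for m in range(n):
            variance += (weights[l] * weights[m] * forwards[l] * forwards[m]
                         * corr[l][m] * vols[l] * vols[m])
    return rate, math.sqrt(variance) / rate

# Illustrative inputs: annual periods, notional amortizing linearly from 100.
notionals = [100.0 - 10.0 * i for i in range(10)]
alphas = [1.0 * a for a in notionals]
betas = list(alphas)
fwds = [0.03] * 10
sigmas = [0.20] * 10
dfs = [1.03 ** -(i + 1) for i in range(10)]
full_corr = [[1.0] * 10 for _ in range(10)]

print(meta_swap_rate_and_vol(fwds, sigmas, full_corr, alphas, betas, dfs))
```

With identical forwards, identical volatilities and perfect correlation the function returns the common forward and the common volatility; lowering the off-diagonal correlations reduces the resulting mortgage rate volatility.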

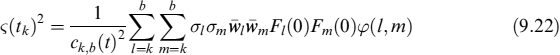

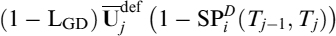

Adding mortgagee credit risk

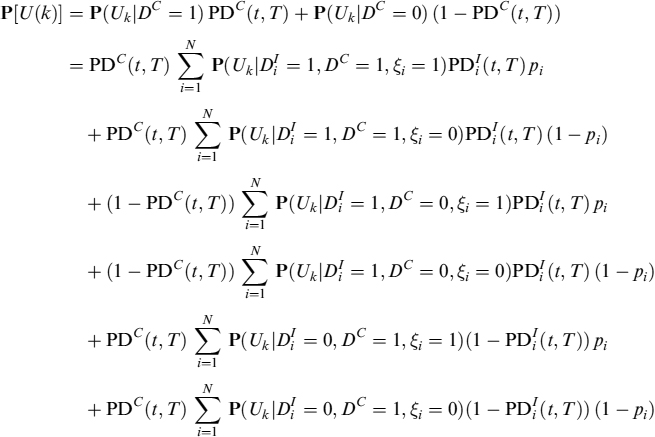

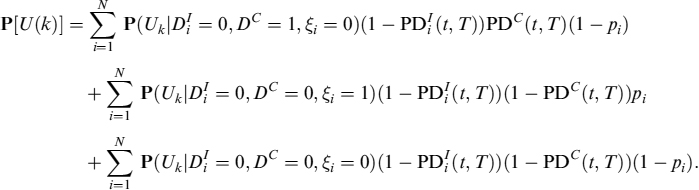

Assume that the default probability for the mortgagee between time t and T is PD(t, T) and that the loss-given default is a percentage of the outstanding capital equal to LGD, which is equivalent to (1 − Rec) with Rec being the recovery rate.

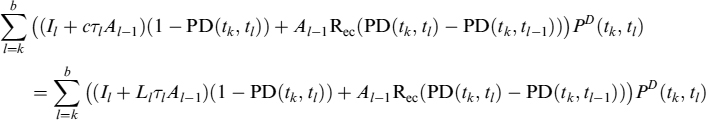

It is relatively easy to infer a default-risk-adjusted fair mortgage rate ![]() . In fact, considering again that the mortgage rate is equivalent to the rate of a swap that perfectly matches cash flows and pays the Libor rate against receiving the fixed rate, the fair mortgage rate is derived by setting the floating leg equal to the fixed leg, this time taking into account that expected cash flows depend on the occurrence of default:

where Il is the capital installment paid at time tl. Simplifying we get:

and

Typically, mortgages are quoted at spread Sp over a reference curve (say, Libor): the problem is now how to infer the PD from this information. It is possible to show15 that the (assumed constant) default intensity γ(t) = γ of a given reference entity can be extracted from the spread at time t0 by means of the following approximation:

$$\gamma = \frac{Sp_{0,b}(0)}{\mathrm{LGD}} \tag{9.24}$$

where Sp0,b(0) is the spread for a mortgage starting at time 0 expiring in Tb.

Formula (9.24), besides being quite simple and intuitive, is extremely convenient since it does not require knowledge of discount factors (to be extracted from the interest rate curve). One just needs the spread and an assumption on the LGD. It is well known that the approximation works rather well even when the default intensity is far from being constant.

The survival probability of the credit entity can then be approximated in a straightforward manner:

$$SP(0, T_b) = e^{-\gamma T_b} = \exp\left( -\frac{Sp_{0,b}(0)}{\mathrm{LGD}}\, T_b \right)$$

We can then infer, at each given date, an entire term structure of SPs from the spreads for mortgages with different expiries Tb. Even if the γ values for two maturities Tb and Tb′ are likely to be different, this does not create any inconsistency, since such γs must be viewed as average values over their respective intervals rather than constant (instantaneous) intensities.

Default probabilities are simply:

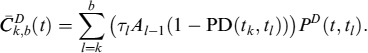
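The chain from spread to default probability can be sketched in a few lines. The sketch assumes the usual reading of the approximation described above, namely that the constant intensity is the spread divided by the LGD; the function names and the numerical inputs are illustrative.

```python
import math

def default_intensity(spread, lgd):
    """Constant default intensity implied by a spread and a loss-given-default."""
    return spread / lgd

def survival_probability(spread, lgd, maturity):
    """Survival probability up to maturity under a constant intensity."""
    return math.exp(-default_intensity(spread, lgd) * maturity)

def default_probability(spread, lgd, maturity):
    """Default probability up to maturity, as the complement of survival."""
    return 1.0 - survival_probability(spread, lgd, maturity)

# Illustrative inputs (assumptions): spreads by expiry in years, LGD of 60%.
spreads = {5: 0.0120, 10: 0.0150}
for expiry, sp in spreads.items():
    print(expiry, default_intensity(sp, 0.60), default_probability(sp, 0.60, expiry))
```

Each expiry yields its own intensity, to be read as an average over the corresponding interval, so a term structure of survival probabilities is obtained by repeating the calculation across expiries.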

Valuation of the ELoP

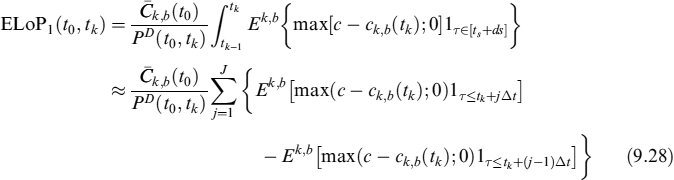

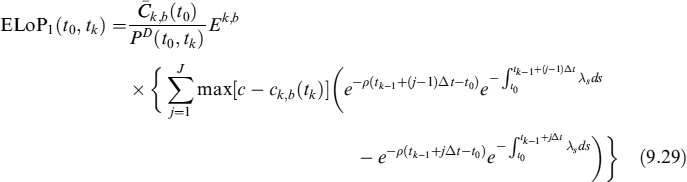

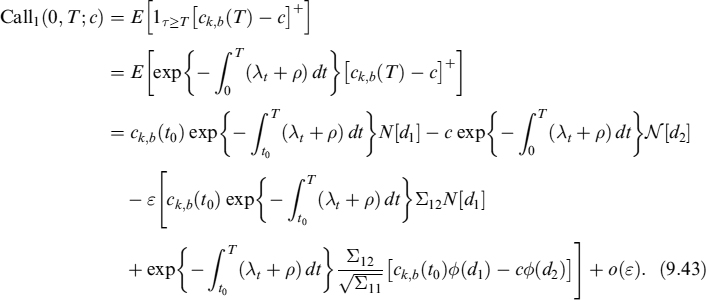

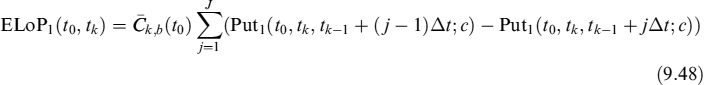

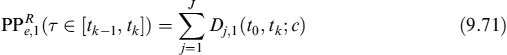

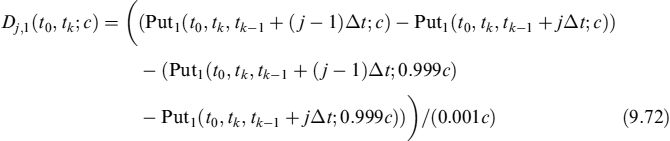

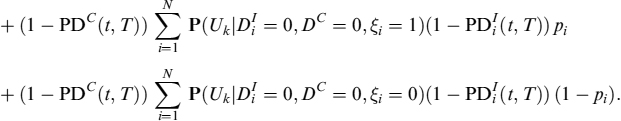

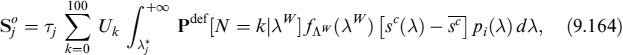

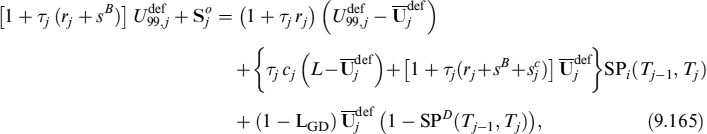

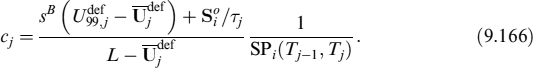

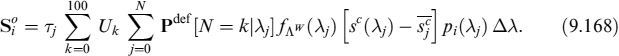

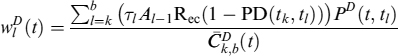

Having derived valuation formulae for the EL, it is straightforward to value the ELoP, for prepayments in {tk}. We indicate this expected loss on prepayment as ELoP1, since we will afterward introduce a second type of rational prepayment. In the most general formulation, it is:

![]()

Equation (9.27) is the most general form to value the ELoP1 and includes both the amortizing and non-amortizing cases we have shown above. So we will focus on solving this equation. We move to the forward mortgage rate ck, b(tk) measure, so that:

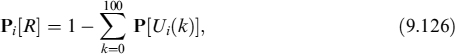

The first simplification we make is to assume that the prepayment decision (whose effects manifest themselves at the next payment date in any event) occurs at discrete times within [tk−1, tk], which for our purposes is divided into J intervals of length $\Delta t = (t_k - t_{k-1})/J$, so that we can write (by applying Fubini's theorem as well):

or equivalently

More explicitly we have:

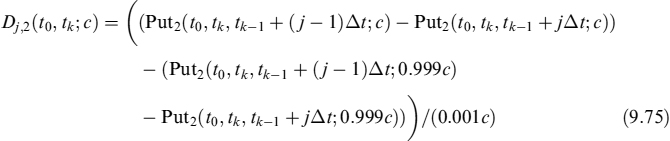

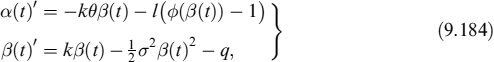

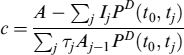

9.2.7 Analytical approximation for ELoP1

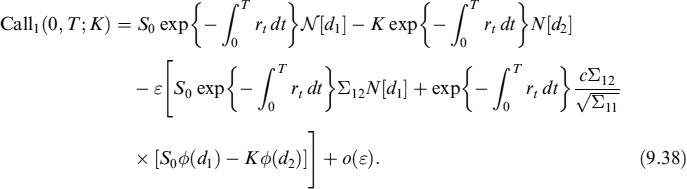

Equation (9.29) does not admit an explicit analytical solution, but an analytical approximation is viable. We start from a more general case of the pricing of an option when the instantaneous interest rate is correlated with the underlying asset.16 Let us focus first on valuing a payoff of the kind:

where St is an exponential martingale:

with solution S(t0) = S0 equal to:

where σt is a deterministic function of t. Assume also that the stochastic interest rate ![]() is described by the dynamics:


with 0 ≤ ε ≤ 1. We assume that dW1t and dW2t are correlated with the correlation parameter ![]() .


When the instantaneous interest rate follows CIR dynamics, we have that ![]() is


with expected value at time t:

We then get

and the underlying asset's dynamics is

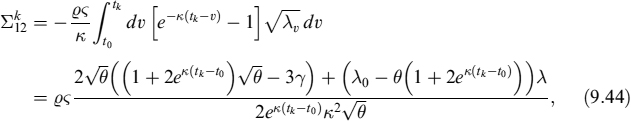

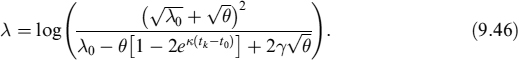

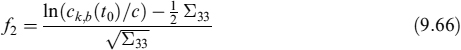

Under this specification, it can be shown17 that:

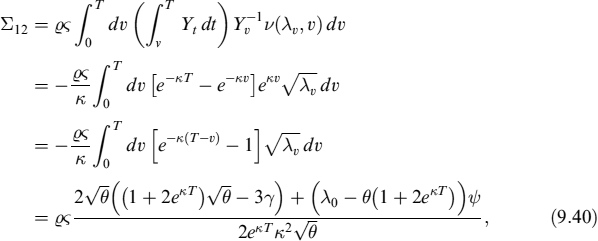

which can be seen as a standard BS formula plus a correction factor due to the correlation between the interest rate and the underlying asset. Terms Σ11 and Σ12 are:

where

and

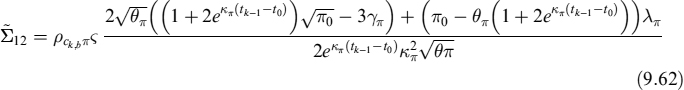

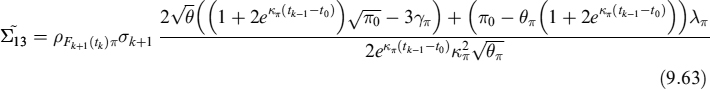

Now, if we set St = ck,b(t) and we consider the interest rate as the stochastic prepayment intensity λt and include the exogenous rate ρ as well, we can rewrite (9.38):

where the remaining notation is the same as above.

In order to value (9.29), we first need to compute the expected loss over the entire interval [tk−1, tk]. To that end we consider the loss to be given by the terminal payoff, in terms of a put option and not a call. In this case the intensity process λt is correlated only up to each tk−1 + jΔt, and not over the entire interval. So we have to modify ![]() as follows:


and

The call option price is then:

Basically, it provides the value of a call option subject to survival of the underlying process ck,b(t0) up to tk.

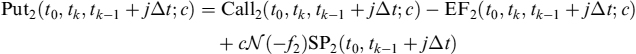

We also need to derive the put value via put–call parity:

where EF1 is the expected value ck,b(t0) in case a prepayment does not occur before tk. It can be computed as a call option struck at 0: EF1(t0, T, tk) = Call1(t0, T, tk; 0). The prepayment probability PP is calculated as in equation (9.6).

We now have all we need to compute (9.29), which can be rewritten as follows:

9.2.8 Valuing the ELoP using a VaR approach

Intensity λ cannot easily be hedged using market instruments, since no standard contract exists whose value depends on rational prepayment intensity. A possible conservative approach to valuation of the ELoP would be to consider an intensity process occurring at a high level with a given confidence level (say, 99%).

For our purposes, we need to use this distribution to determine the minimum survival probability from t0 up to each date {tk}, or equivalently the maximum prepayment probability up to {tk}. But what we actually need is the forward risk-adjusted distribution for λt, which is given in equation (8.36).

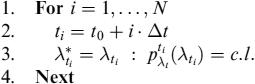

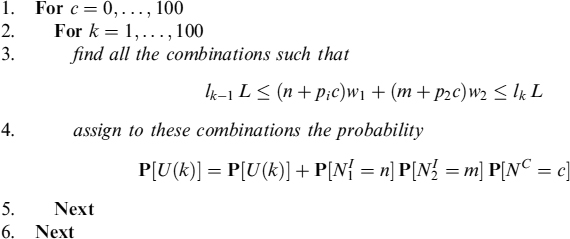

Assume we want to build an expected survival probability curve up to expiry of the mortgage in tN = T. Assume also that we divide the interval [t0, tN] into N subintervals Δt = [tN − t0]/N. We follow Procedure 9.2.1 which is given in pseudocode.

Procedure 9.2.1. This procedure derives the maximum expected levels of prepayment intensity ![]() , at discrete prepayment dates, with a confidence level (c.l.), say, of 99%:


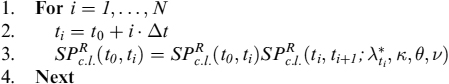

Having determined the maximum prepayment intensity levels, we can compute the term structure of (minimum) survival probabilities SPR(0, ti):

Having determined the minimum rational survival probability, the maximum (total) prepayment probability up to given time {ti} is straightforward:

for i = 1, …, N.

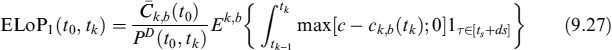

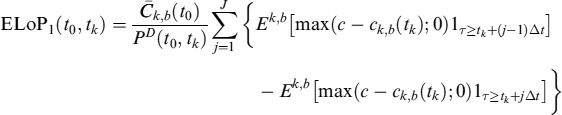

To evaluate the ELoP using a VaR-style approach we need to compute the conditional mean (drift) and conditional volatility of the mortgage rate process as well:18 we know that it is assumed to be lognormally distributed with the mean equal to its forward level (so that the drift of the process of ci, b is zero) and with the volatility parameter the same as in (9.22). Conditional volatility for a mortgage rate at time T, and for a rational intensity process observed in ti ≤ T, is:

where

![]()

The drift of the process is:

with initial condition ![]() and


![]()

The ELoP can thus be computed with formula (9.48) by using the adjusted forward mortgage rate

![]()

and volatility parameter ![]() .


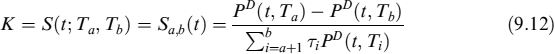

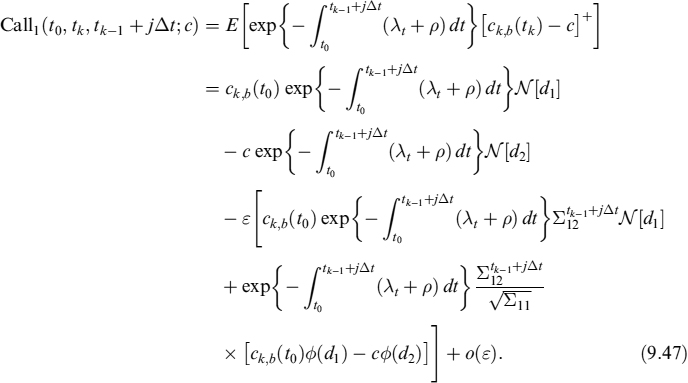

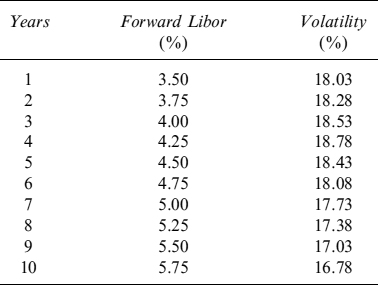

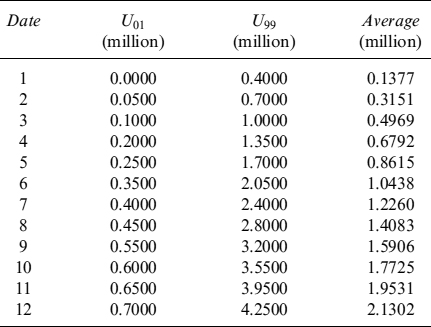

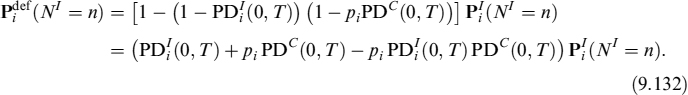

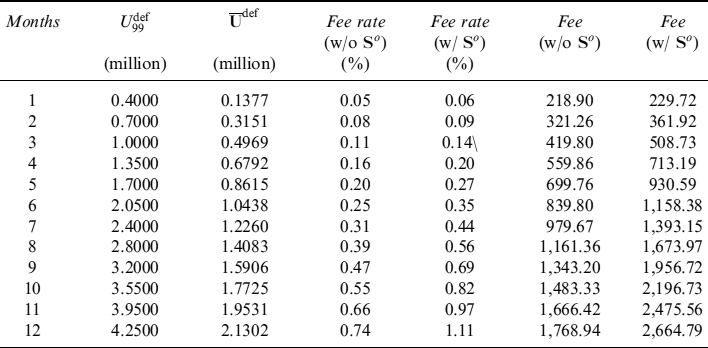

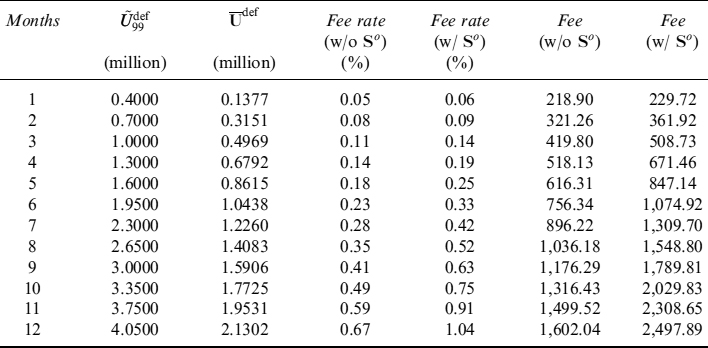

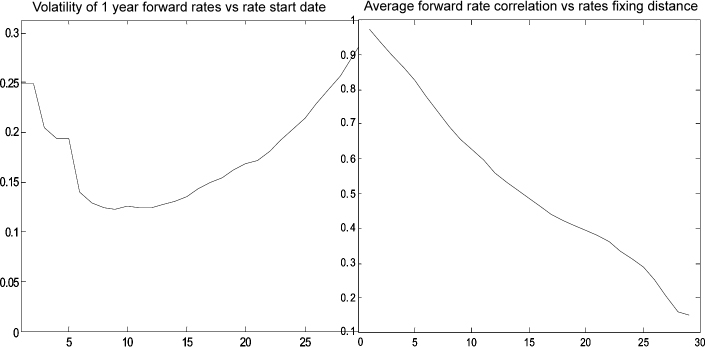

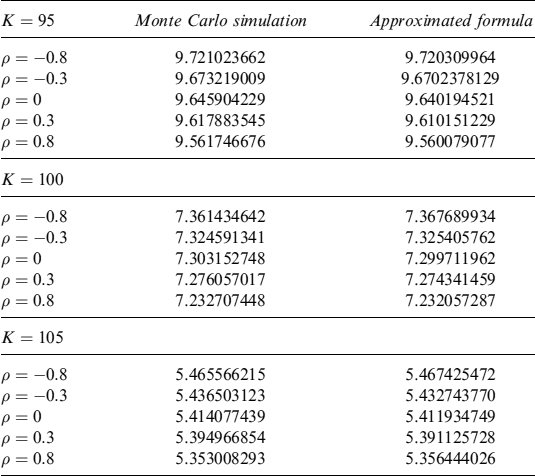

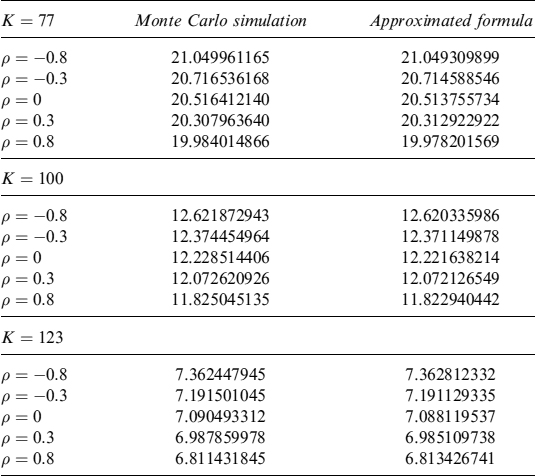

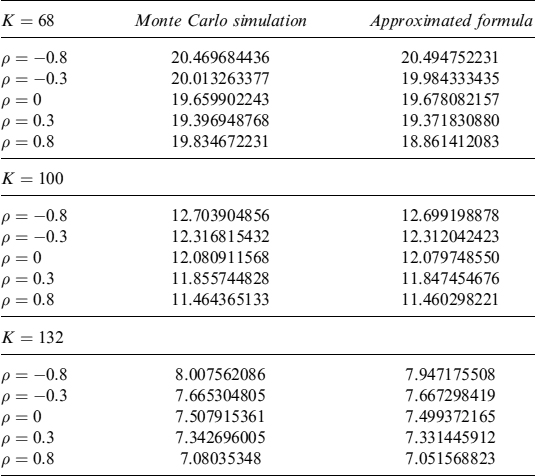

Example 9.2.2. Assume 1Y (risk-free) OIS forward rates with volatilities like those in Table 9.10. Assume further that exogenous prepayment intensity is 3% p.a. and rational prepayment intensity has the same dynamics parameters as presented above. We consider a 10Y mortgage, with a fixed rate paid annually of 3.95%. The fair rate has been computed without taking into account any prepayment effect (credit risk is not considered, although it can be included within the framework). The amortization schedule is in Table 9.11.

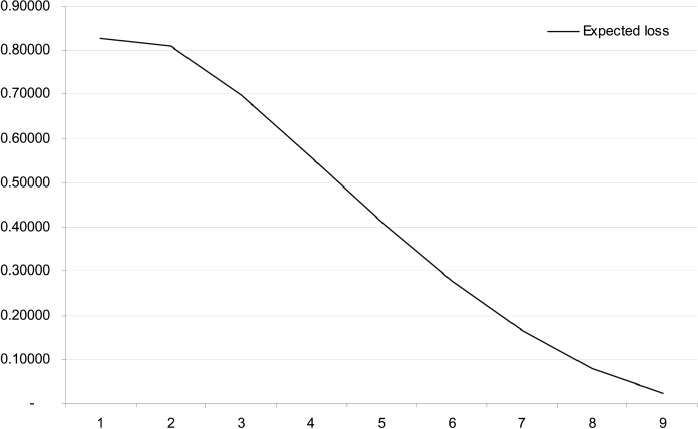

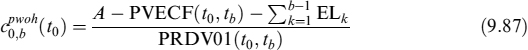

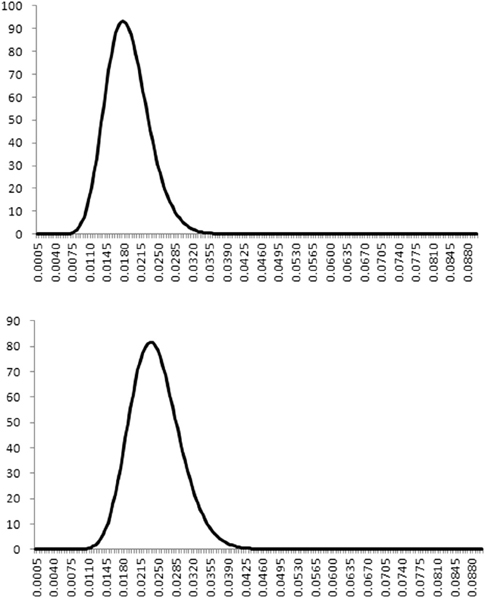

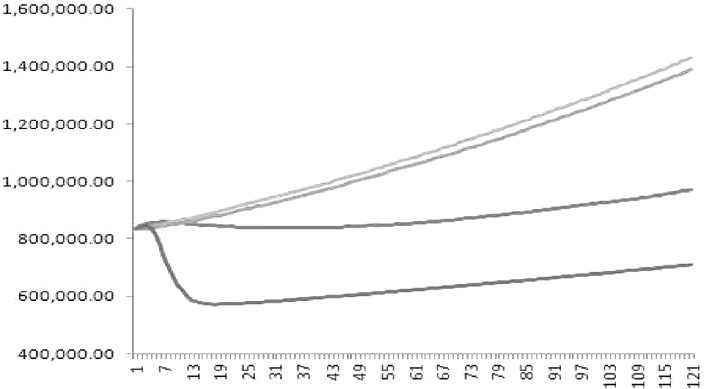

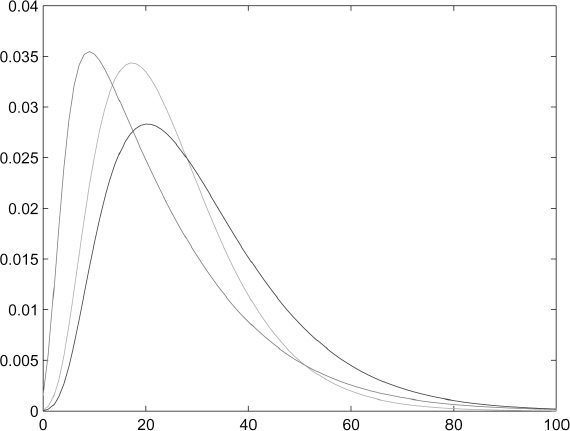

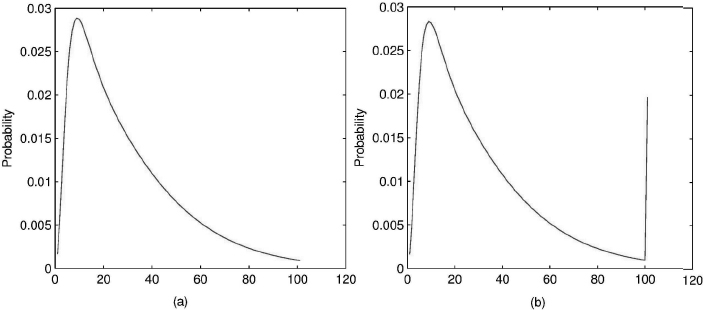

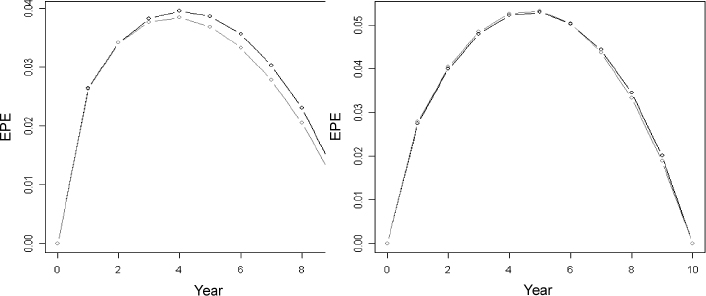

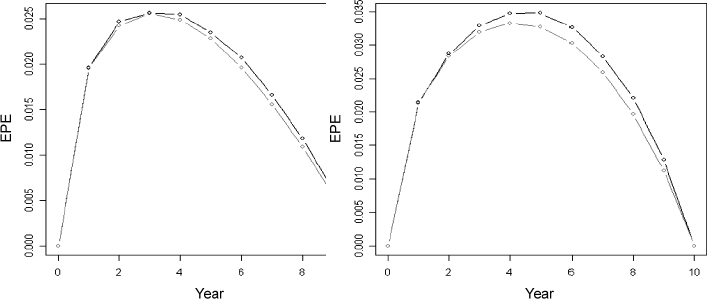

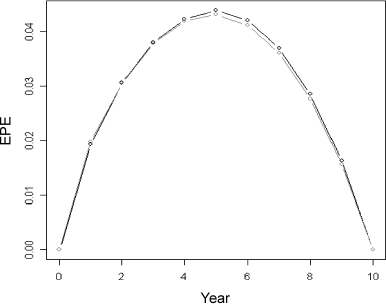

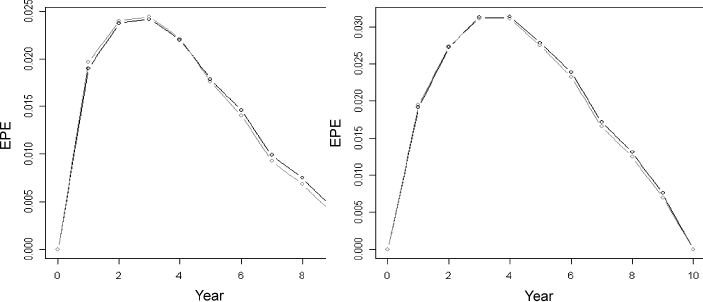

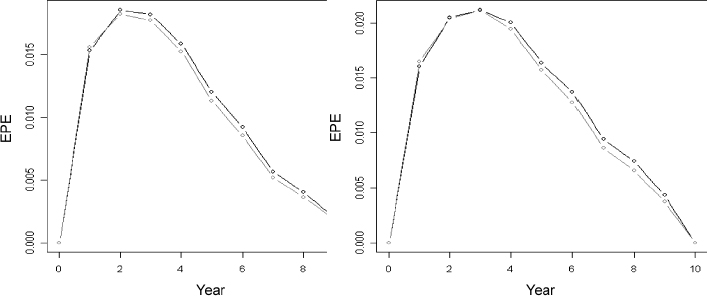

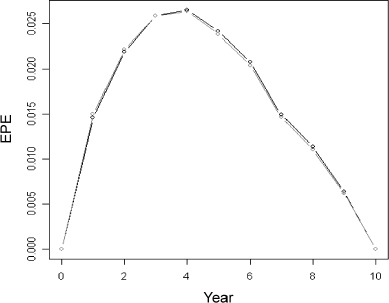

Given the market and contract data above, we can derive the EL at each possible prepayment date, which we assume occurs annually. It is plotted in Figure 9.3. A closed-form approximation has been employed to compute the EL. In a similar way it is possible to calculate the ELoP. We also use in this case an analytical approximation that allows for correlation between interest rates and rational prepayment intensity.

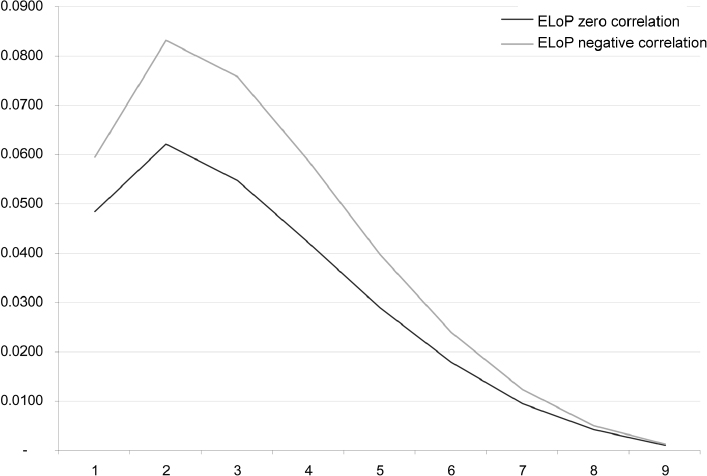

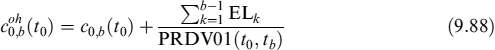

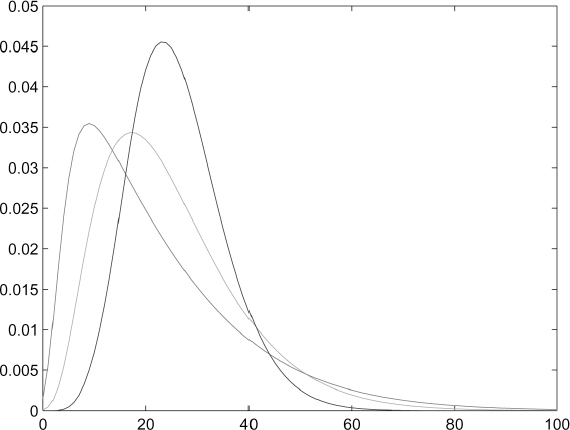

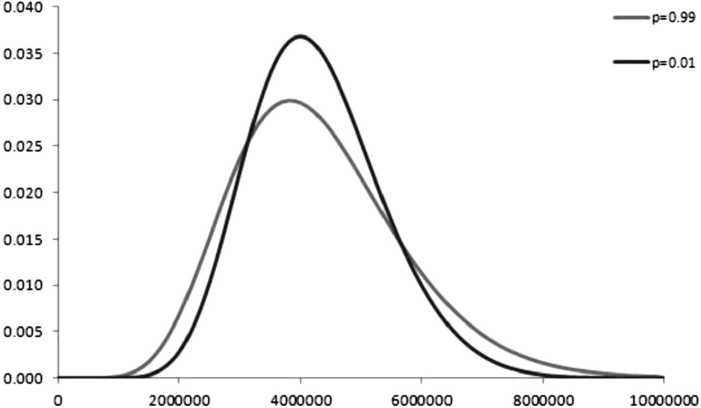

In Figure 9.4 the ELoP is plotted for a zero correlation case and for a negative correlation set at −0.8. This value implies that, when interest rates decline, the rational prepayment intensity increases. Since the loss for the bank is bigger when rates are low, the ELoP in this case is higher than in the uncorrelated case.

Table 9.10. Volatilities of 1Y OIS forward rates

Table 9.11. Amortization schedule of a 10Y mortgage

| Years | Notional |
| --- | --- |
| 1 | 100.00 |
| 2 | 90.00 |
| 3 | 80.00 |
| 4 | 70.00 |
| 5 | 60.00 |
| 6 | 50.00 |
| 7 | 40.00 |
| 8 | 30.00 |
| 9 | 20.00 |
| 10 | 10.00 |

Figure 9.3. Expected loss for a 10-year mortgage

Figure 9.4. Expected loss on prepayment for a 10-year mortgage

9.2.9 Extension to double rational prepayment

The framework designed so far implicitly assumes that rational prepayment is driven by the convenience of closing a live fixed rate mortgage and opening a new one with a corresponding residual maturity and a lower contract rate. An alternative the mortgagee could pursue is to open a new mortgage with a floating rate. From a theoretical financial perspective the two choices are equivalent; from a behavioural perspective, however, poor financial skills may lead the mortgagee to compare the floating rate (e.g., the 3-month Libor) with the original fixed rate and find a “rational” reason to prepay.

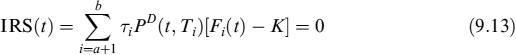

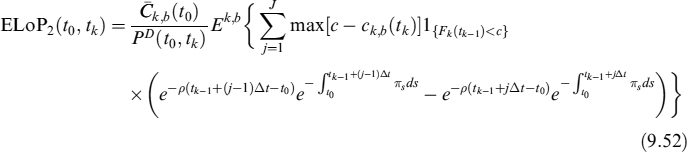

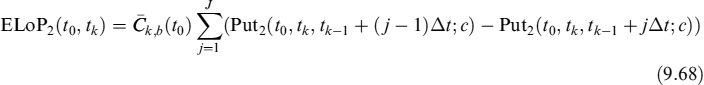

To model this behaviour, assume that the prepayment decision modelled before resembles a jump whose occurrence is described by an intensity rate π. The decision can be taken at any time, but it produces effects only when the Libor rate is lower than the contract rate Lt < c. The EL is the same as that subsequent to a rational prepayment, whereas the ELoP, which we indicate by ELoP2 in this case, can be written as:

where we have kept in mind the effects of exogenous prepayment, which operates in any case.

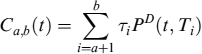

The dynamics of rational intensity π, which only manifests its effects when the Libor rate Fk+1(tk) < c, are specified as follows:

![]()

According to this intensity, the probability that no rational decision is taken up to time T, evaluated at time t, is:

where A(t, T) and B(t, T) are the same as in equation (8.27). ψπ is the market premium for prepayment risk, which is also assumed to be 0 in this case. We indicate the survival probability in this case as:

and the total prepayment probability is:

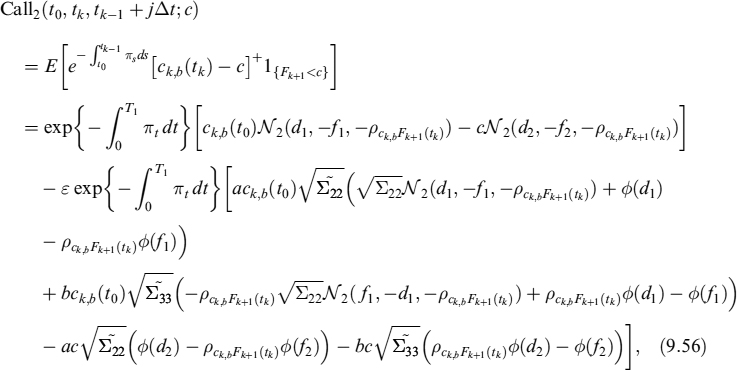

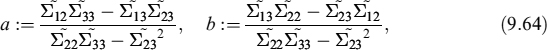

To value (9.52) we first need to compute the following:19

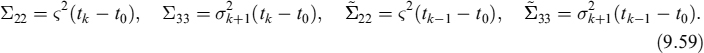

where N2(.;.;.) is the bivariate normal distribution, and:

and

Furthermore

The other two covariances are more difficult to solve explicitly, but we can find an analytical expression for both of them in any case. We define

and

Hence the two covariances can be expressed as

and

We denote by ρck,bπ the correlation between the mortgage rate at time tk and the intensity π, by ρFk+1(tk)π the correlation between the Libor rate fixing at time tk and the intensity π and, finally, by ρck,bFk+1(tk) the correlation between the mortgage rate and Libor at time tk. The last quantity can be derived by means of the formula:

where the notation is the same as that used above. We use the well-known procedure of freezing Libor rates at the level prevailing at time t0.

We can derive the put via put–call parity:

The quantity EL2(t0, tk, tk−1 + jΔt; c) is equal to ![]() and can be computed as the price of Call2(t0, tk, tk−1 + jΔt; 0), forcing the following quantities in formula (9.56) (although the strike c = 0) to be:


and

Finally, we are able to compute the expected loss on prepayment

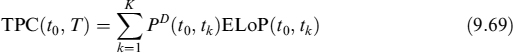

Total ELoP of this extended version of the model is the sum of ELoP1 in (9.48) and ELoP2 in (9.68) (ELoP = ELoP1 + ELoP2). This will also be the ELoP used to compute total prepayment cost, which we define in the following section.

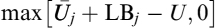

9.2.10 Total prepayment cost

The ELoP is a tool to measure expected losses a bank will suffer upon prepayment. For hedging and pricing purposes, though, it is more useful to compute total prepayment cost (TPC), defined as the sum of the present values of ELoP(t0, tk) for all possible K prepayment dates in the interval [t0, tb = T]:

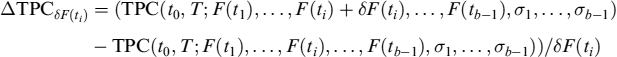

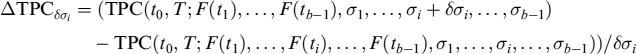

TPC can be hedged, since it is a function of Libor forward rates and related volatilities entering in the mortgage rate ck,b(t0): TPC(t0, T)→TPC(t0, T; F(t1), …, F(tb−1), σ1,…, σb−1). As for its sensitivity to interest rates, we can bump each forward rate separately by a given amount (say, 10 bps) and then calculate the change in TPC. Denoting by ΔδF(ti) TPC the sensitivity of TPC with respect to the bump δF(ti) of the forward F(ti), we have that:

In an analogous fashion we can compute its sensitivity to volatilities:

These sensitivities can easily be converted into hedging quantities of liquid market instruments, such as swaps and caps and floors.
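The bump-and-revalue procedure described above can be sketched generically. The sketch takes any TPC function of forwards and volatilities as an input; `toy_tpc` is a hypothetical stand-in used only to make the example runnable and is not the model's valuation formula.

```python
def forward_sensitivities(tpc_fn, forwards, vols, bump=0.0010):
    """Change in TPC when each forward rate is bumped separately (default 10 bps)."""
    base = tpc_fn(forwards, vols)
    changes = []
    for i in range(len(forwards)):
        bumped = list(forwards)
        bumped[i] += bump
        changes.append(tpc_fn(bumped, vols) - base)
    return changes

def vega_sensitivities(tpc_fn, forwards, vols, bump=0.01):
    """Change in TPC when each forward rate volatility is bumped separately."""
    base = tpc_fn(forwards, vols)
    changes = []
    for i in range(len(vols)):
        bumped = list(vols)
        bumped[i] += bump
        changes.append(tpc_fn(forwards, bumped) - base)
    return changes

def toy_tpc(forwards, vols):
    """Hypothetical placeholder: decreasing in rates, increasing in volatilities."""
    return sum(-(i + 1) * f for i, f in enumerate(forwards)) + 2.0 * sum(vols)

fwds = [0.02, 0.025, 0.03]
sigmas = [0.20, 0.22, 0.25]
print(forward_sensitivities(toy_tpc, fwds, sigmas))
print(vega_sensitivities(toy_tpc, fwds, sigmas))
```

Bumping one input at a time keeps each sensitivity attributable to a single forward or volatility, which is what allows it to be mapped onto a swap or a cap/floor of the matching expiry.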

9.2.11 Expected cash flows

The model can also be employed to project expected cash flows, taking into account the prepayment effect. More specifically, as already stated, the rational prepayment decision may occur at any time, but its actual effects, both in terms of early unwinding of the contract and of costs for the bank, manifest themselves only when the forward mortgage rate is lower than the contract rate, ck,b(tk) < c. In the previous section we considered this condition, since we only calculated the P&L effects when ck,b(tk) < c. Actually, the bank always suffers a loss in this case.

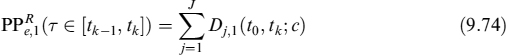

When projecting expected cash flows, the probability of an anticipated inflow of the residual notional at a given time tk has to be computed as follows:

where ![]() is the survival probability jointly with condition ck, b(tk) < c, which we name “effective”. The latter can be calculated by exploiting the approach described above for the ELoP: so we start with the effective rational prepayment probability in the interval [tk−1, tk]. Assume we divide this into J subintervals Δt


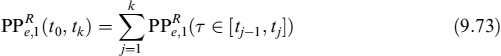

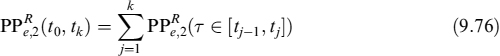

The notation and pricing formulae for the put options are the same as above. In practice, we calculate the price of a digital option in case it terminates before expiry with a probability determined by rational prepayment intensity λt. The effective prepayment probability from t0 up to a given time tk can be obtained by summing all the probabilities relating to prepayment times occurring before tk and including the latter as well:

This quantity is used in (9.70) since ![]() .


The same can also be done if a second type of rational prepayment is introduced, so that:

where

and

Total survival probability if we keep this additional rational prepayment in mind would be ![]() .


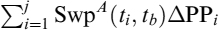

Expected total cash flow (interest + capital) at time t0 for each scheduled payment time20 {tj = tk} is given by the formula:

The expected outstanding amount at each time is given by:

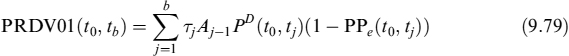

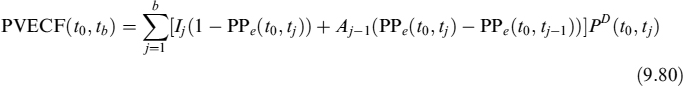
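A minimal sketch of the cash flow projection follows. It is an illustration under a stated timing assumption taken from the contract terms of Section 9.2.6: on a payment date the mortgagee pays the scheduled interest and capital if the mortgage has survived to the previous date, and in addition repays the remaining residual capital if prepayment becomes effective on that date. The function name and the survival probabilities used in the example are assumptions.

```python
def expected_cash_flows(coupon, notional, installments, year_fractions, survival):
    """Expected total cash flows and expected outstanding amounts at each payment date.

    survival[k] is the probability that no effective prepayment has occurred
    up to and including the k-th payment date (survival at t0 is 1).
    """
    flows, expected_outstanding = [], []
    outstanding = notional      # A_{k-1}
    prev_sp = 1.0
    for inst, tau, sp in zip(installments, year_fractions, survival):
        interest = coupon * tau * outstanding
        residual = outstanding - inst                 # A_k after the scheduled instalment
        scheduled = prev_sp * (interest + inst)       # paid if alive at the previous date
        prepaid = (prev_sp - sp) * residual           # residual repaid on prepayment
        flows.append(scheduled + prepaid)
        expected_outstanding.append(sp * residual)
        outstanding = residual
        prev_sp = sp
    return flows, expected_outstanding

# Linear amortization of a notional of 100 over 10 years, 3.95% coupon,
# and an illustrative survival curve (assumed, not taken from the model).
survival_curve = [0.95 ** k for k in range(1, 11)]
cf, out = expected_cash_flows(0.0395, 100.0, [10.0] * 10, [1.0] * 10, survival_curve)
print([round(x, 2) for x in cf])
print([round(x, 2) for x in out])
```

Whatever survival curve is used, the expected capital flows add up to the notional, since prepayment only moves capital repayment forward in time.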

It will be useful to define the prepayment-risky annuity, PRDV01:

and the present value of the sum of expected capital cash flows PVECF:

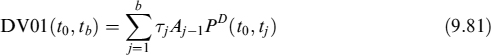

Both quantities can be computed with respect to standard prepayment probabilities or those derived by means of a VaR approach. Moreover, both can be compared with equivalent quantities when no prepayment risk is considered. Hence we have the DV01:

and the present value of the sum of the contract capital cash flows CF:

9.2.12 Mortgage pricing including prepayment costs

The fair rate of a mortgage at inception has to take into account two effects of prepayment. The first effect is due to the fact that prepayment is equivalent to accelerated amortization, so that the bank receives the amount lent to the mortgagee earlier than expected: this lowers the fair mortgage rate. This effect is gauged by weighting future cash flows with prepayment probabilities. The second effect is the cost that the bank bears when prepayment occurs and the mortgage can be replaced in the bank's assets only at a rate lower than the original one: we have measured this cost using the TPC.

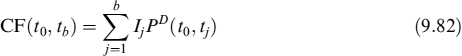

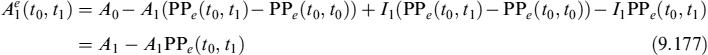

Let us start with the fair rate at time t0 of a mortgage with notional A starting at t0, ending at T = tb, with a predefined amortization schedule:

This formula does not include any effect due to prepayment. We can include the first effect mentioned above by replacing the DV01 and the present value of the contract's capital cash flows by their expected value:

where the superscript pw stands for prepayment weighted. The effect of anticipating the amortization implies that ![]() . To calculate a full risk fair rate that also includes the cost stemming from prepayment, formula (9.84) modifies as follows:


Equation (9.85) acknowledges to the mortgagee both the benefits and costs for the bank in case of prepayment. A more conservative approach would be to include the TPC computed with a VaR approach, instead of the standard approach. An alternative would be to charge the TPC, split over the expected life of the contract, over the fair rate with no prepayment risk:

This rate can be considered the standard fair rate including prepayment cost but not the first prepayment effect (accelerated amortization).
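The pricing logic above can be sketched as follows. This is an assumption-laden illustration rather than the source's formulae: it takes the prepayment-weighted rate to be the notional minus the present value of expected capital flows, divided by a prepayment-risky annuity, and the full-risk rate to be the same expression with the TPC (expressed in the same units as the notional) added to the numerator. The survival curve, the TPC value and the function name are illustrative.

```python
def prepayment_adjusted_rate(notional, installments, year_fractions,
                             discount_factors, survival, tpc=0.0):
    """Fair rate with cash flows weighted by survival probabilities, plus an optional TPC.

    survival[k] is the probability of no effective prepayment up to and including
    the k-th payment date; tpc is an amount in the same units as notional.
    """
    outstanding = notional
    prev_sp = 1.0
    risky_annuity = 0.0     # prepayment-risky annuity
    pv_exp_capital = 0.0    # PV of expected capital cash flows
    for inst, tau, df, sp in zip(installments, year_fractions,
                                 discount_factors, survival):
        residual = outstanding - inst
        risky_annuity += prev_sp * tau * outstanding * df
        pv_exp_capital += (prev_sp * inst + (prev_sp - sp) * residual) * df
        outstanding = residual
        prev_sp = sp
    return (notional - pv_exp_capital + tpc) / risky_annuity

dfs = [1.04 ** -j for j in range(1, 11)]
sv = [0.93 ** k for k in range(1, 11)]
base = prepayment_adjusted_rate(100.0, [10.0] * 10, [1.0] * 10, dfs, sv)
full = prepayment_adjusted_rate(100.0, [10.0] * 10, [1.0] * 10, dfs, sv, tpc=0.48)
print(base, full)
```

On a flat curve the prepayment-weighted rate equals the curve rate, because prepaying at par is value neutral there; the first prepayment effect only lowers the rate when the curve is upward sloping, while a positive TPC always raises it.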

Finally, to give an idea of the maximum rate that can be charged to the mortgagee, we consider overhedging the TPC, which is simply the summation of all the expected losses EL without any weighting for the prepayment probability. In this case the formula for the mortgage rate is:

as long as we are only considering the first prepayment effect. Otherwise, we simply add total expected loss (split over the expected life of the contract) to the standard fair rate:

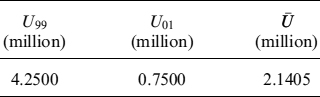

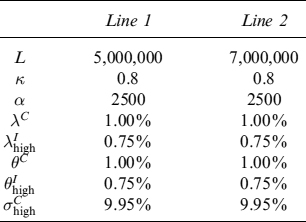

Example 9.2.3. Considering the case in Example 9.2.2, we now compute the TPC related to the 10-year mortgage, which is equal to 48 bps.

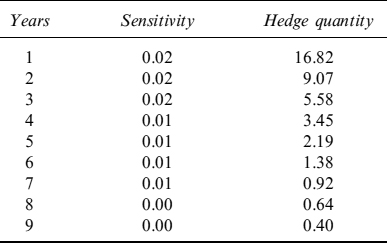

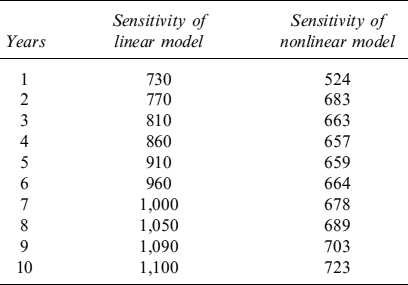

Table 9.12 shows the sensitivity of the TPC to a tilt of 10 bps for each forward rate. These sensitivities are then translated into an equivalent quantity of swaps, with expiries from 1 year to 10 years, needed to hedge them.

Table 9.13 shows the Vega of the TPC with respect to the volatilities of each forward rate. These exposures can be hedged using caps and floors or swaptions in the Libor market model setting we are working in (by calibrating the forward rate correlation matrix to the swaption volatility surface).

Table 9.12. Interest rate sensitivity of the TPC

Table 9.13. Vega of the TPC

| Years | Vega |
| --- | --- |
| 1 | 0.08 |
| 2 | 0.20 |
| 3 | 0.33 |
| 4 | 0.45 |
| 5 | 0.53 |
| 6 | 0.54 |
| 7 | 0.48 |
| 8 | 0.33 |
| 9 | 0.12 |

Let us now assume we want to include the prepayment cost in the 10-year mortgage. We first include exogenous prepayment, which is independent of the level of interest rates, so that on average its effects boil down to anticipated repayment of the outstanding notional: this will reduce the fair rate and, according to the data we used above, we have the fair rate modified as:

c = 3.95% → c = 3.89%

Second, we include the TPC arising from rational prepayment (48 bps), which surely entails an increase of the fair rate:

c = 3.89% → c = 4.00%

for a total prepayment effect of 5 bps on the fair rate.

For comparison purposes, we consider the overhedge strategy which consists in replication of the EL instead of the ELoP. In this case the fair mortgage rate would change as follows:

c = 3.89% → c = 4.78%

As mentioned in the main text, while the interest rate and volatility risk can be hedged using standard (and liquid) market instruments, the prepayment risk related to the stochasticity of (rational prepayment) intensity cannot be eliminated. We suggest a VaR-like approach to resolve this. The corresponding TPC for the 10-year mortgage is 56 bps and the fair rate modifies as:

c = 3.89% → c = 4.02%

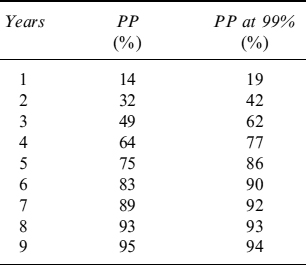

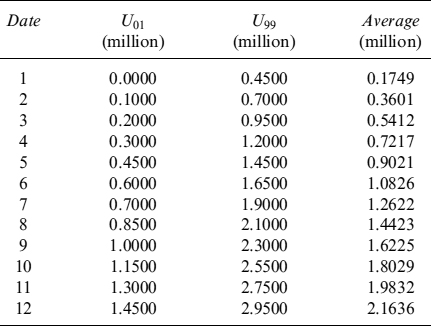

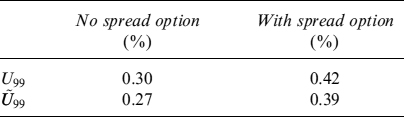

which means that a generally higher prepayment probability has little impact on pricing. In Table 9.14 we show a comparison between expected and 99th percentile rational prepayment probabilities. A higher probability increases the costs but, since it also brings prepayment forward, the likelihood of large differences between current and mortgage rates is reduced.

Table 9.14. Expected and 99th percentile prepayment probabilities from the first to the last possible prepayment date

Table 9.15. Sets of parameters, fair rates and TPC (using standard and VaR-like approaches)

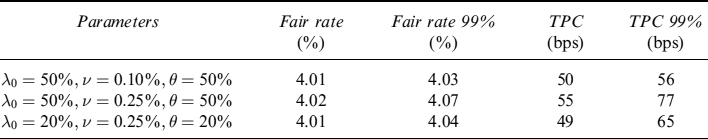

To appreciate the effect of different parameters on the TPC, in Table 9.15 we show three sets of parameters of the intensity dynamics of rational prepayment and their effect on:

- the fair rate;

- the fair rate at 99th percentile prepayment probabilities;

- the TPC;

- the TPC at 99th percentile prepayment probabilities.

The total effect is rather limited for the mortgage fair rate. When considering the TPC, the differences between the base and VaR-like approach are bigger.

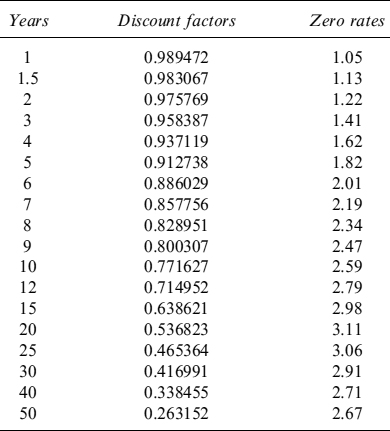

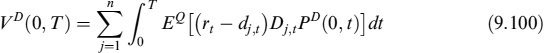

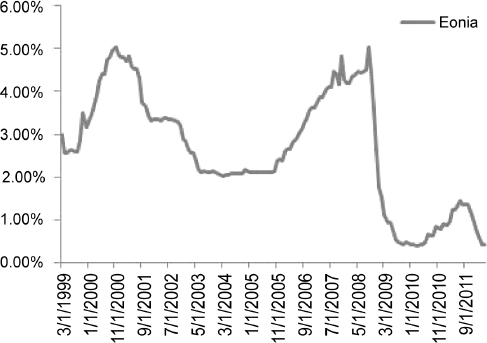

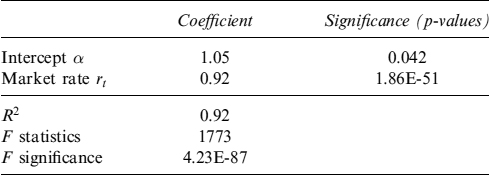

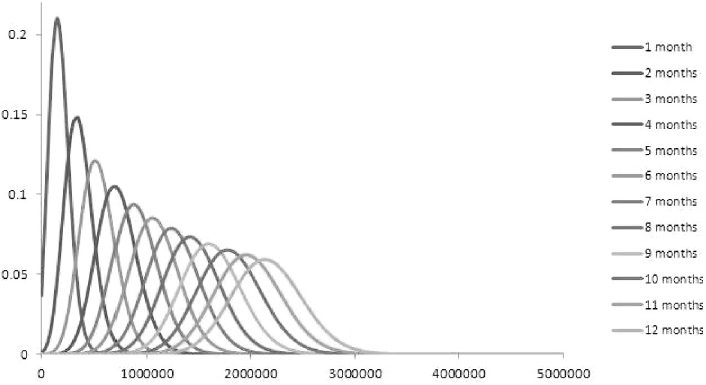

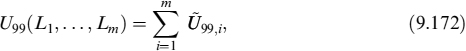

Example 9.2.4. We now show how the model presented works for a portfolio of mortgages. The Eonia discount factors and zero rates (in percent), for years 1 to 30, that we have used have been extracted from deposits, FRAs and swaps on Euribor (shown in Table 9.16). In Table 9.17 we show volatilities for the forward rates needed to compute the EL.

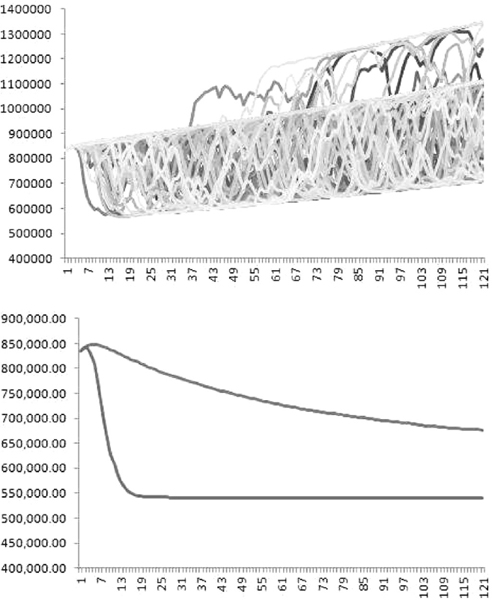

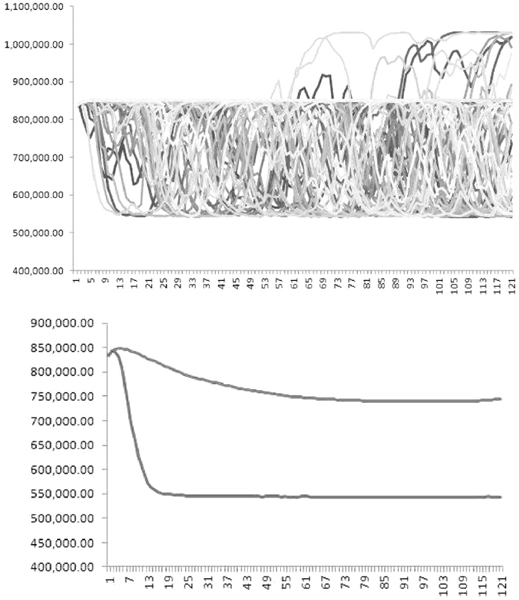

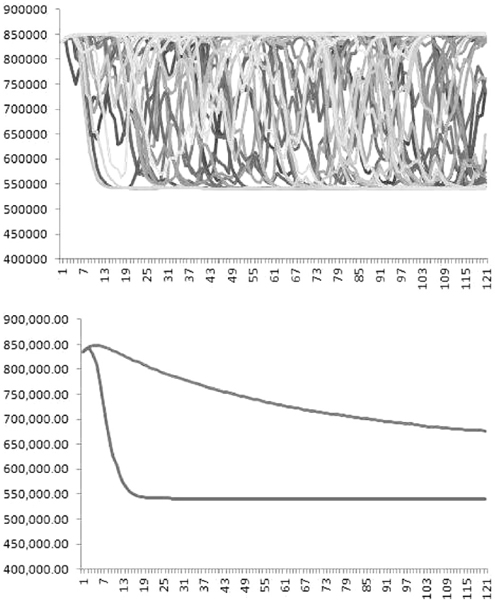

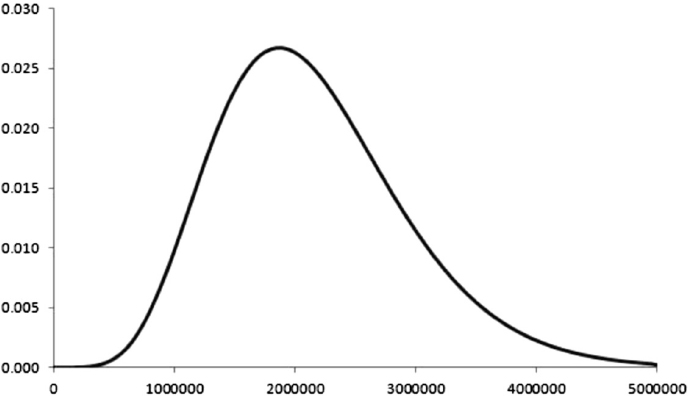

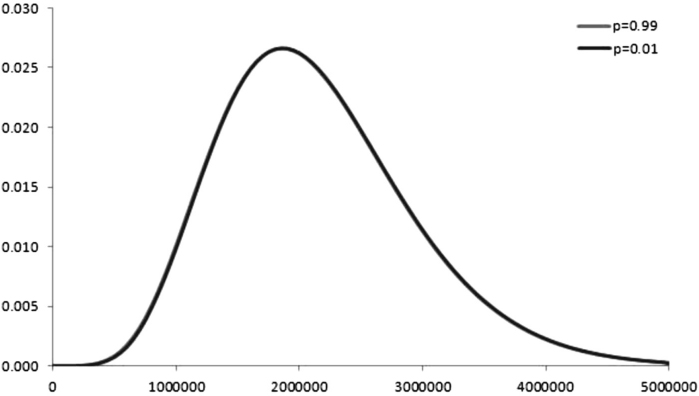

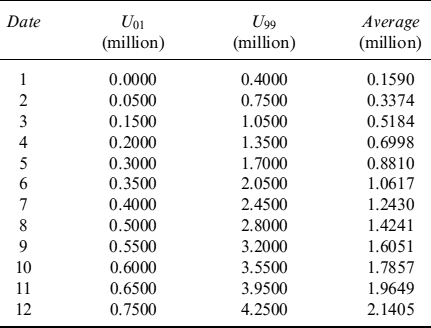

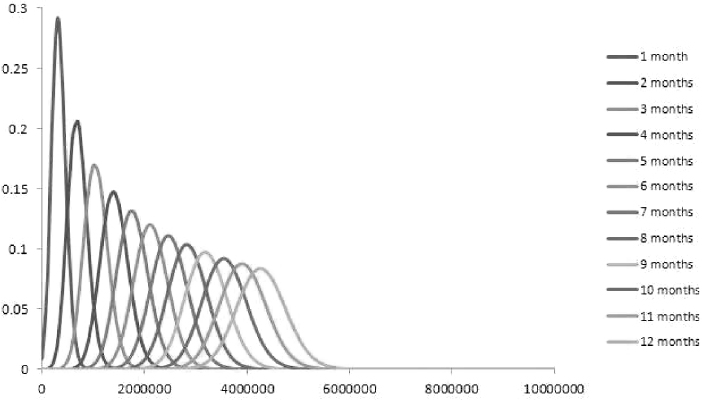

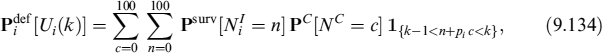

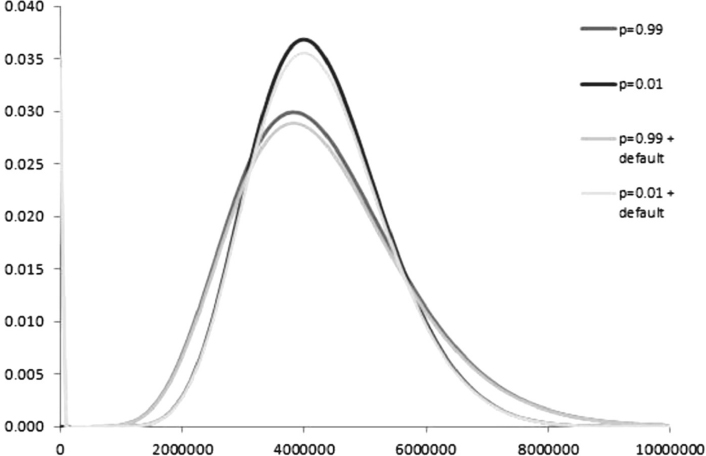

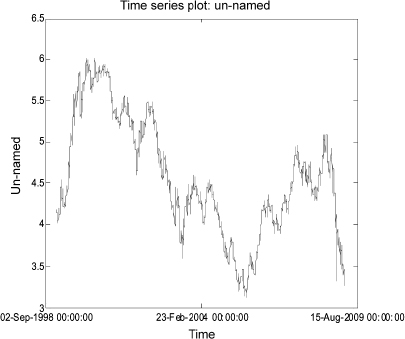

We consider a portfolio of 307,048 mortgages worth a total amount of EUR1 billion. The distribution of contract fixed rates within the portfolio is given in Table 9.18 and represented in Figure 9.5.

The distribution of notional amounts is shown in Table 9.19 and Figure 9.6. The vast majority of mortgage notional amounts were less than EUR200,000, and only 506 contracts were above EUR500,000 and 111 above EUR1 million.

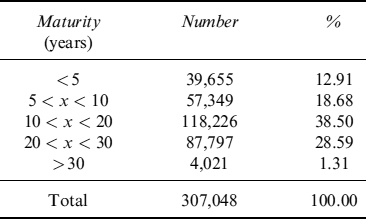

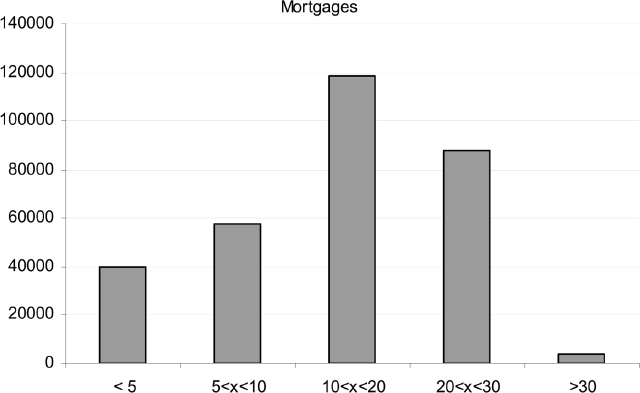

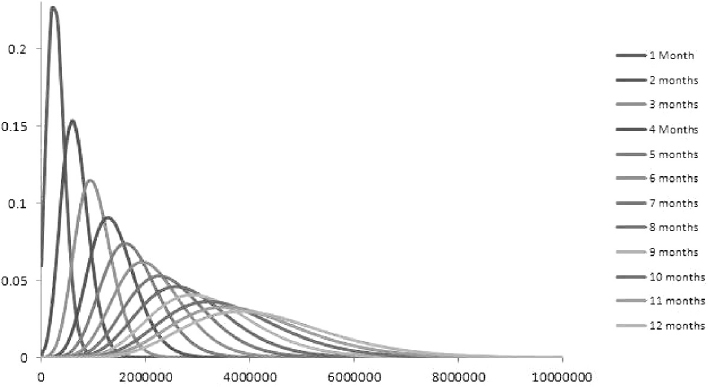

Finally, we show the distribution of maturities within the portfolio in Table 9.20 and Figure 9.7. Mortgage maturity is mainly concentrated on 10 years and 20 years; fewer contracts have shorter maturities and only 1.31% of the total portfolio has an expiry after 30 years.

Table 9.16. Eonia discount factors and zero rates for maturities from 1 to 30 years

Table 9.17. Volatilities of Eonia forward rates for maturities from 1 to 30 years

| Years | Volatility |
| --- | --- |
| 1 | 44.41 |
| 1.5 | 51.01 |
| 2 | 53.38 |
| 3 | 47.99 |
| 4 | 45.87 |
| 5 | 42.64 |
| 6 | 39.27 |
| 7 | 36.31 |
| 8 | 33.86 |
| 9 | 31.93 |
| 10 | 30.35 |
| 12 | 27.80 |
| 15 | 25.50 |
| 20 | 23.77 |
| 25 | 23.59 |
| 30 | 24.40 |

Total prepayment cost (TPC), given market conditions at the evaluation date, is around EUR49 million (see Table 9.21). This is not a small percentage of the outstanding notional amount (remaining capital) of the mortgages. TPC is the current value of expected losses incurred in the future from the prepayment decisions taken by mortgagees.

Table 9.18. Distribution of contract fixed rates for the mortgage portfolio

Figure 9.5. Distribution of contract fixed rates for the mortgage portfolio

The possibility to hedge this quantity is crucial to minimize the costs related to prepayments. In a low-margin environment for the bank, such a cost is definitely not negligible. Prepayment exposures must be monitored and appropriate hedging strategies must be implemented.

Zero rate sensitivities are reported in Table 9.22 for different tenors from 1 year to 30 years: most exposures are between 10 years and 25 years. For a parallel shift in the zero rate curve of 1 basis point, the variation in TPC is about EUR145,000. The bank gains (i.e., TPC decreases) when rates move up.

Exposures to volatilities are shown for expiries running from 1 to 30 years in Table 9.23. Most sensitivity is on expiries between 10 and 20 years. An upward shift of the term structure of market implied volatilities produces an increase in TPC of about EUR420,000.

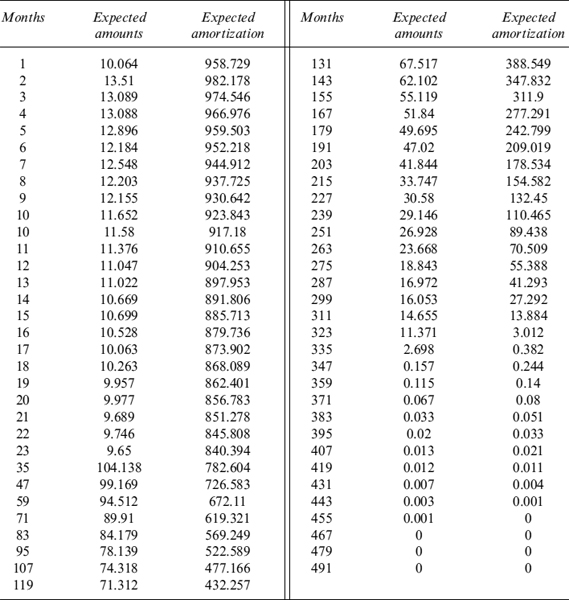

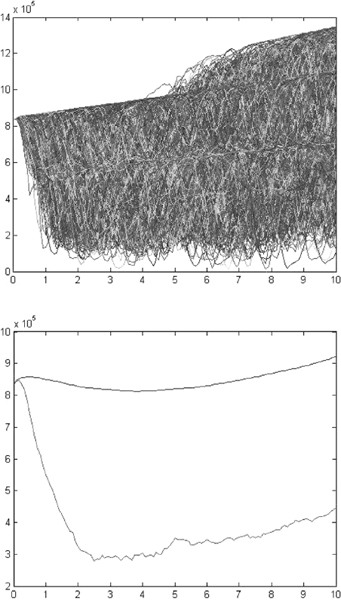

The expected cash flows and amortization of the pool of mortgages for each month, running from the calculation date to 41 years, are shown in Table 9.24. Expected cash flows include contract repayments (capital and interest) weighted by the probability of no prepayment and the full reimbursement of the remaining capital, plus interest for the last period, weighted by the probability of prepayment. Expected amortization includes the amount of capital to be repaid weighted by the no-prepayment probability and the amount of remaining capital paid back when the mortgage ends before expiry, weighted by the prepayment probability.
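The probability weighting just described can be illustrated for a single loan. The following is a minimal sketch, assuming a level-payment fixed-rate mortgage with annual dates and made-up prepayment probabilities. The function name `expected_schedule` and all inputs are hypothetical, and the probabilities are not output of the model presented in this chapter.

```python
def expected_schedule(notional, rate, n_periods, prepay_prob):
    """Expected cash flows and amortization for a level-payment fixed-rate loan.

    prepay_prob[k] is the assumed probability that prepayment occurs exactly
    at payment date k+1 (illustrative inputs only).
    """
    # Level installment of a standard annuity loan.
    installment = notional * rate / (1.0 - (1.0 + rate) ** (-n_periods))
    outstanding = notional
    survival = 1.0  # probability of no prepayment so far
    cash_flows, amortization = [], []
    for k in range(n_periods):
        interest = outstanding * rate
        capital = installment - interest
        p_prepay = prepay_prob[k]
        survival -= p_prepay  # no prepayment up to and including this date
        # Contractual payment if the loan survives; otherwise remaining
        # capital plus the interest accrued over the last period.
        cash_flows.append(survival * installment
                          + p_prepay * (outstanding + interest))
        amortization.append(survival * capital + p_prepay * outstanding)
        outstanding -= capital
    return cash_flows, amortization

probs = [0.02, 0.03, 0.04, 0.03, 0.02]  # hypothetical prepayment probabilities
cfs, amort = expected_schedule(100_000.0, 0.04, 5, probs)
print([round(a, 2) for a in amort])
```

A useful consistency check is that expected amortization sums to the initial notional whatever the prepayment probabilities, since prepayment changes only the timing of capital repayment, not its total.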

Table 9.19. Distribution of notional amounts for the mortgage portfolio

Figure 9.6. Distribution of notional amounts for the mortgage portfolio

Table 9.20. Distribution of maturities for the mortgage portfolio

Figure 9.7. Distribution of maturities for the mortgage portfolio

Table 9.21. Total prepayment cost of the portfolio of mortgages

| Total (EUR) | Percent of outstanding notional |
| --- | --- |
| 48,736,032 | 4.874 |

Table 9.22. Zero rate sensitivities of the TPC of the portfolio of mortgages

| Years | Zero rate sensitivity |
| --- | --- |
| 1 | −2,203.03 |
| 2 | −1,462.35 |
| 3 | −2,336.41 |
| 4 | −1,652.77 |
| 5 | −1,068.93 |
| 6 | −575.21 |
| 7 | 2,289.25 |
| 8 | 5,928.28 |
| 9 | 7,129.96 |
| 10 | 9,665.28 |
| 12 | 16,813.30 |
| 15 | 33,398.23 |
| 20 | 46,136.98 |
| 25 | 31,621.84 |
| 30 | 3,291.74 |
| Total | 146,976.18 |
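As a rough illustration of how bucketed sensitivities of this kind can be used, the sketch below aggregates the figures of Table 9.22 into a first-order estimate for an arbitrary curve shift. The function name `tpc_change` is ours. We assume that the sensitivities are quoted in EUR per basis point, that they are additive across tenors, and that the sign convention is the one used in the table.

```python
# Bucketed zero-rate sensitivities of the TPC, copied from Table 9.22
# (assumed to be EUR per basis point, in the table's sign convention).
zero_rate_sens = {
    1: -2203.03, 2: -1462.35, 3: -2336.41, 4: -1652.77, 5: -1068.93,
    6: -575.21, 7: 2289.25, 8: 5928.28, 9: 7129.96, 10: 9665.28,
    12: 16813.30, 15: 33398.23, 20: 46136.98, 25: 31621.84, 30: 3291.74,
}

def tpc_change(shifts_bps):
    """First-order estimate for per-tenor shifts given in basis points."""
    return sum(zero_rate_sens[t] * shifts_bps.get(t, 0.0)
               for t in zero_rate_sens)

# Parallel shift of 1 bp on every tenor.
parallel_1bp = tpc_change({t: 1.0 for t in zero_rate_sens})

# Share of the parallel-shift exposure sitting in the 10- to 25-year tenors.
long_end_share = tpc_change({t: 1.0 for t in (10, 12, 15, 20, 25)}) / parallel_1bp
print(round(parallel_1bp, 2), round(long_end_share, 3))
```

The parallel-shift figure reproduces the total of the table up to rounding, and the 10- to 25-year tenors account for more than 90% of it.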

Table 9.23. Volatility sensitivities of the TPC of the portfolio of mortgages

| Years | Vega |
| --- | --- |
| 1 | −361.1 |
| 1.5 | −705.2 |
| 2 | −2,375.5 |
| 3 | −10,554.2 |
| 5 | −31,045.8 |
| 7 | −51,706.8 |
| 10 | −119,649.7 |
| 15 | −121,675.8 |
| 20 | −67,321.9 |
| 30 | −13,647.9 |
| Total | −419,043.9 |

9.3 SIGHT DEPOSIT AND NON-MATURING LIABILITY MODELLING

The modelling of deposits and non-maturing liabilities is a crucial task for liquidity management of a financial institution.21 It has become even more crucial in the current environment after the liquidity crisis that affected the money market in 2008/2009.

Typically, the ALM departments of banks involved in the management of interest rate and liquidity risks face the task of forecasting deposit volumes, so as to design and implement consequent liquidity strategies.

Moreover, deposit accounts represent the main source of funding for the bank, primarily for those institutions focused on retail business, and they heavily contribute to the funding available in every period for lending activity (see Chapter 7). Of the different funding sources, deposits have the lowest costs, so that in a funding mix they contribute to reducing the total cost of funding.22

Indeed, deposit contracts have the peculiar feature of not having a predetermined maturity, since the holder is free to withdraw the whole amount at any time. The liquidity risk for the bank arises from the mismatch between the term structures of assets and liabilities of the bank's balance sheet, since liabilities are mostly made up of non-maturing items and assets by long-term investments (such as mortgage loans). We extensively analysed this problem in Chapter 7.

The optionality embedded in non-maturing products, relating to the possibility for the customer to arbitrarily choose any desired schedule of principal cash flows, has to be understood and accounted for when performing liability valuation and hedging market and liquidity risk. Thus, a sound model is essential to deal with embedded optionality for liquidity risk management purposes.

Table 9.24. Expected cash flows and amortization schedule of the portfolio of mortgages

9.3.1 Modelling approaches

Two different approaches can be found in the financial literature and in market practice to model the evolution of deposit balances:

- bond portfolio replication

- OAS models.

Bond portfolio replication, probably the most common approach adopted by banks, can be briefly described as follows. First, the total deposit amount is split into two components:

- a core part that is assumed to be insensitive to market variable evolution, such as interest rates and deposit rates. This fraction of the total volume of deposits is supposed to decline gradually over a medium to long-term period (say, 10 or 15 years) and to amortize completely at the end of it.

- a volatile part that is assumed to be withdrawn by depositors over a short horizon. This fraction basically refers to the component of the total volume of deposits that is normally used by depositors to match their liquidity needs.

Second, the core part is hedged using a portfolio of vanilla bonds and money market instruments, whose weights are computed by solving an optimization problem that could be set according to different rules. Typically, portfolio weights are chosen so as to replicate the amortization schedule of deposits or, in other words, their duration. In this way the replication portfolio protects the economic value of the deposits (as defined later on) against market interest rate movements. Another constraint, usually imposed in the choice of portfolio weights, is target return expressed as a certain margin over market rates.

Since deposit rates are updated, with relatively large freedom of action, by banks to align them with market rates, the replication portfolio can comprise fixed rate bonds, to match the inelastic part of deposit rates that do not react to changes in market rates, and floating rate bonds, to match the elastic part of deposit rates. The process to rebalance the bond portfolio, although simple in theory, is quite convoluted in practice. For a more detailed explanation of the mechanism see [86].

Third, the volatile part is invested in very short term assets, typically overnight deposits, and represents a liquidity buffer to cope with daily withdrawals by depositors.
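The three steps above can be sketched in a few lines. This is a deliberately simplified illustration and not the optimization problem mentioned in the text: we assume a linear run-off of the core part and match it with equal yearly maturity buckets. The function names, the 70% core share and the 10-year horizon are all hypothetical.

```python
def replication_buckets(total_deposits, core_share, horizon_years):
    """Split deposits into a volatile overnight buffer and a core part whose
    assumed linear run-off is matched by equal yearly maturity buckets."""
    core = total_deposits * core_share
    volatile = total_deposits - core
    per_year = core / horizon_years
    buckets = {year: per_year for year in range(1, horizon_years + 1)}
    return volatile, buckets

def average_life(buckets):
    """Notional-weighted average maturity of the bucketed core part (years)."""
    total = sum(buckets.values())
    return sum(year * amount for year, amount in buckets.items()) / total

# Hypothetical inputs: 1,000 of deposits, 70% core, 10-year run-off.
overnight, ladder = replication_buckets(1_000.0, core_share=0.7, horizon_years=10)
print(overnight, average_life(ladder))
```

In practice the bucket weights would come from a statistically estimated amortization schedule and from the constraints (duration, target margin) described above, rather than from an equal-weight ladder.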

The critical point of this approach is estimation of the amortization schedule of non-maturing accounts, which is performed on a statistical basis and has to be reconsidered periodically. One of the flaws of the bond replica approach is that risk factors affecting the evolution of deposits are not modelled as stochastic variables. So, once statistical analysis is performed, the weights are applied by considering the current market value of the relevant factors (basically, market and deposit rates) without considering their future evolution.

This flaw is removed, at least partially, by the so-called option-adjusted spread (OAS) approach, which we prefer to call the stochastic factor (SF) approach.23 In principle, the approach is little different from the bond portfolio replica approach: it identifies statistically how the evolution of deposit volumes is linked to risk factors (typically, market and deposit rates) and then sets up a hedge portfolio that covers their exposures.

The main difference is that, in contrast to bond portfolio replication, in the SF approach the weights of hedging instruments are computed keeping the future random evolution of risk factors in mind, so that the hedging activity resembles the dynamic replication of a derivatives contract. The hedging portfolio is revised based on the market movements of risk factors, according to the stochastic process adopted to model them.

We prefer to work with an SF approach to model deposit volumes for several reasons. First, we think the SF approach is more advanced from the modelling perspective, explicitly taking into account the stochastic nature of risk factors. Second, while bond portfolio replication can be deemed adequate to hedge the interest rate margin and the economic value of deposits, from the liquidity risk management point of view the SF approach is superior, because it makes it possible to jointly evaluate, within a unified consistent framework, the effects of risk factors both on the economic value and on future inflows and outflows of deposits. Third, it is easier to include complex behavioural functions linking the evolution of volumes to risk factors in the SF approach. Finally, bank-run events can also be considered and properly taken into account in the SF approach, whereas their inclusion seems quite difficult within the bond portfolio replication approach.

9.3.2 The stochastic factor approach

The first attempt to apply the SF approach, within an arbitrage-free derivatives-pricing framework, to deposit accounts was made by Jarrow and van Deventer [78]. They derived a valuation framework for deposits based on the analogy between these liabilities and an exotic swap whose principal depends on the past history of market rates. They provide a linear specification for the evolution of deposit volumes applied to US federal data.

Other similar models have been proposed24 within the SF approach: it is possible to identify three building blocks common to all of them:

- A stochastic process for interest rates: in [78], for example, it is the Vasicek model (see Chapter 8).

- A stochastic model for deposit rates: typically, these are linked to interest rates by means of a more or less complex function.

- A model for the evolution of deposit volumes: since this is linked by some functional form to the two risk factors above (market interest rates and deposit rates), it too is a stochastic process.

Specification of the dynamics of deposit volumes is the crucial feature distinguishing the different SF models: looking at things from the microeconomic perspective, volumes depend on the liquidity preference and risk aversion of depositors, whose behaviour is driven by opportunity costs between alternative allocations. When market rates rise, depositors have a greater temptation to withdraw money from sight deposits and invest it in other assets offered in the market.

SF models can be defined as behavioural in the sense that they try to capture the dynamics of depositor behaviour with respect to movements in market rates and deposit rates. In doing this, these models exploit option-pricing technology, developed since the 1970s, and depend on stochastic variables, in contrast to the previously mentioned class of simpler statistical models.

Depositor behaviour can be synthesized in a behavioural function that depends on risk factors and determines their choice in terms of the amount allocated in deposits. This function could be specified in various forms, allowing for different degrees of complexity. Given their stochastic nature, those models are suitable for implementation in simulation-based frameworks like Monte Carlo methods.

Since closed-form formulae for the value of deposits are expressed as risk-neutral expectations, the scenario generation process has to be accomplished with respect to the equivalent martingale probability measure. For liquidity management purposes, it is more appropriate to use real-world parameter processes. In what follows we will not distinguish between them: as we have also assumed in other parts of this book, with a risk premium parameter equal to zero, real-world processes for interest rates coincide with risk-neutral ones.

We propose a specification for the SF approach which we think is parsimonious enough, yet effective.

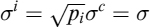

Modelling of market interest rates

The dynamics of market interest rates can be chosen rather arbitrarily: the class of short-rate models we introduced in Chapter 8 is suitable and can be effectively used. In our specification we adopted a single-factor CIR++ model (see Section 8.3.4): we know that such a model is capable of perfectly matching the current observed term structure of risk-free zero rates. The market instantaneous risk-free rate is thus given by

$$r_t = x_t + \phi_t$$

where $x_t$ has the square-root (CIR) dynamics

$$dx_t = \kappa(\theta - x_t)\,dt + \sigma\sqrt{x_t}\,dW_t$$

and $\phi_t$ is a deterministic function of time.
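For scenario generation, a short-rate path of this kind can be simulated with a simple discretization. The sketch below assumes the standard square-root (CIR) dynamics for the stochastic factor and uses an Euler scheme with full truncation. The parameter names `kappa`, `theta`, `sigma`, the constant shift used for `phi` and all numerical values are assumptions made for illustration, not calibrated inputs.

```python
import math
import random

def simulate_cir_pp(x0, kappa, theta, sigma, phi, horizon, n_steps, rng):
    """Euler scheme with full truncation for r_t = x_t + phi(t).

    x follows a square-root (CIR) process; phi is any deterministic shift,
    passed as a function of time in years.
    """
    dt = horizon / n_steps
    x = x0
    path = [x0 + phi(0.0)]
    for step in range(1, n_steps + 1):
        x_pos = max(x, 0.0)  # truncate so the square root is well defined
        dw = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment over dt
        x = x + kappa * (theta - x_pos) * dt + sigma * math.sqrt(x_pos) * dw
        path.append(max(x, 0.0) + phi(step * dt))
    return path

rng = random.Random(42)  # fixed seed so the path is reproducible
rates = simulate_cir_pp(x0=0.01, kappa=0.5, theta=0.03, sigma=0.08,
                        phi=lambda t: 0.005, horizon=5.0, n_steps=60, rng=rng)
print(len(rates), min(rates))
```

In an actual implementation the shift would be chosen so that the model reprices the observed term structure of zero rates, which is the property that motivates the CIR++ extension.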

Modelling of deposit rates

Deposit rate evolution is linked to the pricing policy of banks, providing a tool that can be exploited to drive deposit volumes across time. It is reasonable to think that an increase in the deposit rate will work as an incentive for existing depositors not to withdraw from their accounts or to even increase the amount deposited.

The rate paid by the bank on deposit accounts can be determined according to different rules. Here are some examples:

- Constant spread below market rates:

to avoid having negative rates on the deposit, there is a floor at zero.

- A proportion α of market rates:

We analysed the fair pricing of sight deposits and non-maturing liabilities in Chapter 7, where we also derived the fair rate that a bank should pay on these contracts, discovering that it is a functional form of the kind in equation (9.90).

- A function similar to the two above but also dependent on the amount deposited:

where Dj and Dj+1 are the range of deposit volumes D producing different levels of deposit rates.

We adopt a rule slightly more general than equation (9.90) (i.e., a linear affine relation between the deposit rate and the market short rate):

where E(ut) = 0, ∀t.
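The pricing rules listed above can be written as small functions. This is a sketch under our own notation: the function names, the `spread`, `alpha` and `beta` parameters and the tier structure are assumptions for illustration, and the tiered rule is only one possible instance of a volume-dependent rate.

```python
def rate_constant_spread(r, spread):
    """Market rate minus a fixed spread, floored at zero."""
    return max(r - spread, 0.0)

def rate_proportional(r, alpha):
    """A fixed proportion alpha of the market rate."""
    return alpha * r

def rate_tiered(r, volume, tiers):
    """Proportional rule whose alpha depends on the deposit volume band.

    tiers is a list of (lower_bound, upper_bound, alpha), with each band
    including its lower bound and excluding its upper bound.
    """
    for lower, upper, alpha in tiers:
        if lower <= volume < upper:
            return alpha * r
    raise ValueError("volume outside all tiers")

def rate_affine(r, alpha, beta, noise=0.0):
    """Affine rule alpha + beta * r plus a zero-mean disturbance."""
    return alpha + beta * r + noise

# Hypothetical tiers: 20% of the market rate below 10,000, 50% above.
tiers = [(0.0, 10_000.0, 0.2), (10_000.0, float("inf"), 0.5)]
print(rate_constant_spread(0.01, 0.02), rate_tiered(0.04, 5_000.0, tiers))
```

With `alpha = 0` and no disturbance the affine rule reduces to the proportional one, which is the sense in which it is slightly more general.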

As will be manifest in what follows, the evolution of deposit volumes depends on the deposit rate, so in this framework the pricing policy function, which is obviously discretionary for the bank, represents a tool to drive deposit volumes and, consequently, can be used to define liquidity strategies.

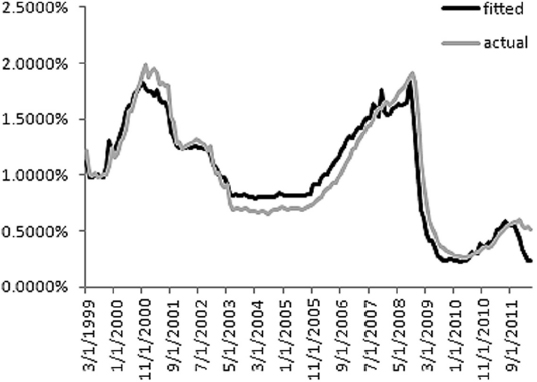

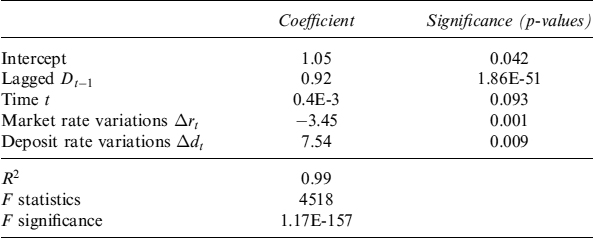

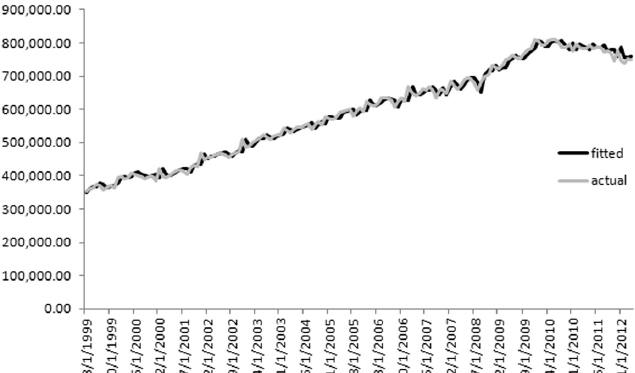

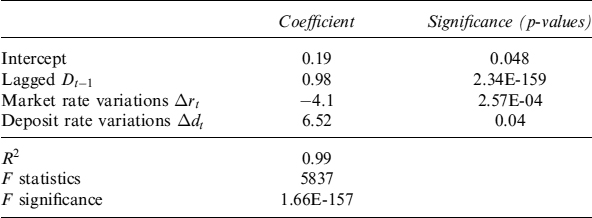

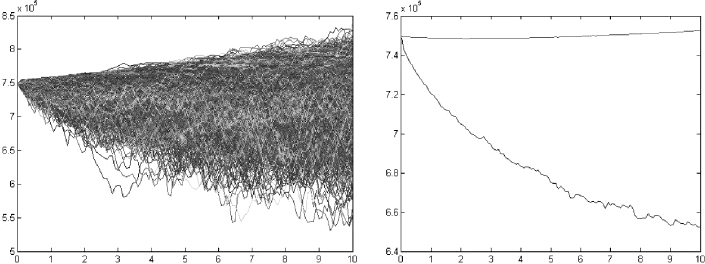

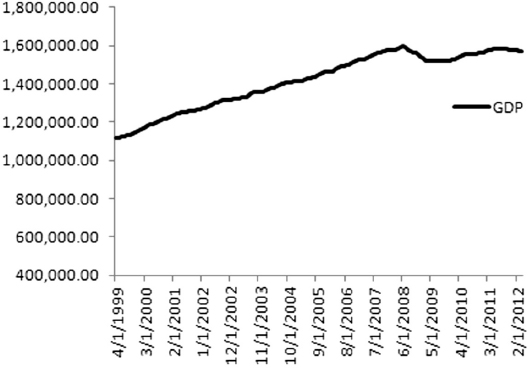

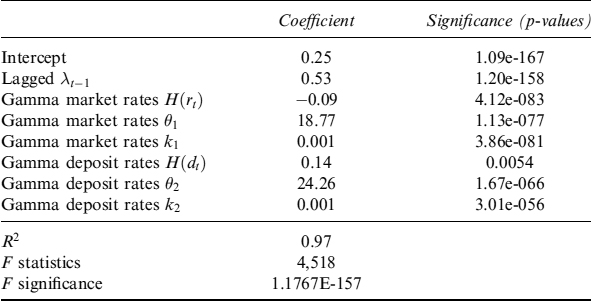

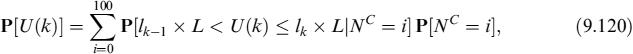

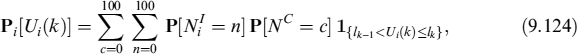

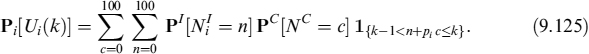

Modelling of deposit volumes: linear behavioural functions