Chapter 5

Operating System

This chapter provides an overview of operating systems. An operating system is a collection of system programs that controls the operation of a computer system. The discussion begins with the evolution and types of operating systems. It continues with the various functions of an operating system, namely, process management, memory management, file management, device management, security management, and user interface.

After reading this chapter, you will be able to understand:

The need to develop an operating system

What is an operating system and what are its objectives

How operating systems evolved from single-user character-based interfaces to modern-day multi-user graphical user interfaces

Six major types of operating systems

Six major functions of an operating system

5.1 INTRODUCTION

In the early days of computer use, computers were huge machines that were expensive to buy, run and maintain. The user at that time interacted directly with the hardware through machine language. Software was required that could perform basic tasks such as recognizing input from the keyboard, sending output to the display screen, keeping track of files and directories on the disk, and controlling peripheral devices such as printers and scanners. The search for such software led to the evolution of the modern-day operating system (OS). This software is loaded into memory and performs all the aforesaid basic tasks. Initially, the OS's interface was only character-based. This interface provided the user with a command prompt, and the user had to type all the commands to perform various functions. As a result, the user had to memorize many commands. With the advancement in technology, the OS became even more user friendly by providing a graphical user interface (GUI). A GUI-based OS allows the user to interact with the system using visual objects such as windows, pull-down menus, mouse pointers and icons. Consequently, operating the computer became easy and intuitive.

5.2 OPERATING SYSTEM: DEFINITION

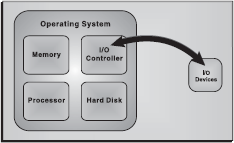

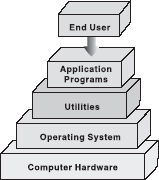

An operating system is a collection of system programs that together control the operation of a computer system. The OS, along with hardware, applications, other system software and users, constitutes a computer system as shown in Figure 5.1. It is the most important part of any computer system. It acts as an intermediary between a user and the computer hardware. The OS has two objectives.

Figure 5.1 Managing Hardware

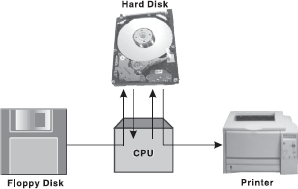

- Managing Hardware: The prime objective of the OS is to manage and control the various hardware resources of a computer system. These hardware resources include the processor, memory, disk space, I/O devices and so on. The OS keeps track of which input device has data waiting to be processed and which processed data is ready to be displayed on the output device. In addition to communicating with the hardware, the OS provides an error-handling procedure and displays error notifications. If a device is not functioning properly, the OS tries to communicate with the device again. If it is still unable to communicate with the device, it displays an error message notifying the user about the problem. Figure 5.2 illustrates how an OS manages the hardware resources of a computer system.

Figure 5.2 Computer System Components

- Providing an Interface: The OS organizes applications so that users can easily access, use and store them. When an application is opened, the OS assists the application in providing the major part of the user interface. It provides a stable and consistent way for applications to deal with the hardware without the user having to know all the details of the hardware. If an application program is not functioning properly, the OS takes control again, stops the application and displays an appropriate error message.

5.3 EVOLUTION OF OPERATING SYSTEM

In the early days, computers lacked any form of OS. The user would arrive at the machine armed with his program and data, often on punched paper tape. The program would be loaded into the machine and the machine set to work. Then came machines with libraries of support code (the initial OSs), which were linked to the user's program to assist in operations such as input and output. At this stage, OSs were very diverse, with each vendor producing one or more OSs specific to its particular hardware. Typically, whenever a new hardware architecture was introduced, a new OS compatible with that architecture was needed. This state of affairs continued until the 1960s, when IBM developed the S/360 series of machines. Although there were enormous performance differences across the range, all the machines ran essentially the same OS, called OS/360.

Then came the small 4-bit and 8-bit processors known as microprocessors. The development of microprocessors provided inexpensive computing for small businesses. This led to the widespread use of interchangeable hardware components using a common interconnection, thus creating an increasing need for a standardized OS to control them. The most important among the early OSs was CP/M-80 for the 8080/8085/Z-80 microprocessors. With the development of microprocessors like the 386, 486 and the Pentium series by Intel, the whole computing world got a new dimension. AT&T and Microsoft came up with character-based OSs, namely, Unix and Disk OS (DOS), respectively, which supported the prevalent hardware architectures. After the character-based OSs, Microsoft and Apple came up with Windows 3.1 and Mac OS, respectively, which were GUI-based OSs well suited to the desktop PC market. Today, OSs such as Windows XP and Red Hat Linux have taken the driver's seat in personal desktops. These OSs, with their remarkable GUI and network support features, can handle diverse hardware devices.

5.4 TYPES OF OPERATING SYSTEMS

The OS has evolved immensely from its primitive days to the present digital era. From batch processing systems to the latest embedded systems, the different types of OSs can be classified into six broad categories.

- Batch Processing OS: This type of OS was one of the first to evolve. A batch processing OS allowed only one program to run at a time. These kinds of OSs can still be found on some mainframe computers running batches of jobs. A batch processing OS works on a series of programs that are held in a queue. The OS is responsible for scheduling the jobs according to priority and the resources required. Batch processing OSs are good at churning through large numbers of repetitive jobs on large computers. For example, this OS would be best suited for a company wishing to automate its payroll. A list of employees is entered, their monthly salaries are calculated, and the corresponding pay slips are printed. Batch processing is useful for this purpose since these procedures are repeated for every employee and every month.

- Multi-user or Time-sharing OS: This system is used in computer networks which allow different users to access the same data and application programs on the same network. The multi-user OS builds a user database account, which defines the rights that users can have on a particular resource of the system.

- Multi-programming OS: In this system, more than one process (task) can be executed concurrently. The processor is switched rapidly between the processes. Hence, a user can have more than one process running at a time. For example, a user can have a word processor and an audio CD player running on his computer at the same time. Such an OS, also called a multi-tasking OS, allows the user to switch between the running applications and even transfer data between them. For example, a user can copy a picture from a web page opened in the browser application and paste it into an image editing application.

- Real-time OS (RTOS): This system is designed to respond to an event within a predetermined time. This kind of OS is primarily used in process control, telecommunications and so on. The OS monitors various inputs which affect the execution of processes, changing the computer's model of the environment, thus affecting the output, within a guaranteed time period (usually less than one second). As the real-time OSs respond quickly, they are often used in applications such as flight reservation systems, railway reservation systems, military applications, etc.

- Multi-processor OS: This system can incorporate more than one processor dedicated to running processes. This technique of using more than one processor is often called parallel processing. The main advantage of multi-processor systems is that they increase the system throughput by getting more work done in less time.

- Embedded OS: It refers to the OS that is self-contained in the device and resident in the ROM. Since embedded systems are usually not general-purpose systems, they are lighter or less resource intensive as compared to general-purpose OSs. Most of the embedded OSs also offer real-time OS qualities. Typical systems that use embedded OSs are household appliances, car management systems, traffic control systems and energy management systems.

5.5 FUNCTIONS OF OPERATING SYSTEMS

The main functions of a modern OS are as follows:

- Process Management: As a process manager, the OS handles the creation and deletion of processes, suspension and resumption of processes, and scheduling and synchronization of processes.

- Memory Management: As a memory manager, the OS handles the allocation and de-allocation of memory space as required by various programs.

- File Management: The OS is responsible for creation and deletion of files and directories. It also takes care of other file-related activities such as organizing, storing, retrieving, naming and protecting the files.

- Device Management: The OS provides an input/output subsystem between processes and device drivers. It handles the device caches, buffers and interrupts. It also detects device failures and notifies the user of them.

- Security Management: The OS protects system resources and information against destruction and unauthorized use.

- User Interface: The OS provides the interface between the user and the hardware. The user interface is the layer that actually interacts with the computer operator. The interface consists of a set of commands or menus through which a user communicates with a program.

THINGS TO REMEMBER

States of a Process

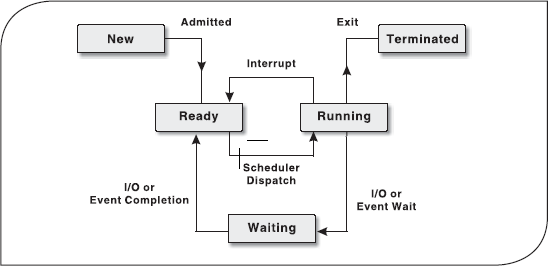

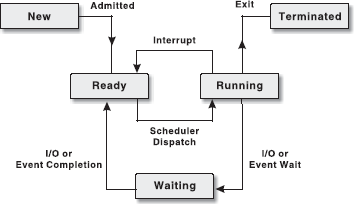

A process changes various states during its lifetime where each state indicates the current activity of the process. These states are as follows:

New: The process is being created.

Ready: The process is ready to be assigned to the processor.

Running: The process is being executed.

Waiting: The process is waiting for signal from some other process.

Terminated: The process has finished its execution.

5.5.1 Process Management

A process is an execution of a sequence of instructions or program by the CPU. It can also be referred to as the basic unit of a program that the OS deals with, with the help of the processor. For example, a text editor program running on a computer is a process. This program may cause several other processes to begin; for instance, it can issue a request for printing while the document is being edited. Thus, we can say that the text editor is a program that initiates two processes: one for editing the text and a second for printing the document.

Hence, a process is initiated by the program to perform an action, which can be controlled by the user or the OS. A process in order to accomplish a task needs certain resources like CPU time, memory allocation and I/O devices. Therefore, the idea of process management in an OS is to accomplish the process assigned by the system or the user in such a way that the resources are utilized in a proper and efficient manner.

Life Cycle of a Process: The OS is responsible for managing all the processes that are running on a computer and allocating each process a certain amount of time to use the processor. In addition, the OS also allocates various other resources that processes need during execution, such as computer memory or disk space. To keep track of all the processes, the OS maintains a table known as the process table. This table stores many pieces of information associated with a specific process, that is, program counter, allocated resources, process state, CPU-scheduling information, and so on.

Initially, a process is in the new state. When it becomes ready for execution and needs the CPU, it switches to the ready state. Once the CPU is allocated to the process, it switches to the running state. From the running state, the process goes back to the ready state if an interrupt occurs or to the waiting state if the process needs some I/O operation. In case the process has switched to ready state, it again comes to running state after the interrupt has been handled. On the other hand, if the process has switched to waiting state, then after the completion of I/O, it switches to ready state and then to running state. Thus, a process continues to switch among the ready, running and waiting states during its execution. Finally, it switches to terminated state after completing its execution as shown in Figure 5.3.

Figure 5.3 Life Cycle of a Process
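
The life cycle described above can be sketched as a small state machine. This is only an illustrative sketch: the names `ProcessEntry`, `TRANSITIONS` and `demo_life_cycle` are hypothetical, and a real process table stores far more information (allocated resources, CPU-scheduling information and so on).

```python
from dataclasses import dataclass, field

# Allowed state changes, taken from the life cycle described in the text.
TRANSITIONS = {
    "new": {"ready"},
    "ready": {"running"},
    "running": {"ready", "waiting", "terminated"},
    "waiting": {"ready"},
    "terminated": set(),
}


@dataclass
class ProcessEntry:
    """One row of a hypothetical, heavily simplified process table."""
    pid: int
    state: str = "new"
    program_counter: int = 0
    history: list = field(default_factory=list)

    def move_to(self, new_state: str) -> None:
        """Change state, rejecting moves the life cycle does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.history.append(self.state)
        self.state = new_state


def demo_life_cycle() -> list:
    """Walk one process through an I/O wait and on to termination."""
    p = ProcessEntry(pid=1)
    for state in ["ready", "running", "waiting", "ready", "running", "terminated"]:
        p.move_to(state)
    return p.history + [p.state]
```

Calling `demo_life_cycle()` returns the sequence of states the process passed through, while an attempt to move a new process directly to the running state raises an error because the process must first become ready.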

Note: When the CPU is switched from one process to another, the OS saves the state (context) of the current process and loads the saved state of the next one. This course of action is known as context switching.

Let us consider the steps in an example of two processes, a text editor and a calculator, running simultaneously on a computer system.

FACT FILE

Multithreading

Writing a program in which a process creates multiple threads is called multithreaded programming. Multithreading is the ability of an OS to run different parts of the same program simultaneously. It offers better utilization of the processor and other system resources. For example, a word processor makes use of multithreading: it can check spelling in the foreground as well as save a document in the background.

Threads: A thread is a task that runs concurrently with other tasks within the same process. Also known as lightweight process, a thread is the simplest unit of a process. The single thread of control allows the process to perform only one task at one time. An example of a single thread in a process is a text editor where a user can either edit the text or perform any other task like printing the document. In a multitasking OS, a process may contain several threads, all running at the same time inside the same process. It means that one thread of a process can be editing the text while another is printing the document. Generally, when a thread finishes performing a task, it is suspended or destroyed.
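
As a rough illustration of several threads running inside one process, the following sketch uses Python's standard `threading` module. The function name `run_editor_threads` and the task names are hypothetical stand-ins for the editing and printing activities mentioned above; the tasks do no real work.

```python
import threading


def run_editor_threads() -> list:
    """Run an 'edit' task and a 'print' task as two threads of one process."""
    finished = []
    lock = threading.Lock()  # protects the list shared by both threads

    def task(name: str) -> None:
        # A real thread would edit text or drive the printer here.
        with lock:
            finished.append(name)

    threads = [threading.Thread(target=task, args=(n,)) for n in ("edit", "print")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until both threads have finished
    return sorted(finished)
```

Both threads share the memory of the process (here, the `finished` list), which is why a lock is used to coordinate access to it.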

Uniprogramming and Multiprogramming: As the name implies, uniprogramming means only one program at a time. In uniprogramming, users can perform only one activity at a time. In multiprogrammed systems, multiple programs can reside in the main memory at the same time. These programs can be executed concurrently, thereby requiring the system resources to be shared among them. In multiprogrammed systems, an OS must ensure that all processes get a fair share of CPU time.

Process Scheduling: In a multiprogrammed system, at any given time, several processes will be competing for the CPU's time. Thus, a choice has to be made as to which process to allocate the CPU next. This procedure of determining the next process to be executed on the CPU is called process scheduling and the module of OS that makes this decision is called scheduler. The prime objective of scheduling is to switch the CPU among processes so frequently that users can interact with each program while it is running.

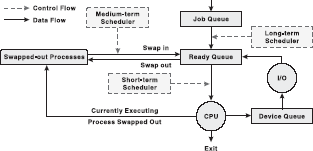

For scheduling purposes, there exist different queues in the system: the job queue, the ready queue and device queues. As the processes enter the system for execution, they are kept in the job queue (or input queue) on a mass storage device such as a hard disk. From the job queue, the processes which are ready for execution are brought into the main memory. In the main memory these processes are kept in the ready queue. In other words, the ready queue contains all those processes that are waiting for the CPU. For each I/O device attached to the system, a separate device queue is maintained. A process that needs to perform I/O during its execution is placed in the queue of that specific I/O device and waits there until it is served by the device.

Depending on the level of scheduling decisions to be made, the following types of schedulers may coexist in a complex OS:

- Long-term Scheduler: Also known as job scheduler or admission scheduler, it works with the job queue. It selects the next process to be executed from the job queue and loads it into the main memory for execution. This scheduler is generally invoked only when a process exits from the system. Thus, the frequency of invocation of long-term scheduler depends on the system and workload and is much lower than other two types of schedulers.

- Short-term Scheduler: Also known as CPU scheduler, it selects a process from the ready queue and allocates the CPU to it. This scheduler is required to be invoked frequently as compared to long-term scheduler. This is because a process generally executes for a short period and then it may have to wait either for I/O or some other reason. At that time, the CPU scheduler must select some other process and allocate the CPU to it. Thus, the CPU scheduler must be fast in order to provide the least time gap between executions.

- Medium-term Scheduler: Also known as swapper, it comes into play whenever a process is to be removed from the ready queue (or from the CPU in case it is being executed) thereby reducing the degree of multiprogramming. This process is stored at some space on the hard disk and later brought into the memory to restart execution from the point where it left off. This task of temporarily switching a process in and out of main memory is known as swapping. The medium-term scheduler selects a process among the partially executed or unexecuted swapped-out processes and swaps it in the main memory. The medium-term scheduler is usually invoked when some space becomes free in memory by the termination of a process or if the supply of ready process reduces below a specified limit.

The various types of schedulers are illustrated in Figure 5.4.

Figure 5.4 Types of Schedulers

Preemptive and Non-preemptive Scheduling: CPU scheduling may take place under the following four circumstances:

- When a process switches from the running state to the waiting state.

- When a process switches from the running state to the ready state.

- When a process switches from the waiting state to the ready state.

- When a process terminates.

When scheduling takes place only under the first and fourth circumstances, it is said to be non-preemptive scheduling; if it also takes place under the second and third circumstances, it is said to be preemptive. In the preemptive scheme, the scheduler can forcibly take the processor away from the currently running process before its completion in order to allow some other process to run. In the non-preemptive scheme, once the processor is allocated to a process, it cannot be taken back until the process voluntarily releases it (in case the process has to wait for I/O or some other event) or until the process terminates. Thus, the main difference between the two schemes is that in the preemptive scheme the OS retains control over the current state of the process, whereas in the non-preemptive scheme a process that has entered the running state keeps full control of the processor.

The scheduler uses some scheduling procedure to carry out the selection of a process for execution. Two popular scheduling procedures implemented by different OSs are first-come-first-served and round robin scheduling.

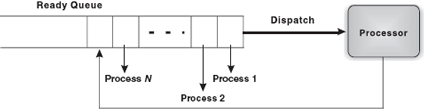

- First-come-first-served (FCFS): As the name suggests, in FCFS, the processes are executed in the order of their arrival in the ready queue, which means the process that enters the ready queue first, gets the CPU first. To implement the FCFS scheduling procedure, the ready queue is managed as a FIFO (first-in first-out) queue. Each time the process at the start of queue is dispatched to the processor, all other processes move up one slot in the queue as illustrated in Figure 5.5. When new processes arrive, they are put at the end of the queue. FCFS falls under non-preemptive scheduling and its main drawback is that a process may take a very long time to complete, and thus holds up other waiting processes in the queue.

Figure 5.5 First-Come-First-Served Procedure
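
The drawback of FCFS can be seen in a small calculation. The sketch below is illustrative only: the function name `fcfs_waiting_times` and the burst times are made up, and all processes are assumed to arrive at time 0 in the order listed.

```python
def fcfs_waiting_times(burst_times):
    """Return each process's waiting time when served strictly in arrival order."""
    waiting = []
    elapsed = 0
    for burst in burst_times:
        waiting.append(elapsed)  # a process waits for everything ahead of it
        elapsed += burst
    return waiting


# One long process (24 time units) ahead of two short ones (3 units each).
long_first = fcfs_waiting_times([24, 3, 3])   # [0, 24, 27]
short_first = fcfs_waiting_times([3, 3, 24])  # [0, 3, 6]
```

With the long process at the head of the queue, the average waiting time is 17 time units; with the short processes first, it drops to 3. FCFS itself never reorders the queue, so it cannot avoid the first case.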

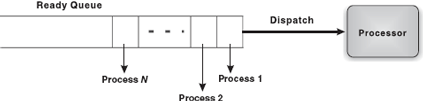

- Round Robin: Round robin scheduling was designed keeping in mind the limitations of the FCFS scheduling procedure. This procedure falls under preemptive scheduling, in which a process is selected for execution from the ready queue in FIFO sequence. However, the process is executed only for a fixed period known as a time slice or quantum, after which it is interrupted and returned to the end of the ready queue (see Figure 5.6). In the round robin procedure, processes are allocated CPU time on a turn basis.

Figure 5.6 Round Robin Procedure
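
A minimal simulation of the round robin procedure is sketched below. The function name `round_robin`, the burst times and the quantum of 4 time units are assumptions chosen for illustration; all processes are assumed to be in the ready queue at time 0, and the cost of switching between processes is ignored.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return a dict mapping process index to the time at which it completes."""
    queue = deque(enumerate(burst_times))  # (process index, remaining time)
    clock = 0
    completion = {}
    while queue:
        pid, remaining = queue.popleft()
        run = min(quantum, remaining)
        clock += run
        if remaining > run:
            # Quantum expired: the process returns to the end of the ready queue.
            queue.append((pid, remaining - run))
        else:
            completion[pid] = clock
    return completion


result = round_robin([24, 3, 3], quantum=4)  # {1: 7, 2: 10, 0: 30}
```

Here the two short processes finish at times 7 and 10 even though a 24-unit process entered the queue ahead of them, which is the behaviour the time slice is meant to provide.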

Nowadays, it is common in OSs for processes to be treated according to priority. This may involve a number of different queues and scheduling mechanisms, with priority based on previous process activity, for example, the time required by the process for execution or how long it has been since it was last executed by the processor.

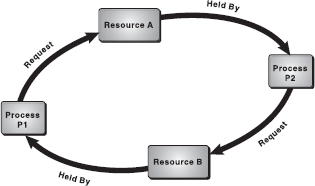

Deadlock: In a multiprogramming environment, several processes may compete for a limited number of resources. A process requests the required resource and, if it is not available, enters the waiting state and remains there until it acquires the resource. There might be a situation in which the process has to wait endlessly because the requested resource is held by another waiting process. This type of situation is known as deadlock. To illustrate the deadlock situation, consider a system with two resources (say, a printer and a disk drive) and two processes, P1 and P2, running simultaneously. During execution, P1 requests the printer and P2 requests the disk drive. As the requested resources are available, the requests of both P1 and P2 are granted and the desired resources are allocated to them. Further, P1 requests the disk drive held by P2, and P2 requests the printer held by P1. Here, both processes will enter the waiting state. Since each process is waiting for the release of the resource held by the other, they will remain in the waiting state forever, thus producing a deadlock (see Figure 5.7).

Figure 5.7 Deadlock

A deadlock situation arises if the following four conditions hold simultaneously on the system:

- Mutual Exclusion: Only one process can use a resource at a time. If another process requests for the resource, the requesting process has to wait until the requested resource is released.

- Hold and Wait: In this situation, a process might be holding some resource while waiting for additional resource, which is currently being held by another process.

- No Preemption: Resources cannot be preempted, that is, resources cannot be forcibly removed from a process. A resource can only be released voluntarily by the holding process after that process has completed its task.

- Circular Wait: This situation may arise when a set of processes waiting for allocation of resources held by other processes forms a circular chain in which each process is waiting for the resource held by its successor process in the chain.
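
The circular wait condition can be checked mechanically if the system records which process is waiting for which. The sketch below assumes a hypothetical "wait-for" mapping from each process to the processes holding the resources it needs, and looks for a cycle using a depth-first search; the function name `has_circular_wait` is illustrative.

```python
def has_circular_wait(waits_for):
    """Return True if the wait-for mapping contains a circular chain."""
    visiting = set()  # processes on the chain currently being followed
    done = set()      # processes already shown not to lead into a cycle

    def visit(process):
        if process in done:
            return False
        if process in visiting:
            return True  # we came back to a process already on the chain
        visiting.add(process)
        for holder in waits_for.get(process, ()):
            if visit(holder):
                return True
        visiting.remove(process)
        done.add(process)
        return False

    return any(visit(p) for p in list(waits_for))


# P1 waits for the disk drive held by P2; P2 waits for the printer held by P1.
deadlocked = has_circular_wait({"P1": ["P2"], "P2": ["P1"]})  # True
# P1 waits for P2, but P2 is not waiting for anything.
safe = has_circular_wait({"P1": ["P2"], "P2": []})            # False
```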

To ensure that deadlocks never occur, the system can use either a deadlock-prevention or a deadlock-avoidance scheme.

- Deadlock Prevention: Deadlock can occur only when all the four deadlock-causing conditions hold true. Hence, the system should ensure that at least one of the four deadlock-causing conditions would not hold true so that deadlock can be prevented.

- Deadlock Avoidance: Additional information concerning which resources a process will require and use during its lifetime should be provided to the OS beforehand. For example, in a system with one CD drive and a printer, process P might request first for the CD drive and later for the printer, before releasing both resources. On the other hand, process Q might request first for the printer and the CD drive later. With this knowledge in advance, the OS will never allow allocation of a resource to a process if it leads to a deadlock, thereby avoiding the deadlock.
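
One common way to make a deadlock-causing condition fail is to break circular wait by requiring every process to acquire resources in the same fixed order. The following sketch illustrates this prevention technique with processes P and Q from the example above; it is an assumption-laden illustration, not a description of any particular OS. The names `RESOURCES`, `RANK`, `use_resources` and `run_p_and_q` are hypothetical, and Python threads stand in for processes.

```python
import threading

# Hypothetical resources. RANK fixes one global acquisition order for everyone.
RESOURCES = {"cd_drive": threading.Lock(), "printer": threading.Lock()}
RANK = {"cd_drive": 0, "printer": 1}


def use_resources(wanted, log, name):
    """Acquire the wanted resources in rank order, use them, then release them."""
    ordered = sorted(wanted, key=lambda r: RANK[r])
    for resource in ordered:
        RESOURCES[resource].acquire()
    try:
        log.append(name)  # stands in for real work with both devices
    finally:
        for resource in reversed(ordered):
            RESOURCES[resource].release()


def run_p_and_q():
    """P asks for CD drive then printer; Q asks for printer then CD drive."""
    log = []
    p = threading.Thread(target=use_resources, args=(["cd_drive", "printer"], log, "P"))
    q = threading.Thread(target=use_resources, args=(["printer", "cd_drive"], log, "Q"))
    p.start()
    q.start()
    p.join()
    q.join()
    return sorted(log)
```

Although Q names the printer first, it still locks the CD drive first, so P and Q can never each hold one resource while waiting for the other.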

5.5.2 Memory Management

In addition to managing processes, the OS also manages the primary memory of the computer. The part of the OS that handles this job is called memory manager. Since every process must have some amount of primary memory to execute, the performance of the memory manager is crucial to the performance of the entire system. As the memory is central to the operation of any modern OS, its proper use can make a huge difference. The memory manager is responsible for allocating the main memory to processes and for assisting the programmer in loading and storing the contents of the main memory. Managing the main memory, sharing, and minimizing memory access time are the basic goals of the memory manager. The major tasks accomplished by the memory manager so that all the processes function in harmony, are as follows:

- Relocation: Each process must have enough memory to execute.

- Protection and Sharing: A process should not run into another process's memory space.

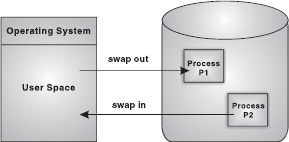

Relocation: When a process is to be executed, it has to be loaded from the secondary storage (like a hard disk) to the main memory (RAM). This is called process loading. Since the main memory is limited and other processes also need it for their execution, the OS may move a process out of the main memory to the disk and bring another process in; this is called swapping (see Figure 5.8). Once a process is “swapped out”, it is uncertain when it will be “swapped in” because of the number of processes running concurrently.

Figure 5.8 Process Swapping

Normally, when the process is swapped back into the main memory, it will be placed back to the same memory space that it occupied previously. However, in certain cases, it is not possible to place the process at the same memory location. This is not of much importance if the process is not address-sensitive. However, if the process requires some of its data or instruction to occupy the memory with a specific address, the process needs to be relocated. It is the responsibility of the memory manager to modify the addresses used in address-sensitive instructions (that use memory addresses) of the process so that it can execute correctly from the assigned area of memory.
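
In its simplest form, the address adjustment described above amounts to shifting every address-sensitive value by the distance the process was moved. The sketch below assumes the process occupies one contiguous region and that the addresses needing adjustment are already known; the function name `relocate` and the sample addresses are hypothetical.

```python
def relocate(addresses, old_base, new_base):
    """Shift absolute addresses by the distance the process was moved in memory."""
    shift = new_base - old_base
    return [address + shift for address in addresses]


# A process originally loaded at address 1000 is swapped back in at address 5000.
new_addresses = relocate([1000, 1040, 1200], old_base=1000, new_base=5000)
# new_addresses is [5000, 5040, 5200]
```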

Protection and Sharing: In multiprogrammed systems, as a number of processes may reside in the main memory at the same time, there is a possibility that a user program, during execution, may access a memory location allocated either to other user processes or to the OS. It is the responsibility of the memory manager to protect the OS from being accessed by other processes and the processes from one another. At the same time, the memory protection program should be flexible enough to allow concurrent processes to share the same portion of the main memory. For example, consider a program that initiates different processes. If the memory manager allocates the same portion of memory to all the processes instead of different memory allocations to different processes, a lot of memory is saved. Therefore, the memory protection routine of the OS should allow controlled sharing of the memory among different processes without letting them breach the protection criteria. If a process attempts to modify the contents of memory locations that do not belong to it, the memory protection routine intervenes and usually terminates the program.

Memory Allocation: In uniprogramming systems, where only one process runs at a time, memory management is very simple. The process to be executed is loaded into the part of memory space that is unused. Early MS-DOS systems supported uniprogramming. The main challenge of efficiently managing memory comes when a system has multiple processes running at the same time. In such a case, the memory manager can allocate a portion of primary memory to each process for its own use. However, the memory manager must keep track of the running processes along with the memory locations occupied by them, and must also determine how to allocate and de-allocate available memory when new processes are created and old processes have finished their execution, respectively.

While different strategies are used to allocate space to processes competing for memory, three of the most popular are as follows:

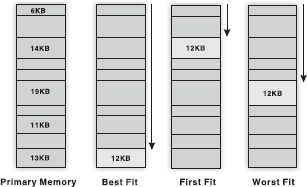

- Best Fit: In this case, the memory manager places a process in the smallest block of unallocated memory in which it will fit. For example, a process requests 12 KB of memory and the memory manager currently has a list of unallocated blocks of 6 KB, 14 KB, 19 KB, 11 KB and 13 KB blocks. The best fit strategy will allocate 12 KB of the 13 KB block to the process.

- First Fit: The memory manager places the process in the first unallocated block that is large enough to accommodate the process. Using the same example to fulfil the 12 KB request, first fit will allocate 12 KB of the 14 KB block to the process.

- Worst Fit: The memory manager places a process in the largest block of unallocated memory available. To furnish the 12 KB request again, worst fit will allocate 12 KB of the 19 KB block to the process, leaving a 7 KB block for future use.
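
The three strategies can be compared on the example used above. In this sketch the free list is simply a Python list of block sizes in KB, and each function returns the size of the block it would choose (or `None` if no block is large enough); the function names are illustrative and no memory is actually allocated.

```python
def best_fit(blocks, size):
    """Choose the smallest free block that can hold the request."""
    candidates = [block for block in blocks if block >= size]
    return min(candidates) if candidates else None


def first_fit(blocks, size):
    """Choose the first free block in the list that can hold the request."""
    for block in blocks:
        if block >= size:
            return block
    return None


def worst_fit(blocks, size):
    """Choose the largest free block, provided it can hold the request."""
    candidates = [block for block in blocks if block >= size]
    return max(candidates) if candidates else None


free_blocks = [6, 14, 19, 11, 13]  # unallocated block sizes in KB
request = 12                       # requested size in KB

chosen = (best_fit(free_blocks, request),
          first_fit(free_blocks, request),
          worst_fit(free_blocks, request))  # (13, 14, 19)
```

The leftover space is 1 KB for best fit, 2 KB for first fit and 7 KB for worst fit, matching the figures given in the text.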

Figure 5.9 illustrates that in the best fit and first fit strategies, the allocation of memory results in the creation of a tiny fragment of unallocated memory. Since the amount of memory left is small, no new processes can be loaded there. This splitting of primary memory into small, unusable segments as memory is allocated to and de-allocated from processes is known as fragmentation. The worst fit strategy attempts to reduce the problem of fragmentation by allocating the largest free blocks to new processes, so that the leftover pieces remain large enough to be useful. Even so, under any of these strategies a considerable amount of space can end up unused in the form of tiny fragments. To overcome this problem, the concept of paging was introduced.

Figure 5.9 Strategies for Memory Allocation

THINGS TO REMEMBER

Physical and Logical Address

Every byte in memory has a specific address known as physical address. Whenever a program is brought into the main memory for execution, it occupies certain memory locations. The set of all physical addresses used by the program is known as its physical address space. However, before execution, a program is compiled to run starting from some fixed address and accordingly all the variables and procedures used in the program are assigned some specific address known as logical address. The set of all logical addresses used by the program is known as its logical address space.

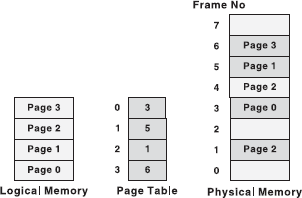

Paging: Paging is a memory management scheme that allows the processes to be stored non-contiguously in the memory. The memory is divided into fixed-size chunks called page frames. The OS breaks the program's address space (the collection of addresses used by the program) into fixed-size chunks called pages, which are of the same size as the page frames. A typical page size is 4 KB. However, some systems support even larger page sizes such as 8 KB, 4 MB, etc. When a process is to be executed, its pages are loaded into unallocated page frames (not necessarily contiguous).

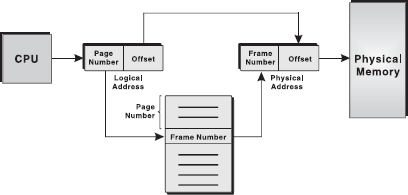

Each address generated by the CPU (that is, logical address) is divided into two parts: a page number (high-order bits) and a page offset or displacement (low-order bits). Since the size of the logical address space is a power of 2, the page size is always chosen as a power of 2 so that the logical address can be converted easily into a page number and a page offset. To map the logical addresses to physical addresses in memory, a mapping table called the page table is used. The OS maintains a page table for each process to keep track of which page frame is allocated to which page. It stores the frame number allocated to each page, and the page number is used as the index to the page table. Figure 5.10 shows the logical memory, page table and physical memory.

Figure 5.10 Logical Memory, Page Table and Physical Memory

Now let us see how address translation is performed in paging. To map a given logical address to the corresponding physical address, the system first extracts the page number and the offset. The system, in addition, also checks whether the page reference is valid (that is, it exists within the logical address space of the process). If the page reference is valid, the system uses the page number to find the corresponding page frame number in the page table. That page frame number is attached to the high-order end of the page offset to form the physical address in memory. The mechanism of translation of logical address into physical address is shown in Figure 5.11.

Figure 5.11 Address Translation in Paging
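The translation steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, assuming a 4 KB page size and a page table represented as a dictionary; the names `translate`, `PAGE_SIZE` and the sample page table are hypothetical and not part of any real OS.

```python
PAGE_SIZE = 4096                           # assumed page size: 4 KB (a power of 2)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 low-order bits hold the offset


def translate(logical_address, page_table):
    """Map a logical address to a physical address using a page table."""
    page_number = logical_address >> OFFSET_BITS   # high-order bits
    offset = logical_address & (PAGE_SIZE - 1)     # low-order bits
    # Check that the page reference is valid for this process.
    if page_number not in page_table:
        raise ValueError(f"invalid page reference: page {page_number}")
    frame_number = page_table[page_number]
    # Attach the frame number to the high-order end of the offset.
    return (frame_number << OFFSET_BITS) | offset


# Hypothetical page table for one process: page number -> frame number
page_table = {0: 5, 1: 2, 2: 7}
physical_address = translate(0x1ABC, page_table)   # page 1, offset 0xABC
```

Because the page size is a power of 2, the page number and offset are obtained with a shift and a mask rather than a division, which is why such page sizes are preferred.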

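The main advantage of paging is that it minimizes the problem of fragmentation: since memory is always allocated in fixed-size units and any free frame can be allocated to a process, no unusable gaps are left between allocations. The only space wasted is the unused part of the last page of a process.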

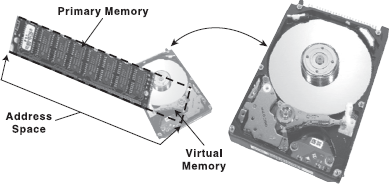

Concept of Virtual Memory: A process executes only in the main memory, which is limited in size. Today, with the advent of graphic-oriented applications like 3D video games, business applications and so on, a user requires a larger memory than the main memory for running such applications. Note that it is not essential that the whole program must be loaded in the main memory for processing as only the currently referenced page needs to be present in the memory at the time of execution. Therefore, the rest of the pages of the program can reside in a hard disk portion allocated as virtual memory and can be loaded into the main memory whenever needed. This process of swapping the pages from the virtual memory to the main memory is called page-in or swap-in. With virtual memory, the system can run programs that are actually larger than the primary memory of the system (see Figure 5.12). Virtual memory allows for very effective multiprogramming and relieves the user from the unnecessarily tight constraints of the main memory.

Figure 5.12 Virtual Memory

Virtual memory, in other words, is a way of making the main memory of a computer system appear effectively larger than it really is. The system does this by determining which parts of its memory are often sitting idle, and then moving their contents onto a disk, thereby freeing up precious RAM.

Note: In virtual memory systems, the logical address is referred to as virtual address and logical address space is referred to as virtual address space.

Page Faults: In virtual memory systems, the page table of each process stores an additional bit to differentiate the pages in the main memory from those on the hard disk. This additional bit is set to 1 if the page is in the main memory; otherwise it is set to 0. Whenever a page reference is made, the OS checks the page table to determine whether the page is in the main memory. If the referenced page is not found in the main memory, a page fault occurs and the control is passed to the page fault routine in the OS. To handle a page fault, the page fault routine first checks whether the virtual address for the desired page is valid (that is, it exists within the virtual address space of the process). If it is invalid, the routine terminates the process with an error. Otherwise, it locates a free page frame in memory and allocates it to the process, swaps the desired page into this allocated page frame, and updates the page table to indicate that the page is in memory.

While handling a page fault, there is a possibility that the memory is full and no free frame is available for allocation. In that case, the OS has to evict a page from the memory to make space for the desired page to be swapped in. To decide which page frame is to be replaced with the new page, the OS must track the usage information for all pages. In this way, the OS can determine which pages are being actively used and which are not (and therefore, can be removed from the main memory). Often the “least recently used” page (the page that has gone the longest time without being referenced) is selected.
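The following Python sketch illustrates page fault handling together with the policy of evicting the page that has gone the longest time without being referenced (commonly called least recently used). It is a simplified model under stated assumptions: the class `PagedMemory` and its attributes are hypothetical, disk transfers are not simulated, and membership in the `resident` table stands in for the bit that marks a page as being in the main memory.

```python
from collections import OrderedDict


class PagedMemory:
    """Toy model of demand paging with least-recently-used replacement."""

    def __init__(self, num_frames, num_pages):
        self.num_pages = num_pages                  # size of the virtual address space
        self.free_frames = list(range(num_frames))  # frames not yet allocated
        self.resident = OrderedDict()               # page -> frame, oldest use first
        self.page_faults = 0

    def access(self, page):
        """Reference a page and return the frame that holds it."""
        if not 0 <= page < self.num_pages:
            raise ValueError("invalid page reference")  # process would be terminated
        if page in self.resident:                   # page is already in main memory
            self.resident.move_to_end(page)         # mark as most recently used
            return self.resident[page]
        self.page_faults += 1                       # page fault: page is on disk
        if self.free_frames:
            frame = self.free_frames.pop(0)         # use a free frame if one exists
        else:
            # Memory is full: evict the least recently used page.
            _victim, frame = self.resident.popitem(last=False)
        self.resident[page] = frame                 # update the page table
        return frame


memory = PagedMemory(num_frames=3, num_pages=8)
for page in [0, 1, 2, 0, 3, 1, 4]:
    memory.access(page)
```

With three frames, the reference string above causes six page faults: only the second reference to page 0 finds its page already in memory.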

5.5.3 File Management

File system is one of the most visible aspects of the OS. It provides a uniform logical view of the information storage, organized in terms of files, which are mapped onto the underlying physical device like the hard disk. While the memory manager is responsible for the maintenance of the primary memory, the file manager is responsible for the maintenance of the file system. In the simplest arrangement, the file system contains a hierarchical structure of data. This file system maintains user data and metadata (the data describing the files of the user data). The hierarchical structure usually contains the metadata in the form of directories of files and sub-directories. Each file is a named collection of data stored on the disk. The file manager implements this abstraction and provides directories for organizing files. It also provides a spectrum of commands to read/write the contents of a file, to set the read/write position, to use the protection mechanism, to change the ownership, to list files in a directory, and to remove a file. The file manager provides a protection mechanism to allow users to administer how processes executing on behalf of different users can access the information contained in different files.

The file manager also provides a logical way for users to organize files in the secondary storage. To assist users, most file managers allow files to be grouped into a bundle called a directory or a folder. This allows a user to organize his or her files according to their purpose by placing related files in the same directory. By allowing directories to contain other directories, called sub-directories, a hierarchical organization can be constructed. For example, a user may create a directory called games that contains sub-directories called cricket, football, golf, rugby and tennis (see Figure 5.13). Within each of these sub-directories are files that fall within that particular category. A sequence of directories within directories is called a directory path.

Figure 5.13 File System
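The hierarchical organization described above can be modelled as nested dictionaries, where a dictionary stands for a directory and any other value stands for a file. The sketch below is illustrative only: the function `list_paths` and the file names are hypothetical, and a real file manager stores this structure on disk rather than in memory.

```python
def list_paths(directory, prefix=""):
    """Return the directory path of every file in a nested-dictionary tree."""
    paths = []
    for name, entry in sorted(directory.items()):
        path = f"{prefix}/{name}"
        if isinstance(entry, dict):      # a sub-directory: descend into it
            paths.extend(list_paths(entry, path))
        else:                            # a file: record its full path
            paths.append(path)
    return paths


# Hypothetical contents for the games directory used in the example above
file_system = {
    "games": {
        "cricket": {"scores.txt": "file data"},
        "football": {"teams.txt": "file data"},
        "golf": {},
        "rugby": {},
        "tennis": {"rankings.txt": "file data"},
    }
}

paths = list_paths(file_system)
```

Each returned string, such as `/games/cricket/scores.txt`, is a directory path: the sequence of directories that leads from the top of the hierarchy to the file.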

5.5.4 Device Management

Device management in an OS refers to the process of managing various devices connected to the computer. The device manager manages the hardware resources and provides an interface to hardware for application programs. A device communicates with the computer system by sending signals over a cable. The device communicates with the machine through a connection point called a port. The communication using a port is done through rigidly defined protocols, which specify, for example, when to send the data and when to stop. These ports are, in turn, connected to a bus (a set of wires) which one or more devices use to communicate with the system. The OS communicates with the hardware with the help of standard software, called device drivers, provided by the hardware vendor. A device driver works as a translator between the electrical signals from the hardware and the application programs of the OS. Drivers take data that the OS has defined as a file and translate them into streams of bits placed in specific locations on storage devices. There are differences in the way that driver programs function, but most of them run when the device is required, and function much the same as any other process. The OS will frequently assign a high priority to drivers so that the hardware resources can be released and set free for further use.

Broadly, managing input and output is a matter of managing queues and buffers. A buffer is a temporary storage area that takes a stream of bits from a device such as a keyboard or a serial communication port. Buffers hold the bits and then release them to the CPU at a convenient rate so that the CPU can act on them. This task is important when a number of processes are running and taking up the processor's time. The OS instructs a buffer to continue taking the input from the device. In addition, it also instructs the buffer to stop sending data back to the CPU if the process using the input is suspended. When the process requiring input is made active once again, the OS will command the buffer to send data again. This arrangement allows a device such as a keyboard to keep accepting input from the user at full speed even when the processor is busy with other work.

Spooling: SPOOL is an acronym for simultaneous peripheral operation on-line. Spooling refers to storing jobs in a buffer so that CPU can be efficiently utilized. Spooling is useful because devices access data at different rates. The buffer provides a waiting station where data can rest while the slower device catches up. The most common spooling application is print spooling. In print spooling, documents are loaded into a buffer, and then the printer pulls them off from the buffer at its own rate. Meanwhile, a user can perform other operations on the computer while the printing takes place in the background. Spooling also lets a user place a number of print jobs on a queue instead of waiting for each one to finish before specifying the next one. The OS manages all requests to read or write data from the hard disk through spooling (see Figure 5.14).

Figure 5.14 Spooling
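Print spooling can be pictured as a first-in-first-out queue sitting between the user and the printer. The sketch below is a minimal model, assuming a single printer and documents represented as strings; the class `PrintSpooler` and its method names are hypothetical.

```python
from collections import deque


class PrintSpooler:
    """Toy print spooler: jobs wait in a buffer until the printer is ready."""

    def __init__(self):
        self.queue = deque()     # the spool buffer, oldest job at the left
        self.printed = []        # record of documents already printed

    def submit(self, document):
        """Called by the user; returns at once so other work can continue."""
        self.queue.append(document)

    def print_next(self):
        """Called when the printer is ready; takes the oldest waiting job."""
        if not self.queue:
            return None          # nothing is waiting in the buffer
        document = self.queue.popleft()
        self.printed.append(document)
        return document


spooler = PrintSpooler()
for name in ["report.doc", "letter.doc", "invoice.doc"]:
    spooler.submit(name)         # three jobs are queued without waiting
first = spooler.print_next()     # the printer pulls jobs at its own rate
```

The user submits all three jobs immediately, while the slower printer removes them one at a time in the order in which they were queued.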

5.5.5 Security Management

Security in terms of a computer system covers every aspect of its protection in case of a catastrophic event, corruption of data, loss of confidentiality and so on. Security requires not only ample protection within the system, but also from the external environment in which the system operates. In this section, we will be covering security in terms of internal protection, which is one of the most important functions of the OS. This involves protecting information residing in the system from unauthorized access. Various security techniques employed by the OS to secure the information are user authentication and backup of data.

User Authentication: The process of authenticating users can be based on a user's possession (such as a key or card), user information (such as a username and password) or user attributes (such as fingerprints and signature). Of these techniques, user information is often the first and most significant line of defence in a multi-user system. After the user identifies himself by a username, he is prompted for a password. If the password supplied by the user matches the password stored in the system, the system authenticates the user and gives him access to the system. A password can also be associated with other resources (files, directories and so on), so that the user is prompted for the password whenever such a resource is requested. Unfortunately, passwords can often be guessed, illegally transferred, or exposed. To avoid such situations, the following points should be kept in mind:

- Password should be at least six characters in length.

- The system should keep track of any event about any attempt to break the password.

- The system should allow limited number of attempts for submitting a password on a particular system.

- Password based on dictionary words should be discouraged by the system. Alphanumeric passwords, such as PASS011, should be used.
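The points listed above can be expressed as simple checks. The following Python sketch is illustrative only: the word list, the limit of three attempts and the function names are assumptions made for the example, and the stored password is compared as plain text purely for simplicity (real systems store and compare a protected form of the password).

```python
DICTIONARY_WORDS = {"password", "welcome", "computer"}  # hypothetical word list
MAX_ATTEMPTS = 3                                        # assumed attempt limit


def is_acceptable_password(password):
    """Check length, dictionary words and alphanumeric content."""
    if len(password) < 6:                        # at least six characters
        return False
    if password.lower() in DICTIONARY_WORDS:     # discourage dictionary words
        return False
    has_letter = any(ch.isalpha() for ch in password)
    has_digit = any(ch.isdigit() for ch in password)
    return has_letter and has_digit              # require letters and digits


def login(stored_password, attempts):
    """Return (authenticated, failed_attempts), allowing MAX_ATTEMPTS tries."""
    failed = []
    for attempt in attempts[:MAX_ATTEMPTS]:      # ignore tries beyond the limit
        if attempt == stored_password:
            return True, failed
        failed.append(attempt)                   # keep track of each failure
    return False, failed


ok = is_acceptable_password("PASS011")
result = login("PASS011", ["guess1", "guess2", "PASS011"])
```

Here `login` both limits the number of attempts and keeps a record of the failed ones, corresponding to the second and third points in the list.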

Backup of Data: No matter what kind of information a system contains, backup of data is of utmost importance for its users. Backup or archiving is an important issue for a user and especially for business organizations. Typically, a computer system uses hard drives for online data storage. These drives may sometimes fail, or can be damaged in case of a catastrophic event, so care must be taken to ensure that the data is not lost. To ensure this, the OS should provide a feature of backing up of data, say from a disk to another storage device such as a floppy disk or an optical disk. The purpose of keeping backups is to restore individual files or complete file system in case of data loss. Recovery from the loss of an individual file, or of an entire disk, may be done from backup. OSs usually provide some system software that is used for taking backups of the data.

5.5.6 User Interface

OSs organize applications so that users can easily access them, use them and store application data. When an application is opened, the OS lets the application provide the majority of the user interface. The OS still has the responsibility of providing access to the hardware for whatever the application needs. If the program cannot function properly, the OS again takes control, stops the application, and displays an error message. An effective interface of an OS does not concern the user with the internal workings of the system. A good user interface should attempt to anticipate the user's requirements and assist him to gather information and use necessary tools. Common interfaces provided by different OSs can be categorized as command line interface (CLI) and graphical user interface (GUI).

Command Line Interface: In the early days of computing, OSs provided the user with the facility of entering commands via an interactive terminal. This was the only means of communication between a program and its user, based solely on textual input and output. Commands were used to initiate programs, applications and so on. A user had to learn many commands for proper operation of the system (see Figure 5.15).

Figure 5.15 Command Line Interface

Graphical User Interface: With the development in chip designing technology, computer hardware became quicker and cheaper, which led to the birth of GUI-based OS. These OSs provide users with pictures rather than just characters to interact with the machine. The OS displays icons, buttons, dialog boxes, etc., on the screen (see Figure 5.16). The user sends instructions by moving a pointer on the screen (generally mouse) and selecting certain objects by pressing buttons on the mouse while the mouse pointer is pointing at them.

Figure 5.16 Graphical User Interface

Let Us Summarize

- OS is a type of software that controls and coordinates the operation of the various types of devices in a computer system. The two objectives of an OS are controlling the computer's hardware and providing an interface between the user and the machine.

- OS has six major roles to perform: process management, memory management, file management, device management, security management and providing user interface.

- A process or task is a portion of a program in some stage of execution. A program can consist of several processes, each working on its own. It may be in one of a number of different possible states, such as new, running, waiting, ready or terminated.

- A thread is the simplest part of a process. To enhance efficiency, a process can consist of several threads, each of which executes separately.

- In the uniprogramming system, only one process can exist at a time while in the multiprogramming system, multiple processes can be initiated at a time.

- Deciding which process should run next is called process scheduling. Process scheduling is necessary, so that all programs are executed and run fairly.

- Preemptive switching means that a running process will be interrupted (forced to give up) and the processor is given to another waiting process.

- The process of switching from one process to another is called context switching. A period that a process runs for before being context switched is called a time slice or quantum period.

- In first-come-first-served scheduling, the processes are executed in the order of their arrival in the ready queue, which means the process that enters the ready queue first, gets the CPU first. New processes are placed at the end of the queue.

- Round robin scheduling employs a technique called time slicing. When the time slice is up, the running process is interrupted and placed at the rear of the queue. The next process at the top of the queue is then started.

- A process is said to be in a state of deadlock when it is waiting for an event, which will never occur. It can occur if four conditions prevail simultaneously; they are mutual-exclusion, circular wait, hold and wait, and no preemption.

- The part of the OS that manages the primary memory of the computer is called the memory manager.

- Paging is a memory management scheme that allows the processes to be stored non-contiguously in memory.

- Virtual memory is a way of making the main memory of a computer system appear effectively larger than it really is.

- The system that an OS uses to organize and keep track of files is known as the file management system.

- A program that controls a device is called the device driver. OS's device manager uses this program to let a user use the specific device.

- A user interface is a set of commands or menus through which a user communicates with the system.

Exercises

Fill in the Blanks

- .........is a program which acts as a mediator between the user and the hardware.

- A table where many pieces of information associated with a specific process, that is, program counter, process state, CPU-scheduling information and so on are stored, is known as...........

- ...........is also called a lightweight process.

- ...........refers to storing the jobs in the buffer so that the CPU can be efficiently utilized.

- Two main user interfaces, which an OS has, are .......... and ..........

- Four main conditions that cause deadlock to occur are ..........., ..........., ........... and ..........

- A process is said to be in a state of ......... when it is waiting for an event that will never occur.

- The major tasks accomplished by the memory manager so that all the processes run in a harmonious manner are ........... and ............

- RTOS stands for ............

- First-come-first-served and round robin are types of ............

Multiple-choice Questions

- The OS that is self-contained in a device and resident in the ROM is ..........

- Batch Processing System

- Real-time OS

- Embedded OS

- Multi-processor OS

- The example of non-preemptive scheduling is ............

- First-Come-First-Served

- Round Robin

- Last-In-First-Out

- Shortest-Job-First

- An interface where facility is provided for entering commands is ...........

- Menu-driven

- Command-driven

- Graphic-driven

- None of these

- The OS that allows only one program to run at a time is .........

- Batch Processing

- Embedded

- Real-time

- Multitasking

- The substitution made by the OS between the processes to allocate space is ...........

- Swapping

- Deadlock

- Fragmentation

- Paging

- The memory management scheme that allows the processes to be stored non-contiguously in memory is ..........

- Paging

- Spooling

- Swapping

- None of these

- The fit policy of a memory manager to place a process in the largest block of unallocated memory is ..........

- First Fit

- Best Fit

- Worst Fit

- Bad Fit

- With which memory can the system run programs that are actually larger than the primary memory of the system?

- Cache Memory

- Primary Memory

- Virtual Memory

- None of these

- What allows the user to run two or more applications on the same computer so that he/she can move from one to the other without closing the application?

- Virtual Storage

- Multi-processing

- Multi-tasking

- Multiprogramming

- The scheduler that selects a process from the ready queue and allocates CPU to it is ..........

- Short-term

- Long-term

- Medium-term

- All of these

State True or False

- OS is a hardware component.

- Microsoft Windows XP is a GUI-based OS.

- A document printing process uses spooling.

- Virtual memory allows for very effective multiprogramming.

- Another term for time-sharing is multitasking.

- Railway reservation systems use batch-processing OSs.

- Round robin is a non-preemptive scheduling technique.

- Best-fit, first-fit and worst-fit are memory allocation techniques.

- The directory system of the Microsoft Windows operating system is hierarchical.

- The job of splitting the primary memory into segments as the memory is allocated and de-allocated to the processes is known as fragmentation.

Descriptive Questions

- What is an OS? Explain various types of OS.

- Define a process. Diagrammatically explain the life cycle of a process.

- Discuss various types of interfaces in the OS.

- What is a deadlock? How can it be handled?

- Explain how memory protection and process allocation is done by an OS.

- Write down the differences between:

- Uniprogramming and Multiprogramming

- Preemptive and Non-preemptive Scheduling

- Deadlock Avoidance and Deadlock Prevention

ANSWERS

Fill in the Blanks

- OS

- Process table

- Thread

- Spooling

- GUI, CLI

- Circular wait, Mutual exclusion, No preemption, Hold and wait

- Deadlock

- Relocation, Protection and sharing

- Real-time OS

- Process scheduling

Multiple-choice Questions

- (c)

- (a)

- (b)

- (a)

- (a)

- (a)

- (c)

- (c)

- (c)

- (a)

State True or False

- False

- True

- True

- True

- False

- False

- False

- True

- True

- True