Honestly, you already know almost everything about Terraform. Creating networks in the cloud? Easy. Starting a new virtual server and provisioning it with your favorite configuration management tool? A piece of cake. You know how to write a beautiful template, refactor it into modules, and configure it in any of the many ways Terraform offers.

Perhaps you have even moved your whole infrastructure to Terraform. But then you realize that creating infrastructure with Terraform is just the beginning: your infrastructure is there to stay, and it needs to be updated continuously as your business requirements change.

There are many challenges in managing the resources you already have. For example, we need to figure out how to make changes without interrupting the service. In this chapter, we will look at the following topics, among others:

- How to scale the service up and down with Terraform

- How to perform an in-place upgrade of a server and how to do rolling updates

- How to perform blue-green deployments

- How to scale automatically with AWS Auto Scaling groups

- What Immutable Infrastructure is and how a tool named Packer helps to achieve it

Before proceeding with this chapter, destroy all your previously created resources with the terraform destroy command. We are starting from a clean state again.

So far, we have created only one EC2 instance for the web application MightyTrousers. As the popularity of the app increases, a single server can't handle the load properly anymore. We could scale vertically by increasing the instance size, but that would still leave us with a single server handling all the critical traffic. In the cloud, you should assume that absolutely every machine you have can be gone at any moment. You should be prepared for worst-case scenarios: a Distributed Denial of Service (DDoS) attack bringing your cluster to its knees, an earthquake destroying a whole data center, an internal AWS outage, and many others.

Thus, not only to scale the infrastructure but also to make the application highly available, we should increase the number of instances we have. And it's not only about how many there are but also about where each of them is located: keeping 20 instances in a single place still means that the outage of one data center puts you out of business, while 10 instances in each of two data centers in two different geographical locations will keep your software running even in a worst-case scenario for one of the data centers.

Terraform, being a tool based on a declarative DSL, doesn't have loops as part of its language. If we did this in a regular programming language, let's say Ruby, we would write something like the following:

5.times do create ec2_instance end

In Chef, which combines both declarative and imperative approaches, this would be a reasonable (though not on every occasion) thing to do. However, in Terraform, we don't and can't tell the tool what to do. We tell Terraform what should exist as part of our infrastructure, and the specifics of creation are handled by the tool itself. That's why, instead of iterating over the same resource, Terraform gives us an extra property that defines how many resources of the same kind should exist. It's named count.

Using it is simple: for every resource that needs to be created more than once, you just specify the count parameter to be equal to the number you desire:

resource "aws_instance" "my-app" {

...

count = 5

}
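As a rough illustration of count on a complete resource, the sketch below declares three identical instances. The ami_id variable, the t2.micro instance type, and the Name tag value are assumptions made for this example and are not taken from the MightyTrousers template.

```hcl
# Hypothetical input: the AMI to launch (not part of the chapter's template).
variable "ami_id" {}

resource "aws_instance" "my-app" {
  # Terraform will keep exactly three copies of this resource.
  count = 3

  ami           = "${var.ami_id}"
  instance_type = "t2.micro" # assumed size, for illustration only

  tags {
    Name = "my-app"
  }
}
```

Lowering count later, say from 3 to 2, makes Terraform plan the destruction of the highest-indexed instance, which is what makes this parameter a simple way to scale both up and down.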

One problem with count is that it cannot be used with modules. In our case, it's not really a big problem though; we want to multiply the number of instances created in the module, while keeping only one security group for all of them. Still, as we don't want to hardcode any values, we need a way to pass the required number of instances to the module.

As we are already well familiar with variables, let's just add one more to the application module in the modules/application/variables.tf file:

...

variable "instance_count" { default = 0 }

Let's use it straight away:

resource "aws_instance" "app-server" {

ami = "${data.aws_ami.app-ami.id}"

instance_type = "${lookup(var.instance_type, var.environment)}"

subnet_id = "${var.subnet_id}"

vpc_security_group_ids = ["${concat(var.extra_sgs, aws_security_group.allow_http.*.id)}"]

user_data = "${data.template_file.user_data.rendered}"

key_name = "${var.keypair}"

connection {

user = "centos"

}

provisioner "remote-exec" {

inline = [

"sudo rpm -ivh http://yum.puppetlabs.com/puppetlabs-release-el-7.noarch.rpm",

"sudo yum install puppet -y",

]

}

tags {

Name = "${var.name}"

}

count = "${var.instance_count}"

}

Now, pass the variable to the module:

module "mighty_trousers" {

source = "./modules/application"

...

instance_count = 2

}

We can try to apply our template now, but trust me, it won't succeed:

$> terraform plan

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be persisted to local or remote state storage.

module.mighty_trousers.data.template_file.user_data: Refreshing state...

module.mighty_trousers.data.aws_ami.app-ami: Refreshing state...

Error running plan: 1 error(s) occurred:

* Resource 'aws_instance.app-server' does not have attribute 'id' for variable 'aws_instance.app-server.id'

The problem here is that we reference the aws_instance resource in the null_resource provisioner defined in the previous chapter:

resource "null_resource" "app_server_provisioner" {

# ....

connection {

user = "centos"

host = "${aws_instance.app-server.public_ip}"

}

# ....

}

We configured this provisioner when we had only one EC2 instance, but now, as Terraform creates two of them, it's not valid anymore. It's not the only occurrence of this mistake: the public_ip output also assumes that there is just one instance.

There are two ways to fix it:

- Take only the first instance created

- Get values from all instances

Neither option fixes null_resource by itself, so for the sake of demonstration, comment it out for now. We will fix it in a bit.

Now, if we want to access one particular instance from the list, we can reference it as follows: aws_instance.app-server.$NUMBER.public_ip. Try it out:

output "public_ip" {

value = "${aws_instance.app-server.0.public_ip}"

}

The terraform plan command now completes successfully. But, well, that's kind of useless, isn't it? We need all addresses to be returned from the module. That's where the join() function comes in handy: it allows you to build a single string from the values of all elements in a list. The following example speaks for itself:

output "public_ip" {

value = "${join(",", aws_instance.app-server.*.public_ip)}"

}
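If the consumer of the module would rather receive a list than a comma-separated string, a possible alternative is a list output. This is only a sketch: it assumes a Terraform version with list output support (0.7 or later), and the output name public_ips is made up for this example.

```hcl
# Hypothetical additional output: one list element per instance.
output "public_ips" {
  value = ["${aws_instance.app-server.*.public_ip}"]
}
```

The joined string remains the more portable choice when the value has to be passed to something that only accepts plain strings.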

If you apply the template as it is now, you will get an almost satisfying result:

Outputs:

mighty_trousers_public_ip = 35.156.29.192,35.156.32.127

This output is useful only if it returns the IPs of servers that were properly provisioned, so let's get back to fixing the null_resource provisioner. This is how it looks now, before it works with multiple instances:

resource "null_resource" "app_server_provisioner" {

triggers {

server_id = "${aws_instance.app-server.id}"

}

connection {

user = "centos"

host = "${aws_instance.app-server.public_ip}"

}

provisioner "file" {

source = "${path.module}/setup.pp"

destination = "/tmp/setup.pp"

}

provisioner "remote-exec" {

inline = [

"sudo puppet apply /tmp/setup.pp"

]

}

}

The first thing to fix here is the triggers block. It needs to take all instances into consideration, and we can do so with the same join() function:

triggers {

server_id = "${join(",", aws_instance.app-server.*.id)}"

}

This will, of course, fix the problem with retriggering the provisioner. But it still provisions only one instance, as you can see in the following line:

host = "${aws_instance.app-server.public_ip}"

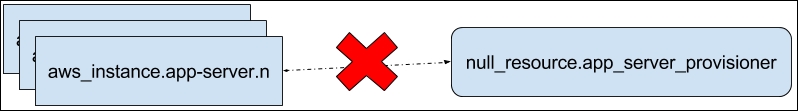

The problem becomes more complicated: we can't go further with just a single provisioner for multiple instances, and this 1-n relationship simply won't work:

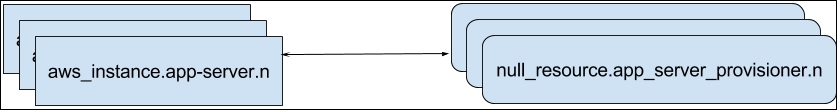

What we need rather is, something like the following:

This means that, first of all, we need to add the count parameter to null_resource as well, set to the same instance_count variable used for the aws_instance resource. The trick here is that right inside the resource configuration you can access the index of the particular instance of this resource via the ${count.index} variable. Combined with the element() function, it allows us to query attributes on another list of the same size: the list of instances. With this change, the complete null_resource block should look as follows (notice the connection block):

resource "null_resource" "app_server_provisioner" {

triggers {

server_id = "${join(",", aws_instance.app-server.*.id)}"

}

connection {

user = "centos"

host = "${element(aws_instance.app-server.*.public_ip, count.index)}"

}

provisioner "file" {

source = "${path.module}/setup.pp"

destination = "/tmp/setup.pp"

}

provisioner "remote-exec" {

inline = [

"sudo puppet apply /tmp/setup.pp"

]

}

count = "${var.instance_count}"

}

This pattern can be seen in Terraform templates quite often: binding two or more groups of resources with lookups like this. We don't need to do so here, but it is also common to do the same for the combination of the user_data attribute and the template_file data resource, in case user data needs an index as a variable.
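The user_data variant of this pattern could be sketched roughly as follows. Everything named here is an assumption for illustration: the ami_id and node_count variables, the resource names, the t2.micro instance type, and the user_data.sh.tpl file are not part of the MightyTrousers module.

```hcl
# Hypothetical inputs for this sketch.
variable "ami_id" {}

variable "node_count" {
  default = 2
}

# One rendered template per instance; the index is passed in as a template variable.
data "template_file" "node_user_data" {
  count    = "${var.node_count}"
  template = "${file("${path.module}/user_data.sh.tpl")}" # hypothetical template file

  vars {
    node_index = "${count.index}"
  }
}

resource "aws_instance" "node" {
  count         = "${var.node_count}"
  ami           = "${var.ami_id}"
  instance_type = "t2.micro" # assumed size, for illustration only

  # Pick the rendered template that has the same index as this instance.
  user_data = "${element(data.template_file.node_user_data.*.rendered, count.index)}"
}
```

Inside the template file, the value would then be available as ${node_index}. Both resources share the same count, so every instance gets exactly one matching template.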

With this new fancy count-based configuration, you might be tempted to finally apply the template. Go ahead and do so. You will notice a few things. For example, the log output for a counted resource looks as follows:

module.mighty_trousers.null_resource.app_server_provisioner.0

It looks the same in the state file as well:

"null_resource.app_server_provisioner.0": {

# ...

If you examine the body of one of the null_resource entries in the state file, you will also notice this part:

"attributes": {

"id": "9043342603318320402",

"triggers.%": "1",

"triggers.server_id": "i-85e9d839,i-86e9d83a"

},

And there is a bug right here for you to fix: every provisioner for every instance will be retriggered even if only one of the instances changes. Our triggers are wrong, and it's up to you to fix them with the element() function.
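If you want to compare your result afterwards, one possible solution is sketched below. It reuses the names from the module above and assumes that each null_resource should track only the instance with the same index. Treat it as one way to do it rather than the definitive answer.

```hcl
resource "null_resource" "app_server_provisioner" {
  count = "${var.instance_count}"

  triggers {
    # Track only the matching instance, so a change to one instance
    # re-runs only its own provisioner.
    server_id = "${element(aws_instance.app-server.*.id, count.index)}"
  }

  connection {
    user = "centos"
    host = "${element(aws_instance.app-server.*.public_ip, count.index)}"
  }

  provisioner "file" {
    source      = "${path.module}/setup.pp"
    destination = "/tmp/setup.pp"
  }

  provisioner "remote-exec" {
    inline = [
      "sudo puppet apply /tmp/setup.pp",
    ]
  }
}
```

With this change, the triggers.server_id attribute in the state file should hold a single instance ID per null_resource instead of the joined list.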

While it's nice to have two instances (we can handle twice as much traffic now), we are still far from being Highly Available (HA).