As you know, by default, Terraform will store the state file on your local disk and you have to figure out yourself how to distribute it within your team. One option you learned is to store it in the git repository: you get the workflow, you get the versioning and you even get some level of security on top. But there is also a concept of remote state provided by Terraform.

The idea is that before you start applying your templates, you configure remote storage. After that, your state file will be pulled from and pushed to that remote storage. There are 11 backends for your state provided by Terraform: Consul, S3, etcd, Atlas, and others. You will learn how to use Amazon Simple Storage Service (S3) for this purpose.

Note

Atlas is a commercial offering from HashiCorp. One part of it is named Terraform Enterprise: it combines secure remote state storage, versioning of state file changes, logs of Terraform runs, and some other features. It is well integrated with GitHub. You could use it as a ready-to-go solution, or you could keep reading this book to learn how to implement all these features yourself without spending any extra cash, and little to no time on implementation.

S3 is another AWS service. It's an object storage: you can throw into it as many files as you like. Instagram used it to store photographs, and many other companies use it for many other tasks. We will use it to store the Terraform state file. Why? Because it has many nice features, such as the following:

- Versioned buckets (more on this in a second)

- Flexible, powerful access controls

Storage on S3 is split into buckets: think of a bucket as a separate disk (though it's not a completely correct analogy). Inside buckets, you can have folders (actually, there are no real folders on S3) and objects. Each object has a key: think of it as a filename. A bucket can be versioned; this means that all versions of all objects in this bucket will be stored, and you can configure how long they are kept and how many versions remain.

Access to S3 is configured the same way as for any other AWS service: with the IAM service. It allows you to set per-object permissions for a user in your AWS account, as well as for a server role, and so on. Very powerful indeed.
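To make per-object permissions a bit more concrete, here is a minimal sketch that writes an IAM policy document allowing reads and writes of a single state object only. The bucket name `my-terraform-state` and the file name `state-policy.json` are placeholders invented for this illustration. Terraform may need further permissions in practice (for example, listing the bucket), so treat this as a starting point rather than a complete policy.

```shell
# Hypothetical names: replace them with your own bucket and state key.
BUCKET="my-terraform-state"
KEY="mighty_trousers/terraform.tfstate"

# Write an IAM policy that allows reading and writing one object only.
cat > state-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::${BUCKET}/${KEY}"
    }
  ]
}
EOF

echo "Policy written to state-policy.json"
```

You could then attach the document to a user with `aws iam put-user-policy`, passing it as `--policy-document file://state-policy.json`.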

Before using it with Terraform, we need to create a bucket, of course. You could create this bucket with Terraform itself, but that would become a bit of a chicken-and-egg problem: if Terraform creates a bucket, then where is the state file for the template that creates this bucket stored? In another bucket? Oops, infinite loop.

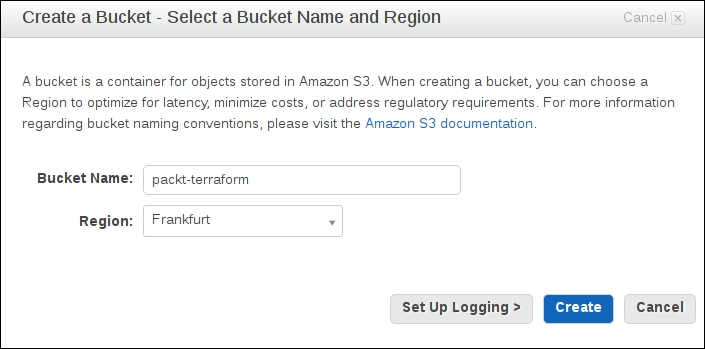

Go to AWS console, choose S3 service, and create a bucket:

Don't click on Create! Instead, click on Set up Logging first: it will allow us to audit who accesses this bucket, which changes they make, and when. You need a separate bucket for logs, so create it in advance in the same AWS region as the state bucket. Note that bucket names are unique across AWS, so you won't be able to create a packt-terraform bucket.

Use a drop of imagination and pick the name yourself.
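If you would rather script these steps than click through the console, the following is a rough sketch using the AWS CLI. The bucket names and the `state-access/` log prefix are placeholders made up for this example, and the `run` helper is only a convenience of this sketch: it prints each command instead of executing it unless you set `DRY_RUN=0`. The sketch also assumes that the log bucket already permits S3 log delivery to write into it, which it does not configure.

```shell
# Placeholder names: bucket names are globally unique, so pick your own.
STATE_BUCKET="my-terraform-state"
LOG_BUCKET="my-terraform-state-logs"
REGION="eu-central-1"

# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Access logging settings: deliver logs for the state bucket to the log bucket.
cat > logging.json <<EOF
{
  "LoggingEnabled": {
    "TargetBucket": "${LOG_BUCKET}",
    "TargetPrefix": "state-access/"
  }
}
EOF

# Create the log bucket first, then the state bucket, in the same region.
# (The LocationConstraint setting applies to regions other than us-east-1.)
for b in "$LOG_BUCKET" "$STATE_BUCKET"; do
  run aws s3api create-bucket --bucket "$b" --region "$REGION" \
    --create-bucket-configuration "LocationConstraint=$REGION"
done

# Turn on access logging for the state bucket.
run aws s3api put-bucket-logging --bucket "$STATE_BUCKET" \
  --bucket-logging-status file://logging.json
```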

After the bucket is created, click on it in the bucket list and then click Properties on the top right. You need to select Enable Versioning:

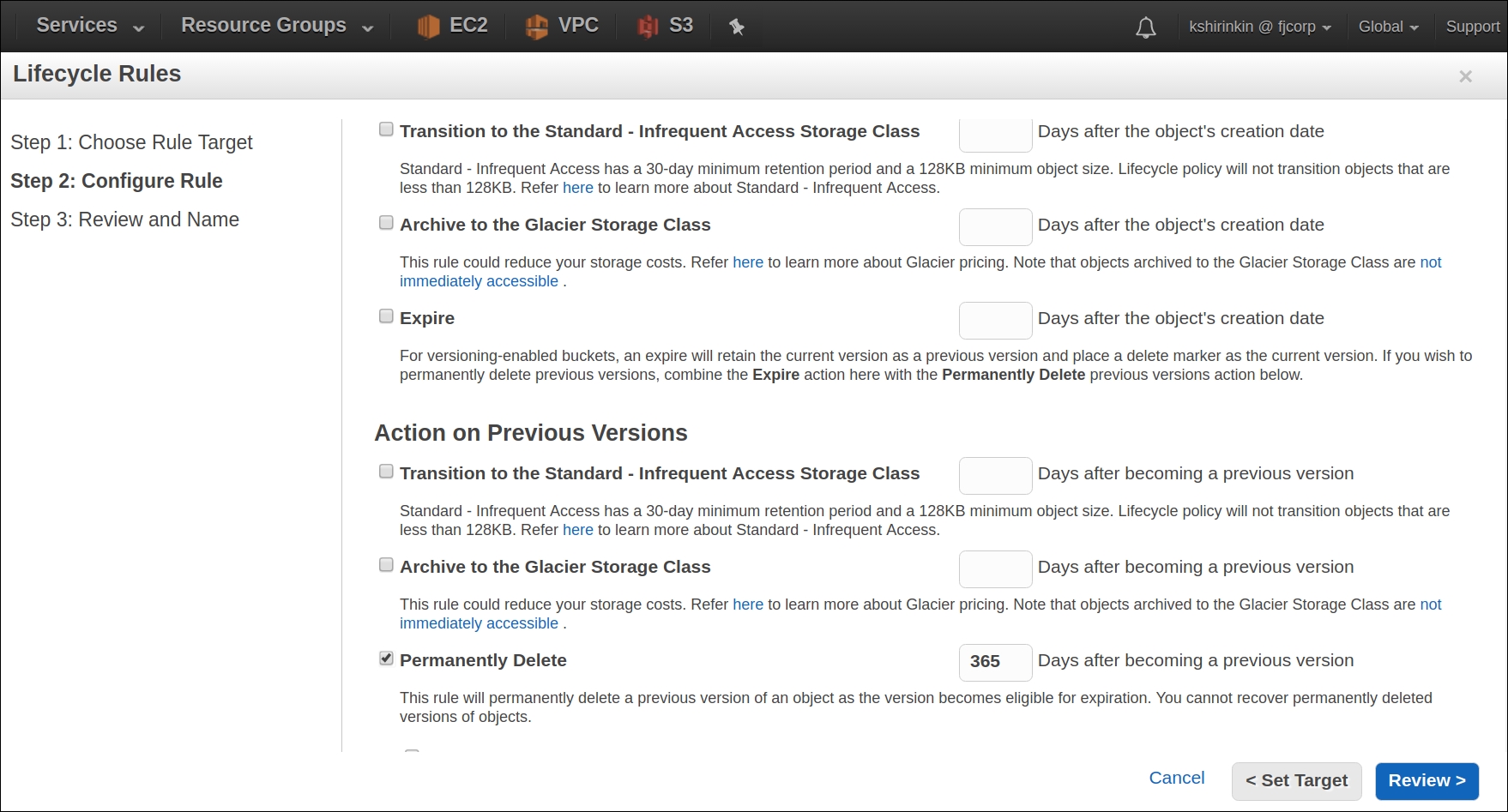

After versioning is enabled, all versions of all objects in this bucket will be stored. It's a bit wasteful to store all the versions though. You probably don't care much about some state file version from half a year ago, and you don't want to pay for storing it in S3. To solve this problem, create a new Lifecycle Rule for this bucket: the option is right under the Versioning tab in Properties. For example, I chose to remove all versions older than one month.
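If you prefer the command line, versioning and the one-month cleanup can also be sketched with the AWS CLI. The bucket name, the rule ID, and the file name `lifecycle.json` are placeholders chosen for this example, and 30 days stands in for "one month". As a precaution, the `run` helper only prints the commands unless you set `DRY_RUN=0`.

```shell
# Placeholder bucket name: replace it with your own.
BUCKET="my-terraform-state"

# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Expire old (noncurrent) object versions 30 days after they are superseded.
cat > lifecycle.json <<'EOF'
{
  "Rules": [
    {
      "ID": "expire-old-state-versions",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "NoncurrentVersionExpiration": { "NoncurrentDays": 30 }
    }
  ]
}
EOF

# Keep every version of every object in the bucket.
run aws s3api put-bucket-versioning --bucket "$BUCKET" \
  --versioning-configuration Status=Enabled

# Apply the cleanup rule so that old versions do not pile up forever.
run aws s3api put-bucket-lifecycle-configuration --bucket "$BUCKET" \
  --lifecycle-configuration file://lifecycle.json
```

The empty prefix makes the rule apply to every object in the bucket; the latest version of each state file is never touched by this rule.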

We are all set up to use this bucket as remote state storage! Head back to your terminal, remove the existing state files, and run this command to enable the remote storage:

    $> terraform remote config -backend=s3 -backend-config="bucket=packt-terraform" -backend-config="key=mighty_trousers/terraform.tfstate" -backend-config="region=eu-central-1"
    Remote state management enabled
    Remote state configured and pulled.
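Keep in mind that the `terraform remote config` command exists only in Terraform releases before 0.9. In later releases, the backend is declared in the configuration itself and activated with `terraform init`. A minimal sketch of the equivalent setup, assuming the same bucket, key, and region as above, could look like this. The file name `backend.tf` is an arbitrary choice, and the `run` helper only prints the command unless you set `DRY_RUN=0`.

```shell
# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Declare the S3 backend with the same settings as the -backend-config flags.
cat > backend.tf <<'EOF'
terraform {
  backend "s3" {
    bucket = "packt-terraform"
    key    = "mighty_trousers/terraform.tfstate"
    region = "eu-central-1"
  }
}
EOF

# Initialize the working directory so that Terraform picks up the backend.
run terraform init
```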

Note the key option: it means we can store multiple state files in the same bucket. Now go ahead and run the terraform apply command. After it is finished, your state file will be uploaded to the S3 bucket. Run the terraform destroy command right after that, just so a new version is created on S3, and head to the AWS console to verify that both versions are indeed stored (click on Show at the top to show the versions).
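The same check can be made from the terminal. The sketch below lists the stored versions of the state object with the AWS CLI; the bucket name is a placeholder, and the `run` helper only prints the command unless you set `DRY_RUN=0`.

```shell
# Placeholder bucket name; the key matches the one used in this section.
BUCKET="my-terraform-state"
KEY="mighty_trousers/terraform.tfstate"

# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# List every stored version of the state file, with its ID and timestamp.
run aws s3api list-object-versions --bucket "$BUCKET" --prefix "$KEY" \
  --query 'Versions[].[VersionId,IsLatest,LastModified]' --output table
```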

We now have remote, versioned, secure storage for the state file, decoupled from the git repository that holds the actual Terraform templates!

Using S3 gives you a few extra benefits. For example, you could use the Events feature, which lets you trigger notifications on each change to a particular object or group of objects. Want to send notifications about state file updates to a Slack channel? Easy.
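As a rough sketch of the Events idea, the following configuration asks S3 to publish a message to an SNS topic whenever an object under the `mighty_trousers/` prefix is written. The bucket name, the topic ARN (including the account ID), and the file name are all hypothetical. The sketch also assumes that the topic already exists and allows S3 to publish to it; forwarding the message to Slack would need a subscriber of your own, which is not shown. The `run` helper only prints the command unless you set `DRY_RUN=0`.

```shell
# Placeholder bucket name: replace it with your own.
BUCKET="my-terraform-state"

# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Notify a (hypothetical) SNS topic on every write under the state prefix.
cat > notification.json <<'EOF'
{
  "TopicConfigurations": [
    {
      "TopicArn": "arn:aws:sns:eu-central-1:123456789012:terraform-state-changes",
      "Events": ["s3:ObjectCreated:*"],
      "Filter": {
        "Key": {
          "FilterRules": [
            { "Name": "prefix", "Value": "mighty_trousers/" }
          ]
        }
      }
    }
  ]
}
EOF

# Attach the notification configuration to the bucket.
run aws s3api put-bucket-notification-configuration --bucket "$BUCKET" \
  --notification-configuration file://notification.json
```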

S3 remote storage also lets you keep the state file encrypted, which you can enable with the encrypt option. Try it out yourself as an exercise.