1

Future of Medical Imaging

Mark Nadeski and Gene Frantz

CONTENTS

1.3 Making Health Care More Personal

1.3.1 Advances in Digital and Medical Imaging

1.4 How Telecommunications Complements Medical Imaging

1.7 What We Can Expect from Technology

1.7.3 Multiprocessor Complexity

1.7.3.1 Multiprocessing Elements

1.7.4.1 Lower Power into the Future

1.1 Introduction

There are those who fear technology is nearly at the physical limitations of our understanding of nature, so where can we possibly go from here? But technology is not where our limits lie. Integrated circuits (ICs) have always exceeded our ability to fully utilize the capacity they make available to us, and the future will be no exception, for technology does not drive innovation. In truth, it is innovation and human imagination which drive technology.

1.2 Where Are We Going?

The broad field of medical imaging has seen some truly spectacular advances in the past half-century, many of which most of us take for granted. Once marvels seen only in the laboratory, advances such as real-time and Doppler ultrasonography, functional nuclear medicine, computed tomography, magnetic resonance imaging, and interventional angiography have all become available in clinical settings.

It is easy to sit back in wonder at how far the field of medical imaging has come. However, in this chapter, we glimpse into the future. Some of this future is quickly taking shape today, though some of it will not arrive for years, if not decades.

Specifically, we look at how advances in medical imaging are based on existing technology and how these technologies will provide more capacity and capabilities than we can conceivably exploit. This leads to the conclusion that the future of medicine is limited not by what we know, but by what we can imagine.

Let us begin by looking at the edge of what is real, that wonderful place where ideas are transformed into reality.

1.2.1 EyeCam

For centuries, humanity has dreamed of being able to make the blind see. And, for as long, restoring a person's eyesight has been considered a feat commonly categorized as “a miracle.”

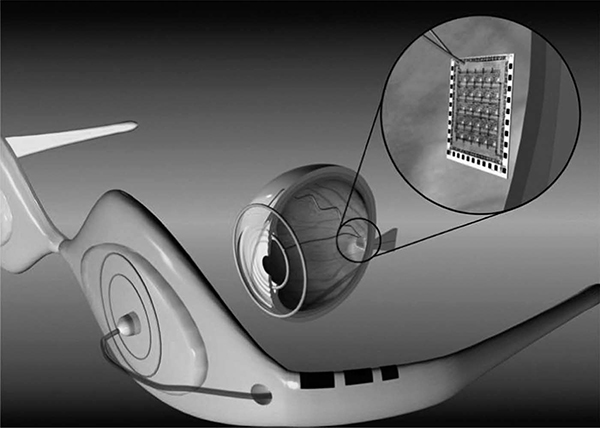

About 10 years ago, Texas Instruments (TI) began collaborating with a medical team at Johns Hopkins, well known for its ability to make miracles happen. The team's goal was to develop a way to take the signal from a camera and turn it into electrical impulses that could then be used to excite the retina, as shown in Figure 1.1. If successful, they could restore some level of vision to individuals who had lost their eyesight due to retinitis pigmentosa, a disease that affects more than 100,000 people in the United States alone.*

Now at the University of Southern California, this team continues to make significant progress. The project has evolved considerably over the years. Its initial conception consisted of mounting a camera on a pair of glasses that would require patients to rotate their heads in order to look around. Today, the team is working to actually implant a camera module within the eye since it is much more natural to let the eye do the moving to point the camera in the right direction. However, it is one thing to say, implanting a camera in a person's eye is more practical than mounting it on glasses and quite another to achieve it. A number of challenges come to mind:

* Source: http://www.wrongdiagnosis.com/r/retinitis_pigmentosa/stats-country.htm

FIGURE 1.1

(See color insert.)

Example of the eyecam created and tested at the University of Southern California.

Size: The complete camera module has to be significantly smaller than an eyeball in order to fit.

Power: The camera must have exceptionally low power consumption. At the very least, the energy needs to be scavenged from body heat, the surrounding environment, or a yet-to-be-invented wireless power circuit.

Heat: Initial cameras may rely upon a connected power source. Even so, it is critical that the camera should not produce much heat. To be practical, the camera must dissipate so little power that it does not heat the eye to the point of discomfort.

Durability: The camera must be packaged in such a way as to be protected from the fluids in the eye.

Currently working with Georgia Tech and experts at TI, the team at USC is busy making all this happen. Is such an ambitious project even possible? Although success has yet to be seen, the team envisions a successful completion of the project. And they have good reason to be confident, for they are only just pressing at the edges of possibility.

Much of what lies ahead of us in the world of medicine is the identification of technologies and devices from other parts of our world that we can apply to medical electronics. For example, Prof. Armand R. Tanguay, Jr., Principal Investigator on the “eyecam” project, acknowledges that they have many ideas about where else in the body they could implant a camera.*

Here a camera, there a camera,

In the eye a little camera.

Old Doc Donald had a patient.

E, I, E, I, O.

Certainly, there is more than one verse to this song. The question we might ask ourselves is what do we imagine we need next?

1.3 Making Health Care More Personal

A device that can help the blind to see is a life-changing application of medical technology. Not all medical devices will have such a dramatic effect on the way we live. Most of the changes in medical care will have a much lower profile, for they will be incorporated into our daily lives. However, while their application may be more subtle, the end result will certainly still be quite profound.

The future of medicine is based upon a firm foundation of existing technologies. What is new, in many cases, is not the technology itself, but rather how the technology is applied in new ways. Consider these key technologies:

Digital imaging

Telecommunications

Automated monitoring

Each of these technologies is already firmly established in a number of disparate industries. Specifically applying them to medicine will still require creativity and hard work, but doing so will enable entirely new applications. Perhaps most importantly, for health care providers and their patients, the resulting advances will help shift health care into becoming a more routine part of daily life, creating a future where medical devices help us

Manage our chronic conditions

Predict our catastrophic diseases

Enable us to live out our final months/years in the comfort of our homes

* Unfortunately, we cannot print any of these exciting ideas without a nondisclosure agreement in place.

1.3.1 Advances in Digital and Medical Imaging

Improving health care is the ultimate goal behind advances in medicine. As medical imaging advances, it will allow patients to have more personalized and targeted health care. Imaging, diagnosis, and treatment plans will continue to become more specialized and customized to a patient's particular needs and anatomy. We may even see therapies that are tailored to a person's specific genetics. Look at how far we have come already:

The migration to digital files: Photographic plates were once used to “catch” x-ray images. These plates gave way to film, which in turn are now giving way to digital radiography. Through the use of advanced digital signal processing, x-ray signals now can be converted to digital images at the point of acquisition while imposing no loss in image clarity. Digital files have a variety of benefits, including eliminating the time and cost of processing film, as well as being a more reliable storage medium, which can be transferred near-instantaneously across the world.

Real-time processing: The ability to render digital images in real time expands our ability to monitor the body. Using digital x-ray machines during surgical procedures, doctors can view a precise image at the exact time of surgery. Real-time processing also increases what can be done noninvasively. For example, the Israeli company CNOGA* uses video cameras to noninvasively measure vital signs such as blood pressure, pulse rate, blood oxygen level, and carbon dioxide level simply by focusing on the person's skin. Future applications of this technology may lead to identifying biomarkers for diseases such as cancer and chronic obstructive pulmonary disease (COPD).

Evolution from slow and fuzzy to fast and highly detailed: Today's magnetic resonance imagers (MRIs) can provide higher quality images in a fraction of the time it took state-of-the-art machines just a few years ago. These digital MRIs are also highly flexible, with the ability to image, for example, the spine while it is in a natural, weight-bearing, standing position. With diffusion MRIs, researchers can use a procedure known as tractography to create brain maps that aid in studying the relationships between disparate brain regions. Functional MRIs, for their part, can rapidly scan the brain to measure signal changes due to changing neural activity. These highly detailed images provide researchers with deeper insights into how the brain works—insights that will be used to improve treatment and guide future imaging equipment.

Moving from diagnostic to therapeutic: High-intensity focused ultrasound (HIFU) is part of a trend in health care toward reducing the impact of procedures in terms of incision size, recovery time, hospital stays, and infection risk. But unlike many other parts of this trend, such as robot-assisted surgery, HIFU goes a step further to enable procedures currently done invasively to be done noninvasively. Transrectal ultrasound,* for example, destroys prostate cancer cells without damaging healthy, surrounding tissue. HIFU can also be used to cauterize bleeding, making HIFU immensely valuable at disaster sites, accident scenes, and on the battlefield. Focused ultrasound even has a potential role in a wide variety of cosmetic procedures, from melting fat to promoting formation of secondary collagen to eradicate pimples.

Portability of ultrasound: Ultrasound equipment continues to become more compact. Cart-based systems increasingly are complemented and/or replaced by portable and even handheld ultrasound machines. Such portability illustrates how, for a wide variety of health care applications, medical technology can bring care to patients instead of forcing them to travel. Portable and handheld ultrasound systems have also been instrumental in bringing health care to rural and remote areas, disaster sites, patient rooms in hospitals, assisted-living facilities, and even ambulances.

Wireless connectivity: Portability can be further extended by cutting cables. Putting a transducer, integrated beamformer, and wideband wireless link into an ultrasound probe will not only enable great cost savings by removing expensive cabling from the device but will also allow greater flexibility and portability. Further reducing cost and increasing portability enables more widespread use of digital imaging technology, enabling treatment in new areas and applications. A cable-free design also complements 3D probes, which have significantly more transducer elements and thus require more cabling, something that may become prohibitively expensive using today's technology.

Fusion of multiple imaging modalities: The fusion of multiple imaging modalities—MRI, ultrasound, digital x-ray, positron emission tomography (PET), and computerized tomography (CT)—into a single device provides physicians with more real-time information to guide treatment while reducing the time that doctors must spend with patients. For example, PET and CT are increasingly being combined into a single device. While the PET scan identifies growing cancer cells, the CT scan provides a picture of the location, size, and shape of cancerous growths.

Many of the real-time imaging modalities have benefited greatly from advances in digital signal processors (DSPs), devices that specialize in efficient, real-time processing. Specifically, the ability to exponentially increase the processing capabilities in imaging machines has enabled these advances to be useful in a hospital setting. However, to drive many of these applications into more widespread usage, another order-of-magnitude increase in processing capability will be necessary. This is a tall challenge.

Fortunately, silicon technology companies are now turning their attention to the world of medical electronics to meet these challenges. For instance, TI formed its Medical Business Unit in 2007 to address the needs of the medical industry. This type of partnership between technology companies and the medical industry will help ensure that the exciting possibilities of the future that we envision will be realized.

* www.prostate-cancer.org/education/novelthr/Chinn_TransrectalHIFU.html

1.4 How Telecommunications Complements Medical Imaging

Advances in medical imaging are frequently complemented by advances in communications networks. Together, they have significantly improved patient care, while also reducing costs for health care providers and insurance companies. The important question is rapidly shifting from where we receive treatment to how we receive it.

Telemedicine is the concept where a patient's medical data are transported digitally over the network to a medical professional. For example, 24/7 radiology has begun to emerge as a commonly available service. Instead of maintaining a full radiological staff overnight, a hospital emergency room can now send an x-ray via a broadband internet link to Night Hawk Radiology Services* in Sydney, Australia, or Zurich, Switzerland. Night Hawk's staff then reads the x-ray and returns a diagnosis to the ER doctors.

For some patients, the combination of imaging and communications enables diagnosis that they otherwise would not receive for reasons such as finances or distance. A prime example is the work of Dr. Devi Prasad Shetty, a cardiologist who delivers health care via broadband satellite to residents of India's remote, rural villages who otherwise would not receive it simply because of where they live.† Today, one of Dr. Shetty's clinics can handle more than 3000 x-rays every 24 h. Shetty's telemedicine program has had a major impact in India, where an average of four people have a heart attack every minute.‡

In contrast to telemedicine, telepresence§ is where a medical professional virtually visits a patient through videoconferencing. Telepresence is increasingly used in both developed and developing countries to widen the distribution of health care. Videoconferencing is often paired with medical imaging systems, such as ultrasound, to enable both telepresence and telemedicine. Such applications frequently enjoy government subsidies because they bring health care to areas where it is expensive, scarce, or both.

One example of telemedicine is the Missouri Telehealth Network,¶ whose services include teledermatology. Using this service, a patient at a rural health clinic can put his or her scalp under a video camera for viewing and diagnosis by a dermatologist hundreds of miles away. Videoconferencing equipment simulates a face-to-face meeting, allowing the doctor to discuss any conditions with the patient. In the case of someone with stage 1 melanoma, early detection via telemedicine may save his or her life.

‡ www.abc.net.au/foreign/stories/s785987.htm

§ www.telepresenceworld.com/ind-medical.php

¶ www.proavmagazine.com/industry-news.asp?sectionID=0&articleID=596571

Whether patients delay a doctor's visit because of distance, cost, available resources, being too busy, or even fear, telemedicine can mean the difference between suffering with a disease or receiving treatment. Virtual house calls, where physicians use videoconferencing and home-based diagnostic equipment to bring health care to a person without necessitating a visit to the doctor, can address most of these concerns.

Virtual house calls may be particularly attractive to patients in rural areas* or those in major cities with chronic traffic jams. Virtual house calls are also a way to bring health care to patients who otherwise would not be able to see a physician, perhaps because they are bedridden, suffer from iatrophobia (fear of doctors), or have limited means of transportation. Whatever the hurdle they are helping overcome, virtual house calls are yet another example of how advances in medical technology increasingly are bringing care to the patient instead of the other way around.

For example, let us take a look at how medical technology might have helped the nineteenth-century poet Emily Dickinson, who was reclusive to the point that she would only allow a doctor to examine her from a distance of several feet as she walked past an open door. If she were alive today, she would greatly benefit from advances in medical imaging that could accommodate her standoffishness while still diagnosing the Bright's disease that ended her life at age 55.

Future medical technology will reach even further into our lives. Imagine a bathroom mirror equipped with a retinal scanner behind the glass that looks for retinopathy and collects vital signs. In the case of Dickinson, that mirror could have noticed a gradual increase in the puffiness of her face, a symptom of Bright's disease, and alerted her physician through an integrated wireless internet connection.

DSPs, one of the underlying technologies behind medical imaging, also play a key role in telemedicine. For example, DSPs provide the processing power and flexibility necessary to support the variety of codecs used in videoconferencing and telepresence systems. Some of these codecs compress video to the point that a TV-quality image can be transported across low-bandwidth wired or wireless networks, an ability that can extend telemedicine to remote places where the telecom infrastructure has limited bandwidth. In the future, compression will also help extend telemedicine directly to patients’ homes over cable and DSL connections.

* www.columbiamissourian.com/stories/2007/05/12/improving-care-rural-diabetics

DSPs also provide the processing power necessary to support the lossless codecs required for medical imaging, where lossy compression could degrade image quality and affect a diagnosis. Another advantage DSPs offer is their programmability, which allows them to be upgraded in the field to support new codecs as they become available, thereby providing a degree of future-proofing for hospitals and physicians.
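The difference between lossless and lossy coding can be illustrated with a minimal sketch. It assumes a synthetic 8-bit test image and uses the general-purpose `zlib` compressor from the Python standard library as a stand-in for a lossless codec; the bit-dropping "lossy" step is likewise only illustrative. Neither is a codec named in this chapter or one used in actual imaging equipment.

```python
import zlib


def lossless_round_trip(pixels: bytes) -> bytes:
    """Compress and decompress with zlib (a stand-in for a lossless codec)."""
    compressed = zlib.compress(pixels, 9)
    return zlib.decompress(compressed)


def crude_lossy(pixels: bytes) -> bytes:
    """Illustrative lossy step: discard the low 4 bits of every pixel."""
    return bytes(p & 0xF0 for p in pixels)


# Hypothetical 64 x 64 8-bit test image (a simple diagonal gradient).
image = bytes((x + y) % 256 for y in range(64) for x in range(64))

restored = lossless_round_trip(image)
degraded = crude_lossy(image)

print("lossless round trip is bit-exact:", restored == image)
print("lossy version is bit-exact:", degraded == image)
```

The lossless round trip returns every pixel unchanged, which is the property a diagnostic image needs; the lossy version saves bits by discarding detail that cannot be recovered.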

1.5 Automated Monitoring

Consider this short list of medical devices that can operate noninvasively within our homes:

Bathroom fixtures with embedded devices could monitor for potential problems, such as a toilet that automatically analyzes urine to identify kidney infections or the progression of chronic conditions such as diabetes and hypertension.

A bathroom scale could track sudden changes in weight or body fat and then automatically upload this data to a patient's physician. The scale could even trigger scheduling of an appointment based on a physician's predetermined criteria.

Diagnostic devices such as retinal scanners could be coupled with a patient's existing consumer electronics products, such as a digital camera, to provide additional diagnostic and treatment options. If the device can connect to the network, the medical data collected could automatically be made available to medical personnel.

Sensors in the home could measure how a person is walking to determine if he or she is at risk for a medical episode such as a seizure.

Equipment could be connected to a caregiver's network for remote monitoring. One example of such a product under development is a gyroscope-based device worn by elderly patients to detect whether they have fallen.* Near-falls could trigger alerts to caregivers while being documented and reported to a patient's physician. This device could also track extended sedentary periods, which could be a sign of a developing physical or psychological problem.

The term “personal area network” (PAN) may come to refer to the variety of devices that work together to regularly and noninvasively monitor and record a person's vital signs. Collected data could be automatically correlated to identify more complex medical conditions.

* http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?tp=&arnumber=1019448&isnumber=21925

All of these examples will change how we approach practical medicine. Passive care of this nature becomes a “round-the-clock” service rather than something that occurs infrequently and disrupts our busy schedules. Constant monitoring also enables earlier identification of health problems before conditions can become irreversible, as well as eliminates the problem of patients not being conscientious about recording information about themselves. As a result, a patient may receive care that is more thorough than if he or she found time for an office visit every week or month.

This technology could be a viable way to improve care for patients who are too busy to schedule routine doctor's visits or who, as is the case with iatrophobics, put off regular checkups out of fear of the doctor. For the rest of us, who are either too busy, too unconcerned, or too lazy to schedule regular checkups, this technology can help ensure that changes in our health do not go too long without needed care. And, as health care becomes a continuous service through automated processes, the cost of delivering care will be substantially reduced as well.

1.6 Future of Technology

This is quite an impressive list of what is just around the corner. And while many of these applications may sound like inventions from a future that we can only hope for and dream about, it is likely that the reality will be even more exciting. To understand why this is the case, let us now shift our attention to the underlying factors that enable and drive innovation.

In the decades since the invention of the transistor, the IC, the microprocessor, and the DSP, technology has significantly impacted every part of our lives. And during this time, we have seen computers shrink from filling large air-conditioned rooms until they fit into our pockets. Now we are seeing the next stage of this progression as computers move from being dedicated devices in our pockets to becoming small subsystems that are integrated into other systems or even embedded in our clothing or our bodies.

Because it is technology that has brought us where we are, it is tempting to believe that technology is the driving factor behind medical imaging and innovation. We do not believe this is the case. For example, the reason that computers became smaller was not because technology allowed them to become smaller. The reason computers became smaller was because people found compelling reasons to make them that way. It is the need or desire for portable computing, not technology, that put computers like the BlackBerry and iPhone into our pockets. This point is critical:

Technology does not drive innovation. Innovation drives technology.

1.6.1 Remembering Our Focus

It will be the wants and needs of the marketplace that determine the next technology that will go in our pockets as well as the things we can expect to be embedded in our clothing and bodies. In terms of advances in medical technology, it will be the needs of the patient that dictate what comes next.

Technology is exciting, and it is easy to forget what all of these amazing advances are really all about. For whether it is a retinal scanner in a bathroom mirror or a home ultrasound machine, it is the patient who is the greatest beneficiary. These advances enable health care to become more personal by bringing patients to doctors in their offices and doctors to patients in their homes. They also increase the effectiveness of health care by providing ways to identify diseases and other conditions before they become untreatable.

At the same time, these advances also allow health care to fade into the background and become a part of daily life. Imagine being scanned each morning while brushing your teeth instead of only during an annual checkup. That would be particularly valuable for patients with chronic or end-stage diseases, because it may allow them to live their lives without having to move into a hospice.

Health care revolves around the patient, as it should. And technology in turn will help us to address their key needs, as stated earlier in this chapter, to manage their chronic conditions, predict their catastrophic diseases, and allow them to live out the last days of their lives in the comfort of their own homes.

1.7 What We Can Expect from Technology

Knowing that need drives innovation allows us to approach technology from a different perspective, one where it is more relevant to discuss what these advances will be rather than to discuss what will make them possible. For example, we could speculate on the new process technologies that will overcome current IC manufacturing limitations. However, this will tell us little about what will be built with these future ICs. Rather, it is by exploring what we can reasonably expect from technology that we can gain insight into the possible future.

Let us begin with a well-known tenet from the world of ICs, Moore's law. Moore's law [1] forecasts that the number of transistors that can be integrated on one device will double every 2–3 years. Made in 1965 by Intel co-founder Gordon E. Moore, this prediction has not only been adopted by the industry as a “law” but, as shown in Figure 1.2, has also accurately described the progress of ICs for the past 40 years.

FIGURE 1.2

The trend for process technology over the past 40 years.

In practical terms, Moore's law has allowed us to reduce the price of ICs. Advances in performance and power dissipation have also affected cost, but over the past decade it has been advances in IC technology that have been primarily responsible for driving cost down.

As we look into the future, we can expect Moore's law to continue to hold true; that is, we should be able to integrate about twice the number of transistors on a piece of silicon every 2–3 years for about the same cost. What does this mean in terms of medical imaging and innovation? By the year 2020, the price of an IC could be as low as $1 for a billion transistors (a buck a billion). At that time, a high-end processor might cost $50.

Just imagine what could be done with 50 billion transistors. Of course, this many transistors do not come without their issues:

The cost of developing a new IC may become prohibitively expensive.

Raw performance is no longer being driven by Moore's law.

Power dissipation must be actively managed.

Digital transistors are not particularly friendly to the analog world.
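The arithmetic behind the "buck a billion" projection above can be sketched in a few lines. The function names and the doubling-period parameter are illustrative assumptions; only the $1-per-billion price point and the $50 processor come from the text.

```python
def transistors_per_dollar(start_per_dollar: float,
                           years: float,
                           doubling_period_years: float) -> float:
    """Transistors one dollar buys after `years`, assuming the count
    doubles every `doubling_period_years` at constant cost."""
    return start_per_dollar * 2 ** (years / doubling_period_years)


def transistor_budget(price_dollars: float, per_dollar: float) -> float:
    """Number of transistors in a device sold at `price_dollars`."""
    return price_dollars * per_dollar


# Figures from the text: $1 buys a billion transistors; a high-end part costs $50.
budget = transistor_budget(50, 1e9)
print(f"A $50 processor at a buck a billion: {budget:.0f} transistors")

# Hypothetical look-ahead: three doublings later at a 2-year period.
later = transistors_per_dollar(1e9, years=6, doubling_period_years=2)
print(f"Six years on, $1 might buy {later:.0f} transistors")
```

With a 3-year doubling period instead of 2, the same six years would yield only two doublings, which is why the 2–3 year range matters when projecting far ahead.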

1.7.1 Development Cost

Back in the mid-1990s, TI introduced a new technology node for ICs. With this particular new technology node, we were able to integrate 100 million transistors on an IC. To put this in perspective, most personal computers in the 1990s had fewer than 10 million transistors, not counting memory. (As a short aside, each new process node generally has feature sizes about 0.7 times those of the previous node, which yields twice the number of transistors for the same die area.)
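The scaling rule in the aside follows from simple geometry. As a worked step, assuming that the area of a transistor scales with the square of the linear shrink factor $s$ (a simplification that ignores wiring and layout overhead), the ratio of transistor counts on the same die area is:

```latex
\[
\frac{N_{\text{new}}}{N_{\text{old}}}
  = \frac{1}{s^{2}}
  = \frac{1}{0.7^{2}}
  = \frac{1}{0.49}
  \approx 2.04
\]
```

A linear shrink to 0.7 therefore roughly halves the area per transistor, which is where the doubling comes from.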

Being able to build an IC with this density did not answer the question of what would be done with it. The more difficult question, however, was what it would take to design a product with 100 million transistors. Consider the math. If we estimate that a design effort could be efficient enough that the average time to design each transistor was about 1 h, such a project would take 50,000 staff years to complete (100 million hours at roughly 2000 working hours per staff year). Clearly, starting a design of 100 million transistors from scratch would be virtually impossible. TI and other IC manufacturers solved and continue to solve this problem through intellectual property (IP) reuse. We will come back to this concept shortly.
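The staff-year estimate can be reproduced with a short calculation. The figure of 2000 working hours per staff year is an assumption (about 50 weeks of 40 h); it is not stated in the text, but it is the value that reproduces the 50,000 staff-year result. The function name is illustrative.

```python
HOURS_PER_STAFF_YEAR = 2_000  # assumption: ~50 weeks x 40 h, not given in the text


def design_staff_years(transistors: int,
                       hours_per_transistor: float = 1.0,
                       hours_per_staff_year: int = HOURS_PER_STAFF_YEAR) -> float:
    """Staff years needed if every transistor is designed from scratch."""
    return transistors * hours_per_transistor / hours_per_staff_year


effort = design_staff_years(100_000_000)
print(f"{effort:,.0f} staff years")
```

Even a tenfold improvement in design efficiency (0.1 h per transistor) would still leave 5,000 staff years under these assumptions, which is why reuse rather than faster design is the practical answer.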

On top of the excessive cost of an IC design, the cost of manufacturing tooling is on the order of 1 million dollars. (We will overlook the billions of dollars spent on the IC wafer fab itself for the moment.) For high-volume applications, this cost spreads out to a manageable number. For example, a design with a total build of 1 million units would reduce the per-unit tooling cost to $1. For low-volume applications, however, the cost can become prohibitive: an IC design for a product anticipated to sell 10,000 units during its lifetime has a per-unit tooling cost of $100.
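The amortization above is a single division, sketched here with the two volumes from the text. The function name is illustrative, and the $1 million tooling figure is the order-of-magnitude value quoted above rather than an exact cost.

```python
def per_unit_tooling_cost(tooling_cost_dollars: float, lifetime_units: int) -> float:
    """Tooling cost carried by each unit over the product's lifetime."""
    if lifetime_units <= 0:
        raise ValueError("lifetime_units must be positive")
    return tooling_cost_dollars / lifetime_units


TOOLING_COST = 1_000_000  # order-of-magnitude figure quoted in the text

for units in (1_000_000, 10_000):
    cost = per_unit_tooling_cost(TOOLING_COST, units)
    print(f"{units:>9,} units -> ${cost:,.2f} tooling per unit")
```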

The obvious conclusion is that only those IC designs with extremely high volumes can be justified. Most applications, however, and not just those in the medical industry, have significantly smaller scope. Thus, these applications will not be based on ICs specifically designed for them but rather on standard programmable processors with application-specific software. And as tooling costs increase, this will be true for virtually all products developed in the future.

Because standard programmable processors allow IP to be implemented in software, the tooling cost of a programmable device can be shared among many different products, even across industries (i.e., medical imaging, high-end consumer cameras, industrial imaging, and so on). Software customizes the processor, so to speak; and the more applications a processor can serve, the lower its cost.

The trade-off is that implementing IP in software is, from an engineering perspective, a fairly “sloppy” way of designing a product, meaning that the final design will require far more transistors than if an application-specific IC were used. Given the advanced state of IC process technology, however, this does not matter. Back in the 1990s, we had more transistors than we knew what to do with. Today, we can build processors with more capacity than we can use. And in the year 2020, we will still have more transistors than our imaginations can exploit.

1.7.2 Performance

For years, the performance of ICs has seemed to be driven by Moore's law, just as cost was. However, if we measure raw performance—that is, the number of cycles a processor could execute—it actually drifted from Moore's law in the early 1990s. Despite this, processors have still doubled in effective performance in accordance with Moore's law through sophisticated changes to processor architectures such as deeper pipelines and multiple levels of cache memory. These changes came at their own cost—lots of transistors. Fortunately, as stated previously, we have plenty of those.

Improving performance through sophisticated changes to a processor's architecture is, in some ways, just a fancy way of saying that an architecture has been made more efficient. Deeper pipelines, for example, eliminate the inefficiencies of processing a single instruction by simultaneously processing multiple instructions. Eliminating inefficiencies only goes so far, though. Caches improved memory performance significantly, and caches for caches squeezed out a bit more performance, but having caches for caches actually begins to slow things down.

There are still many opportunities for increasing processor performance through architectural sophistication, but to achieve a major increase in performance, the industry is moving toward multiprocessing. Also referred to as multicore, the central idea is that for many applications, two processors can do the job (almost) twice as fast as one. Multiprocessing, while yielding a whole new level of performance, also introduces a whole new level of complexity to processor architectures. And as we continue to add complexity, we then must create more complex development environments to hide the complexity of the architecture from developers.

1.7.3 Multiprocessor Complexity

To understand the effect of the complexity that multiprocessing imposes on design, we need to take a look at Amdahl's law [2]. Simply stated, Amdahl's law says that the speedup gained by adding processors is limited by the portion of a task that must still run serially; once the overhead of coordinating the processors is counted, using more of them can actually slow things down. Consider a task such as driving yourself from point A to B. There is really no way to use multiple cars to get yourself there any faster. In fact, using multiple cars along the way will likely slow you down as you stop, switch cars, and then get up to speed again, not to mention the traffic jam you would have created.

The same problem applies to an algorithm that cannot be parallelized, that is, easily distributed across multiple processors. Splitting such an algorithm across equal-performance processors will slow down overall execution because of the added overhead of breaking up the task across the multiple cores. Engineers describe these types of tasks as being “Amdahl unfriendly.”
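The effect described above can be made concrete with a short calculation. The following is a minimal sketch, not a definitive model: the function name `amdahl_speedup` and the linear per-processor `overhead` term are assumptions introduced here for illustration. With `overhead=0.0` the function reduces to the usual textbook form of Amdahl's law.

```python
def amdahl_speedup(parallel_fraction, processors, overhead=0.0):
    """Estimated speedup of a task when it is spread across several processors.

    parallel_fraction: share of the single-processor run time that can be
        parallelized (0.0 to 1.0).
    processors: number of equal-performance processors (1 or more).
    overhead: hypothetical cost of splitting and synchronizing, expressed as
        a fraction of the original run time per additional processor.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")

    serial_time = 1.0 - parallel_fraction            # cannot be sped up
    parallel_time = parallel_fraction / processors   # shared across processors
    coordination_time = overhead * (processors - 1)  # assumed linear overhead
    return 1.0 / (serial_time + parallel_time + coordination_time)
```

For a task that is 95% parallelizable, `amdahl_speedup(0.95, 2)` is a little over 1.9, the "(almost) twice as fast" case. For a task that cannot be parallelized at all, `amdahl_speedup(0.0, 4, overhead=0.05)` falls below 1.0, meaning the four-processor version is slower than the original.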

Amdahl-friendly tasks are those that can be easily broken into multiple smaller tasks that can be solved in parallel. These are the types of tasks for which DSPs are well suited. Consider how a video signal can be broken into small pieces. Since each piece is relatively independent of the others, the pieces can be processed in parallel.

The size of each piece depends upon the processors being used. For example, TI developed the Serial Video Processor (SVP) [3] for the TV market. The SVP contained 1000 1-bit DSPs, each simultaneously processing one pixel in a horizontal line of video. Because Amdahl-friendly tasks like these can be parallelized, it is easier to determine how to architect the multiprocessing system as well as how to create the development environment with which to design applications that exploit it.
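The decomposition of a video line into independent pieces might be sketched as follows. This is an illustration only and does not model the SVP itself: the `brighten` operation, the helper names, and the choice of four workers are all assumptions. Python threads are used purely to show how the work divides and recombines, not to demonstrate a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixels, gain=2, max_value=255):
    """Per-pixel operation: each output depends only on its own input pixel."""
    return [min(p * gain, max_value) for p in pixels]


def split(line, pieces):
    """Cut one horizontal line of video into contiguous, roughly equal pieces."""
    size = max(1, -(-len(line) // pieces))  # ceiling division, never zero
    return [line[i:i + size] for i in range(0, len(line), size)]


def process_line_parallel(line, workers=4):
    """Process the pieces independently, then stitch them back together in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input pieces.
        results = list(pool.map(brighten, split(line, workers)))
    return [pixel for piece in results for pixel in piece]
```

Because no piece needs data from any other piece, the parallel result is identical to processing the whole line on a single processor, which is what makes the task Amdahl friendly.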

FIGURE 1.3

Higher performance through parallelism: more DSPs + flexible coprocessors.

Amdahl-unfriendly tasks are far harder to solve because they prove difficult to divide into parts. Much of the multiprocessing research now under way in universities is focused on how to approach such seemingly difficult problems (Figure 1.3).

1.7.3.1 Multiprocessing Elements

Even given the limitations of Amdahl's law, the best approach for increasing performance appears to be through multiprocessing. Before we can begin to consider how best to take advantage of multiple processors in the same system, however, we first need to ask, “What is a processor?” There are, after all, many different types of processing elements:

General-purpose processors: Examples include ARM cores, MIPS cores, and Pentium-class processors.

Application-specific processors: Examples include DSPs and graphics processing units (GPUs).

Programmable accelerators: Examples include floating point units (FPUs) and video processors.

Configurable accelerators: These are similar to programmable accelerators in that they can perform a range of specific tasks such as filtering or transforms.

Fixed-function accelerators: These are also similar to programmable accelerators with the exception that they perform only a single task such as serving as an anti-aliasing filter for an audio signal.

Programmable hardware blocks: Examples include FPGAs, PLDs, etc.

In general, the term “multiprocessing” refers to heterogeneous (different elements) multiprocessing while “multicore” refers to homogeneous (same elements) multiprocessing. The importance of this distinction is greater when talking about DSP algorithms than for more general-purpose applications. In a DSP application, there is more opportunity to align the various processing elements to the tasks that need to be accomplished. For example, an accelerator designed for audio, video/imaging, or communications can be assigned appropriate tasks to achieve the greatest efficiency. In contrast, very large, generic algorithms may be best implemented using an array of identical processing elements.

Despite all of its challenges, multiprocessing appears to be one of the next major advances that will shape IC, electronics, and medical equipment design. Today, multiprocessing is still not well understood, and progress will likely be slow as advances percolate out of university research laboratories into the real world over the next decade or so. In the meantime, we have to get used to the hit-and-miss nature of multiprocessing architectures and do our best to use them as efficiently as we can.

1.7.4 Power Dissipation

Compared with price and performance, power dissipation is the new kid on the block, and it is where much research is starting to focus. TI's first introduction to this important aspect of value goes back to the mid-1950s when its engineers developed the Regency radio [4] to demonstrate the value of the transistor. This was the first commercial transistor radio, and its obvious need for battery operation made it important to demonstrate low power dissipation.

Power dissipation again became important with the arrival of the calculator in the 1970s. Although most uses for these early calculators allowed for them to be plugged into a wall, sockets were not always nearby or convenient to use. The subsequent movement to LCD calculators with solar cells made low power an even more important requirement.

Lower power dissipation became a primary design constraint in the early 1990s with the arrival of the digital cellular phone. Early customers in this new market made it clear to TI that if power dissipation was not taken seriously, they would find another vendor for their components.

With this warning, TI began its now 20-year drive to reduce power dissipation in its processors. One of the results of this reduction in power is the creation of processors that have helped revolutionize the world of ultrasound by turning the once bulky, cart-based ultrasound systems into portable and even handheld systems.

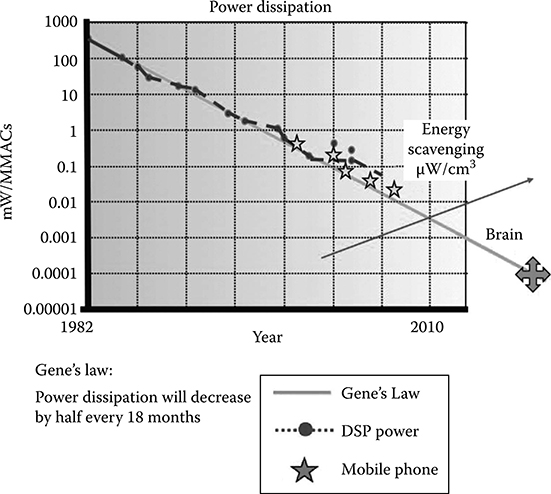

FIGURE 1.4

Gene's law. This graph shows the trend of power efficiency over time with efficiency measured as mW/MMAC. The MMAC is the base unit of DSP performance. The upward trending line on the right represents our ability to scavenge energy from the environment.

Figure 1.4 shows how power dissipation has improved over the history of DSP development. Expressed per unit of DSP performance, the MMAC (millions of multiplies and accumulates per second), power dissipation has been reduced by half every 18 months. As this chart was created by Gene Frantz, Principal Fellow at TI, this trend of power efficiency over time has come to be known as Gene's law.
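The halving trend can be written as a simple exponential. This is only a sketch of the relationship described above, assuming an exact 18-month halving period; the symbols E(t) (power per unit of performance, in mW/MMAC, after t years) and E_0 (its starting value) are introduced here for illustration.

```latex
E(t) = E_0 \cdot 2^{-t/1.5}, \qquad t \text{ in years}
% Example: t = 15 gives ten halvings, so E(15) = E_0 / 2^{10} = E_0 / 1024.
```

Under that assumption, 15 years of progress corresponds to roughly a thousandfold reduction in power for the same amount of processing.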

It should be noted that the downward trend flattened a few years ago. This occurred because of issues with IC technology in which leakage power reached parity with active power. As with any problem, once it was understood, it could be resolved. Now, we are back on the downward trend of power dissipation per unit of performance.

1.7.4.1 Lower Power into the Future

As we look to the future, the question is whether IC technology will continue to follow Gene's law. There are several reasons to believe it will. The two that seem to have the most promise are lower operating voltages and the availability of additional transistors.

Much of the downward trend for power dissipation has been, in fact, due to lowering the operating voltage of ICs. Over the past 20 years, device voltage has gone from 5 to 3 to 1 V. Nor is this the end of the line. Ongoing research predicts processors operating at 0.5 V and lower [5].

Just as more transistors can be used to increase the performance of a device, they can also be used to lower the power dissipation for a given function. The simplest method for lowering power consumption is using what is known as the father's solution: who does not remember that loud voice resonating through the house, “When you leave a room, turn off the light!”

This wisdom is easily applied to circuit design as well—simply turn off sections of the device, especially the main processor, when they do not need to be in operation. A good example of this type of management is implemented by the MSP430 family of products. And while it does require more transistors to turn sections on and off, fortunately, it is only a few, making this an extremely efficient approach to power management.
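The benefit of switching sections off can be estimated from the fraction of time a device spends active. The sketch below uses made-up numbers chosen only for illustration; they are not specifications of the MSP430 or any other device, and the function name is hypothetical.

```python
def average_power_mw(active_mw, sleep_mw, duty_cycle):
    """Average power of a device that is active for `duty_cycle` of the time.

    active_mw: power drawn while the section is switched on (mW).
    sleep_mw: residual power drawn while it is switched off (mW).
    duty_cycle: fraction of time spent active (0.0 to 1.0).
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


# Hypothetical example: 10 mW when active, 0.01 mW when asleep, awake 1% of the time.
always_on = average_power_mw(10.0, 0.01, 1.0)
mostly_off = average_power_mw(10.0, 0.01, 0.01)
```

With these assumed figures, the average drops from 10 mW to about 0.11 mW, which is why turning off the light is such an effective first step.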

Power can also be managed through multiprocessing, although this approach requires many more transistors. Consider that a task performed on a single processor running at 100 MHz can be performed as quickly on two of the same processors running at 50 MHz. Given the nature of power, one processor running at 100 MHz will consume the same power as two processors running at 50 MHz so long as they are operating at the same voltage.

Due to the characteristics of IC technology, however, the 50 MHz processors can operate at a lower voltage than the 100 MHz processor. Since the power dissipation of a circuit is reduced by the square of the voltage reduction, two 50 MHz processors running at a lower voltage will actually dissipate less power than their 100 MHz equivalent.

The trade-off for managing power through multiprocessing is that two slower processors require twice the number of transistors as a single, faster processor. And again, given that we can rely upon having more transistors as we move into the future, multiprocessing is a feasible method for reducing power dissipation.
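The comparison between one fast processor and two slower ones can be sketched with the standard first-order approximation for dynamic power, P = C × V² × f. The capacitance and voltage values below are assumptions chosen for illustration, not measurements of any real device, and the function name is hypothetical.

```python
def dynamic_power_w(capacitance_f, voltage_v, frequency_hz, processors=1):
    """First-order dynamic power: P = N * C * V^2 * f (leakage is ignored)."""
    return processors * capacitance_f * voltage_v ** 2 * frequency_hz


C = 1e-9  # assumed switched capacitance per processor, in farads

# One processor at 100 MHz versus two at 50 MHz, all at the same voltage.
single = dynamic_power_w(C, 1.2, 100e6)
dual_same_voltage = dynamic_power_w(C, 1.2, 50e6, processors=2)

# The slower processors are assumed to run reliably at a lower voltage.
dual_low_voltage = dynamic_power_w(C, 0.9, 50e6, processors=2)

saving = 1.0 - dual_low_voltage / single
```

At equal voltage, the two configurations dissipate the same power. Dropping the assumed supply from 1.2 V to 0.9 V scales power by (0.9/1.2)², so the two-processor version uses a little under 44% less power for the same throughput.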

1.7.4.2 Perpetual Devices

Confident that IC power dissipation will continue to go down, we can begin to think about how we might take advantage of that. One related area of research that is receiving a lot of attention is energy scavenging. Energy scavenging is based on the concept that there is plenty of environmental energy available to be converted into electrical energy [5]. Energy can be captured from light, vibrating walls, and variations in temperature, to name just a few sources of small amounts of electrical energy.

Combining energy scavenging with ultra-low-powered devices gives us the concept of “perpetual devices.” Back in Figure 1.4, there is an upsloping line that represents our ability to scavenge energy from the environment. At the point this red line crosses the power reduction curve, we will have the ability to create devices that can scavenge enough energy from the environment to operate without a traditional plug or battery power source.
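The crossing point of the two curves can be estimated if both trends are treated as exponentials. The sketch below is a rough illustration under stated assumptions: the power a device needs for a fixed workload halves every 18 months, and the power that can be scavenged doubles over some period. That doubling period, the starting values, and the function name are hypothetical and are not taken from Figure 1.4.

```python
import math


def years_to_crossover(device_mw, scavenged_mw,
                       halving_years=1.5, doubling_years=3.0):
    """Years until scavenged power meets the power a device requires.

    Solves device_mw * 2**(-t / halving_years)
           == scavenged_mw * 2**(t / doubling_years) for t.
    """
    if device_mw <= scavenged_mw:
        return 0.0  # the device could already run on scavenged energy
    combined_rate = 1.0 / halving_years + 1.0 / doubling_years
    return math.log2(device_mw / scavenged_mw) / combined_rate


# Hypothetical example: a device needing 8 mW today with 1 mW available to scavenge.
t = years_to_crossover(8.0, 1.0)
```

With these assumed inputs the curves meet after about three years, at which point both the requirement and the supply are about 2 mW.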

Imagine the medical applications for perpetual devices. Implants once not feasible because of the need to replace batteries will be possible. Pacemakers will be able to support a wireless link to upload data, EyeCams will be permanent once installed, and we may even see roving sensors that travel through our bodies monitoring our heart while cleaning our arteries.

1.8 Integration through SoC and SiP

So far we have addressed the three “P”s of value: price, performance, and power. The final aspect of IC technology that will serve as a foundation of medical imaging into the future is integration. Integration refers to our ability to implement more functionality onto a single IC as the number of transistors increases. Many in the electronics industry believe that the ultimate result of integration will be what is referred to as an SoC or “system on a chip.” There is no nice way to say this: They are wrong.

To understand this position, let us look back at history. When TI began producing calculators, the initial goal was to create a “single-chip calculator.” This may be surprising, but such a device has never been produced by TI or anyone else. The reason is that we have never figured out how to integrate a display, keyboard, and batteries onto an IC. What we did develop was a “subcalculator on a chip.” It is important to catch the subtlety here. We did not develop the whole system, just a part of it. Perhaps, it would be more accurate to use the term subsystems on chip (SSOC).

The same is true when we look at technology today. No one creates complete systems on chip, and for many good reasons. The best, perhaps, is that in practical terms, by the time we develop an SoC, we find it has become a subsystem of a larger system. Put another way, once a technology makes sense to implement as an IC (i.e., it has passed the high-volume threshold required to reduce tooling to a reasonable per-unit cost), it has likely been found to be useful in a great variety of applications.

And this leads us to the real focal point of system integration in the future—the system in package (SiP). Figure 1.5 shows that the roadmap of component integration can be simplified to three nodes. The first node—the design is built on a printed circuit board (PCB)—is well understood by system designers. At the second node, SiP, all of the components are integrated “upward” by stacking multiple ICs into one package. It is at the third and final node that all of the components are integrated onto a single IC using SoC technology.

FIGURE 1.5

A simple roadmap of component integration.

Again, once a system warrants its own SoC, invariably it is being designed back into larger systems. For this reason, technology is not stable at the SoC node, and it settles back either to being placed directly onto a PCB (node 1) or into a package (node 2). As we continue to increase the number of transistors available and lower their cost, these subsystems and the IP they represent will increasingly settle into a package (SiP) and less onto a PCB.

While compelling in itself, this is not the only reason for moving away from the SoC as the ultimate way to design systems. Consider that each advance in digital IC process technology delivers more transistors and perhaps operates at a lower voltage. But the real world is not digital, nor does it follow this trend, and analog circuits, as well as RF circuits, seem to favor higher voltages.

To create a whole IC system on a single piece of silicon requires using a single process technology. However, implementing digital circuits in an analog or RF process significantly increases the relative cost of the digital circuit. Likewise, implementing analog and RF in a digital process substantially reduces signal integrity. The only way we will be able to efficiently integrate all aspects of the system into one “device” is to implement each circuit in its appropriate process technology and combine the various ICs in a single package using SiP technology.

The fact that SiP is more efficient than SoC actually turns out to be really good news, for we will be able to take “off-the-shelf” devices and stack them in one package that provides virtually all of the advantages an SoC would. The primary difference, and it is significant, is that by using SiP technology we will be able to manufacture devices within months rather than the years required to produce a new SoC design.

And, in much the same way that programmable processors can bring the cost economies of high volume to specialized applications, developers will be able to create highly optimized, application-specific SiPs as if they were standard ICs. This will give rise to a new product concept, the “boutique IC,” where system designers can select from a variety of “off-the-shelf” ICs and “integrate” them into a single package for about the same cost as for the individual ICs. The resulting SiP will have the advantage of faster time-to-market, a smaller footprint, and a “living” specification where new SoCs can be integrated regularly to continually cost-reduce designs. This will certainly give new meaning to the concept of “one-stop shopping” for components.

1.9 Defining the Future

We have covered a lot of ground in our discussion of the future. When you first read about many of the medical applications we have suggested in this chapter, perhaps you thought they sounded more like science fiction than fact. However, most of these devices build upon existing, proven technologies such as digital imaging, telecommunications, and automated monitoring that will change how we approach medicine.

For the common person, and even iatrophobics such as Emily Dickinson, new, noninvasive techniques that are increasingly available in the comfort of their home will make the difference in diagnosing and treating diseases before they become debilitating or life-threatening. For people who live in remote locations, telemedicine will bring doctors and patients together in new and powerful ways.

The underlying IC technology required to bring many of these devices to reality is already, or will soon be, available. Moore's law will continue to give us more transistors than we can conceivably use. More general-purpose devices based on software programming models will enable the volumes that result in these transistors being available at a reasonable cost. Using some of those extra transistors to create multiprocessing circuits will provide higher performance and lower power dissipation. Finally, SiP technology will make possible smaller, more integrated designs as well as potentially enable an entirely new way to design ICs.

As a result, technology is not the limiting factor defining the future of medical imaging. Quite the opposite, technology is more the sandbox in which we can design the creations inspired by our imagination. Innovation is driven not by the fact that we can shrink a computer to fit in our pocket or our body, but rather by the fact that we need or want to shrink that computer.

For the medical industry, it is all about the patient. And what applications will arise and how fast they will manifest depends upon what patients need. Technology, for its part, will comply by providing us everything we need to make what we envision become real. So what will the future of medical imaging bring? The future will be whatever we want to make it.

The miracles are just beginning.

References

1. G.E. Moore, Cramming more components onto integrated circuits, Electronics, 38(8), 4–7, April 19, 1965.

2. G.M. Amdahl, Validity of the single processor approach to achieving large-scale computing capabilities, AFIPS Conference Proceedings, (30), 483–485, 1967.

3. Texas Instruments, SVP for digital video signal processing, Product overview, 1994, SCJ1912, Dallas, TX.

4. A.P. Chandrakasan, S. Sheng, and R.W. Brodersen, Low-power CMOS digital design, IEEE J. Solid-State Circ., 27(4), 473–484, April 1992.

5. S. Roundy, P.K. Wright, and J.M. Rabaey, Energy Scavenging for Wireless Sensor Networks, Kluwer Academic Press, Boston, MA, 2003.