22

Medical Image Registration: A Review

Lisa Tang and Ghassan Hamarneh

CONTENTS

22.1 Introduction to Medical Image Registration

22.2.1 Image Representation and Imaging Terminologies

22.2.3 Spatial (Geometric) Transformations

22.2.3.2 Spatial Transformation Models

22.3 Fundamentals of Image Registration

22.3.1 Mathematical Formulation of Image Registration

22.3.2.1 Smoothness Regularization: Implicit Approaches

22.3.2.2 Smoothness Regularization: Explicit Approaches

22.3.2.3 Constraints for Invertibility

22.3.2.4 Other Regularization Constraints

22.3.4.1 Closed-Form Solutions for Image Registration

22.3.4.2 Iterative Optimization

22.4 Two Commonly Adopted Registration Paradigms

22.4.1 Demons Algorithm and Its Extensions

22.1 Introduction to Medical Image Registration

With the advent of different medical imaging modalities, clinicians can now perform diagnosis in a minimally invasive manner. Nevertheless, each modality currently only provides particular types of information. CT and MRI, for example, provide structural information of our body (e.g., an anatomical map), but do not convey functional information* (i.e., relating to metabolic functions of an imaged organ). Conversely, modalities such as PET and SPECT capture functional but not structural information. This motivates image registration, the task of bringing two medical images into spatial alignment. Once images are aligned, information from different modalities can then be integrated and examined as a whole.

MIR indeed is a crucial step for many image analysis problems where information from different sources are combined and examined. The images involved may come from the same modality but captured at different times, or from multiple modalities and/or at different times, for example, as introduced earlier. Some general applications of MIR are listed in the following; more can be found in [18,38,89,160].

Multi-temporal image analysis. Images of the same subject are acquired at different times and/or under different physical conditions. Registration of these intra-subject images allows us to monitor disease progression, or to detect and/or quantify changes in an anatomy. Registration of intra-operative (between preoperative and postoperative) images can also enhance surgical procedures.

Multi-subject image analysis. Also known as (aka) intersubject registration; images of different subjects are registered for deformation-based morphology [8,114]. The subjects may also be of different species (e.g., human brain to chimpanzee brain for “asymmetry analysis” [7]).

Multimodality image fusion. As introduced earlier, images acquired from different modalities are aligned to create image fusions that facilitate clinical diagnosis [44,113]. Some clinical applications may also involve matching images of different dimensionality.

Construction and use of atlases. Group of images that are acquired from different sites and/or at different times are aligned simultaneously. This allows for statistical analysis of anatomical shapes [146], creation of probabilistic segmentations [139], brain functional localization [43], etc.

Dynamic image sequence analysis. This analysis involves dynamic stacking static images that were acquired at different time steps form dynamic image sequences, which are typically used to capture and quantify motion of an anatomy, for example, respiratory/cardiac cycle of the lungs/heart. One example application is the fusion of 2D+time ultrasound with 2D+time MRI to measure the distensibility of carotid arteries [87].

As we will see later, most of the proposed techniques for the aforementioned problems involve performing the following tasks: (1) characterizing the registration solution, which may involve a set of constraints imposed on the desired solution; (2) defining a list of criteria to quantify the optimality of a candidate solution; and (3) devising a strategy to find the desired solution. In the coming sections, we will individually examine the essential components central to each task. Let us first review the preliminaries to MIR.

22.2 Preliminaries

22.2.1 Image Representation and Imaging Terminologies

A digital image I gives a discrete representation of a continuous signal that measures physical quantities (e.g., light in photographs, or photon densities in an x-ray image). Following conventions of [56,92], the term physical or world coordinate is used to describe an arbitrary location in a continuous domain and the term image coordinate is used to index a particular cell on the image grid. We also refer to the data value at a grid center as its intensity value (“pixel” or “voxel” are also used in the context of a 2D or 3D domain, respectively) and denote a physical coordinate as x (in 2D, x = [x1 x2] represents the position of a point in the x and y dimensions).

Typically, structural images acquired from invisible light medical imaging modalities are scalar functions, that is, I: Ω ↦ ℝr, r = 1. For color images, or visible light medical images in general (e.g., dermoscopy, wound care imageries, etc.), I is a vector-valued function with r = 3. When I is an image sequence that is acquired to capture dynamic information occurring over time which is typically created by stacking static images to form 2D + time or 3D + time data, one may instead denote as I: ℝd × ℝ ↦ ℝr.

22.2.2 Data Interpolation

When one wants to query for intensity values at locations between cell centers (e.g., one third position of a cell), one must approximate these values from the original data. This is known as image interpolation or image resam-pling, and is usually done in a process where a continuous function is fitted to the original set of data points.

Common interpolation methods include nearest neighbor, linear (bi- or tri-), polynomial (cubic, quintic, etc.), and windowed sinc. Other less commonly used ones include Lagrange and Gaussian interpolation, as well as methods based on the Fourier and Wavelet transforms. For details on the latter methods proposed, see the survey of [72].

The simplest interpolation method is nearest neighbor (aka piecewise constant or proximal interpolation) where the intensity value of x is determined as the intensity value of its closest grid location. However, being a discontinuous function, nearest neighbor is not normally used for registration.

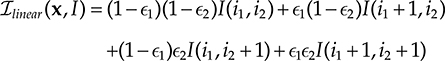

The more commonly used interpolant is linear interpolation (bilinear and trilinear for 2D and 3D, respectively). In 2D, it is defined as

where i1 and ∊1 are, respectively, the integer and remainder parts of x1 (similarly defined for those of x2). Unlike nearest neighbor, it generates spatially continuous intensity values. However, it is not differentiable at the center of grid cells, which is a problem when a gradient-based optimization technique is used to optimize the registration objective (see Section 22.3.4.2). It also causes spatially varying, low-pass filtering of the images [38], which can be a problem when computing similarity metrics on image pairs.

From the perspective of signal processing theory, the most ideal interpo-lant is one that uses the sine function because it has the fullest potential to recover the continuous signal underlying I [56,72]. For reasons of practicality, the sine function is only approximated with a Window function w. In 2D, a windowed sine interpolant is defined as

where m is the radius or spatial extent of w and

Nevertheless, the windowed sine interpolant has several trade-offs in its utilization, for example, large m, while being more precise, will require longer computation times [144].

Spline interpolation may arguably give optimal balance between computation time and precision of interpolation [73]. The spline interpolant involves representing the continuous form of I by a set of spline basis functions βj and computing a set of expansion coefficients cj of βj such that the resulting basis function interpolates the values of I at grid locations. The set of coefficients are computed by solving a linear system of equations, which is most effi-ciently done by recursive digital filtering. Intensity at x is computed by multiplying the B-spline coefficients with shifted B-spline kernels within a small support region of the requested position; in 2D, this is done as

where j indices the pixel coordinates within the support region of the spline window.

22.2.3 Spatial (Geometric) Transformations

In image registration, a spatial transform plays the crucial role of representing registration solutions in a precise manner. Mathematically, it may be defined as U: Ω ↦ ℝd, Ω ⊆ ℝd, which describes a mapping between the coordinate system of one image and that of another image.

22.2.3.1 Terminologies

A mapping U is said to be smooth if all of its partial derivatives of up to a certain order exist and are continuous. It is said to be bijective if it is a one-to-one mapping. It is said to be invertible (aka homeomorphic or topology-preserving) if it is bijective and continuous, and that its inverse is also continuous. Note that smoothness of U is not equivalent to invertibility, that is, invertibility does not require differentiability of U. When U is both smooth and invertible, it is called diffeomorphic.

Some of these properties of U are highly desirable; after all, properties of U affect the appearance of the image to which it is applied. Generally, smoothness of U is desired as it directly affects the smoothness of the image on which it is applied to. Applying a random warp (vector field with random displacements) to an image, for instance, will destroy the morphology (level sets) of an image [34].

Bijectivity, on the other hand, may or may not be required. Bijectivity of U ensures that the folding of space does not happen as multiple points cannot be mapped into the same point. In some cases, however, the folding of space should be allowed. This occurs, for example, in intra-operative image registration where tearing and resection of tissues can occur in the postoperative image. Even for registration of intersubject brain images, the topology of the brain, the shape of the ventricles, the number and shape of the sulci may vary significantly from one subject to another [114] such that bijectivity should not be strictly enforced.

Invertibility also may or may not be required. It is needed when one needs to map individual images from a set to a common space. It is also needed in order to allow for decomposition of the transformation Jacobian, which is often done in registrations of diffusion tensor images to reorient tensor values at each location after deforming an image.

Lastly, we desire a diffeomorphic transform when we need to maintain the (non-) connectivities between neighboring anatomical structures after registration [12]. Note that a diffeomorphic transform that matches arbitrary number of points can always be found (proof given in [63]).

22.2.3.2 Spatial Transformation Models

Spatial transformation models (transforms) are often used to parameterize U, thereby allowing U to be compactly represented. The ones commonly used for image registration are categorized as either linear or nonlinear.

Linear transforms include translation, rotation, rigid, affine, and perspective. A rigid transform allows for translations and rotations only; an affine transform allows for rigid as well as scale and skew (distortions that preserve parallelism of lines); a projective transform allows for all distortions that preserve colinearity (lines remain straight after distortion).

Compared to linear models, nonlinear transformation models allow for more localized deformations. Common nonlinear models include those that are based on radial basis functions (RBF). Under these models, a set of points ℘ = {xi}, for example, manually identified landmarks in Ω, is used to extrapolate U:

where

Hm−1 (x) is a polynomial of degree m − 1

β is a basis function

ci are coefficients to be solved in a system of equations

Note that ℘ may be irregularly located in Ω. Different forms of β(r) results in different types of spline [124]. For example, with r = ‖x − ℘i‖, defining the RBF as β(r) = r2 ln r and β(r) = r gives the thin-plate-spline (TPS) in 2D and 3D, respectively. With (r2 + a2), where 0 < b < 1 and a is a user-parameter that increases the amount of smoothing, one gets the multi-quadric splines (MQ). Other choices are described in [52,124].

Many RBFs like TPS and MQ give global support, that is, one control point has a global effect on U (i.e., deformations in one image region affects the entire domain). This property may cause problems as large and inconsistent displacements in ℘ can lead to singularities in the systems of equation to be solved. Furthermore, the size of ℘ directly affects computational complexity of solving the system.* In these regards, basis functions with local support are highly desired.

One of such locally supported functions is the basis-spline (B-spline). When extended to a multivariate function via the use of tensor products, it gives the free-form deformation (FFD) model. Under FFDs, the image domain is divided into a lattice whose intersections represent the positions of a set of control points B such that translating the control points induces local deformations. Performing registration is then reduced to finding the optimal translations for each control point [46], or the optimal values of the set of B-spline coefficients [107], or the divergence† and curl of U at control point [54]. The DOFs of FFD is thus determined by the size of this set of parameters. The spacing between control points determines the scale of local deformations, for example, lowering the spacing allows for more localized motion. In 2D, it has the form:

where

β.,. is a basis function of degree n

(r, s) are lattice coordinates relative to x

Despite some of its deficiencies [126], FFD is a popular choice in many applications due to its ease of implementation, low computational complexity, and the superior properties of B-spline functions (continuity and locality).

Another popular choice is to represent U with finite elements [102,122], which involves discretizing Ω with mesh elements, each with a set of meshes. To obtain values of U at points within a mesh element e, one then uses linear interpolation:

where

Be is a basis function for e

the nth node of mesh e

Lastly, parameterization of U has also been done with discrete Fourier transformation (DFT) [27] and discrete cosine transformation (DCT) [52], which involve globally supported basis functions (as they operate in frequency domain). Usually, only low-frequency basis functions are used for parameterization, which by construction gives inherently smooth regu-larized solutions [159]. Gefen [42] also modeled U with wavelet expansion and showed that its use can give more accurate registrations than use of TPS. However, the complexity of the wavelet approach depends on the size of U.

In summary, linear models and nonlinear models differ in terms of their DOFs, which in turn determine the different types of geometric distortions they induce on images. In some applications, linear models are sufficient to describe misalignment between two images, for example, registration of brain images. In others, both linear and nonlinear models are needed to correct for global and local misalignment between two images. There are also cases where misalignment between two images involve both articulation (e.g., of rigid bones) and elastic deformations (e.g., soft tissues surrounding rigid bones) such that the use of a global nonlinear transform will give elastic deformations everywhere, or conversely, the use of a global linear transform will fail to align elastic tissues. In these cases, a common strategy is to partition the image domain into different regions, determine a local (non) linear transform for each region, and employ a scheme to spatially fuse the piecewise local transforms [100]. Another approach is the use of polyrigid models [3]. Details on the aforementioned linear and nonlinear models can also be found in focused reviews [52,144] or general surveys [18,44,77,83].

22.3 Fundamentals of Image Registration

22.3.1 Mathematical Formulation of Image Registration

Let there be two images F and M. The goal of registering F and M is to find a spatial transformation U that optimally maps points in F to the corresponding points in M.* Optimality of U is measured by a set of criteria of two types: regularization (aka internal forces), which measures the regularity of U, and data dissimilarity (aka external forces), which measures how well U relate F and M based on image data. Details on these terms will be surveyed in the following subsections. Once a set of criteria has been defined for a specific registration problem, the optimal U can then be found via minimization of a cost function C that contains a weighted sum of two or more of these criteria, for example,

where

denotes a data dissimilarity term

ℛi+1 denotes a regularization term

αi is a weight on the ith term

22.3.2 Regularization

Solution of an image registration problem, U, is generally not unique.† To reg-ularize U, we may restrict the type of U to a certain space, such as the space of affine transformations or the space of diffeomorphisms (Section 22.4.1). We may also explicitly enforce constraints on the behavior of U based on our prior knowledge about the expected solution. For example, when registering x-ray breast images, one might impose incompressibility constraints in tissue deformation [104]. When registering images containing rigid bodies (e.g., bones [129]) one might impose local rigidity constraints to disallow elastic deformations in rigid regions.

In Section 22.2.3, we have outlined the desired properties of U, including smoothness and invertibility, arguably two of the most important ones. We next present how these may be achieved. Other regularization constraints proposed in the literature are highlighted in Section 22.3.2.4.

22.3.2.1 Smoothness Regularization: Implicit Approaches

A common approach to regularization is to constrain the behavior of U via use of a spatial transformation model. As discussed previously, these models impose constraints on the type of geometric distortions they induce on images. For example, rigid transforms preserve all internal angles and distances, perspective transforms only preserve colinearity (points that lie on a line remain so after they have undergone transformation), while nonlinear transforms allow for localized deformations.

Solving U using parametric transforms generally simplifies the optimization of the objective function (Equation 1.7). Rather than solving for U at each spatial location in Ω (c.f. Section 22.4.1), one only needs to resolve the transform's parameters, effectively reducing the size of the solution space (in case of linear transforms, the dimension of the search space is equal to the number of transform parameters). Furthermore, the inherent smoothness properties provided by a given spatial transformation model ensure some regularity of U. Thus, in many older works, for example [82], no explicit regularization was done. However, in more recent works, invertibility has become an important criterion [29]. As all of the aforementioned parametric models do not enforce invertibility, additional regularization terms are needed; these will be examined in Section 22.3.2.4.1.

22.3.2.2 Smoothness Regularization: Explicit Approaches

When no parameterization of U is used, any point may be displaced arbitrarily, which would lead to an unrealistic warp of M. To ensure smoothness of U, as well as other desired properties of U outlined in Section 22.2.3, explicit energy terms are needed (ℛi+1 in Equation 22.7). We now examine in detail some of the common ones found in the literature.

22.3.2.2.1 Regularizers Motivated from Basis Functions

Regularization terms in Equation 22.7 are typically defined based on an application-dependent Sobolev semi-norm (bilinear form) [90]:

where L is a differential operator on U.

One regularizer proposed by Horn and Schunk [53] is the diffusion regularizer, which penalizes variations in the gradients of U. In 2D, it is defined as

where Ui(x) denotes the ith component of U at x. This definition relates closely to the classical Tikhonov regularization for ill-posed problems. When instead an L1 norm is used, such regularization falls in the class of total variation (TV) methods [10,41], leading to a TV regularizer that is defined as

which allows for discontinuities in U. However, as it is non-differentiable at zero, researchers have proposed to replace |x| with where value of k affects amount of discontinuities allowed.

Note that the aforementioned regularizers also penalize linear transformations [92]. To address this, the curvature regularization (aka bi-harmonic) [20,92] was proposed:

which approximates the mean curvature of the surface of U. By taking the Laplacian of U instead of its gradient, linear transforms result in zero smoothness cost. Furthermore, as the null-space of the operator has infinite dimension and contains all harmonic vector fields, use of this regularizer ensures smooth deformations [92].

Another common regularizer is based on the thin-plate-spline model (Section 22.2.3):

Note that this regularization term is zero for any affine transformations and therefore penalizes only non-affine transformations.

In [22], Chen and Suter proposed a generalization of the scalar thin-plate splines (TPS) called the m-order regularization:

where the divergence and curl operators are used to impose incompressible flow and irrotational flow, respectively, and β1 and β2 weight the respective components. According to [22], this regularizer is applicable in tagged cardiac MRI registration that require the solenoidal component of U to be divergence-free.

22.3.2.2.2 Regularizers Based on Continuum Mechanics

The linear-elastic and fluid-flow models are two classic physics-based regularization methods [52,92,103].* Both models borrow concepts from continuum mechanics to describe the amount and type of forces exerted on a medium and model image deformations as responses to forces acting on either a elastic body or viscous fluid.

Elastic regularization is based on the theory of linear elasticity, which states that the body forces and “surface stresses” of a linear elastic material sum to zero when the material is in equilibrium, thus giving rise to the following Navier-Cauchy linear elastic partial differential equation (PDE) [52]:

where f denotes the body force; μ and λ are the Lame parameters, which can be interpreted in terms of Young's modulus and Poisson's ratio†, and are used to describe the relationship between stress and strain (internal forces that bring the material into equilibrium). The first term is associated to the incom-pressibility of the material [15,52]. The second term allows for expansion (dilation) or contraction of the material [17,124]. Formulating MIR with this PDE, f is modeled as matching forces based on image similarity (more details in Section 22.3.3) and the remaining terms are seen as internal forces that regu-larize U. Accordingly, elastic regularization can be formulated as [92]

The derivation of Equation 22.14 assumes that the stress and strain are linearly related, thus assuming that the elastic properties of the material is homogeneously by isotropic [52]. This is generally not true in many biological materials. In practical terms, the linear relationship assumes that the restoring forces increase monotonically with strain [29], thus penalizing large deformations. Additionally, ignoring the second-order derivatives of U would lead to large numerical errors for large deformations [52].

To address these problems, Christensen et al. [29] proposed a viscous-fluid-based model, which views a deforming image as a highly viscous (sticky and thick) fluid. Specifically, this model is built on several physical laws that govern conservation of mass, energy, linear and angular momentum. We will highlight two of the most relevant. The first is the constitutive equations [52], which state that the stress tensor Σstress is linearly related to the rate of deformation tensor D as follows:

where D is expressed as

with V being the instantaneous velocity of U.

The second is the conservation of linear momentum, which states that

where η is a parameter that allows for “arbitrary creation or destruction of mass.” Combining Equations 22.16 through 22.18 results in the Navier–Stokes–Duhem equation:

and assuming negligible change in ρ and ignoring* the inertial terms and ηV) yield the following Navier–Stokes PDE:

Remarkably, Equation 22.20 is similar to Equation 22.14 except that this PDE operates on V instead of U. Intuitively, operating on V gives a more accurate calculation of the displacement gradients (second-order gradients of U are not ignored) leading to a more realistic model of material deformations.

22.3.2.3 Constraints for Invertibility

None of the aforementioned regularization methods detailed so far ensures invertibility of U. A simple approach to ensure invertibility is to add a regu-larization term to the registration objective function (Equation 22.7) that penalizes cases when |D| < 0.3 [106,126].

In [62], Chen and Lee showed that FFD based on cubic B-splines is locally injective over Ω if the maximum displacement of control points is limited to 0.40δ, where δ is the spacing of control point grid. This means that invert-ibility of U can also be ensured by constraining the maximum displacement of control points of an FFD lattice (e.g., control points on a lattice with 20 mm spacing should be constrained to translate no more than 8 mm). However, this restriction becomes impractical when one needs to recover large deformations (i.e., >8 mm). A better alternative may be the polyrigid/polyaffine transformation models proposed in [3].

22.3.2.4 Other Regularization Constraints

22.3.2.4.1 Inverse Consistency

Related to the invertibility constraint is inverse consistency, which demands that registration results be consistent or symmetric: if U2 is the inverse transform of U, that is, M ° U = F and F ° U2 = M, then U2 ° U = Id [8,27]. Inverse consistency is important when the end-goal of MIR is to transfer some quan-titation from one image to another (e.g., segmentation via registration) such that results are invariant to choice of the reference image.

Theoretically, the process of finding an invertible transform is inherently symmetric; however, this is practically not true if an asymmetric data similarity cost is used [11]. One solution is to symmetrize the data term as done in [5,11,111], or to add a regularization that penalizes the residue between U and its inverse [26], for example,

22.3.2.4.2 Volume-Preservation

One may require U to be volume-preserving (VP), which is desired* when F and M are known to be capturing the same anatomy that does not change in size. An interesting formulation of VP is based on the optimal mass transport problem of Monge-Kantorovich [127]. A simpler alternative is the incom-pressibility constraint:

Other forms include and those given in [104].

22.3.2.4.3 Local Rigidity

When registering images containing rigid objects (e.g., bones, hard tissues), one demands that these hard objects remain rigid after registration. One can do this by enforcing additional local rigidity constraints over selected regions; for example, in 2D [91]:

where each aforementioned term measures local deviations from area-preservation (linearity), angle-preservation (orthogonality), and VP, respectively. Another [48] formulates local rigidity as hard constraints. Pennec et al. [98] also measured local rigidity with trace((JT J − Id)2).

22.3.3 Data (Dis)Similarity

The sources of information that the data (dis)similarity term is built upon can generally be classified as model-based or intensity-based [2,92].

Model-based information is often provided in forms of landmark correspondences identified by clinical experts or manual segmentation of the input images. Examples include [30] where Chui et al. used the Robust Point Matching algorithm to match manually extracted sulcal points for brain image registration, and [25,63] where researchers have matched landmarks via dif-feomorphic warps. Fuzzy or hard segmentation labels, too, can be matched using the cardinality match or Kappa statistics similarity metrics [56].

Due to the need for human intervention, accuracy of model-driven registration highly depends on the correctness of landmark correspondences or segmentation. When employing landmark correspondences, the identified landmarks should be spatially distributed [56,92]. When using segmentation, one needs to ensure that interpolation artifacts would not corrupt evaluations of image similarity. Consequently, pure use of model-based data terms is quite restrictive.

In contrast to model-based information, intensity-based information is derived entirely from the input images. This information may be based on raw image intensities or features extracted from the intensities.

In mono-modality registration, data similarity terms using information about raw intensities usually assume that the similarity between F and M is related to their intensity distributions. Assuming both F and M contain the same anatomy and U represents an optimal alignment, their intensity relationship becomes:

where

τ is zero-mean Gaussian noise

ζ is an intensity correction field

Essentially, Equation 22.25 states that after applying U to M, M and F only differ by an intensity mapping with an additive noise.

For some applications, e.g., CT-CT intra-subject image registrations, we may assume ζ = 0, thus allowing for the use of the Sum of Squared Difference (SSD) metric. If instead, intensities of F and M are linearly related, then one may use the (normalized) cross-correlation (CC) metric [5,144], the coefficient of variation [143], or those based on the normalized intensity gradients [49].

The assumption that ζ is zero or linear can easily be violated when images are corrupted by spatially-varying or “nonstationary” intensity distortions, for example, MR images. Real-world images are also often corrupted by spatially varying distortions, occlusions, illumination inhomogeneity, and reflectance artifacts [77,95].

To address intensity inhomogeneities, measures based on information theory may be used. These include joint entropy [92], entropy correlation coefficient [40], cumulative residual entropy [138], normalized mutual information (NMI) [2] and its numerous other extensions, for example, regional MI, alpha-MI, generalized survival exponential entropy–based MI (GSEE-MI) [75,78,81,107,137]. As these measures belong to a subclass of the divergence measures, which measures distances between distributions [101], they can be more robust against intensity inhomogeneities. However, while they are shown to be effective for many multimodal registration problems [2], these measures still assume global spatial dependencies between corresponding pixels. To better capture nonstationary dependencies, authors have proposed to compute these measures locally, that is, average over a small pixel neighborhood, based on the observation that spatially varying intensity distortion is constant within a small pixel neighborhood. Examples include local CC and regional MI.* More explicit ways include performing intensity correction and registration simultaneously or removing ζ (via some training procedures, etc.) before metric evaluation [37,93,95]. Alternatively, Droske and Rumpf [34] proposed to match image level sets using a Mumford-Shah formulation for registration.

Data dissimilarity terms built on feature-based information generally involve extracting and using particular type of information from the images for registration. Edge-based features include edgeness, ridges, local curvature extrema, corners, crest, and extremal lines [17,83,96,149]. Others that examine the texture of image regions include Gabor [16] and grey level cooccurrence matrix (GLCM) [16], “braintons” [75], and Laws’ texture coefficients [60]. Features that analyze and capture the structural properties in images include vesselness [21], local structure [116], and moment invariants [39,151]. Those adopted from the computer vision community include SIFT [23], SURF [157], and shape context [1,128].

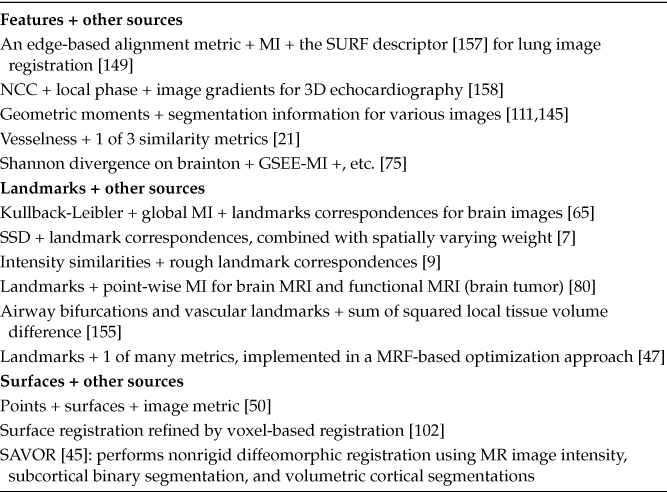

In the past decade, researchers began to adopt a hybrid approach where multiple sources of information are combined to improve existing metrics. One of the most prominent examples includes the HAMMER algorithm (Section 22.4.2), which combines segmentation-based information and geometric moment invariants (GMI), which are calculated in neighborhoods of different sizes. Avants et al. also [6] proposed the idea of integrating different image metrics and sources for DTI-registration (e.g., combining a correlation-based metric on T1 and FA images). Their software, too, allows users to combine multiple image metrics as well as landmarks. Hartkens et al. [50] also combined user-provided surface information with NMI for nonrigid registration of brain images, while Cao et al. [21] combined a feature-based measure with a conventional intensity-based measure for lung image registration. Table 22.1 highlights various other hybrid approaches.

22.3.4 Solution-Search

If the registration objective contains only one data term (e.g., Equation 22.7 contains D only), it may be possible to perform registration analytically, as we shall see in the next section. However, as Section 22.3.2 explained, reg-ularization constraints are generally needed, meaning that Equation 22.7 involves more than one energy term. Optimization methods are therefore needed to minimize a potentially complex and nonlinear objective function. Section 22.3.4.2 will highlight some of the optimization methods that have been devised for MIR.

22.3.4.1 Closed-Form Solutions for Image Registration

Most closed-form solutions for image registration operate on ordered point sets (point sets with known correspondences) that have been extracted from the images to be registered. As discussed in Section 22.2.3.2, if point correspondences are available, one may compute a nonlinear transform directly via TPS interpolation. It is also possible to resolve the parameters of a rigid transform between two ordered point sets in closed form. According to [36], the earliest method for closed-form rigid registration is based on minimization of a least squares (LS) error* (known as fiducial registration error if the point correspondences originate from fiducial markers or expert-identified landmarks) and is solved via SVD. Its later variants include different formulations of the Eigen system describing the point correspondences. Three of such are described in [36].

TABLE 22.1

Hybrid Approaches to Construction of Data Dissimilarity Terms

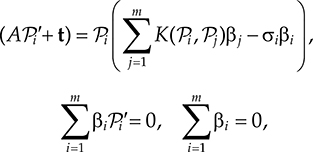

For affine motions based on least squares, regularization is needed to prevent all points in one point set to be mapped to a single point of the other set (as this would give an LS error of zero) [35]. For this, one may employ the Laplacian operator (i.e., L = a∇2 + cI where a, b are constants) for regularization and allow for inexact point correspondences by assuming Gaussian distributed noise around each. This led to the following Bayesian energy minimization as proposed in [28]:

where

m is the number of correspondences

A, t are respectively the affine matrix and translation vector to be estimated

σi is the covariance matrix that represents the spatial uncertainty of the ith pair of correspondence

By inverting the system matrix of the following system of linear equations

one attains the optimal values for A, t, and βi (a vector of weights to be calculated for each correspondence), with K(x, y) = k Id being a d × d matrix where k ∝ e‖x − y‖. The optimal transformation is then computed as . Another closed-form approach for affine registration is to reduce the affine problem to that of the orthogonal case [51]. However, the accuracy of this approach is questioned in [35].

When correspondences between extracted point sets are not known, rough estimation of the translation and rotation parameters can be done independently by first computing the displacement vector that would align the centroids of the point sets and then resolving for the rotation parameters via principal axis transform [18]. Spectral algorithms may also be used to solve rigid registrations, for example [58]. These algorithms operate by encoding point sets as affinity matrices and performing eigen-decomposition on these matrices. However, for spectral methods to work robustly, the eigen-structure of the affinity matrix must be rich in order to provide discriminating features for proper determination of point correspondences. Otherwise, optimization techniques such as the iterative closest point algorithm and its variants may be better alternatives [35].

Affine transformations may be solved in closed form using properties of the Fourier transform [125]. Furthermore, if U can be described by a finite set of displacement vectors, one may also solve for U by formulating a linear assignment problem (LAP) [130]. Recently, Westin et al. [64] also developed the polynomial expansion framework that was designed to solve global and local linear registrations analytically.

22.3.4.2 Iterative Optimization

A common approach taken by many optimization methods is to view the solution space as a 1D landscape, known as functional landscape, where a position on the landscape corresponds to a candidate solution and elevation corresponds to the optimality of a solution, that is, C. One then takes a step from the current to a new position on a path that would lead to the location of lowest elevation in the landscape. This can be mathematically expressed as

where Pk denotes a vector of transform parameters obtained in the current iteration, Pk+1 corresponds to a candidate vector to be examined next, dk is the direction of the next step, and ak is the size of the step.

There are many strategies for determining ak. For example, it can simply be set to a fixed value. It can also be defined using a decaying function of k or a line search scheme where for every k, one tries to minimize C along the search direction dk:

This can be costly as each iteration involves multiple evaluations of C, so inexact searches are usually used [67].

How one exactly computes dk and ak differentiates different optimization techniques from one and another. We next present two general classes of optimization methods: gradient-based and gradient-free. The former computes dk based on the gradient of the cost function, typically by examining the cost function with regard to changes in transformation parameters. Conversely, gradient-free approaches operate on the cost function directly. The next subsections present the details of each.

22.3.4.2.1 Gradient-Based

Gradient-based methods may be deterministic or stochastic, where the difference lies in the use of a deterministic or random process during the entire optimization process [67].

Deterministic techniques that have been employed for image registration include gradient descent, quasi-Newton, nonlinear conjugate gradient, etc. These are common in that the search direction dk is based on the derivative of the cost function with respect to the parameters. Under gradient descent techniques, the search direction is updated as

Klein et al. [66] explored two variants of the gradient descent method. One applies a decaying function on k for ak; the other uses an inexact line search routine called “More-Thuente” to determine ak.

Quasi-Newton (QN) methods may have “better theoretical convergence properties than gradient descent,” thanks to the use of second-order information [66]. They are based on the Newton–Raphson algorithm, which is given by

where H(Pk) is the Hessian matrix of the cost function. However, calculation of the inverse of the computation is expensive, so QN methods approximate the Hessian, for example, L = [H(Pk)]−1. Calculation of Lk can be done in several ways, including Symmetric-Rank-1, Davidon–Fletcher–Powell, Broyden–Fletcher–Goldfarb–Shanno (BFGS), and the Limited memory BFGS, which is a variant of BFGS that avoids the need to store L in memory. According to [67], BFGS is very efficient in many applications.

The nonlinear conjugate gradient (NCG) method is based on the linear conjugate gradient method, which was first designed for solving a system of linear equations. NCG is an extension that is suitable for minimizing nonlinear functions. Under NCG, the search direction is defined as a linear combination of the cost gradient and the previous search direction, that is,

where the scalar βk affects the convergence property and can be computed using different equations (see examples in [67]).

All of the aforementioned methods require an exact calculation or close approximation of the first- or second-order functional gradient. This can be very computational expensive. To address this, approximation of the cost gradient using stochastic sampling is done. The Kiefer–Wolfowitz method, for instance, uses finite difference to approximate the gradient, that is, [67]. This method requires 2N evaluations of the cost function for each k where N is the dimensionality of the solution space. The simultaneous perturbation method may also be used, which only performs two evaluations of the cost function, independent of N. The Robbins-Monro algorithm approximates the functional derivative using only a small subset of voxels randomly selected in every iteration. It was shown to give best compromise between convergence and accuracy over all aforementioned methods (both deterministic and stochastic ones), as evaluated in various registration experiments [67]. For further details on the aforementioned or other gradient-based optimization algorithms, we refer readers to Klein's thesis [67].

22.3.4.2.2 Gradient-Free

Next, we present different classes of gradient-free approaches, many of which make few or no assumptions about the problem being optimized and can conduct searches in a very large solution space. Often, some form of stochastic procedures is used in these methods.

22.3.4.2.2.1 Search Approaches

Powell's method (Powell's conjugate gradient descent method) is one commonly used gradient-free, search-based optimization technique. It finds the optimum in a d-dimensional solution space with a series of 1D searches. For example, finding the optimal parameters for an affine 3D transformation usually starts with a 1D search along lateral translation direction, then along the cranial–caudal translation direction, and followed by a rotation about each of the three axes. The choice of ordering influences the success of optimization and many have adopted heuristics to select the most suitable order.

A related algorithm is the simplex algorithm (aka Nelder-Mead simplex, downhill simplex, or amoeba method). Following the analogy of [144], one can see simplex as a creature with n + 1 feet crawling in an n-D search space. One of its feet stands on the initial parameter estimate while the rest are randomly placed. At each iteration, the cost function is evaluated at each foot position and the foot with the worst cost is moved to improve its cost. Some specific rules are designed to guide which foot to move next and by how much.

Other examples of search-based algorithms include simulated annealing, DIRECT [135], and Tabu search.

22.3.4.2.2.2 Evolutionary Algorithms

A representative class of metaheuristics is evolutionary algorithms (EAs). EAs are based on the principle of natural selection, that is, the fittest survives. The solution space is seen as a dynamic population. A candidate solution plays the role of an individual in a population and its function cost corresponds to its fitness value of surviving in the population. Evolution of the population then occurs after repeated applications of reproduction (aka inheritance), mutation, recombination (aka crossover), and selection. Many methods based on this idea have been proposed in the literature; an extensive review is given in [31].

One popular choice is the evolutionary strategy (ES). In each iteration, “children” are created via mutation of the population (its “parents”). A mutation is a random vector generated from a multidimensional normal distribution. The covariance matrix of the distribution is adapted in each iteration: it is increased if the new population consists of better individuals or decreased otherwise. The population then reduces back to its original size by keeping only the fittest. A variant is the one-plus-one ES [115], where both the population size and the number of children generated are equal to 1; this variant was used by Chillet et al. [24] to affinely register 3D models of brain and liver vasculatures (encoded with an inverted distance map) to CT or MR images.

A relatively new class of algorithms for multimodal function optimization is those based on artificial immune systems (AIS), which are inspired by theoretical immunologic models. They are capable of locating the global minimum of a function as well as a large number of strong local optima by automatically adjusting the population size and combining local with global searches. Recently, Delibasis et al. [33] applied it to solve for a spatial matching between point correspondences identified in medical images.

Of all EA methods, the current state-of-the-art is probably the covariance matrix adaptation method [67]. It has several phases. In the reproduction phase, a set of m search directions (dk) is generated from a normal distribution whose covariance matrix is computed to favor search directions that were successful in previous iterations (and m is a user-defined parameter controlling the population size). In the selection phase, the cost function is evaluated for each search direction using Equation 22.28, and the l best search directions are selected. Finally, in the recombination phase, a weighted average of the l selected trial directions is computed to generate the next set of search directions. Recommendations for the choice of m and l based on n are given in [67].

22.3.4.2.2.3 Discrete Optimization

Discrete optimization operates by searching for the best solution out of a discrete set of solutions. When applied to image registration, they usually involve modeling the deformation U as a random field and recasting registration of F and M as a Markov Random Field (MRF) energy minimization problem [14,46,71,109], which formulates the statistical dependence between F and M as

where the conditional probability p(F|U, M) is the data likelihood that models dependence between intensity values between the two images and the prior probability p(U) is the prior that favors plausible deformations. As we will see shortly, these probabilities essentially correspond to data dissimilarity and regularization terms of a typical registration objective given in Equation 22.7. Assume F is conditionally independent of U and M, the first term in the preceding becomes

Assuming further that F(x) only depends on M ° U(x) and not the rest of the values in M, one obtains

We will now see how use of discrete optimization can simplify maximum a posterior (MAP) inference. Let the spatial coordinates of M be a graph G (whose set of nodes V correspond to x ∊ Ω and edges (xi, xj) ∊ ∊ encode neighborhood relationships, for example, 4-connectivity in 2D, etc.). In the simplest form, the data likelihood can model pixel-wise dependence between F and M with a Gaussian noise model, that is,

and the prior can be encoded as a regularization penalty that examines the interactions between displacements of two neighboring points xi, xj, that is,

Performing MAP inference can now be done as minimization of the following:

which leads to minimization of the following MRF energy:

where the first summation over unary potentials θi is identical to the SSD metric (Section 22.3.3) and the second summation over pair-wise potentials θij resembles a Laplacian regularizer on U (Section 22.3.2). Note that this MRF energy contains potentials of first-order* and that the maximum clique of its corresponding graph is size of 2.

MAP inference with MRF as given earlier can be solved with different classes of algorithms; these include linear programming, message passing, and graph-cut. Algorithms for solving integer linear programs include cutting-plane method, branch-and-bound, branch-and-cut, etc. Message passing algorithms include Belief Propagation, Tree Reweighted Message Passing (TRMP), and Max-Product algorithms. Graph-cut algorithms include α-expansion and α-β swap [14].

Different matching problems have adopted a discrete optimization approach [14,70]. For MIR, one of the earliest applications of MRF energy minimization include [121] where Tang and Chung resolved U at every location via minimization of Equation 22.38. Lombaert et al. [79] later extended [120] by incorporating the use of landmarks for deformable registration of coronary angiograms.

To control deformations more effectively, Glocker et al. [46] parameterized U with FFD transformation model and applied MAP inference to solve for the optimal translations of the control points of the B-spline model. To minimize their objective function, they employed Fast-PD [69] (stands for Fast Primal-Dual approximation), which works by solving the energy minimization problem by a series of graph-cut computations. The process reuses the primal and dual solutions of the linear programming relaxation of the energy minimization problem obtained in the previous iteration, thus effectively decreases run time; see review [47] for details. Their method was widely accepted due to its high efficiency and public availability made possible through their software called DROP, which contains implementations of various image similarity measures. Kwon et al. [71] also used the FFD framework for 2D registration, but proposed the following higher-order regularization term that does not penalize global transformations like Equation 22.38 would:

where Ud denotes the dth component of U. The higher-order MRF energy was then converted to a pair-wise one, so it could be solved with TRWP.

In [109], Shekhovtsov et al. performed MRF-based matching using a linear programming relaxation technique. However, they proposed to decompose a 2D deformation model into x and y components so that the problem could be solved with sequential TRMP. Lastly, Lempitsky et al. [74] proposed the fusion-move approach to combine discrete optimization with continuously valued solutions for 2D image registration, effectively avoiding local minima while avoiding problems due to discretizing the deformation space. Specifically, candidate flow fields (Û) were generated using the method of [53]. Each candidate flow was seen as a “proposal” label l ∊ ℒ, which was then iteratively examined and fused in a moving-making approach. MRF-energy minimization in each iteration was performed with the Quadratic Pseudo Boolean Optimization algorithm [68]. In regularizing U, the authors proposed the following pair-wise potentials:

where and b is a constant. Fused solutions were then refined with gradient-based optimization.

22.4 Two Commonly Adopted Registration Paradigms

22.4.1 Demons Algorithm and Its Extensions

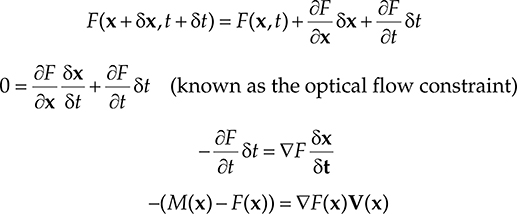

To better understand thermodynamic equilibrium, the Scottish physicist James Maxwell [123] came up with the concept of demons as door guards that selectively allow “hot” molecules through. Adopting this concept, Thirion [123] proposed to formulate registration as a process where demons locally push voxels into or out of object boundaries to allow for image matching, by treating images as isointensity contours and computing demon forces to push these contours in their normal direction to encourage image alignment. The orientation and magnitude of the forces are derived from the instantaneous optical flow equation [56], which assumes that M is the deformed F, whose deformations δx are due to local motions that occur after δt, that is,

where the last equation is known as the brightness constancy assumption. Using linear approximation, we get,

The method alternates between computing the image forces and performing regularization on the motion field using Gaussian smoothing. This simple scheme is computationally efficient. In [97], Pennec et al. showed that the Demons algorithm can be seen as an approximation of a second-order gradient-descent on the SSD metric. Bro-Nielsen [17] also showed that applying the Gaussian filter instead of the real linear elastic filter is an approximation of the fluid model; see details in Section 22.3.2.2.2. Surveys of the state of art of optical flow algorithms are given in [10,135] and the references therein; the latter in particular also provides public data sets and methodologies for evaluation of optical flow algorithms. [75] also provides ground truth motion fields of real-world videos.

Since its conception, different extensions of Thirion's Demons algorithm were proposed; these include extension of the image forces to be based on cross-correlation [5] or phase [59]. In [19], Cachier et al. proposed the PASHA algorithm, which incorporates the addition of a random field C (essentially, an “intermediate transformation” [85]) to allow for inexact point correspondences as given by U. This leads to minimization of the following energy

where the first term measures the quality of the field of point correspondence C between the deformed M and F based on a data dissimilarity measure; γn accounts for the noise in the image, γc accounts for spatial uncertainty on the correspondences, and γ controls the amount of regularization on U. Rather than solving the complex energy minimization in Equation 22.46, Cachier et al. alternatively optimize Equation 22.46 with regard to C given U, and then optimize Equation 22.46 with regard to U given C. The second optimization has a closed-form solution when the regularization is quadratic and uniform, and can be obtained by setting it as the convolution of C by a Gaussian kernel [132].

To solve the first optimization, that is, minimize with regard to C given U, one linearly approximates the data (first) term for every pixel as

where J(x) = − ∇M° U(x). This allows one to rewrite the first optimization as

where γn(x) = |F(x) − M ° C(x)| is a local estimation of noise that is used. Since the approximation for each pixel is independent of each other, one instead solves a simple system for each pixel using the following normal equation:

All of this leads to the following modified demons algorithm:

Start with an initial estimate of Û.

Compute an update for C as given in Equation 22.45.

To enforce fluid-like regularization (Section 22.3.2.2), set C ← K * C, where K is generally a Gaussian kernel.* Otherwise, skip this step.

Compute C ← U + C.

To enforce elastic-like regularization, compute U ← K * C.

Otherwise, compute U ← C.

where the convolution of C with a Gaussian kernel is an efficient approximation to elastic or fluid regularization.

To spatially adapt regularization, Stefanescu et al. [114] smoothed U using a variable Gaussian kernel whose size depended on a scalar field that encoded the expected amount of deformations as estimated from prior segmentation of the anatomy. The authors also filtered C after each iteration according to a measure that is based on local intensity variance and gradient.

Yet another extension is called the Log Domain Diffeomorphic Demons (LDDD), which was proposed by Vercauteren et al. [131,133] to constrain U to reside in the space of diffeomorphisms Diff(Ω). Recall that when performing an n-dimensional optimization search in ℛn, the iterative step used in Newton's optimization method is of the form Pk+1 = Pk + akdk, where dk is the descent direction, which lies in the tangent space of ℛn. n to the space of diffeomorphisms (i.e., smooth manifold), the descent direction that lies in the tangent space at Pk is different from the tangent spaces of any other point. To map a point on the tangent space of Pk to a point on the manifold, one thus employs the exponential map operation, that is, exp: V↦ Diff(Ω). Accordingly, parameter-izing U and C by stationary velocity fields V and VC via exponential map, that is, U = exp(V), C = exp(VC), one now minimizes the log-domain version of Equation 22.46 with regard to VC:

The iterative update in the aforementioned step 4 becomes

where δv is a small update velocity field that was derived in [84] as .

To further enforce inverse consistency (Section 22.3.2.4.1), Vercauteren et al. further proposed a symmetric version of LDDD [132]. Other extensions of the demons algorithm include multichannel diffeomorphic demons [99]; spherical demons, which operates on the sphere for surface registration [153]; as well as iLogDemons [85], which incorporates elasticity and incompress-ibility constraints.

22.4.2 block-Matching and HAMMER

Block-matching (BM) is an efficient scheme for isolating and selecting salient parts of an image to drive the process of registration. Usually, a BM algorithm consists of the following steps that iterate until certain convergence criterion is met. First, blocks of image pixels computed from F and M are matched according to their similarity scores and displacement vectors are computed from the best matches. Second, displacements of all voxels in the entire domain are then computed from the matches via interpolation, for example, using TPS [84] or a Gaussian weighting scheme [111]. Then, regularization on U is subsequently enforced via Gaussian filtering on U.

This matching method was originally proposed for video sequence* matching but has been successfully used for MIR since its first adoption in [32] by Collins et al. Some of its successful applications include elastic registration of neck-and-head, paraspine, and prostate images as performed in [84] (where blocks belonging to detected anatomical landmarks were matched based on local cross-correlation), as well as piecewise affine registration of biological images (e.g., histological sections, autoradiographs, cryosections, etc.) as performed in [100] (where robust least square was used to estimate the local transformations associated to each matching pair).

Both demons framework and BM algorithms are quite similar in that both perform regularization of U via post-filtering (i.e., apply filtering on update of U per iteration). However, rather than computing the data forces for all voxels, BM involves an additional/explicit step of preselecting salient regions only in which data forces are calculated for matching. Unlike the original demons algorithm, BM algorithms are also capable of recovering large deformations thanks to the strategy of matching blocks of different scales hierarchically (e.g., start with large blocks, then reduce their sizes in subsequent stages). For example, it was shown capable of recovering rotations up to 28° in a study involving rat brain sections (see details in [100]).

One frequently cited BM-based registration framework is called HAMMER, which stands for Hierarchical Attribute Matching Mechanism for Elastic Registration. First proposed in [111] by Shen and Daatziko, important voxels or driving voxels are selected based on the attribute vectors (AV) (Section 22.3.3) computed from each voxel. The selection of voxels and their influences on the current estimate of U are hierarchically determined. Specifically, in the early stages of registration, the similarity between two driving voxels is determined based on the weighted sum of the AV similarities in the subvol-umes that encompass each of the two voxels. A match occurs only when the integrated similarity is beyond a user-defined threshold, which is initially set to a high value. The threshold as well as the size of the subvolumes are then decreased at a user-defined rate in each iteration.

Some of the later extensions and applications of HAMMER include the following:

22.5 Evaluating Registrations

A logical start on evaluating registration results is to examine them subjectively. For images of the same modality, one can visualize the results by examining the registered images individually on separate windows with a linked cursor (“split window validation” [86]), examining their checker-board or difference image, or viewing them jointly with alpha-bending.* For images of different modality, the alpha-bending is the most common tool as all image characteristics in both images can be displayed simultaneously [119]. Furthermore, if segmentation of one or both of the two images is available, one can also overlay the segmentation contours of one image on top of the image to be matched to; see [16] for examples.

In general, due to limits of subjective evaluation,† qualitative approaches are also guided with quantitative evaluation.

Quantitative evaluation of a registration technique may be broadly classified in terms of the nature of the experiment: synthetic or real.

Validation based on synthetic data is generally conducted in a controlled setting, usually performed to evaluate particular components of a registration method. For example, when performing registration with gradient-based optimization, one often examines the “smoothness” and the capture range of the metric (by computing the metric between two images with regard to a range of misalignments). One may also examine the robustness of an algorithm, that is, registration results remain reasonable even in extreme scenarios. A formalized protocol involving the aforementioned measures on a given image pair with known “ground truth” registration is given in [112]. One may also examine an algorithms’ performance by studying its inverse consistency and transitivity (i.e., registration results transfer, e.g., propagating registration result from A to B to C to B, and then back to A should yield zero in the composition of all obtained deformations maps [13]).

For mono-modality registration, where one assumes that M is a deformed version of F with additive noise corruption, one common practice to quantify registration quality is to compute the mean square error (MSE) between the deformed moving image and fixed image. MSE may further be used to compute the Peak-Signal-to-Noise Ratio (PSNR), which equals to where Imax is the maximum intensity between two images. The PSNE is a common measure to evaluate image reconstruction quality, and has been used in [134] to measure registration accuracy. However, an implausible warp may always produce zero MSE. Generally, examining intensity values alone does not evaluate the plausibility and quality of U and so both MSE and PSNR should not be the sole quantitative measurements.

A more direct and arguably more complete evaluation approach is to examine an algorithm's ability to recover known misalignments. Starting with a pair of images, subjectively judged as registered, one then applies random misalignments and/or warps to one of the pair [61]. Then, a registration error metric (e.g., angular error or mean Euclidean distance) is computed to measure the residual between the obtained transform and the known transform (that misaligned the original pair) within the region of interest. Countless works, for example [10,46,71,109,117,118,121,140,157–159], have adopted this approach.

Validation based on real data involves performing actual image registrations and employing additional data, ideally provided by clinical experts, to quantify accuracy of the obtained results. For example, if landmark correspondences can be acquired, one may compute the target registration error (TRE) [141]. However, in order for precise assessment, it is important that the correspondences are as spatially distributed as possible, especially for results obtained from deformable registration. If segmentation of the images is available, one can also apply the warp to the segmentation and compute overlap measures* between the target and deformed segmentations. The latter approach is known as morphology-based evaluation [5,66].

Several issues evolve around landmark-based and morphology-based evaluations. First, acquiring additional validation data is difficult and laborious. For instance, landmarking 3D images (usually using 2D displays) is a challenging task due to limited sense of spatial context and depth. And when one or both of the input images are functional data (e.g., PET or SPECT, where anatomical boundaries cannot be reliably segmented automatically or manually), it becomes extremely difficult [136], if not impossible. Second, low interoperator variability† of the segmentation/landmarking results cannot always be guaranteed. To reduce variability that may be introduced by operator's biases and experience, protocols have been established for segmentation, for example, brain images [66], and for identification of anatomical landmark, for example, femur [108]. Third, morphology-based evaluation does not provide a complete insight of registration accuracy. One problem is that quantitation cannot be expressed in a unit that is relevant for task of registration (e.g., distance in mm). Another is that it focuses only on segmented regions and disregards unsegmented structures as well as the regions within or surrounding the segmented regions.

Due to the aforementioned factors, evaluation based on segmentations are usually regarded as “bronze” evaluation, suggesting that one should interpret with caution.

In the MIR literature, numerous researchers have performed comparative studies to evaluate a set of registration algorithms that have been designed for different problems. The earliest work is the Nonrigid Image Registration Evaluation Project (NIREP), which standardized a set of benchmarks and metrics (overlap, variance of image intensities in a population of registered images,‡ transitivity, and inverse consistency). The initial phase of NIREP involved a data set of 16 labeled brain images. Other comparative analyses include study of [128], where Urschler et al. compared six deformable registration algorithms with an experiment involving synthetic deformations applied to two pairs CT lung images.

Recently in 2010, Murphy et al. [94] have set up a web-based public platform called EMPIRE to allow for a “fair and meaningful comparison of registration algorithms applied to thoracic CT data.” The website allows developers to download image pairs and upload the corresponding registrations they obtained for evaluation as performed by the website. Four sets of measures are then later reported on the site, which include those based on alignment of lung boundary and fissures. As of October 2011, over 30 teams have submitted their results to this website.

For brain image, comparative studies in which at least three nonlinear algorithms were compared on whole-brain registrations that have been completed in the last decade include:

Studies by Hellier et al. (see [66]): A series of publications that compared five nonlinear methods that focused on registration accuracy in the cortical areas. Two sets of evaluation measures were used, including segmentation overlap, deformation quality (amount of singularities present), and symmetric hausdorff distance between segmented sulci surfaces. The authors also examined results in terms of shape similarity using PCA.

Study by Yassa and Stark [152]: Six algorithms and two semi-automated methods were compared based on two measures (overlap and “measure of blur” similar to intensity variance) computed in the cortical areas. They remarked that their evaluation involving 18 images could not affirm that the nonrigid methods they examined can perform better in registering major cortical sulci.

Study of Klein et al. [66]: the study compared 14 registration algorithms using four data sets by performing over 2168 image registrations per algorithm and computing various evaluation measures and performing statistical analyses on the obtained evaluation measures. Top-ranking methods were SyN (Symmetric Normalization) [8]), ART*, IRTK [105], and SPM's DARTEL toolbox [4], with the first two giving top scores in all tests and for all label sets. SyN is recommended if high accuracy is desired; ROMEO† and Diffeomorhpic Demons [131] are more efficient alternatives for time-sensitive tasks.

22.6 Conclusions

This chapter reviewed the various techniques proposed for MIR, as well as those relating to registration evaluation. In summary, key tasks of a registration procedure include (1) characterization of the registration solution Section 22.3.2; (2) definition of one or a set of criterion to quantify the optimality of the solution (Section 22.3.3; and (3) design and employment of an optimization strategy for finding the solution (Section 22.3.4.2). As there is currently no generic registration algorithm that works in all applications, choices of data (dis)similarity terms and optimization strategy remain highly problem-specific.

There remain many open questions in MIR. For instance, MIR is challenged by the need for tuning a potentially long list of parameters that relate to optimization and feature extraction settings, as well as the weights between data dissimilarity and regularization terms. Furthermore, when operated on large images (e.g., vector- or tensor-valued images and dynamic sequences), MIR is burdened by heavy computation and memory demands. Future research works that aim at addressing these issues are therefore both interesting and important.

References

1. O. Acosta, J. Fripp, A. Rueda, D. Xiao, E. Bonner, P. Bourgeat, and O. Salvado. 3D shape context surface registration for cortical mapping. In Proceedings of the 2010 IEEE International Conference on Biomedical Imaging: From Nano to Macro, ISBI’10, IEEE Press, Piscataway, NJ, 2010, pp. 1021–1024.

2. A. Pluim, J. Maintz, and M. Viergever. Mutual information based registration of medical images: A survey. IEEE Transactions on Medical Imaging, 21:61–75, 2003.

3. V. Arsigny, X. Pennec, and N. Ayache. Polyrigid and polyaffine transformations: A new class of diffeomorphisms for locally rigid or affine registration. In Proceedings of MICCAI, Springer, Heidelberg, Germany, 2003, pp. 829–837.

4. J. Ashburner. A fast diffeomorphic image registration algorithm. Neuroimage, 38(1):95–113, 2007.

5. B. Avants, C. Epstein, M. Grossman, and J. Gee. Symmetric diffeomorphic image registration with cross-correlation: Evaluating automated labeling of elderly and neurodegenerative brain. Medical Image Analysis, 12(1):26–41, 2008. Special Issue on The Third International Workshop on Biomedical Image Registration—WBIR 2006.

6. B. Avants, N. Tustison, and G. Song. Advanced normalization tools: V1.0. Insight Journal, http://hdl.handle.net/10380/3113, July 2009.

7. B. B. Avants, P. T. Schoenemann, and J. C. Gee. Lagrangian frame diffeomor-phic image registration: Morphometric comparison of human and chimpanzee cortex. Medical Image Analysis, 10(3):397–412, 2006. Special Issue on The Second International Workshop on Biomedical Image Registration (WBIR’03).

8. B. B. Avants, N. J. Tustison, G. Song, P. A. Cook, A. Klein, and J. C. Gee. A reproducible evaluation of ANTS similarity metric performance in brain image registration. Neuroimage, 54(3):2033–2044, 2011.

9. A. Azar, C. Xu, X. Pennec, and N. Ayache. An interactive hybrid non-rigid registration framework for 3D medical images. In Third IEEE International Symposium on Biomedical Imaging: Nano to Macro, 2006, Paris, France, April 2006, pp. 824–827.

10. S. Baker, D. Scharstein, J. P. Lewis, S. Roth, M. J. Black, and R. Szeliski. A database and evaluation methodology for optical flow. International Journal of Computer Vision, 92(1):1–31, 2011.

11. M. Beg and A. Khan. Symmetric data attachment terms for large deformation image registration. IEEE Transactions on Medical Imaging, 26(9):1179–1189, September 2007.

12. M. F. Beg, M. I. Miller, A. Trouvé, and L. Younes. Computing large deformation metric mappings via geodesic flows of diffeomorphisms. International Journal of Computer Vision, 61:139–157, February 2005.

13. E. T. Bender and W. A. Tomé. The utilization of consistency metrics for error analysis in deformable image registration. Physics in Medicine and Biology, 54(18):5561, 2009.

14. Y. Boykov and V. Kolmogorov. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. In Proceedings of the Third International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, EMMCVPR ‘01, Springer-Verlag, London, U.K., 2001, pp. 359–374.

15. M. Bro-Nielsen. Medical image registration and surgery simulation. PhD thesis, IMM-DTU, 1996.

16. M. Bro-Nielsen. Rigid registration of CT, MR and cryosection images using a GLCM framework. In J. Troccaz, E. Grimson, and R. Mösges, eds., CVRMed-MRCAS’97, vol. 1205 of Lecture Notes in Computer Science, Springer, Berlin, Germany, 1997, pp. 171–180. 10.1007/BFb0029236.

17. M. Bro-Nielsen and C. Gramkow. Fast fluid registration of medical images. In Proc. Visualization in Biomedical Computing (VBC’96), September, Springer Lecture Notes in Computer Science, Hamburg, Germany, vol. 1131, 1996, pp. 267–276.

18. L. G. Brown. A survey of image registration techniques. ACM Computing Surveys, 24:325–376, December 1992.

19. P. Cachier, J.-F. Mangin, X. Pennec, D. Rivière, D. Papadopoulos-Orfanos, J. Régis, and N. Ayache. Multisubject non-rigid registration of brain MRI using intensity and geometric features. In Proceedings of MICCAI, Utrecht, the Netherlands, 2001, pp. 734–742.

20. N. D. Cahill, J. A. Noble, and D. J. Hawkes. A demons algorithm for image registration with locally adaptive regularization. In Proceedings of the 12th International Conference on Medical Image Computing and Computer-Assisted Intervention: Part I, MICCAI ‘09, Springer-Verlag, Berlin, Germany, 2009, pp. 574–581.

21. K. Cao, K. Ding, G. E. Christensen, M. L. Raghavan, R. E. Amelon, and J. M. Reinhardt. Unifying vascular information in intensity-based nonrigid lung CT registration. In WBIR, Springer-Verlag, Berlin, Germany, 2010, pp. 1–12.

22. F. Chen and D. Suter. Image coordinate transformation based on div-curl vector splines. In ICPR’98, IEEE Computer Society Press, Brisbane, QLD, Australia, 1998, pp. 518–520.

23. W. Cheung and G. Hamarneh. n-sift: n-dimensional scale invariant feature transform. IEEE Transactions on Image Processing, 18(9):2012–2021, September 2009.

24. D. Chillet, J. Jomier, D. Cool, and S. Aylward. Vascular atlas formation using a vessel-to-image affine registration method. In R. Ellis and T. Peters, eds., Medical Image Computing and Computer-Assisted Intervention—MICCAI 2003, vol. 2878 of Lecture Notes in Computer Science, Springer, Berlin, Germany, 2003, pp. 335–342.

25. G. Christensen, P. Yin, M. W. Vannier, K. Chao, J. F. Dempsey, and J. Williamson. Large-deformation image registration using fluid landmarks. In Fourth IEEE Southwest Symposium on Image Analysis and Interpretation, 2000, pp. 269–273.

26. G. E. Christensen and H. J. Johnson. Consistent image registration. IEEE Transaction on Medical Imaging, 20(7):568–582, 2001.

27. G. E. Christensen and H. J. Johnson. Invertibility and transitivity analysis for nonrigid image registration. Journal of Electronic Imaging, 12(1):106–117, 2003.

28. G. E. Christensen, S. C. Joshi, M. I. Miller, and S. Member. Volumetric transformation of brain anatomy. IEEE Transactions on Medical Imaging, 16:864–877, 1997.

29. G. E. Christensen, R. D. Rabbitt, and M. I. Miller. 3D brain mapping using a deformable neuroanatomy. Physics in Medicine and Biology, 39(3):609–618, 1994.

30. H. Chui, J. Rambo, J. Duncan, R. Schultz, and A. Rangarajan. Registration of cortical anatomical structures via robust 3D point matching. In A. Kuba, M. áamal, and A. Todd-Pokropek, eds., Information Processing in Medical Imaging, vol. 1613 of Lecture Notes in Computer Science, 1999, pp. 168–181.

31. C. A. C. Coello. A comprehensive survey of evolutionary-based multiobjective optimization techniques. Knowledge and Information Systems, 1:269–308, 1998.

32. D. L. Collins and A. C. Evans. ANIMAL: Validation and applications of nonlinear registration-based segmentation. IJPRAI, 11(8):1271–1294, 1997.

33. K. K. Delibasis, P. A. Asvestas, and G. K. Matsopoulos. Automatic point correspondence using an artificial immune system optimization technique for medical image registration. Computerized Medical Imaging and Graphics (in press).

34. M. Droske and M. Rumpf. Multiscale joint segmentation and registration of image morphology. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(12):2181–2194, December 2007.

35. S. Du, N. Zheng, S. Ying, and J. Liu. Affine iterative closest point algorithm for point set registration. Pattern Recognition Letters, 31(9):791–799, 2010.

36. D. Eggert, A. Lorusso, and R. Fisher. Estimating 3-d rigid body transformations: a comparison of four major algorithms. Machine Vision and Applications, 9:272–290, 1997. 10.1007/s00138005 0048.

37. A. El-Baz, A. Farag, G. Gimel'farb, and A. Abdel-Hakim. Image alignment using learning prior appearance model. In 2006 IEEE International Conference on Image Processing, Atlanta, Georgia, October 2006, pp. 341–344.

38. J. M. Fitzpatrick, D. L. G. Hill, and C. R. Maurer. Image registration. In J. M. Fitzpatrick and M. Sonka, eds., Handbook of Medical Imaging, Vol. 2. Medical Image Processing and Analysis (SPIE Press Book). Academic Press, Inc., San Diego, CA, 2000, pp. 449–506.

39. J. Flusser. Moment invariants in image analysis. In Proceedings of the International Conference on Computer Science. ICCS’06, Reading, U.K., 2006, pp. 196–201.

40. F. Maes, A. Collignon, D. Vandermeulen, G. Marchal, and P. Suetens. Multi-modality image registration by maximization of mutual information. IEEE Transaction on Medical Imaging, 16:187–189, 1997.

41. C. Frohn-Schauf, S. Henn, and K. Witsch. Multigrid based total variation image registration. Computing and Visualization in Science, 11:101–113, 2008. 10.1007/s00791-007-0060-2.