17

Reliability Management

17.1 Corporate Policy for Reliability

A really effective reliability function can exist only in an organization where the achievement of high reliability is recognized as part of the corporate strategy and is given top management attention. If these conditions are not fulfilled, and reliability receives only lip service, the reliability effort will be cut back whenever cost or time pressures arise. Reliability staff will suffer low morale and will not be accepted as part of project teams. Therefore, quality and reliability awareness and direction must start at the top and must permeate all functions and levels where reliability can be affected.

Several factors of modern industrial business make such high level awareness essential. The high costs of repairs under warranty, and of those borne by the user, even for relatively simple items such as domestic electronic and electrical equipment, make reliability a high value property. Other less easily quantifiable effects of reliability, such as customer goodwill and product reputation, and the relative reliability of competing products, are also important in determining market penetration and retention.

17.2 Integrated Reliability Programmes

The reliability effort should always be treated as an integral part of the product development and not as a parallel activity unresponsive to the rest of the development programme. This is the major justification for placing responsibility for reliability with the project manager. Whilst specialist reliability services and support can be provided from a central department in a matrix management structure, the responsibility for reliability achievement must not be taken away from the project manager, who is the only person who can ensure that the right balance is struck in allocating resources and time between the various competing aspects of product development.

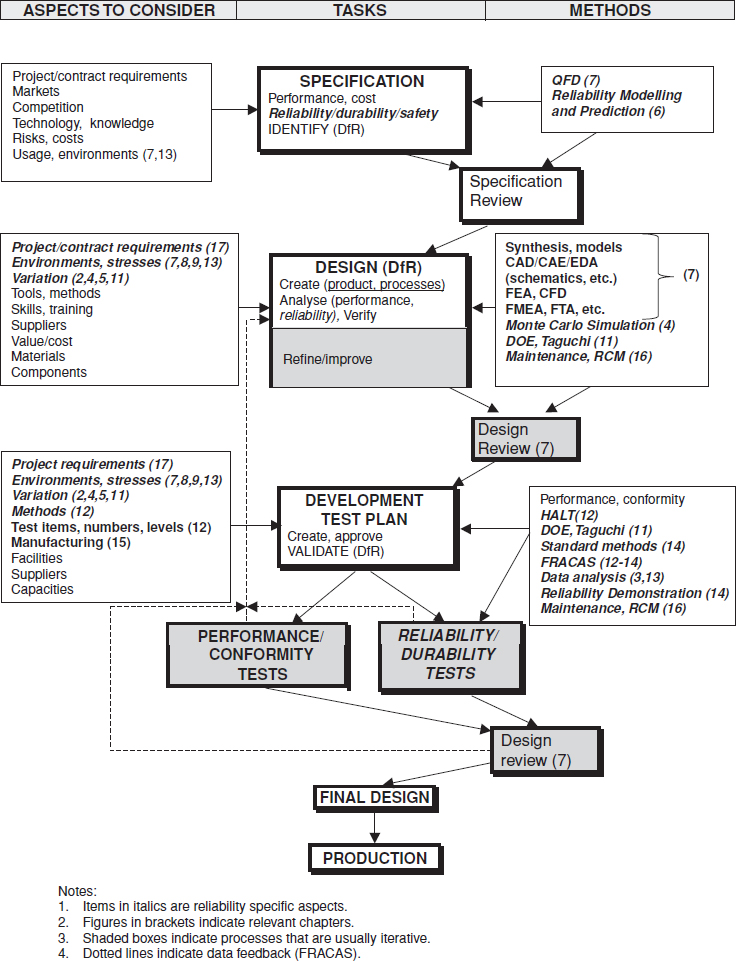

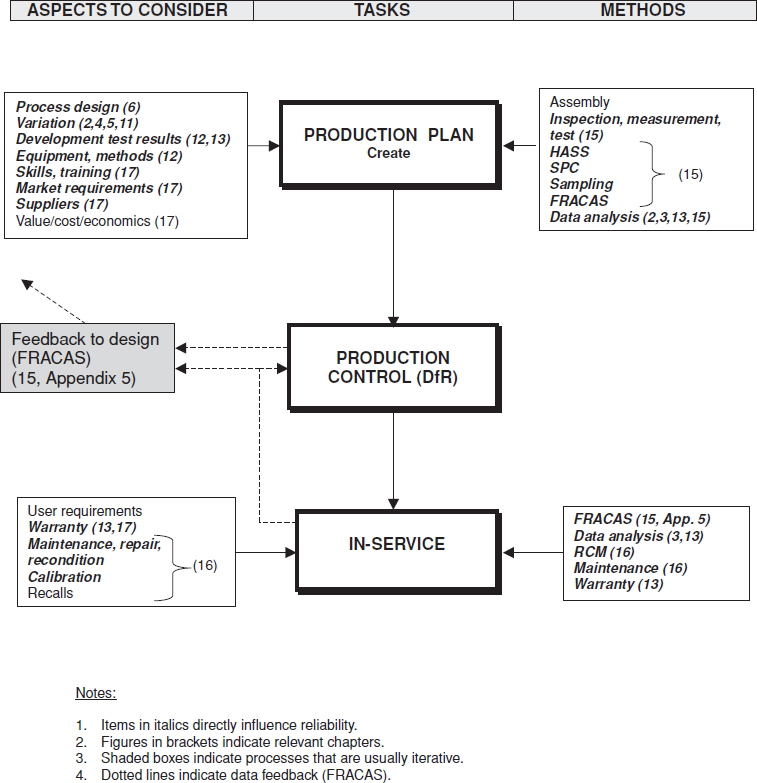

The elements of a comprehensive and integrated reliability programme are shown, related to the overall development, production and in-service programme, in Figure 17.1 and Figure 17.2. These show the continuous feedback of information, so that design iteration can be most effective. Most of the design for reliability (DfR) tools and activities (Chapter 7) feature in the Figure 17.1 flow.

Since production quality will affect reliability, quality control is an integral part of the reliability programme. Quality control cannot make up for design shortfalls, but poor quality can negate much of the reliability effort. The quality control effort must be responsive to the reliability requirement and must not be directed only at reducing production costs and the passing of a final test or inspection. Quality control can be made to contribute most effectively to the reliability effort if:

Figure 17.1 Reliability Programme Flow (design/development).

Figure 17.2 Reliability Programme Flow (production, in-service).

- Quality procedures, such as test and inspection criteria, are related to factors which can affect reliability, and not only to form and function. Examples are tolerances, inspection for flaws which can cause weakening, and the need for adequate screening when appropriate.

- Quality control test and inspection data are integrated with the other reliability data.

- Quality control personnel are trained to recognize the relevance of their work to reliability, and trained and motivated to contribute.

An integrated reliability programme must be disciplined. Whilst creative work such as design is usually most effective when not constrained by too many rules and guidelines, the reliability (and quality) effort must be tightly controlled and supported by mandatory procedures. The disciplines of design analysis, test, reporting, failure analysis and corrective action must be strictly imposed, since any relaxation can result in a reduction of reliability, without any reduction in the cost of the programme. There will always be pressure to relax the severity of design analyses or to classify a failure as non-relevant if doubt exists, but this must be resisted. The most effective way to ensure this is to have the agreed reliability programme activities written down as mandatory procedures, with defined responsibilities for completing and reporting all tasks, and to check by audit and during programme reviews that they have been carried out. More material on integrating reliability programmes can be found in Silverman (2010).

17.3 Reliability and Costs

Achieving high reliability is expensive, particularly when the product is complex or involves relatively untried technology. The techniques described in earlier chapters require the resources of trained engineers, management time, test equipment and products for testing, and it often appears difficult to justify the necessary expenditure in the quest for an inexact quantity such as reliability. It can be tempting to trust to good basic design and production and to dispense with specific reliability effort, or to provide just sufficient effort to placate a customer who insists upon a visible programme without interfering with the ‘real’ development activity. However, experience points to the fact that all well-managed reliability efforts pay off.

17.3.1 Costs of Reliability

There are usually practical limits to how much can be spent on reliability during a development programme. However, the authors are unaware of any programme in which experience indicated that too much effort was devoted to reliability or that the law of diminishing returns was observed to be operating to a degree which indicated that the programme was saturated. This is mainly due to the fact that nearly every failure mode experienced in service is worth discovering and correcting during development, owing to the very large cost disparity between corrective action taken during development and similar action (or the cost of living with the failure mode) once the equipment is in service. The earlier in a development programme that the failure mode is identified and corrected the cheaper it will be, and so the reliability effort must be instituted at the outset and as many failure modes as possible eliminated during the early analysis, review and test phases. Likewise, it is nearly always less costly to correct causes of production defects than to live with the consequences in terms of production costs and unreliability.

It is dangerous to generalize about the cost of achieving a given reliability value, or of the effect on reliability of stated levels of expenditure on reliability programme activities. Some texts show a relationship as in Figure 1.7 (Chapter 1) with the lowest total cost (life cycle cost – LCC) indicating the ‘optimum reliability’ (or quality) point. However, this can be a misleading picture unless all cost factors contributing to the reliability (and unreliability) are accounted for. The direct failure costs can usually be estimated fairly accurately, related to assumed reliability levels and yields of production processes, but the cost of achieving these levels is much more difficult to forecast. There are different models accounting for the cost of reliability. For example Kleyner and Sandborn (2008) propose a comprehensive life cycle cost model accounting for the various aspects of achieving and demonstrating reliability as well as the consequent warranty costs. As described above, the relationship is more likely to be a decreasing one, so that the optimum quality and reliability is in fact closer to 100%, as shown in Figure 1.9 (Chapter 1).

Several standard references on quality management suggest that quality costs, meaning the costs of all activities specifically directed at reliability and quality control together with the costs of failure, should be identified, measured and controlled under three headings:

- Prevention costs.

- Appraisal costs.

- Failure costs.

Prevention costs are those related to activities which prevent failures occurring. These include reliability efforts, quality control of bought-in components and materials, training and management.

Appraisal costs are those related to test and measurement, process control and quality audit.

Failure costs are the actual costs of failure. Internal failure costs are those incurred during manufacture. These cover scrap and rework costs (including costs of related work in progress, space requirements for scrap and rework, associated documentation, and related overheads). Failure costs also include external or post-delivery failure costs, such as warranty costs; these are the costs of unreliability.

Obviously it is necessary to minimize the sum of quality and reliability costs over a suitably long period. Therefore the immediate costs of prevention and appraisal must be related to the anticipated effects on failure costs, which might be affected over several years. Investment analysis related to quality and reliability is an uncertain business, because of the impossibility of accurately predicting and quantifying the results. Therefore the analysis should be performed using a range of assumptions to determine the sensitivity of the results to assumed effects, such as the yield at test stages and reliability in service.
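The kind of sensitivity analysis described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the cost model, the function names `total_quality_cost` and `sensitivity`, and every figure used are hypothetical and are not taken from any real programme.

```python
from itertools import product


def total_quality_cost(prevention, appraisal, units, test_yield,
                       rework_cost, field_failure_fraction, warranty_cost):
    """Prevention + appraisal + internal and external failure costs.

    Hypothetical model: units failing test are reworked at rework_cost each,
    and a fraction of delivered units fails in service at warranty_cost each.
    """
    internal_failure = units * (1.0 - test_yield) * rework_cost
    external_failure = units * field_failure_fraction * warranty_cost
    return prevention + appraisal + internal_failure + external_failure


def sensitivity(prevention, appraisal, units, rework_cost, warranty_cost,
                yields, field_fractions):
    """Evaluate the total cost for every combination of the assumed values."""
    return {
        (y, f): total_quality_cost(prevention, appraisal, units, y,
                                   rework_cost, f, warranty_cost)
        for y, f in product(yields, field_fractions)
    }


if __name__ == "__main__":
    # All figures are invented for illustration only.
    table = sensitivity(prevention=50_000, appraisal=20_000, units=1_000,
                        rework_cost=100, warranty_cost=500,
                        yields=(0.90, 0.95, 0.99),
                        field_fractions=(0.01, 0.02, 0.05))
    for (y, f), cost in sorted(table.items()):
        print(f"yield={y:.2f} field failures={f:.2f} total cost={cost:,.0f}")
```

Tabulating the result over a grid of assumptions shows which input the total is most sensitive to, which is usually more informative than a single point estimate.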

For example, two similar products, developed with similar budgets, may have markedly different reliabilities, due to differences in quality control in production, differences in the quality of the initial design or differences in the way the reliability aspects of the development programme were managed. It is even harder to say by how much a particular reliability activity will affect reliability. $20 000 spent on FMECA might make a large or a negligible difference to achieved reliability, depending upon whether the failure modes uncovered would have manifested themselves and been corrected during the development phase, or the extent to which the initial design was free of shortcomings.

The value gained from a reliability programme must, to a large extent, be a subjective judgement based upon experience and related to the way the programme is managed. The reliability programme will usually be constrained by the resources which can be usefully applied within the development time-scale. Allocation of resources to reliability programme activities should be based upon an assessment of the risks. For a complex new design, design analysis must be thorough and searching, and performed early in the programme. For a relatively simple adaptation of an existing product, less emphasis may be placed on analysis. In both cases the test programme should be related to the reliability requirement, the risks assessed in achieving it and the costs of non-achievement. The two most important features of the programme are:

- The statement of the reliability aim in such a way that it is understood, feasible, mandatory and demonstrable.

- Dedicated, integrated management of the programme.

Provided these two features are present, the exact balance of resources between activities will not be critical, and will also depend upon the type of product. A strong test-analyse-fix programme can make up for deficiencies in design analysis, albeit at higher cost; an excellent design team, well controlled and supported by good design rules, can reduce the need for testing. The reliability programme for an item of electronic equipment will not be the same as for a power station. As a general rule, all the reliability programme activities described in this book are worth applying insofar as they are appropriate to the product, and the earlier in the programme they can be applied the more cost-effective they are likely to prove.

In a well-integrated design, development and production effort, with all contributing to the achievement of high quality and reliability, and supported by effective management and training, it is not possible to isolate the costs of reliability and quality effort. The most realistic and effective approach is to consider all such effort as investments to enhance product performance and excellence, and not to try to classify or analyse them as though they were burdens.

17.3.2 Costs of Unreliability

The costs of unreliability in service should be evaluated early in the development phase, so that the effort on reliability can be justified and requirements can be set, related to expected costs. The analysis of unreliability costs takes different forms, depending on the type of development programme and how the product is maintained. The example below illustrates a typical situation.

Example 17.1

A commercial electronic communication system is to be developed as a risk venture. The product will be sold outright, with a two year parts and labour warranty. Outline the LCC analysis approach and comment on the support policy options.

The analysis must take account of direct and indirect costs. The direct costs can be related directly to failure rate (or removal rate, which is likely to be higher).

The direct costs are:

- Warranty repair costs.

The annual warranty repair cost will be:

(Number of warranted units in use) × (annual call rate per unit) × (cost per call).

The number of warranted units will be obtained from market projections. The call rate will be related to MTBF and expected utilization.

- Spares production and inventory costs for warranty support.

Spares costs: to be determined by analysis (e.g. Poisson model, simulation) using inputs of call rate, proportion of calls requiring spares, spares costs, probability levels of having spares in stock, repair time to have spares back in stock, repair and stockholding costs.

- Net of profits on post-warranty repairs and spares.

Annual profit on post-warranty spares and repairs: analysis to be similar to warranty costs analysis, but related to post-warranty equipment utilization.
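The direct-cost calculations above can be sketched as follows. This is a minimal illustration, assuming that demands for spares follow a Poisson distribution; the function names and the example figures are hypothetical, and a real analysis would also include the stockholding and repair costs listed in the text.

```python
import math


def annual_warranty_cost(units_under_warranty, annual_call_rate, cost_per_call):
    """(Number of warranted units) x (annual call rate per unit) x (cost per call)."""
    return units_under_warranty * annual_call_rate * cost_per_call


def poisson_cdf(k, mean):
    """Probability of k or fewer events when the expected number is `mean`."""
    return sum(math.exp(-mean) * mean ** i / math.factorial(i)
               for i in range(k + 1))


def spares_needed(expected_demand, stock_probability):
    """Smallest stock level s with P(demand <= s) >= stock_probability.

    expected_demand is the mean number of spares demanded during the time
    taken to get a repaired spare back into stock.
    """
    s = 0
    while poisson_cdf(s, expected_demand) < stock_probability:
        s += 1
    return s


if __name__ == "__main__":
    # Invented figures: 1000 units, 0.5 calls per unit per year, 200 per call.
    print(annual_warranty_cost(1000, 0.5, 200))
    # Mean demand of 2 spares per repair turnaround, 95% chance of having stock.
    print(spares_needed(2.0, 0.95))
```

The expected demand would itself be derived from the call rate, the proportion of calls requiring spares and the repair turnaround time, as described above.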

Indirect costs (not directly related to failure or removal rate):

- Service organization (training manuals, overheads): warranty period contribution.

- Product reputation.

These costs cannot be derived directly. A service organization will be required in any case and its performance will affect the product's reputation. However, a part of its costs will be related to servicing the warranty. A parametric estimate should be made under these headings, for example:

Service organization: 50% of annual warranty cost in first two years, 25% thereafter.

Product reputation: agreed function of call rate.

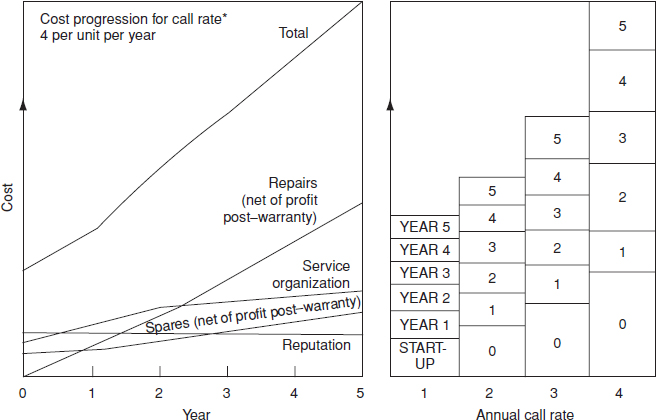

Since these costs will accrue at different rates during the years following launch, they must all be evaluated for, say, the first five years. The unreliability costs progression should then be plotted (Figure 17.3) to show the relationship between cost and reliability.

The net present values of unreliability cost should then be used as the basis for planning the expenditure on the reliability programme.
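A sketch of the year-by-year evaluation and discounting is given below. It uses the parametric service-organization estimate from the example (50% of annual warranty cost in the first two years, 25% thereafter). The function names, the discount rate and the cost figures are assumptions made for illustration, and the product reputation term is omitted because its form is left as an 'agreed function' in the example.

```python
def unreliability_costs(warranty_costs, post_warranty_profits):
    """Annual unreliability cost for each year after launch.

    Cost = warranty cost + service organization share - post-warranty profit.
    The service share is 50% of warranty cost in years 1-2 and 25% thereafter.
    """
    costs = []
    for year, (warranty, profit) in enumerate(
            zip(warranty_costs, post_warranty_profits), start=1):
        service_share = 0.5 if year <= 2 else 0.25
        costs.append(warranty + service_share * warranty - profit)
    return costs


def net_present_value(annual_costs, discount_rate):
    """Discount end-of-year costs (year 1, 2, ...) back to the launch date."""
    return sum(cost / (1.0 + discount_rate) ** year
               for year, cost in enumerate(annual_costs, start=1))


if __name__ == "__main__":
    # Invented five-year projections, in arbitrary currency units.
    warranty = [100_000, 150_000, 120_000, 80_000, 60_000]
    profit = [0, 0, 20_000, 40_000, 50_000]
    yearly = unreliability_costs(warranty, profit)
    print(yearly)
    print(round(net_present_value(yearly, discount_rate=0.08)))
```

Repeating the calculation for several assumed call rates gives the points needed to plot cost against reliability.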

This situation would be worth analysing from the point of view of what support policy might show the lowest cost for varying call rates. For example, a very low call rate might make a ‘direct exchange, no repair’ policy cost-effective, or might make a longer warranty period worth considering, to enhance the product's reputation. Direct exchange would result in service department savings, but a higher spares cost.

The example given above involves very simple analysis and simplifying assumptions. A Monte Carlo simulation (Chapter 4) would be a more suitable approach if we needed to consider more complex dynamic effects, such as distributed repair times and costs, multi-echelon repair and progressive increase in units at risk. However, simple analysis is often sufficient to indicate the magnitude of costs, and in many cases this is all that is needed, as the input variables in logistics analysis are usually somewhat imprecise, particularly failure (removal) rate values. Simple analysis is adequate if relatively gross decisions are required. However, if it is necessary to attempt to make more precise judgments or to perform sensitivity analyses, then more powerful methods such as simulation should be considered.
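As an indication of what the simulation approach involves, the following is a minimal Monte Carlo sketch of annual warranty cost. It assumes that calls arrive as a Poisson process for the whole fleet and that the cost of each call follows a triangular distribution; both are modelling assumptions chosen for illustration, as are the function name and all figures. It does not attempt the multi-echelon repair or growing fleet effects mentioned above.

```python
import random


def simulate_annual_warranty_cost(units, annual_call_rate,
                                  cost_low, cost_mode, cost_high,
                                  runs=2000, seed=1):
    """Return (mean, approximate 90th percentile) of simulated annual cost."""
    rng = random.Random(seed)
    fleet_rate = units * annual_call_rate  # expected calls per year, whole fleet
    totals = []
    for _ in range(runs):
        total = 0.0
        t = rng.expovariate(fleet_rate)  # time of the first call, in years
        while t < 1.0:
            # Draw the cost of this call from the assumed triangular distribution.
            total += rng.triangular(cost_low, cost_high, cost_mode)
            t += rng.expovariate(fleet_rate)
        totals.append(total)
    totals.sort()
    mean = sum(totals) / runs
    p90 = totals[int(0.9 * runs) - 1]
    return mean, p90


if __name__ == "__main__":
    # Invented figures: 50 units, 0.5 calls per unit per year.
    mean, p90 = simulate_annual_warranty_cost(50, 0.5, 100.0, 200.0, 300.0)
    print(f"mean annual cost {mean:,.0f}, 90th percentile {p90:,.0f}")
```

The spread between the mean and the upper percentile is the information that the simple product of averages cannot provide.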

There are of course other costs which can be incurred as a result of a product's unreliability. Some of these are hard to quantify, such as goodwill and market share, though these can be very large in a competitive situation and where consumer organizations are quick to publicize failure. In extreme cases unreliability can lead to litigation, especially if damage or injury results. An unreliability cost often overlooked is that of failures in production caused by unreliable features of the design. A reliable product will usually be cheaper to manufacture, and the production quality cost monitoring system should be able to highlight those costs which are due to design shortfalls.

Figure 17.3 Reliability cost progression (Example 17.1).

17.4 Safety and Product Liability

Product liability legislation in the United States, Europe and in other countries adds a new dimension to the importance of eliminating safety-related failure modes, as well as to the total quality assurance approach in product development and manufacture. Before product liability (PL), the law relating to risks in using a product was based upon the principle of caveat emptor (‘let the buyer beware’). PL introduced caveat venditor (‘let the supplier beware’). PL makes the manufacturer of a product liable for injury or death sustained as a result of failure of his product. A designer can now be held liable for a failure of his design, even if the product is old and the user did not operate or maintain it correctly. Claims for death or injury in many product liability cases can only be defended successfully if the producer can demonstrate that he has taken all practical steps towards identifying and eliminating the risk, and that the injury was entirely unrelated to failure or to inadequate design or manufacture. Since these risks may extend over ten years or even indefinitely, depending upon the law in the country concerned, long-term reliability of safety-related features becomes a critical requirement. The size of the claims, liability being unlimited in the United States, necessitates top management involvement in minimizing these risks, by ensuring that the organization and resources are provided to manage and execute the quality and reliability tasks which will ensure reasonable protection. PL insurance is a business area for the insurance companies, who naturally expect to see a suitable reliability and safety programme being operated by the manufacturers they insure.

Abbot and Tyler (1997) provide an overview of this topic.

17.5 Standards for Reliability, Quality and Safety

Reliability programme standard requirements have been issued by several large agencies which place development contracts on industry. The best known of these was US MIL-STD-785 – Reliability Programs for Systems and Equipments, Development and Production which covers all development programmes for the US Department of Defense. MIL-STD-785 was supported by other military standards, handbooks and specifications, and these have been referenced in earlier chapters. This book has referred mainly to US military documents, since in many cases they are the most highly developed and best known. However, in 1995 the US Department of Defense cancelled most military standards and specifications, including MIL-STD-785, and downgraded others to handbooks (HDBK) for guidance only. Military suppliers are now required to apply ‘best industry practices’, rather than comply with mandatory standards.

In the United Kingdom, Defence Standards 00–40 and 00–41 cover reliability programme management and methods for defence equipment, and BS 5760 has been published for commercial use, and can be referenced by any organization in developing contracts. ARMP-1 is the NATO standard on reliability and maintainability. Some large agencies such as NASA, major utilities and corporations issue their own reliability standards. International standards have also been issued, and some of these are described below.

Most (but not all) of these official standards tend to over-emphasize documentation, quantitative analysis, and formal test. They do not reflect the integrated approach described in this book and used by many modern engineering companies. They suffer from slow response to new ideas. Therefore they are not much used outside the defence and related industries. However, it is necessary for people involved in a reliability programme, whether from the customer or supplier side, to be familiar with the appropriate standards.

17.5.1 ISO/IEC60300 (Dependability)

ISO/IEC60300 is the international standard for ‘dependability’, which is defined as covering reliability, maintainability and safety. It describes management and methods related to these aspects of product design and development. The methods covered include reliability prediction, design analysis, maintenance and support, life cycle costing, data collection, reliability demonstration tests, and mathematical/statistical techniques; most of these are described in separate standards within the ISO/IEC60000 series. Manufacturing quality aspects are not included in this standard. For more on IEC standards see Barringer (2011).

ISO/IEC60300 has not, so far, been made the subject of audits and registration in the way that ISO9000 has (see next section).

17.5.2 ISO9000 (Quality Systems)

The international standard for quality systems, ISO9000, has been developed to provide a framework for assessing the quality management system which an organization operates in relation to the goods or services provided. The concept was developed from the US Military Standard for quality management, MIL-Q-9858, which was introduced in the 1950s as a means of assuring the quality of products built for the US military. Today, many organizations and companies rely on ISO9000 registration to provide assurance of the quality of products and services they buy and to indicate the quality of their own products and services.

Registration is to the relevant standard within the ISO9000 ‘family’. ISO9001 is the standard applicable to organizations that design, develop and manufacture products. We will refer to ‘ISO9000 registration’ as a general indication.

ISO9000 does not specifically address the quality of products and services, nor does it prescribe methods for achieving quality, such as design analysis, test and quality control. It describes, in very general terms, the system that should be in place to assure quality. In principle, there is nothing in the standard to prevent an organization from producing poor quality goods or services, so long as written procedures exist and are followed. An organization with an effective quality system would normally be more likely to take corrective action and to improve processes and service, than would one which is disorganized. However, the fact of registration should not be considered as a guarantee of quality.

In the ISO9000 approach, suppliers’ quality management systems (organization, procedures, etc.) are audited by independent ‘third party’ assessors, who assess compliance with the standard and issue certificates of registration. Certain organizations are ‘accredited’ as ‘certification bodies’ by the appropriate national accreditation services. The justification given for third party assessment is that it removes the need for every customer to perform his own assessment of all of his suppliers. However, a matter as important as quality cannot safely be left to be assessed infrequently by third parties, who are unlikely to have the appropriate specialist knowledge, and who cannot be members of the joint supplier–purchaser team. The total quality management (TQM) philosophy (see Section 17.17.5) demands close, ongoing partnership between suppliers and purchasers.

Since its inception, ISO9000 has generated considerable controversy. The effort and expense that must be expended to obtain and maintain registration tend to engender the attitude that optimal standards of quality have been achieved. The publicity that typically goes with initial certification of a business supports this belief. The objectives of the organization, and particularly of the staff directly involved in obtaining and maintaining registration, are directed at the maintenance of procedures and at audits to ensure that staff work to them. It can become more important to work to procedures than to develop better ways of working. However, some organizations have generated real improvements as a result of certification. So why is the approach so widely used? The answer is partly cultural and partly coercive.

The cultural pressure derives from the tendency to believe that people perform better when told what to do, rather than when they are given freedom and the necessary skills and motivation to determine the best ways to perform their work. This belief stems from the concept of scientific management, as described in Drucker (1955) and O'Connor (2004).

The coercion to apply the standard comes from several directions. In practice, many agencies demand that bidders for contracts must be registered. All contractors and their subcontractors supplying the UK Ministry of Defence must be registered, since the MoD decided to drop its own assessments in favour of the third party approach, and the US Department of Defense decided to apply ISO9000 in place of MIL-Q-9858. Several large companies, as well as public utilities, demand that their suppliers are registered to ISO9000 or to industry versions, such as QS9000 for automotive and TL9000 for telecommunications applications. Defenders of ISO9000 say that the total quality management (TQM) approach is too severe for most organizations, and that ISO9000 can provide a ‘foundation’ for a subsequent total quality effort. However, the foremost teachers of modern quality management have all argued against this view. They point out that any organization can adopt the TQM philosophy, and that it will lead to greater benefits than will registration to the standard, and at lower costs.

There are many books in publication that describe ISO9000 and its application: Hoyle (2009) is an example.

17.5.3 IEC61508 (Functional Safety of Electrical/Electronic/Programmable Electronic Safety-Related Systems)

IEC61508 is the international standard to set requirements for design, development, operation and maintenance of ‘safety-related’ control and protection systems which are based on electrical, electronics and software technologies. A system is ‘safety-related’ if any failure to function correctly can present a hazard to people. Thus, systems such as railway signalling, vehicle braking, aircraft controls, fire detection, machine safety interlocks, process plant emergency controls and car airbag initiation systems would be included. The standard lays down criteria for the extent to which such systems must be analysed and tested, including the use of independent assessors, depending on the criticality of the system. It also describes a number of methods for analysing hardware and software designs.

The extent to which the methods are to be applied is determined by the required or desired safety integrity level (SIL) of the safety function, which is stated on a range from 1 to 4. SIL 4 is the highest, relating to a ‘target failure measure’ between $10^{-5}$ and $10^{-4}$ per demand, or $10^{-9}$ and $10^{-8}$ per hour. For SIL 1 the figures are $10^{-2}$ to $10^{-1}$ and $10^{-6}$ to $10^{-5}$. For the quantification of failure probabilities, reliability prediction methods such as those covered in Chapter 6 are recommended. The methods listed include ‘use of well-tried components’ (recommended for all SILs), ‘simulation’ (recommended for SIL 2, 3 and 4), and ‘modularization’ (‘highly recommended’ for all SILs).
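The relationship between a target failure measure and its SIL can be expressed as a simple lookup, sketched below. The SIL 4 and SIL 1 bands are those quoted in the text; the SIL 2 and SIL 3 bands are filled in on the assumption that each level spans one decade between them, and should be checked against the standard before use. The names `SIL_BANDS` and `sil_for_target` are hypothetical.

```python
# Target failure measure bands as (lower bound inclusive, upper bound exclusive).
# SIL 4 and SIL 1 are quoted in the text; SIL 2 and SIL 3 are assumed to be
# the intermediate decades and should be verified against IEC61508.
SIL_BANDS = {
    4: {"per_demand": (1e-5, 1e-4), "per_hour": (1e-9, 1e-8)},
    3: {"per_demand": (1e-4, 1e-3), "per_hour": (1e-8, 1e-7)},
    2: {"per_demand": (1e-3, 1e-2), "per_hour": (1e-7, 1e-6)},
    1: {"per_demand": (1e-2, 1e-1), "per_hour": (1e-6, 1e-5)},
}


def sil_for_target(value, mode="per_demand"):
    """Return the SIL whose band contains `value`, or None if outside all bands.

    mode is "per_demand" (probability of failure on demand) or
    "per_hour" (dangerous failure rate per hour).
    """
    for sil, bands in SIL_BANDS.items():
        low, high = bands[mode]
        if low <= value < high:
            return sil
    return None


if __name__ == "__main__":
    print(sil_for_target(5e-5))              # falls in the SIL 4 per-demand band
    print(sil_for_target(5e-6, "per_hour"))  # falls in the SIL 1 per-hour band
```

A value better than the SIL 4 band returns `None` here rather than 4, so that the caller decides how to treat targets outside the tabulated range.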

In the early 2010s ISO produced an automotive version of this standard, ISO 26262. It addresses functional safety in a way similar to IEC61508, with appropriate adaptations for road vehicles.

The value and merits of both standards are somewhat questionable. The methods described are often inconsistent with accepted best industry practices. The issuing of these standards has led to a growth of bureaucracy, auditors and consultants, and increased costs. They are unlikely to generate any improvements in safety, for the same reasons that ISO9000 does not necessarily improve quality. However, they exist and compliance is often mandatory, so system designers must be aware of them and ensure that the requirements are met.

17.6 Specifying Reliability

In order to ensure that reliability is given appropriate attention and resources during design, development and manufacture, the requirement must be specified. Before describing how to specify reliability adequately, we will cover some of the ways of how not to do it:

- Do not write vague requirements, such as ‘as reliable as possible’, ‘high reliability is to be a feature of the design’, or ‘the target reliability is to be 99%’. Such statements do not provide assurance against reliability being compromised.

- Do not write unrealistic requirements. ‘Will not fail under the specified operating conditions’ is a realistic requirement in many cases. However, an unrealistically high reliability requirement for, say, a complex item of electronic equipment will not be accepted as a credible design parameter, and could therefore be ignored.

The reliability specification must contain:

- A definition of failure related to the product's function. The definition should cover all failure modes relevant to the function.

- A full description of the environments in which the product will be stored, transported, operated and maintained.

- A statement of the reliability requirement, and/or a statement of failure modes and effects which are particularly critical and which must therefore have a very low probability of occurrence. Examples of reliability metrics to be used are discussed in Section 14.2. GMW3172 (2004) can be used as an example of a detailed reliability specification. UK Defence Standard 00-40 also covers the preparation of reliability specifications in detail.

17.6.1 Definition of Failure

Care must be taken in defining failure to ensure that the failure criteria are unambiguous. Failure should always be related to a measurable parameter or to a clear indication. A seized bearing indicates itself clearly, but a leaking seal might or might not constitute a failure, depending upon the leak rate, or whether or not the leak can be rectified by a simple adjustment. An electronic equipment may have modes of failure which do not affect function in normal operation, but which may do so under other conditions. For example, the failure of a diode used to block transient voltage spikes may not be apparent during functional test, and will probably not affect normal function. Defects such as changes in appearance or minor degradation that do not affect function are not usually relevant to reliability. However, sometimes a perceived degradation is an indication that failure will occur and therefore such incidents can be classified as failures.

Inevitably there will be subjective variations in assessing failure, particularly when data are not obtained from controlled tests. For example, failure data from repairs carried out under warranty might differ from data on the same equipment after the end of the warranty period, and both will differ from data derived from a controlled reliability demonstration. The failure criteria in reliability specifications can go a long way to reducing the uncertainty of relating failure data to the specification and in helping the designer to understand the reliability requirement.

17.6.2 Environmental Specifications

The environmental specification must cover all aspects of the many loads and other effects that can influence the product's strength or probability of failure. Without a clear definition of the conditions which the product will face, the designer will not be briefed on what he is designing against. Of course, aspects of the environmental specification might sometimes be taken for granted and the designer might be expected to cater for these conditions without an explicit instruction. It is generally preferable, though, to prepare a complete environmental specification for a new product, since the discipline of considering and analysing the likely usage conditions is a worthwhile exercise if it focuses attention on any aspect which might otherwise be overlooked in the design. Environments are covered in Section 7.3.2. For most design groups only a limited number of standard environmental specifications is necessary. For example, the environmental requirements and methods of test for military equipment are covered in specifications such as US MIL-STD-810 and UK Defence Standard 07–55. Another good example is the automotive validation standard GMW3172 (2004) mentioned earlier.

The environments to be covered must include handling, transport, storage, normal use, foreseeable misuse, maintenance and any special conditions. For example, the type of test equipment likely to be used, the skill level of users and test technicians, and the conditions under which testing might be performed should be stated if these factors might affect the observed reliability.

17.6.3 Stating the Reliability Requirement

The reliability requirement should be stated in a way which can be verified, and which makes sense relative to the use of the product. For example, there is little point in specifying a time between failures if the product's operation will be measured only in distance travelled, or if it will not be measured at all (either in a reliability demonstration or during service).

Levels of reliability can be stated as a success ratio, or as a life. For ‘one-shot’ items the success ratio is the only relevant criterion.

Reliability specifications based on life parameters must be framed in relation to the appropriate life distributions. Examples of reliability metrics appropriate for reliability specifications are covered in Section 14.2.

Specified life parameters must clearly state the life characteristic. For example, the life of a switch, a sequence valve or a data recorder cannot be usefully stated merely as a number of hours. The life must be related to the duty cycle (in these cases switch reversals and frequency, sequencing operations and frequency, and anticipated operating cycles on record, playback and switch on/off). The life parameter may be stated as some time-dependent function, for example distance travelled, switching cycles, load reversals, or it may be stated as a time, with a stipulated operating cycle.
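The dependence of a life figure on the duty cycle can be illustrated with a minimal sketch. The function name and the numerical values below are hypothetical and chosen only for illustration; the point is that the same life in cycles corresponds to very different lives in hours when the stipulated operating cycle changes.

```python
def life_in_operating_hours(life_cycles: float, cycles_per_hour: float) -> float:
    """Convert a life stated in operating cycles into operating hours.

    Both inputs are illustrative assumptions: the duty cycle
    (cycles_per_hour) must come from the specification itself.
    """
    if life_cycles < 0:
        raise ValueError("life_cycles must be non-negative")
    if cycles_per_hour <= 0:
        raise ValueError("cycles_per_hour must be positive")
    return life_cycles / cycles_per_hour


# Hypothetical switch with a specified life of 100,000 reversals,
# evaluated under two different stipulated duty cycles.
light_duty_hours = life_in_operating_hours(100_000, cycles_per_hour=2)
heavy_duty_hours = life_in_operating_hours(100_000, cycles_per_hour=20)
print(light_duty_hours, heavy_duty_hours)
```

A life quoted only as a number of hours would hide this tenfold difference, which is why the specification should state the life characteristic together with the operating cycle.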

17.7 Contracting for Reliability Achievement

Users of equipment which can have high unreliability costs have for some time imposed contractual conditions relating to reliability. Of course, every product warranty is a type of reliability contract. However, contracts which stipulate specific incentives or penalties related to reliability achievement have been developed, mainly by the military, but also by other major equipment users such as airlines and public utilities.

The most common form of reliability contract is one which ties an incentive or penalty to a reliability demonstration. The demonstration may either be a formal test (see the methods covered in Chapter 14) or may be based upon the user's experience. In either case, careful definition of what constitutes a relevant failure is necessary, and a procedure for failure classification must be agreed. If the contract is based only on incentive payments, it can be agreed that the customer will classify failures and determine the award, since no penalty is involved. One form of reliability incentive contract is that used for spacecraft, whereby the customer pays an incentive fee for successful operation for up to, say, two years.

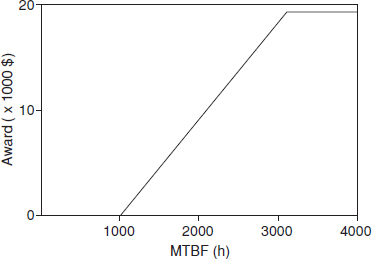

Figure 17.4 Reliability incentive structure.

Incentive payments have advantages over incentive/penalty arrangements. It is important to create a positive motivation, rather than a framework which can result in argument or litigation, and incentives are preferable in this respect. Also, an incentive is easier to negotiate, as it is likely to be accepted as offered. Incentive payments can be structured so that, whilst they represent a relatively small percentage of the customer's saving due to increased reliability, they provide a substantial increase in profit to the supplier. The receipt of an incentive fee has significant indirect advantages, as a morale booster and as a point worth quoting in future bid situations. A typical award fee structure is shown in Figure 17.4.

When planning incentive contracts it is necessary to ensure that other performance aspects are sufficiently well specified and, if appropriate, also covered by financial provisions such as incentives or guarantees, so that the supplier is not motivated to aim for the reliability incentive at the expense of other features. Incentive contracting requires careful planning so that the supplier's motivation is aligned with the customer's requirements. The parameter values selected must provide a realistic challenge and the fee must be high enough to make extra effort worthwhile.

17.7.1 Warranty Improvement Contracts

Manufacturers spend billions of dollars on warranty each year. In many industries, therefore, manufacturers make efforts to motivate their suppliers to improve reliability and consequently reduce warranty costs. For example, some automotive manufacturers cover the cost of warranty up to a certain failure rate (e.g., one part per thousand vehicles per year), and everything above that rate is the supplier's responsibility. In recent years manufacturers have made increasing efforts to force their suppliers to pay their ‘fair share’ of the warranty costs. The Warranty Week (2011) newsletter publishes comprehensive coverage of the finance and management aspects of warranty for various industries and regions.
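The threshold arrangement described above can be sketched as a simple calculation. This is an illustration under stated assumptions, not a description of any particular manufacturer's contract: the function name, the cost per claim and the fleet figures are hypothetical, and a real agreement would also define which claims are chargeable.

```python
def split_warranty_cost(claims, vehicles, years, cost_per_claim,
                        threshold_per_thousand=1.0):
    """Split warranty cost between manufacturer and supplier.

    The manufacturer absorbs claims up to the agreed threshold
    (failures per thousand vehicles per year); the supplier pays for
    every claim above it. All figures are illustrative assumptions.
    """
    if min(claims, vehicles, years, cost_per_claim, threshold_per_thousand) < 0:
        raise ValueError("inputs must be non-negative")
    allowed_claims = threshold_per_thousand * vehicles * years / 1000.0
    manufacturer_claims = min(claims, allowed_claims)
    supplier_claims = max(claims - allowed_claims, 0.0)
    return manufacturer_claims * cost_per_claim, supplier_claims * cost_per_claim


# Hypothetical case: 50,000 vehicles in service for one year, 80 claims
# on the supplied part, 150 currency units per claim.
manufacturer_cost, supplier_cost = split_warranty_cost(
    claims=80, vehicles=50_000, years=1, cost_per_claim=150.0
)
print(manufacturer_cost, supplier_cost)
```

With these assumed figures the threshold allows 50 claims, so the remaining 30 claims fall to the supplier.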

17.7.2 Total Service Contracts

A total service supply contract is one in which the supplier is required to provide the system, as well as all of the support. The purchaser does not specify a quantity of systems, but a level of availability. Railway rolling stock contracts in Europe epitomize the approach: the rail company specifies a timetable, and the supplier must determine and build the appropriate number of trains, provide all maintenance and other logistic support, including staffing and running the maintenance depots, spares provisioning, and so on. All the train company does is operate the trains. The contracts include terms to cover failures to meet the timetable due to train failures or non-availability. Similar contracts are used for the supply of electronic instrumentation to large buyers, medical equipment to hospitals, and some military applications such as trainer aircraft.

A total service contract places the responsibility and risk aspects of reliability firmly with the supplier, and can therefore be highly motivating. However, there can be long-term disadvantages to the purchaser. The purchaser's organization can lose the engineering knowledge that might be important in optimizing trade-offs between engineering and operational aspects, and in planning future purchases. When the interface between engineering and operation is purely financial and legal, with separate companies working to different business objectives, conflicts of interest can arise, leading to sub-optimization and inadequate co-operation. Since the support contract, once awarded, cannot be practically changed or transferred, the supplier is in a monopoly situation. The case of Railtrack in the UK, which ‘outsourced’ all rail and other infrastructure maintenance to contractors, and in the process lost the knowledge necessary for effective management, resulting directly in a fatal crash and a network-wide crisis due to cracked rails, provides a stark warning of the potential dangers of the approach. It is interesting that, by contrast, the airlines continue to perform their own maintenance, and the commercial aircraft manufacturers concentrate on the business they know best.

17.8 Managing Lower-Level Suppliers

Lower-level suppliers can have a major influence on the reliability of systems. It is quite common that 80% or more of failures of systems such as trains, aircraft, ships, factory and infrastructure systems, and so on can be ‘bought’ from the lower-level suppliers. In smaller systems, such as machines and electronic equipment, items such as engines, hydraulic pumps and valves, power supplies, displays, and so on are nearly always bought from specialist suppliers, and their failures contribute to system unreliability. Therefore it is essential that the project reliability effort is directed as much to these suppliers as to internal design, development and manufacturing. Continuing globalization and outsourcing also affect the work with lower-level suppliers. It is not uncommon these days to have suppliers located all over the globe, including regions with limited depth of knowledge of design and manufacturing processes and with less robust quality systems in place.

To ensure that lower-level suppliers make the best contributions to system reliability, the following guidelines should be applied:

- Rely on the existing commercial laws that govern trading relationships to provide assurance. In all cases this provides for redress if products or services fail to achieve the performance specified or implied. Therefore, if failures do occur, action to improve or other appropriate action can be demanded. In many cases warranty terms can also be exercised. However, if the contract stipulates a value of reliability to be achieved, such as MTBF or maximum proportion failing, then in effect failures are being invited. When failures do occur there is a tendency for discussion and argument to concentrate on statistical interpretations and other irrelevancies, rather than on engineering and management actions to prevent recurrence. We should specify success, not failure.

- Do not rely solely on ISO 9000 or similar schemes to provide assurance. As explained above, these approaches provide no direct assurance of product or service reliability or quality.

- Engineers should manage the selection and purchase of engineering products. It is a common practice for companies to assign this function to a specialized purchasing organization, and design engineers must submit specifications to them. This is based on the argument that engineers might not have knowledge of the business aspects that purchasing specialists do. However, only the engineers concerned can be expected to understand the engineering aspects, particularly the longer-term impact of reliability. Engineers can quickly be taught sufficient purchasing knowledge, or can be supported by purchasing experts, but purchasing people cannot be taught the engineering knowledge and experience necessary for effective selection of engineering components and sub-systems.

- Do not select suppliers on the basis of the price of the item alone. (This is point number 4 of Deming's famous ‘14 points for managers’, Deming, 1987.) Suppliers and their products must be selected on the basis of total value to the system, over its expected life. This includes performance, reliability, support, and so on as well as price.

- Create long-term partnerships with suppliers, rather than seek suppliers on a project-by-project basis or change suppliers for short-term advantage. In this way it becomes practicable to share information, rewards and risks, to the long-term benefit of both sides. Suppliers in such partnerships can teach application details to the system designers, and can respond more effectively to their requirements.

These points are discussed in more detail in O'Connor (2004).
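The guideline on selecting suppliers by total value rather than by price alone can be illustrated with a deliberately simplified comparison. The sketch below is an assumption-laden illustration: it considers only purchase price and the expected cost of failures over the life, ignores discounting, performance and support quality, and uses hypothetical supplier names and figures.

```python
def total_life_cost(purchase_price, failures_per_year, cost_per_failure, life_years):
    """Purchase price plus the expected cost of failures over the life.

    A simplified, undiscounted measure used only for illustration;
    a real evaluation would also weigh performance and support.
    """
    return purchase_price + failures_per_year * life_years * cost_per_failure


# Hypothetical offers: supplier_a is cheaper to buy but less reliable.
offers = {
    "supplier_a": total_life_cost(100.0, 0.05, 200.0, 10.0),
    "supplier_b": total_life_cost(120.0, 0.01, 200.0, 10.0),
}
best = min(offers, key=offers.get)
print(offers, best)
```

Under these assumed figures the item with the higher purchase price has the lower cost over its life (about 140 against about 200), which is the situation the guideline is intended to expose.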

17.9 The Reliability Manual

Just as most medium to large design and development organizations have internal manuals covering design practices, organizational structure, Quality Assurance (QA) procedures, and so on, so reliability management and engineering should be covered. Depending on the type of product and the organization adopted, reliability can be adequately covered by appropriate sections in the engineering and QA manuals, or a separate reliability manual may be necessary. In-house reliability procedures should not attempt to teach basic principles in detail, but rather should refer to appropriate standards and literature, which should of course then be made available. If reliability programme activities are described in military, national or industry standards, these should be referred to and followed when appropriate. The bibliographies at the end of each chapter of this book list the major references.

The in-house documents should cover, as a minimum, the following subjects:

- Corporate policy for reliability.

- Organization for reliability.

- Reliability procedures in design (e.g. design analysis, parts derating policy, parts, materials and process selection approval and review, critical items listing, design review).

- Reliability test procedures.

- Reliability data collection, analysis and action system, including data from test, warranty, etc. (see Appendix 5).

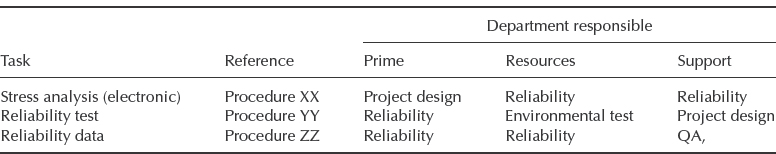

The written procedures must state, in every case, who carries responsibility for action and who is responsible for providing the resources and capability. They must also state who provides supporting services. A section from the reliability manual may appear as shown in Table 17.1.

Table 17.1 Reliability manual: responsibilities.

17.10 The Project Reliability Plan

Every project should create and work to a written reliability plan. It is normal for customer-funded development projects to include a requirement for a reliability plan to be produced.

The reliability plan should include:

- A brief statement of the reliability requirement.

- The organization for reliability.

- The reliability activities that will be performed (design analysis, test, reports).

- The timing of all major activities, in relation to the project development milestones.

- Reliability management of suppliers.

- The standards, specifications and internal procedures (e.g. the reliability manual) which will be used, as well as cross-references to other plans such as for test, safety, maintainability and quality assurance.

When a reliability plan is submitted as part of a response to a customer request for proposals (RFP) in a competitive bid situation, it is important that the plan reflects complete awareness and understanding of the requirements and competence in compliance.

A reliability plan prepared as part of a project development, after a contract has been accepted, is more comprehensive than an RFP response, since it gives more detail of activities, time-scales and reporting. Since the project reliability plan usually forms part of the contract once accepted by the customer, it is important that every aspect is covered clearly and explicitly.

A well-prepared reliability plan is useful for instilling confidence in the supplier's competence to undertake the tasks, and for providing a sound reliability management plan for the project to follow.

Appendix 6 shows an example of a reliability and maintainability plan, including safety aspects.

17.10.1 Specification Tailoring

Specification tailoring is a term used to describe the process of suggesting alternatives to the customer's specification. ‘Tailoring’ is often invited in RFPs and in development contracts. A typical example occurs when a customer specifies a system and requires a formal reliability demonstration. If a potential supplier can supply a system for which adequate in-service reliability records exist, the specification could be tailored by proposing that these data be used in place of the reliability demonstration. This could save the customer the considerable expense of the demonstration. Also, it is not uncommon for a potential supplier to take an exception to certain requirements in the RFP if they do not appear feasible or possible for the supplier to implement. Other examples might arise out of trade-off studies, which might show, for instance, that a reduced performance parameter could lead to cost savings or reliability improvement.

17.11 Use of External Services

The retention of staff and facilities for analysis and test and the maintenance of procedures and training can only be cost-effective when the products involve fairly intensive and continuous development. In advanced product areas such as defence and aerospace, electronic instrumentation, control and communications, vehicles, and for large manufacturers of less advanced products such as domestic equipment and less complex industrial equipment, a dedicated reliability engineering organization is necessary, even if it is not a contractual requirement. Smaller companies with less involvement in risk-type development may have as great a need for reliability engineering expertise, but not on a continuous basis. External reliability engineering services can fulfil the requirements of smaller companies by providing the specialist support and facilities when needed. Reliability engineering consultants and specialist test establishments can often be useful to larger companies also, in support of internal staff and facilities. Since they are engaged full time across a number of different types of project they should be considered whenever new problems arise. However, they should be selected carefully and integrated in the project team.

Small companies should also be prepared to seek the help of their major customers when appropriate. This cooperative approach benefits both supplier and customer.

17.12 Customer Management of Reliability

When a product is being developed under a development contract, as is often the case with military and other public purchasing, the purchasing organization plays an important role in the reliability and quality programme. As has been shown, such organizations often produce standards for application to development contracts, covering topics such as reliability programme management, design analysis methods and test methods.

A reliability manager should be assigned to each project, reporting to the project manager. Project reliability management by a centralized reliability department, not responsible to the project manager, is likely to result in lower effectiveness. A central reliability department is necessary to provide general standards, training and advice, but should not be relied upon to manage reliability programmes across a range of projects. If there is a tendency for this to happen it is usually an indication that inadequate standards or training have been provided for project staff, and these problems should then be corrected.

The prime responsibilities of the purchaser in a development reliability programme are to:

- Specify the reliability requirements (Sections 17.6 and 14.2).

- Specify the standards and methods to be used.

- Set up the financial and contractual framework (Section 17.7).

- Specify the reporting requirements.

- Monitor contract performance.

Proper attention to the first three items above should ensure that the supplier is effectively directed and motivated, so that the purchaser has visibility of activities and progress without having to become too deeply involved.

It is usually necessary to negotiate aspects of the specification and contract. During the specification and negotiation phases it is usual for a central reliability organization to be involved, since it is important that uniform approaches are applied. Specification tailoring (Section 17.10.1) is now a common feature of development contracting and this is an important aspect in the negotiation phase, requiring experience and knowledge of the situation of other contracts being operated or negotiated.

The supplier's reliability plan, prepared in response to the purchaser's requirement, should also be reviewed by the central organization, particularly for major contracts.

The contractor's reporting tasks are often specified in the statement of work (SOW). These usually include:

- The reliability plan.

- Design analysis reports and updates (prediction, FMECA, FTA, etc.).

- Test reports.

Reporting should be limited to what would be useful for monitoring performance. For example, a 50-page FMECA report, tabulating every failure mode in a system, is unlikely to be useful to the purchaser. Therefore the statement of work should specify the content, format and size of reports. The detailed analyses leading to the reports should be available for specific queries or for audit.

The purchaser should observe the supplier's design reviews. Some large organizations assign staff to supplier's premises, to monitor development and to advise on problems such as interpretation of specifications. This can be very useful on major projects such as aircraft, ships and plant, particularly if the assigned staff are subsequently involved in operation and maintenance of the system.

There are many purchasers of equipment who do not specify complete systems or let total development contracts. Also, many such purchasers do not have their own reliability standards. Nevertheless, they can usually influence the reliability and availability of equipment they buy. We will use an example to illustrate how a typical purchaser might do this.

Example 17.2

A medium-sized food-processing plant is being planned by a small group of entrepreneurs. Amongst other things, the plant will consist of:

- Two large continuous-feed ovens, which are catalogue items but have some modifications added by the supplier, to the purchaser's specification. These are the most expensive items in the plant. There is only one potential supplier.

- A conveyor feed system.

- Several standard machines (flakers, packaging machines, etc.).

- A process control system, operated by a central computer, for which both the hardware and software will be provided by a specialist supplier to the purchaser's specification.

The major installations, except the process control system (the fourth item above), will be designed and fitted by a specialist contractor; the process control system integration will be handled by the purchasers. The plant must comply with the statutory safety standards, and the group is keen that both safety and plant availability are maximized. What should they do to ensure this?

The first step is to ensure that every supplier has, as far as can be ascertained, a good reputation for reliability and service. The purchasers should survey the range of equipment available, and if possible obtain information on reliability and service from other users. Equipment and supplier selection should be based to a large extent on these factors.

For the standard machines, the warranties provided should be studied. Since plant availability is important, the purchasers should attempt to negotiate service agreements which will guarantee up-time, for example for guaranteed repair or replacement within 24 hours. If this is not practicable, they should consider, in conjunction with the supplier, what spares they should hold.
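The effect of a guaranteed repair time on up-time can be gauged with the usual steady-state availability relationship, availability = MTBF / (MTBF + mean downtime). The sketch below is only indicative: the MTBF and downtime values are hypothetical, and the guaranteed repair time is treated as if it were the mean downtime per failure, which is a simplifying assumption.

```python
def steady_state_availability(mtbf_hours, mean_downtime_hours):
    """Steady-state availability = MTBF / (MTBF + mean downtime)."""
    if mtbf_hours <= 0 or mean_downtime_hours < 0:
        raise ValueError("MTBF must be positive and downtime non-negative")
    return mtbf_hours / (mtbf_hours + mean_downtime_hours)


# Hypothetical machine with an MTBF of 2000 operating hours, compared
# under a 24-hour repair guarantee and an assumed 72-hour repair time.
with_guarantee = steady_state_availability(2000.0, 24.0)
without_guarantee = steady_state_availability(2000.0, 72.0)
print(round(with_guarantee, 4), round(without_guarantee, 4))
```

A comparison of this kind can also help in deciding whether holding spares on site is worthwhile when a repair guarantee cannot be negotiated.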

Since the ovens are critical items and are being modified, the purchasers should ensure that the supplier's normal warranty applies, and service support should be guaranteed as for the standard items. They should consider negotiating an extended warranty for these items.

The process control system, being a totally new development (except for the computer), should be very carefully specified, with particular attention given to reliability, safety and maintainability, as described below. Key features of the specification and contract should be:

- Definition of safety-critical failure effects.

- Definition of operational failure effects.

- Validation of correct operation when installed.

- Guaranteed support for hardware and software, covering all repairs and corrections found to be necessary.

- Clear, comprehensive documentation (test, operating and maintenance instructions, program listings, program notes).

For this development work, the purchasers should consider invoking appropriate standards in the contract, such as BS 5760. For example, FMECA and FTA could be very valuable for this system, and the software development should be properly controlled and documented. The supplier should be required to show how those aspects of the specification and contract will be addressed, to ensure that the requirements are fully understood. A suitable consultant engineer might be employed to specify and manage this effort.

The installation contract should also cover reliability, safety and maintainability, and service.

During commissioning, all operating modes should be tested. Safety aspects should be particularly covered, by simulating as far as possible all safety-critical failure modes.

The purchasers should formulate a maintenance plan, based upon the guidelines given in Chapter 16. A consultant engineer might be employed for this work also.

Finally, the purchasers should insure themselves against the risks. They should use the record of careful risk control during development to negotiate favourable terms with their insurers.

17.13 Selecting and Training for Reliability

Within the reliability organization, staff are required who are familiar with the product (its design, manufacture and test) and with reliability engineering techniques. Therefore the same qualifications and experience as apply to the other engineering departments should be represented within the reliability organization. The objective should be to create a balanced organization, in which some of the staff are drawn from product engineering departments and given the necessary reliability training, and the others are specialists in the reliability engineering techniques who should receive training to familiarize them with the product. Reliability engineering should be included as part of the normal engineering staff rotation for career development purposes. By having a balanced department, and engineers in other departments with experience of reliability engineering, the reliability effort will have credibility and will make the most effective contribution.

Reliability engineers need not necessarily be specialists in particular disciplines, such as electronic circuit design or metallurgy. Rather, a more widely based experience and sufficient knowledge to understand the specialists’ problems is appropriate. The reliability engineer's task is not to solve design or production problems but to help to prevent them, and to ascertain causes of failure. He or she must, therefore, be a communicator, competent to participate with the engineering specialists in the team and able to demonstrate the value and relevance of the reliability methods applied. Experience and knowledge of the product, including manufacturing, operation and maintenance, enables the reliability engineer to contribute effectively and with credibility. Therefore engineers with backgrounds in areas such as test, product support, and user maintenance should be short-listed for reliability engineering positions.

Since reliability engineering and quality control have much in common, quality control work often provides suitable experience from which to draw, provided that the quality control (QC) experience has been deeper than the traditional test and inspection approach, which involves no design or development work. For those in the reliability organization providing data analysis and statistical engineering support, specialist training is relatively more important than product familiarity.

The qualities required of the reliability engineering staff obviously are equally relevant for the head of the reliability function. Since reliability engineering should involve interfaces with several other functions, including such non-engineering areas as marketing and finance, this position should not be viewed as the end of the line for moderately competent engineers, but rather as one in which potential top management staff can develop general talents and further insight into the overall business, as well as providing further reliability awareness at higher levels in due course.

Specialists in statistics and, more recently, Six Sigma Master Black Belts (see Section 17.17.2) can make a significant contribution to the integrated reliability effort. Such skills are needed for design of experiments and analysis of data, and not many engineers are suitably trained and experienced. It is important that statistics experts working in engineering are made aware of the ‘noisy’ nature of the statistics generated, as described in earlier chapters. They should be taught the main engineering and scientific principles of the problems being addressed, and integrated into the engineering teams. They also have an important role to play in training engineers to understand and use the appropriate statistical methods.

Whilst selection and training of reliability people is important, it is also necessary to train and motivate all other members of the engineering team (design, test, production, etc.). Since product failures are nearly always due to human shortcomings, in terms of lack of knowledge, skill or effort, all involved with the product must be trained so that the chances of such failures are minimized. For example, if electronics designers understand electromagnetic interference as it affects their system they are less likely to provide inadequate protection, and test engineers who understand variation will conduct more searching tests. Therefore the reliability training effort must be related to the whole team, and not just to the reliability specialists.

Despite its importance, quality and reliability education is paradoxically lacking in today's engineering curricula. Few engineering schools offer degree programmes or even a sufficient variety of courses in quality or reliability methods. Therefore, the majority of the quality and reliability practitioners receive their professional training from their colleagues as ‘on the job’ training.

17.14 Organization for Reliability

Because several different activities contribute to the reliability of a product it is difficult to be categorical about the optimum organization to ensure effective management of reliability. Reliability is affected by design, development, production quality control, control of suppliers and subcontractors, and maintenance. These activities need to be coordinated, and the resources applied to them must be related to the requirements of the product. The requirements may be determined by a market assessment, by warranty cost considerations or by the customer. The amount of customer involvement in the reliability effort varies. The military and other public organizations often stipulate the activities required in detail, and demand access to design data, test records and other information, particularly when the procurement agency funds the development. At the other extreme, domestic customers are not involved in any way directly with the development and production programme. Different activities will have greater or lesser importance depending on whether the product involves innovative or complex design, or is simple and based upon considerable experience. The reliability effort also varies as the project moves through the development, production and in-use phases, so that the design department will be very much involved to begin with, but later the emphasis will shift to the production, quality control and maintenance functions. However, the design must take account of production, test and maintenance, so these downstream activities must be considered by the specification writers and designers.

Since the knowledge, skills and techniques required for the reliability engineering tasks are essentially the same as those required for safety analysis and for maintainability engineering, it is logical and effective to combine these responsibilities in the same department or project team.

Reliability management must be integrated with other project management functions, to ensure that reliability is given the appropriate attention and resources in relation to all the other project requirements and constraints.

Two main forms of reliability organization have evolved. These are described below.

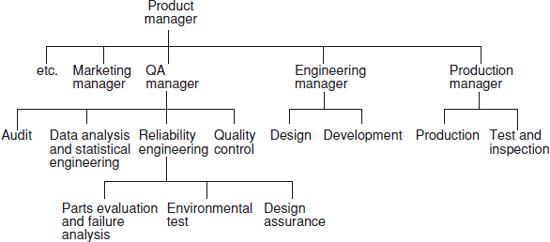

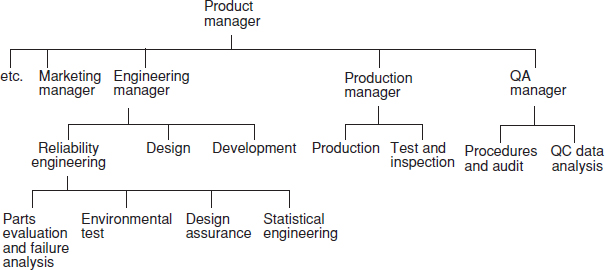

Figure 17.5 QA based reliability organization.

17.14.1 Quality Assurance Based Organization

The quality assurance (QA) based organization places responsibility for reliability with QA management, which then controls the ‘quality’ of design, maintenance, and so on, as well as of production. This organizational form is based upon the definition of quality as the totality of features which bear on a product's ability to satisfy the requirement. This is the formal European definition of quality. Consequently, in Europe the QA department or project QA manager is often responsible for all aspects of product reliability. Figure 17.5 shows a typical organization. The reliability engineering team interfaces mainly with the engineering departments, while quality control is mainly concerned with production. However, there is close coordination of reliability engineering and quality control, and shared functions: for example a common failure data collection and analysis system can be operated, covering development, production and in-use. The QA department then provides the feedback loop from in-use experience to future design and production. This form of organization is used by most manufacturers of commercial and domestic products.

17.14.2 Engineering Based Organization

In the engineering based organization, reliability is made the responsibility of the engineering manager. The QA (or quality control) manager is responsible only for controlling production quality and may report direct to the product manager or to the production manager. Figure 17.6 shows a typical organization. This type of organization is more common in the United States.

17.14.3 Comparison of Types of Organization

The QA based organization for reliability allows easier integration of some tasks that are common to design, development and production. The ability to operate a common failure data system has been mentioned. In addition, the statistical methods used to design experiments and to analyse development test and production failure data are the same, as is much of the test equipment and test methods. For example, the environmental test equipment used to perform reliability tests in development might be the same as that used for production reliability acceptance tests and screening. Engineers with experience or qualifications in QA are often familiar with reliability engineering methods, as their professional associations on both sides of the Atlantic include reliability in their areas of interest. However, for products or systems where a considerable amount of innovative design is required, the engineering based organization has advantages, since more of the reliability effort will have to be directed towards design assurance, such as stress analysis, design review and development testing.

Figure 17.6 Engineering based reliability organization.

The main question to be addressed in deciding which type of organization to adopt is whether the split of responsibility for reliability activities inherent in the engineering based organization can be justified in relation to the amount of reliability effort considered necessary during design and development. In fact, the type of organization adopted is much less important than the need to ensure integrated management of the reliability programme. So long as the engineers performing reliability activities are properly absorbed into the project team, and report to the same manager as the engineers responsible for other performance aspects, functional departmental loyalties are of secondary importance. To ensure an integrated team approach, reliability engineers should be attached to and work alongside the design and other staff directly involved with the project. These engineers should have access to the departmental supporting services, such as data analysis and component evaluation, but their prime responsibility should be to the project.

17.15 Reliability Capability and Maturity of an Organization

Evaluating how well an organization can handle the reliability aspects of the design, development and manufacturing processes (i.e. whether it has the required tool sets, expertise, resources and reliability-focused priorities) requires objective criteria. However, industry lacked methods to quantify the capability of an organization to develop and build reliable products. This problem was addressed by developing standardized measurement criteria for assessing and quantifying the reliability capability of an organization. The evaluation methods for organizational reliability processes are reliability capability and reliability maturity assessments. These terms are sometimes used interchangeably with regard to an organization and its reliability processes, but there are subtle differences in the ways they are evaluated.

17.15.1 Reliability Capability

Reliability capability is a measure of the practices within an organization that contribute to the reliability of the final product and the effectiveness of these practices in meeting the reliability requirements of customers (Tiku and Pecht, 2010). To provide industry-accepted criteria for assessing and quantifying reliability capability, the IEEE developed IEEE Std 1624. Its standardized and objective measurement criteria define eight key reliability practices and their inputs, activities and outputs.

These key reliability practices are the following:

- Reliability requirements and planning.

- Training and development.

- Reliability analysis.

- Reliability testing.

- Supply chain management.

- Failure data tracking and analysis.

- Verification and validation.

- Reliability improvements.

For each of these eight categories the standard defines the inputs, the required activities and the expected outputs. Each of the reliability practices is individually assessed (levels 1–5) against the specified set of activities required to attain a specific capability level. These five levels represent the metrics or measures of the organizational reliability capability and reflect stages in the evolutionary transition of that practice.

The five reliability capability levels of IEEE Std 1624 can be associated with the five reliability maturity stages discussed in Silverman (2010): 1-Uncertainty, 2-Awakening, 3-Enlightenment, 4-Wisdom and 5-Certainty. These names verbally characterize the levels of reliability maturity of an organization.
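As an illustration only, the outcome of such an assessment can be recorded as one level per key practice. The following minimal sketch uses the eight practice names listed above and the five stage names from Silverman (2010); the function names and the idea of reporting the weakest practices are assumptions made for this example and are not taken from IEEE Std 1624.

```python
from enum import IntEnum


class MaturityStage(IntEnum):
    """Stage names associated with capability levels 1-5 (Silverman, 2010)."""
    UNCERTAINTY = 1
    AWAKENING = 2
    ENLIGHTENMENT = 3
    WISDOM = 4
    CERTAINTY = 5


# The eight key reliability practices listed in the text.
KEY_PRACTICES = (
    "Reliability requirements and planning",
    "Training and development",
    "Reliability analysis",
    "Reliability testing",
    "Supply chain management",
    "Failure data tracking and analysis",
    "Verification and validation",
    "Reliability improvements",
)


def capability_profile(levels):
    """Map each practice's assessed level (1-5) to its stage.

    `levels` is a hypothetical input: a dict of practice name -> integer level.
    A level outside 1-5 raises ValueError via the enum constructor.
    """
    missing = [p for p in KEY_PRACTICES if p not in levels]
    if missing:
        raise ValueError(f"no level recorded for: {missing}")
    return {p: MaturityStage(levels[p]) for p in KEY_PRACTICES}


def weakest_practices(profile):
    """Return the practices at the lowest assessed level (an assumed report)."""
    lowest = min(profile.values())
    return [p for p, stage in profile.items() if stage == lowest]
```

Each practice is kept separate here because the text describes the practices as individually assessed; no overall organizational level is computed.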

Although IEEE Std 1624 was developed for the electronics industry, it can be used for self-assessment by organizations or for supplier/customer relationship development in virtually any industry with no or minor adjustments.

17.15.2 Reliability Maturity

In 2004 the Automotive Industry Action Group (AIAG) published the Reliability Methods Guideline (AIAG, 2004), containing 45 key reliability tools, some of which are covered in this chapter and the rest in other chapters. This activity was later expanded to develop an organizational capabilities maturity concept. The AIAG workgroup produced a reliability maturity assessment (RMA) manual that contains nine reliability categories:

A Reliability planning.

B Design for reliability.

C Reliability prediction and modelling.

D Reliability of mechanical components and systems.

E Statistical concepts.

F Failure reporting and analysis.

G Analysing reliability data.

H Reliability testing.

I Reliability in manufacturing.

Although RMA category B is called ‘Design for reliability’, it contains only a subset of the reliability tools discussed in Chapter 7. Each of the nine categories above includes the appropriate set of reliability tools from the ‘Reliability Methods Guideline’. For each reliability tool within a category the RMA suggests scoring criteria.

After the individual tools have been scored, the scores are combined by category, giving a rating for each category expressed as a percentage of the maximum available score. The category scores can then be combined as a weighted average to obtain the total score for the organization. A score above 60% is classified as B-level and a score above 80% as A-level. Scores below 60% are regarded as reliability deficiencies.
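The scoring arithmetic described above can be sketched as follows. This is a minimal illustration, not the AIAG procedure: the per-tool scoring scale (`max_per_tool`), the category weights and the function names are hypothetical, and treating a score of exactly 60% as B-level is an assumption, since the text only classifies scores above and below that value.

```python
def category_percentage(tool_scores, max_per_tool):
    """Category rating as a percentage of the maximum available score.

    `max_per_tool` is a hypothetical maximum score for a single tool.
    """
    if not tool_scores:
        raise ValueError("a category needs at least one scored tool")
    return 100.0 * sum(tool_scores) / (max_per_tool * len(tool_scores))


def total_score(category_pcts, weights):
    """Weighted average of the category percentages.

    `weights` is a hypothetical dict of category -> relative weight.
    """
    total_weight = sum(weights[c] for c in category_pcts)
    weighted_sum = sum(category_pcts[c] * weights[c] for c in category_pcts)
    return weighted_sum / total_weight


def classify(pct):
    """Classify a percentage score using the thresholds quoted in the text."""
    if pct > 80:
        return "A-level"
    if pct >= 60:  # assumption: exactly 60% is treated as B-level
        return "B-level"
    return "reliability deficiency"
```

For example, a category with three tools scored 3, 4 and 5 on an assumed 0 to 5 scale has 12 of 15 available points, which is 80% and therefore B-level, because A-level requires a score above 80%.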

Both reliability maturity and capability assessments provide important tools to evaluate the organizational capability from a product reliability perspective. The assessments can also be used in the supplier selection process and can be conducted as self-assessments and/or as second- or third-party assessments. These activities also help to identify gaps and weaknesses in the reliability process and can be instrumental in developing an efficient product and process improvement plan.

17.16 Managing Production Quality

The production department should have ultimate responsibility for the manufacturing quality of the product. It is often said that quality cannot be ‘inspected in’ or ‘tested in’ to a product. The QA department is responsible for assessing the quality of production but not for the operations which determine quality. QA thus has the same relationship to production as reliability engineering has to design and development.

In much modern production, inspection and test operations have become integrated with production operations. For example, in operator control of computerized machine tools the machine operator might carry out workpiece gauging and machine calibration. Also, the costs of inspection and test can be considered to be production costs, particularly when it is not practicable to separate the functions or when, as in electronics production, the test policy can have a great impact on production costs. For these reasons, there is a trend towards routine inspection and test work being made the responsibility of production, with QA providing support services such as patrol inspection, and possibly final inspection, as well as training, calibration, and so on. Determination of inspection and test policy, methods and staffing should then be primarily a production responsibility, with QA in a supporting role, providing advice and ensuring that quality standards will be met.

This approach to modern quality results in much smaller QA departments than under the older system whereby production produced and passed the products to QA for inspection and test at each stage. It also obviously reduces the total cost of production (production cost plus inspection and test costs). Motivation for quality is enhanced and QA staff are better placed to contribute positively, rather than acting primarily in a policing role. The quality circles movement has also heavily influenced this trend; quality circles could not operate effectively under the old approach.

The QA department should be responsible for:

- Setting production quality criteria.

- Monitoring production quality performance and costs.

- QA training (SPC, motivational, etc.).

- Specialist facilities and services.

- Quality audit and registration.

These will be discussed in turn.

17.16.1 Setting Production Quality Criteria

The quality manager must decide the production quality criteria (such as tolerances, yields, etc.) to be met. These might have been set by the customer, as is often the case in commercial as well as in defence equipment manufacture, in which case the quality manager is the interface with the customer on production quality matters. Quality criteria apply to the finished product, to production processes and to bought-in materials and components. Therefore the quality manager should determine, or approve, the final inspection and test methods and criteria to ensure conformance. He or she should also determine such details as quality levels of components, quality control of suppliers and calibration requirements for test and measuring equipment.

17.16.2 Monitoring Production Quality Performance and Costs

The quality manager must be satisfied that the quality objectives are being attained or that action is being taken to ensure this. These include quality cost objectives, as described earlier. QA staff should therefore oversee and monitor functions such as failure reporting and final conformance inspection and test. The QA department should prepare or approve quality performance and cost reports, and should monitor and assist with problem-solving. The methods described in Chapters 11 and 15 are particularly appropriate for this task.

17.16.3 Quality Training

The quality manager is responsible for all quality control training. This is particularly important in training for operator control and quality circles, since all production people must understand and apply basic quality concepts such as simple SPC and data analysis.

17.16.4 Specialist Facilities and Services

The quality department provides facilities such as calibration services and records, vendor appraisal, component and material assessment, and data collection and analysis.

The assessment facilities used for testing components and materials so that their use can be approved are also the best services to use for failure investigation, since this makes the optimum use of expensive resources such as spectroscopic analysis equipment and scanning electron microscopes, and the associated specialist staff.

The joint use of these services in support of development, manufacturing and in-service is best achieved by operating an integrated approach to quality and reliability engineering.

17.16.5 Quality Audit and Registration

Quality audit is an independent appraisal of all of the operations, processes and management activities that can affect the quality of a product or service. The objective is to ensure that procedures are effective, that they are understood and that they are being followed.