Day 4 NUMB3RS

The Plan

Just yesterday we learned about different types of C variables, and we stressed the importance of using the right type of variable for each application to preserve a precious resource: RAM. I don’t know about you, but I am now very curious about putting those variables to work and seeing how the MPLAB® C32 compiler performs basic arithmetic on them. Knowing that the PIC32 has a set of 32 “working” registers and a 32-bit ALU, I am expecting to see some very efficient code, but I also want to compare the relative performance of the same operation performed on different data types and, in particular, floating-point types. Hopefully after today we will have a better understanding of how to balance performance and memory resources, real-time constraints, and complexity to better fit the needs of our embedded-control applications.

Preparation

This entire lesson will be performed exclusively with software tools that include the MPLAB IDE, MPLAB C32 compiler, and the MPLAB SIM simulator.

Use the New Project Setup checklist to create a new project called NUMB3RS and a new source file called NUMB3RS.c.

The Exploration

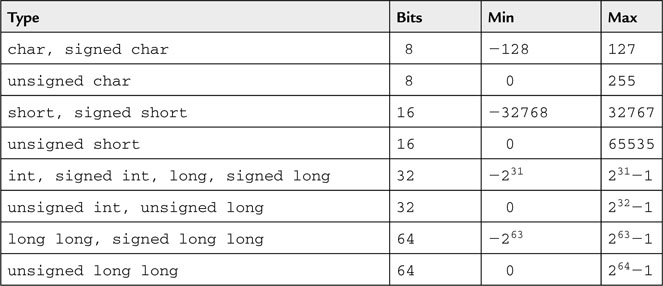

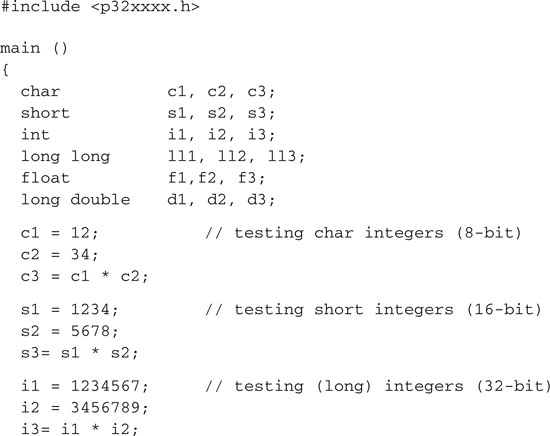

To review all the available data types, I recommend you take a look at the MPLAB C32 User Guide. You can start in Chapter 1.5, where you can find a first list of the supported integer types (see Table 4.1).

As you can see, there are 10 different integer types specified in the ANSI C standard, including char, int, short, long, and long long, each in both a signed (default) and an unsigned variant. The table shows the number of bits allocated specifically by the MPLAB C32 compiler for each type and, for your convenience, spells out the minimum and maximum values that can be represented.

When the type is signed, one bit must be dedicated to the sign itself, so the largest absolute value that can be represented is halved and the numerical range is centered around zero. We have also noted in our previous explorations that the MPLAB C32 compiler treats int and long as synonyms, allocating 32 bits (4 bytes) for both of them. In fact, 8-, 16-, and 32-bit quantities can be processed with equal efficiency by the PIC32 ALU. Most arithmetic and logic operations on these integer types can be coded by the compiler as single assembly instructions that execute very quickly, in most cases in a single clock cycle.
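To make the effect of the sign bit concrete, the following sketch computes the range of an n-bit integer, signed and unsigned. It assumes two's complement representation (used by the PIC32 and virtually every modern processor); the function names are hypothetical and valid for 1 ≤ n ≤ 64.

```c
#include <stdint.h>

/* Largest value of an n-bit unsigned integer: 2^n - 1 (valid for 1 <= n <= 64). */
uint64_t unsigned_max(unsigned n)
{
    return n == 64 ? UINT64_MAX : ((uint64_t)1 << n) - 1;
}

/* Signed n-bit range: one bit goes to the sign, so the largest magnitude is
   halved and the range is centered around zero (two's complement assumed). */
int64_t signed_max(unsigned n)
{
    return (int64_t)(unsigned_max(n) >> 1);   /* 2^(n-1) - 1 */
}

int64_t signed_min(unsigned n)
{
    return -signed_max(n) - 1;                /* -2^(n-1) */
}
```

For example, signed_min(8) and signed_max(8) give -128 and 127, while unsigned_max(8) gives 255, matching the char rows of the table.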

The long long integer type (added to the C standard by its 1999 revision, C99) offers 64-bit support and requires 8 bytes of memory. Since the PIC32 core is based on the MIPS 32-bit architecture, operations on long long integers must be encoded by the compiler as short sequences of instructions inserted inline. Knowing this, we are already expecting a small performance penalty for using long long integers; what we don’t know is how large it will be.

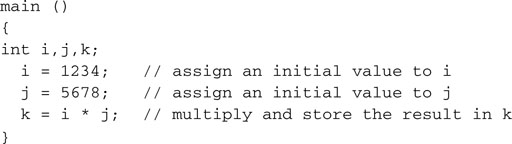

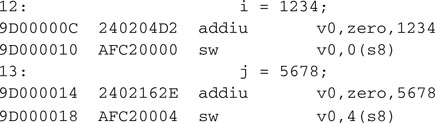

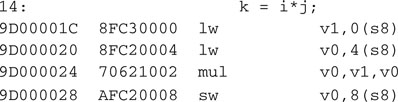

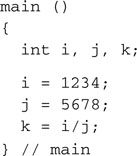

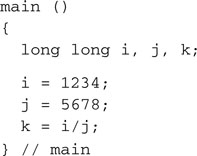

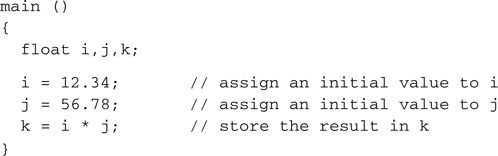

Let’s look at a first integer example: we declare three int variables i, j, and k, assign a value to each of the first two, and store their product in the third.
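A minimal sketch of such a program follows. The literal values 1234 and 5678 are illustrative assumptions, and the code is wrapped in a hypothetical function, int_product(), that returns k so the result can be checked; on the PIC32 you can just as well place the same statements in main().

```c
/* First integer example: three int variables (32 bits on MPLAB C32),
   two assignments, and one multiplication. */
int int_product(void)
{
    int i, j, k;

    i = 1234;       /* literal loaded into a register, then stored in i */
    j = 5678;       /* literal loaded into a register, then stored in j */
    k = i * j;      /* i and j reloaded, multiplied, result stored in k */
    return k;
}
```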

After building the project (Project | Build All or Ctrl+F10), we can open the Disassembly window (View | Disassembly Listing) and take a look at the code generated by the compiler.

Even without knowing the PIC32 (MIPS) assembly language, we can easily identify the two assignments. Each is performed by loading the literal value into register v0 first and from there storing it into the memory location reserved for variable i (pointed to by the S8 register) and later for variable j (pointed to by the S8 register with an offset of 4).

In the following line, the multiplication is performed by transferring the values from the locations reserved for the two integer variables i and j back to registers v0 and v1 and then performing a single 32-bit multiplication mul instruction. The result, available in v0, is stored back into the locations reserved for k (pointed to by S8 with an offset of 8)—pretty straightforward!

Note

It is beyond the scope of this book to analyze the MIPS assembly programming interface in detail, but I am sure you will find it interesting to note that the mul instruction, like all other arithmetic instructions of the MIPS core, has three operands, although in this case the compiler is using the same register (v0) as both one of the sources and the destination. Note also that the MIPS core belongs to the so-called load-store class of machines: all arithmetic operands must first be fetched from RAM into registers (load) before arithmetic operations can be performed, and the result must later be transferred back to RAM (store). Finally, if you are even minimally interested in the MIPS assembly, note how the compiler chose to use the addiu instruction to load a literal value into a register efficiently. In reality this instruction adds an immediate value to a second operand, which the compiler chose to be the aptly named register zero, a register that always reads as 0.

On Optimizations (or Lack Thereof)

You will notice that the overall program, as compiled, is somewhat redundant. The value of j, for example, is still available in register v0 when it is loaded again just before the multiplication. Can’t the compiler see that this operation is unnecessary?

In fact, the compiler does not see things this clearly; its role is to create “safe” code, avoiding (at least initially) any assumption and using standard sequences of instructions. Later on, if the proper optimization options are enabled, a second pass (or more) is performed to remove the redundant code. During the development and debugging phases of a project, though, it is always good practice to disable all optimizations because they might modify the structure of the code being analyzed and render single-stepping and breakpoint placement problematic. In the rest of this book we will consistently avoid using any compiler optimization option; we will verify that the required levels of performance are obtained regardless.

Testing

To test the code, we can choose to work with the simulator from the Disassembly Listing window itself, single-stepping on each assembly instruction. Or we can choose to work from the C source in the editor window, single-stepping through each C language statement (recommended). In both cases, we can follow the contents of the variables i, j, and k as each step is executed.

If you need to repeat the test, perform a Reset (Debugger | Reset | Processor Reset), but don’t be surprised if the second time you run the code the contents of the local variables appear magically in place before you initialize them. Local variables (defined inside a function) are not cleared by the Startup code; therefore, if the RAM memory is not cleared between reruns, the RAM locations used to hold the variables i, j, and k will have preserved their contents.

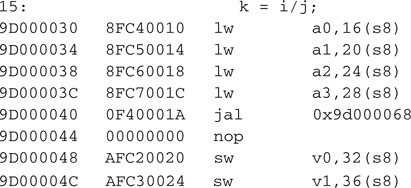

Going long long

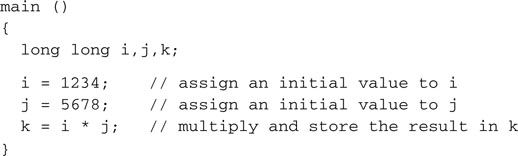

At this point, modifying only the first line of code, we can change the entire program to perform operations on 64-bit integer variables:

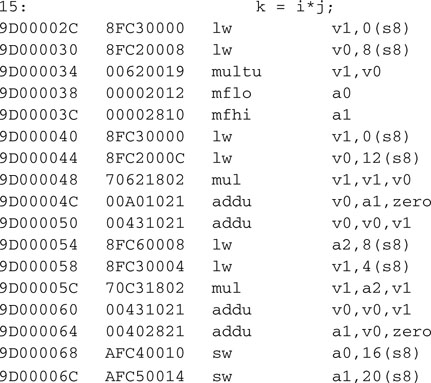

Rebuilding the project and switching again to the Disassembly Listing window (if you had the editor window maximized and did not close the Disassembly Listing window, you can use Ctrl+Tab to quickly alternate between the editor and the Disassembly Listing), we can see that the newly generated code is a bit longer than the previous version. Though the initializations are still straightforward, the multiplication is now performed using several more instructions.

The PIC32 ALU can process only 32 bits at a time, so the 64-bit multiplication is actually performed as a sequence of 32-bit multiplications and additions. The sequence used by the compiler is generated with pretty much the same technique that we learned to use in elementary school, only performed on a 32-bit word at a time rather than one digit at a time. In practice, to perform a 64-bit multiplication using 32-bit instructions, there should be four multiplications and three additions, but you will note that the compiler has actually inserted only three multiplication instructions. What is going on here?

The fact is that multiplying two long long integers (64-bit each) will produce a 128-bit wide result. But in the previous example, we have specified that the result will be stored in yet another long long variable, therefore limiting the result to a maximum of 64 bits. Doing so, we have clearly left the door open for the possibility (not so remote) of an overflow, but we have also given the compiler the permission to safely ignore the most significant bits of the result. Knowing those bits are not going to be missed, the compiler has eliminated completely the fourth multiplication step, so in a way, this is already optimized code.
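The shortcut described above can be written out in C. The sketch below (the function name mul64_lo is hypothetical) builds the low 64 bits of a 64 × 64-bit product from three 32 × 32 → 64-bit partial products and skips the high × high term, because that term only affects bits 64 to 127. It illustrates the technique; it is not the compiler's actual instruction sequence.

```c
#include <stdint.h>

/* Low 64 bits of a * b computed from 32-bit halves, like schoolbook
   multiplication done one 32-bit "digit" at a time. */
uint64_t mul64_lo(uint64_t a, uint64_t b)
{
    uint32_t a_lo = (uint32_t)a, a_hi = (uint32_t)(a >> 32);
    uint32_t b_lo = (uint32_t)b, b_hi = (uint32_t)(b >> 32);

    /* 1st multiplication: full 64-bit product of the two low words */
    uint64_t lo_lo = (uint64_t)a_lo * b_lo;

    /* 2nd and 3rd multiplications: cross products; only their low 32 bits
       survive the shift below, so any wrap-around here is harmless */
    uint64_t cross = (uint64_t)a_hi * b_lo + (uint64_t)a_lo * b_hi;

    /* a_hi * b_hi would land entirely above bit 63: skipped */
    return lo_lo + (cross << 32);
}
```

For any pair of operands the result matches the native a * b, which C defines as wrapping modulo 2^64 for unsigned types.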

Note

Basic math tells us that the multiplication of two n-bit-wide integer values produces a 2n-bit-wide integer result. The C compiler knows this, but if we fail to provide a recipient with enough room to contain the result of the operation, or if there is simply no larger integer type available, as is the case with the multiplication of two long long integers, it has no choice but to discard (quietly) the most significant bits of the result. It is our responsibility not to let this happen by choosing the right integer types for the range of values used in our application. If necessary, you can predetermine the number of bits in the result of any product by finding, for each operand, the position of its most significant non-zero bit (msb), counting from 1, and adding the two positions together. The product needs either exactly that many bits or one fewer. If the sum does not exceed the number of bits of the recipient type, the result is guaranteed to fit; if it does exceed it, you know there may be an overflow!
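The msb rule of thumb can be sketched for unsigned operands as follows (the function names are hypothetical). Note that the check is conservative: 2 × 2 = 4 fits in 3 bits even though the two bit lengths add up to 4, which is why exceeding the width only means an overflow is possible.

```c
#include <stdbool.h>
#include <stdint.h>

/* Position of the most significant 1 bit, counting from 1 (0 for x == 0). */
static unsigned bit_length(uint64_t x)
{
    unsigned n = 0;
    while (x) {
        n++;
        x >>= 1;
    }
    return n;
}

/* True when a * b is guaranteed to fit in an unsigned type `width` bits wide:
   the product needs bit_length(a) + bit_length(b) bits, or one fewer. */
bool product_surely_fits(uint64_t a, uint64_t b, unsigned width)
{
    return bit_length(a) + bit_length(b) <= width;
}
```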

Integer Divisions

If we perform a similar analysis of the division operation on integer variables, as in the previous examples, we will quickly confirm that char, short, and int types are all treated the same way.

The code produced by the compiler is extremely compact and uses a single div assembly instruction.

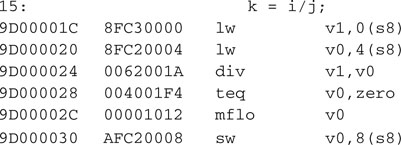

It is only when we analyze the case of a 64-bit division that we find the compiler using a different technique.

In fact, recompiling and inspecting the new code in the Disassembly Listing window reveals a misleadingly short sequence of instructions leading to a subroutine call (jal).

The subroutine itself will appear in the disassembly listing, after all the main function code. This subroutine is clearly separated and identified by a comment line that indicates it is part of a library, a module called libgcc2.c. The source for this routine is actually available as part of the complete documentation of the MPLAB C32 compiler and can be found in a subdirectory under the same directory tree where the MPLAB C32 compiler has been installed on your hard disk.

By selecting a subroutine in this case, the compiler has clearly made a compromise. Calling the subroutine costs a few extra instructions and some extra space on the stack. On the other hand, each additional division between long long integers in the program adds only a short call sequence instead of a whole new copy of the division code, so overall code space is preserved.
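To give an idea of why a 64-bit division is better placed in a subroutine than inlined, here is textbook restoring (shift-and-subtract) binary long division on unsigned 64-bit values. This is a swapped-in illustration of the kind of work such a routine must do, not the code found in libgcc2.c; the function name udiv64 is hypothetical, and the divisor must not be zero.

```c
#include <stdint.h>

/* Restoring shift-and-subtract division: n / d, remainder in *rem.
   Precondition: d != 0. */
uint64_t udiv64(uint64_t n, uint64_t d, uint64_t *rem)
{
    uint64_t q = 0, r = 0;

    for (int bit = 63; bit >= 0; bit--) {
        uint64_t carry = r >> 63;            /* bit lost by the shift below */
        r = (r << 1) | ((n >> bit) & 1u);    /* bring down the next dividend bit */
        if (carry || r >= d) {               /* the divisor fits: subtract it */
            r -= d;
            q |= (uint64_t)1 << bit;         /* and set this quotient bit */
        }
    }
    if (rem)
        *rem = r;
    return q;
}
```

Even this simple version loops 64 times, which makes it clear why the compiler prefers to keep a single copy of the routine and call it.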

Floating Point

Beyond integer data types, the MPLAB C32 compiler offers support for a few more data types that can capture fractional values—the floating-point data types. There are three types to choose from (see Table 4.2) corresponding to two levels of resolution: float, double, and long double.

Table 4.2 MPLAB C32 floating-point types comparison table.

| Type | Bits |
|---|---|
| float | 32 |
| double | 64 |
| long double | 64 |

Notice how the MPLAB C32 compiler, by default, allocates for both the double and the long double types the same number of bits, using the double precision floating-point format defined in the IEEE754 standard.
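For reference, the single precision (float) format splits its 32 bits into a sign bit, an 8-bit biased exponent, and a 23-bit mantissa, while the double precision format uses 1, 11, and 52 bits respectively. The sketch below decodes a float. It assumes float is a 32-bit IEEE 754 type, as it is on MPLAB C32 and most desktop compilers; the type and function names are hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Fields of an IEEE 754 single precision value. */
typedef struct {
    unsigned sign;      /* 1 bit: 1 = negative */
    unsigned exponent;  /* 8 bits, biased by 127 */
    uint32_t mantissa;  /* 23 bits; the leading 1 is implicit, not stored */
} float_fields;

float_fields split_float(float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);     /* reinterpret the bits safely */

    float_fields r;
    r.sign     = bits >> 31;
    r.exponent = (bits >> 23) & 0xFFu;
    r.mantissa = bits & 0x7FFFFFu;
    return r;
}
```

For instance, -2.5 = -1.25 × 2^1 decodes to sign 1, exponent 128 (1 + 127), and mantissa 0x200000 (the .25 fraction).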

Since the PIC32 doesn’t have a hardware floating-point unit (FPU), all operations on floating-point types must be coded by the compiler using floating-point arithmetic libraries that are considerably larger and more complex than any of the integer libraries. You should expect a major performance penalty if you choose to use these data types; but, again, if the problem calls for fractional quantities to be taken into account, the MPLAB C32 compiler certainly makes dealing with them easy.

Let’s modify our previous example to use floating-point variables, declaring i, j, and k as float.
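A sketch of the floating-point version follows; only the declaration changes. As before, the values and the function name float_product() are illustrative assumptions. The product 7006652 is below 2^24, so it is represented exactly in a float.

```c
/* Same example with single precision variables: on the PIC32, which has no
   FPU, the multiplication below is compiled into a library subroutine call. */
float float_product(void)
{
    float i, j, k;

    i = 1234;       /* integer literals converted to float */
    j = 5678;
    k = i * j;      /* software floating-point multiplication */
    return k;
}
```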

After recompiling and inspecting the Disassembly Listing window, you will immediately notice that the compiler has chosen to use a subroutine instead of inline code.

Changing the program again to use a double-precision floating-point type, long double, produces very similar results. Only the initial assignments seem to be affected, and all we can see is, once more, a subroutine call.

The C compiler makes using any data type so easy that we might be tempted to always use the largest integer or floating-point type available, just to stay on the safe side and avoid the risk of overflows and underflows. In embedded control, though, choosing the right data type for each application can be critical to balance performance and optimize the use of resources. To make an informed decision, we need to know more about the level of performance we can expect from data types of different precision.

Measuring Performance

Let’s use what we have learned so far about simulation tools to measure the actual relative performance of the arithmetic libraries (integer and floating-point) used by the MPLAB C32 compiler. We can start by using the software simulator’s (MPLAB SIM) built-in StopWatch tool, with the following code:

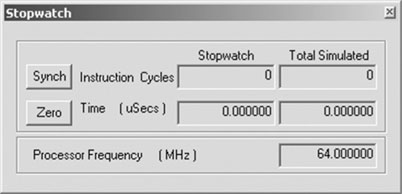
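A sketch of a test program in this spirit is shown below: one multiplication per data type, each on its own line so it can be stepped over individually while the StopWatch counts cycles. The choice of five types, the operand values, and the function name are assumptions. The variables are declared volatile so that the multiplications survive even if optimizations happen to be enabled; with optimizations disabled, as in this book, that is not strictly necessary.

```c
/* Operands and results for each data type under test. */
volatile int         i1 = 1234,    i2 = 5678,    i3;
volatile long long   ll1 = 1234,   ll2 = 5678,   ll3;
volatile float       f1 = 12.34f,  f2 = 56.78f,  f3;
volatile double      d1 = 12.34,   d2 = 56.78,   d3;
volatile long double ld1 = 12.34L, ld2 = 56.78L, ld3;

void multiply_all(void)
{
    i3  = i1 * i2;      /* 32-bit integer */
    ll3 = ll1 * ll2;    /* 64-bit integer */
    f3  = f1 * f2;      /* single precision floating point */
    d3  = d1 * d2;      /* double precision floating point */
    ld3 = ld1 * ld2;    /* long double: same format as double on MPLAB C32 */
}
```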

After compiling and linking the project, open the StopWatch window (Debugger | StopWatch) and position the window according to your preferences (see Figure 4.1). (Personally I like it docked to the bottom of the screen so that it does not overlap with the editor window and it is always visible and accessible.)

Zero the StopWatch timer and execute a Step Over command (Debugger | Step Over or press F8). When the simulator has finished updating the StopWatch window, you can manually record the execution time required to perform the integer operation. The simulator provides the time both as a cycle count and in microseconds, the latter obtained by dividing the cycle count by the simulated clock frequency, a parameter specified in the Debugger Settings (the Debugger | Settings | Osc/Trace tab).
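The conversion from cycles to time is simple arithmetic: time = cycles / f. The sketch below expresses it in microseconds; the function name is hypothetical, and the 72 MHz used in the example is only an illustrative value, not necessarily the clock configured in your Debugger Settings.

```c
#include <stdint.h>

/* Execution time in microseconds for a given cycle count and clock frequency. */
double cycles_to_us(uint64_t cycles, double clock_hz)
{
    return (double)cycles * 1e6 / clock_hz;
}
```

At 72 MHz, for example, 72 cycles take 1 µs and 36 cycles take 0.5 µs.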

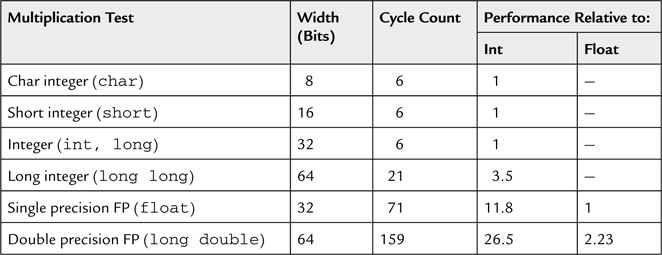

Proceed by placing the cursor on the next multiplication and executing a Run To Cursor command, or simply continue stepping over until you reach it. Again, zero the StopWatch, execute a Step Over, and record the second time. Continue until all five types have been tested (see Table 4.3).

Table 4.3 records the results (cycle counts) in the first column, with two more columns showing the relative performance ratios obtained by dividing the cycle count of each row by the cycle count recorded for two reference types. Don’t be alarmed if you happen to record different values; several factors can affect the measurement. Future versions of the compiler could use more efficient libraries, and optimization features could be introduced or enabled at the time of testing.

Keep in mind that this type of test lacks the rigor required of a true performance benchmark. What we are looking for here is just a basic understanding of the impact on performance that we can expect from choosing to perform our calculations using one data type versus another. We are looking for the big picture: relative orders of magnitude. For that purpose, the table we just obtained can already give us some interesting indications.

As expected, 32-bit integer operations appear to be the fastest, whereas long long integer (64-bit) multiplications are about four times slower. Single precision floating-point operations require more effort than integer operations: multiplying 32-bit floating-point numbers takes about one order of magnitude more cycles than multiplying 32-bit integers. From there, moving to double precision floating-point (64-bit) roughly doubles the number of cycles required.

So, when should we use floating-point, and when should we use integer arithmetic?

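Beyond the obvious, from the little we have learned so far we can perhaps extract a few rules of thumb: use 32-bit integers whenever their range is sufficient, reach for long long only when the range really requires it, and when fractional values are unavoidable, prefer float to double unless the extra precision is actually needed.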

Keep in mind also that floating-point types offer the largest value ranges but can also introduce approximations: many decimal fractions, such as 0.1, have no exact binary representation. As a consequence, floating-point types are not recommended for financial calculations. Use long long integers if necessary, and perform all operations in cents (instead of dollars and fractions).
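The following sketch shows the problem with a hypothetical pair of functions that add ten cents ten times: once as a double amount in dollars and once as a long long amount in cents. The double total ends up close to, but not exactly, 1.0, while the integer total is exactly 100 cents.

```c
/* Ten dimes in binary floating point: each 0.10 is already an approximation,
   and the rounding errors accumulate with every addition. */
double sum_dollars(void)
{
    double total = 0.0;
    for (int n = 0; n < 10; n++)
        total += 0.10;
    return total;               /* not exactly 1.0 */
}

/* The same sum kept in integer cents: every value is exact. */
long long sum_cents(void)
{
    long long total = 0;
    for (int n = 0; n < 10; n++)
        total += 10;
    return total;               /* exactly 100 cents, i.e. $1.00 */
}
```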

Debriefing

In this lesson, we have learned not only what data types are available and how much memory is allocated to them but also how they affect the resulting compiled program in terms of code size and execution speed. We used the MPLAB SIM simulator StopWatch tool to measure the number of instruction cycles required for the execution of a series of basic arithmetic operations. Some of the information we gathered will be useful to guide our actions in the future when we’re balancing our needs for precision and performance in embedded-control applications.

Notes for the Assembly Experts

The brave few assembly experts that have attempted to deal with floating-point numbers in their applications tend to be extremely pleased and forever thankful for the great simplification achieved by the use of the C compiler. Single or double precision arithmetic becomes just as easy to code as integer arithmetic has always been.

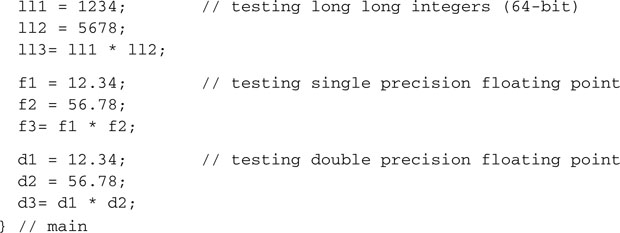

When using integer numbers, though, there is sometimes a sense of loss of control, because the compiler hides the details of the implementation and some operations might become obscure or much less intuitive and readable. Conversion and byte-manipulation operations, in particular, can induce some anxiety.

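The C language offers convenient mechanisms for covering all such cases via implicit type conversions, as in the assignment i = s; where i is a 32-bit int and s is a 16-bit short.

The value of s is transferred into the two LSBs of i, and the two MSBs of i are cleared. This assumes s is unsigned; a negative signed short is sign-extended instead, so the two MSBs of i are filled with ones.

Explicit conversions (called type casts) might be required in some cases where the compiler would otherwise flag the conversion as a potential mistake, as in s = (short) i;

(short) is a type cast that causes the two MSBs of i to be discarded as i is forced into a 16-bit value.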
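The conversions above, plus the classic shift-and-mask way to pick out a single byte, can be summarized in a short sketch. It uses the exact-width types from stdint.h so that the widths do not depend on the compiler; the function names are hypothetical, and get_byte() expects n between 0 (least significant byte) and 3.

```c
#include <stdint.h>

/* Implicit widening: the 16-bit value lands in the two low bytes. */
uint32_t widen(uint16_t s)
{
    uint32_t i = s;             /* the two upper bytes are cleared */
    return i;
}

/* Signed widening preserves the value: negatives get ones in the upper bytes. */
int32_t widen_signed(int16_t s)
{
    return s;
}

/* Explicit narrowing: the cast keeps the two low bytes, discards the rest. */
uint16_t narrow(uint32_t i)
{
    return (uint16_t)i;
}

/* Byte extraction with a shift; the cast to uint8_t acts as the mask. */
uint8_t get_byte(uint32_t i, unsigned n)
{
    return (uint8_t)(i >> (8 * n));
}
```

For example, narrow(0x12345678) yields 0x5678, and get_byte(0x12345678, 1) yields 0x56.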

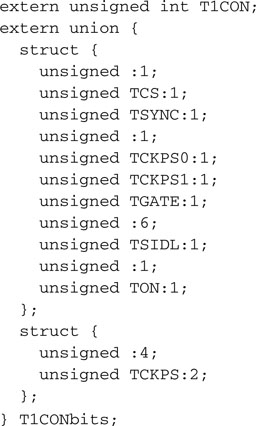

Bit fields are used to cover the conversion to and from integer types that are smaller than 1 byte. The PIC32 include files contain numerous examples of bit-field definitions for the manipulation of all the control bits in the peripherals’ SFRs.

The include file used in our project offers a good example: the Timer1 module control register T1CON is defined there, and each of its individual control bits is exposed in a structure defined as T1CONbits.

You can access each bit field using the “dot” notation: the name of the structure, T1CONbits, followed by a period and the name of the bit field.
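The sketch below shows the general pattern behind such definitions: a union that overlays a 32-bit word with a structure of bit fields. The type name t1con_t and the exact field list are hypothetical, modeled loosely on a Timer1 control register; always check the real layout in your device’s include file. Bit-field ordering is implementation-defined; GCC-based compilers on little-endian targets, such as the PIC32, allocate the first field at bit 0.

```c
#include <stdint.h>

/* Hypothetical register layout, loosely modeled on T1CON. */
typedef union {
    uint32_t w;                 /* the whole register as one word */
    struct {
        unsigned       : 1;     /* bit 0: unused */
        unsigned TCS   : 1;     /* bit 1: clock source select */
        unsigned TSYNC : 1;     /* bit 2: external clock synchronization */
        unsigned       : 1;     /* bit 3: unused */
        unsigned TCKPS : 2;     /* bits 4-5: prescaler select */
        unsigned       : 1;     /* bit 6: unused */
        unsigned TGATE : 1;     /* bit 7: gated time accumulation */
        unsigned       : 7;     /* bits 8-14: not modeled here */
        unsigned TON   : 1;     /* bit 15: timer on */
    } bits;
} t1con_t;
```

Setting fields with the dot notation then updates the corresponding bits of the word: after `t.bits.TON = 1; t.bits.TCKPS = 3;` on a zeroed variable t, t.w reads 0x8030.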

Notes for the 8-Bit PIC® Microcontroller Experts

The PIC microcontroller user who is familiar with the 8-bit PIC microcontrollers and their respective compilers will notice a considerable improvement in performance, both with integer arithmetic and with floating-point arithmetic. The 32-bit ALU available in the PIC32 architecture is clearly providing a great advantage by manipulating up to four times the number of bits per cycle, but the performance improvement is further accentuated by the availability of up to 32 working registers, which make the coding of critical arithmetic routines and numerical algorithms more efficient.

Notes for the 16-Bit PIC and dsPIC® Microcontroller Experts

Users of the MPLAB C30 compiler will probably have noticed by now that the new MPLAB C32 compiler assigns different widths to common integer types. For example, int and short used to be synonyms for 16-bit integers with the MPLAB C30 compiler. Although short is still a 16-bit integer, for the MPLAB C32 compiler int is now really a synonym for the long integer type. In other words, int has doubled its size. You might be wondering what happens to the portability of code when such a dramatic change is factored in.

The answer depends on which way you are looking at the problem. If you are porting the code “up,” or, in other words, you are taking code written for a 16-bit PIC architecture to a 32-bit PIC architecture, most probably you are going to be fine. Global variables will use a bit more RAM space and the stack might grow as well, but it is also likely that the PIC32 microcontroller model you are going to use has much more RAM to offer. Since the new integer type is larger than that used in the original code, if the code was properly written, you don’t have to worry about overflows and underflows.

On the contrary, if you are planning on porting some code “down,” even if this is only being contemplated as a future option, you might want to be careful. If you write code for a PIC32 and rely on the int type being 32 bits wide, you might have a surprise later when the same code is compiled by the MPLAB C30 compiler, which treats int as a 16-bit type. The best way to avoid any ambiguity about the width of your integers is to use exact-width types.

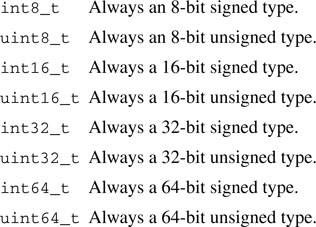

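A special set of exact-width integer types is offered by the inttypes.h library. They include int8_t, int16_t, int32_t, and int64_t, together with their unsigned counterparts uint8_t, uint16_t, uint32_t, and uint64_t.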

If you use them when necessary, you can make your code more portable but also more readable because they will help highlight the portions of your code that are dependent on integer size.
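Here is a sketch of exact-width types at work. The hypothetical scale_reading() function widens a 16-bit value before multiplying, so the product is computed in 32 bits whether int is 16 bits wide (MPLAB C30) or 32 bits wide (MPLAB C32). The compile-time check uses C11 _Static_assert, which assumes your compiler accepts it; the PRId32 macro from inttypes.h supplies the matching printf format.

```c
#include <inttypes.h>   /* exact-width types (via stdint.h) and PRId32 */
#include <stdio.h>

/* Build fails loudly if the width assumption is ever broken. */
_Static_assert(sizeof(int32_t) == 4, "int32_t must be 4 bytes");

/* Widen before multiplying: a 16 x 16-bit product needs up to 32 bits. */
int32_t scale_reading(int16_t raw, int16_t gain)
{
    return (int32_t)raw * gain;
}

/* PRId32 expands to the right printf conversion for int32_t on each compiler. */
void print_reading(int32_t value)
{
    printf("reading = %" PRId32 "\n", value);
}
```

With int only 16 bits wide, writing raw * gain without the cast would overflow for a call such as scale_reading(30000, 4).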

Note

Another useful and sometimes misunderstood integer type is size_t, defined in the stddef.h library. It is meant to be used every time you need a variable to contain the size of an object in memory expressed in bytes. Every ANSI C compiler guarantees it to have the right range so that it can always contain the size of the largest object possible for a given architecture. As expected, the sizeof operator yields a value of type size_t, and most of the functions in the string.h library make ample use of it as well.
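As a small example of size_t in everyday use, the hypothetical helper below copies a string into a fixed-size buffer without overrunning it. Every size and length involved is a size_t: the buffer size (typically obtained with sizeof) and the string length returned by strlen().

```c
#include <stddef.h>
#include <string.h>

/* Copy at most dst_size - 1 characters of src into dst and terminate it.
   Returns the number of characters actually copied. */
size_t copy_bounded(char *dst, size_t dst_size, const char *src)
{
    size_t len = strlen(src);           /* strlen() returns a size_t */

    if (dst_size == 0)
        return 0;                       /* no room even for the terminator */
    if (len >= dst_size)
        len = dst_size - 1;             /* truncate to fit */
    memcpy(dst, src, len);              /* memcpy() takes a size_t count */
    dst[len] = '\0';
    return len;
}
```

For example, copying "NUMB3RS" into a 4-byte buffer passed as `copy_bounded(buf, sizeof buf, "NUMB3RS")` stores "NUM" and returns 3.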

Tips & Tricks

Math Libraries

The MPLAB C32 compiler supports several standard ANSI C libraries, including the math library (math.h), which provides functions such as sqrt(), sin(), cos(), exp(), and log().
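Without an FPU, functions such as sin() must be computed entirely in software. One classic way to approximate them is a truncated Taylor series, the technique described at the Wikipedia link listed at the end of this chapter. The sketch below is only an illustration: it is not necessarily how the MPLAB C32 math library computes sin(), and it is accurate only for small |x|. The function name is hypothetical.

```c
/* Truncated Taylor (Maclaurin) series: sin(x) = x - x^3/3! + x^5/5! - ...
   `terms` is the number of series terms to add. */
double taylor_sin(double x, int terms)
{
    double term = x;        /* first term: x^1 / 1! */
    double sum = 0.0;

    for (int n = 0; n < terms; n++) {
        sum += term;
        /* next term = current term * (-x^2) / ((2n + 2)(2n + 3)) */
        term *= -x * x / ((2.0 * n + 2.0) * (2.0 * n + 3.0));
    }
    return sum;
}
```

With seven terms (up to x^13/13!), taylor_sin(0.5, 7) agrees with sin(0.5) ≈ 0.4794255386 to well beyond ten decimal places.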

Complex Data Types

The MPLAB C32 compiler supports complex data types as an extension of both integer and floating-point types. Here is an example declaration for a single precision floating-point type:

float __complex__ z;

Note

Notice the use of a double underscore before and after the keyword complex.

The variable z so defined now has a real and an imaginary part that can be individually addressed using, respectively, the syntax __real__ z and __imag__ z.

Similarly, declaring a variable as int __complex__ produces a complex variable of 32-bit integer type.

Complex constants are easily created by adding the suffix i or j to a numeric constant, as in 3.0fi or 2j.

All standard arithmetic operations (+,-,*,/) are performed correctly on complex data types. Additionally, the ~ operator produces the complex conjugate.
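Putting these pieces together, here is a small sketch that relies on the GCC-style complex extensions described above: the double-underscore keywords, __real__ and __imag__, the i suffix, and ~ for the conjugate. It was written against GCC, on which MPLAB C32 is based, so treat it as an assumption that your compiler version accepts every construct; the function names are hypothetical.

```c
/* Multiply a complex value by its conjugate: (a + bi)(a - bi) = a^2 + b^2. */
float __complex__ times_conjugate(float __complex__ z)
{
    return z * ~z;              /* ~ produces the complex conjugate */
}

/* Squared magnitude of a complex integer, from its real and imaginary parts. */
int norm2_int(int __complex__ c)
{
    return __real__ c * __real__ c + __imag__ c * __imag__ c;
}
```

For example, with `float __complex__ z = 3.0f + 4.0fi;`, times_conjugate(z) has a real part of 25 and an imaginary part of 0, and for the integer constant 2 + 3i, norm2_int() returns 13.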

Complex types could be pretty handy in some types of applications, making the code more readable and helping avoid trivial errors. Unfortunately, as of this writing, the MPLAB IDE support of complex variables during debugging is only partial, giving access only to the “real” part through the Watch window and the mouse-over function.

Exercises

Books

Britton, Robert. MIPS Assembly Language Programming (Prentice Hall, 2003). It might seem strange to you that I am suggesting a book about assembly programming. Sure, we set off with the intention to learn programming in C, but if you’re like me, you won’t be able to resist the curiosity and you will want to learn the assembly of the PIC32 MIPS core as well.

Links

http://en.wikipedia.org/wiki/Taylor_series. If you are curious, this site shows how the C compiler can approximate some of the functions in the math library.