CHAPTER 8

Monitoring and Analysis

In this chapter, you will learn about

• Events of interest

• Intrusion detection systems

• Security alerts and false positives

• Comparing network-based and host-based intrusion detection systems

• Comparing intrusion detection systems and intrusion prevention systems

• Detecting and preventing attacks

• Wireless intrusion detection and prevention systems

• Security information and event management tools

• Performing security testing and assessments

• Vulnerability assessments

• Penetration tests

Operating and Maintaining Monitoring Systems

Monitoring systems within an information technology (IT) network helps to prevent, detect, and correct potential security incidents. Intrusion detection systems provide continuous monitoring of a network and report events of interest that might be an incident. Intrusion prevention systems go a step further and prevent malicious traffic from entering a network, thereby preventing potential incidents. The following sections describe these systems in more depth.

Events of Interest

Monitoring systems include logging capabilities, and they log a wide variety of events. Events are observable occurrences in a system or network and are typically recorded in a log. Events of interest are known events that an organization wants to monitor. Some are potential security incidents that need to be investigated. Others are events that need to be monitored to ensure compliance with laws or regulations.

A protocol analyzer (sometimes called a packet sniffer or just a sniffer) can capture packets sent through a network. It’s also possible to configure it to capture only specific packets based on what traffic an organization wants to monitor. Packets are stored within a file commonly called a packet capture or a packet dump. Tools can then be used to analyze the packet capture to detect potential events of interest.

The following list describes some common events of interest:

• Anomalies An anomaly is anything that deviates from normal behavior. Anomaly-based detection methods (described later in this chapter) capture a baseline (sometimes called a security baseline) of activity and then compare current activity against the baseline to detect, log, and send an alert when a system detects an anomaly.

• Intrusions An intrusion typically indicates unauthorized access into a system or network. One of the most common types of intrusions occurs when an untrained user clicks a malicious link or opens a malicious attachment within a phishing e-mail. When successful, attackers typically use the compromised system to gain more and more access within the network.

• Data exfiltration Attackers often capture data within a network and send it out of the network so that the criminals can harvest it. This can include files, e-mail from e-mail servers, databases, and any other data stored within the network. Attackers typically encrypt this data before exfiltrating it, so a jump in encrypted data leaving the network is very likely an event of interest that needs to be investigated.

• Unauthorized changes Change management processes (covered in Chapter 10) help prevent outages caused by unauthorized changes. Even if an unauthorized change doesn’t cause an outage, it should be investigated. Many monitoring systems can detect changes and log them.

• Compliance monitoring Many organizations need to monitor systems and networks to ensure they are in compliance with various laws and regulations. As an example, an organization that handles health-related data needs to comply with the Health Insurance Portability and Accountability Act (HIPAA). A continuous monitoring system can detect events of interest for this law (along with other laws and regulations) and log them.

Intrusion Detection Systems

Intrusion detection systems (IDSs) provide continuous monitoring of networks and hosts to help protect them from attacks. The goal is to detect an attack as it occurs and raise an alert so that administrators can take steps to stop the attack.

The IDS monitors and records events in a log. It provides alerts (such as via e-mail or a text message) when it detects potentially malicious events. It can also take action to modify the environment to stop the attack and reduce its effects. For example, it might modify an access control list (ACL) on a router to block traffic or close connections on a server.

IDSs are either network-based or host-based. A network-based IDS (NIDS) monitors network traffic, while a host-based IDS (HIDS) monitors traffic for an individual system such as a server or workstation.

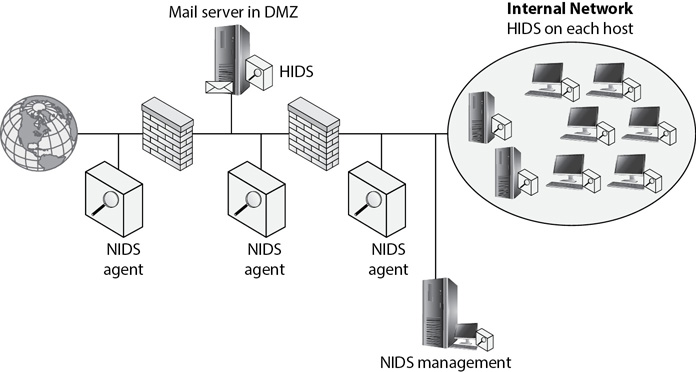

Figure 8-1 shows a network with both a NIDS and a HIDS. Notice that NIDS agents are located on different network segments, such as before the DMZ, inside the DMZ, and on the internal network. HIDS software is installed on each individual server and workstation.

Figure 8-1 Intrusion detection systems protecting a network and systems

A NIDS collects information from the NIDS agents. Administrators install these agents on servers, routers, firewalls, or any other device that can monitor potentially malicious traffic coming into the network. In some cases, the NIDS agent forwards logs from these event source systems, and in other cases, the NIDS agent creates its own log and forwards it to the NIDS management system. A source system is any device that logs events. This includes devices such as firewalls, routers, proxy servers, and security appliances.

IDS Alerts

When an IDS detects an attack, it logs it, and typically reports it as an alert or an alarm. These reports come in many forms. Many IDSs include the ability to send an alert via an e-mail or a text message.

It’s also possible to display the alert. As an example, a network operations center (NOC) typically has a wall of monitors in front of administrators and technicians. The administrators and technicians work on various tasks with desktop computers, but frequently glance at the monitors, and alerts displayed on these monitors will get their attention.

An alert or an alarm doesn’t necessarily indicate an attack is under way. Personnel investigate the alerts to determine if they are valid. Unfortunately, it’s common for an IDS to give false positives. In other words, an IDS might indicate a possible attack when an attack isn’t under way at all.

An IDS often triggers an alert when an event reaches a specific threshold. As an example, consider a port scan attack, where an attacker attempts to scan a system’s ports to identify open ports. If the scan detects that port 80 is open, the attacker knows that the system is probably a web server running Hypertext Transfer Protocol (HTTP) because port 80 is the well-known port for HTTP. A port scan will scan a list of ports and record what ports elicited a response (and were open) and what ports did not elicit a response.

If a remote system scans one port, that is probably not an attack. However, if an unknown external system scans all 1,024 well-known ports in a 60-minute period, it is very likely an attack.

Here’s a trick question. If one port scan in an hour is not an attack, and 1,024 port scans in an hour is an attack, what is the lowest number between 1 and 1,024 that most likely indicates an attack? In other words, what should you set the threshold to so that the IDS detects a port scan attack?

There just isn’t a good answer to that question. Port scanners allow attackers to randomize the order of the ports they scan and set delays between queries. If the attacker sets the delay to five minutes, the scanner does 12 port scans in an hour. If you set the threshold to 15, your system would be under attack but the IDS would not detect it.

In contrast, if you configure the threshold to 2 so that the IDS sends an alert if it detects two port scans in an hour, it will probably create many false positives. Your IDS will be known as the IDS that cries wolf and administrators will ignore it. Many administrators consider a threshold of two port scans in a minute to be too low because it will create so many false positives.

When choosing a number for this type of threshold, administrators recognize that the IDS will probably generate some false positives. They would rather see some false positives than configure the threshold so high that the IDS doesn’t detect attacks in progress.
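The threshold trade-off above can be sketched in code. This is a minimal illustration, not how any particular IDS implements it: the class name `PortScanDetector`, the one-hour sliding window, and counting distinct ports per source IP address are assumptions chosen to mirror the example.

```python
from collections import defaultdict, deque


class PortScanDetector:
    """Count distinct ports probed by each source IP within a sliding window.

    threshold and window_seconds are illustrative tuning knobs, not vendor defaults.
    """

    def __init__(self, threshold: int, window_seconds: int = 3600):
        self.threshold = threshold
        self.window = window_seconds
        # source IP -> deque of (timestamp, port) probes still inside the window
        self.probes = defaultdict(deque)

    def observe(self, source: str, port: int, timestamp: float) -> bool:
        """Record one probe and return True if the source has reached the threshold."""
        recent = self.probes[source]
        recent.append((timestamp, port))
        # Discard probes that are older than the window.
        while recent and recent[0][0] <= timestamp - self.window:
            recent.popleft()
        distinct_ports = {p for _, p in recent}
        return len(distinct_ports) >= self.threshold


# A slow scan: 12 ports, one every five minutes (300 seconds).
slow_scan = list(zip(range(20, 32), range(0, 3600, 300)))

lenient = PortScanDetector(threshold=15)
print(any(lenient.observe("203.0.113.7", port, t) for port, t in slow_scan))  # False

strict = PortScanDetector(threshold=2)
print([strict.observe("203.0.113.7", port, t) for port, t in slow_scan][:3])  # [False, True, True]
```

Replaying the slow scan described above against a threshold of 15 never produces an alert, while a threshold of 2 alerts as soon as any source touches a second port, which is exactly the false-positive problem administrators face when tuning.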

Network-based Intrusion Detection Systems

A NIDS monitors the network traffic for any type of attack. It typically has several nodes or agents stationed around the network. These agents are installed on routers, firewalls, and network appliances such as unified threat management (UTM) devices. Each of these nodes monitors the traffic and reports its findings to a NIDS management server.

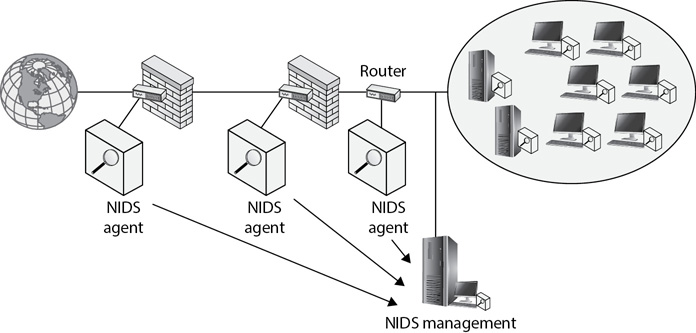

Figure 8-2 shows one possible configuration. It has NIDS agents installed on two firewalls and one router, and each of these agents reports events to the NIDS management server. The server monitors the traffic, and if it detects an attack, it provides an alert to administrators or security personnel.

Figure 8-2 Network-based intrusion detection system with a NIDS management server

As discussed in Chapter 4, many routers and switches support port mirroring. As a reminder, port mirroring sends a copy of all traffic passing through a device to a specific port, and this is one way for agents to send traffic to a NIDS management server. Administrators configure the device to use port mirroring and connect the NIDS management server to the mirrored port.

One of the primary benefits of a NIDS is that the monitoring is transparent. Attackers don’t have any clear indication that a NIDS is monitoring their activity. In contrast, it’s easier for attackers to detect and disable a HIDS.

Host-based Intrusion Detection Systems

A HIDS is installed on an individual system such as a server or workstation. It primarily monitors activity on the system such as system processes and running applications. It can only monitor activity on the host; it is not able to monitor overall network activity as a NIDS does. However, a HIDS can look at patterns on a host’s disk and within its random access memory (RAM) in ways that a NIDS cannot.

Some organizations install a HIDS on every system in the network, while other organizations install them only on a few systems such as critical servers. The key is that a HIDS monitors systems only, while NIDS agents are installed on network components and monitor network activity. While antivirus (AV) software isn’t technically a HIDS agent, many AV applications monitor the same resources (memory, disk usage, processes, and more) as a HIDS agent.

There are some drawbacks associated with a HIDS. First, the software can be very processor-intensive, slowing down an otherwise powerful computer. Second, installing a HIDS on each of your internal systems can be quite expensive. Last, many attackers can detect a HIDS, then disable it or erase the HIDS’s logs.

Intrusion Prevention Systems

An intrusion prevention system (IPS) is an advanced implementation of an IDS. IPSs have been around long enough that they are often grouped together with IDSs. For example, National Institute of Standards and Technology (NIST) Special Publication (SP) 800-94 is titled Guide to Intrusion Detection and Prevention Systems (IDPS).

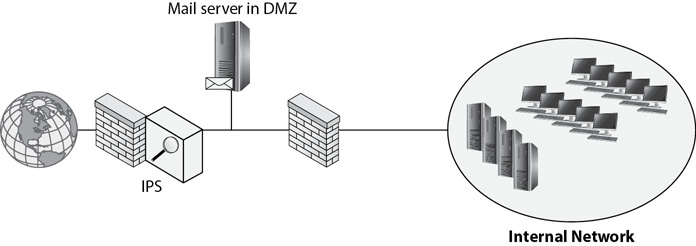

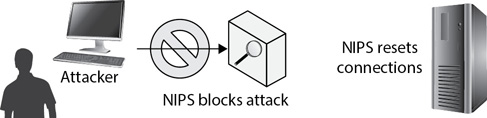

However, there is a key difference between an IDS and an IPS. Specifically, a network-based IPS is placed inline with the traffic. For example, Figure 8-3 shows an IPS placed at the boundary between the network and the Internet. It monitors all traffic going in and out of a network and actively blocks potentially malicious traffic.

Figure 8-3 Intrusion prevention system placed inline

Although Figure 8-3 doesn’t show additional IDSs such as HIDSs on individual systems, they can be used with an IPS as part of a defense-in-depth strategy. An IPS can be host-based (as a HIPS) or network-based (as a NIPS), just as a traditional IDS can be host-based or network-based.

Notice that the IPS is placed inline with the firewall and that all traffic to and from the network goes through both the firewall and the IPS. The firewall protects a network from some malicious traffic, and the IPS helps protect the network from attacks that get through the firewall. Just as an IDS can modify the environment, an IPS can also modify the environment. For example, it might modify the ACL on a router if it detects a port scan from a specific IP address.

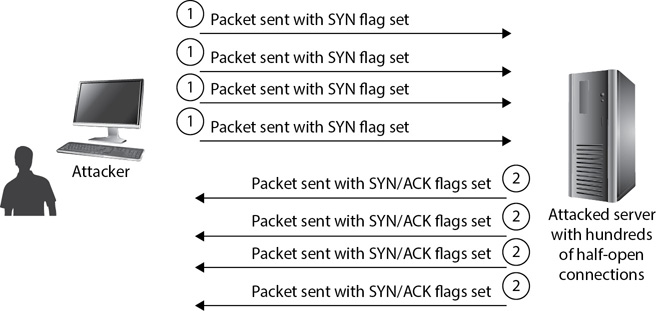

As another example, consider Figure 8-4. It shows a server being attacked with a SYN flood attack. Chapter 5 covers SYN flood attacks, but as a reminder, an attacker disrupts the Transmission Control Protocol (TCP) three-way handshake process, leaving half-open connections on a server. The attacker sends the first SYN packet, the server responds with the SYN/ACK packet, and then the server waits for the ACK packet. However, the attacker never replies with the ACK packet. The server can have hundreds of half-open connections waiting for the third packet in each.

Figure 8-4 SYN flood attack

The IPS can detect this attack and block any more traffic from the attacker by modifying the ACL on the router to stop the attack. It can also close the half-open connections to free up the resources on the server that are being consumed by the attack, as shown in Figure 8-5.

Figure 8-5 IPS blocking a SYN flood attack
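The detection and response shown in Figures 8-4 and 8-5 can be modeled as a toy simulation. This sketch assumes the IPS sees each TCP packet's source address, source port, and flag, and it treats more than a configurable number of half-open handshakes from one source as a SYN flood; the class name and threshold are hypothetical. Real SYN floods frequently use spoofed source addresses, so per-source blocking is only one of several defenses.

```python
from collections import defaultdict


class SynFloodMonitor:
    """Toy inline-IPS model that tracks half-open TCP handshakes per source IP.

    max_half_open and the block/cleanup actions are illustrative assumptions.
    """

    def __init__(self, max_half_open: int):
        self.max_half_open = max_half_open
        # source IP -> source ports that sent SYN but never completed with ACK
        self.half_open = defaultdict(set)
        # sources the IPS would add to the router ACL
        self.blocked = set()

    def packet(self, src: str, sport: int, flag: str) -> str:
        """Process one packet and return the IPS decision: allow, block, or drop."""
        if src in self.blocked:
            return "drop"
        if flag == "SYN":
            # The server answers with SYN/ACK and waits for the final ACK.
            self.half_open[src].add(sport)
            if len(self.half_open[src]) > self.max_half_open:
                self.blocked.add(src)
                # Close this source's half-open connections to free server resources.
                del self.half_open[src]
                return "block"
        elif flag == "ACK" and src in self.half_open:
            # Handshake completed, so this connection is no longer half-open.
            self.half_open[src].discard(sport)
        return "allow"


ips = SynFloodMonitor(max_half_open=3)
print([ips.packet("203.0.113.5", port, "SYN") for port in range(40000, 40005)])
# ['allow', 'allow', 'allow', 'block', 'drop']
```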

It’s worth emphasizing the differences between an IPS and an IDS. An IDS logs the event and provides notification. All IDSs and IPSs have this ability. The IPS is placed inline with the traffic and can take action to modify the environment and prevent the attack from entering the network.

Also, it’s important to realize that neither an IDS nor an IPS launches attacks against an attacker. An IPS will change the environment to block the attack, but does not take any aggressive action against the attacker. Some organizations have security professionals with specialized skills who follow attackers through the Internet and launch attacks on them, but these skills are well beyond the scope of basic IT security professionals.

One of the basic risks of attacking an attacker is drawing attention to your organization. If an attacker attempts an attack and fails, that attacker may simply move on to another, easier target. However, if an attacker is attacked by an organization, that attacker may take it personally and launch protracted attacks against the company, holding a grudge for years. Additionally, there may be legal or liability issues with an organization launching an attack on a suspected attacker. Worse, because many attackers spoof their IP addresses or launch attacks through zombies controlled via a botnet, you may be attacking an unsuspecting victim.

Detection Methods

IDSs and IPSs use one of the following types of detection methods to detect attacks: signature-based, anomaly-based, or a hybrid of the two. Any type of IDS or IPS can use these methods.

Signature-based

A signature-based (sometimes called knowledge-based) detection method is similar in concept to AV signatures. Recall that malware has unique characteristics, called signatures, that identify it. AV software has a database of signatures and compares potential malware against the database. If it gets a match, the software identifies the malware and attempts to clean or delete it.

Similarly, many attacks have unique characteristics documented in signature files. The IDS/IPS uses these signature files to identify and detect attacks. The vendor supplying the IDS must regularly release updated signature files, and the organization using the IDS must regularly apply them, so that the IDS can detect newer attacks.

This shows a primary drawback with signature-based detection: It’s good with known attack tools, but attacks may go unnoticed if an attacker uses modified attack methods.
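A minimal sketch of signature matching shows both the idea and its drawback. The two signatures below are simplified, hypothetical patterns written for this example, not rules from any real signature database.

```python
import re

# Hypothetical signature database: signature name -> byte pattern.
SIGNATURES = {
    "directory-traversal": re.compile(rb"\.\./\.\./"),
    "sql-injection-tautology": re.compile(rb"' OR '1'='1"),
}


def match_signatures(payload: bytes) -> list:
    """Return the names of all signatures whose pattern appears in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern.search(payload)]


print(match_signatures(b"GET /../../etc/passwd HTTP/1.1"))      # ['directory-traversal']
print(match_signatures(b"GET /..%2f..%2fetc/passwd HTTP/1.1"))  # []
```

The URL-encoded variant (`%2f` is an encoded `/`) can request the same file but slips past the literal pattern, which is why signature files need constant updating.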

Anomaly-based

Another method of detection is anomaly-based (sometimes called behavior-based). Administrators configure the device to monitor normal behavior to create a baseline. The device then observes activity and compares it to the baseline. If the current activity differs significantly from the baseline, the device issues an alert on the activity.

Anomaly-based systems go by many names, including behavior-based and heuristics-based. The important point is that they start by identifying normal activity and documenting this in a baseline, and then they detect abnormal activity that is outside the normal range of the baseline.
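One simple way to express a baseline is as the mean and standard deviation of a metric measured during normal operation. The sketch below assumes a single numeric metric (such as outbound connections per hour) and flags values more than three standard deviations from the mean; the metric, the sample data, and the factor of three are illustrative choices, and commercial products use far richer models.

```python
import statistics


def build_baseline(samples):
    """Summarize normal activity as (mean, population standard deviation)."""
    return statistics.mean(samples), statistics.pstdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """Flag a value more than k standard deviations away from the baseline mean."""
    mean, stdev = baseline
    if stdev == 0:
        # Perfectly constant baseline: any change at all is a deviation.
        return value != mean
    return abs(value - mean) > k * stdev


# Outbound connections per hour observed during normal operation (sample data).
baseline = build_baseline([100, 110, 95, 105, 90])
print(is_anomalous(130, baseline))  # True
print(is_anomalous(115, baseline))  # False
```

Here the baseline mean is 100 with a standard deviation of about 7.07, so anything beyond roughly 21 connections from the mean is reported.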

Artificial intelligence (AI) has advanced quite a bit in recent years and some companies are claiming that their anomaly-based systems are now using AI. As an example, IBM’s Watson Explorer uses a cognitive miner and machine learning–based cognitive capabilities. Combined, these abilities allow Watson Explorer to analyze terabytes of information to detect patterns and build solutions. The goal is to provide administrators with actionable data as quickly as possible.

A false positive is when an IDPS detects an attack but an attack is not occurring. In contrast, a false negative is when an attack is occurring but the IDPS does not detect it. All IDPSs are susceptible to false positives, but anomaly-based systems typically generate more false positives than signature-based systems. For example, if an organization has a successful e-mail campaign resulting in a large customer response, this increased customer activity could be interpreted as an attack. Some IDPSs can be tuned to balance the acceptable level of false positives versus false negatives.
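Tuning is easier to reason about with numbers. The sketch below assumes each past event has been labeled after investigation as a real attack or not, and counts false positives and false negatives at a given alert threshold. The anomaly scores are hypothetical values, not output from any real product.

```python
# Hypothetical past events: (anomaly score assigned by the IDPS, was it really an attack?)
LABELED_EVENTS = [
    (0.20, False),
    (0.40, False),
    (0.55, False),  # e.g., traffic spike from a successful e-mail campaign
    (0.60, True),
    (0.70, False),
    (0.90, True),
]


def count_errors(events, threshold):
    """Alert when score >= threshold; return (false_positives, false_negatives)."""
    false_positives = false_negatives = 0
    for score, is_attack in events:
        alerted = score >= threshold
        if alerted and not is_attack:
            false_positives += 1
        elif not alerted and is_attack:
            false_negatives += 1
    return false_positives, false_negatives


for threshold in (0.3, 0.5, 0.8):
    print(threshold, count_errors(LABELED_EVENTS, threshold))
# 0.3 (3, 0)
# 0.5 (2, 0)
# 0.8 (0, 1)
```

Raising the threshold from 0.5 to 0.8 eliminates the false positives in this sample but misses one real attack, which is the balance the paragraph above describes.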

If the monitored system is modified, it’s important to update the baseline. For example, if you are using a NIDS and you install software that increases network activity, the NIDS might generate many false positives if the baseline isn’t updated.

Hybrid

A hybrid detection method simply uses a combination of both signature-based and anomaly-based detection methods. It’s common for many IDSs and IPSs to use a combination of both detection methods to increase their overall performance.

Whitelisting

Although it isn’t formally identified as a detection method for IDSs, the use of whitelisting is rising as an alternative to host-based IDSs. A whitelist detects abnormal activity by explicitly identifying authorized activity; anything not on the list is treated as unauthorized. For example, an application whitelist identifies approved applications and processes. A system blocks applications and processes that aren’t on the list.

Similarly, a switch can have a listing of system media access control (MAC) addresses authorized to communicate through a switch. Any systems not on the list that attempt to communicate through the switch raise an alarm. This strategy isn’t feasible for a large number of computers, but it can be useful for smaller networks. Still, it’s important to realize that attackers can spoof MAC addresses. Whitelisting can be part of an overall strategy, but shouldn’t be a primary method.
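A MAC address whitelist check is short enough to sketch. The addresses here are made up, and normalizing the common notations (colons, hyphens, and dotted groups) before comparing is an assumption about how a tool might store them. As the text warns, this check cannot catch an attacker who spoofs an authorized address.

```python
# Hypothetical whitelist for a small network.
AUTHORIZED_MACS = {"00:1a:2b:3c:4d:5e", "00:1a:2b:3c:4d:5f"}

HEX_DIGITS = set("0123456789abcdef")


def normalize_mac(mac: str) -> str:
    """Convert 00-1A-2B-..., 001a.2b3c.4d5e, or 00:1a:... to lowercase colon form."""
    digits = "".join(c for c in mac.lower() if c in HEX_DIGITS)
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def is_authorized(mac: str) -> bool:
    """Return True for whitelisted addresses; False means the switch should raise an alarm."""
    return normalize_mac(mac) in AUTHORIZED_MACS


print(is_authorized("00-1A-2B-3C-4D-5E"))  # True
print(is_authorized("66:77:88:99:aa:bb"))  # False
```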

Wireless Intrusion Detection and Prevention Systems

A wireless intrusion detection system (WIDS) works similarly to an IDS, but for wireless networks, and a wireless intrusion prevention system (WIPS) works similarly to an IPS, but for wireless networks. They monitor wireless network traffic, looking for suspicious activity.

Chapter 5 mentions WIDS and WIPS as countermeasures against wireless attacks. They are effective at detecting common attacks, such as from rogue access points, evil twins, and MAC spoofing. They use signature-based detection methods to detect traffic patterns from known attacks, and most include anomaly-based detection methods too. Additionally, they typically have the ability to inspect access points and identify configuration problems.
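A WIDS sensor reports the access points it hears, identified by their BSSID (the radio's MAC address) and SSID (the network name). The sketch below compares those reports against a hypothetical inventory of authorized access points. An unknown radio advertising the organization's SSID suggests an evil twin, and an authorized radio with the wrong SSID suggests a configuration problem. Telling a rogue access point plugged into the internal network apart from a neighbor's access point usually needs additional wired-side information, so this sketch labels those cases only as unknown.

```python
# Hypothetical inventory of authorized access points: BSSID -> expected SSID.
AUTHORIZED_APS = {
    "a4:5e:60:00:00:01": "CorpWiFi",
    "a4:5e:60:00:00:02": "CorpWiFi",
}


def classify_ap(bssid: str, ssid: str) -> str:
    """Classify one access point reported by a wireless sensor."""
    bssid = bssid.lower()
    expected_ssid = AUTHORIZED_APS.get(bssid)
    if expected_ssid is not None:
        # Known radio: check that its configuration still matches the inventory.
        return "authorized" if ssid == expected_ssid else "misconfigured"
    if ssid in AUTHORIZED_APS.values():
        # Unknown radio advertising the organization's network name.
        return "possible-evil-twin"
    # Could be a rogue AP on the internal network or just a neighbor's AP.
    return "unknown"


print(classify_ap("de:ad:be:ef:00:01", "CorpWiFi"))  # possible-evil-twin
```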

Analyze Monitoring Results

An important element of continuous monitoring is regular analysis of the monitored results. Administrators commonly use automated tools to assist in the analysis. The following list describes some common methods of analyzing the data:

• Event data analysis Individual events must be analyzed to determine if they are actual incidents. Administrators often use a variety of tools in the analysis. This includes logs on servers, routers, and firewalls in addition to logs collected by the IDS or IPS. In some cases, administrators need to collect additional data, such as by using a sniffer to capture and analyze the packets during an active attack, or the memory contents of an attacked system.

• Reviewing security analytics and metrics Administrators attempt to pull relevant data from the logs to create actionable information. For example, it’s possible to measure the number of false positives for each week. These findings may show that the alert threshold is too high or too low and needs to be adjusted. Many systems include dashboards that make it easy to pull this data and view it in a timeline format.

• Identifying trends Metrics help identify a baseline and changes to that baseline. For example, an organization might be experiencing a steady increase in denial of service attacks. Analysts can compare these attacks to performance issues with the attacked servers to determine if the security controls are providing adequate protection.

• Creating graphics for visualization You don’t necessarily need pictures, but many tools allow you to create graphs and other graphics that are easily worth a thousand words. It is much easier to identify trends based on collected metrics with a graphic than it is to wade through text.

• Documenting and communicating findings After completing an analysis, it’s important to document and communicate the findings. Organizations have different processes in place for this. Some organizations might have personnel use informal reports during the analysis, followed by a formal report after the analysis is complete. Depending on the event, it might also be necessary to escalate it.
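The false-positives-per-week metric mentioned in the list above is a simple aggregation. This sketch assumes each investigated alert is recorded as a date and a verdict string; the verdict labels are hypothetical. It groups alerts by ISO week and keeps weeks that had alerts but no false positives, so a timeline or graph built from the result has no gaps.

```python
from collections import Counter
from datetime import date


def weekly_false_positives(alerts):
    """Count false positives per ISO week.

    alerts: iterable of (date, verdict) pairs, where verdict is "true_positive"
    or "false_positive" after investigation. Weeks with alerts but no false
    positives appear with a count of 0.
    """
    counts = Counter()
    for day, verdict in alerts:
        iso_year, iso_week, _ = day.isocalendar()
        counts[(iso_year, iso_week)] += 1 if verdict == "false_positive" else 0
    return dict(sorted(counts.items()))


sample = [
    (date(2024, 1, 2), "false_positive"),
    (date(2024, 1, 3), "true_positive"),
    (date(2024, 1, 4), "false_positive"),
    (date(2024, 1, 9), "true_positive"),
]
print(weekly_false_positives(sample))  # {(2024, 1): 2, (2024, 2): 0}
```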

Detection Systems and Logs

One of the challenges of detecting attacks is that attackers often try to erase their tracks. Logs will record what the attacker has done, but if the attacker is able to delete or modify the logs, the record is lost. With this in mind, it’s important to realize that any logs on a local system that has been attacked are suspect. You need to investigate to determine whether the logs were modified during the attack.

On the other hand, logs stored on remote systems are the most valuable after an attack. For example, NIDS agents send a copy of their logs to a central NIDS management server. If a network firewall or resources within a DMZ are attacked, the logs on those resources may have been modified, but the logs on the NIDS server will still be intact and valid.
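The same idea applies to any system you monitor: send a copy of each log entry to a remote collector as it is written, so an attacker who later erases local logs cannot erase the remote copy. The sketch below uses Python's standard `logging.handlers.SysLogHandler` to forward records over UDP; the collector host, the port 514 default, the logger name, and the `ids-agent` tag are placeholders. UDP syslog is unencrypted and does not guarantee delivery, so production deployments often prefer a TCP or TLS transport.

```python
import logging
import logging.handlers


def make_remote_logger(host: str, port: int = 514) -> logging.Logger:
    """Return a logger that ships each record to a remote syslog collector over UDP.

    host and port are placeholders for a central log server (such as a NIDS
    management server or SIEM collector).
    """
    logger = logging.getLogger("ids.remote")
    logger.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(address=(host, port))
    # Tag every message so the collector can tell which agent sent it.
    handler.setFormatter(logging.Formatter("ids-agent: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Example (assumes a collector listening at the placeholder address):
# log = make_remote_logger("logs.example.internal")
# log.warning("port scan from 203.0.113.7")
```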

Detecting Unauthorized Changes

One of the ways that an IDS can detect an attack is by detecting unauthorized changes. In some cases, the changes can indicate a loss of integrity, and in other cases, they can indicate an attack in progress.

File Integrity Checkers

Many IDSs and antivirus applications use file integrity checkers to detect unauthorized changes to critical system files. A file integrity checker uses hashing techniques to detect these changes.

Chapter 1 introduces hashing and Chapter 14 covers it in more depth. You may remember that a hash is simply a number. A hashing algorithm creates the hash when it is executed against data, and no matter how many times the algorithm is executed against the same data, it will always create the same hash. However, if the data is modified, a new calculated hash will be different from the original hash. By comparing hashes created at different times, it’s possible to determine whether the original file has lost integrity.

A file integrity checker calculates hashes on critical system files to establish a baseline. It then periodically recalculates the hashes of these files and compares the two hashes. If the hashes are different, it detects the change and sends an alert.

Of course, some files are modified as a part of normal system operation. For example, users modify their own documents regularly, so the file integrity checker doesn’t monitor these files. However, it’s rare for executable files to be modified except through authorized updates or malware infections. If the file integrity checker identifies unauthorized changes in executable files, it often indicates a problem such as a malware infection.
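The baseline-and-recheck cycle can be sketched with Python's `hashlib`. SHA-256 is an assumption here (the text doesn't name a specific algorithm), and the function names are hypothetical. The sketch reports a file as changed if its hash differs from the baseline or if it has disappeared.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large executables don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_baseline(paths) -> dict:
    """Record the known-good hash of each monitored file."""
    return {str(p): sha256_of(Path(p)) for p in paths}


def check_integrity(baseline: dict) -> list:
    """Recalculate hashes and return files that changed or no longer exist."""
    changed = []
    for name, expected in baseline.items():
        p = Path(name)
        if not p.exists() or sha256_of(p) != expected:
            changed.append(name)
    return changed
```

A scheduler would run `check_integrity` periodically and send an alert whenever the returned list is not empty. Because an attacker who can rewrite the baseline can hide changes, the baseline is best kept somewhere the monitored system cannot modify.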

Unauthorized Connections

Many IDSs monitor the connections on systems and attempt to detect unauthorized connections. For example, if a server is hosted in an extranet and should be accessed only by a partner organization, an IDS can detect connections from any other entity.

If a server is hosted within a DMZ and accessible to anyone on the Internet, detection of unauthorized connections isn’t so easy. However, an IDS can identify connection attempts that are outside the scope of the server’s purpose. For example, it’s normal for a web server to have connections using HTTP and HTTP Secure (HTTPS). However, connection attempts using other protocols such as Telnet or Simple Mail Transfer Protocol (SMTP) are probably not authorized and may indicate a potential incident.
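For a DMZ web server, the check described above reduces to comparing each connection attempt against the services the server is supposed to offer. The port lists and the alert message format below are hypothetical policy choices for illustration.

```python
from typing import Optional

# Hypothetical policy for a public DMZ web server: the only services it offers.
EXPECTED_PORTS = {80: "HTTP", 443: "HTTPS"}
# Names for a few well-known ports, used only to make alerts readable.
PORT_NAMES = {23: "Telnet", 25: "SMTP"}


def review_connection(src_ip: str, dst_port: int) -> Optional[str]:
    """Return an alert message if the attempt falls outside the server's purpose."""
    if dst_port in EXPECTED_PORTS:
        return None
    service = PORT_NAMES.get(dst_port, f"port {dst_port}")
    return f"ALERT: {service} connection attempt from {src_ip}"


print(review_connection("198.51.100.4", 443))  # None
print(review_connection("198.51.100.4", 23))   # ALERT: Telnet connection attempt from 198.51.100.4
```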

Attackers often use scanning tools to learn information about a system, and these scanning tools often give telltale signs of their intentions. An IDS can detect these scans and send an alert.

Honeypot

A honeypot is a computer set up to entice would-be attackers. Administrators copy dummy data onto the server and configure it with weak security so that an attacker can easily hack into it. In essence, it’s a trap designed to look appealing to attackers, just as a pot of honey looks appealing to Pooh Bear of Winnie the Pooh fame.

As an example, an organization can set up an FTP server with weak security that looks like it is hosting company data. Attackers may see the server, bypass the weak security controls, and attempt to download or upload data. While the attacker is attacking and probing the honeypot, that attacker is not in the actual network. Additionally, while the attacker is attacking, security professionals have an opportunity to observe the tactics used by the attacker. This observation capability is especially useful when attackers use different methods or new exploits to attack a system.

A honeynet is an extension of a honeypot and includes two or more servers configured as honeypots. The goal of the honeynet is to simulate network activity similar to what an attacker would see in a live environment. In contrast, a single honeypot may not have any activity, enabling an intelligent attacker to determine that it is a trap.

Using Security Information and Event Management Tools

Security information and event management (SIEM) applications monitor an enterprise for security events. They are very useful in large enterprises that have massive amounts of data and activity to monitor, but smaller organizations also use them. Other names for these solutions include security information management (SIM), system event management, and security event management (SEM) applications.

Consider an organization with over 3,000 servers. When a situation arises on just one of those servers, administrators need to know as quickly as possible. The SIEM application monitors the environment continuously and reports on events in real time.

A typical SIEM application can collect data from multiple sources. This includes firewalls, routers, proxy servers, IDSs, IPSs, and more. While this data is rarely logged in the same manner, the SIEM application uses aggregation and correlation techniques to combine the dissimilar data into a single database that can easily be searched and analyzed. In some cases, the SIEM application will include its own IDPS component, but it can also collect data from an existing IDPS.
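Aggregation and correlation can be illustrated with two log formats that look nothing alike: a text firewall line and a JSON IDS alert. Both formats and all field names are invented for this sketch. Each parser converts its input to the same record layout (aggregation), and `correlate` then groups records by source IP address and keeps addresses reported by more than one type of device (correlation).

```python
import json
import re
from collections import defaultdict

# Invented firewall log format: "<time> <action> src=<ip> dst=<ip> dport=<port>"
FIREWALL_LINE = re.compile(
    r"(?P<time>\S+) (?P<action>\w+) src=(?P<src>\S+) dst=(?P<dst>\S+) dport=(?P<dport>\d+)"
)


def normalize_firewall(line: str) -> dict:
    """Convert one firewall log line to the common record layout."""
    m = FIREWALL_LINE.match(line)
    if m is None:
        raise ValueError(f"unrecognized firewall line: {line!r}")
    return {"time": m["time"], "device": "firewall", "src_ip": m["src"],
            "event": f"{m['action']} port {m['dport']}"}


def normalize_ids(line: str) -> dict:
    """Convert one JSON IDS alert (invented format) to the common record layout."""
    alert = json.loads(line)
    return {"time": alert["time"], "device": "ids", "src_ip": alert["attacker"],
            "event": alert["sig"]}


def correlate(records, min_devices: int = 2) -> dict:
    """Group records by source IP; keep IPs reported by at least min_devices device types."""
    by_ip = defaultdict(list)
    for record in records:
        by_ip[record["src_ip"]].append(record)
    return {
        # Timestamps share one ISO format, so sorting the text sorts by time.
        ip: sorted(group, key=lambda r: r["time"])
        for ip, group in by_ip.items()
        if len({r["device"] for r in group}) >= min_devices
    }
```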

Much of the data that a SIEM application captures is called machine data. Machine data is the digital information created by a computing system’s activity. On typical computers and servers, machine data is all the logs created on the computers and servers. However, machine data refers to more than just the logs on typical computers and servers. It also includes data on mobile devices (such as smartphones and tablets), embedded systems, and Internet of Things (IoT) devices. An embedded system is any device that has a central processing unit (CPU), an operating system, and one or more applications that perform a dedicated function. IoT devices are embedded systems that have Internet access. They include digital cameras, smart televisions, and home automation systems.

Continuous Monitoring

A SIEM application provides continuous monitoring with a combination of tools. These tools within the SIEM application are combined into a single unified solution with several benefits, including the following:

• Dashboard to monitor all activity Administrators don’t need to monitor multiple tools because the SIEM application provides a single interface (often called a dashboard). The dashboard can display the data in multiple ways, including graphics to provide a visualization of the data, timelines to show activity over time, and metrics that provide numbers to compare different data points. These various displays allow administrators to easily detect trends.

• Database capabilities Collected data is stored in a database that can easily be searched and analyzed for items of interest. Data includes logs collected from servers and network devices such as firewalls, routers, and switches. Log types include security logs, system logs, firewall logs, backup logs, and more. An IDPS typically includes a packet capture (sometimes called a packet dump) capability to capture all traffic that passes through the network, and the SIEM application can store these packet captures for later analysis.

• Ability to define or fine-tune items of interest SIEM applications come with predefined alerts, but they can be modified with different threshold levels or different items to search. For example, if management is interested in specific events occurring on a server, such as the use of privileges by anyone with an administrator account, they can have personnel configure the SIEM application to issue an alert each time these events occur.

• Alerting capabilities SIEM applications have the ability to issue an alert on any item of interest and provide different types of notifications. They can provide visual indications, such as pop-ups through the application’s interface, and send notifications via e-mail or text messages.

• Secure storage of data Because a SIEM application is centrally located, the data can be protected from an attacker. In other words, the logs for individual monitored systems are stored remotely with the SIEM application. If any of the systems are attacked, the logs stored with the SIEM application remain intact.

When using a SIEM application, it is sometimes necessary to use time synchronization techniques to ensure the data remains meaningful. This isn’t necessary if all the data comes from devices in a single location. However, if a SIEM application is collecting data from multiple locations and these locations are in different time zones, time synchronization techniques are required. One common method is to convert all timestamps to Coordinated Universal Time (UTC), the modern successor to Greenwich Mean Time (GMT), which is the time at the Royal Observatory in Greenwich, London.
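If each device records timestamps along with its UTC offset, normalizing them is a short conversion. This sketch assumes ISO 8601 timestamps that include an offset; timestamps without one are rejected, because guessing the time zone is exactly what produces misleading timelines. The device names are hypothetical. Sorting by UTC can reverse the apparent order of events: below, a London server's event at 14:05 local time actually happened before a New York firewall's event at 09:10 local time.

```python
from datetime import datetime, timezone


def to_utc(stamp: str) -> datetime:
    """Parse an ISO 8601 timestamp that includes a UTC offset and convert it to UTC."""
    parsed = datetime.fromisoformat(stamp)
    if parsed.tzinfo is None:
        raise ValueError(f"timestamp has no UTC offset: {stamp!r}")
    return parsed.astimezone(timezone.utc)


events = [
    ("new-york-firewall", "2024-05-01T09:10:00-04:00"),
    ("london-server", "2024-05-01T14:05:00+01:00"),
]
for device, stamp in sorted(events, key=lambda e: to_utc(e[1])):
    print(device, to_utc(stamp).isoformat())
# london-server 2024-05-01T13:05:00+00:00
# new-york-firewall 2024-05-01T13:10:00+00:00
```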

Document and Communicate Findings

IT security administrators evaluate the output of monitoring systems looking for trends and events of interest. While many tools provide automated reports, administrators evaluate the reports and determine if they need to communicate their findings to others in the organization.

Imagine a SIEM application alerts on a potential DDoS attack and administrators verify the attack is real. The administrators would escalate the incident to appropriate personnel by communicating their findings.

An incident response plan typically identifies who to notify. As an example, a malware infection might only require the notification of a technician in the IT department to remove the malware using established procedures. A protracted DDoS attack might require notification of a chief technology officer (CTO) or chief information officer (CIO), again depending on the requirements dictated in the incident response plan.
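The notification requirements defined in an incident response plan can be represented as a simple lookup. The following Python sketch mirrors the two examples above. The incident categories, the contact lists, and the fallback contact are hypothetical stand-ins for what an organization’s own plan would specify, and the sketch escalates only incidents that administrators have verified.

```python
# Hypothetical notification matrix; a real one comes from the incident response plan.
NOTIFICATION_PLAN = {
    "malware_infection": ["IT technician"],
    "protracted_ddos": ["CTO", "CIO"],
}
# Hypothetical fallback for incident types the plan doesn't list explicitly.
DEFAULT_CONTACTS = ["IT security manager"]


def contacts_for(incident_type: str, verified: bool) -> list[str]:
    """Return who to notify about an incident, or nobody if it isn't verified yet."""
    if not verified:
        return []  # investigate first; the alert may be a false positive
    # Return a copy so callers can't accidentally modify the plan itself.
    return list(NOTIFICATION_PLAN.get(incident_type, DEFAULT_CONTACTS))


print(contacts_for("protracted_ddos", verified=True))  # ['CTO', 'CIO']
```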

Performing Security Tests and Assessments

Many organizations perform regular security assessments. Administrators use them to check the security posture of the organization and discover vulnerabilities. After performing a security assessment, they report their findings and recommendations, if appropriate, to management. Management can approve the recommendations to implement security controls to reduce the identified vulnerabilities, increasing overall security.

The most common type of security assessment is a vulnerability assessment. Organizations may take this a step further and perform a penetration test that attempts to exploit weaknesses discovered by a vulnerability assessment. Penetration tests are more intrusive and have the capability to cause damage if not approached cautiously.

Vulnerability Assessments

Vulnerability assessments attempt to discover vulnerabilities and can take many forms. Some assessments attempt to discover whether an organization is susceptible to social engineering attacks. Other assessments attempt to discover whether the organization has technical weaknesses.

For example, phishing attacks are common, and an organization may want to test its employees’ knowledge of phishing to see whether they are likely to respond. An online company, KnowBe4 (https://www.knowbe4.com), provides a free service that sends a simulated phishing e-mail to employees to determine how many of them are likely to respond. Ideally, zero employees will respond, but even one response could compromise the organization’s resources. Tests such as the one provided by KnowBe4 often show that many employees aren’t educated about the risks of phishing e-mails and respond to them or click links.

Other security consultants use social engineering tactics to gain knowledge of an organization and then use this knowledge to gain more and more access. For example, an organization may hire a security consultant to see what information the consultant can learn about the organization just from calling employees or going through its trash.

Technical vulnerability assessments attempt to discover technical weaknesses. For example, vulnerability scanners can scan systems and networks to discover existing vulnerabilities.

Test Types

Personnel performing a vulnerability assessment have different levels of knowledge, based on the type of test they are performing. There are three common test types:

• White box or internal testing Testers perform the assessment within the organization. They have full access to the internal network and know the network infrastructure, including what systems it hosts. Security professionals who are employees within the organization commonly do internal testing. Other names for this type of testing include clear box testing, glass box testing, and transparent box testing.

• Black box or external testing Testers don’t have any knowledge of the internal network prior to starting the testing. Consultants hired to test an organization’s security vulnerabilities often do black box testing.

• Gray box testing This is a cross between white box testing and black box testing. It can be done internally or externally, but the testers have at least some level of knowledge about the network.

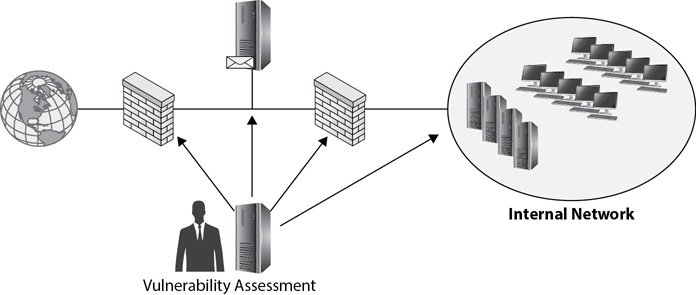

Figure 8-6 shows how a white box internal test is able to test multiple systems on the internal network, the DMZ, and even firewalls protecting the network. Chapter 5 defines white hats, black hats, and gray hats. These terms are completely different from white box testing, black box testing, and gray box testing. For example, testers working for an organization with no malicious intent are white hat testers. White hat testers can perform white box testing, black box testing, or gray box testing.

Figure 8-6 White box testing launched internally to check systems

It’s worth stressing that attackers often use the same tools that organizations use to assess their own vulnerabilities. In other words, if you can discover a vulnerability with one of these tools, the odds are very good that an attacker can discover it too.

Vulnerability-Scanning Tools

Many vulnerability-scanning tools are available to automate scans of a network. Some tools are comprehensive and perform multiple functions, while others perform a single function. Many begin with an overall reconnaissance of the network, which produces a list of the machines operating on the network along with the services likely running on each system.

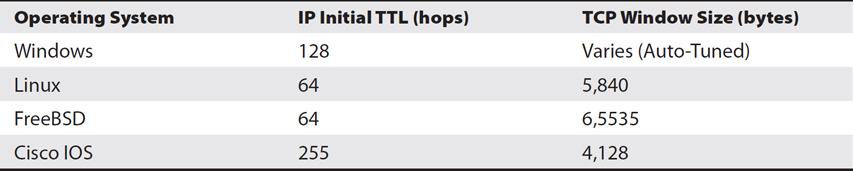

Some of these tools start with an Internet Control Message Protocol (ICMP) sweep to identify computers based on their IP addresses. They follow this with a port scan on each of the computers to identify which ports are open on individual computers. Next, they try to identify more details about the system, typically by analyzing packets sent from the system.
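The port scan step can be sketched in Python as a simple TCP connect scan: the script attempts a full TCP connection to each port and records the ports that accept it. This is a minimal illustration, not how tools such as Nmap are implemented (they support faster techniques, such as half-open SYN scans, and many more options). The ICMP sweep isn’t shown because sending ICMP echo requests from Python typically requires raw sockets and administrative privileges. As with any scan, run it only against systems you have written permission to test.

```python
import socket


def tcp_connect_scan(host: str, ports: list[int], timeout: float = 0.5) -> list[int]:
    """Return the ports on `host` that accept a full TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 when the TCP three-way handshake completes,
            # and an error code (such as "connection refused") otherwise.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


if __name__ == "__main__":
    # 127.0.0.1 is the local machine; scan other hosts only with written permission.
    print(tcp_connect_scan("127.0.0.1", [22, 80, 443]))
```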

As an example, Table 8-1 shows the values of two observable items in a packet. The first is the initial Internet Protocol (IP) Time To Live (TTL), which limits the number of routers (hops) a packet can traverse: each router decrements the TTL by one, and the packet is discarded when the TTL reaches zero. The recommended default initial TTL is 64, but this is only a recommendation, and different operating systems use different values. Similarly, different operating systems use different TCP window sizes for the initial packets in a TCP session. In some cases, different versions of the same operating system use different values.

Table 8-1 TTL and Window Sizes

The initial TTL and TCP window size are only two items in a packet that identify details about a system. Vulnerability scanners use multiple comparisons to identify a system. This process is often referred to as fingerprinting the system.
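The following Python sketch shows how the TTL alone can contribute to fingerprinting. Because routers only decrement the TTL, a scanner can assume the sender’s initial TTL was the next common default at or above the observed value, and the difference estimates the hop count. The mapping of initial values to system families is an illustrative assumption (64 is common for Linux and other Unix-like systems, 128 for Windows, and 255 for many network devices) and is not a substitute for Table 8-1. Because defaults can be changed and vary between versions, real scanners combine this with many other observations, such as the TCP window size.

```python
# Common initial TTL defaults and system families often associated with them.
# This mapping is an illustrative assumption, not a reliable signature.
COMMON_INITIAL_TTLS = {
    64: "Linux/Unix-like",
    128: "Windows",
    255: "network device",
}


def guess_from_ttl(observed_ttl: int) -> tuple[int, int, str]:
    """Estimate (initial TTL, hop count, likely system family) from an observed TTL."""
    if not 1 <= observed_ttl <= 255:
        raise ValueError("an observed TTL must be between 1 and 255")
    # Routers only ever decrement the TTL, so assume the sender started at the
    # smallest common default that is greater than or equal to the observed value.
    initial = min(ttl for ttl in COMMON_INITIAL_TTLS if ttl >= observed_ttl)
    hops = initial - observed_ttl
    return initial, hops, COMMON_INITIAL_TTLS[initial]


print(guess_from_ttl(118))  # (128, 10, 'Windows')
```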

Nmap (short for Network Mapper) can locate and document all the devices on a network as part of a reconnaissance scan. Nmap uses scanning methods first to discover all the devices based on their IP addresses, and then uses scans on each individual system to identify services running on each system. Nmap can often identify a system’s operating system, services running on the system, the existence of a host-based firewall, and much more.
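Nmap can write its results as XML (the -oX option), which makes it straightforward to turn a scan into the kind of listing described earlier: the devices found and the services running on each. The Python sketch below parses that output with the standard library. The embedded sample is a trimmed, hypothetical scan of documentation addresses; real Nmap XML contains many more elements and attributes, which this sketch simply ignores.

```python
import xml.etree.ElementTree as ET

# A trimmed, hypothetical example of the XML structure that `nmap -oX` writes.
SAMPLE_XML = """<nmaprun scanner="nmap">
  <host>
    <status state="up"/>
    <address addr="192.0.2.10" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22"><state state="open"/><service name="ssh"/></port>
      <port protocol="tcp" portid="80"><state state="open"/><service name="http"/></port>
      <port protocol="tcp" portid="23"><state state="closed"/><service name="telnet"/></port>
    </ports>
  </host>
  <host>
    <status state="up"/>
    <address addr="192.0.2.20" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="3389"><state state="open"/><service name="ms-wbt-server"/></port>
    </ports>
  </host>
</nmaprun>"""


def open_services(xml_text: str) -> dict[str, list[tuple[int, str]]]:
    """Map each live host's IPv4 address to its open (port, service name) pairs."""
    root = ET.fromstring(xml_text)
    inventory = {}
    for host in root.iter("host"):
        status = host.find("status")
        if status is None or status.get("state") != "up":
            continue
        address = host.find("address[@addrtype='ipv4']")
        if address is None:
            continue
        services = []
        for port in host.iter("port"):
            state = port.find("state")
            if state is None or state.get("state") != "open":
                continue  # skip closed or filtered ports
            service = port.find("service")
            name = service.get("name", "unknown") if service is not None else "unknown"
            services.append((int(port.get("portid")), name))
        inventory[address.get("addr")] = services
    return inventory


for addr, services in open_services(SAMPLE_XML).items():
    print(addr, services)
```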

Nessus is a vulnerability-scanning tool used to discover vulnerabilities in individual systems. It can use Nmap to discover systems on the network during an initial reconnaissance scan. It then uses other methods for the fingerprinting scan. It can perform detailed analysis of individual systems, including auditing passwords for complexity, checking systems for compliance with specific security policies, and checking for the existence of personally identifiable information (PII) on a system.

There are many other vulnerability-scanning tools available. Security professionals testing networks for vulnerabilities can use these tools, but remember that criminals attempting to break into networks can also use them.

No matter which tool you use, you can expect the security professionals to provide detailed reports documenting the findings. Of course, an extremely important step in the vulnerability assessment process is to analyze these results and address any vulnerabilities that can be mitigated. Data in these reports is sensitive and should be protected. If attackers have access to the reports, they can use the information to launch attacks on documented vulnerabilities.

Reviewing Infrastructure Security Configurations

Infrastructure security refers to securing the hardware elements of a network. It’s important to review these security elements periodically to verify they are up to date with current security practices.

Administrators performing daily tasks in a network often get used to the configuration and can overlook some things. Because of this, organizations often bring in an outside consultant to examine the infrastructure and provide recommendations. A security consultant will typically perform the following tasks and provide recommendations to improve security:

• Assess network vulnerabilities This can be done by running various vulnerability-scanning tools such as Nessus.

• Verify appropriate host hardening This check ensures that servers are running only the protocols and services they need, and all other protocols and services are disabled. It also ensures that host-based firewalls are enabled, and systems are up to date with current patches.

• Check border security This includes checking all paths into the network from external sources such as the Internet. The consultant would check network devices such as routers, firewalls, UTM appliances, and the DMZ configuration.
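Part of verifying host hardening is comparing the services a host actually exposes with the services its role requires. The following Python sketch assumes the open ports come from a scan such as one performed with Nmap or Nessus, and that the organization keeps a list of approved ports for each server role. The roles and port numbers are hypothetical examples.

```python
# Hypothetical baseline: the TCP ports each server role is approved to expose.
APPROVED_PORTS = {
    "web": {80, 443},
    "dns": {53},
    "file": {445},
}


def hardening_exceptions(role: str, open_ports: set[int]) -> set[int]:
    """Return open ports that the role's baseline does not approve.

    An unknown role has no approved ports, so every open port is reported.
    """
    return set(open_ports) - APPROVED_PORTS.get(role, set())


# Example: a web server that still exposes Telnet (port 23).
print(sorted(hardening_exceptions("web", {23, 80, 443})))  # [23]
```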

Instead of hiring outside consultants to perform these reviews, some organizations have dedicated IT security staff. If these personnel are given the time and resources to focus on security, they can perform these assessments on a routine basis.

Order of Steps

Vulnerability assessments typically use the following order of steps:

1. Gain permission from management. The importance of this step cannot be overstated. Senior management from any organization typically interprets unapproved vulnerability scans as attacks. This often results in the loss of a job or even legal action. Most security professionals require written approval before starting an assessment. This is so important that if someone is willing to perform an assessment without written approval, they should be viewed with suspicion.

2. Discovery. In this step, personnel perform vulnerability scans on the network to identify weaknesses. It often includes reconnaissance steps to document the environment, followed by fingerprinting scans to identify specifics about the systems, such as the operating system or running services. The detailed scans on individual systems can detect specific vulnerabilities for each of the scanned systems. Testers often do both credentialed scans and noncredentialed scans. A credentialed scan checks for vulnerabilities with a privileged account, and a noncredentialed scan checks for vulnerabilities without being logged on.

3. Analyze results. Vulnerability scanners provide reports that personnel must analyze to determine their validity. It’s possible for a scanner to misidentify a system or report a false positive. Security professionals who regularly run scans recognize the weaknesses and the strengths of the tools. For example, a scan may regularly report that a firewall is not enabled on a host simply because it doesn’t recognize a third-party firewall. However, when new vulnerabilities appear on reports, they deserve extra scrutiny to determine their validity.

4. Document vulnerabilities. Next, personnel document the vulnerabilities in a report. Some organizations want this report turned in as soon as possible so that management has an idea of the vulnerabilities. For example, in one large network operations center where I worked, a security group performed detailed scans once a month. As soon as the group was able to compile the results, it published the report, making it available to key management personnel in other departments. In this situation, the security group simply performed the scans and reported the results, and other departments were responsible for identifying and recommending methods to remediate the vulnerabilities.

5. Identify and recommend methods to reduce the vulnerabilities. Next, personnel identify methods that can mitigate the vulnerabilities. These could be as simple as recommending a configuration change or as complex as recommending a detailed patch management process to ensure all systems are kept up to date.

6. Present recommendations to management. Management has the responsibility to examine recommendations and either approve or reject the recommendations. They have the overall view of the organization and the responsibility for any losses associated with residual risks for any rejected recommendations.

7. Remediate. Last, personnel implement any recommendations approved by management. It’s worth stating the obvious here: If a fix isn’t implemented, the vulnerability remains and attackers can exploit it. If management has approved a recommendation, it should be implemented as soon as possible.

Remediation Validation

Remediation validation is the process of verifying that approved fixes are implemented. Some approved changes can be implemented very quickly. For example, if a vulnerability assessment found that Telnet was enabled on many servers in the network, the remediation is to disable Telnet on all the servers. Administrators might be able to use Group Policy to remove Telnet from all the servers, assuming they are all Microsoft servers within an Active Directory domain.

In contrast, some changes might take much longer. As an example, an assessment using a different assessment tool might discover many vulnerabilities that weren’t previously known. Security personnel might take the time to prioritize the vulnerabilities and create a plan of action to address each based on the severity of the vulnerabilities. IT administrators would then begin implementing the changes (typically using a change control process).

The remediation validation timeline depends on the timeline of fixes. However, the key is to take action to validate or verify that the fixes are implemented.
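Tracking validation against the timeline can be as simple as recording, for each approved fix, its due date and whether the fix has been verified. The following Python sketch lists fixes that are past due and not yet validated, most severe first. The record fields, severity labels, dates, and findings are hypothetical; many organizations track this in a ticketing or change control system instead.

```python
from dataclasses import dataclass
from datetime import date

SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class RemediationItem:
    """One approved fix in a plan of action (a hypothetical record format)."""
    finding: str
    severity: str
    due: date
    validated: bool = False  # True only after a check confirms the fix is in place


def overdue_items(items: list[RemediationItem], today: date) -> list[RemediationItem]:
    """Return unvalidated items past their due date, most severe (then oldest) first."""
    late = [item for item in items if not item.validated and item.due < today]
    unknown_rank = len(SEVERITY_ORDER)  # unknown labels sort after known ones
    return sorted(
        late,
        key=lambda item: (SEVERITY_ORDER.get(item.severity, unknown_rank), item.due),
    )


plan = [
    RemediationItem("Telnet enabled on servers", "high", date(2024, 5, 1), validated=True),
    RemediationItem("Missing OS patch on web servers", "critical", date(2024, 5, 15)),
    RemediationItem("Weak TLS configuration", "medium", date(2024, 5, 10)),
    RemediationItem("Outdated firmware on border router", "high", date(2024, 7, 1)),
]

for item in overdue_items(plan, today=date(2024, 6, 1)):
    print(item.due, item.severity, item.finding)
```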

Audit Finding Remediation

Organizations often perform audits to verify that an IT security system is complying with the organization’s security policy, guidelines and requirements from partners, and relevant laws. These audits can be performed by personnel within the organization or by personnel outside the organization.

As an example, the Payment Card Industry Data Security Standard (PCI DSS) applies to organizations that handle cards from the major credit card brands. Its purpose is to help ensure these organizations meet minimum IT security standards. Many organizations can certify compliance by completing a Self-Assessment Questionnaire (SAQ). However, other organizations must bring in an external auditor to verify compliance. These external auditors are known as Qualified Security Assessors (QSAs) and are certified by the PCI Security Standards Council to perform the audits.

The auditor creates a report documenting the results of the audit, commonly called the audit findings. Audit findings verify an organization is in compliance with the requirements and document any instances in which an organization is not in compliance. If an organization is not in compliance, it creates a plan to resolve the issues.

Just as an organization should verify that approved changes from an assessment have been completed, it’s also important to verify that audit findings have been addressed.

False Positives and the Importance of Results Analysis

In some cases, a vulnerability assessment may indicate that a vulnerability exists even though it doesn’t. This is a false positive. For example, a scanning tool may indicate that a server is missing a patch, but investigation verifies that the patch is installed. This is often a weakness in the vulnerability-scanning tool, and some tools are more prone to false positives than other tools are.

However, the important thing to recognize is that false positives can occur. Just because the tool indicates a vulnerability exists, that doesn’t mean it does. Administrators or security analysts examine the results to determine their validity.

Vulnerability tests can sometimes give false negatives also. As an example, imagine management decides not to implement an operating system patch on a server because it breaks an application. A vulnerability scan should indicate the system is vulnerable because the patch isn’t applied, but if it doesn’t, it’s a false negative. This can give a false sense of security because a vulnerability scan may indicate that a network is secure, yet actual vulnerabilities exist.
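When analysts have verified which vulnerabilities actually exist on a set of systems (for example, during results analysis), they can compare that verified list with the scanner’s output. The Python sketch below sorts findings into true positives, false positives, and false negatives. The finding labels are hypothetical, chosen to echo the two examples above. In practice the verified list is itself incomplete, so false negatives are usually discovered only by other means, such as a penetration test.

```python
def classify_findings(reported: set[str], verified: set[str]) -> dict[str, set[str]]:
    """Compare scanner output with the vulnerabilities confirmed by manual verification."""
    return {
        "true_positives": reported & verified,
        "false_positives": reported - verified,  # reported, but not actually present
        "false_negatives": verified - reported,  # present, but the scanner missed it
    }


# srv1's patch is actually installed; srv3's patch was deliberately not applied.
reported = {"srv1: missing patch A", "srv2: SMBv1 enabled"}
verified = {"srv2: SMBv1 enabled", "srv3: missing patch B"}

for category, findings in classify_findings(reported, verified).items():
    print(category, sorted(findings))
```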

Penetration Tests

Penetration testing takes a vulnerability assessment one step further. Instead of stopping after discovering vulnerabilities, a penetration test attempts to exploit the vulnerability. While a penetration test provides verification that an attacker can (or can’t) actually exploit a system, it comes with significant risks.

It’s important for testers to recognize the potential impact of security testing. It’s possible for a penetration test to cause damage resulting in the loss of confidentiality, integrity, or availability of a system or data. This is never a goal, but due to the nature of penetration testing, it is possible, so testers must be cautious when testing.

Both internal and external penetration tests are possible, and they are classified as white box (full knowledge), black box (zero knowledge), and gray box (partial knowledge) tests, just as vulnerability tests are classified. The amount of information given to the testers is dependent on the goals of the test.

In many scenarios, penetration testers are given specific goals. As an example, they may be tasked with locating user databases within a network, exfiltrating them, and then cracking the password hashes for various users. If successful, they can provide management with a listing of usernames and passwords. As another example, testers may be tasked with gaining access to an employee’s computer, using privilege escalation techniques to gain additional access, and executing scripts to map the network.

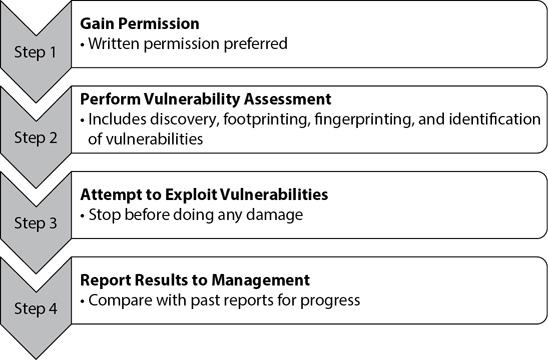

Figure 8-7 shows the overall steps of a penetration test, and the following sections cover them in more depth.

Figure 8-7 Penetration test steps

Gain Permission

Gaining permission is an even more important step in a penetration test than it is with a vulnerability test because it’s possible that a penetration test can actually cause a loss in confidentiality, integrity, and/or availability of a system. The permission needs to be in writing and should identify the goals of the test.

Perform Vulnerability Assessment

In the vulnerability assessment stage, you go through similar steps as you would in a typical vulnerability assessment. While the actual steps of the vulnerability assessment can vary depending on the scope, it’s very likely it will include the following components:

• Discovery or reconnaissance In this stage, you collect information about the targets using any available means. This could use tools such as Nmap to complete a reconnaissance scan, or use existing documentation if the test is carried out as a white box test. Black box testers often need to use a variety of social engineering tactics to gain information about the organization and the network.

• Fingerprinting Once the network is mapped, individual systems are identified and probed to discover details about them. This includes the operating system, service packs, patches, open ports, running services, and running applications.

• Identify vulnerabilities The tester then analyzes the collected data to determine what vulnerabilities exist and can be exploited.

If this were a vulnerability test, the data would be put into a report and the test would be complete. However, a penetration test adds the next step: an attempt to exploit one or more vulnerabilities.

Attempt to Exploit Vulnerabilities

In this step, the tester attempts to use methods similar to what an attacker would use to exploit the vulnerabilities. At this stage, it’s very important to understand the scope and purpose of the test. Most organizations want to know what can be exploited, but not at the expense of an outage. In other words, the tester should take the test as far as possible without causing any damage.

Of course, the danger with this strategy is that you don’t always know how far is too far until you get there. Testers should be acutely aware of the potential damage that an exploit can cause and be very cautious when the exploit can be damaging.

Some exploits result in the disclosure of data. In this case, testers can gather as much data as possible without causing harm. The testers can show the data they’ve collected to demonstrate that an attacker can also access it. Other exploits can modify or corrupt data, and these types of tests should not be performed on live production servers. However, it is possible to create a test server configured identically to the production server. Testers then attempt the penetration test on the test server. If the test on the test server succeeds, it provides proof that the exploit is possible without affecting actual resources.

Report Results to Management

The results of the penetration test are compiled into a report, along with recommendations for controls or safeguards to mitigate any of the vulnerabilities. Just as with a vulnerability report, management decides on what risks to mitigate and what risks to accept.

Past reports are valuable to compare. For example, a past report may have documented a vulnerability, and management may have approved a control to mitigate the risk. If the vulnerability still exists, it deserves some investigation. Was the control implemented? Was it completely ineffective, or did it actually reduce the vulnerability? Has the threat technique been modified to circumvent the original control? Answers to these questions help determine the next steps to take.
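Comparing a current report with a past one is largely a matter of set comparisons, provided findings are recorded with consistent identifiers across reports. The following Python sketch sorts findings into new, resolved, and persistent, and flags persistent findings for which management had approved a control, since those are the findings that raise the questions listed above. The finding identifiers are hypothetical.

```python
def compare_reports(previous: set[str], current: set[str],
                    approved_controls: set[str]) -> dict[str, set[str]]:
    """Compare the findings in two reports.

    `approved_controls` holds findings for which management approved a control
    after the previous report.
    """
    persistent = previous & current
    return {
        "new": current - previous,
        "resolved": previous - current,
        "persistent": persistent,
        # Still present despite an approved control: was it implemented? Effective?
        "investigate": persistent & approved_controls,
    }


previous = {"VULN-1 weak SSH ciphers", "VULN-2 open SMB share", "VULN-3 outdated VPN client"}
current = {"VULN-2 open SMB share", "VULN-3 outdated VPN client", "VULN-4 default SNMP community"}
approved = {"VULN-1 weak SSH ciphers", "VULN-2 open SMB share"}

for category, findings in compare_reports(previous, current, approved).items():
    print(category, sorted(findings))
```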

Chapter Review

Events of interest are known events that an organization wants to detect and record. Some events of interest are potential security incidents that need to be investigated. Others are events logged for compliance monitoring.

An intrusion detection system (IDS) provides continuous monitoring and sends alerts to report suspicious activity. Administrators investigate alerts to determine whether they are actual attacks or false positives. A network-based IDS (NIDS) uses agents installed on network devices that send data back to a central NIDS management server. This server analyzes the activity throughout the network and provides notification when an alert occurs. A host-based IDS (HIDS) monitors activity on an individual system, such as a server or workstation, and provides notifications as alerts. Notifications are typically recorded in logs but can also be sent as e-mails or text messages. An intrusion prevention system (IPS) is placed inline with the traffic and can modify the environment to block attacks in progress.

IDSs and IPSs use two primary detection methods. A signature-based (or knowledge-based) system has a database of known attacks and compares live activity with this data. This is similar to how AV software uses signature files to detect malware. An anomaly-based (or behavior-based) system starts with a baseline of normal activity. It then monitors ongoing activity to determine whether there is a significant change or anomaly. Anomalies are reported as alerts and need to be investigated. Many systems use a hybrid model of both signature-based and anomaly-based detection methods.

One of the benefits of NIDSs over HIDSs is that the log files are stored remotely on the central server. A HIDS stores the logs on the host, and an attacker can modify or delete the logs, erasing the record of the attack.

Many times an organization wants to detect unauthorized changes. Tools such as file integrity checkers monitor files and can detect unauthorized modifications.

Honeypots are systems with fake data designed to attract attackers, diverting them from a live network. They also give security professionals an opportunity to observe attacks in action. Honeynets are groups of two or more honeypots that simulate a network.

Security information and event management (SIEM) applications are unified continuous monitoring systems. They collect data from multiple sources and typically include tools to aggregate, correlate, and analyze the data. IT administrators analyze the output of a SIEM application and escalate incidents when appropriate by communicating their findings to other personnel.

Most organizations perform security assessments to detect potential security issues. A vulnerability test attempts to discover vulnerabilities and provide recommendations to mitigate the associated risks. Testers can use nontechnical means, such as social engineering tactics, and use technical means with tools such as Nmap and Nessus.

Penetration tests go a step further than a vulnerability test. They attempt to exploit a vulnerability. Penetration tests are more intrusive and have the potential to cause damage, so they should be performed with utmost caution and stopped before inflicting any actual harm. Testers should obtain written permission prior to beginning either a vulnerability assessment or a penetration test. Both vulnerability assessments and penetration tests report their findings and recommendations to management, which decides which recommendations to implement.

Both vulnerability tests and penetration tests can be white box (full knowledge), black box (zero knowledge), or gray box (partial knowledge) tests.

Questions

1. You want to monitor the network for possible intrusions or attacks and report on any activity. What would you use?

A. HIPS

B. HIDS

C. NIDS

D. AV software

2. You want to monitor a server for potential attacks. Of the following choices, what is the best choice?

A. HIDS

B. NIDS

C. Anomaly-based IDS

D. Signature-based IDS

3. Which of the following choices identifies a major drawback associated with a host-based IDS (HIDS)?

A. It is very processor intensive and can affect the computer’s performance.

B. The signatures must be updated frequently.

C. It does not support anomaly-based detection.

D. It stores the logs on remote systems.

4. Of the following choices, what best describes an IPS?

A. An active antivirus program that can detect malware

B. An inline monitoring system that can perform penetration testing

C. An inline monitoring system that can perform vulnerability assessments

D. An inline monitoring system that can modify the environment to block an attack

5. Which of the following identifies a system that requires a database to detect attacks?

A. Anomaly-based IDS

B. Signature-based IDS

C. HIPS

D. NIPS

6. You have recently modified the network infrastructure within your network. What should be re-created to ensure that the anomaly-based NIDS continues to work properly?

A. Signature database file

B. Baseline

C. Router gateways

D. Firewalls

7. How does an anomaly-based IDS detect attacks?

A. It compares current activity against a baseline.

B. It compares current activity against a database of known attack methods.

C. It compares current activity with antivirus signatures.

D. It monitors activity on firewalls.

8. What logs are most valuable after an attack?

A. Logs on a remote system

B. Logs on local systems that have been attacked

C. Logs for local firewalls

D. Logs for antivirus events

9. Which of the following can detect if a system file has been modified?

A. Encryption algorithm

B. Anomaly-based detection

C. Signature-based detection

D. File integrity checker

10. You are an IT administrator working in a 24-hour network operations center. One of your tasks is to evaluate alerts from various tools and determine if any are events of interest. Which of the following events would you most likely flag as events of interest? (Select all that apply.)

A. An apparent network intrusion caused by a user clicking a malicious link within an e-mail.

B. An unauthorized change updating an application on a web server.

C. An alert on a DoS attack that was proven to be a false positive.

D. Privacy data being sent out of the network by a user via e-mail.

11. Administrators in your organization recently installed a new SIEM application within your network. Which of the following best describes the capabilities of a typical SIEM application?

A. Collect, aggregate, and correlate data from multiple devices in a network

B. Protect a web server from attacks

C. Monitor traffic going through DMZ firewalls and alert on potential attacks

D. Perform automated vulnerability assessments

12. Your organization handles and transmits a significant amount of privacy data. As a result, it needs to comply with several laws and regulations. Which of the following tools would you most likely find within the network to provide continuous monitoring of all network traffic?

A. Vulnerability scanner

B. Penetration test

C. File integrity checker

D. SIEM

13. An external organization is performing a vulnerability test for a company. Officials from the company give this group some information on the company’s network prior to the test. What type of test is this?

A. White box test

B. Gray box test

C. Black box test

D. Internal test

14. Your organization has contracted with a security organization to test your network’s vulnerability. The security organization is not given access to any internal information from the company. What type of test will the organization perform?

A. White box testing

B. Gray box testing

C. Black box testing

D. Partial knowledge testing

15. An organization has hired you to perform a vulnerability assessment. Which of the following steps would you perform first?

A. Document vulnerabilities

B. Fingerprint systems

C. Perform reconnaissance

D. Gain approval

16. An outside security consultant recently performed a vulnerability assessment on your organization’s network. She recommended several security controls to mitigate some risks. Which of the following steps would be the last step to complete the assessment?

A. Discovery

B. Analysis

C. Remediation

D. Document vulnerabilities

17. A security expert in your organization regularly scans your network to detect potential vulnerabilities using a vulnerability scanner. How would this vulnerability scanner most likely fingerprint a system?

A. With a biometric scanner

B. Using an ICMP sweep

C. By identifying its IP address

D. By analyzing packets

18. A vulnerability assessment reports that a patch is not installed on a system, but you’ve verified that the patch is installed. What is this called?

A. Anomaly-based vulnerability

B. Signature-based vulnerability

C. False negative

D. False positive

19. What’s the primary difference between a penetration test and a vulnerability assessment?

A. A vulnerability assessment includes a penetration test, but a penetration test does not include a vulnerability assessment.

B. A penetration test is intrusive and can cause damage, while a vulnerability assessment would not cause damage.

C. A vulnerability assessment is intrusive and can cause damage, while a penetration test is passive.

D. They are basically the same, but with different names.

20. When should a penetration test stop?

A. After discovering the vulnerabilities

B. After discovering the threats

C. Before causing any damage

D. Before discovering the exploits

Answers

1. C. A network-based IDS (NIDS) monitors the network in real time and sends alerts to report suspicious activity. A host-based intrusion detection or prevention system (HIDS or HIPS) monitors only the individual host it’s installed on, so it cannot monitor activity across the network. AV software detects malware, but not necessarily network attacks.

2. A. A host-based IDS (HIDS) can monitor a single computer (such as a server) for possible attacks and intrusions. A network-based IDS (NIDS) monitors network activity. Both HIDS and NIDS can use either anomaly-based detection or signature-based detection.

3. A. A HIDS is very processor intensive and can negatively affect the computer’s performance. All IDSs using signature-based detection need to have their signatures updated frequently. A HIDS will typically support both signature-based detection and anomaly-based detection. Another drawback of a HIDS is that it stores the logs on the local computer, and an attacker might be able to delete the logs.

4. D. An intrusion prevention system is an inline monitoring and detection system that can modify the environment (such as by changing ACLs or closing half-open connections) to block an attack. Although it may be able to detect some malware such as worms, this isn’t the best definition. It is not used for vulnerability assessments or penetration tests.

5. B. A signature-based IDS compares activity against a signature file (or database of signatures) to identify attacks. An anomaly-based IDS requires a baseline. Both a host-based IPS (HIPS) and a network-based IPS (NIPS) can use either anomaly-based or signature-based detection methods.

6. B. An anomaly-based NIDS compares current activity against a baseline to determine abnormal behavior, and this baseline should be updated when the network is modified. This provides the NIDS with an accurate baseline. A signature-based NIDS uses a signature database file. Router gateways and firewalls do not need to be re-created for the NIDS.

7. A. An anomaly-based IDS detects attacks by comparing current activity against a baseline. A signature-based IDS detects attacks by comparing network activity with a database of known attack methods. An IDS does not use antivirus signatures. While an IDS monitors activity on firewalls, this doesn’t identify how it detects attacks.

8. A. Logs held on remote systems are the most valuable because an attacker is less likely to have modified them. Attackers can modify logs on a local system (including local firewall logs) during an attack. Although AV logs may be useful in attacks involving malware, they aren’t very useful if attackers didn’t use malware during the attack.

9. D. File integrity checkers use hashing techniques to detect whether modifications of system or other important files have occurred. File integrity checkers use hashing algorithms, not encryption algorithms. Intrusion detection and prevention systems use signature-based detection to detect known attacks and use anomaly-based detection to detect previously unknown attacks, but these are not related to detecting modifications to system files.

10. A, B, D. Events of interest include intrusions (including those caused by a user clicking a malicious link), unauthorized changes, data exfiltration (including from an internal user), and more. A false positive is not an event of interest.

11. A. A security information and event management (SIEM) application collects data from multiple sources, then aggregates and correlates the data. It combines the dissimilar data into a single database and analyzes the data to detect potential events of interest. While it might detect attacks on a web server, it does much more. Similarly, it would probably monitor traffic going through demilitarized zone (DMZ) firewalls, but not just the DMZ firewall traffic. A SIEM application wouldn’t perform vulnerability assessments.

12. D. A security information and event management (SIEM) application collects data from multiple sources and provides continuous monitoring. When configured to do so, a SIEM application can monitor all network traffic. None of the other answers provide continuous monitoring. A vulnerability scanner will scan a network at a point in time. A penetration test is performed during a specific period, but it ends at some point. A file integrity checker can detect modifications in files.

13. B. A gray box test (also called a partial knowledge test) is performed with some internal knowledge and can be performed either internally or externally. A white box test (also called a full knowledge test) is performed with full access to internal documentation. A black box test (also called a zero knowledge test) is performed without any inside knowledge of the organization. It is not an internal test because an external organization is performing it.

14. C. A black box test (also called a zero knowledge test) is performed without any inside knowledge of the organization. A white box test (also called a full knowledge test) is performed with full access to internal documentation. A gray box test (also called a partial knowledge test) is performed with some internal knowledge.

15. D. An important first step in a vulnerability assessment is gaining written approval from management. Discovery includes a reconnaissance scan and fingerprinting techniques, but should not be done without approval. Documenting the vulnerabilities comes after discovery and analysis.

16. C. The last step in a vulnerability assessment is remediation of vulnerabilities using controls approved by management. Discovery, analysis, and documentation all occur after gaining approval from management, but before remediation. In this scenario, the outside consultant would perform the assessment and provide a report to management. Management approves some or all of the security controls, and personnel then implement the controls to remediate the vulnerabilities.

17. D. A vulnerability scanner fingerprints a system by analyzing packets sent out by the system. In this context, fingerprinting is a metaphor; it doesn't involve a biometric scanner. An Internet Control Message Protocol (ICMP) sweep identifies IP addresses on a network, but identifying an IP address does not fingerprint a system.

18. D. A false positive occurs when a vulnerability assessment tool indicates that a vulnerability exists when it actually does not exist. A false negative occurs when a vulnerability assessment tool indicates that a vulnerability doesn't exist when it actually does exist. There are no such things as anomaly-based or signature-based vulnerabilities; anomaly-based and signature-based detection are IDS detection methods and are not associated with vulnerability assessment tools.

19. B. A penetration test is intrusive; it includes a vulnerability assessment, attempts to exploit discovered vulnerabilities, and can cause damage. Vulnerability assessments are not intrusive and do not cause damage.

20. C. Most penetration tests stop before executing an exploit that can cause damage. Penetration tests include the basic vulnerability assessment components of identifying vulnerabilities, threats, and exploits, and then follow these steps with an attempt to exploit a discovered vulnerability.
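
Answer 5 explains that a signature-based IDS compares activity against a database of signatures. The following Python sketch illustrates that idea under stated assumptions: the three-entry signature database, the signature names, and the regular expressions are hypothetical and exist only for illustration. Real IDS signatures are far more detailed.

```python
import re

# Hypothetical signature database: each entry maps a signature name to a
# regular expression describing a known attack pattern (illustration only).
SIGNATURES = {
    "sql-injection-or-1-equals-1": re.compile(r"'\s*or\s*'1'\s*=\s*'1", re.IGNORECASE),
    "directory-traversal": re.compile(r"\.\./"),
    "xss-script-tag": re.compile(r"<script\b", re.IGNORECASE),
}


def match_signatures(payload: str) -> list[str]:
    """Return the names of all signatures that match the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern.search(payload)]


if __name__ == "__main__":
    for payload in ["GET /index.html", "GET /../../etc/passwd", "user=admin' OR '1'='1"]:
        # An empty list means no known attack pattern matched, so no alert.
        print(payload, "->", match_signatures(payload))
```

An attack that has no entry in the database produces no match at all, which is why, as answer 3 notes, signature databases need frequent updates.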
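
Answer 6 explains why an anomaly-based NIDS baseline should be updated when the network is modified. The Python sketch below is one simple way to model this, assuming the baseline is summarized as the mean and standard deviation of a metric such as connections per minute, and that anything more than three standard deviations from the mean is an anomaly. The metric, the sample values, and the threshold are all assumptions; commercial products use more sophisticated statistical models.

```python
import statistics


def build_baseline(samples: list[float]) -> tuple[float, float]:
    """Summarize normal activity as its mean and sample standard deviation."""
    return statistics.mean(samples), statistics.stdev(samples)


def is_anomaly(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value lying more than `threshold` standard deviations from the mean."""
    mean, stdev = baseline
    if stdev == 0:
        # With no variation in the baseline, any different value is unusual.
        return value != mean
    return abs(value - mean) / stdev > threshold


if __name__ == "__main__":
    # Hypothetical connections-per-minute samples captured before a network change.
    old_baseline = build_baseline([100, 105, 98, 102, 95, 101, 99, 103])
    # After new servers are added, about 200 connections per minute is normal.
    new_baseline = build_baseline([200, 205, 198, 202, 195, 201, 199, 203])
    print(is_anomaly(200, old_baseline))  # stale baseline: normal traffic is flagged
    print(is_anomaly(200, new_baseline))  # updated baseline: no alert
```

With the stale baseline, legitimate traffic after the change looks abnormal and generates false positives; rebuilding the baseline from current samples removes them.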
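
Answer 9 notes that file integrity checkers use hashing, not encryption, to detect modified files. As a minimal sketch, assuming SHA-256 as the hashing algorithm and an in-memory dictionary as the store of known-good hashes, a checker can record a hash for each monitored file and later report any file whose hash no longer matches or that has been deleted. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths: list[Path]) -> dict[str, str]:
    """Record a known-good hash for each monitored file."""
    return {str(p): sha256_of(p) for p in paths}


def changed_files(baseline: dict[str, str], paths: list[Path]) -> list[str]:
    """Return monitored files that were deleted or whose hash no longer matches."""
    changed = []
    for p in paths:
        # Check existence first so a deleted file is reported rather than hashed.
        if not p.exists() or baseline.get(str(p)) != sha256_of(p):
            changed.append(str(p))
    return sorted(changed)
```

Any change to a file's contents, even a single byte, produces a different hash. In practice, the known-good hashes must themselves be protected, because an attacker who can modify them can hide changes.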
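
Answer 11 describes how a SIEM application collects data from multiple sources, then aggregates and correlates it. The toy Python sketch below assumes two hypothetical log formats, one from a firewall and one from a server's authentication log. Each source is normalized into a common `Event` record (aggregation), and a single rule then looks across sources (correlation). The log formats, the three-failure threshold, and the 300-second window are assumptions for illustration, not features of any particular SIEM product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    """A normalized event, regardless of which system produced it."""
    source: str
    src_ip: str
    kind: str
    timestamp: int  # seconds since the epoch


def normalize_firewall(line: str) -> Event:
    # Hypothetical firewall log format: "<timestamp> DENY <ip>"
    ts, _action, ip = line.split()
    return Event("firewall", ip, "deny", int(ts))


def normalize_auth(line: str) -> Event:
    # Hypothetical server auth log format: "<timestamp> <ip> <RESULT> <user>"
    ts, ip, result, _user = line.split()
    kind = "login_failed" if result == "LOGIN_FAILED" else "login_ok"
    return Event("auth", ip, kind, int(ts))


def correlate(events: list[Event], min_failures: int = 3, window: int = 300) -> list[str]:
    """Alert on IPs that were denied by the firewall AND had repeated failed
    logins, with all of those events falling within `window` seconds."""
    by_ip = defaultdict(list)
    for e in events:
        by_ip[e.src_ip].append(e)
    alerts = []
    for ip, evs in sorted(by_ip.items()):
        failures = [e for e in evs if e.kind == "login_failed"]
        denies = [e for e in evs if e.kind == "deny"]
        if len(failures) >= min_failures and denies:
            times = [e.timestamp for e in failures + denies]
            if max(times) - min(times) <= window:
                alerts.append(ip)
    return alerts
```

Neither log alone justifies an alert in this example: a single firewall deny is routine, and a few failed logins may be a forgotten password. The alert comes from combining the two sources.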
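
Answer 17 notes that a vulnerability scanner fingerprints a system by analyzing packets the system sends. One well-known and deliberately simplified heuristic examines the IP time-to-live (TTL) field. Operating systems start packets with different default TTLs, and each router along the path decrements the value by one. The Python sketch below assumes the commonly cited defaults of 64, 128, and 255; these are typical values, not guarantees, and tools such as Nmap examine many more packet characteristics before guessing an operating system.

```python
# Commonly cited default initial TTL values. This table is a simplification:
# administrators can change defaults, and real tools use many more features.
INITIAL_TTLS = [
    (64, "Linux or other Unix-like system"),
    (128, "Windows"),
    (255, "Often a router or other network device"),
]


def guess_os_from_ttl(observed_ttl: int) -> tuple[str, int]:
    """Guess the sender's OS family and hop count from a received packet's TTL."""
    if not 1 <= observed_ttl <= 255:
        raise ValueError("TTL must be between 1 and 255")
    for initial, label in INITIAL_TTLS:
        # Each router decrements the TTL by one, so the sender most likely used
        # the smallest common initial value at or above the observed TTL.
        if observed_ttl <= initial:
            return label, initial - observed_ttl
    raise AssertionError("unreachable: 255 covers every valid TTL")
```

For example, a packet arriving with a TTL of 118 most likely started at 128 and crossed 10 routers, suggesting a Windows sender.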
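
Answer 18 distinguishes false positives from false negatives. The Python sketch below assumes you have the scanner's list of patches it reports as missing on a host and a manually verified list of patches that are actually missing; the patch identifiers are hypothetical. Set operations then sort each finding into the right category.

```python
def reconcile(reported_missing: set[str], verified_missing: set[str]) -> dict[str, set[str]]:
    """Compare scanner findings with manually verified results for one host."""
    return {
        # Reported missing and verified missing: a real finding.
        "true_positives": reported_missing & verified_missing,
        # Reported missing but verified installed: a false positive.
        "false_positives": reported_missing - verified_missing,
        # Not reported, yet verified missing: a false negative.
        "false_negatives": verified_missing - reported_missing,
    }


if __name__ == "__main__":
    # Hypothetical patch IDs. PATCH-001 matches the question's scenario: the
    # scanner reports it missing, but you verified that it is installed.
    result = reconcile({"PATCH-001", "PATCH-002"}, {"PATCH-002", "PATCH-003"})
    for category, patches in result.items():
        print(category, sorted(patches))
```

False negatives are usually the more dangerous category, because nothing prompts anyone to investigate a vulnerability the tool never reported.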