HOUR 11

Selecting Network Hardware and Software

What You’ll Learn in This Hour:

• Key factors in selecting network hardware and software

• Additional guidance on servers, routers, and NOSs

• Ethernet considerations

• Pros and cons of client/server and peer-to-peer networks

• The network “bottleneck”

As you see from its title, this hour is devoted to selecting the hardware and software for your up-and-coming network. Of course, in many situations (as emphasized in Hour 10, “Designing a Network”), an enterprise will already have one or more networks in place. Nowadays, it’s rare to come across a company meandering along in a nonnetworking environment. So, be aware that this hour pertains to creating new networks as well as upgrading existing networks.

The hour is also designed to tie together many of the general concepts about the subject that were set forth in previous hours. It also includes guidance on dealing with vendors. Specifics on this latter subject, such as creating detailed vendor evaluation guidelines and writing legally binding contracts, are beyond the scope of this book.

We’ve discussed issues relating to planning and documenting the new or enhanced network. In this hour, we look at making decisions related to the physical structure of the network and the client/server or peer-to-peer operating systems (OSs) that will be deployed on the network. Also in this hour, the pros and cons of these two approaches are discussed in more detail.

Evaluating the Server Hardware

For server hardware, you have many vendors and many vendor models from which to choose. Vendors also offer a variety of additional features to improve the performance of their products. As introduced in Hour 4, “Computer Concepts,” the processor, motherboard, memory, hard drives, and interface cards are important components to evaluate during the hardware selection process. Other key factors are the nature of the server backup and the media to connect servers to other machines.

The server (or servers) will likely play a key role in your network. Make sure it’s located in a well-ventilated and physically secure room. As a rule, it’s recommended you purchase a server with two or more processors. This approach enables the server to handle more simultaneous tasks from clients, resulting in faster response time and increased throughput. Most vendors offer products with two processors. Some support a concept called hyperthreading, which makes a single physical processor appear to the server OS as two logical processors.

For some servers, a low-end processor will operate at 300 megahertz (MHz)—not very fast. You and your users will be well served if you do not lowball this part of the hardware selection. My recommendation is to purchase the highest speed and largest number of processors that the company’s budget will allow.

A low-end server does not need much RAM: only 256 megabytes (MB). However, as with the processor decision, it is recommended you configure the server with a lot of memory. How much? As much as you can afford, but with a caveat. Memory is easy to add later. So, perhaps you start with a few gigabytes (GB) and then add more later, if needed. But make sure your OS is capable of addressing the additional memory. For example, a 32-bit desktop edition of Windows cannot make full use of 4GB; a 64-bit edition such as Vista 64 is needed to address the fourth gigabyte and beyond.
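The addressing caveat comes down to simple arithmetic. The following is a minimal sketch, assuming a flat address space in which every byte of memory needs its own address; the function name is hypothetical, and the calculation ignores real-world details such as address ranges reserved for devices, processor extensions, and limits imposed by a particular OS edition.

```python
GIB = 2 ** 30  # bytes in one gigabyte of RAM (binary gigabyte)


def max_addressable_gib(address_bits: int) -> float:
    """Upper bound on byte-addressable memory for a flat address space.

    Illustrative only: actual limits depend on the processor, the
    motherboard, and the OS edition, and are usually lower.
    """
    if address_bits <= 0:
        raise ValueError("address_bits must be positive")
    return (2 ** address_bits) / GIB


print(max_addressable_gib(32))  # 4.0
```

With 32 address bits, the ceiling is 4GB before any memory is set aside for hardware, which is why adding RAM beyond that point calls for a 64-bit OS.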

Modern server-based disk drives come in capacities ranging from a modest 40GB to terabyte (TB) capacities. However, servers support multiple hard drives, so you do not need to purchase the biggest drive. You can add more hard drive capacity later.

The issue of power consumption should also be considered when evaluating potential disk systems. Ask the vendor to furnish data on power usage; ask for an explanation of the vendor’s design rationale for the efficient use of power for the drives.

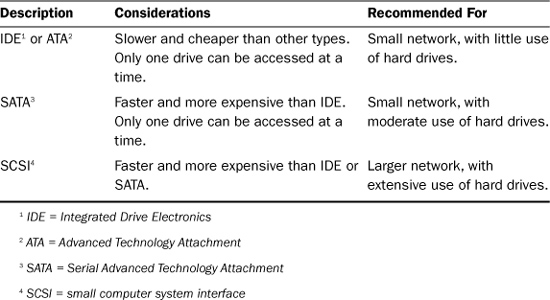

Make certain your server selection includes sufficient network interface cards (NICs), associated connectors, and disk drives for proper backup of the company’s data. Table 11.1 is a summary with associated considerations and recommendations, courtesy of Microsoft.

TABLE 11.1 Comparison of Drives

Don’t forget the redundant array of inexpensive disks (RAID) discussion from Hour 5, “Network Concepts.” With this idea in mind, it is recommended you purchase more than one disk drive and implement at least RAID level 1 or 5.
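When you budget for drives, remember that RAID trades raw capacity for protection. The sketch below estimates usable capacity under the common textbook rules: RAID 1 mirrors the data, so a mirror set stores one drive's worth, and RAID 5 spends the equivalent of one drive on parity. The function name and the assumption that all drives are the same size are for illustration only; check the figures for your own controller with the vendor.

```python
def usable_capacity_gb(level: int, drives: int, drive_gb: float) -> float:
    """Estimate usable capacity of an array of identical drives.

    Assumes a single mirror set for RAID 1 and single parity for RAID 5.
    """
    if level == 1:
        if drives < 2:
            raise ValueError("RAID 1 needs at least two drives")
        return drive_gb  # every drive holds a full copy of the data
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return (drives - 1) * drive_gb  # one drive's worth goes to parity
    raise ValueError("only RAID levels 1 and 5 are covered in this sketch")


print(usable_capacity_gb(1, 2, 500))  # 500
print(usable_capacity_gb(5, 4, 500))  # 1500
```

In other words, two 500GB drives in RAID 1 give you 500GB of protected storage, whereas four of the same drives in RAID 5 give you 1,500GB.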

Evaluating the “Interworking” Hardware

You will need to select one or more devices to tie together the servers and computers to allow the machines to “interwork.” In previous hours, we called these machines by various names. For this discussion, we’ll be more specific. But before explaining them, bear in mind that the previous discussion about server hardware components generally pertains to interworking hardware as well. That is, processor, memory, and disk capacity are as important for these machines as they are for servers. Here’s a summary of these devices:

• Hub—This device sends data from one computer to all other computers on the network. It’s a low-cost, low-function machine that usually operates at Layer 1 of the OSI model and ties together the computers attached to it through multiple (twisted pair or optical) ports. It acts as a repeater for a signal passed from, say, port 1 (through the repeater) to port 2. For Ethernet hubs, it participates in the collision detection operations, which can affect response time and throughput. In addition, the need for hubs to detect collisions restricts the size of the network and the number of hubs that can be installed.

Don’t overlook hubs; they’re inexpensive and easy to install and maintain. However, they are declining in use.

• Switch—This term is one of the more confusing buzzwords in the industry. Because of its early use in telephone networks (the famous circuit switch) and packet networks (the famous X.25 packet switch), it has evolved through many years to have more than one meaning. First, it (now) is usually associated with a device that relays traffic by using a Layer 2 address, for example an Ethernet MAC address or an ATM/MPLS label. Second, it usually contains software that supports the building of routing tables, a technique that improves traffic flow in a network and is a substantial improvement over a hub.

• Bridge—To confuse matters, the bridge performs the same operations as those just described for a switch. However, be careful here. The term bridge is often associated with a LAN device that uses Ethernet MAC addresses for routing. Thus, there’s no such thing as an ATM or MPLS bridge. They’re called Asynchronous Transfer Mode (ATM) or Multiprotocol Label Switching (MPLS) switches. (On behalf of the data communications industry, I offer apologies for these confusing terms; thanks for bearing with me.)

• Router—A router, which was introduced in Hour 5, is a more powerful device than a hub, switch, or bridge. Its principal operations are at OSI Layer 3 with IP addresses. High-end routers can also be configured as firewalls and offer extensive network management features. Some can be configured for their ports to operate as Layer 2 bridges. They are flexible and powerful machines.

• Wireless access point (WAP)—The WAP (introduced in Hour 7, “Mobile Wireless Networking”) interworks the wired and wireless worlds. We learned that most WAPs operate with the IEEE 802.11 specifications. Many routers have the capacity to act as WAPs.

So, which do you choose? The decision depends on the size and geographical range of your user base. That stated, as a general practice, I opt for routers with WAP interfaces. You can purchase them for a modest price for a home network at your local office store or acquire them for a large enterprise network with a variety of capabilities.
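To make the difference between a hub and a switch (or bridge) concrete, here is a simplified sketch. The class and method names are hypothetical, and the model leaves out a great deal that real equipment does, such as aging out table entries, handling broadcast addresses, and detecting collisions. It shows only the core idea: a hub repeats traffic to every other port, whereas a switch learns which address lives on which port and then sends traffic only where it needs to go.

```python
class Hub:
    """Layer 1 repeater: every frame goes out of every other port."""

    def __init__(self, ports: int):
        self.ports = ports

    def forward(self, in_port: int, src: str, dst: str) -> list:
        return [p for p in range(self.ports) if p != in_port]


class LearningSwitch:
    """Layer 2 device that learns source addresses per port."""

    def __init__(self, ports: int):
        self.ports = ports
        self.table = {}  # MAC address -> port where it was last seen

    def forward(self, in_port: int, src: str, dst: str) -> list:
        self.table[src] = in_port  # learn where the sender lives
        if dst in self.table:
            out_port = self.table[dst]
            # Nothing to do if the destination is on the arrival port.
            return [] if out_port == in_port else [out_port]
        # Unknown destination: behave like a hub for this one frame.
        return [p for p in range(self.ports) if p != in_port]


switch = LearningSwitch(4)
print(switch.forward(0, "aa", "bb"))  # [1, 2, 3]
print(switch.forward(1, "bb", "aa"))  # [0]
print(switch.forward(0, "aa", "bb"))  # [1]
```

After the first exchange, traffic between the two machines no longer reaches ports 2 and 3, which is the improvement in traffic flow that a switch offers over a hub.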

Hardware Selection Considerations for Ethernet Networks

It’s highly probable that you and your design team will be making decisions about local Ethernets.

Ethernet is the easiest network architecture in terms of shopping around for wiring, network cards, switches, routers, and other equipment. Even the most bare-bones computer equipment store will provide connectivity devices and Ethernet NICs.

As mentioned in Hour 3, “Getting Data from Here to There: How Networking Works,” Ethernet provides different flavors such as 10BASE-2, 10BASE-5, and 10BASE-T. The 10BASE-2 and 10BASE-5 implementations are old technologies, and you will have to search around to find one of these Ethernet types that’s still up and running. Although 10BASE-T has been the Ethernet standard for several years, nearly all new deployments of Ethernet are 100BASE-T or 1000BASE-T.

100BASE-TX and 1000BASE-T are in many products. These standards use twisted pair cables with standard (RJ-45) connectors. They run at 100 megabits per second (Mbps) and 1 gigabit per second (Gbps), respectively. However, each version has become steadily more selective about the cable it runs on, and some installers have avoided 1000BASE-T for everything except short connections to servers.

Given their ubiquity, Ethernet 100BASE-T and 1000BASE-T are the clear choices for the majority of networks. Both are inexpensive and integrated into almost every new network device, and most network equipment manufacturers offer them. They’re also standardized, which helps ensure interoperability between equipment made by different vendors. You probably already know that Internet service providers (ISPs) use Ethernet connections and NICs to connect home and small office users to digital subscriber line (DSL) and cable modem networks for Internet access.

Working with Ethernet 100BASE-T

For the sake of discussion, let’s say you and your team opt for Ethernet LANs (specifically, 100BASE-T). 100BASE-T is versatile, widely available, and scales easily. It’s simple to install and maintain, and it’s reliable. Many manufacturers make network cards for everything from Intel-compatible desktop systems to Macintoshes to laptops. You can find a 100BASE-T Ethernet card for almost any system.

Additionally, 100BASE-T cabling offers an upgrade path. If you use the proper type of wire or cabling (called 568A or B), the network can later support 1000BASE-T. This upgrade path is part of what makes 100BASE-T a solid choice for a network topology. And because you can chain 100BASE-T hubs together (if you use hubs), you can increase the size of a network with relative ease.

Working with Ethernet 1000BASE-T

If you’re concerned a 100Mbps LAN will not meet your capacity needs, don’t hesitate to examine 1000BASE-T. In the past few years, the marketplace and product lines for Gigabit Ethernet have matured. Gigabit Ethernet is now widely available in off-the-shelf products.

If your installation already has 100BASE-T hardware and software and you want to begin an upgrade to 1000BASE-T, make sure your evaluations include assessments of the gigabit products being backward compatible with the megabit products. If they are, your network will still be restricted to the lower rate, but you’ll be on a path to migrate to a higher-capacity technology.
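The effect of mixing the two speeds can be estimated with a little arithmetic. The sketch below is a rough illustration: the function names are hypothetical, a megabyte is taken as one million bytes, and protocol overhead, disk speed, and competing traffic are all ignored, so real transfers will take longer. It assumes that a link between two devices runs at the highest rate both ends support.

```python
def link_rate_mbps(device_a_mbps: int, device_b_mbps: int) -> int:
    """A link can run no faster than its slower end."""
    return min(device_a_mbps, device_b_mbps)


def transfer_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Best-case time to move a file, ignoring all overhead."""
    megabits = size_megabytes * 8  # eight bits per byte
    return megabits / rate_mbps


# A gigabit server port connected to a 100Mbps device.
rate = link_rate_mbps(1000, 100)
print(rate)                         # 100
print(transfer_seconds(700, rate))  # 56.0
```

Under these assumptions, a 700MB file that needs about 56 seconds on the mixed link would need roughly a tenth of that once both ends run at gigabit speed.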

Implementation Ideas for Megabit Ethernet and Gigabit Ethernet

100BASE-T and 1000BASE-T are used in network peripherals such as printers and file servers. Almost all these devices have a 100BASE-T port, and many of them now support a gigabit port. By the time this book is published, I suspect Gigabit Ethernet will be as common as Megabit Ethernet.

Here’s a recipe for the ingredients necessary for a 100BASE-T or 1000BASE-T network:

• Two or more computers. Almost all computers can use some form of Ethernet, from laptops to desktop PCs to UNIX systems and Macs.

• One Ethernet network card per computer.

• One hub/bridge/router with enough ports to connect all your computers, or the use of Wi-Fi if machines are confined to a local area.

• Enough patch cords to connect each network card’s RJ-45 socket to the router’s RJ-45 socket. A patch cord is an eight-conductor cord (meaning four pairs of wires) with an RJ-45 jack at both ends. (It looks like a really fat phone cord.) Patch cords are available at computer stores for a couple dollars per cable.

100BASE-T and 1000BASE-T are star topologies, which means that everything has to connect to a concentrator (or, more correctly, a hub, bridge, or router).
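The recipe above translates directly into a shopping list. Here is a minimal sketch for a wired star network; the function name is hypothetical, and the allowance of two spare ports for growth is an assumption of this example rather than a rule.

```python
def star_network_parts(computers: int, spare_ports: int = 2) -> dict:
    """Count the parts needed to wire computers into one central device."""
    if computers < 2:
        raise ValueError("a network needs at least two computers")
    return {
        "network_cards": computers,  # one Ethernet card per computer
        "patch_cords": computers,    # one cord from each card to the center
        "ports_needed": computers + spare_ports,  # room to grow
    }


print(star_network_parts(6))
# {'network_cards': 6, 'patch_cords': 6, 'ports_needed': 8}
```

For six computers, you would look for a hub, bridge, or router with at least eight ports.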

Selecting the Network Type: Client/Server or Peer to Peer

Earlier in this book, we discussed the pros and cons of client/server and peer-to-peer networking strategies. In the next section, you’ll have the opportunity to see how you can justify your decision to go with client/server or peer to peer. Earlier, I assumed you and your team had chosen the client/server technology, but it’s a good idea to lay out in more detail how these systems operate and offer some points for your consideration in making selection choices.

As discussed, two important issues relating to choosing the type of network are scale and cost. A small office with little or no expansion will not need to deploy an expensive network server and run a Network Operating System (NOS) so that only a few users can share a few files and a printer. With this thought in mind, let’s discuss client/server or server-based networking. We then take a look at peer-to-peer networking.

Client/Server Networking

Client/server networking entails two basic operations that are provided by a centralized server: authentication and support services. Workstations on the network don’t require services from other workstations on the network. The service architecture of the network resides on one or more redundant, protected, regularly maintained, and backed up servers. These servers are dedicated to specific tasks: file services, Internet/wide area network (WAN) connectivity, remote access, authentication, backend distributed applications, and so forth.

In other words, workstations connected to a “pure” client/server network see only servers; they never see one another. A client/server network is a one-to-many scheme, with the server being the one and the workstations being the many. This architecture is the model used by large commercial websites, such as Amazon. The client is a small graphical front end that’s delivered fresh from the server each time it’s opened, and a large server architecture at the back end handles ordering, billing, and authentication. No user of Amazon knows whether another user is currently online; there’s no interaction between users, just between the servers and the client system.

The client/server model is useful for large businesses that have to manage their users’ computer resources efficiently. In a pure client/server environment, a great deal of the software that clients use at their workstations is stored on a server hard drive rather than on users’ own hard drives. In this configuration, if a user’s workstation fails, it is relatively easy to get the user back online quickly by simply replacing the computer on the desktop. When the user logs back in to the network, she’ll have access to the applications needed to work.

The TCP/IP-based technology of the Internet has changed the accepted definition of client/server somewhat, with the focus being on distributed applications, where a “thin” client (such as a web page application running in a browser) works in tandem with a much larger server. The advantages of this model stem from the application’s capability to run in a browser. Because browsers are universal—that is, they can run on Windows machines, Macs, UNIX boxes, and other systems—applications can be distributed quickly and effectively. Only the copy at the server needs to be changed for a web-enabled application, because the changes will be distributed when users open the new page.

A client/server architecture is appropriate if one or more of the following conditions apply to your situation:

• Your network user population is large, perhaps more than 20 computers. On a larger network, it might not be wise to leave resources entirely decentralized as you would on a peer-to-peer network, simply because there’s no way to control the data and software on the machines. However, the size of the company’s user base shouldn’t be the only criterion. Indeed, size might be irrelevant, if the users are working on independent projects with little or no data sharing.

• Your network requires robust security. Using secure firewalls, gateways, and secured servers ensures that access to network resources is controlled. However, and once again, installing a firewall in the router that connects your LAN to the Internet may be all you need for security. In this situation, your company doesn’t need a dedicated security server. Even more, the individual workstations will be running their own security software and performing virus scans periodically.

• Your network requires that the company’s data be free from the threat of accidental loss. This means taking data stored on a server and backing it up from a central location.

• Your network needs users to focus on server-based applications rather than on workstation-based applications and resources. The users access data via client/server-based technologies such as large databases, groupware software, and other applications that run from a server.

Peer-to-Peer Networking

Peer-to-peer networking is based on the idea that a computer that connects to a network should be capable of sharing its resources with any other computer. In contrast to client/server, peer-to-peer networking is a many-to-many scheme.
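One way to see what "many-to-many" implies is to count the relationships involved. The sketch below is illustrative, with hypothetical function names; it assumes a single server in the client/server case and that any two peers might share resources with each other in the peer-to-peer case.

```python
def client_server_links(workstations: int) -> int:
    """One-to-many: each workstation deals only with the server."""
    return workstations


def peer_pairs(computers: int) -> int:
    """Many-to-many: every pair of computers is a possible sharing relationship."""
    return computers * (computers - 1) // 2


for n in (5, 10, 20):
    print(n, client_server_links(n), peer_pairs(n))
# 5 5 10
# 10 10 45
# 20 20 190
```

The number of possible peer relationships grows much faster than the number of computers, which helps explain why peer-to-peer networks are best kept small.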

The decentralized nature of peer-to-peer networking means the system is inherently less organized. Knowing this, also note that the recent computer OS offering from Microsoft (Windows Vista) has powerful peer-to-peer networking functions.

Peer-to-peer networks that grow to more than a few computers tend to interact more by convenience or even chance than by design. As examples, Windows Vista and Windows XP provide a Networking Wizard that automatically sets up basic network configurations; it’s a fine service, especially for network neophytes.

Peer-to-peer networking is similar to the Internet: There are no hard-and-fast rules about basic user-to-user interactions. With a client/server model, you can establish rules for user-to-user interactions through the server(s).

Peer-to-peer networking is appropriate for your network if the following conditions apply:

• Your network is relatively small (fewer than 10 computers, although depending on the number of users accessing any one computer for resources, you could get by with 15–20).

• Your network doesn’t require robust security regarding access to resources.

• Your network does not require the company data be free from the threat of accidental loss.

• Your network needs users to focus on workstation-based applications rather than on server-based applications and resources. This means that users run applications such as productivity software (Microsoft Office, for example) installed on each computer. Each user works in a closed system until she has to access data on another peer-to-peer computer or a shared printer.

Most of the time, home networks can use peer-to-peer networking without a problem. The only piece of the network that you likely need to centralize is Internet access, and you can easily obtain this operation via a combination device that serves as a hub/switch/router, a firewall, and a DSL modem.
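The two checklists in this hour can be summarized as a simple decision aid. The following sketch is a rough guide, not a substitute for your design team's judgment: the class, field, and function names are hypothetical, and the threshold of 20 computers comes from the "perhaps more than 20" guideline given earlier. Remember that size alone might be irrelevant if your users share little or no data.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    computers: int
    needs_robust_security: bool
    needs_central_backup: bool
    server_based_apps: bool


def suggest_network_type(req: Requirements, size_threshold: int = 20):
    """Return a suggested network type and the reasons behind it."""
    reasons = []
    if req.computers > size_threshold:
        reasons.append("large user population")
    if req.needs_robust_security:
        reasons.append("robust security required")
    if req.needs_central_backup:
        reasons.append("data must be protected from accidental loss")
    if req.server_based_apps:
        reasons.append("users rely on server-based applications")
    # One or more conditions point toward client/server.
    if reasons:
        return "client/server", reasons
    return "peer-to-peer", reasons


small_office = Requirements(5, False, False, False)
print(suggest_network_type(small_office))  # ('peer-to-peer', [])
```

A small office with five computers and none of the listed requirements comes out as a peer-to-peer candidate; setting any one of the flags tips the suggestion toward client/server.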

Now that we’ve looked at client/server and peer-to-peer networking, let’s look at client OSs—specifically those that provide peer-to-peer services—and then we can tackle NOSs. This latter subject is highlighted in this hour, which acts as an introduction to more detailed discussions in Hours 16 and 17.

Peer-to-Peer OSs

In a peer-to-peer network, there’s no NOS per se. Instead, each user’s workstation has desktop OS software that can share resources with other computers as desired. Typically, OSs include the capability to configure a protocol and share resources. Most peer-to-peer OSs provide a relatively limited range of sharable devices, although file sharing (or shared disk space) and networked printing are standard features.

Following is a summary of client OSs that provide peer-to-peer services:

• Microsoft Windows—Microsoft Windows has provided peer-to-peer networking capabilities since Microsoft Windows for Workgroups 3.11. Each of the subsequent versions of Windows has provided increasingly more powerful peer-to-peer capabilities. Microsoft Windows (both the Home and Professional versions) even provides a Network Setup Wizard that makes it easy to configure a Windows workgroup (a workgroup being a peer-to-peer network). We’ll look more closely at Windows peer-to-peer networking in the next section.

• Linux—Linux has become a flexible and cost-effective OS for both the home and workplace. Numerous Linux distributions are available that vary in their degree of user friendliness. Linux provides several ways to share files and other resources such as printers. It includes the Network File System (NFS), where a Linux computer can act as both a server and a client.

• Macintosh OSX—Although our discussion has centered around Intel-based computers, we should mention the peer-to-peer networking capabilities of the Apple Macintosh and its various offspring such as the Mac PowerBook. Peer-to-peer networking has been part of the Mac OS since its beginning.

Windows is the most dominant desktop OS in terms of installations. Let’s take a closer look at peer-to-peer networking with the most widely used version of Windows, Microsoft Windows XP. This discussion includes configuration examples, which should help you in making selection decisions about using them.

Peer-to-Peer Networking with Microsoft Windows

As mentioned, Windows for Workgroups was the original “do-it-yourself” network for Intel-compatible personal computers. It was designed around Microsoft’s MS-DOS OS and Windows shell. A number of different versions of Windows have come and gone; we’ve seen Windows 95, Windows 98, Windows Millennium Edition (Windows Me) for the home user, and Windows NT and Windows 2000 for business network users. The most widely used version of Windows, Windows XP, comes in two versions: Home and Professional.1

1 Windows Vista is Microsoft’s latest OS product, which I’ve mentioned several times thus far. To date, it hasn’t cornered the market and has met with increasing skepticism, including mine. Thus, we concentrate on Windows XP.

The Home version is designed for the home or small office user who will work in a Microsoft workgroup (meaning a peer-to-peer network). Windows XP Professional provides additional features and is designed to serve as a client on a server-based network. Don’t buy the Home edition if you’re going to implement a client/server network.

Microsoft’s peer-to-peer networking products are based on the idea of a workgroup, or a set of computers that belong to a common group and share resources among themselves. Microsoft peer-to-peer networking is quite versatile and can include computers running any version of Microsoft Windows.

Additionally, on a given physical network, multiple workgroups can coexist. For example, if you have three salespeople, they can all be members of the SALES workgroup; the members of your accounting department can be members of the ACCOUNTS workgroup. Of course, there’s a common administrative user with accounts on all machines in all workgroups, so central administration is possible to a limited extent, but it isn’t an efficient administrative solution.

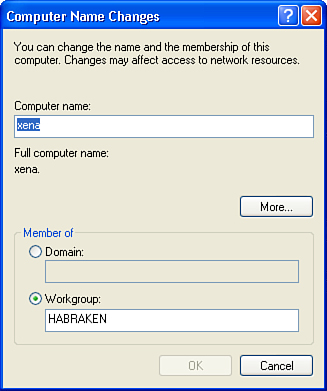

Windows peer-to-peer networking is straightforward. Computers running Windows XP (or earlier versions of Windows) are configured so that they’re in the same workgroup. You can do this in the Windows XP Computer Name Changes dialog box (shown in Figure 11.1), which is reached via the System’s Properties dialog box. (Right-click on My Computer and select Properties.)

FIGURE 11.1 Configure a Windows XP computer to be part of a workgroup

The alternative to configuring the workgroup manually is to use the Network Setup Wizard.

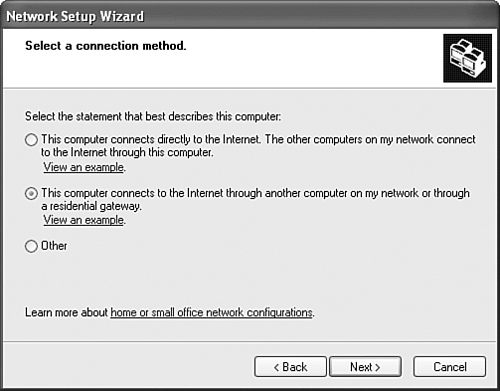

The wizard walks you through the steps of configuring the workgroup and can even generate a file that you can use to add other Windows computers to the workgroup—even computers running earlier versions of Windows. You start the wizard via the Windows Control Panel. Select the Network and Internet Connection icon in the Control Panel, and then select Set Up or Change Your Home or Small Office Network. Figure 11.2 shows the wizard screen that allows you to select a connection method.

FIGURE 11.2 The Network Setup Wizard walks you through the steps of creating the workgroup.

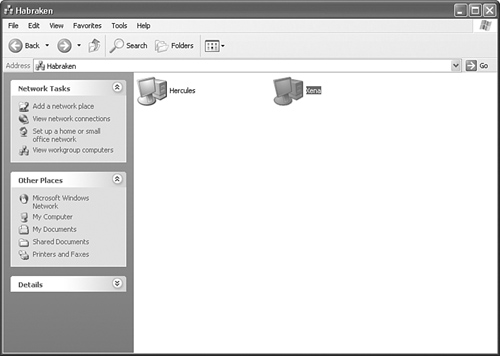

After the workgroup is up and running, any user in the workgroup can browse the workgroup for shared folders and printers. You can map folders that users access regularly to a member computer as a network drive. Workgroup members can view workgroup computers and their shared resources using the My Network Places window. Figure 11.3 shows the two members of a workgroup named Habraken.

FIGURE 11.3 Workgroup members can browse the workgroup member computers.

Workgroups are fine when users can collaborate in a friendly atmosphere and are computer savvy enough to ensure that important data is used appropriately (and backed up). Because each resource (such as folders) can require a separate password, any more than a few users can create an environment of confusion. If your company has more than 10 users or the company’s automated resources are valuable and sensitive, you should examine the option of deploying a server running a NOS, which is our next topic.

Evaluating NOSs

This section continues the introductory material on the subject that was included in Hour 4. If you’re building a network with a sizable chunk of centralized services, one of the following client/server NOSs is for you. Whereas peer-to-peer networks are similar to a cooperative group of people with diverse functions but no single leader, a client/server network is similar to a hierarchy—the server is the leader, the one with all the knowledge and the resources.

The following sections provide more details of NOSs, which are used in client/server networks. As we describe each of them, keep in mind that you and your design team must make a decision about which one will be installed in your network...assuming you opt for a client/server environment. We’ll look at the following:

• Novell NetWare

• Microsoft Windows Server

• Linux/UNIX

Novell NetWare

You and your team will face a problem during your assessment of Novell’s NOS. It’s a powerful software platform, but its market share is declining. How you react to this reality must be based on your team’s view of NetWare and your company’s view of Novell. Our focus here is on the technical aspects of the situation. First, here’s a bit of history.

In the early days of PC internetworking, Ray Noorda’s Novell invented NetWare. It came as a balm to early PC-networking system administrators who were used to dealing with the innumerable networking schemes that appeared in the early to mid-1980s. NetWare provided reliable, secure, and relatively simple networking. In the early years, the business world snapped up as many copies as Novell could turn out.

Over the years, NetWare matured. Its focus broadened beyond the local LAN into WAN configurations. With the advent of NetWare 5 and NetWare Directory Services (NDS), Novell had a product that enabled a global network to provide its users with the same resources, no matter where on the network those users logged in.

But in 1994 and 1995, two things happened that made Novell stumble. The first was the introduction of Microsoft’s Windows NT, which Novell failed to view as serious competition. Microsoft’s aggressive marketing and the ease of use of Windows NT quickly made inroads into Novell’s market share.

Novell’s second slip was in failing to realize that the rise in public consciousness of the Internet fundamentally changed the playing field for NOS manufacturers. Novell had used its Internetwork Packet Exchange (IPX) protocol for close to 15 years; it saw no reason to change.

Novell has made up for a number of earlier missteps in relation to NetWare. The NOS now embraces Transmission Control Protocol/Internet Protocol (TCP/IP) as its default network protocol. A recent version of Novell’s NOS, NetWare 6.5, also integrates several open source services from the Linux platform, including Apache Web Server, which is one of the most popular web server platforms in use.

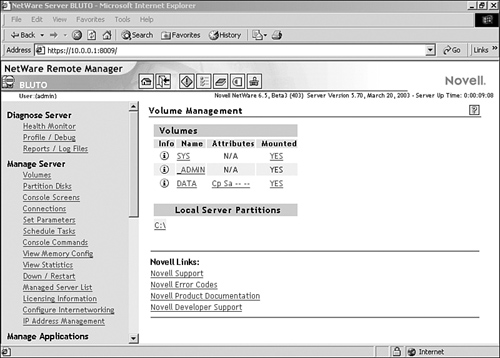

Administrative tools for managing NetWare were also rather meager in earlier versions of NetWare. However, NetWare 6.5 provides many new tools, including the NetWare Remote Manager, which you can use to manage network volumes and monitor server settings. Remote Manager is accessed using a web browser, which makes it easy for an administrator to open it from any workstation on the network. Figure 11.4 shows the NetWare Remote Manager in a web browser window.

FIGURE 11.4 The NetWare Remote Manager

In 2003, Novell announced Open Enterprise Server (OES) and released it in March 2005. OES consists of a set of applications (such as the eDirectory) that can run over a Linux or a NetWare platform. Some network experts state Novell is shifting away from NetWare and toward Linux. As we learned in Hour 5, the company has assured its customers that it will support whatever NOS they want.

NetWare might well be appropriate for your company’s network. It’s fast, efficient, and easy to install and configure. The Novell Directory Service hierarchy for network objects (such as users and groups) has been upgraded to the Novell eDirectory to provide an easy-to-use hierarchical system for tracking servers and other objects on the network. NetWare can also accommodate situations in which your network spans multiple LANs (across WANs). In addition, it provides the scalability expected from a well-performing NOS platform.

With recent changes to NetWare’s software and changes in NetWare licensing structure, it’s certainly worth your while to take a serious look at NetWare when you’re working through the process of determining the best NOS for your network.

Microsoft Windows Server

Windows NT Server, the first edition of Microsoft’s popular server software, emerged out of the breakup of IBM and Microsoft’s shared OS/2 initiative. Although Windows Server offers a fairly simple graphical user interface (GUI), it’s a true multitasking, multithreaded NOS.

Since Windows NT was introduced in 1993–1994, Microsoft has weathered a storm of criticism regarding its reliability, scalability, and robustness. To be fair, some of this criticism has been deserved because some releases of the Windows NOS have had a number of flaws. However, Microsoft has persevered and continued to refine its NOS as it passes through product cycles (NT Server 3.5 to NT Server 4 to Windows 2000 Server to Windows Server 2003), up to and including the current iteration, Windows Server 2008.

The most significant change in the Microsoft server products was the upgrade of the Microsoft NOS from NT Server 4 to Windows 2000 Server. Microsoft’s flat domain model was replaced by a hierarchical directory service called Active Directory. Active Directory holds all the objects that exist on the network, including domains, servers, users, and groups. We discuss how the Microsoft domain model works in comparison to Active Directory in Hour 16, “Microsoft Networking.” Active Directory allowed Microsoft to compete on a level playing field with NetWare’s NDS (now eDirectory) and Sun’s Network Information Service.

UNIX and Linux

Unlike NetWare or Windows NT, UNIX is not a monolithic OS owned by a single corporation. Instead, it’s represented by a plethora of manufacturers with only a few clear standouts. The most common UNIX systems are Sun Microsystems’ Solaris, IBM’s AIX, and Hewlett-Packard’s HP-UX.

In the PC-hardware world, Linux, a UNIX clone, has trumped various UNIX systems, including commercial variants such as Santa Cruz Operation’s SCO UNIX, Novell’s UnixWare, and Sun’s Solaris for Intel. Linux has also grabbed greater market share than other community-based OS developments such as BSD (Berkeley Software Distribution), OpenBSD, and FreeBSD.

UNIX and UNIX-like OSs come in many flavors, and some features and commands vary widely between versions. In the end, though, UNIX remains the most widely used high-end server OS in the world.

However, the UNIX world is fragmented by a host of issues that derive from UNIX’s basic design: UNIX is open-ended and is available for almost any hardware platform. UNIX has existed for more than 30 years, and its design has been optimized, revised, and improved to the point at which it’s quite reliable.

Unfortunately, the availability of UNIX across many different platforms has led to one significant problem that blocks its widespread adoption: Software written for one version of UNIX usually doesn’t run on other versions. This lack of compatibility has led to UNIX receiving a dwindling share of the server market except at the very high end where absolute reliability is a must. Linux and the Open Source/GNU movement have ameliorated many of the incompatibility issues by ensuring that the source code for most Linux-based software is available. This means that with a copy of Linux and a C-language compiler, you can compile (that is, translate from source code to machine instructions) a variety of software.

UNIX has a reputation for being difficult to master. It’s complex; there’s no doubt about that. But after you assimilate the basics (which can prove daunting), UNIX’s raw power and versatility make it an attractive server platform.

Interestingly, Linux, which is essentially a UNIX clone, has begun to make a great deal of headway in the server and workstation market. It’s begun to put a dent in Microsoft’s market share both in the server and desktop OS categories.

In spite of its complexity, however, any version of UNIX makes an efficient file, print, or application server. Because of 30 years of refinement, the reliability of a UNIX system is usually a step above that of other platforms. UNIX uses the TCP/IP networking protocol stack natively; TCP/IP spread widely through its inclusion in Berkeley UNIX, and the “port-and-socket” interface that applications use to reach TCP/IP has its fullest expression on UNIX systems.
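If the “port-and-socket” idea seems abstract, the following short Python sketch may help. It is only an illustration, not part of any NOS: the function names `echo_once` and `loopback_echo` are made up for this example, and the code assumes the machine allows TCP connections to its own loopback address (127.0.0.1). A tiny server socket listens on a port chosen by the OS, a client socket connects to that address and port, and the server echoes back whatever it receives.

```python
import socket
import threading


def echo_once(server_sock):
    """Accept a single connection and send back whatever arrives."""
    conn, _addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data)


def loopback_echo(message):
    """Send a short message to a local echo server and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Port 0 asks the OS to pick any free port on the loopback address.
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        host, port = server.getsockname()

        # Run the server side in a background thread for this one exchange.
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()

        # The client identifies the server by its IP address and port number.
        with socket.create_connection((host, port), timeout=5) as client:
            client.sendall(message)
            reply = client.recv(1024)

        worker.join()
    return reply


if __name__ == "__main__":
    print(loopback_echo(b"hello, network"))
```

The address-plus-port pair is what lets one server machine run file, print, and application services side by side: each service listens on its own port. The same calls are available on Windows and other platforms, which reflects how widely the UNIX sockets model has been adopted.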

We will look more closely at the UNIX and Linux platforms in Hour 17, “UNIX and Linux Networking.” Most of our discussion will relate to Linux because its open source development makes it an inexpensive and intriguing NOS to explore. Linux has become a cost-effective and viable alternative to some of the standard NOSs, such as NetWare and Microsoft Windows Server.

The Network “Bottleneck”

For this discussion, let’s assume you and your team have decided on the following technologies for your LANs:

• Gigabit Ethernet—IEEE 1000BASE-T

• Wi-Fi—IEEE 802.11g

For your connection to the Internet, you’ve selected the following:

• DSL from the local phone company

Be aware that the connection from the Internet to your users’ computers and the network servers is only as fast as the lowest-speed link in the communications chain. Here are the three links in this chain:

• DSL—3Mbps (offerings vary)

• Ethernet—1Gbps

• Wi-Fi—54Mbps

Consequently, for a session with the Internet when, say, high-resolution video images are streaming down to a user computer, they will be “streaming” at roughly 3Mbps, the lowest bit rate in the links’ chain. The software involved in this process is quite powerful and can mitigate (to some extent) the differences in the links’ capacity. Buffering the data and using time stamps to “play out” the packets in a consistent manner are two examples of this wonderful “soft stuff” in operation. But the software can’t create extra bits to fill the Ethernet/Wi-Fi pipes.
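The arithmetic behind the bottleneck is simple enough to sketch in a few lines of Python. The link rates below come from the example in this hour; the function names and the 300-megabyte file size are assumptions chosen purely for illustration, and the calculation ignores protocol overhead and congestion, so real transfers will be somewhat slower.

```python
# Nominal link rates in megabits per second, from the example in the text.
LINKS_MBPS = {"DSL": 3, "Ethernet": 1000, "Wi-Fi": 54}


def bottleneck(links):
    """Return the (name, rate) of the slowest link in the chain."""
    name = min(links, key=links.get)
    return name, links[name]


def transfer_seconds(size_megabytes, rate_mbps):
    """Best-case time to move a file, taking 1 megabyte as 8 megabits."""
    return size_megabytes * 8 / rate_mbps


if __name__ == "__main__":
    name, rate = bottleneck(LINKS_MBPS)
    print(name, rate)                      # DSL 3
    # A hypothetical 300-megabyte video file over the end-to-end path:
    print(transfer_seconds(300, rate))     # 800.0 seconds, a bit over 13 minutes
    # The same file moved only across the Gigabit Ethernet LAN:
    print(transfer_seconds(300, LINKS_MBPS["Ethernet"]))  # 2.4 seconds
```

Notice that upgrading the Wi-Fi or Ethernet segment would not change the first result at all. Only raising the rate of the slowest link shortens the end-to-end transfer.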

I think it’s fair to say that most users in small businesses will not notice the DSL bottleneck. If it becomes a problem, and it likely will when scores, hundreds, or thousands of users are using this DSL user-network interface (UNI), you and your team must move to a higher-capacity link to the Internet. We examined the selection options in earlier hours. To review briefly:

• Leasing a DS3 (T3) link

• Determining if Wi-Fi is available as a UNI with the ISP

• Bringing Synchronous Optical Network (SONET) to your premises

Make certain your user community needs these expensive links for real-time processing. Real-time means immediate, perhaps interactive access to the data coming from the Internet or an internet. Perhaps the users can simply download the data onto hard disk and later play it back through the high-capacity LAN links.

On the other hand, for larger companies, it’s likely a conventional DSL link will not provide sufficient bandwidth for the entire user community. Advice: Make sure you have budgeted for this contingency.
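As a rough budgeting aid, you can estimate how thinly a shared Internet link is spread and how much capacity a user community might need. The Python sketch below is a back-of-the-envelope model only: the function names, the user counts, the 0.5Mbps per-user demand, and the 25 percent concurrency figure are all assumptions for illustration, so substitute measurements from your own network before making a purchase.

```python
def per_user_share_mbps(link_mbps, active_users):
    """Evenly divide the link rate among users who are active at once."""
    if active_users < 1:
        raise ValueError("active_users must be at least 1")
    return link_mbps / active_users


def required_link_mbps(total_users, per_user_mbps, concurrency):
    """Estimate needed capacity.

    concurrency is the fraction (0.0 to 1.0) of users assumed to be
    using the Internet link at the same moment.
    """
    if not 0.0 <= concurrency <= 1.0:
        raise ValueError("concurrency must be between 0.0 and 1.0")
    return total_users * per_user_mbps * concurrency


if __name__ == "__main__":
    # Twenty simultaneous users sharing the 3Mbps DSL link from the example:
    print(per_user_share_mbps(3, 20))

    # A hypothetical 200-person company, 0.5Mbps each, 25 percent active:
    print(required_link_mbps(200, 0.5, 0.25))
```

With these assumed figures, the 200-person company would need about 25Mbps, well beyond the 3Mbps DSL link but within the roughly 45Mbps that a DS3 carries. Running the numbers this way before you talk to vendors makes it easier to justify the budget line.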

A Word Regarding Network Protocols

Although selecting the appropriate NOS platform is an important task, selecting the network protocol you will use to move data on your network is just as crucial. Because most corporations, businesses, and home users want to be connected to the Internet, it has become essential for network servers and clients to be configured for TCP/IP.

As you’ve probably noticed in the discussion of NOSs provided in this hour, all the current versions of the most widely used server platforms execute TCP/IP as their default communications protocol stack. In fact, if you want to use additional network protocols, you’ll have to add them yourself. I recommend that you opt for no other protocol stack than TCP/IP.

Our discussions have dealt with ideas about “selecting” network hardware and software. Regarding the selection of so-called network protocols, you really have an easy job: Go with the TCP/IP protocol stack. In the final analysis, it’s the last remaining soldier. NetWare’s IPX, Xerox’s legendary protocol stack, AppleTalk’s Layer 3, and IBM’s SNA Layer 3 have been left in the networking dust.

Summary

In this hour, we discussed ideas for selecting network hardware and software. We highlighted Ethernet, which is the most widely deployed LAN technology. We also examined peer-to-peer versus server-based networking and discussed Microsoft’s peer-to-peer offerings. Our discussions also included an introduction to popular network server platforms. We learned about the potential bottleneck problem that might crop up on the link between your company’s network and the Internet. We also learned how to overcome this bottleneck: Pay more money for more bandwidth.

Q&A

Q. What’s the most widely used LAN network architecture?

A. Ethernet is the most widely used. Most new implementations are based on 1000BASE-T.

Q. What are typical peer-to-peer OSs?

A. Peer-to-peer OSs include Microsoft Windows XP (and earlier versions of Windows) and various distributions of Linux.

Q. What are the most common PC NOSs?

A. NOSs include NetWare, Windows Server 2003 and 2008 (and Windows 2000 Server), various UNIX platforms, as well as a number of Linux implementations.

Q. What is the de facto standard for network protocols?

A. TCP/IP and the TCP/IP-related protocols are the de facto standard.