Chapter Sampling

Hardware characteristics such as nonlinearity, response times, and susceptibility to noise can have important consequences in a data-acquisition system. They often limit performance and may require countermeasures to be implemented in software. A detailed knowledge of the transfer characteristics and temporal performance of each element of the data acquisition and control (DA&C) system is a prerequisite for writing reliable interface software. The purpose of this chapter is to draw your attention to those attributes of sensors, actuators, signal conditioning, and digitization circuitry that have a direct bearing on software design. While precise details are generally to be found in manufacturers' literature, the material presented in the following sections highlights some of the fundamental considerations involved. Readers are referred to Eggebrecht (1990) or Tompkins and Webster (1988) for additional information.

1. Introduction

DA&C involves measuring the parameters of some physical process, manipulating the measurements within a computer, and then issuing signals to control that process. Physical variables such as temperature, force, or position are measured with some form of sensor. This converts the quantity of interest into an electrical signal which can then be processed and passed to the PC. Control signals issued by the PC are usually used to drive external equipment via an actuator such as a solenoid or electric motor.

Many sensors are actually types of transducer. The two terms have different meanings, although they are used somewhat interchangeably in some texts. Transducers are devices that convert one form of energy into another. They encompass both actuators and a subset of the various types of sensor.

1.1. Signal Types

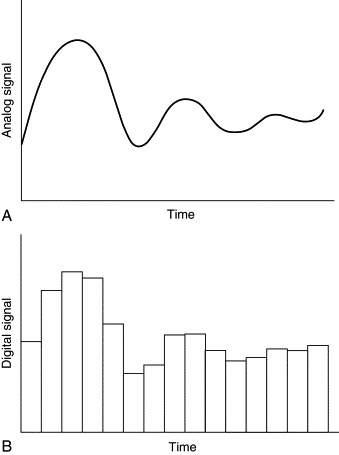

The signals transferred in and out of the PC may each be one of two basic types: analog or digital. All signals will generally vary in time. In changing from one value to another, analog signals vary smoothly (i.e., continuously), always assuming an infinite sequence of intermediate values during the transition. Digital signals, on the other hand, are discontinuous, changing only in discrete steps as shown in Figure 1.

Figure 1. Diagram contrasting (A) analog and (B) digital signals.

Digital data are generally stored and manipulated within the PC as binary integers. As most readers will know, each binary digit (bit) may assume only one of two states: low or high. Each bit can, therefore, represent only a zero or a one. Larger numbers, which are needed to represent analog quantities, are generally coded as combinations of typically 8, 12, or 16 bits. Binary numbers can only change in discrete steps equal in size to the value represented by the least significant bit (LSB). Because of this, binary (i.e., digital) representations of analog signals cannot reflect signal variations smaller than the value of the LSB. The principal advantage of digital signals is that they tend to be less susceptible than their analog counterparts to distortion and noise. Given the right communication medium, digital signals are better suited to long-distance transmission and to use in noisy environments.
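The effect of the LSB on resolution can be illustrated with a short calculation. The following is a minimal sketch, assuming a hypothetical unipolar 0 to 10 V input range and simple truncation to the next lower code; the function names `lsb_size` and `quantize` are illustrative only and do not correspond to any particular ADC driver.

```python
def lsb_size(full_scale_range, bits):
    """Size of one LSB when the range is divided into 2**bits steps."""
    return full_scale_range / (2 ** bits)


def quantize(value, full_scale_range, bits):
    """Truncate a value to an integer code, clamped to the valid code range."""
    code = int(value / lsb_size(full_scale_range, bits))
    return max(0, min(code, 2 ** bits - 1))


# Assumed 10 V range, for illustration only.
for bits in (8, 12, 16):
    print(bits, "bits:", lsb_size(10.0, bits), "V per LSB")

# Two inputs that differ by less than one LSB produce the same 12-bit code.
print(quantize(5.000, 10.0, 12), quantize(5.001, 10.0, 12))
```

With 12 bits the step is about 2.4 mV, so the 1 mV difference between the two inputs in the last line is lost in the digital representation.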

Pulsed signals are an important class of digital signals. From a physical point of view, they are basically the same as single-bit digital signals. The only difference is in the way in which they are applied and interpreted. It is the static bit patterns (the presence, or otherwise, of certain bits) that are the important element in the case of digital signals. Pulsed signals, on the other hand, carry information only in their timing. The frequency, duration, duty cycle, or absolute number of pulses are generally the only significant characteristics of pulsed signals. Their amplitude does not carry any information.

Analog signals carry information in their magnitude (level) or shape (variation over time). The shape of analog signals can be interpreted either in the time or frequency domain. Most “real-world” processes that we might wish to measure or control are intrinsically analog in nature.

It is important to remember, however, that the PC can read and write only digital signals. Some sensing devices, such as switches or shaft encoders, generate digital signals that can be directly interfaced to one of the PC's I/O ports. Certain types of actuator, such as stepper motors or solenoids, can also be controlled via digital signals output directly from the PC. Nevertheless, most sensors and actuators are purely analog devices and the DA&C system must, consequently, incorporate components to convert between analog and digital representations of data. These conversions are carried out by means of devices known as analog-to-digital converters (ADCs) or digital-to-analog converters (DACs).

1.2. Elements of a DA&C System

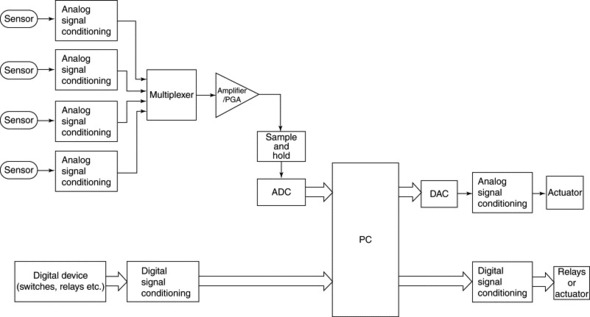

A typical PC-based DA&C system might be designed to accept analog inputs from sensors as well as digital inputs from switches or counters. It might also be capable of generating analog and digital outputs for controlling actuators, lamps, or relays. Figure 2 illustrates the principal elements of such a system. Note that, for clarity, this figure does not include control signals. You should bear in mind that, in reality, a variety of digital control lines will be required by devices such as multiplexers, programmable-gain amplifiers, and ADCs. Depending upon the type of system in use, the device generating the control signals may be either the PC itself or dedicated electronic control circuitry.

Figure 2. A typical PC-based DA&C system.

The figure shows four separate component chains representing analog input, analog output, digital input, and digital output. An ADC and DAC shown in the analog I/O chains facilitate conversion between analog and digital data formats.

Digital inputs can be generated by switches, relays, or digital electronic components such as timer/counter ICs. These signals usually have to undergo some form of digital signal conditioning, which might include voltage level conversion, isolation, or buffering, before being input via one of the PC's I/O ports. Equally, low-level digital outputs generated by the PC normally have to be amplified and conditioned in order for them to drive actuators or relays.

A similar consideration applies to analog outputs. Most actuators have relatively high current requirements which cannot be satisfied directly by the DAC. Amplification and buffering (implemented by the signal conditioning block) is, therefore, usually necessary in order to drive motors and other types of actuator.

The analog input chain is the most complex. It usually incorporates not only signal-conditioning circuits, but also components such as a multiplexer, programmable-gain amplifier (PGA), and sample-and-hold (S/H) circuit. These devices are discussed later in this chapter. The example shown is a four-channel system. Signals from four sensors are conditioned and one of the signals is selected by the multiplexer under software control. The selected signal is then amplified and digitized before being passed to the PC.

The distinction between elements in the chain is not always obvious. In many real systems the various component blocks are grouped within different physical devices or enclosures. To minimize noise, it is common for the signal-conditioning and preamplification electronics to be separated from the ADC and from any other digital components. Although each analog input channel has only one signal-conditioning block in Figure 2, this block may, in reality, be physically distributed along the analog input chain. It might be located within the sensor or at the input to the ADC. In some systems, additional components are included within the chain, or some elements, such as the S/H circuit, might be omitted.

The digital links in and out of the PC can take a variety of forms. They may be direct (although suitably buffered) connections to the PC's expansion bus, or they may involve serial or parallel transmission of data over many meters. In the former case, the ADC, DAC, and associated interface circuitry are often located on I/O cards which can be inserted in one of the PC's expansion bus slots or into a PCMCIA slot. In the case of devices that interface via the PC's serial or parallel ports, the link is implemented by appropriate transmitters, bus drivers, and interface hardware (which are not shown in Figure 2).

2. Digital I/O

Digital (including pulsed) signals are used for interfacing to a variety of computer peripherals as well as for sensing and controlling DA&C devices. Some sensing devices, such as magnetic reed switches, inductive proximity switches, mechanical limit switches, relays, or digital sensors, are capable of generating digital signals that can be read into the PC. The PC may also issue digital signals for controlling solenoids, audio-visual indicators, or stepper motors. Digital I/O signals are also used for interfacing to digital electronic devices such as timer/counter ICs or for communicating with other computers and programmable logic controllers (PLCs).

Digital signals may be encoded representations of numeric data or they may simply carry control or timing information. The latter are often used to synchronize the operation of the PC with external equipment using periodic clock pulses or handshaking signals. Handshaking signals are used to inform one device that another is ready to receive or transmit data. They generally consist of level-active, rather than pulsed, digital signals and they are essential features of most parallel and serial communication systems. Pulsed signals are not only suitable for timing and synchronization, they are also often used for event counting or frequency measurement. Pulsed inputs, for pacing or measuring elapsed time, can be generated either by programmable counter/timer ICs on plug-in DA&C cards or by programming the PC's own built-in timers. Pulsed inputs are often used to generate interrupts within the PC in response to specific external events.

2.1. TTL-Level Digital Signals

Transistor/transistor logic (TTL) is a type of digital signal characterized by nominal “high” and “low” voltages of +5V and 0V. TTL devices are capable of operating at high speeds. They can switch their outputs in response to changing inputs within typically 20 ns and can deal with pulsed signals at frequencies up to several tens of megahertz. TTL devices can also be directly interfaced to the PC. The main problem with using TTL signals for communicating with external equipment is that TTL ICs have a limited current capacity and are suitable for directly driving only low-current (i.e., a few milliamps) devices such as other TTL ICs, LEDs, and transistors. Another limitation is that TTL is capable of transmission over only relatively short distances. While it is ideal for communicating with devices on plug-in DA&C cards, it cannot be used for long-distance transmission without using appropriate bus transceivers.

The PC's expansion bus, and interface devices such as the Intel 8255 programmable peripheral interface (PPI), provide TTL-level I/O ports through which it is possible to communicate with peripheral equipment. Many devices that generate or receive digital level or pulsed signals are TTL compatible and so no signal conditioning circuits, other than perhaps simple bus drivers or tristate buffers, are required. Buffering, optical isolation, electromechanical isolation, and other forms of digital signal conditioning may be needed in order to interface to remote or high-current devices such as electric motors or solenoids.

2.2. Digital Signal Conditioning and Isolation

Digital signals often span a range of voltages other than the 0 to 5V encompassed by TTL. Many pulsed signals are TTL compatible, but this is not always true of digital level signals. Logic levels higher or lower than the standard TTL voltages can easily be accommodated by using suitable voltage attenuating or amplification components. Depending upon the application, the way in which digital I/O signals are conditioned will vary. Many applications demand a degree of isolation and/or current driving capability. The signal-conditioning circuits needed to achieve this may reside either on digital I/O interface cards which are plugged into the PC's expansion bus or they may be incorporated within some form of external interface module. Interface cards and DA&C modules are available with various degrees of isolation and buffering. Many low-cost units provide only TTL-level I/O lines. A greater degree of isolation and noise immunity is provided by devices that incorporate optical isolation and/or mechanical relays.

TTL devices can operate at high speeds with minimal propagation delay. Any time delays that may be introduced by TTL devices are generally negligible when compared with the execution time of software I/O instructions. TTL devices and circuits can thus be considered to respond almost instantaneously to software IN and OUT instructions. However, this is not generally true when additional isolating or conditioning devices are used. Considerable delays can result from using relays in particular, and these must be considered by the designer of the DA&C software.

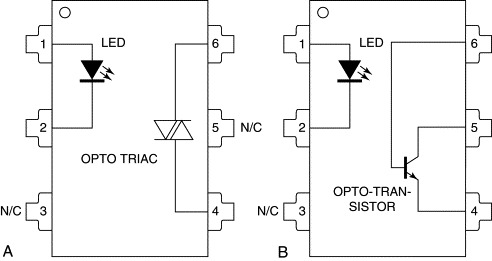

2.2.1. Opto-Isolated I/O

It is usually desirable to electrically isolate the PC from external switches or sensors in order to provide a degree of overvoltage and noise protection. Opto-isolators can provide isolation from typically 500V to a few kilovolts at frequencies up to several hundred kilohertz. These devices generally consist of an infrared LED optically coupled to a phototransistor within a standard DIL package as shown in Figure 3. The input and output parts of the circuit are electrically isolated. The digital signal is transferred from the input (LED) circuit to the output (phototransistor) by means of an infrared light beam. As the input voltage increases (i.e., when a logical high level is applied), the LED emits light which causes the phototransistor to conduct. Thus the output is directly influenced by the input state while remaining electrically isolated from it.

Figure 3. Typical opto-isolator DIL packages: (A) an opto-triac suitable for mains switching, and (B) a simple opto-transistor device.

Some opto-isolating devices clean and shape the output pulse by means of built-in Schmitt triggers. Others include Darlington transistors for driving medium current loads such as lamps or relays. Mains and other AC loads may be driven by solid-state relays, which are basically opto-isolators with a high AC current switching capability.

Opto-isolators tend to be quite fast in operation, although somewhat slower than TTL devices. Typical switching times range from about 3 μs to 100 μs, allowing throughputs of about 10–300 Kbit/s. Because of their inherent isolation and slower response times, opto-isolators tend to provide a high degree of noise immunity and are ideally suited to use in noisy industrial environments. To further enhance rejection of spurious noise spikes, opto-isolators are sometimes used in conjunction with additional filtering and pulse-shaping circuits. Typical filters can increase response times to, perhaps, several milliseconds. It should be noted that opto-couplers are also available for isolating analog systems. The temporal response of any such devices used in analog I/O channels should be considered as it may have an important bearing on the sampling rate and accuracy of the measuring system.

2.2.2. Mechanical Relays and Switches

Relays are electromechanical devices that permit electrical contacts to be opened or closed by small driving currents. The contacts are generally rated for much larger currents than that required to initiate switching. Relays are ideal for isolating high-current devices, such as electric motors, from the PC and from sensitive electronic control circuits. They are commonly used on both input and output lines. A number of manufacturers provide plug-in PC interface cards with typically 8 or 16 PCB-mounted relays. Other digital output cards are designed to connect to external arrays or racks of relays.

Most relays on DA&C interface cards are allocated in arrays of 8 or 16, each one corresponding to a single bit in one of the PC's I/O ports. In many (but not all) cases, a high bit will energize the relay. Relays provide either normally open (NO) or normally closed (NC) contacts or both. NO contacts remain open until the relay coil is energized, at which point they close. NC contacts operate in the opposite sense. Ensure that you are aware of the relationship between the I/O bit states and the state of the relay contacts you are using. It is prudent to operate relays in fail-safe mode, such that their contacts return to an inactive (and safe) state when deenergized. Exactly what state is considered inactive will depend upon the application.
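The relationship between I/O bit states and contact states can be made explicit in software. The sketch below is illustrative only: it manipulates an in-memory copy of an 8-bit output port rather than real hardware, and the names `set_relay` and `contacts_closed`, together with the `active_high` and `normally_open` flags, are assumptions introduced here to model the cases described above.

```python
def set_relay(port_image, bit, energize, active_high=True):
    """Return a new 8-bit port image with the bit for one relay updated.

    active_high=True models cards on which a high bit energizes the relay;
    active_high=False models the opposite convention.
    """
    mask = 1 << bit
    drive_high = energize if active_high else not energize
    if drive_high:
        return (port_image | mask) & 0xFF
    return port_image & ~mask & 0xFF


def contacts_closed(energized, normally_open=True):
    """NO contacts close when the coil is energized; NC contacts open."""
    return energized if normally_open else not energized


# Energize relay 3 on an active-high card, leaving the other bits unchanged.
image = set_relay(0x00, 3, True)
print(format(image, "08b"))

# A de-energized relay with NC contacts still presents a closed circuit.
print(contacts_closed(False, normally_open=False))
```

Keeping the bit polarity and contact type in one place makes it easier to verify that the de-energized state of each relay is the safe one for the application.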

Because of the mass of the contacts and other mechanical components, relay switching operations are relatively slow. Small relays with low current ratings tend to operate faster than larger devices. Reed relays rated at around 1A, 24V (DC) usually switch within about 0.25 to 1 ms. The operating and release times of miniature relays rated at 1 to 3A usually fall in the range from about 2 to 5 ms. Larger relays for driving high-power DC or AC mains loads might take up to 10 or 20 ms to switch. These figures are intended only as rough guidelines. You should consult your hardware manufacturer's literature for precise switching specifications.

Switch and Relay Debouncing

When mechanical relay or switch contacts close, they tend to vibrate or bounce for a short period. This results in a sequence of rapid closures and openings before the contacts settle into a stable state. The time taken for the contacts to settle (known as the bounce time) may range from a few hundred microseconds for small reed relays up to several milliseconds for high-power relays. Because bouncing relay contacts make and break several times, it can appear to the software monitoring the relay that several separate switching events occur each time the relay is energized or deenergized. This can be problematic, particularly if the system is designed to generate interrupts as a result of each contact closure.

There are two ways in which this problem can be overcome: hardware debouncing and software debouncing. The hardware method involves averaging the state of the switch circuit over an interval of a few milliseconds so that any short-lived transitions are smoothed out and only a gradual change is recorded. A typical method is to use a resistor/capacitor (RC) network in conjunction with an inverting Schmitt buffer. Tooley (1995) discusses hardware debouncing in more detail and illustrates several simple debouncing circuits.

The software debouncing technique is suitable only for digital inputs driven from relays and switches. It cannot of course be applied to relay signals generated by the PC. The technique works by repeatedly reading the state of the relay contact. The input should be sensed at least twice and a time delay sufficient to allow the contacts to settle should be inserted between the two read operations. If the state of the contacts is the same during both reads, that state is recorded. If it has changed, further delays and read operations should be performed until two successive read operations return the same result. An appropriate limit must, of course, be imposed on the number of repeats that are allowed during the debounce routine in order to avoid the possibility of unbounded software loops. Listing 1 illustrates the debouncing technique. It assumes that the state of the relay contacts is indicated by bit 0 of I/O port 300h. The routine exits with a nonzero value in CX and the debounced relay state in bit 0 of AL. If the relay does not reach a steady state after four read operations (i.e., three delay periods), CX contains zero to indicate the error condition. The routine can easily be adapted to deal with a different bit or I/O port address.

Listing 1. Contact debouncing algorithm

            mov   dx,300h     ;Port number 300h for sensing relay
            mov   cx,4        ;Initialize timeout counter
    DBRead: in    al,dx       ;Read relay I/O port
            and   al,01h      ;Isolate relay status bit (bit 0)
            cmp   cx,4        ;Is this the first read ?
            je    DBLoop      ; - Yes, do another
            cmp   al,bl       ; - No, was relay the same as last time ?
            je    DBExit      ; - Yes, relay in steady state so exit
    DBLoop: mov   bl,al       ;Store current relay state
            call  DBDelay     ;Do delay to allow relay contacts to settle
            loop  DBRead      ;Read again, unless timed out
    DBExit:

The delay time between successive read operations (implemented by the DBDelay subroutine which is not shown) should be chosen to be just long enough to encompass the maximum contact bounce period expected. For most mechanical switches, this will be typically several milliseconds (or even tens of milliseconds for some larger devices). As a rough rule of thumb, the smaller the switch (i.e., the lower the mass of the moving contact), the shorter will be the contact bounce period. In choosing the delay time, remember to take account of the time constant of any other circuitry that forms part of the digital input channel.

Listing 1 is not totally foolproof: it will fail if the contact bounce period exactly coincides with the time period between samples. To improve the reliability of this technique, you may wish to adapt Listing 1 to check that the final relay state actually remains stable for a number of consecutive samples over an appropriate time interval.
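One way to require several consecutive matching samples is sketched below. This is not a translation of Listing 1 but a hedged illustration in Python of the variation just suggested. The `read_input` callable stands in for the port read, and the parameter names and default values (`stable_reads`, `max_reads`, `delay_s`) are assumptions chosen for the example; suitable values depend on the hardware.

```python
import time


def debounce(read_input, stable_reads=3, max_reads=20, delay_s=0.005,
             sleep=time.sleep):
    """Return the settled state of bit 0, or None if it never settles.

    The input must return the same value on stable_reads consecutive
    samples. At most max_reads samples are taken, so the loop is bounded.
    """
    last = read_input() & 0x01
    run = 1                          # consecutive samples equal to 'last'
    for _ in range(max_reads - 1):
        if run >= stable_reads:
            return last
        sleep(delay_s)               # allow the contacts to settle
        state = read_input() & 0x01
        if state == last:
            run += 1
        else:
            last, run = state, 1     # bounce detected, start counting again
    return last if run >= stable_reads else None


# Simulated contact that bounces several times before settling high.
samples = iter([1, 0, 1, 0, 1, 1, 1, 1])
state = debounce(lambda: next(samples), sleep=lambda s: None)
print(state)
```

Returning `None` on timeout plays the same role as the zero value left in CX by Listing 1: the caller can distinguish a settled reading from a contact that is still changing.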

3. Sensors for Analog Signals

Sensors are the primary input element involved in reading physical quantities (such as temperature, force, or position) into a DA&C system. They are generally used to measure analog signals although the term sensor does in fact encompass some digital devices such as proximity switches. In this section we will deal only with sensing analog signals.

Analog signals can be measured with sensors that generate either analog or digital representations of the quantity to be measured (the measurand). The latter are often the simplest to interface to the PC as their output can be read directly into one of the PC's I/O ports via a suitable digital input card. Examples of sensors with digital outputs include shaft encoders and some types of flow sensor.

Most types of sensor operate in a purely analog manner, converting the measurand to an equivalent analog signal. The sensor output generally takes the form of a change in some electrical parameter such as voltage, current, capacitance, or resistance. The primary purpose of the analog signal-conditioning blocks shown in Figure 2 is to precondition the sensors' electrical outputs and to convert them into voltage form for processing by the ADC.

You should be aware of a number of important sensor characteristics in order to successfully design and write interface software. Of most relevance are accuracy, dynamic range, stability, linearity, susceptibility to noise, and response times. The last includes rise time and settling time and is closely related to the sensor's frequency response.

Sensor characteristics cannot be considered in isolation. Sensors are often closely coupled to their signal-conditioning circuits and we must, therefore, also take into account the performance of this component when designing a DA&C system. Signal-conditioning and digitization circuitry can play an important (if not the most important) role in determining the characteristics of the measuring system as a whole. Although signal-conditioning circuits can introduce undesirable properties of their own, such as noise or drift, they are usually designed to compensate for inadequacies in the sensor's response. If properly matched, signal-conditioning circuits are often able to cancel out sensor offsets, nonlinearities, or temperature dependencies. We will discuss signal conditioning later in this chapter.

3.1. Accuracy

Accuracy represents the precision with which a sensor can respond to the measurand. It refers to the overall precision of the device resulting from the combined effect of offsets and proportional measurement errors. When assessing accuracy, one must take account of manufacturers' figures for repeatability, hysteresis, stability, and if appropriate, resolution. Although a sensor's accuracy figure may include the effect of resolution, the two terms must not be confused. Resolution represents the smallest change in the measurand that the sensor can detect. Accuracy includes this but also encompasses other sources of error.

3.2. Dynamic Range

A sensor's dynamic range is the ratio of its full-scale value to the minimum detectable signal variation. Some sensors have very wide dynamic ranges, and if the full range is to be accommodated, it may be necessary to employ high-resolution ADCs or programmable-gain amplifiers (PGAs). Using a PGA might increase the system's data-storage requirements, because of the addition of an extra variable (i.e., gain). These topics are discussed further in the section “Amplification and Extending Dynamic Range” later in this chapter.
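As a rough guide to what "high resolution" means here, the number of bits needed to cover a given dynamic range without a PGA can be estimated from the ratio itself. The sketch below assumes an ideal converter whose LSB equals the minimum detectable signal variation; the function name `bits_required` is illustrative.

```python
import math


def bits_required(dynamic_range):
    """Smallest number of bits n such that 2**n >= dynamic_range."""
    if dynamic_range < 1:
        raise ValueError("dynamic range must be at least 1")
    return math.ceil(math.log2(dynamic_range))


# A sensor that resolves 1 part in 1000 needs at least a 10-bit converter,
# while 1 part in 50000 calls for 16 bits.
print(bits_required(1000), bits_required(50000))
```

Real converters lose some resolution to noise and nonlinearity, so this figure should be read as a lower bound.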

3.3. Stability and Repeatability

The output from some sensors tends to drift over time. Instabilities may be caused by changes in operating temperature or by other environmental factors. If the sensor is likely to exhibit any appreciable instability, you should assess how this can be compensated for in the software. You might wish, for example, to include routines that force the operator to recalibrate or simply rezero the sensor at periodic intervals. Stability might also be compromised by small drifts in the supplied excitation signals. If this is a possibility, the software should be designed to monitor the excitation voltage using a spare analog input channel and to correct the measured sensor readings accordingly.
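A correction for excitation drift might look like the following sketch. It assumes a ratiometric sensor, that is, one whose output is directly proportional to its excitation voltage; this will not hold for every device. The function and parameter names are hypothetical.

```python
def correct_for_excitation(reading, measured_excitation, nominal_excitation):
    """Scale a reading to the value it would have at nominal excitation.

    Assumes the sensor output is directly proportional to the excitation.
    """
    if measured_excitation <= 0:
        raise ValueError("excitation voltage must be positive")
    return reading * nominal_excitation / measured_excitation


# Excitation has drifted 1% high, so the raw reading is 1% high as well.
print(correct_for_excitation(2.02, 10.1, 10.0))
```

In practice the excitation would be sampled on the spare analog input channel as close in time as possible to the sensor reading it is used to correct.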

3.4. Linearity

Most sensors provide a linear output—that is, their output is directly proportional to the value of the measurand. In such cases the sensor response curve consists of a straight line. Some devices such as thermocouples do not exhibit this desirable characteristic. If the sensor output is not linearized within the signal-conditioning circuitry, it will be necessary for the software to correct for any nonlinearities present.
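One common software approach is to interpolate between points in a calibration table. The sketch below shows piecewise-linear interpolation as one possible technique; it is not presented as the method used by any particular sensor or library. The table values are invented for illustration and do not represent real thermocouple data.

```python
from bisect import bisect_right


def linearize(raw, table):
    """Convert a raw sensor output to a measurand value by interpolation.

    table is a list of (raw_output, measurand) pairs sorted by raw_output.
    Readings outside the table are clamped to the nearest end point.
    """
    xs = [point[0] for point in table]
    if raw <= xs[0]:
        return table[0][1]
    if raw >= xs[-1]:
        return table[-1][1]
    i = bisect_right(xs, raw)            # first table entry above raw
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    return y0 + (y1 - y0) * (raw - x0) / (x1 - x0)


# Hypothetical calibration points: (sensor output in mV, measurand value).
CALIBRATION = [(0.0, 0.0), (1.0, 25.0), (2.0, 48.0), (3.0, 70.0)]
print(linearize(1.5, CALIBRATION))
```

The accuracy of this approach depends on how closely spaced the calibration points are in the regions where the response curves most strongly.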

3.5. Response Times

The time taken by the sensor to respond to an applied stimulus is obviously an important limiting factor in determining the overall throughput of the system. The sensor's response time (sometimes expressed in terms of its frequency response) should be carefully considered, particularly in systems that monitor for dangerous, overrange, or otherwise erroneous conditions. Many sensors provide a virtually instantaneous response and in these cases it is usually the signal-conditioning or digitization components (or, indeed, the software itself) that determine the maximum possible throughput. This is not generally the case with temperature sensors, however. Semiconductor sensors, thermistors, and thermocouples tend to exhibit long response times (upwards of 1 s). In these cases, there is little to be gained (other than the ability to average out noise) by sampling at intervals shorter than the sensor's time constant.

You should be careful when interpreting response times published in manufacturers' literature. They often relate to the time required for the sensor's output to change by a fixed fraction in response to an applied step change in temperature. If a time constant is specified it generally defines the time required for the output to change by $1 - e^{-1}$ (i.e., about 63.21%) of the difference between its initial and final steady state outputs. The response time will be longer if quoted for a greater fractional change. The response time of thermal sensors will also be highly dependent upon their environment. Thermal time constants are usually quoted for still air, but much faster responses will apply if the sensor is immersed in a free-flowing or stirred liquid such as oil or water.
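The relationship between a quoted time constant and the response time for other fractional changes can be worked through numerically. The sketch below assumes an idealized first-order (exponential) step response, which real sensors follow only approximately; the function names are illustrative.

```python
import math


def fraction_reached(t, tau):
    """Fraction of a step change completed after time t (first-order model)."""
    return 1.0 - math.exp(-t / tau)


def time_to_fraction(fraction, tau):
    """Time needed to complete a given fraction (0 <= fraction < 1) of a step."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in the range [0, 1)")
    return -tau * math.log(1.0 - fraction)


tau = 2.0  # hypothetical time constant in seconds
print(round(fraction_reached(tau, tau), 4))    # change after one time constant
print(round(time_to_fraction(0.90, tau), 2))   # time to reach 90% of the step
print(round(time_to_fraction(0.99, tau), 2))   # time to reach 99% of the step
```

Under this model a response time quoted for a 90% change is about 2.3 time constants, and one quoted for 99% is about 4.6 time constants, which is why the basis of a published figure matters.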

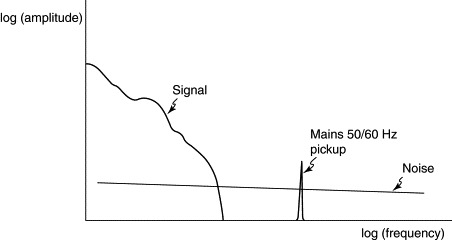

3.6. Susceptibility to Noise

Noise is particularly problematic with sensors that generate only low-level signals (e.g., thermocouples and strain gauges). Low-pass filters can be used to remove noise which often occurs predominantly at higher frequencies than the signals to be measured. Steps should always be taken to exclude noise at its source by adopting good shielding and grounding practices. As signal-conditioning circuits and cables can introduce noise themselves, it is essential that they are well designed. Even when using hardware and electronic filters, there may still be some residual noise on top of the measured signal. A number of filtering techniques can be employed in the software and some of these are discussed later in the chapter.
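As a simple illustration of software filtering, the sketch below implements a moving average, which acts as a basic low-pass filter. It is offered only as an example of the idea and is not necessarily one of the techniques covered later in the chapter. The class name and window length are assumptions.

```python
from collections import deque


class MovingAverage:
    """Average of the most recent n samples."""

    def __init__(self, n):
        if n < 1:
            raise ValueError("window length must be at least 1")
        self.window = deque(maxlen=n)   # oldest sample is dropped automatically

    def update(self, sample):
        """Add a sample and return the current average."""
        self.window.append(sample)
        return sum(self.window) / len(self.window)


# A single noise spike of 5.0 on a steady signal of 1.0 is attenuated.
smoother = MovingAverage(4)
outputs = [smoother.update(x) for x in (1.0, 1.0, 1.0, 5.0, 1.0)]
print(outputs)
```

A longer window gives more noise rejection at the cost of a slower response to genuine changes in the signal, so the window length has to be chosen with the sensor's own response time in mind.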

3.7. Some Common Sensors

This section describes features of several common sensors that are relevant to DA&C software design. Unfortunately, space does not permit an exhaustive list. Many sensors that do not require special considerations or software techniques are excluded from this section. Some less widely used devices, such as optical and chemical sensors, are also excluded, even though they are often associated with problems such as long response times and high noise levels. Details of the operation of these devices may be found in specialist books such as Tompkins and Webster (1988), Parr (1986), or Warring and Gibilisco (1985).

The information provided here is typical for each type of sensor described. However, different manufacturers' implementations vary considerably. The reader is advised to consult manufacturers' data sheets for precise details of the sensor and signal-conditioning circuits that they intend to use.

3.8. Digital Sensors and Encoders

Some types of sensor convert the analog measurand into an equivalent digital representation that can be transferred directly to the PC. Digital sensors tend to require minimal signal conditioning.

As mentioned previously the simplest form of digital sensor is the switch. Examples include inductive proximity switches and mechanical limit switches. These produce a single-bit input that changes state when some physical parameter (e.g., spatial separation or displacement) rises above, or falls below, a predefined limit. However, to measure the magnitude of an analog quantity, we need a sensor with a response that varies in many (typically several hundred or more) steps over its measuring range. Such sensors are more correctly known as encoders as they are designed to encode the measurand into a digital form.

Sensors such as the rotor tachometer employ magnetic pickups which produce a stream of digital pulses in response to the rotation of a ferrous disk. Angular velocity or incremental changes in angular position can be measured with these devices. The pulse rate is proportional to the angular velocity of the disk. Similar sensors are available for measuring linear motion.
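Because the pulse rate is proportional to angular velocity, the conversion in software is a simple scaling. The sketch below assumes that pulses are counted over a fixed gate time and that the number of pulses per revolution is known from the sensor's data sheet; both parameter names and the example values are hypothetical.

```python
def angular_velocity_rpm(pulse_count, gate_time_s, pulses_per_rev):
    """Convert a pulse count over a gate time to revolutions per minute."""
    if gate_time_s <= 0 or pulses_per_rev <= 0:
        raise ValueError("gate time and pulses per revolution must be positive")
    revolutions = pulse_count / pulses_per_rev
    return revolutions / gate_time_s * 60.0


# 600 pulses in 1 s from a disk giving 60 pulses per revolution.
print(angular_velocity_rpm(600, 1.0, 60))
```

The resolution of the result is one pulse per gate time, so at low speeds a longer gate time (or timing the interval between pulses instead) may be needed.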

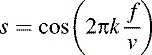

Shaft encoders are used for rotary position or velocity measurement in a wide range of industrial applications. They consist of a binary encoded disk that is mounted on a rotating shaft or spindle and located between some form of optical transmitter and matched receiver (e.g., infrared LEDs and phototransistors). The bit pattern detected by the receiver will depend upon the angular position of the encoded disk. The resolution of the system might be typically ±1°.

A disk encoded in true (natural) binary has the potential to produce large errors. If, for example, the disk is very slightly misaligned, the most significant bit might change first during a transition between two adjacent encoded positions. Such a situation can give rise to a momentary 180° error in the output. This problem is circumvented by using the Gray code. This is a binary coding scheme in which only one bit changes between adjacent coded positions. The outputs from these encoders are normally converted to digital pulse trains which carry rotary position, speed, and direction information. Because of this it is rarely necessary for the DA&C programmer to use binary Gray codes directly. We will, however, discuss other binary codes later in this chapter.
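Although direct use of Gray codes is rarely needed, the property described above is easy to demonstrate. The following sketch uses the standard reflected binary Gray code; the function names are illustrative, and the 4-bit width is chosen only to keep the example small.

```python
def binary_to_gray(n):
    """Convert a natural binary value to reflected Gray code."""
    return n ^ (n >> 1)


def gray_to_binary(g):
    """Convert a reflected Gray code value back to natural binary."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def bits_changed(a, b):
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


# Positions 7 and 8 are adjacent on a 16-position (4-bit) disk.
for position in (7, 8):
    print(position, format(position, "04b"),
          format(binary_to_gray(position), "04b"))

print(bits_changed(7, 8))                                   # natural binary
print(bits_changed(binary_to_gray(7), binary_to_gray(8)))   # Gray code
```

In natural binary all four bits change between positions 7 and 8, so a most significant bit that switches early momentarily reads as position 15, half a revolution away. In Gray code only one bit changes, so the worst transient error is a single position.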

The signals generated by digital sensors are often not TTL compatible, and in these cases additional circuitry is required to interface to the PC. Some or all of this circuitry may be supplied with (or as part of) the sensor, although certain TTL buffering or opto-isolation circuits may have to be provided on separate plug-in digital interface cards.

Digital position encoders are inherently linear, stable, and immune to electrical noise. However, care has to be taken when absolute position measurements are required, particularly when using devices that produce identical pulses in response to incremental changes in position. The measurement must always be accurately referenced to a known zero position. Systematic measurement errors can result if pulses are somehow missed or not counted by the software. Regular zeroing of such systems is advisable if they are to be used for repeated position measurements.
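One way of structuring the regular zeroing recommended above is sketched below. It is an assumption-laden illustration, not a description of any particular encoder interface: the class, its methods, and the idea that the software is told when a reference (home) switch has been reached are all hypothetical.

```python
class IncrementalPosition:
    """Tracks position by counting up/down pulses from an incremental encoder."""

    def __init__(self, units_per_pulse):
        self.units_per_pulse = units_per_pulse
        self.count = 0

    def pulse(self, direction):
        # direction is +1 or -1, as decoded by the encoder electronics
        self.count += direction

    def zero(self):
        # Call when the known zero position is detected.
        # Returns the count that had accumulated at the reference point;
        # a nonzero value indicates that pulses were missed.
        error = self.count
        self.count = 0
        return error

    @property
    def position(self):
        return self.count * self.units_per_pulse

# Simulated move: 100 pulses out, but only 98 counted on the way back.
tracker = IncrementalPosition(units_per_pulse=0.01)  # assumed 0.01 mm per pulse
for _ in range(100):
    tracker.pulse(+1)
for _ in range(98):
    tracker.pulse(-1)
drift = tracker.zero()
print(f"count error found at the zero reference: {drift} pulses")
```

Logging the value returned by `zero()` gives an early warning of lost pulses before the accumulated error becomes significant.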

3.8.1. Potentiometric Sensors

These very simple devices are usually used for measurement of linear or angular position. They consist of a resistive wire and sliding contact. The resistance to the current flowing through the wire and contact is a measure of the position of the contact. The linearity of the device is determined by the resistance of the output load, but with appropriate signal conditioning and buffering, nonlinearities can generally be minimized and may, in fact, be negligible. Most potentiometric sensors are based on closely wound wire coils. The contact slides along the length of the coil, and as it moves across adjacent windings, it produces a stepped change in output. These steps may limit the resolution of the device to typically 25 to 50 μm.

3.8.2. Semiconductor Temperature Sensors

This class of temperature sensor includes devices based on discrete diodes and transistors as well as temperature-sensitive integrated circuits. Most of these devices are designed to exhibit a high degree of stability and linearity. Their working range is, however, relatively limited. Most operate from about −50 to +150° C, although some devices are suitable for use at temperatures down to about −230° C or lower. IC temperature sensors are typically linear to within a few degrees centigrade. A number of ICs and discrete transistor temperature sensors are somewhat more linear than this: perhaps ±0.5 to ±2° C or better. The repeatability of some devices may be as low as ±0.01° C.

All thermal sensors tend to have quite long response times. Their time constants are dependent upon the rate at which temperature changes are conducted from the surrounding medium. The intrinsic time constants of semiconductor sensors are usually of the order of 1–10s. These figures assume efficient transmission of thermal energy to the sensor. If this is not the case, much longer time constants will apply (e.g., a few seconds to about a minute in still air).

Most semiconductor temperature sensors provide a high-level current or voltage output that is relatively immune to noise and can be interfaced to the PC with minimal signal conditioning. Because of the long response times, software filtering can be easily applied should noise become problematic.

3.8.3. Thermocouples

Thermocouples are very simple temperature measuring devices. They consist of junctions of two dissimilar metal wires. An electromotive force (emf) is generated at each of the thermocouple's junctions by the Seebeck effect. The magnitude of the emf is directly related to the temperature of the junction. Various types of thermocouple are available for measuring temperatures from about −200° C to in excess of 1800° C. There are a number of considerations that must be borne in mind when writing interface software for thermocouple systems.

Depending upon the type of material from which the thermocouple is constructed, its output ranges from about 10 to 70 μV/° C. Thermocouple response characteristics are defined by various British and international standards. The sensitivity of thermocouples tends to change with temperature and this gives rise to a nonlinear response. The nonlinearity may not be problematic if measurements are to be confined to a narrow enough temperature range, but in most cases there is a need for some form of linearization. This may be handled by the signal conditioning circuits, but it is often more convenient to linearize the thermocouple's output by means of suitable software algorithms.

Even when adequately linearized, thermocouple-based temperature measuring systems are not especially accurate, although they are often more than adequate for many temperature-sensing applications. Thermocouple accuracy is generally limited by variations in manufacturing processes or materials to about 1 to 4° C.

Like other forms of temperature sensor, thermocouples have long response times. This depends upon the mass and shape of the thermocouple and its sheath. According to the Labfacility Ltd. temperature sensing handbook (1987), time constants for thermocouples in still air range from 0.05 to around 40s.

Thermocouples are rather insensitive devices. They output only low-level signals—typically less than 50 mV—and are, therefore, prone to electrical noise. Unless the devices are properly shielded, mains pickup and other forms of noise can easily swamp small signals. However, because thermocouples respond slowly, their outputs are very amenable to filtering. Heavy software filtering can usually be applied without losing any important temperature information.
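As a rough illustration of the kind of software filtering that a slow sensor permits, the sketch below applies a first-order (exponential) low-pass filter to a synthetic thermocouple signal. This is only one of many possible filters, and all of the numbers (a 2 mV signal, 0.5 mV of 50 Hz pickup, 1 kHz sampling, a 0.1 s filter time constant) are assumptions chosen for the example.

```python
import math

def low_pass(samples, sample_interval_s, time_constant_s):
    """First-order recursive low-pass filter (exponential smoothing)."""
    if not samples:
        return []
    alpha = sample_interval_s / (time_constant_s + sample_interval_s)
    filtered = []
    y = samples[0]          # start from the first reading to avoid a long transient
    for x in samples:
        y += alpha * (x - y)
        filtered.append(y)
    return filtered

# Synthetic signal: steady 2.0 mV thermocouple output plus 50 Hz mains pickup
dt = 0.001
raw = [2.0 + 0.5 * math.sin(2.0 * math.pi * 50.0 * k * dt) for k in range(2000)]
smooth = low_pass(raw, dt, time_constant_s=0.1)

settled = smooth[1000:]     # ignore the first second while the filter settles
ripple = max(settled) - min(settled)
mean = sum(settled) / len(settled)
print(f"mean {mean:.3f} mV, residual ripple {ripple:.3f} mV peak-to-peak")
```

The filter time constant should remain short compared with the thermocouple's own response time, otherwise the software rather than the sensor will limit how quickly temperature changes can be followed.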

3.8.4. Cold-Junction Compensation

In order to form a complete circuit, the conductors that make up the thermocouple must have at least two junctions. One (the sensing junction) is placed at an unknown temperature (i.e., the temperature to be measured) and the remaining junction (known as the cold junction or reference junction) is either held at a fixed reference temperature or allowed to vary (over a narrow range) with ambient temperature. The reference junction generates its own temperature-dependent emf which must be taken into account when interpreting the total measured thermocouple voltage.

Thermocouple outputs are usually tabulated in a form that assumes that the reference junction is held at a constant temperature of 0° C. If the temperature of the cold junction varies from this fixed reference value, the additional thermal emf will offset the sensor's response. It is not possible to calibrate out this offset unless the temperature of the cold junction is known and is constant. Instead, the cold junction's temperature is normally monitored in order that a dynamic correction may be applied to the measured thermocouple voltage.

The cold-junction temperature can be sensed using an independent device such as a semiconductor (transistor or IC) temperature sensor. In some signal-conditioning circuits, the output from the semiconductor sensor is used to generate a voltage equal in magnitude, but of opposite sign, to the thermal emf produced by the cold junction. This voltage is then electrically added to the thermocouple signal so as to cancel any offset introduced by the temperature of the cold junction.

It is also possible to perform a similar offset-canceling operation within the data-acquisition software. If the output from the semiconductor temperature sensor is read via an ADC, the program can gauge the cold-junction temperature. As the thermocouple's response curve is known, the software is able to calculate the thermal emf produced by the cold junction—that is, the offset value. This is then applied to the total measured voltage in order to determine that part of the thermocouple output due only to the sensing junction. This is accomplished as follows.

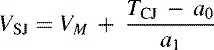

The response of the cold junction and the sensing junction both generally follow the same nonlinear form. As the temperature of the cold junction is usually limited to a relatively narrow range, it is often practicable to approximate the response of the cold junction by a straight line:

$$T_{CJ} = a_0 + a_1 V_{CJ} \tag{1}$$

where TCJ is the temperature of the cold junction in degrees Centigrade, VCJ is the corresponding thermal emf and a0 and a1 are constants that depend upon the thermocouple type and the temperature range over which the straight-line approximation is made. Table 1 lists the parameters of straight-line approximations to the response curves of a range of different thermocouples over the temperature range from 0 to 40° C.

Table 1. Parameters of straight-line fits to thermocouple response curves over the range 0 to 40° C, for use in software cold-junction compensation

| Type | a0 (° C) | a1 (° C mV−1) | Accuracy (° C) |
|---|---|---|---|
| K | 0.130 | 24.82 | ±0.25 |
| J | 0.116 | 19.43 | ±0.25 |
| R | 0.524 | 172.0 | ±1.00 |
| S | 0.487 | 170.2 | ±1.00 |
| T | 0.231 | 24.83 | ±0.50 |
| E | 0.174 | 16.53 | ±0.30 |
| N | 0.129 | 37.59 | ±0.40 |

The measured thermocouple voltage VM is equal to the difference between the thermal emf produced by the sensing junction (VSJ) and the cold junction (VCJ):

$$V_M = V_{SJ} - V_{CJ} \tag{2}$$

As we are interested only in the difference in junction voltages, VSJ and VCJ can be considered to represent either the absolute thermal emfs produced by each junction or the emfs relative to whatever junction voltage might be generated at some convenient temperature origin. In the following discussion we will choose the origin of the temperature scale to be 0° C (so that 0° C is considered to produce a zero junction voltage). In fact, the straight-line parameters listed in Table 1 represent an approximation to a 0° C-based response curve (a0 is close to zero).

Rearranging Equation (1) and substituting for VCJ in Equation (2), we see that

$$V_{SJ} = V_M + \frac{T_{CJ} - a_0}{a_1} \tag{3}$$

The values of a0 and a1 for the appropriate type of thermocouple can be substituted from Table 1 into this equation in order to compensate for the temperature of the cold junction. All voltage values should be in millivolts and TCJ should be expressed in degrees Centigrade. The temperature of the sensing junction can then be calculated by applying a suitable linearizing polynomial to the VSJ value. Note that the polynomial must also be constructed for a coordinate system with an origin at V = 0 mV, T = 0° C.
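The compensation step can be sketched as follows, using the a0 and a1 values from Table 1. The function and dictionary names are our own, and the sketch stops at the compensated sensing-junction emf: the linearizing polynomial that converts this emf to temperature depends on the thermocouple type and is not reproduced here.

```python
# Straight-line parameters from Table 1, valid for cold junctions at 0 to 40 deg C.
# type -> (a0 in deg C, a1 in deg C per mV)
CJ_PARAMS = {
    "K": (0.130, 24.82),
    "J": (0.116, 19.43),
    "R": (0.524, 172.0),
    "S": (0.487, 170.2),
    "T": (0.231, 24.83),
    "E": (0.174, 16.53),
    "N": (0.129, 37.59),
}

def sensing_junction_emf(v_measured_mv, t_cold_c, tc_type):
    """Return the 0 deg C referenced sensing-junction emf in millivolts."""
    if not 0.0 <= t_cold_c <= 40.0:
        raise ValueError("Table 1 parameters apply only from 0 to 40 deg C")
    a0, a1 = CJ_PARAMS[tc_type]
    v_cold_mv = (t_cold_c - a0) / a1      # Equation (1) rearranged for VCJ
    return v_measured_mv + v_cold_mv      # Equation (2) rearranged for VSJ

# Example: type K, 4.000 mV measured, cold junction at 25 deg C
v_sj = sensing_junction_emf(4.000, 25.0, "K")
print(f"VSJ = {v_sj:.3f} mV")
```

In a real system the cold-junction temperature would come from the semiconductor sensor read via a spare ADC channel, as described above.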

It is interesting to note that the type B thermocouple is not amenable to this method of cold-junction compensation as it exhibits an unusual behavior at low temperatures. As the temperature rises from zero to about 21° C, the thermoelectric voltage falls to approximately −3 μV. It then begins to rise, through 0V at about 41° C, and reaches +3 μV at 52° C. It is, therefore, not possible to accurately fit a straight line to the thermocouple's response curve over this range. Fortunately, if the cold-junction temperature remains within 0 to 52° C it contributes only a small proportion of the total measured voltage (less than about ±3 μV). If the sensing junction is used over its normal working range of 600 to 1700° C, the measurement error introduced by completely ignoring the cold-junction emf will be less than ±0.6° C.

The accuracy figures quoted in Table 1 are generally better than typical thermocouple tolerances and so the a0 and a1 parameters should be usable in most situations. More precise compensation factors can be obtained by fitting the straight line over a narrower temperature range or by using a lookup table with the appropriate interpolation routines. You should calculate your own compensation factors if a different cold-junction temperature range is to be used.

3.9. Resistive Temperature Sensors (Thermistors and RTDs)

Thermistors are semiconductor or metal oxide devices whose resistance changes with temperature. Most exhibit negative temperature coefficients (i.e., their resistance decreases with increasing temperature) although some have positive temperature coefficients. Thermistor temperature coefficients range from about 1 to 5%/° C. They tend to be usable in the range −70 to +150° C, but some devices can measure temperatures up to 300° C. Thermistor-based measuring systems can generally resolve temperature changes as small as ±0.01° C, although typical devices can provide absolute accuracies no better than ±0.1 to 0.5° C. The better accuracy figure is often only achievable in devices designed for use over a limited range (e.g., 0 to 100° C).

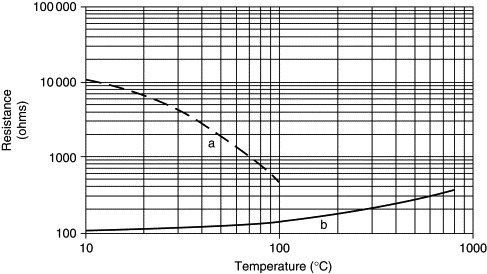

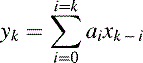

As shown in Figure 4, thermistors tend to exhibit a highly nonlinear response. This can be corrected by means of suitable signal-conditioning circuits or by combining thermistors with positive and negative temperature coefficients. Although this technique can provide a high degree of linearity, it may be preferable to carry out linearization within the DA&C software. A third-order logarithmic polynomial is usually appropriate. The response time of thermistors depends upon their size and construction. They tend to be comparable with semiconductor temperature sensors in this respect, but because of the range of possible constructions, thermistor time constants may be as low as several tens of milliseconds or as high as 100–200s.
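A widely used third-order logarithmic polynomial is the Steinhart-Hart relation, 1/T = A + B ln R + C (ln R)^3, with T in kelvin. The sketch below shows how it might be applied in software. The coefficients are illustrative values for a hypothetical 10 kΩ NTC device; in practice they must be taken from the manufacturer's data or derived by calibration.

```python
import math

# Illustrative Steinhart-Hart coefficients for a hypothetical 10 kohm NTC thermistor
A = 1.129148e-3
B = 2.341250e-4
C = 8.767410e-8

def thermistor_temperature_c(resistance_ohm):
    """Convert thermistor resistance (ohms) to temperature (deg C)."""
    if resistance_ohm <= 0:
        raise ValueError("resistance must be positive")
    ln_r = math.log(resistance_ohm)
    inv_t_kelvin = A + B * ln_r + C * ln_r ** 3
    return 1.0 / inv_t_kelvin - 273.15

for r in (20000.0, 10000.0, 5000.0):
    print(f"{r:8.0f} ohm -> {thermistor_temperature_c(r):6.2f} deg C")
```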

Figure 4. Typical resistance vs. temperature characteristics for (A) negative temperature coefficient thermistors and (B) platinum RTDs.

Resistance temperature detectors (RTDs) also exhibit a temperature-dependent resistance. These devices can be constructed from a variety of metals, but platinum is the most widely used. They are suitable for use over ranges of about −270 to 660° C, although some devices have been employed for temperatures up to about 1000° C. RTDs are accurate to within typically 0.2 to 4° C, depending on temperature and construction. They also exhibit a good long-term stability, so frequent recalibration may not be necessary. Their temperature coefficients are generally on the order of 0.4 Ω/° C. However, their sensitivity falls with increasing temperature, leading to a slightly nonlinear response. This nonlinearity is often small enough, over limited temperature ranges (e.g., 0 to 100° C), to allow a linear approximation to be used. Wider temperature ranges require some form of linearization to be applied: A third-order polynomial correction usually provides the optimum accuracy. Response times are comparable with those of thermistors.
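The effect of the RTD's nonlinearity can be seen by comparing a straight-line conversion with a polynomial one. The sketch below is not the third-order correction mentioned above: it uses the quadratic form of the Callendar-Van Dusen relation, which applies to standard platinum elements at or above 0° C, with the coefficients usually quoted for such elements. The choice of a 100 Ω (Pt100) element is an assumption made for the example.

```python
import math

R0 = 100.0        # assumed Pt100 element: 100 ohm at 0 deg C
A = 3.9083e-3     # standard platinum coefficients, valid for T >= 0 deg C
B = -5.775e-7
ALPHA = 3.85e-3   # mean coefficient over 0 to 100 deg C

def rtd_temperature_linear(r_ohm):
    """Straight-line approximation, adequate over a limited range."""
    return (r_ohm / R0 - 1.0) / ALPHA

def rtd_temperature_quadratic(r_ohm):
    """Solve R = R0 * (1 + A*T + B*T**2) for T (T >= 0 deg C)."""
    disc = A * A - 4.0 * B * (1.0 - r_ohm / R0)
    return (-A + math.sqrt(disc)) / (2.0 * B)

for r in (138.5055, 175.856):
    print(f"{r:8.3f} ohm: linear {rtd_temperature_linear(r):7.2f} deg C, "
          f"quadratic {rtd_temperature_quadratic(r):7.2f} deg C")
```

The two conversions agree closely near 100° C but diverge by several degrees at higher temperatures, which is why a linear approximation is acceptable only over a limited range.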

3.10. Resistance Sensors and Bridges

A number of other types of resistance sensor are available. Most notable amongst these are strain gauges. These take a variety of forms, including semiconductors, metal wires, and metal foils. They are strained when subjected to a small displacement, and as the gauge becomes deformed, its resistance changes slightly. It is this resistance that is indirectly measured in order to infer values of strain, force, or pressure. The light dependent resistor (LDR) is another example of a resistance sensor. The resistance of this device changes in relation to the intensity of light impinging upon its surface.

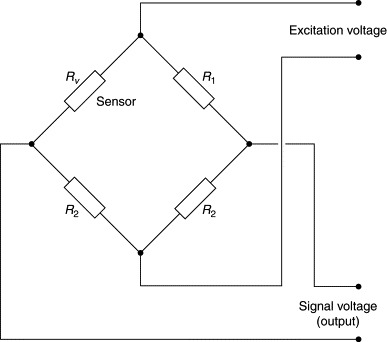

Both thermistors and RTDs can be used in simple resistive networks, but because devices such as RTDs and strain gauges have low sensitivities, it can be difficult to directly measure changes in resistance. Bridge circuits such as that shown in Figure 5 are, therefore, often used to obtain optimum precision. The circuit is designed (or adjusted) so that the voltage output from the bridge is zero at some convenient value of the measurand (e.g., zero strain in the case of a strain gauge bridge). Any changes in resistance induced by changes in the measurand cause the bridge to become unbalanced and to produce a small output voltage. This can be amplified and measured independently of the much larger bridge-excitation voltage. Although bridge circuits are used primarily with insensitive devices, they can also be used with more responsive resistance sensors such as thermistors.

Figure 5. Bridge circuit for measuring resistance changes in strain gauges and RTDs.

Bridges often contain two or four sensing elements (replacing the fixed resistors shown in Figure 5). These are arranged in such a way as to enhance the overall sensitivity of the bridge and, in the case of nonthermal sensors, to compensate for temperature dependencies of the individual sensing elements. This approach is used in the design of strain-gauge-based sensors such as load cells or pressure transducers.

Bridges with one sensing element exhibit a nonlinear response. Two-active-arm bridges, which have sensors placed in opposite arms, are also nonlinear. However, provided that only small fractional changes occur in the resistance of the sensing element(s), the nonlinearities of one- and two-arm bridges are often small enough that they can be ignored. Strain-gauge bridges with four active sensors generate a linear response provided that the sensors are arranged so that the resistance change occurring in one diagonally opposing pair of gauges is equal and opposite to that occurring in the other (Pople, 1979). When using resistance sensors in a bridge configuration, it is advisable to check for and, if necessary, correct any nonlinearities that may be present.
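The size of these nonlinearities is easily estimated. The following sketch assumes an idealized bridge: all four arms have the same nominal resistance, the excitation is a constant voltage, and the output is read by a high-impedance amplifier. Under those assumptions the output depends only on the excitation voltage and the fractional resistance change x = ΔR/R. The function names are ours.

```python
def quarter_bridge(v_ex, x):
    """One active arm changing by the fraction x."""
    return v_ex * x / (4.0 + 2.0 * x)

def two_opposite_arm_bridge(v_ex, x):
    """Two active arms in opposite positions, both changing by +x."""
    return v_ex * x / (2.0 + x)

def full_bridge(v_ex, x):
    """Four active arms: one diagonal pair changes by +x, the other by -x."""
    return v_ex * x

V_EX = 10.0  # assumed excitation voltage
for x in (0.001, 0.01, 0.1):
    ideal = V_EX * x / 4.0                    # small-signal (linear) estimate
    actual = quarter_bridge(V_EX, x)
    error_pct = 100.0 * (actual - ideal) / ideal
    print(f"x = {x:5.3f}: quarter-bridge output {actual * 1000:8.3f} mV, "
          f"departure from linearity {error_pct:6.2f}%")
```

For the small fractional changes typical of metal strain gauges the departure from linearity is a small fraction of a percent, but it grows rapidly for sensors whose resistance changes by several percent.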

Conduction of the excitation current can cause self-heating within each sensing element. This can be problematic with thermal sensors—thermistors in particular. Temperature rises within strain gauges can also cause errors in the bridge output. Because of this, excitation currents and voltages have to be kept within reasonable limits. This often results in low signal levels. For example, in most implementations, strain-gauge bridges generate outputs of the order of a few millivolts. Because of this, strain-gauge and RTD-based measuring systems are susceptible to noise, and a degree of software or hardware filtering is frequently required.

Lead resistance must also be considered when using resistance sensors. This is particularly so in the case of low-resistance devices such as strain gauges and RTDs, which have resistances of typically 120 to 350Ω and 100 to 200Ω, respectively. In these situations even the small resistance of the lead wires can introduce significant measurement errors. The effect of lead resistance can be minimized by means of compensating cables and suitable signal conditioning. This is usually the most efficient approach. Alternatively, the same type of compensation can be performed in software by using a spare ADC channel to directly measure the excitation voltage at the location of the sensor or bridge.

3.10.1. Linear Variable Differential Transformers

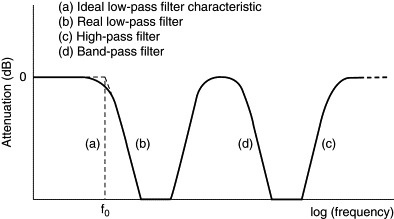

Linear variable differential transformers (LVDTs) are used for measuring linear displacement. They consist of one primary and two secondary coils. The primary coil is excited with a high-frequency (typically several hundred to several thousand hertz) voltage. The magnetic-flux linkage between the concentric primary and secondary coils depends upon the position of a ferrite core within the coil geometry. Induced signals in the secondary coils are combined in a differential manner such that movement of the core along the axis of the coils results in a variation in the amplitude and phase of the combined secondary-coil output. The output changes phase at the central (null) position and the amplitude of the output increases with displacement from the null point. The high-frequency output is then demodulated and filtered in order to produce a DC voltage in proportion to the displacement of the ferrite core from its null position. The filter used is of the low-pass type which blocks the high-frequency ripple but passes lower-frequency variations due to core movement.

Obviously the excitation frequency must be high in order to allow the filter's cutoff frequency to be designed such that it does not adversely affect the response time of the sensing system. The excitation frequency should be considerably greater than the maximum frequency of core movement. This is usually the case with LVDTs. However, the filtration required with low-frequency excitation (less than a few hundred hertz) may significantly affect the system's response time and must be taken into account by the software designer.

The LVDT offers a high sensitivity (typically 100–200 mV/V at its full-scale position) and high-level voltage output that is relatively immune to noise. Software filtering can, however, enhance noise rejection in some situations.

The LVDT's intrinsic null position is very stable and forms an ideal reference point against which to position and calibrate the sensor. The resolution of an LVDT is theoretically infinite. In practice, however, it is limited by noise and the ability of the signal-conditioning circuit to sense changes in the LVDT's output. Resolutions of less than 1 μm are possible. The device's repeatability is also theoretically infinite, but is limited in practice by thermal expansion and mechanical stability of the sensor's body and mountings. Typical repeatability figures lie between ±0.1 and ±10 μm, depending upon the working range of the device. Temperature coefficients are also an important consideration. These are usually on the order of 0.01%/° C. It is wise to periodically recalibrate the sensor, particularly if it is subject to appreciable temperature variations.

LVDTs offer quite linear responses over their working range. Designs employing simple parallel coil geometries are capable of maintaining linearity over only a short distance from their null position. Nonlinearities of up to 10% or more become apparent if the device is used outside this range. In order to extend their operating range, LVDTs are usually designed with more complex and expensive graduated or stepped windings. These provide linearities of typically 0.25%. An improved linearity can sometimes be achieved by applying software linearization techniques.

4. Handling Analog Signals

Signal levels and current-loading requirements of sensors and actuators usually preclude their direct connection to ADCs and DACs. For this reason, data acquisition and control systems generally require analog signals to be processed before being input to the PC or after transmission from it. This usually involves conditioning (i.e., amplifying, filtering, and buffering) the signal. In the case of analog inputs, it may also entail selecting and capturing the signal using devices such as multiplexers and sample-and-hold circuits.

4.1. Signal Conditioning

Signal conditioning is normally required on both inputs and outputs. In this section we will concentrate on analog inputs, but analogous considerations will apply to analog outputs; for example, the circuits used to drive actuators.

4.1.1. Conditioning Analog Inputs

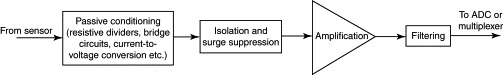

Signal conditioning serves a number of purposes. It is needed to clean and shape signals, to supply excitation voltages, to amplify and buffer low-level signals, to linearize sensor outputs, to compensate for temperature-induced drifts, and to protect the PC from electrical noise and surges. The signal-conditioning blocks shown in Figure 2 may consist of a number of separate circuits and components. These elements are illustrated in Figure 6.

Figure 6. Elements of a typical analog input signal-conditioning circuit.

Certain passive signal-conditioning elements such as potential dividers, bridge circuits, and current-to-voltage conversion resistors are often closely coupled to the sensor itself and, indeed, may be an integral part of it. The sensor is sometimes isolated from the remaining signal-conditioning circuits and from the PC by means of linear opto-couplers or capacitively coupled devices. Surge-suppression components such as zener diodes and metal oxide varistors may also be used in conjunction with RC networks to protect against transient voltage spikes.

Because typical ADCs have sensitivities of a few millivolts per bit, it is essential to amplify the low-level signals from thermocouples, strain gauges, and RTDs (which may be only a few tens of millivolts at full scale). Depending upon the type of sensor in use, activities such as AC demodulation or thermocouple cold-junction compensation might also be performed prior to amplification. Finally, a filtering stage might be employed to remove random noise or AC excitation ripple. Low-pass filters also serve an antialiasing function as described later in the chapter.

So what relevance does all this have to the DA&C programmer? In well-designed systems, very little: the characteristics of the signal conditioning should have no significant limiting effect on the design or performance of the software, and most of the characteristics of the sensor and signal conditioning should be transparent to the programmer. Unfortunately this is not always the case.

The amplifier and other circuits can give rise to temperature-dependent offsets or gain drifts (typically on the order of 0.002–0.010% of full scale per degree Centigrade) which may necessitate periodic recalibration or linearization. When designing DA&C software you should consider the following:

- The frequency of calibration

- The need to enforce calibration or to prompt the operator when calibration is due

- How calibration data will be input, stored, and archived

- The necessity to rezero sensors after each data-acquisition cycle

You should also consider the frequency response (or bandwidth) of the signal-conditioning circuitry. This can affect the sampling rate and limit throughput in some applications (see later). Typical bandwidths are on the order of a few hundred hertz, but this does, of course, vary considerably between different types of signal-conditioning circuit and depends upon the degree of filtration used. High-gain signal-conditioning circuits, which amplify noisy low-level signals, often require heavy filtering. This may limit the bandwidth to typically 100 to 200 Hz. Systems employing low-frequency LVDTs can have even lower bandwidths. Bandwidth may not be an important consideration when monitoring slowly varying signals (e.g., temperature), but it can prove to be problematic in high-speed applications involving, for example, dynamic force or strain measurement.

If high-gain amplifiers are used and/or if hardware filtration is inadequate, it may be necessary to incorporate filtering algorithms within the software. If this is the case, you should carefully assess which signal frequencies you wish to remove and which frequencies you will need to retain, and then reconcile this with the proposed sampling rate and the software's ability to reconstruct an accurate representation of the underlying noise-free signal. Sampling considerations and software filtering techniques are discussed later in the chapter.

It may also, in some situations, be necessary for the software to monitor voltages at various points within the signal-conditioning circuit. We have already mentioned monitoring of bridge excitation levels to compensate for voltage drops due to lead-wire resistance. The same technique (sometimes known as ratiometric correction) can also be used to counteract small drifts in excitation supply. If lead-wire resistance can be ignored, the excitation voltage may be monitored either at its source or at the location of the sensor.
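Because the output of a resistive bridge is proportional to its excitation voltage, ratiometric correction amounts to rescaling each reading by the ratio of the nominal to the measured excitation. A minimal sketch is given below. It assumes that the bridge output and the excitation voltage have already been read from two ADC channels and converted to consistent units; the function name and the example figures are hypothetical.

```python
def ratiometric_correction(v_bridge, v_excitation_measured, v_excitation_nominal):
    """Rescale a bridge reading to the value it would have at nominal excitation."""
    if v_excitation_measured <= 0:
        raise ValueError("measured excitation voltage must be positive")
    return v_bridge * v_excitation_nominal / v_excitation_measured

# Example: nominal 10 V excitation, but only 9.8 V reaches the bridge
corrected_mv = ratiometric_correction(19.6, 9.8, 10.0)
print(f"corrected bridge output: {corrected_mv:.2f} mV")
```

If the excitation is noisy or drifts quickly, the two channels should be sampled as close together in time as the hardware allows.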

There is another (although rarer) instance when it might be necessary to monitor signal-conditioning voltage levels. This is when pseudo-differential connections are employed on the input to an amplifier. Analog signal connections may be made in two ways: single ended or differential. Single-ended signals share a common ground or return line. Both the signal source voltage and the input to the amplifier(s) exist relative to the common ground. For this method to work successfully, the ground potential difference between the source and amplifier must be negligible, otherwise the signal to be measured appears superimposed on a nonzero (and possibly noisy) ground voltage. If a significant potential difference exists between the ground connections, currents can flow along the ground wire causing errors in the measured signals.

Differential systems circumvent this problem by employing two wires for each signal. In this case, the signal is represented by the potential difference between the wires. Any ground-loop-induced voltage appears equally (as a common-mode signal) on each wire and can be easily rejected by a differential amplifier.

An alternative to using a full differential system is to employ pseudo-differential connections. This scheme is suitable for applications in which the common-mode voltage is moderately small. It makes use of single-ended channels with a common ground connection. This allows cheaper operational amplifiers to be used. The potential of the common ground return point is measured using a spare ADC input in order to allow the software to correct for any differences between the local and remote ground voltages. Successful implementation of this technique obviously requires the programmer to have a reasonably detailed knowledge of the signal conditioning circuitry. Unless the common-mode voltage is relatively static, this technique also necessitates concurrent sampling of the signal and ground voltages. In this case simultaneous sample-and-hold circuits (discussed later in this chapter) or multiple ADCs may have to be used.
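The software side of a pseudo-differential scheme is simple in principle: the reading from the ground-sense channel is subtracted from each single-ended reading. The sketch below assumes that all readings have already been converted to volts and were sampled close enough together in time for the ground voltage to be treated as constant. The names and values are illustrative only.

```python
def pseudo_differential(channel_readings_v, ground_reading_v):
    """Remove the measured remote-ground offset from single-ended readings."""
    return [v - ground_reading_v for v in channel_readings_v]

# Example: two signal channels and a 50 mV offset on the remote ground
corrected = pseudo_differential([1.25, 2.55], ground_reading_v=0.05)
print([f"{v:.2f}" for v in corrected])
```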

4.1.2. Conditioning Analog Outputs

Some form of signal conditioning is required on most analog outputs, particularly those that are intended to control motors and other types of actuator. Space limitations preclude a detailed discussion of this topic, but in general, the conditioning circuits include current-driving devices and power amplifiers and the like. The nature of the signal conditioning used is closely related to the type of actuator. As in the case of analog inputs, it is prudent for the programmer to gain a thorough understanding of the actuator and associated signal-conditioning circuits in order that the software can be designed to take account of any nonlinearities or instabilities that might be present.

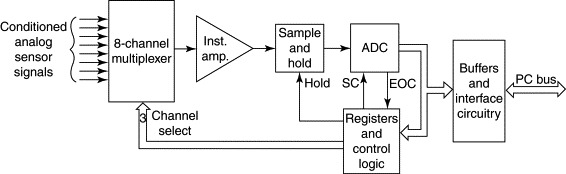

4.2. Multiplexers

Multiplexers allow several analog input channels to be serviced by a single ADC. They are basically software-controlled analog switches that can route 1 of typically 8 or 16 analog signals through to the input of the system's ADC. A four-channel multiplexed system is illustrated in Figure 2. A multiplexer used in conjunction with a single ADC (and possibly amplifier) can take the place of several ADCs (and amplifiers) operating in parallel. This is normally considerably cheaper, and uses less power, than an array of separate ADCs and for this reason analog multiplexers are commonly used in multichannel data acquisition systems.

However, some systems do employ parallel ADCs in order to maximize throughput. The ADCs must, of course, be well matched in terms of their offset, gain and integral nonlinearity errors. In such systems, the digitized readings from each channel (i.e., ADC) are digitally multiplexed into a data register or into one of the PC's I/O ports. From the point of view of software design, there is little to be said about digital multiplexers. In this section, we will deal only with the properties of their analog counterparts.

In an analog multiplexed system, multiple channels share the same ADC and the associated sensors must be read sequentially, rather than in parallel. This leads to a reduction in the number of channels that can be read per second. The decrease in throughput obviously depends upon how efficiently the software controls the digitization and data input sequence.

A related problem is skewing of the acquired data. Unless special S/H circuitry is used, simultaneous sampling is not possible. This is an obvious disadvantage in applications that must determine the temporal relationship or relative phase of two or more inputs.

Multiplexers can be operated in a variety of ways. The desired analog channel is usually selected by presenting a 3- or 4-bit address (i.e., channel number) to its control pins. In the case of a plug-in ADC card, the address-control lines are manipulated from within the software by writing an equivalent bit pattern to one of the card's registers (which usually appear in the PC's I/O space). Some systems can be configured to automatically scan a range of channels. This is often accomplished by programming the start and end channel numbers into a “scan register.” In contrast, some intelligent DA&C units require a high-level channel-selection command to be issued. This often takes the form of an ASCII character string transmitted via a serial or parallel port.
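The register-based channel selection described above can be sketched as follows. This is a minimal illustration only: it assumes a hypothetical control register in which the multiplexer address occupies `address_bits` contiguous bits starting at bit `shift`. The function name and the register layout are invented for the example and do not describe any particular card.

```python
def select_channel(register_value: int, channel: int,
                   address_bits: int = 3, shift: int = 0) -> int:
    """Return a new register value with the multiplexer address field set.

    Hypothetical layout: the channel address occupies `address_bits`
    contiguous bits starting at bit position `shift`. All other bits of
    the register are preserved.
    """
    n_channels = 1 << address_bits      # 3 bits -> 8 channels, 4 bits -> 16
    if not 0 <= channel < n_channels:
        raise ValueError(f"channel must be in 0..{n_channels - 1}")
    mask = (n_channels - 1) << shift    # bits occupied by the address field
    return (register_value & ~mask) | (channel << shift)


# Select channel 5 on an 8-channel multiplexer, leaving the upper bits alone.
new_value = select_channel(0b10100000, channel=5)
print(f"{new_value:08b}")  # prints 10100101
```

On real hardware the returned value would then be written to the card's register using whatever port-output routine the platform provides.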

Whenever the multiplexer is switched between channels, the input to the ADC or S/H will take a finite time to settle. The settling time tends to be longer if the multiplexer's output is amplified before being passed to the S/H or ADC. An instrumentation amplifier may typically take 1–10 μs to settle to 12-bit (0.025%) accuracy. The exact settling time will vary, but will generally be longest with high-gain PGAs or where the amplifier is required to settle to a greater degree of accuracy.

The settling time can be problematic. If the software scans the analog channels (i.e., switches the multiplexer) too rapidly, the input to the S/H or ADC will not settle sufficiently and a degree of apparent cross-coupling may then be observed between adjacent channels. This can lead to measurement errors of several percent, depending upon the scanning rate and the characteristics of the multiplexer and amplifier used. These problems can be avoided by careful selection of components in relation to the proposed sampling rate. Bear in mind that the effects of cross-coupling may be dependent upon the sequence as well as the frequency with which the input channels are scanned. Cross-coupling may not even be apparent during some operations. A calibration facility, in which only one channel is monitored, will not exhibit any cross-coupling, while a multichannel scanning sequence may be badly affected. It is advisable to check for this problem at an early stage of software development as, if present, it can impose severe restrictions on the performance of the system.
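The size of the apparent cross-coupling can be estimated with a simple model. The sketch below assumes (and this is an assumption, not a property of any specific device) that the multiplexer and amplifier output relaxes exponentially, with a single time constant `tau`, from the previous channel's level toward the new one. Real amplifiers may show slew limiting or higher-order behavior, so the figures are indicative only.

```python
import math


def settling_time_for_bits(tau: float, bits: int) -> float:
    """Time for a first-order response to settle to within 1 part in 2**bits."""
    return tau * bits * math.log(2)


def cross_coupling_error(v_previous: float, v_new: float,
                         wait_time: float, tau: float) -> float:
    """Residual error on the new channel's reading after `wait_time`.

    Assumes an exponential (single time constant) approach from the
    previous channel's voltage to the new channel's voltage.
    """
    return (v_previous - v_new) * math.exp(-wait_time / tau)


tau = 1e-6  # illustrative time constant in seconds (assumed value)
print(settling_time_for_bits(tau, 12))                        # wait needed for 12 bits
print(cross_coupling_error(5.0, 1.0, wait_time=2e-6, tau=tau))  # error if read too soon
```

Because the residual error is proportional to the difference between successive channels, the model also shows why the scanning sequence matters: reordering the channels changes which voltage steps the amplifier has to settle through.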

4.2.1. Sample-and-Hold Circuits

Many systems employ a sample-and-hold circuit on the input to the ADC to freeze the signal while the ADC digitizes it. This prevents errors due to changes in the signal during the digitization process (see later in the chapter). In some implementations, the multiplexer can be switched to the next channel in a sequence as soon as the signal has been grabbed by the S/H. This allows the digitization process to proceed in parallel with the settling time of the multiplexer and amplifier, thereby enhancing throughput. S/H circuits can also be used to capture transient signals. Software-controlled systems are not capable of responding to very high-speed transient signals (i.e., those lasting less than a few microseconds) and so, in these cases, the S/H and digitization process may be initiated by means of special hardware (e.g., a pacing clock). The software is then notified (by means of an interrupt, for example) when the digitization process is complete.
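The throughput benefit of switching the multiplexer as soon as the S/H has captured the signal can be estimated roughly as follows. The sketch ignores S/H acquisition time and software overhead, and the timing values are illustrative assumptions rather than figures for any particular hardware.

```python
def channels_per_second(settle_time: float, conversion_time: float,
                        overlapped: bool = False) -> float:
    """Approximate channel throughput of a multiplexed input system.

    overlapped=False: settle, then convert, one after the other.
    overlapped=True:  the multiplexer settles on the next channel while the
                      ADC converts the held sample, so the slower of the two
                      operations sets the pace.
    """
    if overlapped:
        per_channel = max(settle_time, conversion_time)
    else:
        per_channel = settle_time + conversion_time
    return 1.0 / per_channel


settle = 10e-6    # assumed multiplexer/amplifier settling time, seconds
convert = 25e-6   # assumed ADC conversion time, seconds
print(channels_per_second(settle, convert))
print(channels_per_second(settle, convert, overlapped=True))
```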

S/H circuits require only a single digital control signal to switch them between their “sample” and “hold” modes. The signal may be manipulated by software via a control register mapped to one of the PC's I/O ports, or it may be driven by dedicated onboard hardware. S/H circuits present at the input to ADCs are often considered to be an integral part of the digitization circuitry. Indeed, the command to start the analog-to-digital conversion process may also automatically activate the S/H for the required length of time.

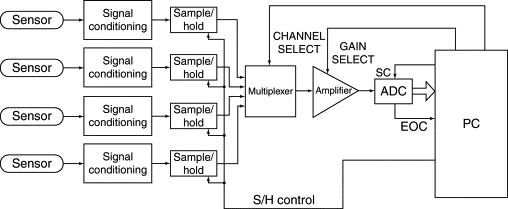

4.2.2. Simultaneous S/H

In multiplexed systems like that represented in Figure 2, analog input channels have to be read sequentially. This introduces a time lag between the samples obtained from successive channels. Assuming typical times for ADC conversion and multiplexer/amplifier settling, this time lag can vary from several tens to several hundreds of microseconds. The consequent skewing of the sample matrix can be problematic if you wish to measure the phase relationship between dynamically varying signals. Simultaneous S/H circuits are often used to overcome this problem. Figure 7 illustrates a four-channel analog input system employing simultaneous S/H.

Figure 7. Analog input channels with simultaneous sample and hold.

The system is still multiplexed, so very little improvement is gained in the overall throughput (total number of channels read per second), but the S/H circuits allow data to be captured from all inputs within a very narrow time interval (see the following section). Simultaneous S/H circuits may be an integral part of the signal conditioning unit or they may be incorporated in the digitization circuitry (e.g., on a plug-in ADC card). In either case they tend to be manipulated by a single digital signal generated by the PC.
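The skew that simultaneous S/H is intended to remove can be quantified with a short calculation. In the sketch below, the function names and the example timings are illustrative assumptions. A time lag between two channels appears as a phase error of 360° × f × Δt for a sinusoidal signal of frequency f.

```python
def channel_skew(channel_index: int, per_channel_time: float) -> float:
    """Time lag of a channel relative to the first channel in a sequential scan."""
    return channel_index * per_channel_time


def phase_error_degrees(signal_freq_hz: float, skew_s: float) -> float:
    """Apparent phase shift caused by sampling a sinusoid `skew_s` seconds late."""
    return 360.0 * signal_freq_hz * skew_s


# Fourth channel (index 3) of a scan that spends 35 microseconds per channel,
# observing a 1 kHz signal.
lag = channel_skew(3, 35e-6)
print(lag, phase_error_degrees(1000.0, lag))
```

A lag of 100 μs on a 1 kHz signal, for example, corresponds to a phase error of about 36°, which is why sequential sampling is unsuitable for phase measurements on rapidly varying signals.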

4.2.3. Characteristics of S/H Circuits

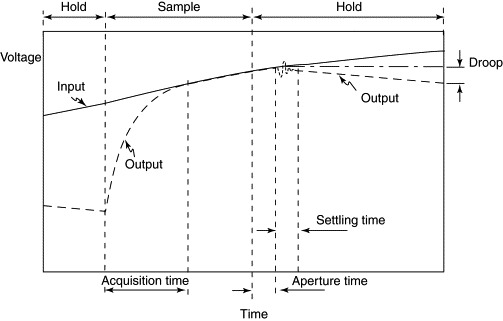

When not in use, the S/H circuit can be maintained in either the sample or hold mode. To operate the device, it must first be switched into sample mode for a short period and then into hold mode in order to freeze the signal before analog-to-digital conversion begins. When switched to sample mode, the output of the S/H takes a short, but sometimes significant, time to react to its input. This time delay arises because the device has to charge up an internal capacitor to the level of the input signal. The rate of charging follows an exponential form and so a greater degree of accuracy is achieved if the capacitor is allowed to charge for a longer time. This charging time is known as the acquisition time. It varies considerably between different types of S/H circuit and, of course, depends upon the size of the voltage swing at the S/H's input. The worst-case acquisition time is usually quoted and this is generally on the order of 0.5–20 μs. Acquisition time is illustrated, together with other S/H characteristics, in Figure 8. Accuracies of 0.01% are often attainable with acquisition times greater than about 10 μs. Lower accuracies (e.g., 0.1%) are typical of S/H devices working with shorter acquisition times.

Figure 8. Idealized sample-and-hold circuit response characteristic.

While in sample mode, the S/H's output follows its input (provided that the hold capacitor has been accurately charged and that the signal does not change too quickly). When required, the device is switched into hold mode. A short delay then ensues before digitization can commence. The delay is actually composed of two constituent delay times known as the aperture time and the settling time. The former, which is due to the internal switching time of the device, is very short, typically less than 50 ns. Variations in the aperture time, known as aperture jitter (or aperture uncertainty time), are the limiting factor in determining the temporal precision of each sample. These variations are generally on the order of 1 ns, so aperture jitter can be ignored in all but the highest-speed applications (see later for more on the relationship between aperture jitter and maximum sampling rate). The settling time is the time required for the output to stabilize after the switch and determines the rate at which samples can be obtained. It is usually on the order of 1 μs, but some systems exhibit much longer or shorter settling times.

When the output settles to a stable state, it can be digitized by the ADC. Digitization must be completed within a reasonably short time interval because the charge on the hold capacitor begins to decay, causing the S/H's output to “droop.” Droop rates vary between different devices, but are typically on the order of 1 mV/ms. Devices are available with both higher and lower droop rates. S/H circuits with low droop rates are usually required in simultaneous sample-and-hold systems. Large hold capacitors are needed to minimize droop and these can adversely affect the device's acquisition time.
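The acquisition and droop characteristics described in this section lend themselves to a rough error budget. The sketch below assumes ideal exponential charging of the hold capacitor with a single time constant and a constant droop rate. Both are simplifications, and the numerical values are illustrative rather than taken from any data sheet.

```python
import math


def acquisition_time(tau: float, accuracy: float) -> float:
    """Charging time needed to reach a fractional accuracy (e.g. 1e-4 for 0.01%).

    Assumes the hold capacitor charges exponentially with time constant `tau`.
    """
    return tau * math.log(1.0 / accuracy)


def droop_error(droop_rate_v_per_s: float, hold_time_s: float) -> float:
    """Voltage lost from the hold capacitor while the S/H is in hold mode."""
    return droop_rate_v_per_s * hold_time_s


def lsb_size(full_scale_v: float, bits: int) -> float:
    """Voltage represented by one LSB, taken here as full scale / 2**bits."""
    return full_scale_v / 2 ** bits


droop_rate = 1.0             # 1 mV/ms expressed in volts per second
lsb = lsb_size(10.0, 12)     # assumed 12-bit ADC with a 10 V range

# A single conversion of 25 microseconds versus the last of 16 channels in a
# simultaneous S/H system, held for 16 x 35 microseconds before conversion.
print(droop_error(droop_rate, 25e-6) / lsb)
print(droop_error(droop_rate, 16 * 35e-6) / lsb)

# Time constants needed for 0.1% and 0.01% acquisition accuracy.
print(acquisition_time(1.0, 1e-3), acquisition_time(1.0, 1e-4))
```

The second droop figure is a much larger fraction of an LSB than the first, which illustrates why low droop rates matter most in simultaneous sample-and-hold systems, where some channels are held for many conversion periods.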

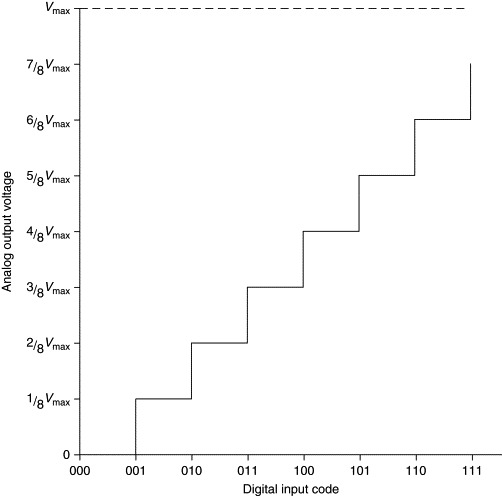

5. Digitization and Signal Conversion

The PC is capable of reading and writing only digital signals. To permit interfacing of the PC to external analog systems, ADCs and DACs must be used to convert signals from analog to digital form and vice versa. This section describes the basic principles of the conversion processes. It also illustrates some of the characteristics of ADCs and DACs of which you should be aware when writing interface software.

5.1. Binary Coding

In order to understand the digitization process, it is important to consider the ways in which analog signals can be represented digitally. Computers store numbers in binary form. There are several binary coding schemes. Most positive integers, for example, are represented in true binary (sometimes called natural or straight binary). Just as the digits in a decimal number represent units, tens, hundreds, and so forth, true binary digits represent ones, twos, fours, eights, and so on. Floating-point numbers, on the other hand, are represented within the computer in a variety of different binary forms. Certain fields within the floating-point bit pattern are set aside for exponents or to represent the sign of the number. Although floating-point representations are needed to scale, linearize, and otherwise manipulate data within the PC, all digitized analog data are generally transferred in and out of the computer in the form of binary integers.

Analog signals may be either unipolar or bipolar. Unipolar signals range from zero up to some positive upper limit, while bipolar signals can span zero, varying between nonzero negative and positive limits.

5.2. Encoding Unipolar Signals

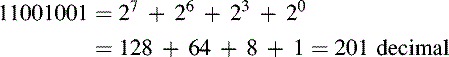

Unipolar signals are perhaps the most common and are the simplest to represent in binary form. They are generally coded as true binary numbers, with which most readers should already be familiar. As mentioned previously, the least significant bit (LSB) has a weight (value) of 1 in this scheme, and the weight of each successive bit doubles as we move toward the most significant bit (MSB). If we allocate an index number, $i$, to each bit, starting with 0 for the LSB, the weight of any one bit is given by $2^i$. Bit 6 would, for example, represent the value $2^6$ (= 64 decimal). To calculate the value represented by a complete binary number, the weights of all nonzero bits must be added. For example, the following 8-bit true binary number would be evaluated as shown in Equation (4).

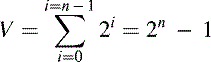
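The weighted-sum rule can also be expressed in a few lines of code. The bit pattern used here is an arbitrary example chosen for illustration, and the function name is hypothetical.

```python
def true_binary_value(bits: str) -> int:
    """Evaluate a true binary number given as a string of '0' and '1' characters.

    The rightmost character is the LSB (index 0, weight 2**0).
    """
    total = 0
    for i, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** i          # add the weight of every nonzero bit
        elif bit != "0":
            raise ValueError("bits must contain only '0' and '1'")
    return total


print(true_binary_value("10110101"))  # 128 + 32 + 16 + 4 + 1 = 181
```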

The maximum value that can be represented by a true binary number has all bits set to 1. Thus, a true binary number with $n$ bits can represent values from 0 to $V$, where

$$V = 2^n - 1 \qquad (5)$$

An 8-bit true binary number can, therefore, represent integers in the range 0 to 255 decimal (= $2^8 - 1$). A greater range can be represented by binary numbers having more bits. Similar calculations for other numbers of bits yield the results shown in Table 2. The accuracies with which each true binary number can represent an analog quantity are also shown.

Table 2. Ranges of true binary numbers

| Number of bits | Range (true binary) | Accuracy (%) |
|---|---|---|
| 6 | 0 to 63 | 1.56 |
| 8 | 0 to 255 | 0.39 |
| 10 | 0 to 1 023 | 0.098 |
| 12 | 0 to 4 095 | 0.024 |
| 14 | 0 to 16 383 | 0.0061 |
| 16 | 0 to 65 535 | 0.0015 |

The entries in this table correspond to the numbers of bits employed by typical ADCs and DACs. It should be apparent that converters with a higher resolution (number of bits) provide the potential for a greater degree of conversion accuracy.
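The range and accuracy figures for any word length can be generated with a short calculation. In this sketch the accuracy is taken to be one part in $2^n$, expressed as a percentage. That interpretation is an assumption, chosen because it matches the tabulated 6-bit value of 1.56%.

```python
def true_binary_max(bits: int) -> int:
    """Largest value representable by an n-bit true binary number (all bits set)."""
    return 2 ** bits - 1


def resolution_percent(bits: int) -> float:
    """One part in 2**bits, expressed as a percentage of full scale."""
    return 100.0 / 2 ** bits


for n in (6, 8, 10, 12, 14, 16):
    print(f"{n:2d} bits: 0 to {true_binary_max(n):5d}, {resolution_percent(n):.4f}%")
```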

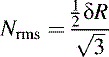

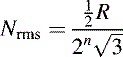

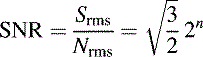

When true binary numbers are used to represent an analog quantity, the range of that quantity should be matched to the range (i.e., $V$) of the ADC or DAC. This is generally accomplished by choosing a signal-conditioning gain that allows the full-scale range of a sensor to be matched exactly to the measurement range of the ADC. A similar consideration applies to the range of DAC outputs required to drive actuators. Assuming a perfect match (and that there are no digitizing errors), the limiting accuracy of any ADC or DAC system depends upon the number of bits available. An $n$-bit system can represent some physical quantity that varies over a range 0 to $R$, to an accuracy of $\pm\frac{1}{2}\delta$, where

$$\delta = \frac{R}{2^n} \qquad (6)$$

This is equal to the value represented by one LSB. True binary numbers are important in this respect as they are the basis for measuring the resolution of an ADC or DAC.
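The ±½ LSB limit can be demonstrated numerically. The sketch below assumes an ideal converter in which one LSB corresponds to $R/2^n$ and each code is taken to represent the midpoint of its quantization interval. The function names are hypothetical, and real converters add offset, gain, and nonlinearity errors on top of this.

```python
def quantize(value: float, full_scale: float, bits: int) -> int:
    """Ideal n-bit digitization of a unipolar quantity in the range 0 to full_scale."""
    levels = 2 ** bits
    lsb = full_scale / levels
    code = int(value / lsb)                  # truncate to the interval index
    return max(0, min(levels - 1, code))     # clamp to the valid code range


def reconstruct(code: int, full_scale: float, bits: int) -> float:
    """Midpoint of the quantization interval represented by `code`."""
    lsb = full_scale / 2 ** bits
    return (code + 0.5) * lsb


R, n = 10.0, 12                              # assumed 0-10 V range, 12-bit converter
x = 3.14159
code = quantize(x, R, n)
error = reconstruct(code, R, n) - x
print(code, error, abs(error) <= 0.5 * R / 2 ** n)
```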

5.2.1. Encoding Bipolar Signals

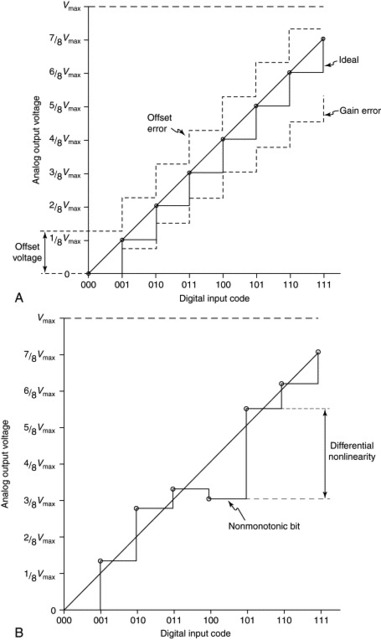

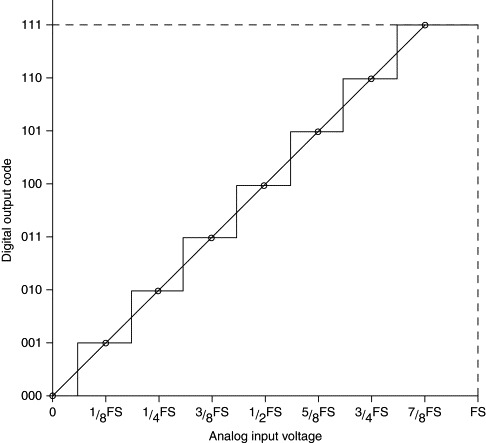

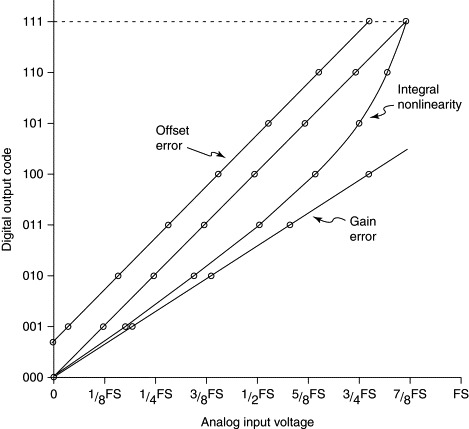

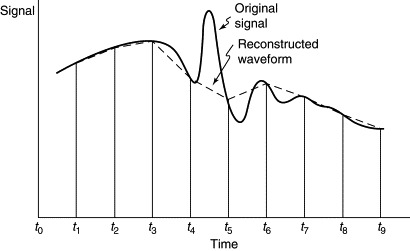

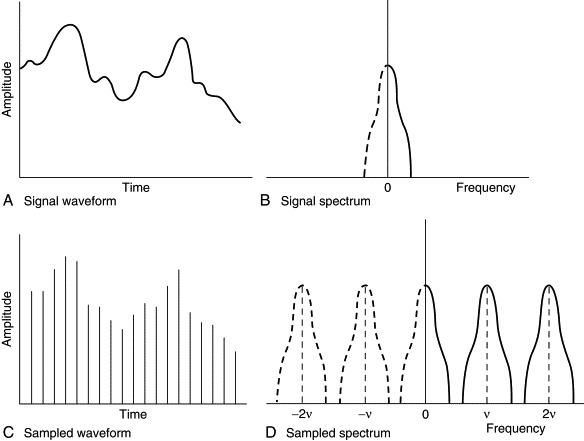

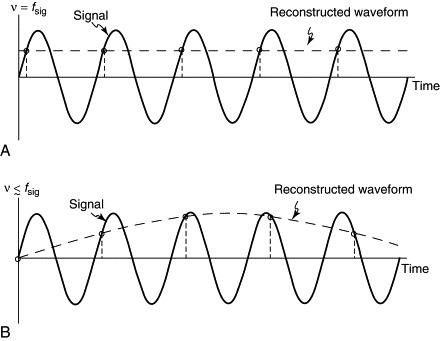

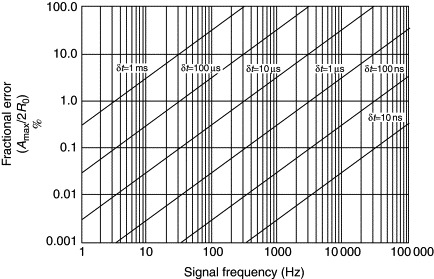

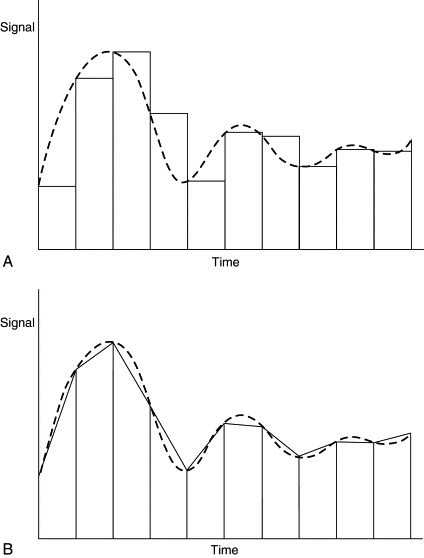

Many analog signals can take on a range of positive and negative values. It is, therefore, essential to be able to represent readings on both sides of zero as digitized binary numbers. Several different binary coding schemes can be used for this purpose. One of the most convenient and widely used is offset binary. As its name suggests, this scheme employs a true binary coding, which is simply offset from zero. This is best illustrated by an example. Consider a system in which a unipolar 0–10-V signal is represented in 12-bit true binary by the range of values from 0 to 4095. We can also represent a bipolar signal in the range −5 V to +5 V by using the same scaling factor (i.e., volts per bit) and simply shifting the 0-volt point halfway along the binary scale to 2048. An offset binary value of 0 would, in this case, be equivalent to −5 V, and a value of 4095 would represent +5 V. Offset binary codes can, of course, be used with any number of bits.
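The offset binary example can be sketched in code as follows. The sketch assumes the common convention that one LSB equals the span divided by $2^n$, under which code 2048 corresponds exactly to 0 V and the top code (4095) falls one LSB short of +5 V. The function names and default arguments are illustrative.

```python
def offset_binary_to_volts(code: int, bits: int = 12,
                           v_min: float = -5.0, v_max: float = 5.0) -> float:
    """Convert an offset binary code to volts (code 0 maps to v_min)."""
    lsb = (v_max - v_min) / 2 ** bits
    return v_min + code * lsb


def volts_to_offset_binary(volts: float, bits: int = 12,
                           v_min: float = -5.0, v_max: float = 5.0) -> int:
    """Convert a voltage to the nearest offset binary code, clamped to range."""
    lsb = (v_max - v_min) / 2 ** bits
    code = round((volts - v_min) / lsb)
    return max(0, min(2 ** bits - 1, code))


for code in (0, 2048, 4095):
    print(code, offset_binary_to_volts(code))
```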