Appendix III — Generic Text Completion with GPT-2

This appendix provides a detailed explanation of the Generic text completion with GPT-2 section in Chapter 7, The Rise of Suprahuman Transformers with GPT-3 Engines. It describes how to implement a GPT-2 transformer model for generic text completion.

You can read about the usage of this notebook directly in Chapter 7, or build the program and run it in this appendix to gain a deeper understanding of how a GPT model works.

We will clone the OpenAI_GPT_2 repository, download the 345M-parameter GPT-2 transformer model, and interact with it. We will enter context sentences and analyze the text generated by the transformer. The goal is to see how it creates new content.

This section is divided into nine steps. Open OpenAI_GPT_2.ipynb in Google Colaboratory. The notebook is in the AppendixIII directory of the GitHub repository of this book. You will notice that the notebook is also divided into the same nine steps and cells as this section.

Run the notebook cell by cell. The process is tedious, but the result produced by the cloned OpenAI GPT-2 repository is gratifying. We saw that we could run a GPT-3 engine in a few lines. But this appendix gives you the opportunity, even if the code is not optimized anymore, to see how GPT-2 models work.

Hugging Face has a wrapper that encapsulates GPT-2 models. It’s useful as an alternative to the OpenAI API. However, the goal in this appendix is not to avoid the complexity of the underlying components of a GPT-2 model but to explore them!

Finally, it is important to stress that we are running a low-level GPT-2 model, not making a one-line call to obtain a result. That is why we are avoiding pre-packaged versions (the OpenAI GPT-3 API, Hugging Face wrappers, and others). We are getting our hands dirty to understand the architecture of GPT-2 from scratch. As a result, you might get some deprecation messages. However, the effort is worthwhile to become an Industry 4.0 AI guru.

Let’s begin by activating the GPU.

Step 1: Activating the GPU

We must activate the GPU to run our 345M-parameter GPT-2 transformer model efficiently.

To activate the GPU, open the Runtime menu, select Change runtime type, and choose GPU as the hardware accelerator in Notebook settings to get the most out of the VM:

Figure III.1: The GPU hardware accelerator
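Once the runtime has restarted with the GPU accelerator, you can confirm from a cell that a GPU is actually attached. The sketch below is a hypothetical helper, not part of the GPT-2 code, and it assumes that the GPU runtime exposes NVIDIA’s `nvidia-smi` tool on the PATH, as Colab GPU VMs typically do.

```python
import shutil
import subprocess


def gpu_visible():
    """Return True if nvidia-smi is on the PATH and lists at least one GPU.

    Assumption: the GPU runtime ships NVIDIA's nvidia-smi tool; on a
    CPU-only runtime it is usually absent, so the function returns False.
    """
    if shutil.which("nvidia-smi") is None:
        return False
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"],  # -L prints one "GPU n: ..." line per device
            capture_output=True, text=True, timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU visible:", gpu_visible())
```

After Step 4, `tf.test.gpu_device_name()` offers a TensorFlow-side check: it returns an empty string when TensorFlow sees no GPU and a device name such as `/device:GPU:0` otherwise.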

We can see that activating the GPU is a prerequisite for better performance that will give us access to the world of GPT transformers. So let’s now clone the OpenAI GPT-2 repository.

Step 2: Cloning the OpenAI GPT-2 repository

OpenAI still lets us download GPT-2 for now. This may be discontinued in the future, or maybe we will get access to more resources. At this point, the evolution of transformers and their usage moves so fast that nobody can foresee how the market will evolve, even the major research labs themselves.

We will clone OpenAI’s GitHub repository on our VM:

#@title Step 2: Cloning the OpenAI GPT-2 Repository

!git clone https://github.com/openai/gpt-2.git

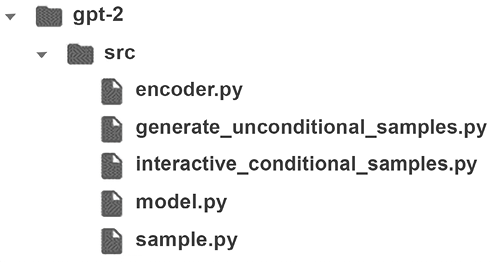

When the cloning is over, you should see the repository appear in the file manager:

Figure III.2: Cloned GPT-2 repository

Click on src, and you will see the Python files we need from OpenAI to run our model:

Figure III.3: The GPT-2 Python files to run a model

You can see that we do not have the Python training files we need. We will install them when we train the GPT-2 model in the Training a GPT-2 language model section of Appendix IV, Custom Text Completion with GPT-2.
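If you prefer to check from code rather than in the file manager, a short standard-library sketch can confirm that the source files this appendix relies on are present after cloning. The path `/content/gpt-2/src` matches the Colab layout used here; `missing_src_files` and `EXPECTED_SRC_FILES` are hypothetical names, not part of the repository.

```python
from pathlib import Path

# Source files that Steps 7a-8 rely on (names taken from this appendix).
EXPECTED_SRC_FILES = (
    "model.py",
    "sample.py",
    "encoder.py",
    "interactive_conditional_samples.py",
)


def missing_src_files(src_dir="/content/gpt-2/src"):
    """Return the expected source files that are not present in src_dir."""
    src = Path(src_dir)
    return [name for name in EXPECTED_SRC_FILES if not (src / name).is_file()]


missing = missing_src_files()
print("All source files present." if not missing else f"Missing: {missing}")
```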

Let’s now install the requirements.

Step 3: Installing the requirements

The requirements will be installed automatically:

#@title Step 3: Installing the requirements

import os # re-import os if the VM has restarted

os.chdir("/content/gpt-2")

!pip3 install -r requirements.txt

When running cell by cell, we might have to restart the VM and thus import os again.

The requirements for this notebook are:

- Fire 0.1.3, to generate command-line interfaces (CLIs)
- regex 2017.4.5, for regex usage
- Requests 2.21.0, an HTTP library
- tqdm 4.31.1, to display a progress meter for loops
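To compare what pip actually installed with the versions listed above, you can query the package metadata. This sketch assumes Python 3.8 or later for `importlib.metadata` (on an older interpreter, `pip3 show fire regex requests tqdm` gives the same information), and the listed versions are copied from the list above rather than read from requirements.txt; `installed_versions` is a hypothetical helper.

```python
from importlib import metadata  # Python 3.8+

# Versions as listed in this appendix (not parsed from requirements.txt).
LISTED_REQUIREMENTS = {
    "fire": "0.1.3",
    "regex": "2017.4.5",
    "requests": "2.21.0",
    "tqdm": "4.31.1",
}


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, found in installed_versions(LISTED_REQUIREMENTS).items():
    print(f"{name}: listed {LISTED_REQUIREMENTS[name]}, installed {found}")
```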

You may be asked to restart the notebook.

Do not restart it now. Let’s wait until we check the version of TensorFlow.

Step 4: Checking the version of TensorFlow

The GPT-2 345M transformer model provided by OpenAI uses TensorFlow 1.x. This will lead to several warnings when running the program. However, we will ignore them and run at full speed on the thin ice of training GPT models ourselves with our modest machines.

In the 2020s, GPT models have reached 175 billion parameters, making it impossible for us to train them ourselves efficiently without having access to a supercomputer. The number of parameters will only continue to increase.

The corporate giants’ research labs, such as Facebook AI, OpenAI, and Google Research/Brain, are speeding toward super-transformers and are leaving what they can for us to learn and understand. Unfortunately, they do not have time to go back and update all the models they share. However, we still have this notebook!

TensorFlow 2.x is the latest TensorFlow version. However, older programs can still be helpful. This is one reason why Google Colaboratory VMs have preinstalled versions of both TensorFlow 1.x and TensorFlow 2.x.

We will be using TensorFlow 1.x in this notebook:

#@title Step 4: Checking the Version of TensorFlow

#Colab has tf 1.x and tf 2.x installed

#Restart runtime using 'Runtime' -> 'Restart runtime...'

%tensorflow_version 1.x

import tensorflow as tf

print(tf.__version__)

The output should be:

TensorFlow 1.x selected.

1.15.2

Whether or not the tf 1.x version is displayed, rerun the cell, restart the VM, and then rerun this cell once more to make sure before continuing.

If you encounter a TensorFlow error during the process (ignore the warnings), rerun this cell, restart the VM, and rerun it to make sure.

Do this every time you restart the VM. The default version on the VM is TensorFlow 2.x.
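To stop early instead of running into confusing errors later, a small guard can check the version string right after `import tensorflow as tf`. The helper `require_tf1` below is hypothetical, not part of the GPT-2 repository; it is a sketch that only inspects the major version number.

```python
def require_tf1(version):
    """Raise RuntimeError unless version (for example, tf.__version__) is a 1.x release."""
    major = version.split(".", 1)[0]
    if major != "1":
        raise RuntimeError(
            f"TensorFlow {version} is active, but this notebook expects 1.x. "
            "Rerun the %tensorflow_version 1.x cell and restart the VM."
        )
    print(f"TensorFlow {version}: OK")


# In the notebook, call it right after `import tensorflow as tf`:
#     require_tf1(tf.__version__)
require_tf1("1.15.2")  # the version reported in the expected output of Step 4
```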

We are now ready to download the GPT-2 model.

Step 5: Downloading the 345M-parameter GPT-2 model

We will now download the trained 345M-parameter GPT-2 model:

#@title Step 5: Downloading the 345M parameter GPT-2 Model

# run code and send argument

import os # after runtime is restarted

os.chdir("/content/gpt-2")

!python3 download_model.py '345M'

The path to the model directory is:

/content/gpt-2/models/345M

It contains the information we need to run the model:

Figure III.4: The GPT-2 Python files of the 345M-parameter model

The hparams.json file contains the definition of the GPT-2 model:

- "n_vocab": 50257, the size of the vocabulary of the model
- "n_ctx": 1024, the context size
- "n_embd": 1024, the embedding size
- "n_head": 16, the number of heads
- "n_layer": 24, the number of layers

encoder.json and vocab.bpe contain the tokenized vocabulary and the BPE word pairs. If necessary, take a few minutes to go back and read the Step 3: Training a tokenizer subsection in Chapter 4, Pretraining a RoBERTa Model from Scratch.
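encoder.py applies the merges listed in vocab.bpe in rank order (the earliest-learned pair first) and then maps the resulting symbols to IDs with encoder.json. The sketch below shows only that merge loop, on plain characters and with a hypothetical three-entry merge table; it leaves out the byte-to-unicode mapping and the regex pre-tokenization that the real encoder performs before merging.

```python
def bpe_merge(word, merge_ranks):
    """Apply byte-pair merges to one word, lowest-ranked pair first.

    word: the word as a string; it starts out split into single characters.
    merge_ranks: maps a symbol pair (a, b) to its rank; lower ranks merge first.
    """
    symbols = list(word)
    while len(symbols) > 1:
        pairs = [(symbols[i], symbols[i + 1]) for i in range(len(symbols) - 1)]
        ranked = [pair for pair in pairs if pair in merge_ranks]
        if not ranked:
            break  # no known pair left to merge
        best = min(ranked, key=merge_ranks.get)
        merged, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


# Hypothetical merge table; the real one is read from vocab.bpe.
toy_ranks = {("l", "o"): 0, ("lo", "w"): 1, ("e", "r"): 2}
print(bpe_merge("lower", toy_ranks))  # ['low', 'er']
```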

The checkpoint file contains the trained parameters at a checkpoint. For example, it could contain the trained parameters for 1,000 steps, as we will do in the Step 9: Training a GPT-2 model section of Appendix IV, Custom Text Completion with GPT-2.
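How many trained parameters does the checkpoint hold? The hparams.json values listed above are enough to estimate it. The sketch below assumes the standard GPT-2 layout: token and position embeddings; per layer, two LayerNorms, an attention block with a fused query/key/value projection, and a feed-forward block that expands to 4 × n_embd; a final LayerNorm; and an output layer that reuses the token-embedding matrix. That gives 12 × n_embd² + 13 × n_embd weights per layer. `gpt2_parameter_count` is a hypothetical helper, and the fallback values are the ones listed above.

```python
import json
from pathlib import Path

HPARAMS_PATH = Path("/content/gpt-2/models/345M/hparams.json")

# Read the downloaded file if present; otherwise use the values listed above.
if HPARAMS_PATH.is_file():
    hparams = json.loads(HPARAMS_PATH.read_text())
else:
    hparams = {"n_vocab": 50257, "n_ctx": 1024, "n_embd": 1024,
               "n_head": 16, "n_layer": 24}


def gpt2_parameter_count(h):
    """Count weights and biases, assuming the standard GPT-2 layout."""
    d = h["n_embd"]
    embeddings = h["n_vocab"] * d + h["n_ctx"] * d  # token (wte) + position (wpe)
    attention = (d * 3 * d + 3 * d) + (d * d + d)    # fused Q/K/V projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)      # expand to 4*d, project back to d
    layer_norms = 2 * (2 * d)                        # two LayerNorms, each with gain and bias
    final_norm = 2 * d                               # LayerNorm after the last layer
    # No separate output matrix: the output layer reuses the token embeddings.
    return embeddings + h["n_layer"] * (attention + mlp + layer_norms) + final_norm


head_dim = hparams["n_embd"] // hparams["n_head"]
print("dimensions per attention head:", head_dim)
print("parameters:", gpt2_parameter_count(hparams))
```

With the 345M values, each of the 16 heads works on 64 dimensions and the count comes to 354,823,168, so "345M" is a rounded label. As a sanity check, the same function returns 124,439,808 for the smallest GPT-2 model (n_embd 768, n_layer 12, n_head 12, same vocabulary and context size), the figure usually quoted for that model.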

The checkpoint file is saved with three other important files:

- model.ckpt.meta describes the graph structure of the model. It contains GraphDef, SaverDef, and so on. We can retrieve the information with tf.train.import_meta_graph([path]+'model.ckpt.meta').
- model.ckpt.index is a string table. The keys contain the name of a tensor, and the value is BundleEntryProto, which contains the metadata of a tensor.
- model.ckpt.data contains the values of all the variables in a TensorBundle collection.
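A quick way to confirm that Step 5 downloaded everything is to check the model directory for the files described above. In this sketch, `check_model_dir` and `checkpoint_prefix` are hypothetical helpers. The data file is matched by prefix because TensorFlow appends a shard suffix to its name, and the `checkpoint` file is assumed to be the small text file TensorFlow writes, with a line such as `model_checkpoint_path: "model.ckpt"`. Inside TensorFlow, `tf.train.latest_checkpoint(model_dir)` reads the same file.

```python
import re
from pathlib import Path

MODEL_DIR = Path("/content/gpt-2/models/345M")

# Files described in this appendix, except the data shard (matched by prefix).
REQUIRED = ["checkpoint", "hparams.json", "encoder.json", "vocab.bpe",
            "model.ckpt.meta", "model.ckpt.index"]


def check_model_dir(model_dir):
    """Return (missing_files, has_data_shard) for a downloaded model directory."""
    model_dir = Path(model_dir)
    missing = [name for name in REQUIRED if not (model_dir / name).is_file()]
    has_data = model_dir.is_dir() and any(model_dir.glob("model.ckpt.data*"))
    return missing, has_data


def checkpoint_prefix(checkpoint_text):
    """Extract model_checkpoint_path from the text of a 'checkpoint' file, or None."""
    match = re.search(r'^model_checkpoint_path:\s*"([^"]+)"', checkpoint_text, re.M)
    return match.group(1) if match else None


missing, has_data = check_model_dir(MODEL_DIR)
print("missing:", missing, "| data shard found:", has_data)
```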

We have downloaded our model. We will now go through some intermediate steps before activating the model.

Steps 6-7: Intermediate instructions

In this section, we will go through Steps 6, 7, and 7a, which are intermediate steps leading to Step 8, in which we will define and activate the model.

We want to print UTF-encoded text to the console when we are interacting with the model:

#@title Step 6: Printing UTF encoded text to the console

# %env sets the variable in the notebook process; !export would only affect a throwaway subshell
%env PYTHONIOENCODING=UTF-8
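An environment variable only reaches processes started after it is set in the notebook’s own process. A `!` command runs in a separate shell, so an `export` there does not persist into later cells, whereas `os.environ` (or the `%env` magic) updates the kernel’s environment. The standard-library sketch below sets the variable with `os.environ` and launches a child Python interpreter to confirm that the child inherits UTF-8 output.

```python
import os
import subprocess
import sys

# Set the variable in this (kernel) process; child processes inherit it.
os.environ["PYTHONIOENCODING"] = "UTF-8"

# Ask a child Python interpreter which encoding its stdout uses.
child = subprocess.run(
    [sys.executable, "-c", "import sys; print(sys.stdout.encoding)"],
    capture_output=True, text=True, check=True,
)
child_encoding = child.stdout.strip()
print("child stdout encoding:", child_encoding)
```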

We want to make sure we are in the src directory:

#@title Step 7: Project Source Code

import os # import after runtime is restarted

os.chdir("/content/gpt-2/src")

We are ready to interact with the GPT-2 model. We could run it directly with a command, as we will do in the Training a GPT-2 language model section of Appendix IV, Custom Text Completion with GPT-2. However, in this section, we will go through the main aspects of the code.

interactive_conditional_samples.py first imports the necessary modules required to interact with the model:

#@title Step 7a: Interactive Conditional Samples (src)

#Project Source Code for Interactive Conditional Samples:

# /content/gpt-2/src/interactive_conditional_samples.py file

import json

import os

import numpy as np

import tensorflow as tf

We have gone through the intermediate steps leading to the activation of the model.

Steps 7b-8: Importing and defining the model

We will now activate the interaction with the model with interactive_conditional_samples.py.

We need to import three modules that are also in /content/gpt-2/src:

import model, sample, encoder

The three programs are:

- model.py defines the model’s structure: the hyperparameters, the multi-head attention tf.matmul operations, the activation functions, and all the other properties.
- sample.py processes the interaction and controls the sample that will be generated. It makes sure that the tokens are more meaningful. Softmax values can sometimes be blurry, like looking at an image in low definition. sample.py contains a variable named temperature that rescales the logits before the softmax: a temperature below 1 makes the values sharper, increasing the higher probabilities and softening the lower ones, while a temperature above 1 flattens them. sample.py can activate Top-k sampling. Top-k sampling sorts the probability distribution of a predicted sequence. The higher probability values of the head of the distribution are filtered up to the k-th token. The tail containing the lower probabilities is excluded, preventing the model from predicting low-quality tokens. sample.py can also activate Top-p sampling for language modeling. Unlike Top-k, Top-p sampling does not keep a fixed number of tokens. Instead, it selects the words with the highest probabilities until the sum of this subset’s probabilities, the nucleus of a possible sequence, exceeds p.
- encoder.py encodes the sample sequence with the defined model, encoder.json, and vocab.bpe. It contains both a BPE encoder and a text decoder.
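Temperature, Top-k, and Top-p can be sketched in plain Python on a hypothetical four-token vocabulary. This is not the code in sample.py, which works on TensorFlow logit tensors: there, the logits are divided by the temperature, and the filters push excluded logits to a very low value before sampling. `top_p_filter` below follows the usual nucleus definition (keep the smallest set of most probable tokens whose mass reaches p); sample.py may differ in details such as tie handling and whether the token that crosses p is kept.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of the logits divided by temperature (temperature > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize; k == 0 keeps all."""
    if k == 0:
        return list(probs)
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]


def top_p_filter(probs, p):
    """Keep the smallest set of most probable tokens whose mass reaches p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, mass = set(), 0.0
    for i in order:
        keep.add(i)
        mass += probs[i]
        if mass >= p:
            break
    kept = [q if i in keep else 0.0 for i, q in enumerate(probs)]
    total = sum(kept)
    return [q / total for q in kept]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
probs = softmax(logits)
print([round(x, 3) for x in softmax(logits, temperature=0.5)])  # sharper
print([round(x, 3) for x in softmax(logits, temperature=2.0)])  # flatter
print(top_k_filter(probs, k=2))
print(top_p_filter(probs, p=0.8))
```

With these logits, the two most probable tokens already carry more than 80% of the mass, so Top-k with k=2 and Top-p with p=0.8 keep the same two tokens here; with a flatter distribution, Top-p would keep more.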

You can open these programs to explore them further by double-clicking on them.

interactive_conditional_samples.py calls the functions required to interact with the model and initializes the following information: the hyperparameters that define the model, from model.py, and the sample sequence parameters, from sample.py. It encodes and decodes sequences with encoder.py.

interactive_conditional_samples.py then restores the checkpoint data downloaded in Step 5: Downloading the 345M-parameter GPT-2 model.
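The core of each interaction round can be sketched without TensorFlow. In interactive_conditional_samples.py, the sampled output contains the context tokens followed by the generated ones, so the context is sliced off before decoding. In this sketch, `complete` and the toy encoder, generator, and decoder are hypothetical stand-ins for the encoder built from encoder.py and the TensorFlow sampling graph built with sample.py.

```python
def complete(prompt, encode, generate, decode):
    """Run one round of the interaction: encode, generate, drop the context, decode."""
    if not prompt:
        raise ValueError("Prompt should not be empty!")
    context_tokens = encode(prompt)
    output_tokens = generate(context_tokens)  # context + continuation
    new_tokens = output_tokens[len(context_tokens):]
    return decode(new_tokens)


# Hypothetical toy components: one token per word, fixed continuation.
VOCAB = ["Human", "reason", "is", "called", "upon"]


def toy_encode(text):
    return [VOCAB.index(word) for word in text.split()]


def toy_generate(context_tokens):
    return context_tokens + [2, 3, 4]  # always appends "is called upon"


def toy_decode(token_ids):
    return " ".join(VOCAB[i] for i in token_ids)


print(complete("Human reason", toy_encode, toy_generate, toy_decode))  # is called upon
```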

You can explore interactive_conditional_samples.py by double-clicking on it and experiment with its parameters:

- model_name is the model name, such as "124M" or "345M", and relies on models_dir.
- models_dir defines the directory containing the models.
- seed sets a random integer for random generators. The seed can be set to reproduce results.
- nsamples is the number of samples to return. If it is set to 0, it will continue to generate samples until you double-click on the run button of the cell or press Ctrl + M.
- batch_size determines the size of a batch and has an impact on memory and speed.
- length is the number of tokens of generated text. If set to None, it relies on the hyperparameters of the model.
- temperature controls the randomness of the Boltzmann (softmax) distribution the tokens are sampled from. If the temperature is high, the completions will be more random. If the temperature is low, the results will become more deterministic.
- top_k controls the number of tokens taken into consideration by Top-k at each step. 0 means no restrictions. 40 is the recommended value.
- top_p controls Top-p sampling by setting the cumulative probability threshold p of the nucleus.

For the program in this section, the scenario of the parameters we just explored will be:

model_name = "345M"
seed = None
nsamples = 1
batch_size = 1
length = 300
temperature = 1
top_k = 0
models_dir = '/content/gpt-2/models'
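Before sampling, the script checks these values against the model’s hyperparameters. The sketch below reproduces the kind of checks it makes, assuming these rules (compare them with your copy of interactive_conditional_samples.py): nsamples must be a multiple of batch_size, length=None falls back to half the context window, and length may not exceed the context window n_ctx. `resolve_sampling_args` is a hypothetical helper.

```python
def resolve_sampling_args(nsamples, batch_size, length, n_ctx):
    """Validate sampling arguments and fill in defaults (assumed rules, see lead-in)."""
    if batch_size is None:
        batch_size = 1
    if nsamples % batch_size != 0:
        raise ValueError("nsamples must be a multiple of batch_size")
    if length is None:
        length = n_ctx // 2  # default: half the context window
    elif length > n_ctx:
        raise ValueError(f"Can't get samples longer than window size: {n_ctx}")
    return batch_size, length


# The scenario above, with n_ctx = 1024 from hparams.json:
print(resolve_sampling_args(nsamples=1, batch_size=1, length=300, n_ctx=1024))   # (1, 300)
print(resolve_sampling_args(nsamples=1, batch_size=1, length=None, n_ctx=1024))  # (1, 512)
```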

These parameters influence the model’s behavior, the way it is conditioned by the context input, and the text completion sequences it generates. First, run the notebook with the default values. You can then change the code’s parameters by double-clicking on the program, editing it, and saving it. The changes will be deleted at each restart of the VM, so save a copy of the modified program and reload it if you wish to create interaction scenarios.

The program is now ready to prompt us to interact with it.

Step 9: Interacting with GPT-2

In this section, we will interact with the GPT-2 345M model.

There will be more messages when the system runs, but as long as Google Colaboratory maintains tf 1.x, we will run the model with this notebook. One day, we might have to use GPT-3 engines if this notebook becomes obsolete, or we will have to use Hugging Face GPT-2 wrappers, for example, which might be deprecated as well in the future.

In the meantime, GPT-2 is still in use, so let’s interact with the model!

To interact with the model, run the interact_model cell:

#@title Step 9: Interacting with GPT-2

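# keyword arguments keep each value in its intended slot (top_p sits between top_k and models_dir)
interact_model(model_name='345M', seed=None, nsamples=1, batch_size=1,
               length=300, temperature=1, top_k=0,
               models_dir='/content/gpt-2/models')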

You will be prompted to enter some context:

Figure III.5: Context input for text completion

You can try any type of context you wish since this is a standard GPT-2 model.

We can try a sentence written by Immanuel Kant:

Human reason, in one sphere of its cognition, is called upon to

consider questions, which it cannot decline, as they are presented by

its own nature, but which it cannot answer, as they transcend every

faculty of the mind.

Press Enter to generate text. The output will be relatively random since the GPT-2 model was not trained on our dataset, and we are running a stochastic model anyway.
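Each completion is a sequence of random draws from the model’s next-token distributions, which is why two runs with seed = None differ. The standard-library sketch below is a simplification: it draws repeatedly from one fixed, hypothetical distribution instead of recomputing the distribution after every token. It still shows what fixing the seed parameter buys, namely the same draws on every run. `sample_tokens` is a hypothetical helper.

```python
import random


def sample_tokens(probs, n, seed=None):
    """Draw n token indices from one probability distribution.

    seed=None draws fresh randomness on every call; an integer seed makes
    the draws reproducible.
    """
    rng = random.Random(seed)
    return rng.choices(range(len(probs)), weights=probs, k=n)


probs = [0.6, 0.25, 0.1, 0.05]  # hypothetical next-token distribution
print(sample_tokens(probs, 10))           # usually differs between runs
print(sample_tokens(probs, 10, seed=42))  # identical on every run
```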

Let’s have a look at the first few lines the GPT model generated at the time I ran it:

"We may grant to this conception the peculiarity that it is the only causal logic.

In the second law of logic as in the third, experience is measured at its end: apprehension is afterwards closed in consciousness.

The solution of scholastic perplexities, whether moral or religious, is not only impossible, but your own existence is blasphemous."

To stop the cell, double-click on the run button of the cell.

You can also press Ctrl + M to stop generating text, but it may transform the code into text, and you will have to copy it back into a program cell.

The output is rich. We can observe several facts:

- The context we entered conditioned the output generated by the model.

- The context acted as a demonstration for the model. The model learned what to say from the context without modifying its parameters.

- Text completion is conditioned by context. This opens the door to transformer models that do not require fine-tuning.

- From a semantic perspective, the output could be more interesting.

- From a grammatical perspective, the output is convincing.

In Appendix IV, Custom Text Completion with GPT-2, you can see whether we can obtain more impressive results by training the model on a customized dataset.

References

- OpenAI GPT-2 GitHub repository: https://github.com/openai/gpt-2

- N Shepperd’s GitHub repository: https://github.com/nshepperd/gpt-2

Join our book’s Discord space

Join the book’s Discord workspace:

https://www.packt.link/Transformers