The following section describes key aspects of the webbot's script. The latest version of this script is available for download at this book's website.

Note

If you want to experiment with the code, you should download the webbot's script. I have simplified the scripts shown here for demonstration purposes.

Initialization consists of including libraries and identifying the subject website and search criteria, as shown in Listing 11-1.

# Initialization

// Include libraries

include("LIB_http.php");

include("LIB_parse.php");

// Identify the search term and URL combination

$desired_site = "www.loremianam.com";

$search_term = "webbots";

// Initialize other miscellaneous variables

$page_index = 0;

$url_found = false;

$previous_target = "";

// Define the target website and the query string for the search term

$target = "http://www.schrenk.com/nostarch/webbots/search

$target = $target."?q=".urlencode(trim($search_term));

# End: Initialization

Listing 11-1: Initializing the search-ranking webbot

The target is the page we're going to download, which in this case is a demonstration search page on this book's website. That URL also includes the search term in the query string. The webbot URL encodes the search term to guarantee that none of the characters in the search term conflict with reserved URL character combinations. For example, the PHP built-in function urlencode() changes Karen Susan Terri to Karen+Susan+Terri. If the search term contains characters that are illegal in a URL—for example, the comma or ampersand in Karen, Susan & Terri—it would be safely encoded to Karen%2C+Susan+%26+Terri.

The webbot loops through the main section of the code, which requests pages of search results and searches within those pages for the desired site, as shown in Listing 11-2.

# Initialize loop

while($url_found==false)

{

$page_index++;

echo "Searching for ranking on page #$page_index

";

Listing 11-2: Starting the main loop

Within the loop, the script removes any HTML special characters from the target to ensure that the values passed to the target web page only include legal characters, as shown in Listing 11-3. In particular, this step replaces & with the preferred & character.

// Verify that there are no illegal characters in the URLs

$target = html_entity_decode($target);

$previous_target = html_entity_decode($previous_target);

Listing 11-3: Formatting characters to create properly formatted URLs

This particular step should not be confused with URL encoding, because while & is a legal character to have in a URL, it will be interpreted as $_GET['amp'] and return invalid results.

The webbot tries to simulate the action of a person who is manually looking for a website in a set of search results. The webbot uses two techniques to accomplish this trick. The first is the use of a random delay of three to six seconds between fetches, as shown in Listing 11-4.

sleep(rand(3, 6));

Listing 11-4: Implementing a random delay

Taking this precaution will make it less obvious that a webbot is parsing the search results. This a good practice for all webbots you design.

The second technique simulates a person manually clicking the Next button at the bottom of the search result page to see the next page of search results. Our webbot "clicks" on the link by specifying a referer variable, which in our case is always the target used in the previous iteration of the loop, as shown in Listing 11-5. On the initial fetch, this value is an empty string.

$result = http_get($target, $ref=$previous_target, GET, "", EXCL_HEAD);

$page = $result['FILE'];

Listing 11-5: Downloading the next page of search results from the target and specifying a referer variable

The actual contents of the fetch are returned in the FILE element of the returned $result array.

This webbot uses a parsing technique referred to as an insertion parse because it inserts special parsing tags into the fetched web page to facilitate an easy parse (and easy debug). Consider using the insertion parse technique when you need to parse multiple blocks of data that share common separators. The insertion parse is particularly useful when web pages change frequently or when the information you need is buried deep within a complicated HTML table structure. The insertion technique also makes your code much easier to debug, because by viewing where you insert your parsing tags, you can figure out where your parsing script thinks the desired data is.

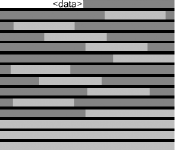

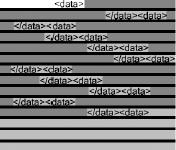

Think of the text you want to parse as blocks of text surrounded by other blocks of text you don't need. Imagine that the web page you want to parse looks like Figure 11-5, where the desired text is depicted as the dark blocks. Find the beginning of the first block you want to parse. Strip away everything prior to this point and insert a <data> tag at the beginning of this block (Figure 11-6). Replace the text that separates the blocks of text you want to parse with </data> and <data> tags. Now every block of text you want to parse is sandwiched between <data> and </data> tags (see Figure 11-7). This way, the text can be easily parsed with the parse_array() function. The final <data> tag is an artifact and is ignored.

The script that performs the insertion parse is straightforward, but it depends on accurately identifying the text that surrounds the blocks we want to parse. The first step is to locate the text that identifies that start of the first block. The only way to do this is to look at the HTML source code of the search results. A quick examination reveals that the first organic is immediately preceded by <!--@gap;-->.[37] The next step is to find some common text that separates each organic search result. In this case, the search terms are also separated by <!--@gap;-->.

To place the <data> tag at the beginning of the first block, the webbot uses the strops() function to determine where the first block of text begins. That position is then used in conjunction with substr() to strip away everything before the first block. Then a simple string concatenation places a <data> tag in front of the first block, as shown in Listing 11-6.

// We need to place the first <data> tag before the first piece

// of desired data, which we know starts with the first occurrence

// of $separator

$separator = "<!--@gap;-->";

// Find first occurrence of $separator

$beg_position = strpos($page, $separator);

// Get rid of everything before the first piece of desired data

// and insert a <data> tag before the data

$page = substr($page, $beg_position, strlen($page));

$page = "<data>".$page;

Listing 11-6: Inserting the initial insertion parse tag (as in Figure 11-6)

The insertion parse is completed with the insertion of the </data> and <data> tags. The webbot does this by simply replacing the block separator that we identified earlier with our insertion tags, as shown in Listing 11-7.

$page = str_replace($separator, "</data> <data>", $page);

// Put all the desired content into an array

$desired_content_array = parse_array($page, "<data>", "</data>", EXCL);

Listing 11-7: Inserting the insertion delimiter tags (as in Figure 11-7)

Once the insertion is complete, each block of text is sandwiched between tags that allow the webbot to use the parse_array() function to create an array in which each array element is one of the blocks. Could you perform this parse without the insertion parse technique? Of course. However, the insertion parse is more flexible and easier to debug, because you have more control over where the delimiters are placed, and you can see where the file will be parsed before the parse occurs.

Once the search results are parsed and placed into an array, it's a simple process to compare them with the web page we're ranking, as in Listing 11-8.

for($page_rank=0; $page_rank<count($desired_content_array); $page_rank++)

{

// Look for the $subject_site to appear in one of the listings

if(stristr($desired_content_array[$page_rank], $subject_site))

{

$url_found_rank_on_page = $page_rank;

$url_found=true;

}

}

Listing 11-8: Determining if an organic matches the subject web page

If the web page we're looking for is found, the webbot records its ranking and sets a flag to tell the webbot to stop looking for additional occurrences of the web page in the search results.

If the webbot doesn't find the website in this page, it finds the URL for the next page of search results. This URL is the link that contains the string Next. The webbot finds this URL by placing all the links into an array, as shown in Listing 11-9.

// Create an array of links on this page

$search_links = parse_array($result['FILE'], "<a", "</a>", EXCL);

Listing 11-9: Parsing the page's links into an array

The webbot then looks at each link until it finds the hyperlink containing the word Next. Once found, it sets the referer variable with the current target and uses the new link as the next target. It also inserts a random three-to-six second delay to simulate human interaction, as shown in Listing 11-10.

for($xx=0; $xx<count($search_links); $xx++)

{

if(strstr($search_links[$xx], "Next"))

{

$previous_target = $target;

$target = get_attribute($search_links[$xx], "href");

// Remember that this path is relative to the target page, so add

// protocol and domain

$target = "http://www.schrenk.com/nostarch/webbots/search/".$target;

}

}

Listing 11-10: Looking for the URL for the next page of search results

[37] Comments are common parsing landmarks, especially when web pages are created with an HTML generator like Adobe Dreamweaver.