Adding Images and Other Files to the App

In the previous section, you saw how the Bundle class lets you read values from the app’s Info.plist. Actually, it offers much more than that. You can use the Xcode project to include any kind of file inside your app bundle, then find and read it at runtime.

Finding Arbitrary Files in the App Bundle

For example, let’s say you add a new file to a project, using the Property List template. In the File Inspector (⌥⌘1) on the right, under “Target Membership”, the check box for the app target will be checked, meaning this file will automatically be copied into the app bundle when the app is built. The following figure shows the plist’s target membership:

The way you find it is with the Bundle class. The app itself is the “main” bundle, represented by Bundle’s class property main. You can ask the bundle to search for a file by name and extension and return either a String path (with path(forResource:ofType:) and its variants) or a URL (with url(forResource:withExtension:), etc.).
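As a minimal sketch of the two lookup styles, the snippet below asks the main bundle for the same resource both ways. The resource name book-metadata is an assumption for illustration; if no such file is in the bundle, both calls simply return nil.

```swift
import Foundation

// Hypothetical resource name, used only for illustration.
let resourceName = "book-metadata"

// URL-based lookup: returns URL? (nil if the file isn't in the bundle).
let plistURL = Bundle.main.url(forResource: resourceName,
                               withExtension: "plist")

// Path-based lookup: returns String? for the same file.
let plistPath = Bundle.main.path(forResource: resourceName,
                                 ofType: "plist")

if let url = plistURL {
    print("found at URL: \(url)")
} else {
    print("\(resourceName).plist is not in the main bundle")
}
```

Both results are optionals, so a missing file shows up as nil rather than as a thrown error. The URL variant is usually the more convenient of the two, since Data(contentsOf:) takes a URL directly.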

In iOS 11, the new PropertyListDecoder class makes bundling a plist particularly appealing. Given the structure of the property list in the above screenshot—Strings for title and subtitle, arrays of Strings for authors and coauthors—you can represent the contents as a Swift struct like this:

| | struct BookMetadata: Decodable { |

| | let title: String |

| | let subtitle: String |

| | let authors: [String] |

| | let coauthors: [String] |

| | } |

Since the struct is declared as conforming to the Decodable protocol, you can use it with the PropertyListDecoder. The trick is, how do you get the property list as Data to feed to the decoder? The answer, of course, is to have the main Bundle find the file and load its contents as Data. Here’s the recipe for that:

| | do { |

| | if let metadataURL = Bundle.main.url(forResource: "book-metadata", |

| | withExtension: "plist") { |

| | let metadataData = try Data(contentsOf: metadataURL) |

| | let decoder = PropertyListDecoder() |

| | let metadata = try decoder.decode(BookMetadata.self, |

| | from: metadataData) |

| | titleLabel.text = metadata.title |

| | } |

| | } catch { |

| | print("couldn't get metadata: \(error)") |

| | } |
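One gap in the recipe above is that a missing file fails silently, because the if let just falls through. A possible refinement, sketched here with hypothetical names (MetadataError, decodeMetadata(from:), and loadMetadata(named:in:) are not part of Foundation), separates the lookup from the decoding so that each failure is reported and the decoding step can be tried with in-memory data:

```swift
import Foundation

struct BookMetadata: Decodable {
    let title: String
    let subtitle: String
    let authors: [String]
    let coauthors: [String]
}

// Hypothetical error type for this sketch; not part of Foundation.
enum MetadataError: Error {
    case fileNotFound(String)
}

// Decodes property-list data into a BookMetadata value.
func decodeMetadata(from data: Data) throws -> BookMetadata {
    return try PropertyListDecoder().decode(BookMetadata.self, from: data)
}

// Finds "<name>.plist" in the given bundle and decodes it,
// throwing if the file is missing, unreadable, or malformed.
func loadMetadata(named name: String,
                  in bundle: Bundle = .main) throws -> BookMetadata {
    guard let url = bundle.url(forResource: name,
                               withExtension: "plist") else {
        throw MetadataError.fileNotFound(name)
    }
    return try decodeMetadata(from: Data(contentsOf: url))
}
```

A call site would wrap try loadMetadata(named: "book-metadata") in the same do/catch as before, and the catch block would now also see the file-not-found case.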

So, the technique here is that you just add a non-source file to the project, ensure that its Target Membership check box is set, and then you can find it at runtime, using the methods in Bundle.

Using Images in Apps

Simple enough, right? Now, what if you want to do the same thing with images? Obviously, you could use the same technique, finding image files in the bundle and loading their data into UIImage instances, like this:

| | if let imageURL = Bundle.main.url(forResource: "original-cover", |

| | withExtension: "jpg"), |

| | let imageData = try? Data(contentsOf: imageURL) { |

| | let image = UIImage(data: imageData) |

| | imageView.image = image |

| | } else { |

| | print("didn't find") |

| | } |

This will work, but it’s a really bad idea. Don’t do this! The most obvious problem here—there are several, but this is the worst—is that this doesn’t account for the different resolutions of iOS devices. No one resolution is ideal for all devices; a given image either has less resolution than a top-of-the-line device like an iPhone X wants, or more resolution than the lesser devices can use. Maybe that can’t be helped for images you download at runtime, but for images supplied with the app, there’s no excuse not to tailor each exactly to the target devices.

The right way to bundle images with your app is to use an asset catalog. An asset catalog can hold the same image at multiple resolutions. By default, an app project will have an asset catalog named Assets.xcassets, which contains an empty entry for the app’s icon. You can add more assets of various types (3D textures, sticker packs, Apple TV image stacks, etc.). For an image asset, you’re expected to provide the image at “normal” resolution (one on-screen pixel per virtual point), and then double and triple sizes (usually denoted with a @2x and @3x in their filenames). In the following screenshot, we’ve added appropriately scaled images for the cover of the iOS 10 SDK Development book, giving this asset the name adios4-cover:

The advantage of this approach is that thanks to app slicing, only the resources relevant to a given device will be sent to that device when it downloads your app from the App Store. That means that the “Plus” phones will only get the triple-size image, while smaller phones will only get the double-size. Either way, neither device wastes bandwidth or storage on resources it doesn’t need.
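To make the arithmetic behind the normal, double, and triple sizes concrete, here is a small sketch of how many pixels each variant needs in order to cover the same area in points. The function name and the 200×300-point size are made up for illustration; this is not how UIKit itself selects an image.

```swift
// Hypothetical helper: pixel dimensions needed for a given size in points
// at a given scale factor (1 for normal, 2 for @2x, 3 for @3x).
func pixelSize(widthInPoints: Int, heightInPoints: Int,
               scale: Int) -> (width: Int, height: Int) {
    return (widthInPoints * scale, heightInPoints * scale)
}

// A 200x300-point image needs 200x300, 400x600, and 600x900 pixels.
for scale in 1...3 {
    let size = pixelSize(widthInPoints: 200, heightInPoints: 300,
                         scale: scale)
    print("@\(scale)x: \(size.width) x \(size.height) pixels")
}
```

The triple-size variant carries nine times as many pixels as the normal one, which is why shipping every variant to every device would be wasteful.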

As a bonus to you, the developer, loading the image gets easier, because you can use the name from the asset catalog as the argument to the UIImage(named:) initializer:

| | if let image = UIImage(named: "adios4-cover") { |

| | imageView.image = image |

| | } |

In fact, even that isn’t the best way to load an image. After all, you might misspell the name (which is why the initializer is failable, and you have to use an if let). Now, this will really blow your mind, but if you just start typing the name of the image from the asset catalog, it will be offered as an autocomplete as shown in the figure.

Accept the autocomplete with the return key, and an image literal will be added to your source. You’re literally saying “set the image property of imageView to this specific image from the asset catalog.” As a result, a tiny thumbnail of the image will be shown, inline, in your source code:

This seems super crazy at first, to have an image sitting in the middle of your code, but it’s actually great. Because Xcode knows what’s in your asset catalog, it can guarantee that an image can be loaded at runtime, so it can assign it directly like this, eliminating the if let dance required for UIImage(named:).

Using Colors in Apps

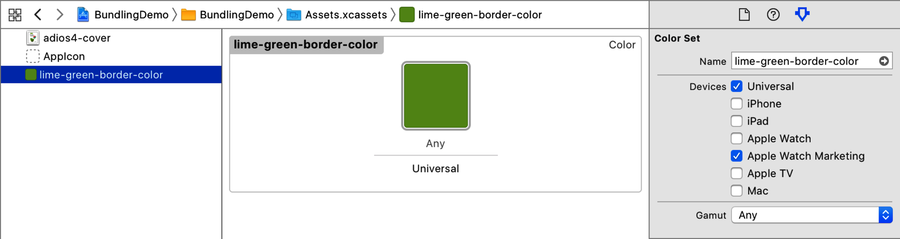

In a similar way, you can use the asset catalog to define colors. For example, you can choose a color with the standard macOS color pickers and define it as lime-green-border-color in the asset catalog, as shown in the following figure:

Then you can load it by name with the initializer UIColor(named:), like this:

| | if let borderColor = UIColor(named: "lime-green-border-color") { |

| | imageView.layer.borderColor = borderColor.cgColor |

| | imageView.layer.borderWidth = 10.0 |

| | imageView.layer.cornerRadius = 10.0 |

| | } |

However, color literals in code work differently than images. Xcode doesn’t let you just type lime-green-border-color and autocomplete it to your color. You can autocomplete “Color literal” to get an editable color inline with your code, and then set it to any color you like, but it has no relationship to the asset catalog.
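For reference, a color literal is stored in the source file as a #colorLiteral expression with fixed component values, which is why it can’t track the asset catalog. The sketch below uses one as a fallback when the named color can’t be found. The helper name borderColor() and the component values are assumptions made up for this example, and the code only compiles where UIKit is available.

```swift
#if canImport(UIKit)
import UIKit

// Hypothetical helper: prefer the named color from the asset catalog,
// falling back to a hard-coded color literal if the asset is missing.
func borderColor() -> UIColor {
    return UIColor(named: "lime-green-border-color")
        ?? #colorLiteral(red: 0.5, green: 1.0, blue: 0.0, alpha: 1.0)
}
#endif
```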

That’s kind of a bummer, because between images, colors, and storyboards (which we’ll visit in the next chapter), it’s possible (at least in theory) to send the Xcode project to a non-developer designer, and have them do most or all of their work directly in Xcode, rather than sending Photoshop files to developers to implement. But for such a workflow to be dependable, you really want the designer to be working with named colors in the asset catalog, not individual color splotches in source files.

Still, compared to the old ways of doing things—writing “theme” classes with dozens or hundreds of class methods to create and return each color or image used in the app—asset catalogs are a big win.