Model Order Selection

Visa Koivunen and Esa Ollila, Department of Signal Processing and Acoustics, School of Electrical Engineering, Aalto University, Finland

Abstract

In this chapter we provide an overview of methods used for model order selection. One looks for the best dimension for a parametric model given a set of observed data. Most widely used model order selection techniques stem either form statistical inference or information theory. Among the commonly used statistical methods are Bayesian Information Criterion (BIC) and generalized likelihood ratio tests (GLRT), whereas the Minimum Description Length (MDL) and Akaike Information Criterion (AIC) have their roots in information and coding theory. We also show that likelihood function plays an important role in most model selection techniques. Application examples how these methods may be applied in practice are provided.

Keywords

Model order estimation; Parametric model; Statistical inference; Information theory; Stochastic complexity; Likelihood function

3.02.1 Introduction

In model selection the main task is to choose a mathematical model for a phenomenon from a collection of models given some evidence such as the observed data at hand. Informally, one aims at identifying a proper complexity for the model. Typical application examples include finding the number of radio frequency signals impinging an antenna array, choosing the order of an ARMA model used for analyzing time series data, variable selection in the regression model in statistics, estimating the channel order in wireless communication receiver and selecting the order of a state-space model used in tracking a maneuvering target in radar.

Model order selection uses a set of observed data and seeks the best dimension for the parametric model used. This is related to the idea of the inverse experiment and the maximum likelihood estimation where one looks for the parameters that most likely have produced the observed data. Various methods for model selection or model order estimation stem from the detection and estimation theory, statistical inference, information and coding theory and machine learning. The outcome of a model order selection is an integer-value describing the dimension of the model.

One of the well known heuristic approach used in model selection is Occam’s razor (e.g., [1]). It usually favors parsimony, i.e., simpler explanations instead of more complex ones while retaining the explanatory power of the model. Hence, Occam’s razor chooses the simplest model among the candidate models. Simpler models tend to capture the underlying structure of the phenomenon (such as signal of interest) better, and consequently provide better performance in predicting the output when new observations are processed. More complex models tend to overfit the observed data and to be more sensitive to noise. Moreover, the model may not generalize well to new data. The same problem is addressed in statistical inference where there is a trade-off between bias and variance when explaining the observed data. Too simple models lead to a bigger systematic error (bias), while too complex models may lead to an increased variance of estimated parameters and higher Cramer-Rao Bound as well as to finding structures in the data that do not actually exist. Parsimony is also favored in the probability theory, since the probability of making an error will increase by introducing additional assumptions that may be invalid or unnecessary.

Traditionally it is assumed that there is a single correct hypothesis within the set of hypotheses considered that describe the (true) model generating the given data. In practice, any model that we assume will be only an approximation to the truth: for example, a data does not necessarily follow an exactly normal distribution. Yet the described criteria are still useful in selecting the “best model” from the set which is most supported by the data within the mathematical paradigm of the selected information criteria. There are many practical consequences for a misspecified model order. In state-space models and Kalman filtering, models with too low order make the innovation sequence correlated and the optimality of the filter is lost. On the other hand, if the order is too high, one may end up with tracking the noise that can lead to divergence of the Kalman filter. In regression, models with too low order may lead to biased estimates since they do not have enough flexibility to fit the data with high fidelity. On the other hand, too many parameters increase the variance of the estimates unnecessarily and cause the loss of optimality.

Model selection is of fundamental interest to the investigator seeking a better understanding of the phenomenon under study and the literature on this subject is considerable. Several review articles [2–4] are noticeable among others as well as the full length text-books [1,5,6]. In this overview, we first review the classical model selection paradigms: the Bayesian Information Criterion (BIC) with an emphasis on its asymptotic approximation due to Schwarz [7], Akaike’s Information Criterion (AIC) [8,9], stochastic complexity [10] and its early development, the Minimum Description Length (MDL) criterion due to Rissanen [11] and the generalized likelihood ratio test (GLRT-) based sequential hypothesis testing (see, e.g., [12]). Among the methods considered, the MDL and AIC have their foundations in the information theory and coding whereas BIC and GLRT methods have their roots in major statistical inference paradigms (Bayesian and likelihood principle). The AIC is based on the asymptotic approximation of the Kullback-Leibler (K-L) divergence, a fundamental notion in information theory, whereas Rissanen’s MDL is based on the coding theory. However, both of these methods can also be linked with the likelihood principle and the classification of the statistical inference and the information theory based methods is provided mainly for pedagogic and informative purpose. There are some more recent contributions such as the exponentially embedded family (EEF) method by Kay [13] and Bozdogan’s extensions of AIC [14] among others which are not reviewed herein.

This chapter is organized as follows. First, Section 3.02.2 describes the basic principles, challenges and the complexity of the model selection problems with a simple example: variable selection in the multiple linear regression model. Especially, the need for compromise with the goodness of fit and concision (“principle of parsimony”), which is the key idea of model selection, is illustrated and the forward stepwise regression variable selection principle using the AIC is explained along with the cross-validation principle. Section 3.02.3 addresses statistical inference based methods (BIC, GLRT) in detail. Section 3.02.4 introduces the AIC, the MDL and its variants and enhancements. In Section 3.02.5, the model selection methods are derived and illustrated using a practical engineering example in determining the dimension of the signal subspace; such problems arise commonly in sensor array processing, and in harmonic retrieval; see, e.g., [15–18].

3.02.2 Example: variable selection in regression

In the multiple linear regression model the data ![]() is modeled as

is modeled as ![]() , where

, where ![]() denotes the unknown vector of regression coefficients, X is the known

denotes the unknown vector of regression coefficients, X is the known ![]() matrix of explanatory variables (

matrix of explanatory variables (![]() ) and

) and ![]() is the N-variate noise vector with i.i.d. components from normal distribution

is the N-variate noise vector with i.i.d. components from normal distribution ![]() with an unknown variance

with an unknown variance ![]() . Let us call the columns of X (explanatory) variables following the convention in statistics, i.e., we use

. Let us call the columns of X (explanatory) variables following the convention in statistics, i.e., we use ![]() to denote both the column of X and the ith variable of the model. Thus,

to denote both the column of X and the ith variable of the model. Thus, ![]() and the p.d.f. and the log-likelihood are

and the p.d.f. and the log-likelihood are

where ![]() denotes the parameter vector. Commonly there is a large set of explanatory variables and the main interest is choosing the subset of explanatory variables that significantly contribute to the data (response variable) y; see [19,20]. Thus, we consider the hypotheses

denotes the parameter vector. Commonly there is a large set of explanatory variables and the main interest is choosing the subset of explanatory variables that significantly contribute to the data (response variable) y; see [19,20]. Thus, we consider the hypotheses

![]()

where ![]() , denotes the index set of the contributing variables. Then under

, denotes the index set of the contributing variables. Then under ![]() , the MLEs of the regression coefficient and the noise variance correspond to the classic least squares (LS-)estimates

, the MLEs of the regression coefficient and the noise variance correspond to the classic least squares (LS-)estimates ![]() and the residual sample variance,

and the residual sample variance, ![]() , respectively, where

, respectively, where ![]() is the residual sum of squares. Hence the −2

is the residual sum of squares. Hence the −2 ![]() (maximum log-likelihood) under hypothesis

(maximum log-likelihood) under hypothesis ![]() becomes

becomes

![]() (2.2)

(2.2)

which always decrease when we include additional variables in the model. This is because ![]() , where

, where ![]() denotes the residual variance with one additional variable in the model. Then,

denotes the residual variance with one additional variable in the model. Then, ![]() if we introduce as many explanatory variables as responses. More generally, if two models that are compared are nested, then the one with more parameters will always yield a larger maximum log-likelihood, even though it is not necessarily a better model.

if we introduce as many explanatory variables as responses. More generally, if two models that are compared are nested, then the one with more parameters will always yield a larger maximum log-likelihood, even though it is not necessarily a better model.

3.02.2.1 AIC and the stepwise regression

Previously, we noticed that minimizing ![]() cannot be used as the criterion. An intuitive approach is to introduce an additive term that penalizes overfitting. The penalty term needs to be an increasing function of

cannot be used as the criterion. An intuitive approach is to introduce an additive term that penalizes overfitting. The penalty term needs to be an increasing function of ![]() , so that the criterion −2

, so that the criterion −2 ![]() (maximum log-likelihood) decreases as the penalty term increases yielding a trade-off between goodness of fit and simplicity. This principle of parsimony is the fundamental idea of model selection. As we shall see, the classic approximations of information criteria reduce to such forms. For example, the AIC can be stated as

(maximum log-likelihood) decreases as the penalty term increases yielding a trade-off between goodness of fit and simplicity. This principle of parsimony is the fundamental idea of model selection. As we shall see, the classic approximations of information criteria reduce to such forms. For example, the AIC can be stated as

![]()

that is, ![]() , and the regression model (hypothesis

, and the regression model (hypothesis ![]() ) that minimize the criterion is chosen. Here, the penalty term is

) that minimize the criterion is chosen. Here, the penalty term is ![]() which increases with

which increases with ![]() and hence penalizes selecting too many variables. In the regression setting,

and hence penalizes selecting too many variables. In the regression setting,

![]()

where we have suppressed the irrelevant additive constant N from (2.2) and the “plus one” term from ![]() which does not depend on the number of explanatory variables

which does not depend on the number of explanatory variables ![]() in the model. Note that the robust versions of AIC have been proposed, e.g., [21] which utilizes robust M-estimation.

in the model. Note that the robust versions of AIC have been proposed, e.g., [21] which utilizes robust M-estimation.

The AIC is not computationally feasible for variable selection when the number of explanatory variable candidates k is large. We need to consider all index sets I of size ![]() for each

for each ![]() and for each m, there are

and for each m, there are ![]() possible index sets. More practical and commonly used approach is to use stepwise regression variable selection method. In the forward stepwise search strategy one starts with a model containing only an intercept term (or a subset of explanatory variables), and then includes the variable that yields the largest reduction in the AIC, i.e., the largest reduction in the RSS (and thus improving the fit the most). One stops when no variable produces a normalized reduction greater than a threshold. There are several variants of stepwise regression which differ based on the criterion used for inclusion/exclusion of variables. For example, instead of AIC, Mallows Cp, BIC, etc. can be used. One particularly simple stepwise regression is to include in each step the variable that is most correlated with the current residual

possible index sets. More practical and commonly used approach is to use stepwise regression variable selection method. In the forward stepwise search strategy one starts with a model containing only an intercept term (or a subset of explanatory variables), and then includes the variable that yields the largest reduction in the AIC, i.e., the largest reduction in the RSS (and thus improving the fit the most). One stops when no variable produces a normalized reduction greater than a threshold. There are several variants of stepwise regression which differ based on the criterion used for inclusion/exclusion of variables. For example, instead of AIC, Mallows Cp, BIC, etc. can be used. One particularly simple stepwise regression is to include in each step the variable that is most correlated with the current residual ![]() , where

, where ![]() is the LS fit based on the current selection of variables. This strategy is called the orthogonal matching pursuit[22] in the engineering literature. Some modern approaches for variable selection in regression that can be related to the forward stepwise regression are the LASSO (least absolute shrinkage and selection operator) [23] and the LARS (least angle regression) [24]. LASSO minimizes the usual LS criterion (the sum of squared residuals) with an additional

is the LS fit based on the current selection of variables. This strategy is called the orthogonal matching pursuit[22] in the engineering literature. Some modern approaches for variable selection in regression that can be related to the forward stepwise regression are the LASSO (least absolute shrinkage and selection operator) [23] and the LARS (least angle regression) [24]. LASSO minimizes the usual LS criterion (the sum of squared residuals) with an additional ![]() -norm constraint on the regression coefficient. As a result, some regression coefficients are shrunk to(wards) zero. LARS is a forward stepwise regression method where the selection of variables is done differently: namely a regression coefficient of the variable is increased until that variable is no longer the one most correlated with the residual r. A simple modification of the LARS algorithm can be used to compute the LASSO solution [24].

-norm constraint on the regression coefficient. As a result, some regression coefficients are shrunk to(wards) zero. LARS is a forward stepwise regression method where the selection of variables is done differently: namely a regression coefficient of the variable is increased until that variable is no longer the one most correlated with the residual r. A simple modification of the LARS algorithm can be used to compute the LASSO solution [24].

3.02.2.2 Cross-validation and bootstrap methods

Cross-validation (CV) is a widely used technique for model order selection in machine learning, regression analysis, pattern recognition, and density estimation; see [25]. In CV one is interested in not only finding a statistical model for the observed data but also in prediction performance for other independent data sets. The goal is to ensure that the model generalizes well, not adapting too much to the particular data set at hand. Unlike many other model selection techniques, CV can be considered a computer-based heuristic method that replaces some statistical analyses by repeated computations over subsets of data.

In CV, one splits the data set into subsets, one training set for the initial statistical analysis and the other (potentially multiple) subsets for testing or validating the analysis. If the test data are independent and identically distributed (i.i.d.), they can be viewed as new data in prediction. If the sample size for the training set is small or the dimension of the initial model is high, it is likely that the obtained model will fit the set of observations with too high fidelity. Obviously, this specific model will not fit the other test data sets equally well and the prediction estimates produced by the initial model using the training set may contain significantly off-values than expected ones.

The prediction results from the testing (validation) sets are used to evaluate the goodness of the model optimized for the training set. The original model may then be adjusted by combining with the models estimated from the test sets to produce a single model, for example by averaging. CV may also be used in cases where the response is indicator of a class membership instead of being continuous valued. This is the case in M-ary detection problems and pattern recognition, for example.

There are many ways to subdivide the data into training and test sets. The assignment may be random such that the training set and the test set have equal number of observations. These two data sets may be used for training and testing in an alternating manner. A very commonly used approach is so-called leave-one-out (LOO) cross-validation. Each individual observation in the data set is serving a test set at its turn while the remaining data form the training set. This is done exhaustively until all the observations have been used as a test set. It is obvious that LOO has a high computational complexity since the training set is different and training process is repeated every time.

Cross-validation can also be used for model order selection in regression. Let us assume that we have a “supermodel” of large number of explaining variables and we want to select which subset of explaining or predictor variables gives the best model in terms of its prediction performance. By using the cross-validation approach with the test sets of observations one may find a subset of explaining variables that gives the best prediction performance measured, for example, in terms of mean square error. If cross-validation is not used, adding more predictor variables in the model decreases the risk or error measure, such as mean square error, sum of squared residuals or classification error for the training set but the predictive performance may be very different.

A few remarks on the statistical performance of the CV method are in place. CV gives a too positive view on the quality of the model optimized for the training set. The risk or error measure such as MSE will be smaller for the training set than the one for the test set. The variance of the cross-validation estimates depends on the data splitting scheme and it is difficult to analyze the performance of the method in a general case. However, it has been established in some special cases, see [25]. If the model is estimated for each subset of test data, the values of estimates will vary (estimator is a random variable).

The CV estimators often have some bias that depends on the size of the training sample relative to the whole sample size. It tends to decrease as the training sample size increases. For the same size of training set the bias remains the same but differences may be attested in variance (accuracy). The overall performance of the CV estimators depends on many factors including the size of training data set, validation data set, and the number of data subsets.

Bootstrapping [26] is a resampling method that is typically used for characterizing confidence intervals. It has found applications in hypothesis testing and model order estimation as well, see, e.g., [27]. Assuming independent and identically distributed (i.i.d.) data samples, it resamples the observed data set with replacement to simulate new data called bootstrap data. Then the parameter of interest such as the variance or the mean is computed for the bootstrap sample. It is a computer-based method since it requires that a very large number of resamples are drawn, and bootstrap estimates for each bootstrap sample are computed. Availability of highly powerful computing machinery has made the use of bootstrap more feasible in different applications as well as allowed to increase the sample sizes. The bootstrap method does not make any assumptions on the underlying distribution of the observed data. It is a nonparametric method and allows for characterizing the distribution of the observed data. This is particularly useful for non-Gaussian data where the distribution may not be symmetric or unimodal. An example of model order estimation in a sensor array signal processing application is provided in [27].

3.02.3 Methods based on statistical inference paradigms

In this section, we review the model selection methods based on two main stream statistical inference paradigms (Bayesian and likelihood principles). The widely used asymptotic approximation of the Bayesian Information Criterion (BIC) due to Schwarz [7] is reviewed first, followed by the GLRT-based model selection method which is based on the sequential use of the generalized likelihood ratio test (GLRT). The latter method is one of the oldest approaches in model selection.

3.02.3.1 Bayesian Information Criterion (BIC)

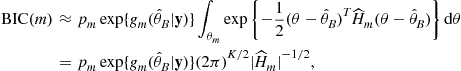

We start by recalling the steps and assumptions that lead to the classic asymptotic approximation of BIC due to Schwarz [7] which can be stated as follows:

![]()

so that ![]() and the hypothesis

and the hypothesis ![]() (mth model) that minimizes the criterion is to be chosen. This approximation is based on the second-order Taylor expansion of the log posterior density and some regularity assumptions on the log-likelihood and log posterior. Recall from earlier discussion on the nested hypotheses, the term −2

(mth model) that minimizes the criterion is to be chosen. This approximation is based on the second-order Taylor expansion of the log posterior density and some regularity assumptions on the log-likelihood and log posterior. Recall from earlier discussion on the nested hypotheses, the term −2 ![]() (maximum log-likelihood) monotonically decreases with an increasing m (model index), and cannot be used alone as it leads to the choice of the model with the largest number of parameters. The second term is the penalty term that increases with

(maximum log-likelihood) monotonically decreases with an increasing m (model index), and cannot be used alone as it leads to the choice of the model with the largest number of parameters. The second term is the penalty term that increases with ![]() and hence penalizes the use of too many parameters.

and hence penalizes the use of too many parameters.

In the Bayesian framework, the parameter vector is taken to be a random vector (r.v.) with a prior density ![]() . For the mth model, let

. For the mth model, let

![]()

denote the log posterior density (neglecting the irrelevant additive constant term). The full BIC is obtained by integrating out the nuisance variable ![]() in the posterior density of

in the posterior density of ![]() given the data y and the model m:

given the data y and the model m:

![]() (2.3)

(2.3)

The mth model that maximizes ![]() over

over ![]() is selected for the data. In most cases, the integration cannot be solved in a closed-form and approximations are sought for.

is selected for the data. In most cases, the integration cannot be solved in a closed-form and approximations are sought for.

In Bayesian approach, the maximum-a-posteriori probability (MAP) estimate ![]() is a natural point estimate of

is a natural point estimate of ![]() and assume that it is unique. An approximation of

and assume that it is unique. An approximation of ![]() around the MAP estimate based on second-order Taylor expansion yields

around the MAP estimate based on second-order Taylor expansion yields

![]()

Note that the first-order term vanishes when evaluated at the maximum. Let us assume that ![]() is twice continuously differentiable (

is twice continuously differentiable (![]() ). This means that its second-order partial derivatives w.r.t.

). This means that its second-order partial derivatives w.r.t. ![]() exist on

exist on ![]() and that the resulting

and that the resulting ![]() (negative) Hessian matrix

(negative) Hessian matrix ![]() is symmetric. Substituting the approximation into (2.3) yields

is symmetric. Substituting the approximation into (2.3) yields

where the last equality follows by noticing that the integrand is the density of ![]() -variate

-variate ![]() distribution up to a constant term

distribution up to a constant term ![]() , where

, where ![]() denotes the matrix determinant. Equivalently, we can minimize the log of BIC. In logarithmic form, the BIC becomes

denotes the matrix determinant. Equivalently, we can minimize the log of BIC. In logarithmic form, the BIC becomes

![]() (2.4)

(2.4)

An alternative approximation for the BIC can be derived with the following assumption:

(A) the prior distribution ![]() is sufficiently flat around the MAP estimate

is sufficiently flat around the MAP estimate ![]() and does not depend on N for

and does not depend on N for ![]() .

.

Due to (A), when evaluating the integral in (2.3), we may extract the ![]() term from the integrand as a constant proportional to

term from the integrand as a constant proportional to ![]() , yielding the approximation

, yielding the approximation

![]() (2.5)

(2.5)

![]() (2.6)

(2.6)

where ![]() is the Fisher information matrix of the mth model evaluated at the unique MLE

is the Fisher information matrix of the mth model evaluated at the unique MLE ![]() . Note that (2.6) is an approximation of (2.5) using (similarly as earlier) the second-order Taylor expansion on

. Note that (2.6) is an approximation of (2.5) using (similarly as earlier) the second-order Taylor expansion on ![]() around the MLE. Thus in terms of logarithms, we have

around the MLE. Thus in terms of logarithms, we have

![]() (2.7)

(2.7)

Note that approximations (2.4) and (2.7) differ as the former (resp. the latter) is obtained for the case that the full log posterior (resp. log-likelihood) is expanded to the second-order terms. Furthermore, (2.7) relies upon (A).

Next, note that under some regularity conditions and due to consistency of the MLE ![]() , we can in most cases approximate

, we can in most cases approximate ![]() for a sufficiently large N as

for a sufficiently large N as

![]() (2.8)

(2.8)

Since the three first terms in (2.7) are independent of N [recall assumption (A)], we may neglect the first three terms in (2.7) for large N, yielding after multiplying by ![]() the earlier stated classic approximation due to Schwarz,

the earlier stated classic approximation due to Schwarz,

![]() (2.9)

(2.9)

Let us recall the assumptions for the above result. First of all, the log-likelihood function ![]() ought to be a

ought to be a ![]() -function around the neighborhood of the unique MLE

-function around the neighborhood of the unique MLE ![]() . Second, the prior density is assumed to verify Assumption (A). Third, sufficient regularity conditions based on likelihood theory, e.g., consistency of

. Second, the prior density is assumed to verify Assumption (A). Third, sufficient regularity conditions based on likelihood theory, e.g., consistency of ![]() , are needed for the approximation (2.8) to hold. Note that the classic BIC approximation, in contrast to approximations (2.4) or (2.7), is based on asymptotic arguments, i.e., N is assumed to be sufficiently large. Naturally, how large N is required, depends on the application at hand. The benefit of such an approximation is its simplicity and good performance observed in many applications. As we shall see in Section 3.02.4, Rissanen’s MDL derived using arguments in coding theory can be asymptotically approximated in the same form as BIC in (2.9). This sometimes leads to misunderstanding that MDL and BIC are the same.

, are needed for the approximation (2.8) to hold. Note that the classic BIC approximation, in contrast to approximations (2.4) or (2.7), is based on asymptotic arguments, i.e., N is assumed to be sufficiently large. Naturally, how large N is required, depends on the application at hand. The benefit of such an approximation is its simplicity and good performance observed in many applications. As we shall see in Section 3.02.4, Rissanen’s MDL derived using arguments in coding theory can be asymptotically approximated in the same form as BIC in (2.9). This sometimes leads to misunderstanding that MDL and BIC are the same.

If we assume that the set of hypotheses ![]() includes the true model

includes the true model ![]() that generated the data, then the probability that the true

that generated the data, then the probability that the true ![]() th model is selected can often be calculated analytically. For example, in the regression setting, the BIC estimate has proven to be consistent [28], that is,

th model is selected can often be calculated analytically. For example, in the regression setting, the BIC estimate has proven to be consistent [28], that is, ![]() as

as ![]() . This can be contrasted with the AIC estimate that fails to be consistent, due to a tendency of overfitting [28].

. This can be contrasted with the AIC estimate that fails to be consistent, due to a tendency of overfitting [28].

3.02.3.2 GLRT-based sequential hypothesis testing

Let us assume that sequence of model p.d.f.’s ![]() , belong to the same parametric family (i.e., share the same functional form, so

, belong to the same parametric family (i.e., share the same functional form, so ![]() for

for ![]() ) and are ordered in the sense that

) and are ordered in the sense that ![]() . Hence, we have a nested set of hypotheses

. Hence, we have a nested set of hypotheses ![]() . We can consider

. We can consider ![]() as the “full model” and

as the “full model” and ![]() , a parsimonious model presuming that a subset of the parameters of the full model are equal to zero. This type of situation can arise in many engineering applications; a practical application is given in Section 3.02.5. In such a scenario, the GLRT statistic, defined as the

, a parsimonious model presuming that a subset of the parameters of the full model are equal to zero. This type of situation can arise in many engineering applications; a practical application is given in Section 3.02.5. In such a scenario, the GLRT statistic, defined as the ![]() the log of the ratio of the likelihood functions maximized under

the log of the ratio of the likelihood functions maximized under ![]() and under the full model

and under the full model ![]() :

:

(2.10)

(2.10)

where ![]() and

and ![]() and

and ![]() denotes the log-likelihood. By the general maximum likelihood theory,

denotes the log-likelihood. By the general maximum likelihood theory, ![]() has a

has a ![]() distribution asymptotically (i.e., chi-squared distribution with

distribution asymptotically (i.e., chi-squared distribution with ![]() degrees of freedom), where

degrees of freedom), where

![]()

The null hypothesis ![]() is rejected if

is rejected if ![]() exceeds a rejection threshold

exceeds a rejection threshold ![]() , where

, where ![]() is

is ![]() th quantile of the

th quantile of the ![]() distribution (i.e.,

distribution (i.e., ![]() when

when ![]() ). Such a detector has an asymptotic probability of false alarm (PFA) equal to

). Such a detector has an asymptotic probability of false alarm (PFA) equal to ![]() . For example, choosing PFA equal to

. For example, choosing PFA equal to ![]() is quite reasonable for many applications; however, in radar applications, smaller PFA is often desired.

is quite reasonable for many applications; however, in radar applications, smaller PFA is often desired.

The sequential GLRT-based model selection procedure chooses the first hypothesis ![]() (and hence

(and hence ![]() as the model order) that is not rejected by the GLRT statistic. In other words, we first test

as the model order) that is not rejected by the GLRT statistic. In other words, we first test ![]() and if it is rejected by the GLRT statistic (2.10) (

and if it is rejected by the GLRT statistic (2.10) (![]() for some predetermined PFA

for some predetermined PFA ![]() ), then test

), then test ![]() , etc. until acceptance, i.e., until

, etc. until acceptance, i.e., until ![]() . In case that all hypotheses are rejected, then we accept the full model

. In case that all hypotheses are rejected, then we accept the full model ![]() . Note that the above decision sequence is not statistically independent, even asymptotically. Hence, even though the asymptotic PFA of each individual GLRT for testing

. Note that the above decision sequence is not statistically independent, even asymptotically. Hence, even though the asymptotic PFA of each individual GLRT for testing ![]() is

is ![]() , it does not mean that the asymptotic PFA of the GLRT-based model selection is

, it does not mean that the asymptotic PFA of the GLRT-based model selection is ![]() . It is also possible to use a backward testing strategy, i.e., test the sequence of hypotheses

. It is also possible to use a backward testing strategy, i.e., test the sequence of hypotheses ![]() from the reverse direction. Then we select the model in the sequence before the first rejection. That is, we first test

from the reverse direction. Then we select the model in the sequence before the first rejection. That is, we first test ![]() and if it is accepted, then test

and if it is accepted, then test ![]() . Continue until the first rejection and select the hypothesis tested prior the rejection. If it is known a priori that a large model order is more likely than a small model order, then the backward strategy might be preferred from pure computational point of view.

. Continue until the first rejection and select the hypothesis tested prior the rejection. If it is known a priori that a large model order is more likely than a small model order, then the backward strategy might be preferred from pure computational point of view.

3.02.4 Information and coding theory based methods

The information and coding theory based approaches for model order selection are frequently used. We will consider two such techniques, Information Criterion proposed by Akaike [8,9] and Minimum Description Length (MDL) methods proposed by Rissanen [10,11]. The enhanced versions of both methods have been introduced in order to provide more accurate results for finite sample sizes and in some cases to relax unnecessary assumptions on underlying distribution models.

3.02.4.1 Akaike Information Criterion (AIC)

The AIC criterion is based on an asymptotic approximation of the K-L divergence. Let ![]() denote the true p.d.f. of the data and let

denote the true p.d.f. of the data and let ![]() denote the approximating model. The Kullback-Leibler (K-L) divergence (or relative entropy), can be defined as the integral (as f and

denote the approximating model. The Kullback-Leibler (K-L) divergence (or relative entropy), can be defined as the integral (as f and ![]() are continuous functions)

are continuous functions)

![]()

and it can be viewed as the information loss when the model f is used to approximate ![]() or as a “distance” (discrepancy) between f and

or as a “distance” (discrepancy) between f and ![]() since it verifies

since it verifies

![]()

Clearly, among the models in the set of hypotheses ![]() , the best one loses the least information (have smallest value of

, the best one loses the least information (have smallest value of ![]() ) relative to the other models. Note that the K-L divergence can be expressed as

) relative to the other models. Note that the K-L divergence can be expressed as

![]()

where the model dependent part is ![]() . When finding the minimum of

. When finding the minimum of ![]() over the set of models considered is equivalent to finding the maximum of the function

over the set of models considered is equivalent to finding the maximum of the function

![]()

among all the models. The function ![]() , sometimes referred to as relative K-L information, cannot be used directly in model selection because it requires the full knowledge of the true data p.d.f.

, sometimes referred to as relative K-L information, cannot be used directly in model selection because it requires the full knowledge of the true data p.d.f. ![]() and the true value of the parameter

and the true value of the parameter ![]() in the approximate model

in the approximate model ![]() , both of which are unknown. Hence we settle with its estimate

, both of which are unknown. Hence we settle with its estimate

![]() (2.11)

(2.11)

where w is an (imaginary) independent data vector from the same unknown true distribution ![]() as the observed data y and

as the observed data y and ![]() denotes the MLE of

denotes the MLE of ![]() based on the assumed model f and the data y. Note that the statistical expectations above are w.r.t. to the true (unknown) p.d.f.

based on the assumed model f and the data y. Note that the statistical expectations above are w.r.t. to the true (unknown) p.d.f. ![]() and we maximize (2.11) instead of

and we maximize (2.11) instead of ![]() . Key finding is that for a large sample size N, the maximized log-likelihood

. Key finding is that for a large sample size N, the maximized log-likelihood ![]() is a biased estimate of (2.11) where the bias is approximately equal to

is a biased estimate of (2.11) where the bias is approximately equal to ![]() , the number of the estimable parameters in the model. The maximum log-likelihood corrected by the asymptotic bias, i.e.,

, the number of the estimable parameters in the model. The maximum log-likelihood corrected by the asymptotic bias, i.e., ![]() , can be viewed (under regularity conditions) as an unbiased estimator of

, can be viewed (under regularity conditions) as an unbiased estimator of ![]() when N is large. Multiplying by

when N is large. Multiplying by ![]() gives the famous Akaike’s Information Criterion

gives the famous Akaike’s Information Criterion

![]()

Unlike the BIC, the AIC is not consistent, namely the probability of correctly identifying the true model does not converge to one with the sample length. It can be shown that for nested hypotheses and under fairly general conditions,

![]()

that is, AIC tends to overfit, i.e., choosing model order that is larger then the true model order. See, e.g., [15].

Some enhanced versions have been introduced where the penalty term is larger to reduce the chance of overfitting. The following corrected AIC rule [29,30]

![]() (2.12)

(2.12)

![]() (2.13)

(2.13)

provides a finite sample (second-order bias correction) adjustment to AIC; asymptotically they are the same since ![]() when

when ![]() , but

, but ![]() for finite N. Consequently the penalty term of

for finite N. Consequently the penalty term of ![]() is always larger than that of AIC, which means that the criterion is less likely to overfit. On the other hand, this comes with an increased risk of underfitting, i.e., choosing a smaller model order than the true one. Since the underfitting risk does not seem to be unduly large in many practical experiments, many researchers recommend the use of

is always larger than that of AIC, which means that the criterion is less likely to overfit. On the other hand, this comes with an increased risk of underfitting, i.e., choosing a smaller model order than the true one. Since the underfitting risk does not seem to be unduly large in many practical experiments, many researchers recommend the use of ![]() instead of AIC. It should be noted, however, that

instead of AIC. It should be noted, however, that ![]() possess a sound theoretical justification only in the regression model with normal errors, where it is an unbiased estimate of the relative K-L information; note that AIC is by its construction only an asymptotically unbiased estimate of the relative K-L information. Besides the regression model, the reasonings for

possess a sound theoretical justification only in the regression model with normal errors, where it is an unbiased estimate of the relative K-L information; note that AIC is by its construction only an asymptotically unbiased estimate of the relative K-L information. Besides the regression model, the reasonings for ![]() , lay merely on its property of reducing the risk of overfitting. The so-called generalized information criteria (GIC) [2], defined as in (2.13) as

, lay merely on its property of reducing the risk of overfitting. The so-called generalized information criteria (GIC) [2], defined as in (2.13) as

![]()

can often outperform AIC for ![]() . For example, when

. For example, when ![]() the

the ![]() is obtained and the choice

is obtained and the choice ![]() yields the MDL criterion.

yields the MDL criterion.

3.02.4.2 Minimum Description Length

The Minimum Description Length [11] method stems from the algorithmic information and coding theory. The basic idea is to find any regularity in the observed data that can be used to compress the data. This allows for condensing the data so that less symbols are needed to present it. The code used for compressing the data should be such that the total length (in bits) of description of the code and the description of the data is minimal. There exists a simple and refined version of the MDL method. The concept of stochastic complexity will be employed in estimating the model order. It defines the code length of the data with respect to the employed probability distribution model. It also establishes a connection to statistical model order estimation methods such as BIC and MDL. In some special cases, the approximation of stochastic complexity and BIC leads to the same criterion. Detailed descriptions of the MDL method can be found in [1,4].

The MDL method does not make any assumptions on the underlying distribution of the data. In fact, it is considered to be less important since the task at hand is not to estimate the distribution that generated the data but rather to extract useful information or model from the data that facilitates by compressing it efficiently. However, codes used for compressing data and the probability mass functions are related. The Kraft inequality establishes a correspondence between code lengths and probabilities whereas the information inequality relates the optimal code in terms of the minimum expected code length with the distribution of the data. If the data obeys the distribution ![]() , then the code with the length

, then the code with the length ![]() achieves the minimum code length on average.

achieves the minimum code length on average.

A simple version of the MDL principle may now be stated as follows. Let ![]() be a class of candidate models or codes used to explain the data y. The best model

be a class of candidate models or codes used to explain the data y. The best model ![]() is the one that minimizes

is the one that minimizes

![]()

i.e., the sum of the length of the description of the model and the length of the data encoded using the model. Trading-off between complexity of the code and complexity of the data is built in the MDL method. Hence, it is less likely to overestimate the model order and overfit the data than AIC, for example.

A probability distribution where the parameter ![]() may vary defines a family of distributions. The task of interest is to optimally encode the data and to use the whole family of distributions in order to make the process efficient. An obvious choice from the distribution family would be to use the code corresponding to

may vary defines a family of distributions. The task of interest is to optimally encode the data and to use the whole family of distributions in order to make the process efficient. An obvious choice from the distribution family would be to use the code corresponding to ![]() where

where ![]() is the MLE of

is the MLE of ![]() for the observed data, i.e.,

for the observed data, i.e., ![]() that most likely would have produced the data set. Consequently, the code corresponding to the maximum likelihood (or the probability model) depends on the data and the code cannot be specified before observing the data. This makes this approach less practical. One could avoid this problem by using an universal distribution model that is representative of the entire family of distributions and would allow coding the data set almost as efficiently as the code associated with the maximum likelihood function. The refined MDL uses normalized maximum likelihood approach for finding an universal distribution model used to assist the coding. This will lead to an excessive code length that is often called regret. The Kullback-Leibler divergence

that most likely would have produced the data set. Consequently, the code corresponding to the maximum likelihood (or the probability model) depends on the data and the code cannot be specified before observing the data. This makes this approach less practical. One could avoid this problem by using an universal distribution model that is representative of the entire family of distributions and would allow coding the data set almost as efficiently as the code associated with the maximum likelihood function. The refined MDL uses normalized maximum likelihood approach for finding an universal distribution model used to assist the coding. This will lead to an excessive code length that is often called regret. The Kullback-Leibler divergence ![]() may be used as a starting point where p is employed to approximate the distribution f. Finding the optimal universal distribution

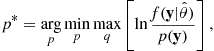

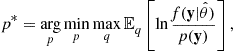

may be used as a starting point where p is employed to approximate the distribution f. Finding the optimal universal distribution ![]() can be formulated as a minimax problem:

can be formulated as a minimax problem:

where q denotes the set of all feasible distributions. The distribution ![]() represents the worst case approximation of the maximum likelihood code and it is allowed to be almost any distribution within a model class of feasible distributions. Hence, the assumption on the true distribution generating the data may be considered to be relaxed. The so-called normalized maximum likelihood (NML) distribution satisfies the above optimization problem. It may be written as [31]

represents the worst case approximation of the maximum likelihood code and it is allowed to be almost any distribution within a model class of feasible distributions. Hence, the assumption on the true distribution generating the data may be considered to be relaxed. The so-called normalized maximum likelihood (NML) distribution satisfies the above optimization problem. It may be written as [31]

![]()

where ![]() denotes the maximum likelihood estimate of

denotes the maximum likelihood estimate of ![]() over the observation set. The denominator is a normalizing factor that is the sum of the maximum likelihoods of all potential observation sets Y acquired in experiments, and the numerator represents the maximized likelihood value. With this solution, the worst case data regret is minimized. It has been further shown that by solving a related minimax problem

over the observation set. The denominator is a normalizing factor that is the sum of the maximum likelihoods of all potential observation sets Y acquired in experiments, and the numerator represents the maximized likelihood value. With this solution, the worst case data regret is minimized. It has been further shown that by solving a related minimax problem

the worst case of the expected regret is considered instead of the regret for the observed data set, see [32].

The code length associated with normalized maximum likelihood is called the stochastic complexity of the data for a distribution family. It minimizes the worst case expected regret

The MDL method employs first the normalized maximum likelihood distributions to order the candidate models and chooses the model that gives minimal stochastic complexity. An early result by Rissanen [11] and a frequently used approximation for the stochastic complexity is given as follows:

![]()

which is the same result as the approximation of BIC by Schwarz given earlier in this section. Other approximations of the stochastic complexity that are more accurate avoid some pitfalls of the above approximation, for example:

![]()

where ![]() denotes the Fischer information matrix of sample size 1. The term

denotes the Fischer information matrix of sample size 1. The term ![]() contains the higher order terms and vanishes as

contains the higher order terms and vanishes as ![]() . The integral term with Fischer information needs to be finite. See [33] for more detail on other approximations and their computation. The MDL principle and the normalized maximum likelihood (NML) lead to the same expression as the Bayesian Information Criterion (BIC) in some special cases, for example, when Jeffrey’s noninformative prior is used in a Bayesian model and

. The integral term with Fischer information needs to be finite. See [33] for more detail on other approximations and their computation. The MDL principle and the normalized maximum likelihood (NML) lead to the same expression as the Bayesian Information Criterion (BIC) in some special cases, for example, when Jeffrey’s noninformative prior is used in a Bayesian model and ![]() . In general, however, the NML and BIC have different formulations. The MDL has been shown to provide consistent estimates of the model order, e.g., for estimating the dimensionality of the signal subspace in the presence of white noise [34]. This problem is reviewed in detail in the next section.

. In general, however, the NML and BIC have different formulations. The MDL has been shown to provide consistent estimates of the model order, e.g., for estimating the dimensionality of the signal subspace in the presence of white noise [34]. This problem is reviewed in detail in the next section.

3.02.5 Example: estimating number of signals in subspace methods

We consider the problem of estimating the dimensionality of the signal subspace. Such a problem commonly arises in array processing, time-delay estimation, frequency estimation, channel order estimation, for example. In these applications, if the signals are not coherent and signals are narrow-band, the dimension of the signal subspace defines the number of signals.

We consider the model, where the received data ![]() consists of (unobservable) random zero mean signal vector

consists of (unobservable) random zero mean signal vector ![]() plus additive zero mean white noise

plus additive zero mean white noise ![]() (

(![]() with

with ![]() unknown) uncorrelated with the signal v which is supposed to lie in an m-dimensional subspace of

unknown) uncorrelated with the signal v which is supposed to lie in an m-dimensional subspace of ![]() (

(![]() ), called the signal subspace. The covariance of the data

), called the signal subspace. The covariance of the data ![]() is then

is then ![]() , where the signal covariance matrix

, where the signal covariance matrix ![]() is of

is of ![]() . Let

. Let ![]() denote the ordered eigenvalues of

denote the ordered eigenvalues of ![]() . If

. If ![]() , then the smallest eigenvalue

, then the smallest eigenvalue ![]() equals

equals ![]() and has multiplicity

and has multiplicity ![]() , whereas the m largest eigenvalues are equal to the nonzero eigenvalues of

, whereas the m largest eigenvalues are equal to the nonzero eigenvalues of ![]() plus

plus ![]() . Hence, testing that the signal subspace has dimensionality m (

. Hence, testing that the signal subspace has dimensionality m (![]() ) can be formulated as a hypothesis of the equality of the eigenvalues

) can be formulated as a hypothesis of the equality of the eigenvalues

![]() (2.14)

(2.14)

and a GLRT can be formed against a general alternative ![]() arbitrary. Thus, we have a set of hypotheses

arbitrary. Thus, we have a set of hypotheses ![]() and a model selection method can be used to determine the best model given the data. To this end, note that

and a model selection method can be used to determine the best model given the data. To this end, note that ![]() corresponds to the noise-only hypothesis. Let us assume that v and n possess M-variate circular complex normal (CN) distribution in which case the received data y has a zero mean CN distribution with covariance matrix

corresponds to the noise-only hypothesis. Let us assume that v and n possess M-variate circular complex normal (CN) distribution in which case the received data y has a zero mean CN distribution with covariance matrix ![]() . Further suppose that an i.i.d. random sample

. Further suppose that an i.i.d. random sample ![]() is observed from

is observed from ![]() .

.

For the above problem, GLRT-based sequential test or the MDL and AIC criteria have been widely in sensor array signal processing and subspace estimation methods; see, e.g., [15–17], where the task at hand is to determine the number of signals m impinging on the sensor array. In this case, the signal v has a representation ![]() , where

, where ![]() denote the array response (steering vector) for a random source signal

denote the array response (steering vector) for a random source signal ![]() impinging on the array from the direction-of-arrival (DOA)

impinging on the array from the direction-of-arrival (DOA) ![]() (

(![]() ). In this application, the sample

). In this application, the sample ![]() represents the array snapshots at time instants

represents the array snapshots at time instants ![]() and the SCM

and the SCM ![]() is the conventional estimate of the array covariance matrix

is the conventional estimate of the array covariance matrix ![]() , where the signal covariance matrix

, where the signal covariance matrix ![]() has a representation

has a representation ![]() , where

, where ![]() denotes the array steering matrix and

denotes the array steering matrix and ![]() the vector of source signals. Another type of the problem such as determining the degree of non-circularity of complex random signals is illustrated in detail in [35].

the vector of source signals. Another type of the problem such as determining the degree of non-circularity of complex random signals is illustrated in detail in [35].

Let us proceed by first constructing the sequential GLRT-based model order selection procedure for the problem at hand. The p.d.f. of ![]() is

is ![]() , and thus the log-likelihood function of the sample is

, and thus the log-likelihood function of the sample is

where ![]() denotes the sample covariance matrix (SCM),

denotes the sample covariance matrix (SCM), ![]() denotes matrix trace and

denotes matrix trace and ![]() denotes the vector consisting of functionally independent real-valued parameters in

denotes the vector consisting of functionally independent real-valued parameters in ![]() . Naturally,

. Naturally, ![]() has different parameter space

has different parameter space ![]() under each hypothesis

under each hypothesis ![]() dimension and hence also different order

dimension and hence also different order ![]() . Under no-structure hypothesis

. Under no-structure hypothesis ![]() . This follows as

. This follows as ![]() is Hermitian and hence there are

is Hermitian and hence there are ![]() estimable parameters due to

estimable parameters due to ![]() and

and ![]() for

for ![]() and M estimable parameters

and M estimable parameters ![]() . For

. For ![]() , where

, where ![]() is the number of functionally independent real parameters in

is the number of functionally independent real parameters in ![]() with rank m plus 1 due to

with rank m plus 1 due to ![]() . The eigenvalue decomposition of

. The eigenvalue decomposition of ![]() is

is ![]() where

where ![]() represent its nonzero eigenvalues and

represent its nonzero eigenvalues and ![]() s the corresponding eigenvectors. There are

s the corresponding eigenvectors. There are ![]() real parameters in the eigenvectors but they are tied by

real parameters in the eigenvectors but they are tied by ![]() constraints due to the scale and phase indeterminacy of each eigenvector1 and an additional

constraints due to the scale and phase indeterminacy of each eigenvector1 and an additional ![]() constraints due to orthogonality of the eigenvectors,

constraints due to orthogonality of the eigenvectors, ![]() for

for ![]() . Since there are m eigenvalues

. Since there are m eigenvalues ![]() and

and ![]() , the total number of estimable parameters in

, the total number of estimable parameters in ![]() is

is

![]() (2.15)

(2.15)

when ![]() .

.

Let us then derive the GLRT statistic ![]() as defined in (2.10). Under

as defined in (2.10). Under ![]() for

for ![]() , the maximum log-likelihood is

, the maximum log-likelihood is

![]() (2.16)

(2.16)

where ![]() denote the eigenvalues of the SCM

denote the eigenvalues of the SCM ![]() and

and ![]() . The proof of (2.16) follows similarly as in Anderson [36, Theorem 2] in the real case. Then given the expression (2.16), it is easy to verify the GLRT statistic

. The proof of (2.16) follows similarly as in Anderson [36, Theorem 2] in the real case. Then given the expression (2.16), it is easy to verify the GLRT statistic ![]() , simplifies into the form

, simplifies into the form

![]() (2.17)

(2.17)

where

![]() (2.18)

(2.18)

is the ratio of the geometric to the arithmetic mean of the noise subspace eigenvalues of the SCM. If ![]() holds, this ratio tends to unity as

holds, this ratio tends to unity as ![]() . Now recall from Section 3.02.3.2 that the GLRT statistic

. Now recall from Section 3.02.3.2 that the GLRT statistic ![]() in (2.17) has a limiting

in (2.17) has a limiting ![]() distribution, where

distribution, where

![]()

The (forward) GLRT-based model selection procedure then chooses the first ![]() not rejected by the GLRT, i.e., the first m which verifies

not rejected by the GLRT, i.e., the first m which verifies ![]() , where (as discussed in Section 3.02.3.2) the detection threshold

, where (as discussed in Section 3.02.3.2) the detection threshold ![]() can be set as the

can be set as the ![]() th quantile of the

th quantile of the ![]() . This choice yields the asymptotic PFA

. This choice yields the asymptotic PFA ![]() for testing hypothesis (2.14).

for testing hypothesis (2.14).

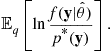

Let us now derive the AIC and MDL criteria. Let us first point out that the ![]() term in (2.16) can be dropped as it does not depend on m whereas any constant term (namely,

term in (2.16) can be dropped as it does not depend on m whereas any constant term (namely, ![]() ) not dependent on m can be added, thus showing that

) not dependent on m can be added, thus showing that ![]() in (2.16) can be written compactly as

in (2.16) can be written compactly as ![]() up to irrelevant additive constant not dependent on m. Further noting that the +1 can be dropped from the penalty term

up to irrelevant additive constant not dependent on m. Further noting that the +1 can be dropped from the penalty term ![]() in (2.15), we have the result that the AIC and the MDL criteria can be written in the following forms:

in (2.15), we have the result that the AIC and the MDL criteria can be written in the following forms:

An estimate of the AIC/MDL model order, i.e., the dimensionality of the signal subspace m, is found by choosing ![]() that minimizes the AIC/MDL criterion above. As already pointed out, the penalty term of the MDL is

that minimizes the AIC/MDL criterion above. As already pointed out, the penalty term of the MDL is ![]() times the penalty term of AIC. Since

times the penalty term of AIC. Since ![]() already at small sample lengths (

already at small sample lengths (![]() ), the MDL method is less prone to overfitting the data compared to the AIC. Note that first term in the MDL and AIC criterions above is simply the GLRT statistic

), the MDL method is less prone to overfitting the data compared to the AIC. Note that first term in the MDL and AIC criterions above is simply the GLRT statistic ![]() . Wax and Kailath [15] showed the MDL rule consistent in choosing the true dimensionality whereas the AIC tends to overestimate the dimensionality (in the large sample limit). Later [34] proved the consistency of the MDL rule when the CN assumption does not hold.

. Wax and Kailath [15] showed the MDL rule consistent in choosing the true dimensionality whereas the AIC tends to overestimate the dimensionality (in the large sample limit). Later [34] proved the consistency of the MDL rule when the CN assumption does not hold.

3.02.6 Conclusion

In this section, we provided an overview of model order estimation methods. The majority of well-defined methods stem from statistical inference or coding and information theory. The likelihood function plays a crucial role in most methods based on solid theoretical foundation. Statistical and information theoretical methods stem from fundamentally different theoretical background but have been shown to lead to identical results under certain restrictive assumptions and model classes. A practical example of model order estimation in case of low-rank model that is commonly used in sensor array signal processing and many other tasks that require high-resolution capability was provided.

References

1. Grünwald PD. The Minimum Description Length Principle. MIT Press 2007.

2. Stoica P, Selen Y. Model-order selection: a review of information criterion rules. IEEE Signal Process Mag. 2004;21(4):36–47.

3. Lanterman AD. Schwarz, Wallace and Rissanen: intertwining themes in theories of model selection. Int Stat Rev. 2001;69(2):185–212.

4. Grünwald PD, Myung I, Pitt M, eds. Advances in Minimum Description Length: Theory and Applications. MIT Press 2005.

5. H. Linhart, W. Zucchini, Model Selection, Wiley, New York.

6. Burnham K, Anderson D. Model Selection and Inference: A Practical Information-Theoretic Approach. New York: Springer; 1998.

7. Schwarz G. Estimating the dimension of a model. Ann Stat. 1978;6(2):461–464.

8. Akaike H. A new look at the statistical model identification. IEEE Trans Automat Control. 1974;19:716–723.

9. Akaike H. On the likelihood of a time series model. The Statistician. 1978;27(3):217–235.

10. Rissanen J. Stochastic complexity and modeling. Ann Stat. 1986;14(3):1080–1100.

11. Rissanen J. Modeling by the shortest data description. Automatica. 1978;14:465–471.

12. Kay SM. Fundamentals of Statistical Signal Processing: Detection Theory. Prentice Hall 1998.

13. Kay S. Exponentially embedded families—new approaches to model order estimation. IEEE Trans Aerosp Electron Syst. 2005;41(1):333–344.

14. Bozdogan H. Model selection and Akaike’s information criterion (AIC): the general theory and its analytical extensions. Psychometrika. 1987;52(3):345–370.

15. Wax T, Kailath T. Detection of signals by information theoretic criteria. IEEE Trans Acoust Speech Signal Process. 1985;33(2):387–392.

16. Zhang QT, Wong KM. Information theoretic criteria for the determination of the number of signals in spatially correlated noise. IEEE Trans Signal Process. 1993;41(4):1652–1663.

17. Xu G, Roy RHI, Kailath T. Detection of number of sources via exploitation of centro-symmetry property. IEEE Trans Signal Process. 1994;42(1):102–112.

18. Shah AA, Tufts DW. Determination of the dimension of a signal subspace from short data records. IEEE Trans Signal Process. 1994;42(9):2531–2535.

19. Weisberg S. Applied Linear Regression. New York: Wiley; 1980.

20. Draper NR, Smith H. Applied Regression Analysis. third ed. New York: Wiley; 1998.

21. Ronchetti E. Robust model selection in regression. Stat Prob Lett. 1985;3:21–23.

22. Tropp JA, Gilbert AC. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans Inform Theory. 2007;53(12):4655–4666.

23. Tibshirani R. Regression shrinkage and selection via the lasso. J Roy Stat Soc Ser B. 1996;58:267–288.

24. Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Ann Stat. 2004;32(2):407–451.

25. Arlot S, Celisse A. A survey of cross-validation procedures for model selection. Stat Surv. 2010;4:40–79.

26. Efron B. Bootstrap methods: another look at the jackknife. Ann Stat. 1979;7(1):1–26.

27. R. Brcich, A.M. Zoubir, P. Pelin, Detection of sources using bootstrap techniques, IEEE Trans. Signal Process. 50 (2) 206–215.

28. Nishii R. Asymptotic properties of criteria for selection of variables in multiple regression. Ann Stat. 1984;2:758–765.

29. Cavanaugh JE. Unifying the derivations for the Akaike and corrected Akaike information criteria. Stat Prob Lett. 1977;33:201–208.

30. Sugiura N. Further analysis of the data by Akaike information criterion and the finite corrections. Commun Stat Theory Methods. 1978;7:13–26.

31. Shtarkov Y. Block universal sequential coding of single messages. Prob Inform Transmission. 1987;23(3):175–186.

32. Myung J, Navarro D, Pitt M. Model selection by normalized maximum likelihood. J Math Psychol. 2006;50:167–179.

33. Kontkanen P, Buntine W, Myllymaki P, Rissanen J, Tirri H. Efficient computation of stochastic complexity. In: Proceedings of International Conference on Artificial Intelligence and, Statistics. 2003;233–238.

34. Zhao LC, Krishnaiah PR, Bai ZD. On detection of the number of signals in presence of white noise. J Multivar Anal. 1986;20(1):1–25.

35. Novey M, Ollila E, Adali T. On testing the extent of noncircularity. IEEE Trans Signal Process. 2011;59(11):5632–5637.

36. Anderson TW. Asymptotic theory for principal component analysis. Ann Math Stat. 1963;1:122–148.

1Recall that scale indeterminacy is fixed by assuming ![]() and the phase of

and the phase of ![]() can be fixed similarly by any suitable convention.

can be fixed similarly by any suitable convention.