3.09.7 Comparing the performance of cooperative strategies

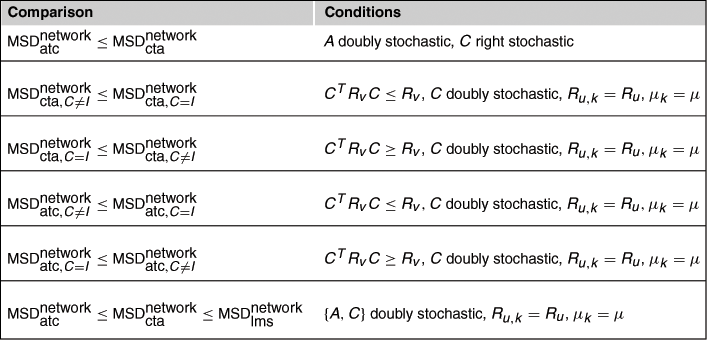

Using the expressions just derived for the MSD of the network, we can compare the performance of various cooperative and non-cooperative strategies. Table 9.6 further ahead summarizes the results derived in this section and the conditions under which they hold.

3.09.7.1 Comparing ATC and CTA strategies

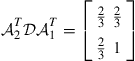

We first compare the performance of the adaptive ATC and CTA diffusion strategies (9.153) and (9.154) when they employ a doubly stochastic combination matrix ![]() . That is, let us consider the two scenarios:

. That is, let us consider the two scenarios:

![]() (9.332)

(9.332)

![]() (9.333)

(9.333)

where ![]() is now assumed to be doubly stochastic, i.e.,

is now assumed to be doubly stochastic, i.e.,

![]() (9.334)

(9.334)

with its rows and columns adding up to one. For example, these conditions are satisfied when ![]() is left stochastic and symmetric. Then, expressions (9.307) and (9.309) give:

is left stochastic and symmetric. Then, expressions (9.307) and (9.309) give:

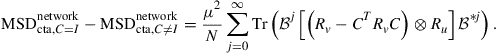

![]() (9.335)

(9.335)

![]() (9.336)

(9.336)

where

![]() (9.337)

(9.337)

Following [18], introduce the auxiliary nonnegative-definite matrix

![]() (9.338)

(9.338)

Then, it is immediate to verify from (9.306) that

(9.339)

(9.339)

(9.340)

(9.340)

so that

(9.341)

(9.341)

Now, since ![]() is doubly stochastic, it also holds that the enlarged matrix

is doubly stochastic, it also holds that the enlarged matrix ![]() is doubly stochastic. Moreover, for any doubly stochastic matrix

is doubly stochastic. Moreover, for any doubly stochastic matrix ![]() and any nonnegative-definite matrix

and any nonnegative-definite matrix ![]() of compatible dimensions, it holds that (see part (f) of Theorem C.3):

of compatible dimensions, it holds that (see part (f) of Theorem C.3):

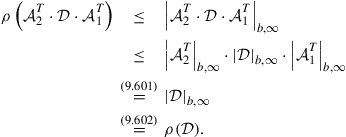

![]() (9.342)

(9.342)

Applying result (9.342) to (9.341) we conclude that

![]() (9.343)

(9.343)

so that the adaptive ATC strategy (9.153) outperforms the adaptive CTA strategy (9.154) for doubly stochastic combination matrices ![]() .

.

3.09.7.2 Comparing strategies with and without information exchange

We now examine the effect of information exchange ![]() on the performance of the adaptive ATC and CTA diffusion strategies (9.153) and (9.154) under conditions (9.310)–(9.312) for uniform data profile.

on the performance of the adaptive ATC and CTA diffusion strategies (9.153) and (9.154) under conditions (9.310)–(9.312) for uniform data profile.

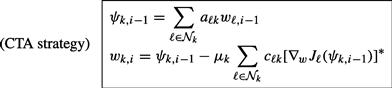

3.09.7.2.1 CTA Strategies

We start with the adaptive CTA strategy (9.154), and consider two scenarios with and without information exchange. These scenarios correspond to the following selections in the general description (9.201)–(9.203):

![]() (9.344)

(9.344)

![]() (9.345)

(9.345)

Then, expressions (9.322) and (9.323) give:

![]() (9.346)

(9.346)

![]() (9.347)

(9.347)

where the matrix ![]() is defined by (9.319). Note that

is defined by (9.319). Note that ![]() , so we denote them simply by

, so we denote them simply by ![]() in the derivation that follows. Then, from expression (9.306) for the network MSD we get:

in the derivation that follows. Then, from expression (9.306) for the network MSD we get:

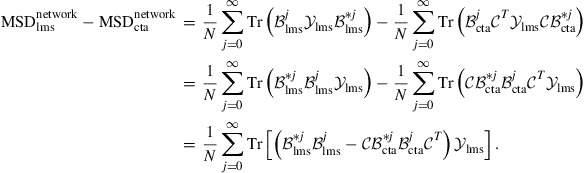

(9.348)

(9.348)

It follows that the difference in performance between both CTA implementations depends on how the matrices ![]() and

and ![]() compare to each other:

compare to each other:

![]() (9.349)

(9.349)

so that a CTA implementation with information exchange performs better than a CTA implementation without information exchange. Note that the condition on ![]() corresponds to requiring

corresponds to requiring

![]() (9.350)

(9.350)

which can be interpreted to mean that the cooperation matrix ![]() should be such that it does not amplify the effect of measurement noise. For example, this situation occurs when the noise profile is uniform across the network, in which case

should be such that it does not amplify the effect of measurement noise. For example, this situation occurs when the noise profile is uniform across the network, in which case ![]() . This is because it would then hold that

. This is because it would then hold that

![]() (9.351)

(9.351)

in view of the fact that ![]() since

since ![]() is doubly stochastic (cf. property (e) in Lemma C.3).

is doubly stochastic (cf. property (e) in Lemma C.3).

![]() (9.352)

(9.352)

so that a CTA implementation without information exchange performs better than a CTA implementation with information exchange. In this case, the condition on ![]() indicates that the combination matrix

indicates that the combination matrix ![]() ends up amplifying the effect of noise.

ends up amplifying the effect of noise.

3.09.7.2.2 ATC Strategies

We can repeat the argument for the adaptive ATC strategy (9.153), and consider two scenarios with and without information exchange. These scenarios correspond to the following selections in the general description (9.201)–(9.203):

![]() (9.353)

(9.353)

![]() (9.354)

(9.354)

Then, expressions (9.322) and (9.323) give:

![]() (9.355)

(9.355)

![]() (9.356)

(9.356)

Note again that ![]() , so we denote them simply by

, so we denote them simply by ![]() . Then,

. Then,

(9.357)

(9.357)

It again follows that the difference in performance between both ATC implementations depends on how the matrices ![]() and

and ![]() compare to each other and we obtain:

compare to each other and we obtain:

![]() (9.358)

(9.358)

and

![]() (9.359)

(9.359)

3.09.7.3 Comparing diffusion strategies with the non-cooperative strategy

We now compare the performance of the adaptive CTA strategy (9.154) to the non-cooperative LMS strategy (9.207) assuming conditions (9.310)–(9.312) for uniform data profile. These scenarios correspond to the following selections in the general description (9.201)–(9.203):

![]() (9.360)

(9.360)

![]() (9.361)

(9.361)

where ![]() is further assumed to be doubly stochastic (along with

is further assumed to be doubly stochastic (along with ![]() ) so that

) so that

![]() (9.362)

(9.362)

Then, expressions (9.322) and (9.323) give:

![]() (9.363)

(9.363)

![]() (9.364)

(9.364)

Now recall that

![]() (9.365)

(9.365)

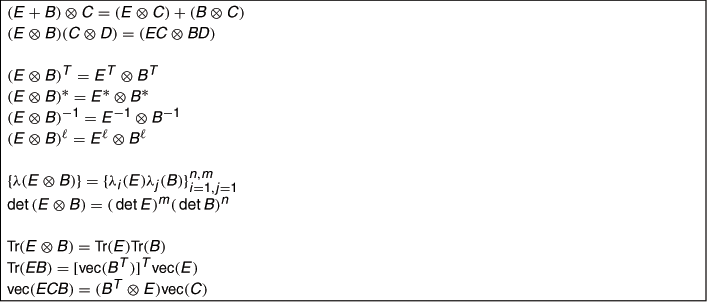

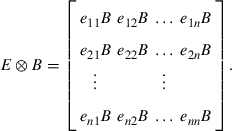

so that, using the Kronecker product property (9.317),

(9.366)

(9.366)

Then,

(9.367)

(9.367)

Let us examine the difference:

(9.368)

(9.368)

Now, due to the even power, it always holds that ![]() . Moreover, since

. Moreover, since ![]() and

and ![]() are doubly stochastic, it follows that

are doubly stochastic, it follows that ![]() is also doubly stochastic. Therefore, the matrix

is also doubly stochastic. Therefore, the matrix ![]() is nonnegative-definite as well (cf. property (e) of Lemma C.3). It follows that

is nonnegative-definite as well (cf. property (e) of Lemma C.3). It follows that

![]() (9.369)

(9.369)

But since ![]() , we conclude from (9.367) that

, we conclude from (9.367) that

![]() (9.370)

(9.370)

This is because for any two Hermitian nonnegative-definite matrices ![]() and

and ![]() of compatible dimensions, it holds that

of compatible dimensions, it holds that ![]() ; indeed if we factor

; indeed if we factor ![]() with

with ![]() full rank, then

full rank, then ![]() . We conclude from this analysis that adaptive CTA diffusion performs better than non-cooperative LMS under uniform data profile conditions and doubly stochastic

. We conclude from this analysis that adaptive CTA diffusion performs better than non-cooperative LMS under uniform data profile conditions and doubly stochastic ![]() . If we refer to the earlier result (9.343), we conclude that the following relation holds:

. If we refer to the earlier result (9.343), we conclude that the following relation holds:

![]() (9.371)

(9.371)

Table 9.6 lists the comparison results derived in this section and lists the conditions under which the conclusions hold.

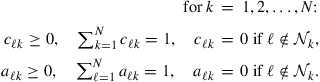

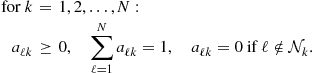

3.09.8 Selecting the combination weights

The adaptive diffusion strategy (9.201)–(9.203) employs combination weights ![]() or, equivalently, combination matrices

or, equivalently, combination matrices ![]() , where

, where ![]() and

and ![]() are left-stochastic matrices and

are left-stochastic matrices and ![]() is a right-stochastic matrix. There are several ways by which these matrices can be selected. In this section, we describe constructions that result in left-stochastic or doubly-stochastic combination matrices,

is a right-stochastic matrix. There are several ways by which these matrices can be selected. In this section, we describe constructions that result in left-stochastic or doubly-stochastic combination matrices, ![]() . When a right-stochastic combination matrix is needed, such as

. When a right-stochastic combination matrix is needed, such as ![]() , then it can be obtained by transposition of the left-stochastic constructions shown below.

, then it can be obtained by transposition of the left-stochastic constructions shown below.

3.09.8.1 Constant combination weights

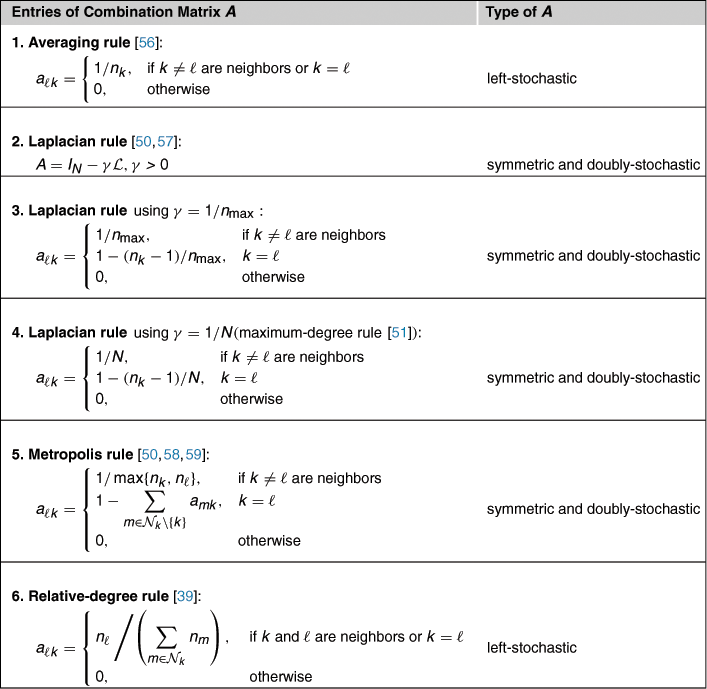

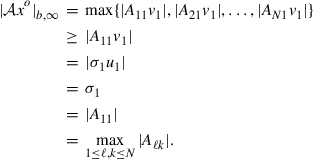

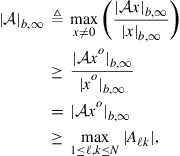

Table 9.7 lists a couple of common choices for selecting constant combination weights for a network with ![]() nodes. Several of these constructions appeared originally in the literature on graph theory. In the table, the symbol

nodes. Several of these constructions appeared originally in the literature on graph theory. In the table, the symbol ![]() denotes the degree of node

denotes the degree of node ![]() , which refers to the size of its neighborhood. Likewise, the symbol

, which refers to the size of its neighborhood. Likewise, the symbol ![]() refers to the maximum degree across the network, i.e.,

refers to the maximum degree across the network, i.e.,

![]() (9.372)

(9.372)

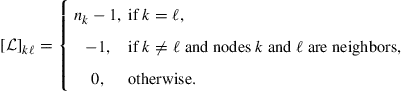

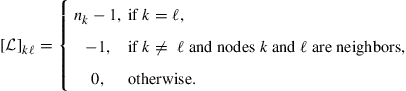

The Laplacian rule, which appears in the second line of the table, relies on the use of the Laplacian matrix ![]() of the network and a positive scalar

of the network and a positive scalar ![]() . The Laplacian matrix is defined by (9.574) in Appendix B, namely, it is a symmetric matrix whose entries are constructed as follows [60–62]:

. The Laplacian matrix is defined by (9.574) in Appendix B, namely, it is a symmetric matrix whose entries are constructed as follows [60–62]:

(9.373)

(9.373)

The Laplacian rule can be reduced to other forms through the selection of the positive parameter ![]() . One choice is

. One choice is ![]() while another choice is

while another choice is ![]() and leads to the maximum-degree rule. Obviously, it always holds that

and leads to the maximum-degree rule. Obviously, it always holds that ![]() so that

so that ![]() . Therefore, the choice

. Therefore, the choice ![]() ends up assigning larger weights to neighbors than the choice

ends up assigning larger weights to neighbors than the choice ![]() . The averaging rule in the first row of the table is one of the simplest combination rules whereby nodes simply average data from their neighbors.

. The averaging rule in the first row of the table is one of the simplest combination rules whereby nodes simply average data from their neighbors.

In the constructions in Table 9.7, the values of the weights ![]() are largely dependent on the degree of the nodes. In this way, the number of connections that each node has influences the combination weights with its neighbors. While such selections may be appropriate in some applications, they can nevertheless degrade the performance of adaptation over networks [63]. This is because such weighting schemes ignore the noise profile across the network. And since some nodes can be noisier than others, it is not sufficient to rely solely on the amount of connectivity that nodes have to determine the combination weights to their neighbors. It is important to take into account the amount of noise that is present at the nodes as well. Therefore, designing combination rules that are aware of the variation in noise profile across the network is an important task. It is also important to devise strategies that are able to adapt these combination weights in response to variations in network topology and data statistical profile. For this reason, following [64,65], we describe in the next subsection one adaptive procedure for adjusting the combination weights. This procedure allows the network to assign more or less relevance to nodes according to the quality of their data.

are largely dependent on the degree of the nodes. In this way, the number of connections that each node has influences the combination weights with its neighbors. While such selections may be appropriate in some applications, they can nevertheless degrade the performance of adaptation over networks [63]. This is because such weighting schemes ignore the noise profile across the network. And since some nodes can be noisier than others, it is not sufficient to rely solely on the amount of connectivity that nodes have to determine the combination weights to their neighbors. It is important to take into account the amount of noise that is present at the nodes as well. Therefore, designing combination rules that are aware of the variation in noise profile across the network is an important task. It is also important to devise strategies that are able to adapt these combination weights in response to variations in network topology and data statistical profile. For this reason, following [64,65], we describe in the next subsection one adaptive procedure for adjusting the combination weights. This procedure allows the network to assign more or less relevance to nodes according to the quality of their data.

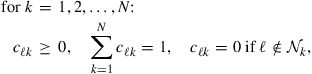

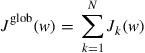

3.09.8.2 Optimizing the combination weights

Ideally, we would like to select ![]() combination matrices

combination matrices ![]() in order to minimize the network MSD given by (9.302) or (9.306). In [18], the selection of the combination weights was formulated as the following optimization problem:

in order to minimize the network MSD given by (9.302) or (9.306). In [18], the selection of the combination weights was formulated as the following optimization problem:

(9.374)

(9.374)

We can pursue a numerical solution to (9.374) in order to search for optimal combination matrices, as was done in [18]. Here, however, we are interested in an adaptive solution that becomes part of the learning process so that the network can adapt the weights on the fly in response to network conditions. We illustrate an approximate approach from [64,65] that leads to one adaptive solution that performs reasonably well in practice.

We illustrate the construction by considering the ATC strategy (9.158) without information exchange where ![]()

![]() , and

, and ![]() . In this case, recursions (9.204)–(9.206) take the form:

. In this case, recursions (9.204)–(9.206) take the form:

![]() (9.375)

(9.375)

![]() (9.376)

(9.376)

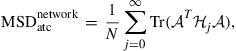

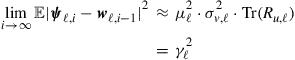

and, from (9.306), the corresponding network MSD performance is:

(9.377)

(9.377)

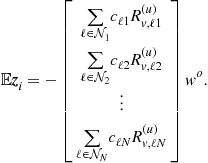

where

![]() (9.378)

(9.378)

![]() (9.379)

(9.379)

![]() (9.380)

(9.380)

![]() (9.381)

(9.381)

![]() (9.382)

(9.382)

![]() (9.383)

(9.383)

Minimizing the MSD expression (9.377) over left-stochastic matrices ![]() is generally non-trivial. We pursue an approximate solution.

is generally non-trivial. We pursue an approximate solution.

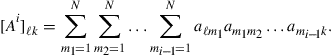

To begin with, for compactness of notation, let ![]() denote the spectral radius of the

denote the spectral radius of the ![]() block matrix

block matrix ![]() :

:

![]() (9.384)

(9.384)

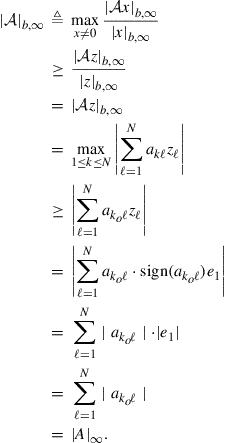

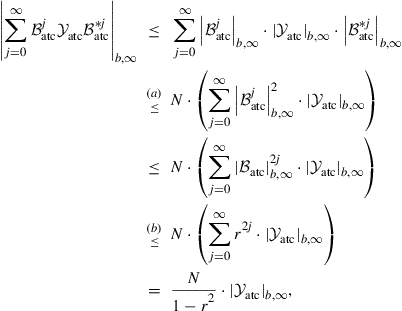

We already know, in view of the mean and mean-square stability of the network, that ![]() . Now, consider the series that appears in (9.377) and whose trace we wish to minimize over

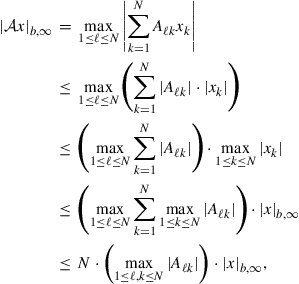

. Now, consider the series that appears in (9.377) and whose trace we wish to minimize over ![]() . Note that its block maximum norm can be bounded as follows:

. Note that its block maximum norm can be bounded as follows:

(9.385)

(9.385)

where for step (b) we use result (9.602) to conclude that

(9.386)

(9.386)

To justify step (a), we use result (9.584) to relate the norms of ![]() and its complex conjugate,

and its complex conjugate, ![]() , as

, as

![]() (9.387)

(9.387)

Expression (9.385) then shows that the norm of the series appearing in (9.377) is bounded by a scaled multiple of the norm of ![]() , and the scaling constant is independent of

, and the scaling constant is independent of ![]() . Using property (9.586) we conclude that there exists a positive constant

. Using property (9.586) we conclude that there exists a positive constant ![]() , also independent of

, also independent of ![]() , such that

, such that

(9.388)

(9.388)

Therefore, instead of attempting to minimize the trace of the series, the above result motivates us to minimize an upper bound to the trace. Thus, we consider the alternative problem of minimizing the first term of the series (9.377), namely,

(9.389)

(9.389)

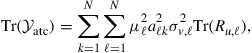

Using (9.379), the trace of ![]() can be expressed in terms of the combination coefficients as follows:

can be expressed in terms of the combination coefficients as follows:

(9.390)

(9.390)

so that problem (9.389) can be decoupled into ![]() separate optimization problems of the form:

separate optimization problems of the form:

(9.391)

(9.391)

With each node ![]() , we associate the following nonnegative noise-data-dependent measure:

, we associate the following nonnegative noise-data-dependent measure:

![]() (9.392)

(9.392)

This measure amounts to scaling the noise variance at node ![]() by

by ![]() and by the power of the regression data (measured through the trace of its covariance matrix). We shall refer to

and by the power of the regression data (measured through the trace of its covariance matrix). We shall refer to ![]() as the noise-data variance product (or variance product, for simplicity) at node

as the noise-data variance product (or variance product, for simplicity) at node ![]() . Then, the solution of (9.391) is given by:

. Then, the solution of (9.391) is given by:

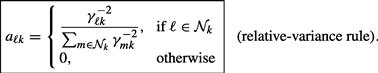

(9.393)

(9.393)

We refer to this combination rule as the relative-variance combination rule [64]; it leads to a left-stochastic matrix ![]() . In this construction, node

. In this construction, node ![]() combines the intermediate estimates

combines the intermediate estimates ![]() from its neighbors in (9.376) in proportion to the inverses of their variance products,

from its neighbors in (9.376) in proportion to the inverses of their variance products, ![]() . The result is physically meaningful. Nodes with smaller variance products will generally be given larger weights. In comparison, the following relative-degree-variance rule was proposed in [18] (a typo appears in Table III in [18], where the noise variances appear written in the table instead of their inverses):

. The result is physically meaningful. Nodes with smaller variance products will generally be given larger weights. In comparison, the following relative-degree-variance rule was proposed in [18] (a typo appears in Table III in [18], where the noise variances appear written in the table instead of their inverses):

(9.394)

(9.394)

This second form also leads to a left-stochastic combination matrix ![]() . However, rule (9.394) does not take into account the covariance matrices of the regression data across the network. Observe that in the special case when the step-sizes, regression covariance matrices, and noise variances are uniform across the network, i.e.,

. However, rule (9.394) does not take into account the covariance matrices of the regression data across the network. Observe that in the special case when the step-sizes, regression covariance matrices, and noise variances are uniform across the network, i.e., ![]() ,

, ![]() and

and ![]() for all

for all ![]() , expression (9.393) reduces to the simple averaging rule (first line of Table 9.7). In contrast, expression (9.394) reduces the relative degree rule (last line of Table 9.7).

, expression (9.393) reduces to the simple averaging rule (first line of Table 9.7). In contrast, expression (9.394) reduces the relative degree rule (last line of Table 9.7).

3.09.8.3 Adaptive combination weights

To evaluate the combination weights (9.393), the nodes need to know the variance products, ![]() , of their neighbors. According to (9.392), the factors

, of their neighbors. According to (9.392), the factors ![]() are defined in terms of the noise variances,

are defined in terms of the noise variances, ![]() , and the regression covariance matrices,

, and the regression covariance matrices, ![]() , and these quantities are not known beforehand. The nodes only have access to realizations of

, and these quantities are not known beforehand. The nodes only have access to realizations of ![]() . We now describe one procedure that allows every node

. We now describe one procedure that allows every node ![]() to learn the variance products of its neighbors in an adaptive manner. Note that if a particular node

to learn the variance products of its neighbors in an adaptive manner. Note that if a particular node ![]() happens to belong to two neighborhoods, say, the neighborhood of node

happens to belong to two neighborhoods, say, the neighborhood of node ![]() and the neighborhood of node

and the neighborhood of node ![]() , then each of

, then each of ![]() and

and ![]() need to evaluate the variance product,

need to evaluate the variance product, ![]() , of node

, of node ![]() . The procedure described below allows each node in the network to estimate the variance products of its neighbors in a recursive manner.

. The procedure described below allows each node in the network to estimate the variance products of its neighbors in a recursive manner.

To motivate the algorithm, we refer to the ATC recursion (9.375), (9.376) and use the data model (9.208) to write for node ![]() :

:

![]() (9.395)

(9.395)

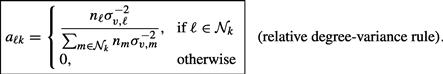

so that, in view of our earlier assumptions on the regression data and noise in Section 3.09.6.1, we obtain in the limit as ![]() :

:

![]() (9.396)

(9.396)

We can evaluate the limit on the right-hand side by using the steady-state result (9.284). Indeed, we select the vector ![]() in (9.284) to satisfy

in (9.284) to satisfy

![]() (9.397)

(9.397)

Then, from (9.284),

![]() (9.398)

(9.398)

Now recall from expression (9.379) for ![]() that for the ATC algorithm under consideration we have

that for the ATC algorithm under consideration we have

![]() (9.399)

(9.399)

so that the entries of ![]() depend on combinations of the squared step-sizes,

depend on combinations of the squared step-sizes, ![]() . This fact implies that the first term on the right-hand side of (9.396) depends on products of the form

. This fact implies that the first term on the right-hand side of (9.396) depends on products of the form ![]() ; these fourth-order factors can be ignored in comparison to the second-order factor

; these fourth-order factors can be ignored in comparison to the second-order factor ![]() for small step-sizes so that

for small step-sizes so that

(9.400)

(9.400)

in terms of the desired variance product, ![]() . Using the following instantaneous approximation at node

. Using the following instantaneous approximation at node ![]() (where

(where ![]() is replaced by

is replaced by ![]() ):

):

![]() (9.401)

(9.401)

We can now motivate an algorithm that enables node ![]() to estimate the variance product,

to estimate the variance product, ![]() of its neighbor

of its neighbor ![]() . Thus, let

. Thus, let ![]() denote an estimate for

denote an estimate for ![]() that is computed by node

that is computed by node ![]() at time

at time ![]() . Then, one way to evaluate

. Then, one way to evaluate ![]() is through the recursion:

is through the recursion:

![]() (9.402)

(9.402)

where ![]() is a positive coefficient smaller than one. Note that under expectation, expression (9.402) becomes

is a positive coefficient smaller than one. Note that under expectation, expression (9.402) becomes

![]() (9.403)

(9.403)

so that in steady-state, as ![]() ,

,

![]() (9.404)

(9.404)

Hence, we obtain

![]() (9.405)

(9.405)

That is, the estimator ![]() converges on average close to the desired variance product

converges on average close to the desired variance product ![]() . In this way, we can replace the optimal weights (9.393) by the adaptive construction:

. In this way, we can replace the optimal weights (9.393) by the adaptive construction:

(9.406)

(9.406)

Equations (9.402) and (9.406) provide one adaptive construction for the combination weights ![]() .

.

3.09.9 Diffusion with noisy information exchanges

The adaptive diffusion strategy (9.201)–(9.203) relies on the fusion of local information collected from neighborhoods through the use of combination matrices ![]() . In the previous section, we described several constructions for selecting such combination matrices. We also motivated and developed an adaptive scheme for the ATC mode of operation (9.375) and (9.376) that computes combination weights in a manner that is aware of the variation of the variance-product profile across the network. Nevertheless, in addition to the measurement noises

. In the previous section, we described several constructions for selecting such combination matrices. We also motivated and developed an adaptive scheme for the ATC mode of operation (9.375) and (9.376) that computes combination weights in a manner that is aware of the variation of the variance-product profile across the network. Nevertheless, in addition to the measurement noises ![]() at the individual nodes, we also need to consider the effect of perturbations that are introduced during the exchange of information among neighboring nodes. Noise over the communication links can be due to various factors including thermal noise and imperfect channel information. Studying the degradation in mean-square performance that results from these noisy exchanges can be pursued by straightforward extension of the mean-square analysis of Section 3.09.6, as we proceed to illustrate. Subsequently, we shall use the results to show how the combination weights can also be adapted in the presence of noisy exchange links.

at the individual nodes, we also need to consider the effect of perturbations that are introduced during the exchange of information among neighboring nodes. Noise over the communication links can be due to various factors including thermal noise and imperfect channel information. Studying the degradation in mean-square performance that results from these noisy exchanges can be pursued by straightforward extension of the mean-square analysis of Section 3.09.6, as we proceed to illustrate. Subsequently, we shall use the results to show how the combination weights can also be adapted in the presence of noisy exchange links.

3.09.9.1 Noise sources over exchange links

To model noisy links, we introduce an additive noise component into each of the steps of the diffusion strategy (9.201)–(9.203) during the operations of information exchange among the nodes. The notation becomes a bit cumbersome because we need to account for both the source and destination of the information that is being exchanged. For example, the same signal ![]() that is generated by node

that is generated by node ![]() will be broadcast to all the neighbors of node

will be broadcast to all the neighbors of node ![]() . When this is done, a different noise will interfere with the exchange of

. When this is done, a different noise will interfere with the exchange of ![]() over each of the edges that link node

over each of the edges that link node ![]() to its neighbors. Thus, we will need to use a notation of the form

to its neighbors. Thus, we will need to use a notation of the form ![]() , with two subscripts

, with two subscripts ![]() and

and ![]() , to indicate that this is the noisy version of

, to indicate that this is the noisy version of ![]() that is received by node

that is received by node ![]() from node

from node ![]() . The subscript

. The subscript ![]() indicates that

indicates that ![]() is the source and

is the source and ![]() is the sink, i.e., information is moving from

is the sink, i.e., information is moving from ![]() to

to ![]() . For the reverse situation where information flows from node

. For the reverse situation where information flows from node ![]() to

to ![]() , we would use instead the subscript

, we would use instead the subscript ![]() .

.

With this notation in mind, we model the noisy data received by node ![]() from its neighbor

from its neighbor ![]() as follows:

as follows:

![]() (9.407)

(9.407)

![]() (9.408)

(9.408)

![]() (9.409)

(9.409)

![]() (9.410)

(9.410)

where ![]() , and

, and ![]() are vector noise signals, and

are vector noise signals, and ![]() is a scalar noise signal. These are the noise signals that perturb exchanges over the edge linking source

is a scalar noise signal. These are the noise signals that perturb exchanges over the edge linking source ![]() to sink

to sink ![]() (i.e., for data sent from node

(i.e., for data sent from node ![]() to node

to node ![]() ). The superscripts

). The superscripts ![]() in each case refer to the variable that these noises perturb. Figure 9.14 illustrates the various noise sources that perturb the exchange of information from node

in each case refer to the variable that these noises perturb. Figure 9.14 illustrates the various noise sources that perturb the exchange of information from node ![]() to node

to node ![]() . The figure also shows the measurement noises

. The figure also shows the measurement noises ![]() that exist locally at the nodes in view of the data model (9.208).

that exist locally at the nodes in view of the data model (9.208).

Figure 9.14 Additive noise sources perturb the exchange of information from node ![]() to node

to node ![]() . The subscript

. The subscript ![]() in this illustration indicates that

in this illustration indicates that ![]() is the source node and

is the source node and ![]() is the sink node so that information is flowing from

is the sink node so that information is flowing from ![]() to

to ![]() .

.

We assume that the following noise signals, which influence the data received by node ![]() ,

,

![]() (9.411)

(9.411)

are temporally white and spatially independent random processes with zero mean and variances or covariances given by

![]() (9.412)

(9.412)

Obviously, the quantities

![]() (9.413)

(9.413)

are all zero if ![]() or when

or when ![]() . We further assume that the noise processes (9.411) are independent of each other and of the regression data

. We further assume that the noise processes (9.411) are independent of each other and of the regression data ![]() for all

for all ![]() and

and ![]() .

.

3.09.9.2 Error recursion

Using the perturbed data (9.407)–(9.410), the adaptive diffusion strategy (9.201)–(9.203) becomes

![]() (9.414)

(9.414)

![]() (9.415)

(9.415)

![]() (9.416)

(9.416)

Observe that the perturbed quantities ![]() , with subscripts

, with subscripts ![]() , appear in (9.414)–(9.416) in place of the original quantities

, appear in (9.414)–(9.416) in place of the original quantities ![]() that appear in (9.201)–(9.203). This is because these quantities are now subject to exchange noises. As before, we are still interested in examining the evolution of the weight-error vectors:

that appear in (9.201)–(9.203). This is because these quantities are now subject to exchange noises. As before, we are still interested in examining the evolution of the weight-error vectors:

![]() (9.417)

(9.417)

For this purpose, we again introduce the following ![]() block vector, whose entries are of size

block vector, whose entries are of size ![]() each:

each:

(9.418)

(9.418)

and proceed to determine a recursion for its evolution over time. The arguments are largely similar to what we already did before in Section 3.09.6.3 and, therefore, we shall emphasize the differences that arise. The main deviation is that we now need to account for the presence of the new noise signals; they will contribute additional terms to the recursion for ![]() —see (9.442) further ahead. We may note that some studies on the effect of imperfect data exchanges on the performance of adaptive diffusion algorithms are considered in [66–68]. These earlier investigations were limited to particular cases in which only noise in the exchange of

—see (9.442) further ahead. We may note that some studies on the effect of imperfect data exchanges on the performance of adaptive diffusion algorithms are considered in [66–68]. These earlier investigations were limited to particular cases in which only noise in the exchange of ![]() was considered (as in (9.407)), in addition to setting

was considered (as in (9.407)), in addition to setting ![]() (in which case there is no exchange of

(in which case there is no exchange of ![]() ), and by focusing on the CTA case for which

), and by focusing on the CTA case for which ![]() . Here, we consider instead the general case that accounts for the additional sources of imperfections shown in (9.408)–(9.410), in addition to the general diffusion strategy (9.201)–(9.203) with combination matrices

. Here, we consider instead the general case that accounts for the additional sources of imperfections shown in (9.408)–(9.410), in addition to the general diffusion strategy (9.201)–(9.203) with combination matrices ![]() .

.

To begin with, we introduce the aggregate ![]() zero-mean noise signals:

zero-mean noise signals:

![]() (9.419)

(9.419)

These noises represent the aggregate effect on node ![]() of all exchange noises from the neighbors of node

of all exchange noises from the neighbors of node ![]() while exchanging the estimates

while exchanging the estimates ![]() during the two combination steps (9.201) and (9.203). The

during the two combination steps (9.201) and (9.203). The ![]() covariance matrices of these noises are given by

covariance matrices of these noises are given by

![]() (9.420)

(9.420)

These expressions aggregate the exchange noise covariances in the neighborhood of node ![]() ; the covariances are scaled by the squared coefficients

; the covariances are scaled by the squared coefficients ![]() . We collect these noise signals, and their covariances, from across the network into

. We collect these noise signals, and their covariances, from across the network into ![]() block vectors and

block vectors and ![]() block diagonal matrices as follows:

block diagonal matrices as follows:

![]() (9.421)

(9.421)

![]() (9.422)

(9.422)

![]() (9.423)

(9.423)

![]() (9.424)

(9.424)

We further introduce the following scalar zero-mean noise signal:

![]() (9.425)

(9.425)

whose variance is

![]() (9.426)

(9.426)

In the absence of exchange noises for the data ![]() , the signal

, the signal ![]() would coincide with the measurement noise

would coincide with the measurement noise ![]() . Expression (9.425) is simply a reflection of the aggregate effect of the noises in exchanging

. Expression (9.425) is simply a reflection of the aggregate effect of the noises in exchanging ![]() on node

on node ![]() . Indeed, starting from the data model (9.208) and using (9.409) and (9.410), we can easily verify that the noisy data

. Indeed, starting from the data model (9.208) and using (9.409) and (9.410), we can easily verify that the noisy data ![]() are related via:

are related via:

![]() (9.427)

(9.427)

We also define (compare with (9.234) and (9.235) and note that we are now using the perturbed regression vectors ![]() ):

):

![]() (9.428)

(9.428)

![]() (9.429)

(9.429)

It holds that

![]() (9.430)

(9.430)

where

(9.431)

(9.431)

When there is no noise during the exchange of the regression data, i.e., when ![]() , the expressions for

, the expressions for ![]() reduce to expressions (9.234), (9.235), and (9.182) for

reduce to expressions (9.234), (9.235), and (9.182) for ![]() .

.

Likewise, we introduce (compare with (9.239)):

![]() (9.432)

(9.432)

![]() (9.433)

(9.433)

Compared with the earlier definition for ![]() in (9.239) when there is no noise over the exchange links, we see that we now need to account for the various noisy versions of the same regression vector

in (9.239) when there is no noise over the exchange links, we see that we now need to account for the various noisy versions of the same regression vector ![]() and the same signal

and the same signal ![]() . For instance, the vectors

. For instance, the vectors ![]() and

and ![]() would denote two noisy versions received by nodes

would denote two noisy versions received by nodes ![]() and

and ![]() for the same regression vector

for the same regression vector ![]() transmitted from node

transmitted from node ![]() . Likewise, the scalars

. Likewise, the scalars ![]() and

and ![]() would denote two noisy versions received by nodes

would denote two noisy versions received by nodes ![]() and

and ![]() for the same scalar

for the same scalar ![]() transmitted from node

transmitted from node ![]() . As a result, the quantity

. As a result, the quantity ![]() is not zero mean any longer (in contrast to

is not zero mean any longer (in contrast to ![]() , which had zero mean). Indeed, note that

, which had zero mean). Indeed, note that

(9.434)

(9.434)

(9.435)

(9.435)

Although we can continue our analysis by studying this general case in which the vectors ![]() do not have zero-mean (see [65,69]), we shall nevertheless limit our discussion in the sequel to the case in which there is no noise during the exchange of the regression data, i.e., we henceforth assume that:

do not have zero-mean (see [65,69]), we shall nevertheless limit our discussion in the sequel to the case in which there is no noise during the exchange of the regression data, i.e., we henceforth assume that:

![]() (9.436)

(9.436)

We maintain all other noise sources, which occur during the exchange of the weight estimates ![]() and the data

and the data ![]() . Under condition (9.436), we obtain

. Under condition (9.436), we obtain

![]() (9.437)

(9.437)

![]() (9.438)

(9.438)

![]() (9.439)

(9.439)

Then, the covariance matrix of each term ![]() is given by

is given by

![]() (9.440)

(9.440)

and the covariance matrix of ![]() is

is ![]() block diagonal with blocks of size

block diagonal with blocks of size ![]() :

:

![]() (9.441)

(9.441)

Now repeating the argument that led to (9.246) we arrive at the following recursion for the weight-error vector:

![]() (9.442)

(9.442)

For comparison purposes, we repeat recursion (9.246) here (recall that this recursion corresponds to the case when the exchanges over the links are not subject to noise):

![]() (9.443)

(9.443)

Comparing (9.442) and (9.443) we find that:

1. The covariance matrix ![]() in (9.443) is replaced by

in (9.443) is replaced by ![]() . Recall from (9.429) that

. Recall from (9.429) that ![]() contains the influence of the noises that arise during the exchange of the regression data, i.e., the

contains the influence of the noises that arise during the exchange of the regression data, i.e., the ![]() . But since we are now assuming that

. But since we are now assuming that ![]() , then

, then ![]() .

.

2. The term ![]() in (9.443) is replaced by

in (9.443) is replaced by ![]() . Recall from (9.432) that

. Recall from (9.432) that ![]() contains the influence of the noises that arise during the exchange of the measurement data and the regression data, i.e., the

contains the influence of the noises that arise during the exchange of the measurement data and the regression data, i.e., the ![]() .

.

3. Two new driving terms appear involving ![]() and

and ![]() . These terms reflect the influence of the noises during the exchange of the weight estimates

. These terms reflect the influence of the noises during the exchange of the weight estimates ![]() .

.

a. The term involving ![]() accounts for noise introduced at the information-exchange step (9.414) before adaptation.

accounts for noise introduced at the information-exchange step (9.414) before adaptation.

b. The term involving ![]() accounts for noise introduced during the adaptation step (9.415).

accounts for noise introduced during the adaptation step (9.415).

c. The term involving ![]() accounts for noise introduced at the information-exchange step (9.416) after adaptation.

accounts for noise introduced at the information-exchange step (9.416) after adaptation.

Therefore, since we are not considering noise during the exchange of the regression data, the weight-error recursion (9.442) simplifies to:

![]() (9.444)

(9.444)

where we used the fact that ![]() under these conditions.

under these conditions.

3.09.9.3 Convergence in the mean

Taking expectations of both sides of (9.444) we find that the mean error vector evolves according to the following recursion:

![]() (9.445)

(9.445)

with ![]() defined by (9.181). This is the same recursion encountered earlier in (9.248) during perfect data exchanges. Note that had we considered noises during the exchange of the regression data, then the vector

defined by (9.181). This is the same recursion encountered earlier in (9.248) during perfect data exchanges. Note that had we considered noises during the exchange of the regression data, then the vector ![]() in (9.444) would not be zero mean and the matrix

in (9.444) would not be zero mean and the matrix ![]() will have to be used instead of

will have to be used instead of ![]() . In that case, the recursion for

. In that case, the recursion for ![]() will be different from (9.445); i.e., the presence of noise during the exchange of regression data alters the dynamics of the mean error vector in an important way—see [65,69] for details on how to extend the arguments to this general case with a driving non-zero bias term. We can now extend Theorem 9.6.1 to the current scenario.

will be different from (9.445); i.e., the presence of noise during the exchange of regression data alters the dynamics of the mean error vector in an important way—see [65,69] for details on how to extend the arguments to this general case with a driving non-zero bias term. We can now extend Theorem 9.6.1 to the current scenario.

3.09.9.4 Mean-square convergence

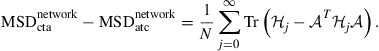

Recall from (9.264) that we introduced the matrix:

![]() (9.447)

(9.447)

We further introduce the ![]() block matrix with blocks of size

block matrix with blocks of size ![]() each:

each:

![]() (9.448)

(9.448)

Then, starting from (9.444) and repeating the argument that led to (9.279) we can establish the validity of the following variance relation:

![]() (9.449)

(9.449)

for an arbitrary nonnegative-definite weighting matrix ![]() with

with ![]() , and where

, and where ![]() is the same matrix defined earlier either by (9.276) or (9.277). We can therefore extend the statement of Theorem 9.6.7 to the present scenario.

is the same matrix defined earlier either by (9.276) or (9.277). We can therefore extend the statement of Theorem 9.6.7 to the present scenario.

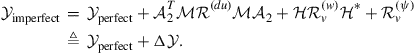

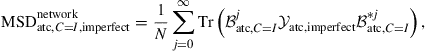

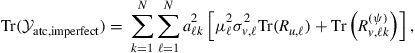

We conclude from the previous two theorems that the conditions for the mean and mean-square convergence of the adaptive diffusion strategy are not affected by the presence of noises over the exchange links (under the assumption that the regression data are exchanged without perturbation; otherwise, the convergence conditions would be affected). The mean-square performance, on the other hand, is affected as follows. Introduce the ![]() block matrix:

block matrix:

![]() (9.451)

(9.451)

which should be compared with the corresponding quantity defined by (9.280) for the perfect exchanges case, namely,

![]() (9.452)

(9.452)

When perfect exchanges occur, the matrix ![]() reduces to

reduces to ![]() . We can relate

. We can relate ![]() and

and ![]() as follows. Let

as follows. Let

(9.453)

(9.453)

Then, using (9.438) and (9.441), it is straightforward to verify that

![]() (9.454)

(9.454)

and it follows that:

(9.455)

(9.455)

Expression (9.455) reflects the influence of the noises ![]() . Substituting the definition (9.451) into (9.449), and taking the limit as

. Substituting the definition (9.451) into (9.449), and taking the limit as ![]() , we obtain from the latter expression that:

, we obtain from the latter expression that:

![]() (9.456)

(9.456)

which has the same form as (9.284); therefore, we can proceed analogously to obtain:

![]() (9.457)

(9.457)

and

![]() (9.458)

(9.458)

Using (9.455), we see that the network MSD and EMSE deteriorate as follows:

![]() (9.459)

(9.459)

![]() (9.460)

(9.460)

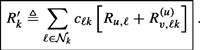

3.09.9.5 Adaptive combination weights

We can repeat the discussion from Sections 3.09.8.2 and 3.09.8.3 to devise one adaptive scheme to adjust the combination coefficients in the noisy exchange case. We illustrate the construction by considering the ATC strategy corresponding to ![]() , so that only weight estimates are exchanged and the update recursions are of the form:

, so that only weight estimates are exchanged and the update recursions are of the form:

![]() (9.461)

(9.461)

![]() (9.462)

(9.462)

where from (9.408):

![]() (9.463)

(9.463)

In this case, the network MSD performance (9.457) becomes

(9.464)

(9.464)

where, since now ![]() and

and ![]() , we have

, we have

![]() (9.465)

(9.465)

![]() (9.466)

(9.466)

![]() (9.467)

(9.467)

![]() (9.468)

(9.468)

![]() (9.469)

(9.469)

![]() (9.470)

(9.470)

![]() (9.471)

(9.471)

![]() (9.472)

(9.472)

To proceed, as was the case with (9.389), we consider the following simplified optimization problem:

(9.473)

(9.473)

Using (9.466), the trace of ![]() can be expressed in terms of the combination coefficients as follows:

can be expressed in terms of the combination coefficients as follows:

(9.474)

(9.474)

so that problem (9.473) can be decoupled into ![]() separate optimization problems of the form:

separate optimization problems of the form:

(9.475)

(9.475)

With each node ![]() , we associate the following nonnegative variance products:

, we associate the following nonnegative variance products:

![]() (9.476)

(9.476)

This measure now incorporates information about the exchange noise covariances ![]() . Then, the solution of (9.475) is given by:

. Then, the solution of (9.475) is given by:

(9.477)

(9.477)

We continue to refer to this combination rule as the relative-variance combination rule [64]; it leads to a left-stochastic matrix ![]() . To evaluate the combination weights (9.477), the nodes need to know the variance products,

. To evaluate the combination weights (9.477), the nodes need to know the variance products, ![]() , of their neighbors. As before, we can motivate one adaptive construction as follows.

, of their neighbors. As before, we can motivate one adaptive construction as follows.

We refer to the ATC recursion (9.461)–(9.463) and use the data model (9.208) to write for node ![]() :

:

![]() (9.478)

(9.478)

so that, in view of our earlier assumptions on the regression data and noise in Sections 3.09.6.1 and 3.09.9.1, we obtain in the limit as ![]() :

:

![]() (9.479)

(9.479)

In a manner similar to what was done before for (9.396), we can evaluate the limit on the right-hand side by using the corresponding steady-state result (9.456). We select the vector ![]() in (9.456) to satisfy:

in (9.456) to satisfy:

![]() (9.480)

(9.480)

Then, from (9.456),

![]() (9.481)

(9.481)

Now recall from expression (9.466) that the entries of ![]() depend on combinations of the squared step-sizes,

depend on combinations of the squared step-sizes, ![]() , and on terms involving

, and on terms involving ![]() . This fact implies that the first term on the right-hand side of (9.479) depends on products of the form

. This fact implies that the first term on the right-hand side of (9.479) depends on products of the form ![]() ; these fourth-order factors can be ignored in comparison to the second-order factor

; these fourth-order factors can be ignored in comparison to the second-order factor ![]() for small step-sizes. Moreover, the same first term on the right-hand side of (9.479) depends on products of the form

for small step-sizes. Moreover, the same first term on the right-hand side of (9.479) depends on products of the form ![]() , which can be ignored in comparison to the last term,

, which can be ignored in comparison to the last term, ![]() in (9.479), which does not appear multiplied by a squared step-size. Therefore, we can approximate:

in (9.479), which does not appear multiplied by a squared step-size. Therefore, we can approximate:

(9.482)

(9.482)

in terms of the desired variance product, ![]() . Using the following instantaneous approximation at node

. Using the following instantaneous approximation at node ![]() (where

(where ![]() is replaced by

is replaced by ![]() ):

):

![]() (9.483)

(9.483)

we can motivate an algorithm that enables node ![]() to estimate the variance products

to estimate the variance products ![]() . Thus, let

. Thus, let ![]() denote an estimate for

denote an estimate for ![]() that is computed by node

that is computed by node ![]() at time

at time ![]() . Then, one way to evaluate

. Then, one way to evaluate ![]() is through the recursion:

is through the recursion:

![]() (9.484)

(9.484)

where ![]() is a positive coefficient smaller than one. Indeed, it can be verified that

is a positive coefficient smaller than one. Indeed, it can be verified that

![]() (9.485)

(9.485)

so that the estimator ![]() converges on average close to the desired variance product

converges on average close to the desired variance product ![]() . In this way, we can replace the weights (9.477) by the adaptive construction:

. In this way, we can replace the weights (9.477) by the adaptive construction:

(9.486)

(9.486)

Equations (9.484) and (9.486) provide one adaptive construction for the combination weights ![]() .

.

3.09.10 Extensions and further considerations

Several extensions and variations of diffusion strategies are possible. Among those variations we mention strategies that endow nodes with temporal processing abilities, in addition to their spatial cooperation abilities. We can also apply diffusion strategies to solve recursive least-squares and state-space estimation problems in a distributed manner. In this section, we highlight select contributions in these and related areas.

3.09.10.1 Adaptive diffusion strategies with smoothing mechanisms

In the ATC and CTA adaptive diffusion strategies (9.153) and (9.154), each node in the network shares information locally with its neighbors through a process of spatial cooperation or combination. In this section, we describe briefly an extension that adds a temporal dimension to the processing at the nodes. For example, in the ATC implementation (9.153), rather than have each node ![]() rely solely on current data,

rely solely on current data, ![]() , and on current weight estimates received from the neighbors,

, and on current weight estimates received from the neighbors, ![]() , node

, node ![]() can be allowed to store and process present and past weight estimates, say,

can be allowed to store and process present and past weight estimates, say, ![]() of them as in

of them as in ![]() . In this way, previous weight estimates can be smoothed and used more effectively to help enhance the mean-square-deviation performance especially in the presence of noise over the communication links.

. In this way, previous weight estimates can be smoothed and used more effectively to help enhance the mean-square-deviation performance especially in the presence of noise over the communication links.

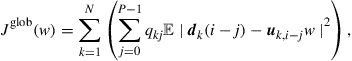

To motivate diffusion strategies with smoothing mechanisms, we continue to assume that the random data ![]() satisfy the modeling assumptions of Section 3.09.6.1. The global cost (9.92) continues to be the same but the individual cost functions (9.93) are now replaced by

satisfy the modeling assumptions of Section 3.09.6.1. The global cost (9.92) continues to be the same but the individual cost functions (9.93) are now replaced by

(9.487)

(9.487)

so that

(9.488)

(9.488)

where each coefficient ![]() is a nonnegative scalar representing the weight that node

is a nonnegative scalar representing the weight that node ![]() assigns to data from time instant

assigns to data from time instant ![]() . The coefficients

. The coefficients ![]() are assumed to satisfy the normalization condition:

are assumed to satisfy the normalization condition:

(9.489)

(9.489)

When the random processes ![]() and

and ![]() are jointly wide-sense stationary, as was assumed in Section 3.09.6.1, the optimal solution

are jointly wide-sense stationary, as was assumed in Section 3.09.6.1, the optimal solution ![]() that minimizes (9.488) is still given by the same normal equations (9.40). We can extend the arguments of Sections 3.09.3 and 3.09.4 to (9.488) and arrive at the following version of a diffusion strategy incorporating temporal processing (or smoothing) of the intermediate weight estimates [70,71]:

that minimizes (9.488) is still given by the same normal equations (9.40). We can extend the arguments of Sections 3.09.3 and 3.09.4 to (9.488) and arrive at the following version of a diffusion strategy incorporating temporal processing (or smoothing) of the intermediate weight estimates [70,71]:

![]() (9.490)

(9.490)

(9.491)

(9.491)

![]() (9.492)

(9.492)

where the nonnegative coefficients ![]() satisfy:

satisfy:

(9.493)

(9.493)

(9.494)

(9.494)

(9.495)

(9.495)

![]() (9.496)

(9.496)

Since only the coefficients ![]() are needed, we alternatively denote them by the simpler notation

are needed, we alternatively denote them by the simpler notation ![]() in the listing in Table 9.8. These are simply chosen as nonnegative coefficients:

in the listing in Table 9.8. These are simply chosen as nonnegative coefficients:

![]() (9.497)

(9.497)

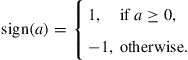

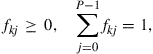

Note that algorithm (9.490)–(9.492) involves three steps: (a) an adaptation step (A) represented by (9.490); (b) a temporal filtering or smoothing step (T) represented by (9.491), and a spatial cooperation step (S) represented by (9.492). These steps are illustrated in Figure 9.15. We use the letters (A, T, S) to label these steps; and we use the sequence of letters (A, T, S) to designate the order of the steps. According to this convention, algorithm (9.490)–(9.492) is referred to as the ATS diffusion strategy since adaptation is followed by temporal processing, which is followed by spatial processing. In total, we can obtain six different combinations of diffusion algorithms by changing the order by which the temporal and spatial combination steps are performed in relation to the adaptation step. The resulting variations are summarized in Table 9.8. When we use only the most recent weight vector in the temporal filtering step (i.e., set ![]() ), which corresponds to the case

), which corresponds to the case ![]() , the algorithms of Table 9.8 reduce to the ATC and CTA diffusion algorithms (9.153) and (9.154). Specifically, the variants TSA, STA, and SAT (where spatial processing S precedes adaptation A) reduce to CTA, while the variants TAS, ATS, and AST (where adaptation A precedes spatial processing S) reduce to ATC.

, the algorithms of Table 9.8 reduce to the ATC and CTA diffusion algorithms (9.153) and (9.154). Specifically, the variants TSA, STA, and SAT (where spatial processing S precedes adaptation A) reduce to CTA, while the variants TAS, ATS, and AST (where adaptation A precedes spatial processing S) reduce to ATC.

Figure 9.15 Illustration of the three steps involved in an ATS diffusion strategy: adaptation, followed by temporal processing or smoothing, followed by spatial processing.

The mean-square performance analysis of the smoothed diffusion strategies can be pursued by extending the arguments of Section 3.09.6. This step is carried out in [70,71] for doubly stochastic combination matrices ![]() when the filtering coefficients

when the filtering coefficients ![]() do not change with

do not change with ![]() . For instance, it is shown in [71] that whether temporal processing is performed before or after adaptation, the strategy that performs adaptation before spatial cooperation is always better. Specifically, the six diffusion variants can be divided into two groups with the respective network MSDs satisfying the following relations:

. For instance, it is shown in [71] that whether temporal processing is performed before or after adaptation, the strategy that performs adaptation before spatial cooperation is always better. Specifically, the six diffusion variants can be divided into two groups with the respective network MSDs satisfying the following relations:

![]() (9.498)

(9.498)

![]() (9.499)

(9.499)

Note that within groups 1 and 2, the order of the A and T operations is the same: in group 1, T precedes A and in group 2, A precedes T. Moreover, within each group, the order of the A and S operations determines performance; the strategy that performs A before S is better. Note further that when ![]() , so that temporal processing is not performed, then TAS reduces to ATC and TSA reduces to CTA. This conclusion is consistent with our earlier result (9.343) that ATC outperforms CTA.

, so that temporal processing is not performed, then TAS reduces to ATC and TSA reduces to CTA. This conclusion is consistent with our earlier result (9.343) that ATC outperforms CTA.

In related work, reference [72] started from the CTA algorithm (9.159) without information exchange and added a useful projection step to it between the combination step and the adaptation step; i.e., the work considered an algorithm with an STA structure (with spatial combination occurring first, followed by a projection step, and then adaptation). The projection step uses the current weight estimate, ![]() , at node

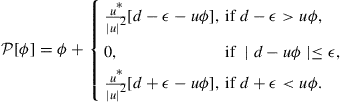

, at node ![]() and projects it onto hyperslabs defined by the current and past raw data. Specifically, the algorithm from [72] has the following form:

and projects it onto hyperslabs defined by the current and past raw data. Specifically, the algorithm from [72] has the following form:

![]() (9.500)

(9.500)

![]() (9.501)

(9.501)

(9.502)

(9.502)

where the notation ![]() refers to the act of projecting the vector

refers to the act of projecting the vector ![]() onto the hyperslab

onto the hyperslab ![]() that consists of all

that consists of all ![]() vectors

vectors ![]() satisfying (similarly for the projection

satisfying (similarly for the projection ![]() ):

):

![]() (9.503)

(9.503)

![]() (9.504)

(9.504)

where ![]() are positive (tolerance) parameters chosen by the designer to satisfy

are positive (tolerance) parameters chosen by the designer to satisfy ![]() . For generic values

. For generic values ![]() , where

, where ![]() is a scalar and

is a scalar and ![]() is a row vector, the projection operator is described analytically by the following expression [73]:

is a row vector, the projection operator is described analytically by the following expression [73]:

(9.505)

(9.505)

The projections that appear in (9.501) and (9.502) can be regarded as another example of a temporal processing step. Observe from the middle plot in Figure 9.15 that the temporal step that we are considering in the algorithms listed in Table 9.8 is based on each node ![]() using its current and past weight estimates, such as

using its current and past weight estimates, such as ![]() , rather than only

, rather than only ![]() and current and past raw data

and current and past raw data ![]() . For this reason, the temporal processing steps in Table 9.8 tend to exploit information from across the network more broadly and the resulting mean-square error performance is generally improved relative to (9.500)–(9.502).

. For this reason, the temporal processing steps in Table 9.8 tend to exploit information from across the network more broadly and the resulting mean-square error performance is generally improved relative to (9.500)–(9.502).

3.09.10.2 Diffusion recursive least-squares

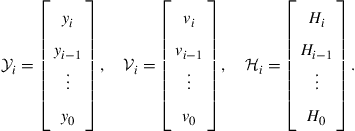

Diffusion strategies can also be applied to recursive least-squares problems to enable distributed solutions of least-squares designs [38,39]; see also [74]. Thus, consider again a set of ![]() nodes that are spatially distributed over some domain. The objective of the network is to collectively estimate some unknown column vector of length

nodes that are spatially distributed over some domain. The objective of the network is to collectively estimate some unknown column vector of length ![]() , denoted by

, denoted by ![]() , using a least-squares criterion. At every time instant

, using a least-squares criterion. At every time instant ![]() , each node

, each node ![]() collects a scalar measurement,

collects a scalar measurement, ![]() , which is assumed to be related to the unknown vector

, which is assumed to be related to the unknown vector ![]() via the linear model:

via the linear model:

![]() (9.506)

(9.506)

In the above relation, the vector ![]() denotes a row regression vector of length

denotes a row regression vector of length ![]() , and

, and ![]() denotes measurement noise. A snapshot of the data in the network at time

denotes measurement noise. A snapshot of the data in the network at time ![]() can be captured by collecting the measurements and noise samples,

can be captured by collecting the measurements and noise samples, ![]() from across all nodes into column vectors

from across all nodes into column vectors ![]() and

and ![]() of sizes

of sizes ![]() each, and the regressors

each, and the regressors ![]() into a matrix

into a matrix ![]() of size

of size ![]() :

:

(9.507)

(9.507)

Likewise, the history of the data across the network up to time ![]() can be collected into vector quantities as follows:

can be collected into vector quantities as follows:

(9.508)

(9.508)

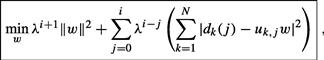

Then, one way to estimate ![]() is by formulating a global least-squares optimization problem of the form:

is by formulating a global least-squares optimization problem of the form:

![]() (9.509)

(9.509)

where ![]() represents a Hermitian regularization matrix and

represents a Hermitian regularization matrix and ![]() represents a Hermitian weighting matrix. Common choices for

represents a Hermitian weighting matrix. Common choices for ![]() and

and ![]() are

are

![]() (9.510)

(9.510)

![]() (9.511)

(9.511)

where ![]() is usually a large number and

is usually a large number and ![]() is a forgetting factor whose value is generally very close to one. In this case, the global cost function (9.509) can be written in the equivalent form:

is a forgetting factor whose value is generally very close to one. In this case, the global cost function (9.509) can be written in the equivalent form:

(9.512)

(9.512)

which is an exponentially weighted least-squares problem. We see that, for every time instant ![]() , the squared errors,

, the squared errors, ![]() , are summed across the network and scaled by the exponential weighting factor

, are summed across the network and scaled by the exponential weighting factor ![]() . The index

. The index ![]() denotes current time and the index

denotes current time and the index ![]() denotes a time instant in the past. In this way, data occurring in the remote past are scaled more heavily than data occurring closer to present time.The global solution of (9.509) is given by [5]:

denotes a time instant in the past. In this way, data occurring in the remote past are scaled more heavily than data occurring closer to present time.The global solution of (9.509) is given by [5]:

![]() (9.513)

(9.513)

and the notation ![]() , with a subscript

, with a subscript ![]() , is meant to indicate that the estimate

, is meant to indicate that the estimate ![]() is based on all data collected from across the network up to time

is based on all data collected from across the network up to time ![]() . Therefore, the

. Therefore, the ![]() that is computed via (9.513) amounts to a global construction.

that is computed via (9.513) amounts to a global construction.

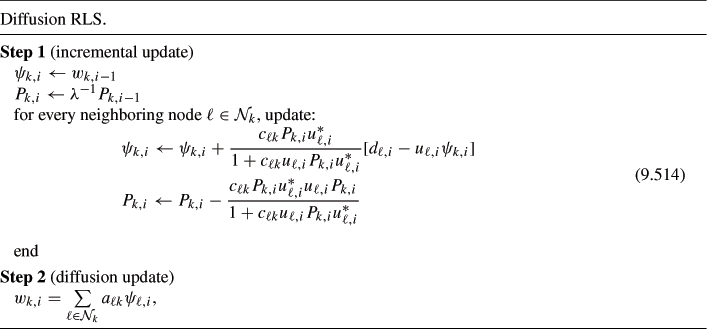

In [38,39] a diffusion strategy was developed that allows nodes to approach the global solution ![]() by relying solely on local interactions. Let

by relying solely on local interactions. Let ![]() denote a local estimate for

denote a local estimate for ![]() that is computed by node

that is computed by node ![]() at time

at time ![]() . The diffusion recursive-least-squares (RLS) algorithm takes the following form. For every node

. The diffusion recursive-least-squares (RLS) algorithm takes the following form. For every node ![]() , we start with the initial conditions

, we start with the initial conditions ![]() and

and ![]() where

where ![]() is an

is an ![]() matrix. Then, for every time instant

matrix. Then, for every time instant ![]() , each node

, each node ![]() performs an incremental step followed by a diffusion step as follows:

performs an incremental step followed by a diffusion step as follows:

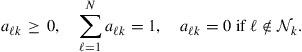

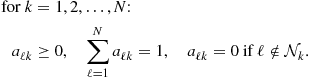

where the symbol ![]() denotes a sequential assignment, and where the scalars

denotes a sequential assignment, and where the scalars ![]() are nonnegative coefficients satisfying:

are nonnegative coefficients satisfying:

(9.515)

(9.515)

(9.516)

(9.516)

The above algorithm requires that at every instant ![]() , nodes communicate to their neighbors their measurements

, nodes communicate to their neighbors their measurements ![]() for the incremental update, and the intermediate estimates

for the incremental update, and the intermediate estimates ![]() for the diffusion update. During the incremental update, node

for the diffusion update. During the incremental update, node ![]() cycles through its neighbors and incorporates their data contributions represented by

cycles through its neighbors and incorporates their data contributions represented by ![]() into

into ![]() . Every other node in the network is performing similar steps. At the end of the incremental step, neighboring nodes share their intermediate estimates

. Every other node in the network is performing similar steps. At the end of the incremental step, neighboring nodes share their intermediate estimates ![]() to undergo diffusion. Thus, at the end of both steps, each node

to undergo diffusion. Thus, at the end of both steps, each node ![]() would have updated the quantities

would have updated the quantities ![]() to

to ![]() . The quantities

. The quantities ![]() are matrices of size

are matrices of size ![]() each. Observe that the diffusion RLS implementation (9.514) does not require the nodes to share their matrices

each. Observe that the diffusion RLS implementation (9.514) does not require the nodes to share their matrices ![]() , which would amount to a substantial burden in terms of communications resources since each of these matrices has

, which would amount to a substantial burden in terms of communications resources since each of these matrices has ![]() entries. Only the quantities

entries. Only the quantities ![]() are shared. The mean-square performance and convergence of the diffusion RLS strategy are studied in some detail in [39].

are shared. The mean-square performance and convergence of the diffusion RLS strategy are studied in some detail in [39].

The incremental step of the diffusion RLS strategy (9.514) corresponds to performing a number of ![]() successive least-squares updates starting from the initial conditions

successive least-squares updates starting from the initial conditions ![]() and ending with the values

and ending with the values ![]() that move onto the diffusion update step. It can be verified from the properties of recursive least-squares solutions [4,5] that these variables satisfy the following equations at the end of the incremental stage (step 1):

that move onto the diffusion update step. It can be verified from the properties of recursive least-squares solutions [4,5] that these variables satisfy the following equations at the end of the incremental stage (step 1):

![]() (9.517)

(9.517)

![]() (9.518)

(9.518)

Introduce the auxiliary ![]() variable:

variable:

![]() (9.519)

(9.519)

Then, the above expressions lead to the following alternative form of the diffusion RLS strategy (9.514).

Under some approximations, and for the special choices ![]() and

and ![]() , the diffusion RLS strategy (9.520) can be reduced to a form given in [75] and which is described by the following equations:

, the diffusion RLS strategy (9.520) can be reduced to a form given in [75] and which is described by the following equations:

![]() (9.521)

(9.521)

![]() (9.522)

(9.522)

![]() (9.523)

(9.523)

Algorithm (9.521)–(9.523) is motivated in [75] by using consensus-type arguments. Observe that the algorithm requires the nodes to share the variables ![]() , which corresponds to more communications overburden than required by diffusion RLS; the latter only requires that nodes share

, which corresponds to more communications overburden than required by diffusion RLS; the latter only requires that nodes share ![]() . In order to illustrate how a special case of diffusion RLS (9.520) can be related to this scheme, let us set

. In order to illustrate how a special case of diffusion RLS (9.520) can be related to this scheme, let us set

![]() (9.524)

(9.524)

Then, Eqs. (9.520) give:

Comparing these equations with (9.521)–(9.523), we find that algorithm (9.521)–(9.523) of [75] would relate to the diffusion RLS algorithm (9.520) when the following approximations are justified:

![]() (9.526)

(9.526)

(9.527)

(9.527)

![]() (9.528)

(9.528)

It was indicated in [39] that the diffusion RLS implementation (9.514) or (9.520) leads to enhanced performance in comparison to the consensus-based update (9.521)–(9.523).

3.09.10.3 Diffusion Kalman filtering

Diffusion strategies can also be applied to the solution of distributed state-space filtering and smoothing problems [35,40,41]. Here, we describe briefly the diffusion version of the Kalman filter; other variants and smoothing filters can be found in [35]. We assume that some system of interest is evolving according to linear state-space dynamics, and that every node in the network collects measurements that are linearly related to the unobserved state vector. The objective is for every node to track the state of the system over time based solely on local observations and on neighborhood interactions.

Thus, consider a network consisting of ![]() nodes observing the state vector,

nodes observing the state vector, ![]() of size

of size ![]() of a linear state-space model. At every time

of a linear state-space model. At every time ![]() , every node

, every node ![]() collects a measurement vector

collects a measurement vector ![]() of size

of size ![]() , which is related to the state vector as follows:

, which is related to the state vector as follows:

![]() (9.529)

(9.529)

![]() (9.530)

(9.530)

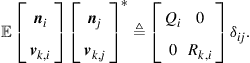

The signals ![]() and

and ![]() denote state and measurement noises of sizes

denote state and measurement noises of sizes ![]() and

and ![]() , respectively, and they are assumed to be zero-mean, uncorrelated and white, with covariance matrices denoted by

, respectively, and they are assumed to be zero-mean, uncorrelated and white, with covariance matrices denoted by

(9.531)

(9.531)

The initial state vector, ![]() is assumed to be zero-mean with covariance matrix

is assumed to be zero-mean with covariance matrix

![]() (9.532)

(9.532)

and is uncorrelated with ![]() and

and ![]() , for all

, for all ![]() and

and ![]() . We further assume that

. We further assume that ![]() . The parameter matrices

. The parameter matrices ![]() are assumed to be known by node

are assumed to be known by node ![]() .

.

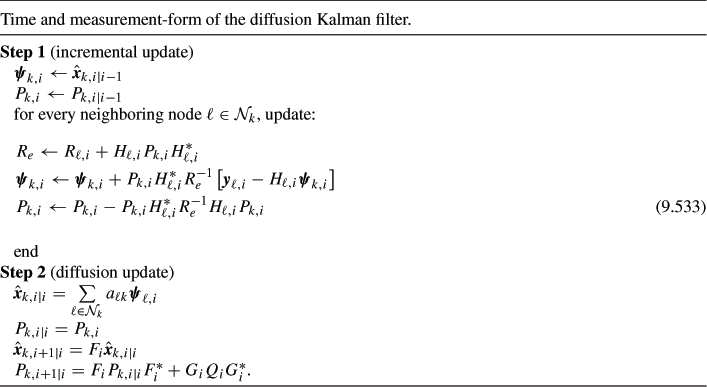

Let ![]() denote a local estimator for

denote a local estimator for ![]() that is computed by node

that is computed by node ![]() at time

at time ![]() based solely on local observations and on neighborhood data up to time

based solely on local observations and on neighborhood data up to time ![]() . The following diffusion strategy was proposed in [35,40,41] to approximate predicted and filtered versions of these local estimators in a distributed manner for data satisfying model (9.529)–(9.532). For every node

. The following diffusion strategy was proposed in [35,40,41] to approximate predicted and filtered versions of these local estimators in a distributed manner for data satisfying model (9.529)–(9.532). For every node ![]() , we start with

, we start with ![]() and

and ![]() , where

, where ![]() is an

is an ![]() matrix. At every time instant

matrix. At every time instant ![]() , every node

, every node ![]() performs an incremental step followed by a diffusion step:

performs an incremental step followed by a diffusion step:

where the symbol ![]() denotes a sequential assignment, and where the scalars

denotes a sequential assignment, and where the scalars ![]() are nonnegative coefficients satisfying:

are nonnegative coefficients satisfying:

(9.534)

(9.534)

The above algorithm requires that at every instant ![]() , nodes communicate to their neighbors their measurement matrices

, nodes communicate to their neighbors their measurement matrices ![]() , the noise covariance matrices

, the noise covariance matrices ![]() , and the measurements

, and the measurements ![]() for the incremental update, and the intermediate estimators

for the incremental update, and the intermediate estimators ![]() for the diffusion update. During the incremental update, node

for the diffusion update. During the incremental update, node ![]() cycles through its neighbors and incorporates their data contributions represented by

cycles through its neighbors and incorporates their data contributions represented by ![]() into

into ![]() . Every other node in the network is performing similar steps. At the end of the incremental step, neighboring nodes share their updated intermediate estimators