DOA Estimation Methods and Algorithms

Pei-Jung Chung*, Mats Viberg† and Jia Yu*, *The University of Edinburgh, UK, †Chalmers University of Technology, Sweden

Abstract

Estimation of direction of arrival (DOA) from data collected by sensor arrays is of fundamental importance to a variety of applications such as radar, sonar, wireless communications, geophysics and biomedical engineering. Significant progress in the development of algorithms has been made over the last three decades. This article provides an overview of DOA estimation methods that are relevant in theory and practice. We present estimators based on beamforming, subspace and parametric approaches and compare their performance in terms of estimation accuracy, resolution capability and computational complexity. Methods for processing broadband data and for signal detection are discussed as well. Finally, application-specific algorithms are discussed briefly.

Keywords

Sensor array processing; Direction of arrival (DOA); Estimation; Beamforming; Subspace methods; Maximum likelihood; High resolution methods; Signal detection

Acknowledgments

The authors would like to thank Prof. Johann F. Böhme and Prof. Jean-Pierre Delmas for their valuable comments and suggestions, which significantly improved this paper.

3.14.1 Background

The problem of retrieving information conveyed in propagating waves occurs in a wide range of applications including radar, sonar, wireless communications, geophysics and biomedical engineering. Methods for processing data measured by sensor arrays have attracted the attention of many researchers over the last three decades. Recent advances in computational technology have enabled the implementation of sophisticated algorithms in practical systems.

Early space-time processing techniques view direction of arrival (DOA) estimation as a spatial spectrum estimation problem. The Fourier transform based conventional beamformer is subject to a resolution limit imposed by the finite array aperture. Similar to its temporal counterpart, the spatial periodogram cannot benefit from increasing signal to noise ratio (SNR) or number of samples. Better estimates can be achieved by applying a windowing function to reduce spectral leakage effects. The minimum variance distortionless response (MVDR) beamformer [1] overcomes the resolution limitation of Fourier based techniques by formulating the spectrum estimation as a constrained optimization problem. In addition, its performance improves with increasing SNR. The multiple signal classification (MUSIC) algorithm [2] is representative of subspace methods based on the eigenstructure of the spatial correlation matrix. In addition to high resolution, MUSIC takes advantage of SNR, number of sensors and number of samples. It improves estimation accuracy with respect to all dimensions and is statistically efficient. However, in the presence of correlated source signals, subspace methods degrade dramatically as the signal subspace suffers from rank deficiency. On the other hand, parametric methods such as the maximum likelihood (ML) approach exploit the data model fully, leading to statistically efficient estimators. More importantly, they remain robust in critical scenarios involving signal coherence, closely located signals and low SNRs. These optimal properties come at the price of increased computational complexity. Hence, efficient implementation is crucial for parametric methods.

The importance of array processing methods is reflected in the large number of publications. Among these contributions, several review articles [3–5] have proven to be an excellent guide for first exposure to this research field, while the textbooks [6,7] have been valuable references for in-depth learning. Thanks to the advances in both theoretical methods and computational power over the last decade, array processing methods have been re-examined from various aspects to address challenges arising in new application areas such as wireless communications. The purpose of this article is to provide interested readers with an overview of traditional array processing methods and recent developments in the field.

The organization of this article is as follows. The data model based on plane wave propagation is introduced in Section 3.14.2. Important quantities including the array response vector and second order statistics are derived therein. Standard direction finding algorithms are covered in Sections 3.14.3–3.14.5. Section 3.14.3 is devoted to spectral analysis based methods: conventional beamforming and MVDR beamforming. The sparse data representation based approach is presented in the same section. The subspace methods and related issues are treated in Section 3.14.4. In the subsequent Section 3.14.5, the parametric approach is illustrated. Important algorithms based on the maximum likelihood principle and subspace fitting are described. The implementation of the aforementioned estimators is also discussed. While the algorithms discussed in Sections 3.14.3–3.14.5 deal with narrow band data, we treat the broadband case separately in Section 3.14.6. Knowledge of the number of signals is a fundamental assumption in most array processing methods. The problem of signal detection is addressed in Section 3.14.7. In Section 3.14.8, we treat scenarios with non-standard assumptions by highlighting relevant techniques and references. A brief discussion is given in Section 3.14.9.

3.14.2 Data model

Propagating waves carry energy radiated by sources to sensors. To extract information conveyed in the propagating waves, such as source location or propagation direction, one needs a proper description of wavefields. In Section 3.14.2.1, we start with a brief discussion on the physics of wave propagation and formulate the transmission between signal sources and receiving sensors as a linear time invariant system. Then a frequency domain data model for far-field sources is developed in Section 3.14.2.2. The fundamental issue on identifiability is discussed in Section 3.14.2.3.

3.14.2.1 Wave propagation

The physics of propagating waves is governed by the wave equation for the medium of interest. The homogeneous wave equation for a general scalar field $E(t,\mathbf{r})$ at time instant t and location $\mathbf{r} = [x, y, z]^T$ is given by

$$\frac{\partial^2 E(t,\mathbf{r})}{\partial x^2} + \frac{\partial^2 E(t,\mathbf{r})}{\partial y^2} + \frac{\partial^2 E(t,\mathbf{r})}{\partial z^2} = \frac{1}{c^2}\frac{\partial^2 E(t,\mathbf{r})}{\partial t^2} \tag{14.1}$$

where the parameter c represents the propagation velocity. $E(t,\mathbf{r})$ can be an electric field in electromagnetics or acoustic pressure in acoustic waves.

A solution of special interest to (14.1) takes a complex exponential form:

$$E(t,\mathbf{r}) = \exp\{j(\omega t - \mathbf{k}^T\mathbf{r})\} \tag{14.2}$$

where $\omega$ is the temporal frequency and $\mathbf{k} = [k_x, k_y, k_z]^T$ is the wave number vector. Here $\mathbf{k}$ is considered as the spatial frequency of this monochromatic wave. Inserting (14.2) into (14.1), one can readily see how the temporal frequency $\omega$ and the spatial frequency k are related:

$$\|\mathbf{k}\|^2 = \frac{\omega^2}{c^2} \tag{14.3}$$

Replacing the propagation velocity with $c = \lambda\omega/(2\pi)$ in (14.3), where $\lambda$ is the wavelength, the magnitude of the wave number vector is given by $\|\mathbf{k}\| = 2\pi/\lambda$.
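The step from the exponential solution to the relation between temporal and spatial frequency can be written out explicitly. The following is a short worked derivation, assuming the notation $E(t,\mathbf{r})$ for the field, $\mathbf{k}$ for the wave number vector and $\omega$ for the temporal frequency (these symbols are assumptions made for this sketch):

```latex
% Second derivatives of E(t,r) = exp{ j(\omega t - k^T r) }
\frac{\partial^2 E}{\partial t^2} = -\omega^2 E, \qquad
\frac{\partial^2 E}{\partial x^2} + \frac{\partial^2 E}{\partial y^2}
  + \frac{\partial^2 E}{\partial z^2}
  = -\left(k_x^2 + k_y^2 + k_z^2\right) E = -\|\mathbf{k}\|^2 E .

% Inserting both into the wave equation and cancelling E \neq 0
-\|\mathbf{k}\|^2 E = -\frac{\omega^2}{c^2} E
\quad\Longrightarrow\quad
\|\mathbf{k}\| = \frac{\omega}{c} .

% With c = \lambda \omega / (2\pi)
\|\mathbf{k}\| = \frac{\omega}{\lambda\omega/(2\pi)} = \frac{2\pi}{\lambda} .
```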

The elementary wave (14.2) also represents a propagating wave

![]() (14.4)

(14.4)

where ![]() is termed slowness vector. It points in the same direction as

is termed slowness vector. It points in the same direction as ![]() and has the magnitude

and has the magnitude ![]() , which is the inverse of the propagation velocity. From (14.4), it can be readily seen that the direction of propagation is given by

, which is the inverse of the propagation velocity. From (14.4), it can be readily seen that the direction of propagation is given by ![]() . Let the origin of the coordinate system be close to the sensor array. The slowness vector

. Let the origin of the coordinate system be close to the sensor array. The slowness vector ![]() can then be expressed as

can then be expressed as

(14.5)

(14.5)

where ![]() and

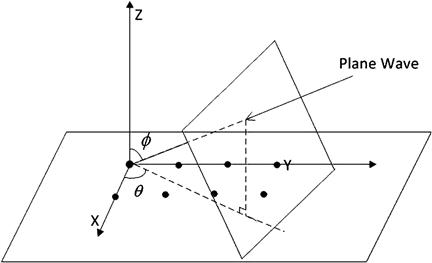

and ![]() denote the elevation and azimuth angles, respectively. Both parameters characterize the direction of propagation and are referred to as direction of arrival (DOA) (see Figure 14.1).

denote the elevation and azimuth angles, respectively. Both parameters characterize the direction of propagation and are referred to as direction of arrival (DOA) (see Figure 14.1).

Far-field assumption: For far-field sources, the propagation distance to a sensor array is much larger than the aperture of the array, the DOA parameter is approximately the same to all sensors. According to (14.2), the wave front of constant phase at time instant t is a plane perpendicular to the propagating direction given by ![]() . The term, plane wave assumption is alternatively used in the literature.

. The term, plane wave assumption is alternatively used in the literature.

3.14.2.2 Frequency domain description

In an ideal medium, the propagation between signal sources and a sensor array is considered as a linear time-invariant system. Due to the applicability of the superposition principle and the Fourier transform, the analysis of wave propagation is greatly simplified. In the following, we will develop a general frequency domain model and discuss the narrow band case. The general model is useful for broadband data that may be measured in underwater acoustical or geophysical experiments. The narrow band model is preferred in applications where the signal bandwidth is much smaller than the carrier frequency, for example, wireless communications and radar.

3.14.2.2.1 General model

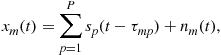

Consider an array of M sensors located at $\mathbf{r}_1,\ldots,\mathbf{r}_M$ receiving signals generated by P sources. Without loss of generality, the first sensor coincides with the origin of the coordinate system. Let $s_p(t)$, $p = 1,\ldots,P$, denote the signals received by the first sensor. The signal observed by the mth sensor is the sum of time-delayed versions of the original signals, corrupted by noise $n_m(t)$:

$$x_m(t) = \sum_{p=1}^{P} s_p(t - \tau_{mp}) + n_m(t), \quad m = 1,\ldots,M \tag{14.6}$$

where $\tau_{mp}$ denotes the propagation time difference between the origin and the mth sensor. The time difference is given by $\tau_{mp} = \boldsymbol{\xi}_p^T\mathbf{r}_m$, where the slowness vector $\boldsymbol{\xi}_p$ is associated with the pth wave. It is related to the DOA parameter $(\theta_p, \phi_p)$ through $\boldsymbol{\xi}_p$ and (14.5).

Applying the Fourier transform to the array output $x_m(t)$, the time delay $\tau_{mp}$ translates to a phase shift $e^{-j\omega\tau_{mp}}$. In the presence of noise, the array output vector $\mathbf{X}(\omega) = [X_1(\omega),\ldots,X_M(\omega)]^T$ can be written as

$$\mathbf{X}(\omega) = \sum_{p=1}^{P} \mathbf{a}(\omega,\theta_p,\phi_p)\,S_p(\omega) + \mathbf{N}(\omega) \tag{14.7}$$

where the steering vector $\mathbf{a}(\omega,\theta_p,\phi_p)$ is the spatial signature for the pth incoming wave:

$$\mathbf{a}(\omega,\theta_p,\phi_p) = \left[e^{-j\omega\tau_{1p}},\ e^{-j\omega\tau_{2p}},\ \ldots,\ e^{-j\omega\tau_{Mp}}\right]^T \tag{14.8}$$

Define the steering matrix as

$$\mathbf{A}(\omega,\boldsymbol{\theta},\boldsymbol{\phi}) = \left[\mathbf{a}(\omega,\theta_1,\phi_1),\ \ldots,\ \mathbf{a}(\omega,\theta_P,\phi_P)\right] \tag{14.9}$$

with $\boldsymbol{\theta} = [\theta_1,\ldots,\theta_P]^T$ and $\boldsymbol{\phi} = [\phi_1,\ldots,\phi_P]^T$. A compact expression for (14.7) is as follows:

$$\mathbf{X}(\omega) = \mathbf{A}(\omega,\boldsymbol{\theta},\boldsymbol{\phi})\,\mathbf{S}(\omega) + \mathbf{N}(\omega) \tag{14.10}$$

where the signal vector $\mathbf{S}(\omega) = [S_1(\omega),\ldots,S_P(\omega)]^T$. According to the asymptotic theory of the Fourier transform, the frequency domain data are complex normally distributed regardless of the distribution in the time domain. In addition, frequency bins are mutually independent. These properties form the basis for broadband DOA estimation.
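The construction of a steering vector from sensor positions and a slowness vector can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: the function names, the elevation convention (measured from the xy-plane, propagation direction not sign-flipped) and the example geometry are assumptions made here.

```python
import numpy as np

def slowness_vector(theta, phi, c):
    """Slowness vector for azimuth theta and elevation phi (radians).

    Assumed convention: elevation is measured from the xy-plane and the
    vector has magnitude 1/c.
    """
    return np.array([np.cos(phi) * np.cos(theta),
                     np.cos(phi) * np.sin(theta),
                     np.sin(phi)]) / c

def steering_vector(omega, positions, theta, phi, c):
    """Phase shifts exp(-j*omega*tau_m), with delay tau_m = xi^T r_m per sensor."""
    tau = positions @ slowness_vector(theta, phi, c)   # delays, shape (M,)
    return np.exp(-1j * omega * tau)

# Hypothetical example: 4 sensors on the y-axis, 0.5 m apart, c = 1500 m/s.
c = 1500.0
positions = np.array([[0.0, 0.5 * m, 0.0] for m in range(4)])
a = steering_vector(2 * np.pi * 1000.0, positions, np.deg2rad(30.0), 0.0, c)
```

Because the first sensor sits at the origin, its delay is zero and the first element of the steering vector equals one; all elements have unit magnitude since only phase shifts are involved.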

3.14.2.2.2 Narrow band data

In many applications, the signal of interest is modulated onto a carrier frequency $\omega_c$ for transmission. At the receiver, the radio frequency signals are demodulated to baseband for further processing. Suppose the signal is band limited with the bandwidth $B_s$, and the maximal travel time between two sensors of the array for a plane wave is $\Delta T_{\max}$. The narrow band assumption is valid if $B_s\,\Delta T_{\max} \ll 1$. Then the complex baseband signal waveforms are approximately equal for all sensors. The general expression (14.10) can be simplified to the narrow band model

$$\mathbf{x}(t) = \mathbf{A}(\boldsymbol{\theta},\boldsymbol{\phi})\,\mathbf{s}(t) + \mathbf{n}(t) \tag{14.11}$$

where the steering matrix $\mathbf{A}(\boldsymbol{\theta},\boldsymbol{\phi})$ is computed at the carrier frequency $\omega_c$ and $\mathbf{s}(t)$ represents the baseband signal waveform. The frequency dependence in (14.11) is omitted as the relevant information is centered at $\omega_c$.

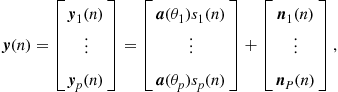

As DOA estimation is typically based on sampled values of $\mathbf{x}(t)$ at time instants $t_n$, $n = 1,\ldots,N$, we consider temporally discrete samples of (14.11) and replace $t_n$ with the index n. Then the snapshot model is given by

$$\mathbf{x}(n) = \mathbf{A}(\boldsymbol{\theta},\boldsymbol{\phi})\,\mathbf{s}(n) + \mathbf{n}(n), \quad n = 1,\ldots,N \tag{14.12}$$

Many array processing methods exploit second order statistics of the data. Assume the signal and noise are independent, stationary random processes with zero mean and correlation matrices $\mathbf{R}_s = E[\mathbf{s}(n)\mathbf{s}^H(n)]$ and $\mathbf{R}_n = E[\mathbf{n}(n)\mathbf{n}^H(n)]$, respectively, where $(\cdot)^H$ denotes Hermitian transposition. Then the array correlation matrix can be expressed as

$$\mathbf{R}_x = E[\mathbf{x}(n)\mathbf{x}^H(n)] = \mathbf{A}(\boldsymbol{\theta},\boldsymbol{\phi})\,\mathbf{R}_s\,\mathbf{A}^H(\boldsymbol{\theta},\boldsymbol{\phi}) + \mathbf{R}_n \tag{14.13}$$

The noise process is often considered as spatially white, i.e., $\mathbf{R}_n = \sigma^2\mathbf{I}$, where $\sigma^2$ denotes the noise level and $\mathbf{I}$ is an $M \times M$ identity matrix. This assumption is valid for sensors with sufficient spacing. In the presence of colored noise, the noise correlation matrix is no longer diagonal. In such a case, the data can be pre-whitened by multiplying (14.12) with the inverse square root of the noise correlation matrix, $\mathbf{R}_n^{-1/2}$.
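Pre-whitening can be illustrated numerically. The sketch below assumes that the noise correlation matrix is known and positive definite; the function name `inverse_sqrt` and the exponentially decaying example matrix are hypothetical choices made for illustration only.

```python
import numpy as np

def inverse_sqrt(R_n):
    """Inverse Hermitian square root of a positive definite matrix."""
    eigvals, eigvecs = np.linalg.eigh(R_n)
    # V diag(1/sqrt(lambda)) V^H: scale each eigenvector column, then recombine.
    return (eigvecs / np.sqrt(eigvals)) @ eigvecs.conj().T

# Hypothetical colored-noise correlation matrix with exponential decay.
M = 4
idx = np.arange(M)
R_n = 0.5 ** np.abs(idx[:, None] - idx[None, :])

W = inverse_sqrt(R_n)             # whitening matrix R_n^{-1/2}
R_white = W @ R_n @ W.conj().T    # noise correlation after whitening
```

After whitening, the noise correlation matrix becomes the identity. Note that the same transformation is applied to the signal part, so the effective steering matrix becomes the whitening matrix times the original steering matrix.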

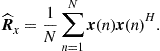

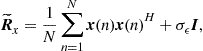

The array correlation matrix is estimated from array observations $\{\mathbf{x}(n)\}_{n=1}^{N}$ by the sample correlation matrix

$$\hat{\mathbf{R}}_x = \frac{1}{N}\sum_{n=1}^{N}\mathbf{x}(n)\mathbf{x}^H(n) \tag{14.14}$$

Under weak assumptions, the sample correlation matrix is a consistent estimator for the true correlation matrix, i.e., $\hat{\mathbf{R}}_x$ converges with probability one to $\mathbf{R}_x$ as the sample size increases. More details can be found in the book [8].
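The sample correlation matrix and its convergence can be checked with a small simulation. This is a minimal sketch assuming snapshots are stored as columns of a matrix `X`; the noise-only data, the seed and the sizes are arbitrary choices made here.

```python
import numpy as np

def sample_correlation(X):
    """Sample correlation matrix (1/N) * sum_n x(n) x(n)^H for X of shape (M, N)."""
    return X @ X.conj().T / X.shape[1]

rng = np.random.default_rng(1)
M, N, sigma2 = 4, 20000, 2.0

# Spatially white circular complex Gaussian noise with variance sigma2 per sensor.
X = np.sqrt(sigma2 / 2) * (rng.standard_normal((M, N))
                           + 1j * rng.standard_normal((M, N)))
R_hat = sample_correlation(X)
```

With this many snapshots, `R_hat` should be close to `sigma2` times the identity matrix, and it is Hermitian by construction.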

In many applications, sensors are located on a plane, for example, the yz-plane. Setting the elevation to be $\phi = 0$ in (14.5), one can easily see that the slowness vector is characterized by only the azimuthal parameter, $\theta$. In the two dimensional scenario, the uniform linear array (ULA) is one of the most popular array configurations. Let the sensors of a ULA be placed along the y-axis, $\mathbf{r}_m = [0,\ (m-1)D,\ 0]^T$, $m = 1,\ldots,M$, where $D$ denotes the inter-sensor distance. Then the phase shift term evaluated at $\omega_c$ and $\theta$ becomes $e^{-j\omega_c (m-1) D\sin\theta/c}$, leading to the steering vector

$$\mathbf{a}(\theta) = \left[1,\ e^{-j\omega_c D\sin\theta/c},\ \ldots,\ e^{-j(M-1)\omega_c D\sin\theta/c}\right]^T \tag{14.15}$$

If $D$ is measured in wavelengths, $d = D/\lambda$ where $\lambda = 2\pi c/\omega_c$, $\mathbf{a}(\theta)$ can be expressed as

$$\mathbf{a}(\theta) = \left[1,\ e^{-j2\pi d\sin\theta},\ \ldots,\ e^{-j2\pi (M-1) d\sin\theta}\right]^T \tag{14.16}$$

For a standard ULA, the inter-element spacing is half a wavelength, $d = 1/2$, and the mth element in (14.16) becomes $e^{-j\pi(m-1)\sin\theta}$.
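A ULA steering vector is straightforward to compute. The following sketch assumes the sign convention $e^{-j2\pi d (m-1)\sin\theta}$ for the mth element, with the spacing `d` given in wavelengths; the function name `ula_steering` is a hypothetical helper, and the opposite sign convention is equally common in the literature.

```python
import numpy as np

def ula_steering(theta, M, d=0.5):
    """Steering vector of an M-element ULA for azimuth theta (radians).

    d is the sensor spacing in wavelengths; d = 0.5 is the standard ULA.
    """
    m = np.arange(M)   # sensor index 0, ..., M-1 (first sensor at the origin)
    return np.exp(-2j * np.pi * d * m * np.sin(theta))

a_broadside = ula_steering(0.0, 4)         # source perpendicular to the array
a_endfire = ula_steering(np.pi / 2, 4)     # source along the array axis
```

At broadside all sensors receive the wave in phase, so the steering vector is a vector of ones. For the standard ULA at endfire the phase advances by $\pi$ per sensor, giving alternating signs.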

For the 2D case, we shall use a shorter notation for the steering matrix, $\mathbf{A}(\boldsymbol{\theta})$, in (14.11)–(14.13). More specifically, the sampled array outputs and correlation matrix are expressed as

$$\mathbf{x}(n) = \mathbf{A}(\boldsymbol{\theta})\,\mathbf{s}(n) + \mathbf{n}(n) \tag{14.17}$$

and

$$\mathbf{R}_x = \mathbf{A}(\boldsymbol{\theta})\,\mathbf{R}_s\,\mathbf{A}^H(\boldsymbol{\theta}) + \mathbf{R}_n \tag{14.18}$$

Given the observations $\{\mathbf{x}(n)\}_{n=1}^{N}$, the primary interest is to estimate the DOA parameter $\boldsymbol{\theta}$. In the following, we will present DOA estimation methods based on various ideas ranging from nonparametric spectral analysis and high resolution methods to the parametric approach. Our discussion will focus on the most widely investigated 2D case. The majority of the methods can be extended to multiple parameters per source in a straightforward manner. The broadband case will be discussed in detail separately in Section 3.14.6.

3.14.2.3 Uniqueness

A fundamental issue in the direction finding problem is whether the DOA parameters can be identified unambiguously. From the ideal data model (14.12) or (14.17), we know that the array output lies in a subspace spanned by the columns of the $M \times P$ array steering matrix if the noise part $\mathbf{n}(n)$ is ignored. For simplicity, we will present the results for the model (14.17). Assume that any subset of M steering vectors $\{\mathbf{a}(\theta_1),\ldots,\mathbf{a}(\theta_M)\}$ with distinct DOAs is linearly independent. The study in [9,10] specifies the conditions for the maximal number of sources that can be uniquely localized in terms of the number of sensors M and the rank $\eta$ of the signal correlation matrix $\mathbf{R}_s$. The condition (1) $P < (M + \eta)/2$ guarantees uniqueness for every batch of data, while the weaker condition (2) $P < 2\eta M/(2\eta + 1)$ guarantees uniqueness for almost every batch of data, with the exception of a set of batches of measure zero. When all sources are uncorrelated, implying that $\eta = P$, condition (1) is always satisfied for $P < M$ and uniqueness is always guaranteed. When the sources are fully correlated, $\eta = 1$, then uniqueness is ensured if $P < (M+1)/2$ by condition (1). The weaker condition (2) leads to a less stringent condition $P < 2M/3$. Details on the proof are to be found in [10].
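The two uniqueness bounds can be evaluated for a given array size. The sketch below assumes the conditions take the form $P < (M+\eta)/2$ (every batch) and $P < 2\eta M/(2\eta+1)$ (almost every batch), where $\eta$ is the rank of the signal correlation matrix; this form and the function names are assumptions of the sketch and should be checked against [9,10].

```python
def max_sources_every_batch(M, eta):
    """Largest integer P satisfying P < (M + eta) / 2 (assumed condition (1))."""
    return (M + eta - 1) // 2

def max_sources_almost_every_batch(M, eta):
    """Largest integer P satisfying P < 2*eta*M / (2*eta + 1) (assumed condition (2))."""
    return (2 * eta * M - 1) // (2 * eta + 1)

# Hypothetical example: 10 sensors, fully correlated (coherent) sources, eta = 1.
M, eta = 10, 1
p_strict = max_sources_every_batch(M, eta)
p_weak = max_sources_almost_every_batch(M, eta)
```

For ten sensors and coherent sources, the stricter bound allows five sources and the weaker bound allows six, consistent with $P < (M+1)/2$ and $P < 2M/3$.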

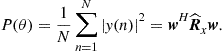

3.14.3 Beamforming methods

A homogeneous wavefield $E(t,\mathbf{r})$ has an interpretation as energy distribution in a frequency-wavenumber spectrum. The power spectrum of $E(t,\mathbf{r})$ contains information about the source distribution over space [3]. From this perspective, estimation of DOA parameters is equivalent to finding the location in a spatial power spectrum where most power is concentrated. Beamforming techniques are spatial filters that combine weighted sensor outputs linearly

$$y(t) = \sum_{m=1}^{M} w_m^{*}\,x_m(t) = \mathbf{w}^H\mathbf{x}(t) \tag{14.19}$$

where $\mathbf{w} = [w_1,\ldots,w_M]^T$. The weight vector is usually a function of the DOA parameter, i.e., $\mathbf{w} = \mathbf{w}(\theta)$. The power of the beamformer output $y(t)$ provides an estimate for the power spectrum at the direction $\theta$:

$$P(\theta) = \frac{1}{N}\sum_{n=1}^{N}|y(n)|^2 = \mathbf{w}^H(\theta)\,\hat{\mathbf{R}}_x\,\mathbf{w}(\theta) \tag{14.20}$$

where $\hat{\mathbf{R}}_x$ is the sample covariance matrix. The maximum of $P(\theta)$ is an indication for a signal source. Assuming P signals are present in the wavefield, we change the look direction $\theta$ and evaluate $P(\theta)$ over the range of interest. Then the P largest maxima are chosen as DOA estimates.

The expected beamformer output (14.20) is a smoothed version of the true power spectrum. Smoothing is carried out with the kernel $|B(\theta)|^2$ centered at the look direction $\theta$, where $B(\theta)$ is the array beam pattern [3]. An ideal beam pattern $B(\theta) = \delta(\theta)$ would lead to an unbiased estimate for the power spectrum. However, in practice, due to finite array aperture, the beampattern consists of a main lobe and several sidelobes, leading to leakage from neighboring frequencies. Hence, the shape of the beampattern determines resolution capability and estimation accuracy of the spectral analysis based approach.

We will introduce the conventional beamformer in Section 3.14.3.1 and the minimum variance distortionless response (MVDR) beamformer in Section 3.14.3.2. A recently proposed sparse data approach that matches array measurements to a set of candidate directions will be discussed in Section 3.14.3.3.

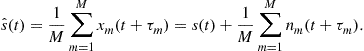

3.14.3.1 Conventional beamformer

The conventional beamformer, also termed delay-and-sum beamformer, combines the outputs of the sensors (14.6) coherently to obtain an enhanced signal from the noisy observations. For simplicity, assume $P = 1$ for now. In its most general form, the conventional beamformer compensates the time delayed observation at the mth sensor, $x_m(t)$, by the amount of $\tau_m$. The sum of aligned sensor outputs leads to an estimate for the signal:

$$\hat{s}(t) = \frac{1}{M}\sum_{m=1}^{M} x_m(t + \tau_m) = s(t) + \frac{1}{M}\sum_{m=1}^{M} n_m(t + \tau_m) \tag{14.21}$$

From the above equation, it is clear that the signal to noise ratio is improved by a factor of M when the noise is uncorrelated across sensors. For wideband data, the sum in (14.21) is often called true time-delay beamforming. It can be approximately implemented using various filtering techniques, see e.g., [6,7]. For narrow band data, the shift in the time domain becomes a phase shift in the frequency domain. Therefore, we have

$$y(t) = \sum_{m=1}^{M} e^{j\omega_c\tau_m}\,x_m(t) = \mathbf{a}^H(\theta)\,\mathbf{x}(t) \tag{14.22}$$

where $\mathbf{a}(\theta)$ is the steering vector evaluated at the look direction $\theta$. Since for any steering vector, $\|\mathbf{a}(\theta)\|^2 = M$, the weight vector has unit length $\|\mathbf{w}\| = 1$ and

$$\mathbf{w}_{BF}(\theta) = \frac{\mathbf{a}(\theta)}{\sqrt{M}} \tag{14.23}$$

An estimate for the power spectrum is then obtained as

$$P_{BF}(\theta) = \mathbf{w}_{BF}^H(\theta)\,\hat{\mathbf{R}}_x\,\mathbf{w}_{BF}(\theta) = \frac{\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x\,\mathbf{a}(\theta)}{M} \tag{14.24}$$

In the context of spectral analysis, the above expression corresponds to the periodogram in the spatial domain [3]. The expected value of $P_{BF}(\theta)$ is the convolution between the beam pattern and the true power spectrum. A good beam pattern should be as close to the delta function $\delta(\theta)$ as possible to minimize leakage from neighboring frequencies.
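The scan over look directions can be sketched for a single simulated source. The example below is illustrative only: it assumes a half-wavelength ULA with the steering vector convention $e^{-j2\pi d(m-1)\sin\theta}$, a unit-norm weight vector $\mathbf{a}(\theta)/\sqrt{M}$, and arbitrarily chosen simulation parameters (seed, noise level, grid spacing).

```python
import numpy as np

def ula_steering(theta, M, d=0.5):
    """ULA steering vector; d is the spacing in wavelengths (assumed convention)."""
    return np.exp(-2j * np.pi * d * np.arange(M) * np.sin(theta))

def bartlett_spectrum(R_hat, grid, d=0.5):
    """Conventional beamformer power a^H R_hat a / M on a grid of look directions."""
    M = R_hat.shape[0]
    P = np.empty(len(grid))
    for i, th in enumerate(grid):
        a = ula_steering(th, M, d)
        P[i] = np.real(a.conj() @ R_hat @ a) / M
    return P

# Simulate one narrow band source at 20 degrees observed by a 10-element ULA.
rng = np.random.default_rng(0)
M, N = 10, 200
a_true = ula_steering(np.deg2rad(20.0), M)
s = (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
noise = 0.1 * (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))) / np.sqrt(2)
X = np.outer(a_true, s) + noise          # snapshots as columns
R_hat = X @ X.conj().T / N               # sample covariance matrix

grid = np.deg2rad(np.arange(-90.0, 90.5, 0.5))
P_bf = bartlett_spectrum(R_hat, grid)
theta_hat = np.rad2deg(grid[np.argmax(P_bf)])   # location of the largest peak
```

With a single source, the DOA estimate is simply the grid point that maximizes the spectrum. For several sources one would pick the P largest local maxima instead.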

For a uniform linear array, the beam pattern $|B(\theta)|$ has a main lobe around the look direction $\theta$. The Rayleigh beamwidth, the distance between the first two nulls of $|B(\theta)|$, is approximately given by

$$BW \approx \frac{2}{Md} \tag{14.25}$$

The beamformer can only distinguish two sources with DOA separation larger than half of $BW$. Note that $d = D/\lambda$ is the ratio between actual distance D and wavelength $\lambda$. As $BW$ is inversely proportional to Md, the aperture of the array, the resolution capability improves with increasing number of sensors and sensor spacing. However, $d = 1/2$ is the maximally allowed sensor distance. For $d > 1/2$, grating lobes appear in the beampattern and create ambiguity in the DOA estimation.

For a standard ULA with $M = 10$ sensors, $d = 1/2$, the beamwidth $BW \approx 0.4$ rad $\approx 23°$, meaning that the DOA separation between two sources must be larger than 11° to generate two peaks in the power spectrum $P_{BF}(\theta)$.
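The beamwidth figure can be reproduced with a short calculation. The sketch assumes the approximation $BW \approx 2/(Md)$ in radians, valid near broadside, and checks numerically that the first null of the broadside ULA beam pattern lies at $\sin\theta = 1/(Md)$; the function names and the choice $M = 10$, $d = 1/2$ are assumptions made for illustration.

```python
import numpy as np

def rayleigh_beamwidth(M, d):
    """Approximate null-to-null beamwidth 2/(M*d) in radians (near broadside)."""
    return 2.0 / (M * d)

def beam_pattern(u, M, d):
    """Normalized ULA beam pattern magnitude for a broadside look direction.

    u = sin(theta); d is the sensor spacing in wavelengths.
    """
    m = np.arange(M)
    return np.abs(np.sum(np.exp(-2j * np.pi * d * m * u))) / M

M, d = 10, 0.5
bw_rad = rayleigh_beamwidth(M, d)
bw_deg = np.rad2deg(bw_rad)
first_null = 1.0 / (M * d)    # first null of the pattern in u = sin(theta)
```

Half of the resulting beamwidth, roughly 11°, is the minimum DOA separation the conventional beamformer can resolve for this array.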

3.14.3.2 Minimum variance distortionless response (MVDR) beamformer

The minimum variance distortionless response (MVDR) beamformer [1] alleviates the resolution limit of the conventional beamformer by considering the following constrained optimization problem:

$$\min_{\mathbf{w}}\ \mathbf{w}^H\hat{\mathbf{R}}_x\mathbf{w} \tag{14.26}$$

$$\text{subject to}\quad \mathbf{w}^H\mathbf{a}(\theta) = 1 \tag{14.27}$$

The objective function (14.26) represents the output power to be minimized. The constraint (14.27) ensures that signals from the desired direction $\theta$ remain undistorted. In other words, while the power from all other directions is minimized, the beamformer concentrates only on the look direction. The behavior of the MVDR beamformer was also discussed by Lacoss [11]. The resulting beampattern has a sharp peak at the target DOA, leading to resolution capability beyond the Rayleigh beamwidth. Applying the method of Lagrange multipliers, we obtain the solution as

$$\mathbf{w}_{MVDR}(\theta) = \frac{\hat{\mathbf{R}}_x^{-1}\mathbf{a}(\theta)}{\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x^{-1}\mathbf{a}(\theta)} \tag{14.28}$$

Inserting (14.28) into (14.20), one obtains the power function as

$$P_{MVDR}(\theta) = \frac{1}{\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x^{-1}\mathbf{a}(\theta)} \tag{14.29}$$
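The MVDR weights and power can be verified numerically on a small example. The sketch below assumes a half-wavelength ULA and uses a hypothetical true covariance matrix (one source plus white noise) in place of a sample estimate; function names and numbers are illustrative.

```python
import numpy as np

def ula_steering(theta, M, d=0.5):
    """ULA steering vector; d is the spacing in wavelengths (assumed convention)."""
    return np.exp(-2j * np.pi * d * np.arange(M) * np.sin(theta))

def mvdr_weights(R, a):
    """w = R^{-1} a / (a^H R^{-1} a), computed without forming R^{-1} explicitly."""
    Ri_a = np.linalg.solve(R, a)
    return Ri_a / (a.conj() @ Ri_a)

def mvdr_power(R, a):
    """MVDR spectrum value 1 / (a^H R^{-1} a)."""
    return 1.0 / np.real(a.conj() @ np.linalg.solve(R, a))

# Hypothetical covariance: one source of power 4 at 10 degrees, noise level 0.5.
M = 6
a_src = ula_steering(np.deg2rad(10.0), M)
R = 4.0 * np.outer(a_src, a_src.conj()) + 0.5 * np.eye(M)

a_look = ula_steering(np.deg2rad(-25.0), M)   # look direction away from the source
w = mvdr_weights(R, a_look)
```

The weights satisfy the distortionless constraint exactly, and the output power of the weighted array equals the closed-form MVDR power. At the source direction the power equals the source power plus the noise level divided by M.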

A condition on the MVDR beamformer follows immediately from (14.28), where the inversion of the sample covariance matrix requires that $\hat{\mathbf{R}}_x$ is full rank, implying that the number of samples must be at least as large as the number of sensors, i.e., $N \ge M$. When rank deficiency occurs or the sample number is small compared to the number of sensors, a popular technique known as diagonal loading is often employed to improve robustness. As the name implies, the sample covariance matrix (14.14) is modified by adding a small perturbation term to improve the conditioning:

$$\hat{\mathbf{R}}_{DL} = \hat{\mathbf{R}}_x + \delta\,\mathbf{I} \tag{14.30}$$

where $\delta$ is a properly chosen small number and $\mathbf{I}$ is an $M \times M$ identity matrix. The choice of the coefficient $\delta$ is essential. Several criteria for optimal choice of diagonal loading have been reported in [12] and the references therein.
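The effect of diagonal loading on a rank-deficient sample covariance matrix can be shown with a few snapshots. This is a minimal sketch: the loading level `delta=0.1` is an arbitrary illustrative value, not a recommended choice, and the data are random.

```python
import numpy as np

def diagonal_loading(R_hat, delta):
    """Return R_hat + delta * I."""
    return R_hat + delta * np.eye(R_hat.shape[0])

# Fewer snapshots than sensors: the sample covariance matrix is singular.
rng = np.random.default_rng(2)
M, N = 8, 4
X = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))
R_hat = X @ X.conj().T / N

R_loaded = diagonal_loading(R_hat, delta=0.1)
eig_plain = np.linalg.eigvalsh(R_hat)      # M - N eigenvalues are (numerically) zero
eig_loaded = np.linalg.eigvalsh(R_loaded)  # all eigenvalues are shifted up by delta
```

Loading shifts every eigenvalue by `delta`, so the loaded matrix is positive definite and can be inverted in the MVDR weight computation.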

A variant of the MVDR beamformer, proposed in [13], replaces the constraint (14.27) by $\mathbf{w}^H\mathbf{w} = 1$. This formulation leads to the adapted angular response spectrum $P_{AAR}(\theta) = \dfrac{\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x^{-1}\mathbf{a}(\theta)}{\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x^{-2}\mathbf{a}(\theta)}$. This Borgiotti-Kaplan beamformer is known to provide higher spatial resolution than that of the MVDR beamformer. In [14], the denominator of (14.29) is replaced by $\mathbf{a}^H(\theta)\,\hat{\mathbf{R}}_x^{-n}\mathbf{a}(\theta)$ where $n > 1$. Simulation results therein show that using a higher order covariance matrix yields superior resolution capability and robustness against signal correlation and low SNR.

The performance of the MVDR beamformer depends on the number of snapshots, array aperture, and SNR. Several interesting results are reported in [15]. In the presence of coherent or strongly correlated interferences, the performance of the MVDR beamformer degrades dramatically. Alternative methods addressing this issue are reported in [16–21]. Due to the distortionless response constraint, the MVDR beamformer is sensitive to errors in the target direction and array response imperfection. Robust methods to tackle this problem have been suggested in [22,23].

3.14.3.3 Sparse data representation based approach

The beamforming methods localize the signal sources by estimating the power spectrum associated with various DOAs. Since the number of signals is usually small in array processing, the methods proposed in [24,25] view DOA estimation as sparse data reconstruction and assign DOA estimates to signals with nonzero amplitude. In this approach, the first step is to find a sparse representation of the array output data. The beamforming output in the frequency domain [24] or the array observation (14.12) [25] can be used to construct a sparse data representation. Then the underlying optimization problem (typically convex) will be solved to find nonzero components. DOA estimates are obtained from angles associated with nonzero components. Recently this approach has attracted many researchers’ attention thanks to advances in the theory and methodology of sparse data reconstruction [26].

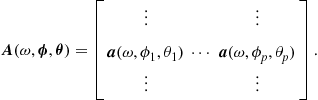

For representational convenience, we follow the formulation in [25]. Let ![]() be a sampling grid of source locations of interest. An important assumption here is that the number of signals is much smaller than the number of grid points, i.e., ![]() . The overcomplete array manifold matrix ![]() consists of ![]() steering vectors. The ![]() signal vector ![]() has a nonzero component ![]() if a signal source is present at ![]() . For a single snapshot, the array output (14.12) can be re-expressed in terms of the sparse vector ![]() as:

![]() (14.31)

Now the problem reduces to finding the nonzero components in ![]() . In the noiseless case, the ideal measure would be ![]() which counts the nonzero entries. This would in fact lead to the deterministic ML method over a grid search. But this metric leads to a complicated combinatorial optimization problem. Therefore, one approximates the solution by using the $\ell_1$ norm, ![]() . The significant advantage of the $\ell_1$ relaxation is that the convex optimization problem,

![]() (14.32)

has a unique global minimum and can be solved by computationally efficient numerical methods such as linear programming. For noisy measurements (14.31), the constraint ![]() cannot hold and needs to be relaxed. An appropriate objective function is suggested in [24,25]

![]() (14.33)

where ![]() is a regularization parameter.
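To make the regularized formulation concrete, the following is a minimal sketch and not the solver used in [24,25]. It assumes the objective has the common form 0.5·‖x − A s‖² + λ‖s‖₁ (a rescaling of λ relative to other conventions), and it uses iterative soft thresholding instead of a general convex solver. The steering vector sign convention, the 1° grid, the source directions, the amplitudes and the value of λ are all illustrative assumptions.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths).
    The sign convention exp(+j...) is an assumption of this sketch."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def ista_l1(A, x, lam, num_iter=500):
    """Minimize 0.5*||x - A s||_2^2 + lam*||s||_1 by iterative soft thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2      # 1 / Lipschitz constant of the gradient
    s = np.zeros(A.shape[1], dtype=complex)
    for _ in range(num_iter):
        z = s + step * (A.conj().T @ (x - A @ s))         # gradient step on the data fit
        mag = np.maximum(np.abs(z), 1e-12)
        s = z * np.maximum(0.0, 1.0 - step * lam / mag)   # complex soft threshold
    return s

def objective(A, x, s, lam):
    return 0.5 * np.linalg.norm(x - A @ s) ** 2 + lam * np.sum(np.abs(s))

# Hypothetical single-snapshot scenario: 12 sensors, two on-grid sources.
rng = np.random.default_rng(0)
grid = np.arange(-89.0, 90.0, 1.0)              # sampling grid of candidate DOAs
A = steering(grid, 12)                          # overcomplete array manifold
true_doas = np.array([-20.0, 30.0])
noise = 0.05 * (rng.standard_normal(12) + 1j * rng.standard_normal(12))
x = steering(true_doas, 12) @ np.array([1.0, 0.8]) + noise

lam = 1.0
s_hat = ista_l1(A, x, lam)
strongest = grid[np.argmax(np.abs(s_hat))]      # grid angle with the largest amplitude
print("strongest component at", strongest, "degrees")
```

The DOA estimates would be read off from the grid angles whose components in `s_hat` are clearly nonzero. Larger values of `lam` favor sparser solutions at the cost of a poorer fit to the data.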

For multiple snapshots, we define the data matrix ![]() , the signal matrix ![]() and the noise matrix ![]() . They are related as follows:

![]() (14.34)

To measure sparsity for multiple time samples, we define the ith row vector of ![]() corresponding to a particular DOA grid point ![]() as ![]() and compute its ![]() norm ![]() . Then the spatial sparsity is imposed on the ![]() vector ![]() . The multiple sample version of (14.33) becomes

![]() (14.35)

where ![]() is the Frobenius norm. The regularization parameter ![]() controls the tradeoff between the fit to data and the sparsity. In [24,25], statistically motivated strategies for selecting ![]() are discussed.

The computational cost for solving (14.35) increases significantly with the number of snapshots N. A coherent combination based on singular value decomposition (SVD) of the data matrix is suggested in [25]. A mixed norm approach for joint recovery is proposed in [27]. This problem can be avoided when other forms of sparsity are used, for example, the beamforming output in [24] and the covariance matrix in [28]. The resolution capability of this approach is investigated in [29].

Simulation results in the above mentioned references show that the sparse data representation based approach has a much better resolution than the conventional and MVDR beamformers at the expense of increased computational cost. Another advantage over the subspace methods is the improved robustness against signal coherence. However, for low SNRs and closely located sources, the relatively high bias remains a challenging issue for this approach.

3.14.3.4 Numerical examples

In this section, the beamforming based methods discussed previously are tested by numerical experiments. In the simulation, a uniform linear array of 12 sensors with inter-element spacings of half a wavelength is employed. The narrow band signals are generated by ![]() uncorrelated signals of various strengths. The signal to noise ratios of the first and second signals are 5 dB and 0 dB, respectively. The number of snapshots is ![]() in each Monte Carlo trial.

Figure 14.2 shows the normalized spectra obtained from the conventional beamformer, the MVDR beamformer and the sparse data representation over ![]() to ![]() for well separated sources located at ![]() relative to the broadside. All three methods exhibit two peaks at the reference locations. For the second experiment, the reference DOA parameter is given by ![]() , which corresponds to closely located signals. As shown in Figure 14.3, the conventional beamformer only leads to one maximum between the true DOAs and does not recognize the existence of two signals. On the other hand, the MVDR beamformer and the sparse data representation based approach perform well in resolving two signals.
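A reader wishing to reproduce the flavor of the first experiment could start from the sketch below. It is not the authors' simulation code. It assumes the usual spectrum definitions (conventional: a^H R a / M², MVDR: 1 / (a^H R⁻¹ a)), and the source directions, the number of snapshots, the random seed and the steering vector sign convention are our own illustrative choices, since the corresponding values are not recoverable from the text.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def simulate(doas_deg, num_sensors, num_snapshots, snr_db, rng):
    """Uncorrelated complex Gaussian sources in unit-variance white noise."""
    A = steering(doas_deg, num_sensors)
    P = A.shape[1]
    amp = 10 ** (np.broadcast_to(snr_db, (P,)) / 20.0)
    shape_s, shape_w = (P, num_snapshots), (num_sensors, num_snapshots)
    S = amp[:, None] * (rng.standard_normal(shape_s) + 1j * rng.standard_normal(shape_s)) / np.sqrt(2)
    W = (rng.standard_normal(shape_w) + 1j * rng.standard_normal(shape_w)) / np.sqrt(2)
    return A @ S + W

def beamformer_spectra(X, grid_deg):
    """Conventional and MVDR spatial spectra from the sample covariance matrix."""
    M, N = X.shape
    R = X @ X.conj().T / N
    A = steering(grid_deg, M)
    p_bf = np.real(np.einsum("mg,mn,ng->g", A.conj(), R, A)) / M**2
    p_mvdr = 1.0 / np.real(np.einsum("mg,mg->g", A.conj(), np.linalg.solve(R, A)))
    return p_bf, p_mvdr

def top_peaks(spec, grid_deg, count):
    """Angles of the `count` largest local maxima of a sampled spectrum."""
    idx = np.flatnonzero((spec[1:-1] > spec[:-2]) & (spec[1:-1] >= spec[2:])) + 1
    best = idx[np.argsort(spec[idx])[-count:]]
    return np.sort(grid_deg[best])

rng = np.random.default_rng(0)
true_doas = np.array([-20.0, 30.0])            # assumed, well separated
X = simulate(true_doas, 12, 100, [5.0, 0.0], rng)
grid = np.linspace(-90.0, 90.0, 1801)
p_bf, p_mvdr = beamformer_spectra(X, grid)
est_bf = top_peaks(p_bf, grid, 2)
est_mvdr = top_peaks(p_mvdr, grid, 2)
print("conventional:", est_bf, "MVDR:", est_mvdr)
```

Moving the two assumed directions closer together than the beamwidth of the 12 element array should reproduce the qualitative behavior described for the second experiment, where the conventional spectrum merges into a single maximum.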

Figure 14.2 Normalized spectrum for well separated signals. Reference DOA parameter ![]() , SNR = [5 0] dB, ![]() .

Figure 14.3 Normalized spectrum for closely located signals. Reference DOA parameter ![]() , SNR = [5 0] dB, ![]() .

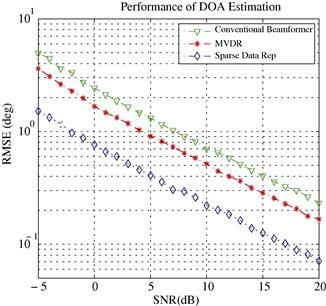

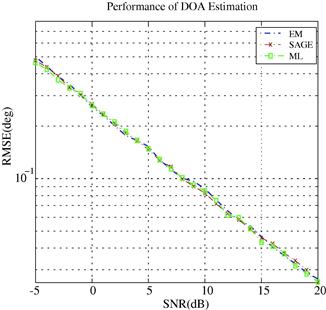

In the third experiment, we compare the estimation accuracy of these methods. To avoid resolution problems, the reference DOA parameter is chosen as ![]() . Both signals have equal power with SNR running from −5 dB to 20 dB in 1 dB steps. Figure 14.4 depicts the root mean squared error (RMSE) obtained from 1000 trials. For all three methods, RMSE decreases with increasing SNR. The sparse data representation based method has the best overall performance over the entire SNR range. The MVDR beamformer lies between the other two methods. The gap between these methods is most significant at low SNRs. For example, at SNR = −5 dB, the RMSE of the conventional beamformer is ![]() which is more than three times that of the sparse data representation based method with RMSE = 1.5°.

From the simulation results, we have observed the resolution limitation of the conventional beamformer. Improved resolution capability and estimation accuracy can be achieved by the MVDR beamformer and the computationally more involved sparse data representation based estimator.

3.14.4 Subspace methods

In an attempt to overcome the resolution limit of conventional beamforming, many spectral-like methods were introduced in the seventies. They exploit the eigenstructure of the spatial correlation matrix (14.18) to form pseudo spectrum functions. These functions exhibit sharp peaks at the true parameters and lead to superior performance as compared to the Fourier based analysis. While the early work by Pisarenko [30] was devoted to harmonic retrieval, the MUSIC (Multiple SIgnal Classification) algorithm [2,31] was developed for array signal processing.

Recall that ![]() is a Hermitian symmetric matrix. Therefore, the eigenvectors are orthogonal. From (14.18), it is apparent that the eigenvalues induced by the signal part differ from the remaining ones by the noise level. More specifically, let ![]() denote the eigenvalue/eigenvector pairs of ![]() . The spectral decomposition of ![]() can then be expressed as

(14.36)

where ![]() and ![]() . When the signal covariance matrix ![]() is full rank, i.e., ![]() , the matrix ![]() has rank P. The eigenvalues satisfy the property: ![]() . The signal eigenvectors corresponding to the P largest eigenvalues span the same subspace as the steering matrix. The noise eigenvectors corresponding to the remaining M − P eigenvalues are orthogonal to the signal subspace. Mathematically, the signal and noise subspaces are related to the steering matrix as follows:

![]() (14.37)
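The subspace relations above can be checked numerically on an exact (infinite sample) covariance matrix. The sketch below is only an illustration: the variable names, the source directions, the source powers, the noise variance and the steering vector sign convention are our own assumptions and do not come from the chapter.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

M, P, noise_var = 12, 2, 0.5
A = steering([-20.0, 30.0], M)                 # steering matrix of the P sources
S = np.diag([2.0, 1.0])                        # full-rank signal covariance
R = A @ S @ A.conj().T + noise_var * np.eye(M) # exact spatial covariance

eigvals, eigvecs = np.linalg.eigh(R)           # eigenvalues in ascending order
noise_eigs, En = eigvals[: M - P], eigvecs[:, : M - P]
signal_eigs, Es = eigvals[M - P :], eigvecs[:, M - P :]

# Noise eigenvectors are orthogonal to every steering vector of the sources.
leak = np.linalg.norm(A.conj().T @ En)
# Projecting A onto the signal eigenvectors reproduces A (same subspace).
resid = np.linalg.norm(A - Es @ (Es.conj().T @ A))

print("noise eigenvalues:", np.round(noise_eigs, 6))
print("orthogonality leak:", leak, "projection residual:", resid)
```

With a sample covariance matrix in place of `R`, the M − P smallest eigenvalues are no longer equal and `leak` is small but nonzero, which is the sense in which (14.37) holds only approximately for finite samples.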

In practice, the analysis is based on the sample covariance matrix ![]() . The eigenvalues and eigenvectors in (14.36) are then replaced by their estimates ![]() . Similarly, the matrices on the right hand side of (14.36) are substituted by corresponding estimates ![]() and ![]() , respectively. For finite samples, ![]() and ![]() , the property (14.37) is approximately valid. Many efforts have been made to find the best way of combining the estimated signal and noise eigenvectors to achieve high resolution capability and estimation accuracy. In the following, we assume that the number of signals is known so that the signal and noise subspaces can be separated. Methods for determining the number of sources will be discussed separately in Section 3.14.7. We present the well known MUSIC and ESPRIT algorithms in Sections 3.14.4.1 and 3.14.4.2, respectively. The important issue of signal coherence will be discussed in Section 3.14.4.3.

3.14.4.1 MUSIC

The MUSIC algorithm suggested by Schmidt [2,32], and Bienvenu and Kopp [31] exploits the orthogonality between signal and noise subspaces. From (14.37), we know that any vector ![]() satisfies

![]() (14.38)

Assume the array is unambiguous; that is, any collection of P distinct DOAs ![]() forms a linearly independent set ![]() . Then the above relation is valid for P distinct columns of ![]() . Motivated by this observation, the MUSIC spectrum is defined in terms of the estimated noise eigenvectors as

![]() (14.39)

For high SNR (or large N) and uncorrelated signal sources, the MUSIC spectrum exhibits high peaks near the true DOAs. To find the DOA estimates, we calculate ![]() over a fine grid of ![]() and locate the P largest maxima of ![]() . In comparison with the MVDR beamformer (14.29), the MUSIC spectrum uses the projection matrix ![]() rather than ![]() . To get more insight, assume a perfect spatial correlation matrix. Then, for noise eigenvalues ![]() and ![]() approaches ![]() . Then MUSIC may be interpreted as an MVDR-like method with a correlation matrix calculated at infinite SNR. This explains the superior resolution of MUSIC compared with MVDR [3].
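The grid search described above can be sketched as follows. This is a minimal illustration under our own assumptions: the spectrum is taken as 1 / ‖E_n^H a(θ)‖² with unit numerator (some texts use a^H a instead), and the scenario (12 sensors, 200 snapshots, 10 dB SNR, sources at −20° and 30°, fixed seed) is hypothetical.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def simulate(doas_deg, num_sensors, num_snapshots, snr_db, rng):
    """Uncorrelated complex Gaussian sources in unit-variance white noise."""
    A = steering(doas_deg, num_sensors)
    P = A.shape[1]
    amp = 10 ** (np.broadcast_to(snr_db, (P,)) / 20.0)
    shape_s, shape_w = (P, num_snapshots), (num_sensors, num_snapshots)
    S = amp[:, None] * (rng.standard_normal(shape_s) + 1j * rng.standard_normal(shape_s)) / np.sqrt(2)
    W = (rng.standard_normal(shape_w) + 1j * rng.standard_normal(shape_w)) / np.sqrt(2)
    return A @ S + W

def music_spectrum(X, num_sources, grid_deg):
    """MUSIC pseudo spectrum from the estimated noise eigenvectors."""
    M, N = X.shape
    R = X @ X.conj().T / N                     # sample covariance matrix
    _, eigvecs = np.linalg.eigh(R)             # ascending eigenvalues
    En = eigvecs[:, : M - num_sources]         # estimated noise subspace
    A = steering(grid_deg, M)
    return 1.0 / np.sum(np.abs(En.conj().T @ A) ** 2, axis=0)

def top_peaks(spec, grid_deg, count):
    """Angles of the `count` largest local maxima of a sampled spectrum."""
    idx = np.flatnonzero((spec[1:-1] > spec[:-2]) & (spec[1:-1] >= spec[2:])) + 1
    best = idx[np.argsort(spec[idx])[-count:]]
    return np.sort(grid_deg[best])

rng = np.random.default_rng(1)
true_doas = np.array([-20.0, 30.0])
X = simulate(true_doas, 12, 200, 10.0, rng)
grid = np.linspace(-90.0, 90.0, 1801)          # 0.1 degree search grid
doa_est = top_peaks(music_spectrum(X, 2, grid), grid, 2)
print("MUSIC estimates:", doa_est)
```

The accuracy of the grid search is limited by the grid spacing, so in practice a coarse search is often followed by a local refinement around each peak.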

In [33,34], an alternative implementation of MUSIC is suggested to improve estimation accuracy. The idea behind the sequential MUSIC is to find the strongest signal in each iteration and then remove the estimated signal from the observation for the next iteration. As theoretical analysis and numerical results in [34] show, the advantage of sequential MUSIC over standard MUSIC is significant for correlated signals. The Toeplitz approximation method [35] provides another implementation of MUSIC specific to uncorrelated sources with a ULA.

For a uniform linear array, MUSIC has a simple implementation. Let ![]() where ![]() . The steering vector (14.16) then has the form:

![]() (14.40)

The inverse of the MUSIC spectrum (14.39) becomes

![]() (14.41)

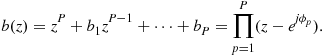

The root-MUSIC algorithm [36] finds the roots of the complex polynomial function ![]() rather than searching for maxima of the MUSIC spectrum. Among the ![]() possible candidates, the P roots ![]() that are closest to the unit circle on the complex plane are selected to obtain DOA estimates. Since ![]() , the DOA parameters are given by ![]() . It is known that root-MUSIC has the same asymptotic performance as standard MUSIC. In the finite sample case, root-MUSIC has a much better threshold behavior and improved resolution capability [37]. This is explained by the fact that the radial component of the errors in ![]() will not affect ![]() . Since the search procedure in standard MUSIC is replaced by finding the roots of a polynomial in root-MUSIC, the computational cost is significantly reduced. However, while standard MUSIC is applicable to arbitrary array geometry, root-MUSIC requires a ULA. When ULAs are not available, one can apply array interpolation techniques [38] to approximate the array response. For more details on array interpolation, the reader is referred to Chapter 16 of this book.
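A possible implementation of the rooting step is sketched below. It assumes the steering vector a(z) = [1, z, ..., z^(M−1)]^T with z = exp(j·2π·d·sin θ), d being the element spacing in wavelengths; the sign convention in (14.40) may differ. The polynomial coefficients are obtained by summing the diagonals of the noise subspace projector, and only roots inside the unit circle are kept because the roots occur in reciprocal conjugate pairs. The simulated scenario is hypothetical.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def simulate(doas_deg, num_sensors, num_snapshots, snr_db, rng):
    """Uncorrelated complex Gaussian sources in unit-variance white noise."""
    A = steering(doas_deg, num_sensors)
    P = A.shape[1]
    amp = 10 ** (np.broadcast_to(snr_db, (P,)) / 20.0)
    shape_s, shape_w = (P, num_snapshots), (num_sensors, num_snapshots)
    S = amp[:, None] * (rng.standard_normal(shape_s) + 1j * rng.standard_normal(shape_s)) / np.sqrt(2)
    W = (rng.standard_normal(shape_w) + 1j * rng.standard_normal(shape_w)) / np.sqrt(2)
    return A @ S + W

def root_music(X, num_sources, spacing=0.5):
    """DOA estimates (degrees) from the roots of the MUSIC polynomial."""
    M, N = X.shape
    R = X @ X.conj().T / N
    _, eigvecs = np.linalg.eigh(R)
    En = eigvecs[:, : M - num_sources]
    C = En @ En.conj().T                       # noise subspace projector
    # Coefficient of z^(k + M - 1) is the sum of the k-th diagonal of C;
    # np.roots expects the highest power first.
    coeffs = np.array([np.trace(C, offset=k) for k in range(M - 1, -M, -1)])
    roots = np.roots(coeffs)
    roots = roots[np.abs(roots) < 1.0]         # keep one root of each pair
    closest = roots[np.argsort(1.0 - np.abs(roots))[:num_sources]]
    sin_theta = np.clip(np.angle(closest) / (2 * np.pi * spacing), -1.0, 1.0)
    return np.sort(np.rad2deg(np.arcsin(sin_theta)))

rng = np.random.default_rng(2)
true_doas = np.array([-20.0, 30.0])
X = simulate(true_doas, 12, 200, 10.0, rng)
doa_est = root_music(X, 2)
print("root-MUSIC estimates:", doa_est)
```

Only the phase of each selected root enters the DOA estimate, which is consistent with the remark that radial errors in the roots do not affect the estimates.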

The extension of standard MUSIC to the two dimensional case is straightforward. The 2D steering vector (14.8) is used in the MUSIC spectrum, searching for the P largest maxima over a two dimensional space. For root-MUSIC, an additional ULA is required to be able to resolve both azimuth and elevation [39]. More algorithms and results regarding two dimensional DOA estimation are to be found in Chapter 15 of this book.

While the MUSIC algorithm utilizes all noise eigenvectors, the Minimum Norm (Min-Norm) algorithm suggested in [40,41] uses a single vector in the noise space. A comprehensive study on the resolution capability of MUSIC and the Min-Norm algorithm can be found in [42].

3.14.4.2 ESPRIT

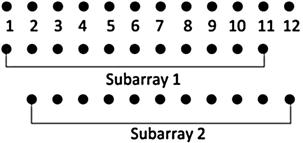

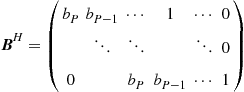

The ESPRIT (Estimation of Signal Parameters via Rotational Invariance Techniques) algorithm proposed by Roy and Kailath [43] exploits the rotational invariance property of two identical subarrays and solves for the eigenvalues of a matrix relating two signal subspaces. A simple way to construct identical subarrays is to select the first ![]() elements and the second to the Mth elements of a ULA (see Figure 14.5). The array response matrices of the first and second subarrays are then expressed as

![]() (14.42)

where ![]() and ![]() are selection matrices of the first and second subarrays. They consist of an ![]() identity matrix and an ![]() zero vector

![]() (14.43)

Define ![]() . From (14.42), we know that the two subarrays have the same array response up to a phase shift due to the distance between them. This observation leads to the shift invariance property

![]() (14.44)

where ![]() . Note that ESPRIT is applicable to array geometries other than ULAs as long as the shift invariance property holds, see e.g., [43–45].

Recall that the steering matrix of the original array and the signal eigenvectors span the same subspace; therefore, they are related through a nonsingular linear transformation ![]() :

![]() (14.45)

Multiplying both sides of (14.45) with ![]() and ![]() ,

![]() (14.46)

Combining (14.44) and (14.46) yields the relation between ![]() and ![]() :

![]() (14.47)

Since the matrix ![]() is similar to the diagonal matrix ![]() , both matrices have the same eigenvalues, ![]() .

Using estimates ![]() in (14.47), one can apply LS (Least Squares) or TLS (Total Least Squares) to obtain ![]() [46]. Finally, DOA estimates are obtained from eigenvalues of ![]() by the formula ![]() . The computational burden of the ESPRIT algorithm can be reduced by using real-valued operations as proposed in [44].
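The LS variant of these steps can be sketched as follows. The code is an illustration under our own assumptions: the two subarrays are the first and the last M − 1 elements of a ULA, the steering vector phase grows as exp(+j·2π·d·m·sin θ) along the array, and the simulated scenario is hypothetical. The TLS variant and the real-valued implementation of [44] are not shown.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def simulate(doas_deg, num_sensors, num_snapshots, snr_db, rng):
    """Uncorrelated complex Gaussian sources in unit-variance white noise."""
    A = steering(doas_deg, num_sensors)
    P = A.shape[1]
    amp = 10 ** (np.broadcast_to(snr_db, (P,)) / 20.0)
    shape_s, shape_w = (P, num_snapshots), (num_sensors, num_snapshots)
    S = amp[:, None] * (rng.standard_normal(shape_s) + 1j * rng.standard_normal(shape_s)) / np.sqrt(2)
    W = (rng.standard_normal(shape_w) + 1j * rng.standard_normal(shape_w)) / np.sqrt(2)
    return A @ S + W

def esprit_ls(X, num_sources, spacing=0.5):
    """LS-ESPRIT DOA estimates (degrees) for a ULA with maximally overlapping subarrays."""
    M, N = X.shape
    R = X @ X.conj().T / N
    _, eigvecs = np.linalg.eigh(R)             # ascending eigenvalues
    Es = eigvecs[:, M - num_sources :]         # estimated signal eigenvectors
    E1, E2 = Es[:-1, :], Es[1:, :]             # rows of subarray 1 and subarray 2
    Psi = np.linalg.lstsq(E1, E2, rcond=None)[0]   # least squares solution of E1 Psi = E2
    phases = np.angle(np.linalg.eigvals(Psi))
    sin_theta = np.clip(phases / (2 * np.pi * spacing), -1.0, 1.0)
    return np.sort(np.rad2deg(np.arcsin(sin_theta)))

rng = np.random.default_rng(3)
true_doas = np.array([-20.0, 30.0])
X = simulate(true_doas, 12, 200, 10.0, rng)
doa_est = esprit_ls(X, 2)
print("ESPRIT estimates:", doa_est)
```

No spectral search or polynomial rooting is needed here: the only eigenvalue problem beyond the covariance decomposition is of size P × P.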

3.14.4.3 Signal coherence

In the development of subspace methods, we have made an important assumption that the rank of the signal covariance matrix ![]() equals the number of signals P so that the signal eigenvectors span the column space of the array manifold matrix. However, this condition no longer holds when two signals are coherent, meaning that the magnitude of the correlation coefficient of the signals is one. Coherent signals are often encountered in wireless communications as a result of multipath propagation, or in radar systems as a result of smart jamming. In the presence of signal coherence, ![]() becomes rank deficient, leading to divergence of signal eigenvectors into the noise subspace. Since the property (14.38) is not satisfied, performance of subspace methods degrades significantly. To mitigate the effect of signal coherence, one could apply forward-backward averaging or spatial smoothing techniques. The former requires a ULA and can handle two coherent signals. The latter requires arrays with a translational invariance property and is able to deal with maximally P coherent signals.

Let ![]() denote the exchange matrix comprised of ones on the anti-diagonal and zeros elsewhere. For a ULA, the steering vector (14.16) has an interesting property:

![]() (14.48)

which implies

![]() (14.49)

where ![]() . The backward covariance is defined as

![]() (14.50)

The forward-backward covariance matrix is obtained by averaging ![]() and the standard array covariance matrix ![]() :

![]() (14.51)

Applying the property (14.49), the modified signal covariance matrix is then given by

![]() (14.52)

The coherent signals are de-correlated through phase modulation by the diagonal elements of ![]() . The forward-backward ESPRIT is equivalent to the unitary ESPRIT [44], because it only uses real valued components.
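The rank restoring effect of forward-backward averaging can be illustrated on an exact covariance matrix. In this sketch the backward covariance is taken as J R* J (exchange matrix times the complex conjugate of R), which is the usual definition and is assumed to match (14.50). The two fully coherent sources, their directions, the noise variance and the eigenvalue threshold are hypothetical choices.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def forward_backward(R):
    """Average of the covariance matrix and its backward counterpart J R* J."""
    J = np.fliplr(np.eye(R.shape[0]))          # exchange matrix
    return 0.5 * (R + J @ R.conj() @ J)

def num_signal_eigs(R, noise_var, factor=10.0):
    """Count eigenvalues that clearly exceed the noise level."""
    return int(np.sum(np.linalg.eigvalsh(R) > factor * noise_var))

M, noise_var = 12, 0.1
A = steering([-20.0, 30.0], M)
v = np.array([1.0, 1.0])                       # second signal is a copy of the first
S = np.outer(v, v.conj())                      # rank-one (coherent) signal covariance
R = A @ S @ A.conj().T + noise_var * np.eye(M)

rank_before = num_signal_eigs(R, noise_var)
rank_after = num_signal_eigs(forward_backward(R), noise_var)
print("signal eigenvalues before/after FB averaging:", rank_before, rank_after)
```

For particular combinations of source phases and directions the two rank-one terms in the averaged signal covariance become parallel and the rank is not restored, so this check depends on the assumed scenario.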

In a general case where more than two coherent signals are present, we need a more powerful solution to restore the rank of the signal covariance matrix. The spatial smoothing technique, first proposed in [47] and extended in [38,48,49], exploits the degrees of freedom of a regular array by splitting it into several identical subarrays. Let ![]() denote the number of sensors of a subarray. For a ULA of M elements, the maximal number of subarrays is ![]() . The array response matrix of the lth subarray is related to ![]() as

![]() (14.53)

where the selection matrix is ![]() . The spatially averaged covariance matrix is then given by

(14.54)

In the case of a ULA, one can combine the forward-backward averaging (14.51) with the spatial smoothing. In [48,50], it was shown that under mild conditions, the signal covariance matrix obtained from forward-backward averaging and spatial smoothing is nonsingular. Since the subarrays have a smaller aperture than the original array, the signal coherency is removed at the expense of resolution capability. The two dimensional extension of spatial smoothing is addressed in [51–53].
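Forward-only spatial smoothing can be sketched as an average over the diagonal blocks of the covariance matrix, each block being the covariance of one overlapping subarray. The example is an illustration with hypothetical values: three fully coherent sources, a 12 element ULA, subarrays of 8 sensors (hence 5 subarrays) and an exact covariance matrix. Combining it with forward-backward averaging is not shown.

```python
import numpy as np

def steering(angles_deg, num_sensors, spacing=0.5):
    """ULA steering matrix, one column per angle (spacing in wavelengths)."""
    m = np.arange(num_sensors)[:, None]
    phase = 2 * np.pi * spacing * np.sin(np.deg2rad(np.atleast_1d(angles_deg)))
    return np.exp(1j * m * phase[None, :])

def spatial_smoothing(R, sub_size):
    """Average the covariance matrices of all overlapping subarrays of a ULA."""
    M = R.shape[0]
    num_sub = M - sub_size + 1                 # maximal number of subarrays
    R_ss = np.zeros((sub_size, sub_size), dtype=complex)
    for l in range(num_sub):
        # Selecting rows and columns l .. l+sub_size-1 plays the role of the
        # selection matrix of the l-th subarray.
        R_ss += R[l : l + sub_size, l : l + sub_size]
    return R_ss / num_sub

def num_signal_eigs(R, noise_var, factor=10.0):
    """Count eigenvalues that clearly exceed the noise level."""
    return int(np.sum(np.linalg.eigvalsh(R) > factor * noise_var))

M, sub_size, noise_var = 12, 8, 0.1
A = steering([-30.0, 0.0, 40.0], M)
v = np.ones(3)                                 # three copies of the same waveform
S = np.outer(v, v.conj())                      # rank-one signal covariance
R = A @ S @ A.conj().T + noise_var * np.eye(M)

R_ss = spatial_smoothing(R, sub_size)
rank_before = num_signal_eigs(R, noise_var)
rank_after = num_signal_eigs(R_ss, noise_var)
print("signal eigenvalues before/after smoothing:", rank_before, rank_after)
```

The smoothed matrix has dimension 8 instead of 12, which reflects the loss of aperture mentioned above: subspace methods applied to `R_ss` work with steering vectors of the subarray rather than of the full array.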

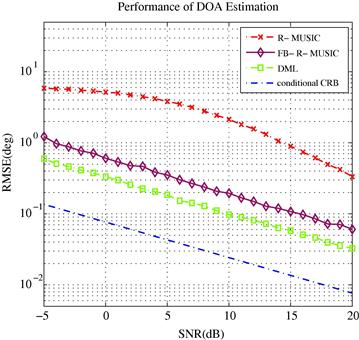

3.14.4.4 Numerical examples

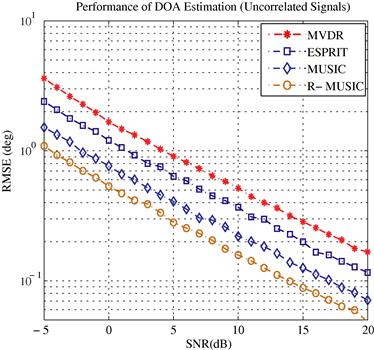

We demonstrate the performance of the subspace methods presented previously by numerical results. A uniform linear array of 12 sensors with inter-element spacings of half a wavelength is employed. In this case, the application of root-MUSIC is straightforward. Narrow band signals are generated by ![]() well separated sources of equal strengths located at ![]() . The number of snapshots is ![]() . Both signals have equal power with SNR running from −5 dB to 20 dB. The two subarrays used in ESPRIT are ULAs comprised of 11 elements.

In the first experiment, we consider uncorrelated signals. For comparison, the MVDR beamformer is applied to the same batch of data. The empirical RMSE is obtained from 1000 trials. From Figure 14.6, one can observe that root-MUSIC, denoted by R-MUSIC, outperforms standard MUSIC and ESPRIT. All the subspace methods have lower estimation errors than the MVDR beamformer. The performance difference is most significant at low SNRs. For SNR close to 20 dB, all methods behave similarly. The superior performance of root-MUSIC compared to standard MUSIC is expected as predicted by the theoretical analysis [37]. The estimation error of ESPRIT is higher than both MUSIC algorithms due to the reduced aperture.

In the second experiment, two correlated signals are considered with real valued correlation coefficient ![]() . One can observe from Figure 14.7 that the performance of all methods degrades rapidly. At ![]() , the RMSE is more than twice the RMSE in the uncorrelated case. Both MUSIC-based algorithms have almost identical performance. They are slightly better than ESPRIT. The curve of ESPRIT almost coincides with that of MVDR. These observations indicate that subspace methods are very sensitive to signal correlation and cannot provide accurate estimates when rank deficiency occurs. If we use the forward-backward averaged sample covariance matrix (14.51) in the subspace methods, denoted by FB-ESPRIT, FB-MUSIC, and FB-R-MUSIC, respectively, the estimation accuracy improves significantly as the three curves at the bottom show. In comparison with Figure 14.6, the RMSE increases only slightly when the FB technique is applied. For a detailed discussion on highly correlated signals, the reader is referred to Chapter 15 of this book.

3.14.5 Parametric methods

The spectral-like methods presented previously treat the direction finding problem as spatial frequency estimation. Although subspace methods overcome the resolution limitation of beamforming techniques, and yield good estimates at reasonable computational cost, the performance degrades dramatically in the presence of correlated or coherent signals. Parametric methods exploit the data model directly and are usually statistically well motivated. The well known maximum likelihood (ML) approach is representative of this class of estimators. Since parametric methods are not dependent on the eigenstructure of the sample covariance matrix, they provide reasonable results in scenarios involving signal correlation/coherence, low SNRs and small data samples. The price for the improved robustness and accuracy is the increased computational complexity. We will introduce the maximum likelihood approach and the covariance matching estimation methods in Sections 3.14.5.1 and 3.14.5.4, respectively. Several numerical algorithms for efficient implementation of the ML estimator will be presented in Section 3.14.5.2. Analytical results on the performance of DOA estimators presented so far will be discussed in Section 3.14.5.5.

3.14.5.1 The maximum likelihood approach

The maximum likelihood method is a systematic tool for constructing estimators. Based on the statistical model for data samples, it maximizes the likelihood function over the parameters of interest to derive estimates. The well known properties of ML estimation include asymptotic normality and efficiency under proper conditions [54]. For DOA estimation, the application is straightforward. Recall the data model in (14.17)

![]() (14.55)

We assume the noise ![]() is temporally independent and complex normally distributed with zero mean and covariance matrix ![]() , i.e., ![]() . In the array processing literature, two different interpretations of the source signals lead to the deterministic and the stochastic ML estimators.

3.14.5.1.1 Deterministic maximum likelihood

In the deterministic ML approach, the signal ![]() is viewed as a fixed realization of a stochastic process; the parameters ![]() are considered to be deterministic and unknown. With the above noise assumption, the array output ![]() is complex normally distributed with mean ![]() and covariance matrix ![]() , i.e., ![]() . Because the array outputs ![]() are independent, the joint likelihood function is the product of the likelihood associated with each snapshot, i.e.,

(14.56)

where ![]() denotes the Euclidean norm. The log-likelihood function is then given by

(14.57)

The unknown parameters $\{\boldsymbol{\theta}, \boldsymbol{s}(1), \ldots, \boldsymbol{s}(N), \sigma^2\}$ include the DOA parameter and nuisance parameters. As the number of signal waveform parameters grows with the number of snapshots, the high dimension of the parameter space makes direct optimization of (14.57) infeasible. It is well known that the likelihood function is separable [55,3], and the likelihood can be concentrated with respect to the linear parameters. For a fixed, unknown $\boldsymbol{\theta}$, the ML estimate of the signal is given by

$$\hat{\boldsymbol{s}}(t) = \boldsymbol{A}^{\dagger}(\boldsymbol{\theta})\boldsymbol{x}(t) \tag{14.58}$$

where $\boldsymbol{A}^{\dagger}$ denotes the Moore-Penrose pseudo inverse. For a full column rank $\boldsymbol{A}$, it is given by $\boldsymbol{A}^{\dagger} = (\boldsymbol{A}^H\boldsymbol{A})^{-1}\boldsymbol{A}^H$. Replacing $\boldsymbol{s}(t)$ with $\hat{\boldsymbol{s}}(t)$ in (14.57), and maximizing the resulting likelihood over $\sigma^2$, we obtain the ML estimate

$$\hat{\sigma}^2(\boldsymbol{\theta}) = \frac{1}{M}\operatorname{tr}\left[\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\right] \tag{14.59}$$

where $\hat{\boldsymbol{R}} = \frac{1}{N}\sum_{t=1}^{N}\boldsymbol{x}(t)\boldsymbol{x}^H(t)$ is the sample covariance matrix and $\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta}) = \boldsymbol{I} - \boldsymbol{P}_{\!A}(\boldsymbol{\theta})$ is the orthogonal complement of the projection matrix $\boldsymbol{P}_{\!A}(\boldsymbol{\theta}) = \boldsymbol{A}(\boldsymbol{\theta})\boldsymbol{A}^{\dagger}(\boldsymbol{\theta})$. Replacing $\sigma^2$ with $\hat{\sigma}^2(\boldsymbol{\theta})$ in the likelihood function again, we obtain, up to an additive constant and a positive scale factor, the concentrated likelihood function:

$$l_{\mathrm{DML}}(\boldsymbol{\theta}) = -\log\operatorname{tr}\left[\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\right] \tag{14.60}$$

Since $\log(\cdot)$ is a monotonically increasing function, the ML estimate can be obtained from maximizing the function $-\operatorname{tr}[\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\hat{\boldsymbol{R}}]$, or equivalently,

$$\hat{\boldsymbol{\theta}}_{\mathrm{DML}} = \arg\min_{\boldsymbol{\theta}} \operatorname{tr}\left[\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\right] \tag{14.61}$$

The signal waveform and noise parameters can be computed by substituting the estimate $\hat{\boldsymbol{\theta}}$ for $\boldsymbol{\theta}$ in (14.58) and (14.59), respectively. Combining the criterion (14.60) and the noise estimate (14.59) shows that the deterministic ML estimate minimizes the distance between the observation and the model, which is represented by the estimated noise power.
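The concentrated deterministic criterion can be illustrated with a brute-force search. The sketch below assumes the standard form of the criterion, namely the trace of the sample covariance projected onto the orthogonal complement of the steering matrix, and evaluates it over all pairs of angles on a coarse grid for a simulated half-wavelength ULA. The array geometry, the grid, the simulated scenario and the names `ula_steering` and `dml_cost` are assumptions made for illustration; an exhaustive search of this kind is only practical for very few sources.

```python
import itertools

import numpy as np


def ula_steering(theta_deg, n_sensors, spacing=0.5):
    """Steering matrix A(theta) of a ULA; spacing in wavelengths (assumed 0.5)."""
    theta = np.deg2rad(np.atleast_1d(np.asarray(theta_deg, dtype=float)))
    k = np.arange(n_sensors)[:, None]
    return np.exp(-2j * np.pi * spacing * k * np.sin(theta)[None, :])


def dml_cost(theta_deg, R_hat):
    """tr[P_A^perp(theta) R_hat], the quantity minimized by the DML estimate."""
    A = ula_steering(theta_deg, R_hat.shape[0])
    P_A = A @ np.linalg.pinv(A)  # projection onto the column space of A
    return np.trace(R_hat - P_A @ R_hat).real


# Simulated scenario (all values are illustrative assumptions).
rng = np.random.default_rng(1)
M, N, noise_var = 8, 200, 0.1
theta_true = np.array([-10.0, 20.0])
A = ula_steering(theta_true, M)
S = (rng.standard_normal((2, N)) + 1j * rng.standard_normal((2, N))) / np.sqrt(2)
noise = np.sqrt(noise_var / 2) * (
    rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
X = A @ S + noise
R_hat = X @ X.conj().T / N

# Exhaustive two-dimensional search over distinct grid pairs.
grid = np.arange(-60.0, 61.0, 1.0)
theta_hat = np.array(min(itertools.combinations(grid, 2),
                         key=lambda pair: dml_cost(pair, R_hat)))
sigma2_hat = dml_cost(theta_hat, R_hat) / M  # noise power estimate at theta_hat
```

The noise power estimate obtained this way tends to underestimate the true noise variance, roughly by the ratio of the noise subspace dimension to the number of sensors, since it divides the residual power by the number of sensors.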

3.14.5.1.2 Stochastic maximum likelihood

In the stochastic ML, the signal $\boldsymbol{s}(t)$ is considered as a complex normal random process with zero mean and covariance matrix $\boldsymbol{R}_s$. Assuming independent, spatially white noise as in the deterministic case, the array observation $\boldsymbol{x}(t)$ is normally distributed with zero mean and covariance matrix $\boldsymbol{R}$, i.e., $\boldsymbol{x}(t) \sim \mathcal{N}_c(\boldsymbol{0}, \boldsymbol{R})$ where $\boldsymbol{R} = \boldsymbol{A}(\boldsymbol{\theta})\boldsymbol{R}_s\boldsymbol{A}^H(\boldsymbol{\theta}) + \sigma^2\boldsymbol{I}$. The joint likelihood function for the stochastic signal model is given by

$$L_{\mathrm{SML}}(\boldsymbol{\theta}, \boldsymbol{\rho}, \sigma^2) = \prod_{t=1}^{N} \frac{1}{\pi^{M}\det\boldsymbol{R}} \exp\left(-\boldsymbol{x}^H(t)\boldsymbol{R}^{-1}\boldsymbol{x}(t)\right) \tag{14.62}$$

where the vector $\boldsymbol{\rho}$ includes the $P^2$ unknown entries in the signal covariance matrix $\boldsymbol{R}_s$ of the $P$ signals. Taking the logarithm of $L_{\mathrm{SML}}$, omitting constants and normalizing by $N$, we obtain

$$l_{\mathrm{SML}}(\boldsymbol{\theta}, \boldsymbol{\rho}, \sigma^2) = -\log\det\boldsymbol{R} - \operatorname{tr}\left[\boldsymbol{R}^{-1}\hat{\boldsymbol{R}}\right] \tag{14.63}$$

with $\hat{\boldsymbol{R}}$ denoting the sample covariance matrix.

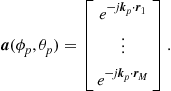

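The parameter vector $(\boldsymbol{\theta}, \boldsymbol{\rho}, \sigma^2)$ remains the same over the observation interval, unlike the growing parameter vector in the deterministic case. It was shown in [56,57] that the linear parameters in (14.63) admit closed form ML estimates for a fixed, unknown nonlinear parameter $\boldsymbol{\theta}$:

$$\hat{\sigma}^2(\boldsymbol{\theta}) = \frac{1}{M - P}\operatorname{tr}\left[\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\right] \tag{14.64}$$

$$\hat{\boldsymbol{R}}_s(\boldsymbol{\theta}) = \boldsymbol{A}^{\dagger}(\boldsymbol{\theta})\left(\hat{\boldsymbol{R}} - \hat{\sigma}^2(\boldsymbol{\theta})\boldsymbol{I}\right)\boldsymbol{A}^{\dagger H}(\boldsymbol{\theta}) \tag{14.65}$$

Replacing $\sigma^2$ and $\boldsymbol{R}_s$ with $\hat{\sigma}^2(\boldsymbol{\theta})$ and $\hat{\boldsymbol{R}}_s(\boldsymbol{\theta})$, one obtains, up to an additive constant, the concentrated stochastic likelihood function as

$$l_{\mathrm{SML}}(\boldsymbol{\theta}) = -\log\det\left[\boldsymbol{A}(\boldsymbol{\theta})\hat{\boldsymbol{R}}_s(\boldsymbol{\theta})\boldsymbol{A}^H(\boldsymbol{\theta}) + \hat{\sigma}^2(\boldsymbol{\theta})\boldsymbol{I}\right] \tag{14.66}$$

$$\phantom{l_{\mathrm{SML}}(\boldsymbol{\theta})} = -\log\det\left[\boldsymbol{P}_{\!A}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\boldsymbol{P}_{\!A}(\boldsymbol{\theta}) + \hat{\sigma}^2(\boldsymbol{\theta})\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\right] \tag{14.67}$$

The ML estimate for the DOA parameter is derived by minimizing the negative likelihood function over $\boldsymbol{\theta}$:

$$\hat{\boldsymbol{\theta}}_{\mathrm{SML}} = \arg\min_{\boldsymbol{\theta}} \log\det\left[\boldsymbol{P}_{\!A}(\boldsymbol{\theta})\hat{\boldsymbol{R}}\boldsymbol{P}_{\!A}(\boldsymbol{\theta}) + \hat{\sigma}^2(\boldsymbol{\theta})\boldsymbol{P}_{\!A}^{\perp}(\boldsymbol{\theta})\right] \tag{14.68}$$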
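The concentration step for the stochastic model can be checked numerically. The sketch below assumes the standard closed forms for the linear parameters: the noise power as the trace of the projected sample covariance divided by the noise subspace dimension, and the signal covariance as the pseudo-inverse-weighted, noise-corrected sample covariance. It takes these to be what (14.64) and (14.65) state. To isolate the algebra from sampling effects, the exact covariance of a hypothetical scenario with correlated sources is used in place of the sample covariance. The ULA geometry and the names `ula_steering` and `sml_concentrated` are illustrative assumptions.

```python
import numpy as np


def ula_steering(theta_deg, n_sensors, spacing=0.5):
    """Steering matrix A(theta) of a ULA; spacing in wavelengths (assumed 0.5)."""
    theta = np.deg2rad(np.atleast_1d(np.asarray(theta_deg, dtype=float)))
    k = np.arange(n_sensors)[:, None]
    return np.exp(-2j * np.pi * spacing * k * np.sin(theta)[None, :])


def sml_concentrated(theta_deg, R_hat):
    """Noise power, signal covariance and log det of the fitted covariance."""
    M = R_hat.shape[0]
    A = ula_steering(theta_deg, M)
    A_pinv = np.linalg.pinv(A)
    P_A = A @ A_pinv
    P_perp = np.eye(M) - P_A
    sigma2_hat = np.trace(P_perp @ R_hat).real / (M - A.shape[1])
    R_s_hat = A_pinv @ (R_hat - sigma2_hat * np.eye(M)) @ A_pinv.conj().T
    R_fit = P_A @ R_hat @ P_A + sigma2_hat * P_perp  # fitted covariance model
    logdet = np.linalg.slogdet(R_fit)[1]             # negative concentrated likelihood
    return sigma2_hat, R_s_hat, logdet


# Exact covariance of a hypothetical scenario with two correlated sources.
M = 8
theta_true = [-10.0, 20.0]
R_s = np.array([[1.0, 0.5 + 0.2j], [0.5 - 0.2j, 1.0]])
A = ula_steering(theta_true, M)
R_exact = A @ R_s @ A.conj().T + 0.1 * np.eye(M)

sigma2_hat, R_s_hat, logdet_true = sml_concentrated(theta_true, R_exact)
_, _, logdet_off = sml_concentrated([-12.0, 23.0], R_exact)
```

At the true directions the closed forms recover the noise power and the signal covariance exactly, and the log-determinant is smaller there than at the perturbed directions, in line with the minimization in (14.68).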

Once $\hat{\boldsymbol{\theta}}$ is available, the signal and noise parameters can be computed from (14.64) and (14.65) by replacing the DOA parameter with its estimate. The criterion (14.66) has a nice interpretation as the generalized variance minimized by the estimated model parameter [3]. If there is only one signal source, the projection matrix is given by $\boldsymbol{P}_{\!A}(\theta) = \boldsymbol{a}(\theta)\boldsymbol{a}^H(\theta)/\|\boldsymbol{a}(\theta)\|^2$, and the criterion $l_{\mathrm{SML}}(\theta)$ in (14.66) is a monotonically increasing function of $\boldsymbol{a}^H(\theta)\hat{\boldsymbol{R}}\boldsymbol{a}(\theta)/\|\boldsymbol{a}(\theta)\|^2$. The optimum wave parameter maximizes the conventional beamforming output and therefore results in the same estimate as the conventional beamformer.
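The single-source case can be verified numerically. In the sketch below, the determinant in the concentrated stochastic criterion is evaluated through its eigenvalues: for a rank-one projection it equals the beamformer output times the noise power estimate raised to the power of the number of sensors minus one. A short calculation suggests that the resulting criterion is monotone in the beamformer output whenever that output exceeds the average sensor power, which is the case near the peak in the scenario simulated here. The ULA geometry, the grid, the simulated values and the name `ula_steering` are illustrative assumptions.

```python
import numpy as np


def ula_steering(theta_deg, n_sensors, spacing=0.5):
    """Steering matrix A(theta) of a ULA; spacing in wavelengths (assumed 0.5)."""
    theta = np.deg2rad(np.atleast_1d(np.asarray(theta_deg, dtype=float)))
    k = np.arange(n_sensors)[:, None]
    return np.exp(-2j * np.pi * spacing * k * np.sin(theta)[None, :])


# One source at 15 degrees (illustrative values).
rng = np.random.default_rng(2)
M, N, noise_var = 8, 200, 0.1
a_true = ula_steering(15.0, M)
s = (rng.standard_normal((1, N)) + 1j * rng.standard_normal((1, N))) / np.sqrt(2)
noise = np.sqrt(noise_var / 2) * (
    rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
X = a_true @ s + noise
R_hat = X @ X.conj().T / N

grid = np.arange(-90.0, 90.5, 0.5)
beam = np.empty(grid.size)        # conventional beamformer output
neg_loglik = np.empty(grid.size)  # log det of the fitted covariance
for i, theta in enumerate(grid):
    a = ula_steering(theta, M)[:, 0]
    beam[i] = (a.conj() @ R_hat @ a).real / M  # ||a||^2 = M for a ULA
    sigma2 = (np.trace(R_hat).real - beam[i]) / (M - 1)
    # Eigenvalues of the fitted covariance: beam[i] once, sigma2 (M - 1) times.
    neg_loglik[i] = np.log(beam[i]) + (M - 1) * np.log(sigma2)

theta_bf = grid[np.argmax(beam)]
theta_sml = grid[np.argmin(neg_loglik)]
```

For this data set the grid point that maximizes the beamformer output is also the one that minimizes the negative concentrated likelihood.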

In the above discussion, the estimated signal covariance matrix $\hat{\boldsymbol{R}}_s(\hat{\boldsymbol{\theta}})$ is not necessarily positive definite as the optimization was over Hermitian matrices. The positive definiteness of $\hat{\boldsymbol{R}}_s$ is taken into account by imposing a constraint in the optimization process in [58,59]. Fortunately, $\hat{\boldsymbol{R}}_s$ has full rank with probability 1 for sufficiently large $N$, provided the true $\boldsymbol{R}_s$ has full rank. The ML estimator for known signal waveforms was developed in [60–62] under various assumptions. The problem of unknown noise structures was discussed in [63].

3.14.5.2 Implementation

In the derivation of the ML estimators, we have reduced the size of the problem by concentrating the likelihood with respect to the signal and noise parameters. The resulting objective functions (14.60) and (14.66) depend only on the nonlinear parameters. Maximization of both criteria still requires multi-dimensional searches. Hence, efficient implementation of the ML estimators becomes an important issue. The alternating projection algorithm [64] is an iterative technique for finding the maximum of the concentrated likelihood function (14.60). At each step, it performs the maximization with respect to a single parameter while all other parameters are held fixed. In [65], Newton-type methods are suggested for the large sample case.
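The idea of replacing a multi-dimensional search by a sequence of one-dimensional searches can be sketched as follows. This is a simplified coordinate-wise grid search in the spirit of alternating projection, not the algorithm of [64] itself: it recomputes the full projection matrix at every trial angle instead of using a projection update, and it maximizes the projected power, which is equivalent to minimizing the residual power in the deterministic criterion. The ULA geometry, the grid, the simulated scenario and all function names are illustrative assumptions.

```python
import numpy as np


def ula_steering(theta_deg, n_sensors, spacing=0.5):
    """Steering matrix A(theta) of a ULA; spacing in wavelengths (assumed 0.5)."""
    theta = np.deg2rad(np.atleast_1d(np.asarray(theta_deg, dtype=float)))
    k = np.arange(n_sensors)[:, None]
    return np.exp(-2j * np.pi * spacing * k * np.sin(theta)[None, :])


def projected_power(thetas, R_hat):
    """tr[P_A(theta) R_hat]; maximizing it minimizes the residual power."""
    A = ula_steering(thetas, R_hat.shape[0])
    return np.trace(A @ np.linalg.pinv(A) @ R_hat).real


def best_angle(fixed, grid, R_hat):
    """One-dimensional search for one angle while the angles in `fixed` are held."""
    candidates = [g for g in grid if g not in fixed]
    return max(candidates, key=lambda g: projected_power(fixed + [g], R_hat))


def alternating_maximization(R_hat, n_sources, grid, max_sweeps=20):
    thetas = []
    for _ in range(n_sources):  # initialization: add one source at a time
        thetas.append(best_angle(thetas, grid, R_hat))
    for _ in range(max_sweeps):
        previous = list(thetas)
        for p in range(n_sources):  # update one angle, others held fixed
            fixed = thetas[:p] + thetas[p + 1:]
            thetas[p] = best_angle(fixed, grid, R_hat)
        if thetas == previous:  # no angle changed during this sweep
            break
    return np.sort(np.array(thetas))


# Simulated scenario (all values are illustrative assumptions).
rng = np.random.default_rng(3)
M, N, noise_var = 8, 200, 0.1
A = ula_steering([-10.0, 20.0], M)
S = (rng.standard_normal((2, N)) + 1j * rng.standard_normal((2, N))) / np.sqrt(2)
noise = np.sqrt(noise_var / 2) * (
    rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
X = A @ S + noise
R_hat = X @ X.conj().T / N

theta_hat = alternating_maximization(R_hat, 2, np.arange(-60.0, 60.5, 0.5))
```

Each sweep costs one grid search per source, so the total effort grows linearly rather than exponentially with the number of sources. Like any local method, the procedure may settle in a local maximum when the initialization is poor.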

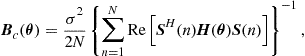

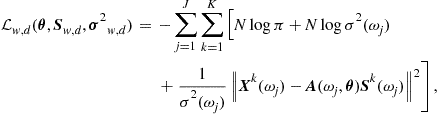

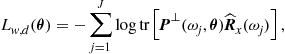

In the following, we will present the statistically motivated expectation and maximization (EM) and space alternating generalized EM (SAGE) algorithms. With these algorithms, the multidimensional search in the original problem can be replaced by several one dimensional maximizations. Both algorithms assume the deterministic signal model so that the suggested augmentation scheme is valid. It is possible to derive EM and SAGE for stochastic ML for uncorrelated signals, see e.g., [66]. When a ULA is available, the iterative quadratic maximum likelihood (IQML) algorithm can be applied to optimize the deterministic likelihood function. A common feature of these methods is that a good initial estimate is required for convergence to the global maximum. One way to obtain the initial estimate is via simpler methods such as beamforming techniques or subspace methods. Another approach is to optimize the ML criteria directly by stochastic optimization procedures such as the genetic algorithm (GA) [67], simulated annealing [68] and the particle swarm method [69] prior to the local maximization algorithms.

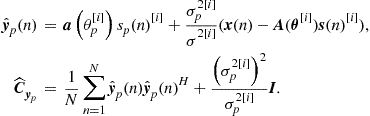

3.14.5.2.1 EM algorithm

The expectation and maximization (EM) algorithm [70] is a well known iterative algorithm in statistics for locating modes of likelihood functions. Because of its simplicity and stability, it has been applied to many problems since its first appearance. The idea behind EM is quite simple: rather than performing a complicated maximization of the observed data log-likelihood, one augments the observations with imputed values that simplify the maximization and applies the augmented data to estimate the unknown parameters. Because the imputed data are unknown, they are estimated from the observed data. This procedure continues to iterate between the E- and M-steps until no changes occur in the parameter estimates.

Let $\boldsymbol{x}$ and $\boldsymbol{y}$ denote the observed and augmented data, respectively. The corresponding density functions are denoted by $f_X(\boldsymbol{x}; \boldsymbol{\vartheta})$ and $f_Y(\boldsymbol{y}; \boldsymbol{\vartheta})$. The augmented data $\boldsymbol{y}$ is specified so that $\boldsymbol{x} = \mathcal{M}(\boldsymbol{y})$ is a many-to-one mapping. Starting from an initial guess $\boldsymbol{\vartheta}^{(0)}$, each iteration of the EM algorithm consists of an expectation (E) step and a maximization (M) step. At the $(i+1)$st iteration, $i = 0, 1, \ldots$, the E-step evaluates the conditional expectation of the augmented data log-likelihood $\log f_Y(\boldsymbol{y}; \boldsymbol{\vartheta})$ given the observed data $\boldsymbol{x}$ and the $i$th iterate $\boldsymbol{\vartheta}^{(i)}$:

$$Q(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}) = \mathrm{E}\left[\log f_Y(\boldsymbol{y}; \boldsymbol{\vartheta}) \,\middle|\, \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)}\right] \tag{14.69}$$

For notational simplicity, $\boldsymbol{y}$ is also used to denote a random vector in expressions like (14.69). The M-step determines $\boldsymbol{\vartheta}^{(i+1)}$ by maximizing the expected augmented data log-likelihood

$$\boldsymbol{\vartheta}^{(i+1)} = \arg\max_{\boldsymbol{\vartheta}} Q(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}) \tag{14.70}$$

A simple proof based on Jensen’s inequality [71] shows that the observed data likelihood never decreases over the iterations [70]. As with most optimization techniques, EM is not guaranteed to converge to a unique global maximum. In well-behaved problems, where the observed data log-likelihood $\log f_X(\boldsymbol{x}; \boldsymbol{\vartheta})$ is unimodal and concave over the entire parameter space, EM converges to the maximum likelihood estimate from any starting value [70,72].
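The monotonicity argument can be sketched in a few lines. The symbols $Q$, $H$ and $f_{Y|X}$ below are notation chosen for this sketch, with $Q(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)})$ denoting the conditional expectation of the augmented data log-likelihood evaluated in the E-step; they are not necessarily the notation of [70]. Since $f_Y(\boldsymbol{y}; \boldsymbol{\vartheta}) = f_X(\boldsymbol{x}; \boldsymbol{\vartheta})\, f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta})$ for $\boldsymbol{x} = \mathcal{M}(\boldsymbol{y})$, taking the conditional expectation given $\boldsymbol{x}$ at $\boldsymbol{\vartheta}^{(i)}$ yields

$$\log f_X(\boldsymbol{x}; \boldsymbol{\vartheta}) = Q(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}) - H(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}), \qquad H(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}) = \mathrm{E}\left[\log f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta}) \,\middle|\, \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)}\right].$$

By Jensen's inequality applied to the concave logarithm,

$$H(\boldsymbol{\vartheta}; \boldsymbol{\vartheta}^{(i)}) - H(\boldsymbol{\vartheta}^{(i)}; \boldsymbol{\vartheta}^{(i)}) = \mathrm{E}\left[\log\frac{f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta})}{f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)})} \,\middle|\, \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)}\right] \le \log \mathrm{E}\left[\frac{f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta})}{f_{Y|X}(\boldsymbol{y} \mid \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)})} \,\middle|\, \boldsymbol{x}; \boldsymbol{\vartheta}^{(i)}\right] = \log 1 = 0.$$

Because the M-step guarantees $Q(\boldsymbol{\vartheta}^{(i+1)}; \boldsymbol{\vartheta}^{(i)}) \ge Q(\boldsymbol{\vartheta}^{(i)}; \boldsymbol{\vartheta}^{(i)})$, it follows that

$$\log f_X(\boldsymbol{x}; \boldsymbol{\vartheta}^{(i+1)}) - \log f_X(\boldsymbol{x}; \boldsymbol{\vartheta}^{(i)}) = \left[Q(\boldsymbol{\vartheta}^{(i+1)}; \boldsymbol{\vartheta}^{(i)}) - Q(\boldsymbol{\vartheta}^{(i)}; \boldsymbol{\vartheta}^{(i)})\right] - \left[H(\boldsymbol{\vartheta}^{(i+1)}; \boldsymbol{\vartheta}^{(i)}) - H(\boldsymbol{\vartheta}^{(i)}; \boldsymbol{\vartheta}^{(i)})\right] \ge 0.$$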

For DOA estimation, the observed data consists of the array outputs ![]() . For the deterministic signal model, the augmented data

. For the deterministic signal model, the augmented data ![]() is constructed by decomposing

is constructed by decomposing ![]() virtually into its signal and noise parts [73]:

virtually into its signal and noise parts [73]:

(14.71)

(14.71)

where the ![]() vectors

vectors ![]() are independent and complex normally distributed as

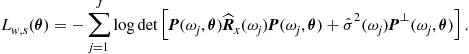

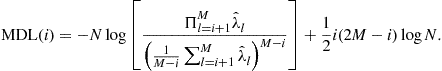

are independent and complex normally distributed as ![]() . The noise parameters are positive and must satisfy the constraint