Diffusion Adaptation Over Networks*

Ali H. Sayed, Electrical Engineering Department, University of California at Los Angeles, USA, [email protected]

Abstract

Adaptive networks are well-suited to perform decentralized information processing and optimization tasks and to model various types of self-organized and complex behavior encountered in nature. Adaptive networks consist of a collection of agents with processing and learning abilities. The agents are linked together through a connection topology, and they cooperate with each other through local interactions to solve distributed optimization, estimation, and inference problems in real-time. The continuous diffusion of information across the network enables agents to adapt their performance in relation to streaming data and network conditions; it also results in improved adaptation and learning performance relative to non-cooperative agents. This article provides an overview of diffusion strategies for adaptation and learning over networks, with emphasis on mean-square-error designs. Stability and performance analyses are provided and the benefits of cooperation are highlighted. Several supporting appendices are included in an effort to make the presentation self-contained for most readers.

Keywords

Distributed estimation; Distributed optimization; Diffusion adaptation; Distributed filtering; Diffusion strategy; Consensus strategy; Cooperative strategy; Adaptation; Learning; Adaptive networks; Cooperative networks; Diffusion networks; Steepest-descent; Stochastic gradient approximation; Mean-square-error; Energy conservation; LMS filter; RLS filter; Kalman filter; Combination rules; Graphs; Noisy links; Block maximum norm

Acknowledgments

The development of the theory and applications of diffusion adaptation over networks has benefited greatly from the insights and contributions of several UCLA Ph.D. students, and several visiting graduate students to the UCLA Adaptive Systems Laboratory (http://www.ee.ucla.edu/asl). The assistance and contributions of all students are hereby gratefully acknowledged, including Cassio G. Lopes, Federico S. Cattivelli, Sheng-Yuan Tu, Jianshu Chen, Xiaochuan Zhao, Zaid Towfic, Chung-Kai Yu, Noriyuki Takahashi, Jae-Woo Lee, Alexander Bertrand, and Paolo Di Lorenzo. The author is also particularly thankful to S.-Y. Tu, J. Chen, X. Zhao, Z. Towfic, and C.-K. Yu for their assistance in reviewing an earlier draft of this chapter.

Adaptive networks are well-suited to perform decentralized information processing and optimization tasks and to model various types of self-organized and complex behavior encountered in nature. Adaptive networks consist of a collection of agents with processing and learning abilities. The agents are linked together through a connection topology, and they cooperate with each other through local interactions to solve distributed optimization, estimation, and inference problems in real-time. The continuous diffusion of information across the network enables agents to adapt their performance in relation to streaming data and network conditions; it also results in improved adaptation and learning performance relative to non-cooperating agents. This chapter provides an overview of diffusion strategies for adaptation and learning over networks. The chapter is divided into several sections and includes appendices with supporting material intended to make the presentation rather self-contained for the benefit of the reader.

3.09.1 Motivation

Consider a collection of ![]() agents interested in estimating the same parameter vector,

agents interested in estimating the same parameter vector, ![]() , of size

, of size ![]() . The vector is the minimizer of some global cost function, denoted by

. The vector is the minimizer of some global cost function, denoted by ![]() , which the agents seek to optimize, say,

, which the agents seek to optimize, say,

![]() (9.1)

(9.1)

We are interested in situations where the individual agents have access to partial information about the global cost function. In this case, cooperation among the agents becomes beneficial. For example, by cooperating with their neighbors, and by having these neighbors cooperate with their neighbors, procedures can be devised that would enable all agents in the network to converge towards the global optimum ![]() through local interactions. The objective of decentralized processing is to allow spatially distributed agents to achieve a global objective by relying solely on local information and on in-network processing. Through a continuous process of cooperation and information sharing with neighbors, agents in a network can be made to approach the global performance level despite the localized nature of their interactions.

through local interactions. The objective of decentralized processing is to allow spatially distributed agents to achieve a global objective by relying solely on local information and on in-network processing. Through a continuous process of cooperation and information sharing with neighbors, agents in a network can be made to approach the global performance level despite the localized nature of their interactions.

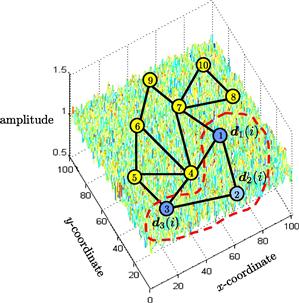

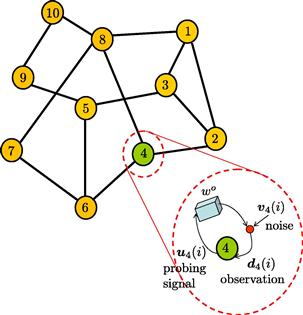

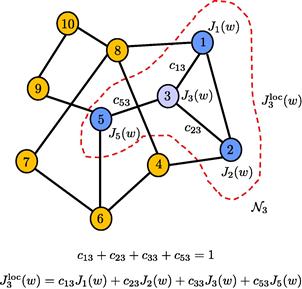

3.09.1.1 Networks and neighborhoods

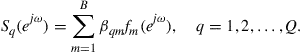

In this chapter we focus mainly on connected networks, although many of the results hold even if the network graph is separated into disjoint subgraphs. In a connected network, if we pick any two arbitrary nodes, then there will exist at least one path connecting them: the nodes may be connected directly by an edge if they are neighbors, or they may be connected by a path that passes through other intermediate nodes. Figure 9.1 provides a graphical representation of a connected network with ![]() nodes. Nodes that are able to share information with each other are connected by edges. The sharing of information over these edges can be unidirectional or bi-directional. The neighborhood of any particular node is defined as the set of nodes that are connected to it by edges; we include in this set the node itself. The figure illustrates the neighborhood of node 3, which consists of the following subset of nodes:

nodes. Nodes that are able to share information with each other are connected by edges. The sharing of information over these edges can be unidirectional or bi-directional. The neighborhood of any particular node is defined as the set of nodes that are connected to it by edges; we include in this set the node itself. The figure illustrates the neighborhood of node 3, which consists of the following subset of nodes: ![]() . For each node, the size of its neighborhood defines its degree. For example, node

. For each node, the size of its neighborhood defines its degree. For example, node ![]() in the figure has degree

in the figure has degree ![]() , while node 8 has degree

, while node 8 has degree ![]() . Nodes that are well connected have higher degrees. Note that we are denoting the neighborhood of an arbitrary node

. Nodes that are well connected have higher degrees. Note that we are denoting the neighborhood of an arbitrary node ![]() by

by ![]() and its size by

and its size by ![]() . We shall also use the notation

. We shall also use the notation ![]() to refer to

to refer to ![]() .

.

Figure 9.1 A network consists of a collection of cooperating nodes. Nodes that are linked by edges can share information. The neighborhood of any particular node consists of all nodes that are connected to it by edges (including the node itself). The figure illustrates the neighborhood of node ![]() , which consists of nodes

, which consists of nodes ![]() . Accordingly, node

. Accordingly, node ![]() has degree

has degree ![]() , which is the size of its neighborhood.

, which is the size of its neighborhood.

The neighborhood of any node ![]() therefore consists of all nodes with which node

therefore consists of all nodes with which node ![]() can exchange information. We assume a symmetric situation in relation to neighbors so that if node

can exchange information. We assume a symmetric situation in relation to neighbors so that if node ![]() is a neighbor of node

is a neighbor of node ![]() , then node

, then node ![]() is also a neighbor of node

is also a neighbor of node ![]() . This does not necessarily mean that the flow of information between these two nodes is symmetrical. For instance, in future sections, we shall assign pairs of nonnegative weights to each edge connecting two neighboring nodes—see Figure 9.2. In particular, we will assign the coefficient

. This does not necessarily mean that the flow of information between these two nodes is symmetrical. For instance, in future sections, we shall assign pairs of nonnegative weights to each edge connecting two neighboring nodes—see Figure 9.2. In particular, we will assign the coefficient ![]() to denote the weight used by node

to denote the weight used by node ![]() to scale the data it receives from node

to scale the data it receives from node ![]() ; this scaling can be interpreted as a measure of trustworthiness or reliability that node

; this scaling can be interpreted as a measure of trustworthiness or reliability that node ![]() assigns to its interaction with node

assigns to its interaction with node ![]() . Note that we are using two subscripts,

. Note that we are using two subscripts, ![]() , with the first subscript denoting the source node (where information originates from) and the second subscript denoting the sink node (where information moves to) so that:

, with the first subscript denoting the source node (where information originates from) and the second subscript denoting the sink node (where information moves to) so that:

![]() (9.2)

(9.2)

In this way, the alternative coefficient ![]() will denote the weight used to scale the data sent from node

will denote the weight used to scale the data sent from node ![]() to

to ![]() :

:

![]() (9.3)

(9.3)

The weights ![]() can be different, and one or both of them can be zero, so that the exchange of information over the edge connecting the neighboring nodes

can be different, and one or both of them can be zero, so that the exchange of information over the edge connecting the neighboring nodes ![]() need not be symmetric. When one of the weights is zero, say,

need not be symmetric. When one of the weights is zero, say, ![]() , then this situation means that even though nodes

, then this situation means that even though nodes ![]() are neighbors, node

are neighbors, node ![]() is either not receiving data from node

is either not receiving data from node ![]() or the data emanating from node

or the data emanating from node ![]() is being annihilated before reaching node

is being annihilated before reaching node ![]() . Likewise, when

. Likewise, when ![]() , then node

, then node ![]() scales its own data, whereas

scales its own data, whereas ![]() corresponds to the situation when node

corresponds to the situation when node ![]() does not use its own data. Usually, in graphical representations like those in Figure 9.1, edges are drawn between neighboring nodes that can share information. And, it is understood that the actual sharing of information is controlled by the values of the scaling weights that are assigned to the edge; these values can turn off communication in one or both directions and they can also scale one direction more heavily than the reverse direction, and so forth.

does not use its own data. Usually, in graphical representations like those in Figure 9.1, edges are drawn between neighboring nodes that can share information. And, it is understood that the actual sharing of information is controlled by the values of the scaling weights that are assigned to the edge; these values can turn off communication in one or both directions and they can also scale one direction more heavily than the reverse direction, and so forth.

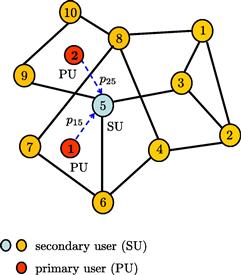

Figure 9.2 In the top part, and for emphasis purposes, we are representing the edge between nodes ![]() and

and ![]() by two separate directed links: one moving from

by two separate directed links: one moving from ![]() to

to ![]() and the other moving from

and the other moving from ![]() to

to ![]() . Two nonnegative weights are used to scale the sharing of information over these directed links. The scalar

. Two nonnegative weights are used to scale the sharing of information over these directed links. The scalar ![]() denotes the weight used to scale data sent from node

denotes the weight used to scale data sent from node ![]() to

to ![]() , while

, while ![]() denotes the weight used to scale data sent from node

denotes the weight used to scale data sent from node ![]() to

to ![]() . The weights

. The weights ![]() can be different, and one or both of them can be zero, so that the exchange of information over the edge connecting any two neighboring nodes need not be symmetric. The bottom part of the figure illustrates directed links arriving to node

can be different, and one or both of them can be zero, so that the exchange of information over the edge connecting any two neighboring nodes need not be symmetric. The bottom part of the figure illustrates directed links arriving to node ![]() from its neighbors

from its neighbors ![]() (left) and leaving from node

(left) and leaving from node ![]() towards these same neighbors (right).

towards these same neighbors (right).

3.09.1.2 Cooperation among agents

Now, depending on the application under consideration, the solution vector ![]() from (9.1) may admit different interpretations. For example, the entries of

from (9.1) may admit different interpretations. For example, the entries of ![]() may represent the location coordinates of a nutrition source that the agents are trying to find, or the location of an accident involving a dangerous chemical leak. The nodes may also be interested in locating a predator and tracking its movements over time. In these localization applications, the vector

may represent the location coordinates of a nutrition source that the agents are trying to find, or the location of an accident involving a dangerous chemical leak. The nodes may also be interested in locating a predator and tracking its movements over time. In these localization applications, the vector ![]() is usually two or three-dimensional. In other applications, the entries of

is usually two or three-dimensional. In other applications, the entries of ![]() may represent the parameters of some model that the network wishes to learn, such as identifying the model parameters of a biological process or the occupied frequency bands in a shared communications medium. There are also situations where different agents in the network may be interested in estimating different entries of

may represent the parameters of some model that the network wishes to learn, such as identifying the model parameters of a biological process or the occupied frequency bands in a shared communications medium. There are also situations where different agents in the network may be interested in estimating different entries of ![]() , or even different parameter vectors

, or even different parameter vectors ![]() altogether, say,

altogether, say, ![]() for node

for node ![]() , albeit with some relation among the different vectors so that cooperation among the nodes can still be rewarding. In this chapter, however, we focus exclusively on the special (yet frequent and important) case where all agents are interested in estimating the same parameter vector

, albeit with some relation among the different vectors so that cooperation among the nodes can still be rewarding. In this chapter, however, we focus exclusively on the special (yet frequent and important) case where all agents are interested in estimating the same parameter vector ![]() .

.

Since the agents have a common objective, it is natural to expect cooperation among them to be beneficial in general. One important question is therefore how to develop cooperation strategies that can lead to better performance than when each agent attempts to solve the optimization problem individually. Another important question is how to develop strategies that endow networks with the ability to adapt and learn in real-time in response to changes in the statistical properties of the data. This chapter provides an overview of results in the area of diffusion adaptation with illustrative examples. Diffusion strategies are powerful methods that enable adaptive learning and cooperation over networks. There have been other useful works in the literature on the use of alternative consensus strategies to develop distributed optimization solutions over networks. Nevertheless, we explain in Appendix E why diffusion strategies outperform consensus strategies in terms of their mean-square-error stability and performance. For this reason, we focus in the body of the chapter on presenting the theoretical foundations for diffusion strategies and their performance.

3.09.1.3 Notation

In our treatment, we need to distinguish between random variables and deterministic quantities. For this reason, we use boldface letters to represent random variables and normal font to represent deterministic (non-random) quantities. For example, the boldface letter ![]() denotes a random quantity, while the normal font letter

denotes a random quantity, while the normal font letter ![]() denotes an observation or realization for it. We also need to distinguish between matrices and vectors. For this purpose, we use CAPITAL letters to refer to matrices and small letters to refer to both vectors and scalars; Greek letters always refer to scalars. For example, we write

denotes an observation or realization for it. We also need to distinguish between matrices and vectors. For this purpose, we use CAPITAL letters to refer to matrices and small letters to refer to both vectors and scalars; Greek letters always refer to scalars. For example, we write ![]() to denote a covariance matrix and

to denote a covariance matrix and ![]() to denote a vector of parameters. We also write

to denote a vector of parameters. We also write ![]() to refer to the variance of a random variable. To distinguish between a vector

to refer to the variance of a random variable. To distinguish between a vector ![]() (small letter) and a scalar

(small letter) and a scalar ![]() (also a small letter), we use parentheses to index scalar quantities and subscripts to index vector quantities. Thus, we write

(also a small letter), we use parentheses to index scalar quantities and subscripts to index vector quantities. Thus, we write ![]() to refer to the value of a scalar quantity

to refer to the value of a scalar quantity ![]() at time

at time ![]() , and

, and ![]() to refer to the value of a vector quantity

to refer to the value of a vector quantity ![]() at time

at time ![]() . Thus,

. Thus, ![]() denotes a scalar while

denotes a scalar while ![]() denotes a vector. All vectors in our presentation are column vectors, with the exception of the regression vector (denoted by the letter

denotes a vector. All vectors in our presentation are column vectors, with the exception of the regression vector (denoted by the letter ![]() ), which will be taken to be a row vector for convenience of presentation. The symbol

), which will be taken to be a row vector for convenience of presentation. The symbol ![]() denotes transposition, and the symbol

denotes transposition, and the symbol ![]() denotes complex conjugation for scalars and complex-conjugate transposition for matrices. The notation

denotes complex conjugation for scalars and complex-conjugate transposition for matrices. The notation ![]() denotes a column vector with entries

denotes a column vector with entries ![]() and

and ![]() stacked on top of each other, and the notation

stacked on top of each other, and the notation ![]() denotes a diagonal matrix with entries

denotes a diagonal matrix with entries ![]() and

and ![]() . Likewise, the notation

. Likewise, the notation ![]() vectorizes its matrix argument and stacks the columns of

vectorizes its matrix argument and stacks the columns of ![]() on top of each other. The notation

on top of each other. The notation ![]() denotes the Euclidean norm of its vector argument, while

denotes the Euclidean norm of its vector argument, while ![]() denotes the block maximum norm of a block vector (defined in Appendix D). Similarly, the notation

denotes the block maximum norm of a block vector (defined in Appendix D). Similarly, the notation ![]() denotes the weighted square value,

denotes the weighted square value, ![]() . Moreover,

. Moreover, ![]() denotes the block maximum norm of a matrix (also defined in Appendix D), and

denotes the block maximum norm of a matrix (also defined in Appendix D), and ![]() denotes the spectral radius of the matrix (i.e., the largest absolute magnitude among its eigenvalues). Finally,

denotes the spectral radius of the matrix (i.e., the largest absolute magnitude among its eigenvalues). Finally, ![]() denotes the identity matrix of size

denotes the identity matrix of size ![]() ; sometimes, for simplicity of notation, we drop the subscript

; sometimes, for simplicity of notation, we drop the subscript ![]() from

from ![]() when the size of the identity matrix is obvious from the context. Table 9.1 provides a summary of the symbols used in the chapter for ease of reference.

when the size of the identity matrix is obvious from the context. Table 9.1 provides a summary of the symbols used in the chapter for ease of reference.

3.09.2 Mean-square-error estimation

Readers interested in the development of the distributed optimization strategies and their adaptive versions can move directly to Section 3.09.3. The purpose of the current section is to motivate the virtues of distributed in-network processing, and to provide illustrative examples in the context of mean-square-error estimation. Advanced readers may skip this section.

We start our development by associating with each agent ![]() an individual cost (or utility) function,

an individual cost (or utility) function, ![]() . Although the algorithms presented in this chapter apply to more general situations, we nevertheless assume in our presentation that the cost functions

. Although the algorithms presented in this chapter apply to more general situations, we nevertheless assume in our presentation that the cost functions ![]() are strictly convex so that each one of them has a unique minimizer. We further assume that, for all costs

are strictly convex so that each one of them has a unique minimizer. We further assume that, for all costs ![]() , the minimum occurs at the same value

, the minimum occurs at the same value ![]() . Obviously, the choice of

. Obviously, the choice of ![]() is limitless and is largely dependent on the application. It is sufficient for our purposes to illustrate the main concepts underlying diffusion adaptation by focusing on the case of mean-square-error (MSE) or quadratic cost functions. In the sequel, we provide several examples to illustrate how such quadratic cost functions arise in applications and how cooperative processing over networks can be beneficial. At the same time, we note that most of the arguments in this chapter can be extended beyond MSE optimization to more general cost functions and to situations where the minimizers of the individual costs

is limitless and is largely dependent on the application. It is sufficient for our purposes to illustrate the main concepts underlying diffusion adaptation by focusing on the case of mean-square-error (MSE) or quadratic cost functions. In the sequel, we provide several examples to illustrate how such quadratic cost functions arise in applications and how cooperative processing over networks can be beneficial. At the same time, we note that most of the arguments in this chapter can be extended beyond MSE optimization to more general cost functions and to situations where the minimizers of the individual costs ![]() need not agree with each other—as already shown in [1–3]; see also Section 3.09.10.4 for a brief summary.

need not agree with each other—as already shown in [1–3]; see also Section 3.09.10.4 for a brief summary.

In non-cooperative solutions, each agent would operate individually on its own cost function ![]() and optimize it to determines

and optimize it to determines ![]() , without any interaction with the other nodes. However, the analysis and derivations in future sections will reveal that nodes can benefit from cooperation between them in terms of better performance (such as converging faster to

, without any interaction with the other nodes. However, the analysis and derivations in future sections will reveal that nodes can benefit from cooperation between them in terms of better performance (such as converging faster to ![]() or tracking a changing

or tracking a changing ![]() more effectively)—see, e.g., Theorems 9.6.3–9.6.5 and Section 3.09.7.3.

more effectively)—see, e.g., Theorems 9.6.3–9.6.5 and Section 3.09.7.3.

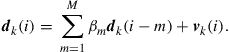

3.09.2.1 Application: autoregressive modeling

Our first example relates to identifying the parameters of an auto-regressive (AR) model from noisy data. Thus, consider a situation where agents are spread over some geographical region and each agent ![]() is observing realizations

is observing realizations ![]() of an AR zero-mean random process

of an AR zero-mean random process ![]() , which satisfies a model of the form:

, which satisfies a model of the form:

(9.4)

(9.4)

The scalars ![]() represent the model parameters that the agents wish to identify, and

represent the model parameters that the agents wish to identify, and ![]() represents an additive zero-mean white noise process with power:

represents an additive zero-mean white noise process with power:

![]() (9.5)

(9.5)

It is customary to assume that the noise process is temporally white and spatially independent so that noise terms across different nodes are independent of each other and, at the same node, successive noise samples are also independent of each other with a time-independent variance ![]() :

:

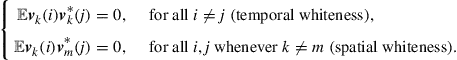

(9.6)

(9.6)

The noise process ![]() is further assumed to be independent of past signals

is further assumed to be independent of past signals ![]() across all nodes

across all nodes ![]() . Observe that we are allowing the noise power profile,

. Observe that we are allowing the noise power profile, ![]() , to vary with

, to vary with ![]() . In this way, the quality of the measurements is allowed to vary across the network with some nodes collecting noisier data than other nodes. Figure 9.3 illustrates an example of a network consisting of

. In this way, the quality of the measurements is allowed to vary across the network with some nodes collecting noisier data than other nodes. Figure 9.3 illustrates an example of a network consisting of ![]() nodes spread over a region in space. The figure shows the neighborhood of node

nodes spread over a region in space. The figure shows the neighborhood of node ![]() , which consists of nodes

, which consists of nodes ![]() .

.

Figure 9.3 A collection of nodes, spread over a geographic region, observes realizations of an AR random process and cooperates to estimate the underlying model parameters ![]() in the presence of measurement noise. The noise power profile can vary over space.

in the presence of measurement noise. The noise power profile can vary over space.

3.09.2.1.1 Linear model

To illustrate the difference between cooperative and non-cooperative estimation strategies, let us first explain that the data can be represented in terms of a linear model. To do so, we collect the model parameters ![]() into an

into an ![]() column vector

column vector ![]() :

:

![]() (9.7)

(9.7)

and the past data into a ![]() row regression vector

row regression vector ![]() :

:

![]() (9.8)

(9.8)

Then, we can rewrite the measurement equation (9.4) at each node ![]() in the equivalent linear model form:

in the equivalent linear model form:

![]() (9.9)

(9.9)

Linear relations of the form (9.9) are common in applications and arise in many other contexts (as further illustrated by the next three examples in this section).

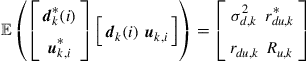

We assume the stochastic processes ![]() in (9.9) have zero means and are jointly wide-sense stationary. We denote their second-order moments by:

in (9.9) have zero means and are jointly wide-sense stationary. We denote their second-order moments by:

![]() (9.10)

(9.10)

![]() (9.11)

(9.11)

![]() (9.12)

(9.12)

As was the case with the noise power profile, we are allowing the moments ![]() to depend on the node index

to depend on the node index ![]() so that these moments can vary with the spatial dimension as well. The covariance matrix

so that these moments can vary with the spatial dimension as well. The covariance matrix ![]() is, by definition, always nonnegative definite. However, for convenience of presentation, we shall assume that

is, by definition, always nonnegative definite. However, for convenience of presentation, we shall assume that ![]() is actually positive-definite (and, hence, invertible):

is actually positive-definite (and, hence, invertible):

![]() (9.13)

(9.13)

3.09.2.1.2 Non-cooperative mean-square-error solution

One immediate result that follows from the linear model (9.9) is that the unknown parameter vector ![]() can be recovered exactly by each individual node from knowledge of the local moments

can be recovered exactly by each individual node from knowledge of the local moments ![]() alone. To see this, note that if we multiply both sides of (9.9) by

alone. To see this, note that if we multiply both sides of (9.9) by ![]() and take expectations we obtain

and take expectations we obtain

![]() (9.14)

(9.14)

so that

![]() (9.15)

(9.15)

It is seen from (9.15) that ![]() is the solution to a linear system of equations and that this solution can be computed by every node directly from its moments

is the solution to a linear system of equations and that this solution can be computed by every node directly from its moments ![]() . It is useful to re-interpret construction (9.15) as the solution to a minimum mean-square-error (MMSE) estimation problem [4,5]. Indeed, it can be verified that the quantity

. It is useful to re-interpret construction (9.15) as the solution to a minimum mean-square-error (MMSE) estimation problem [4,5]. Indeed, it can be verified that the quantity ![]() that appears in (9.15) is the unique solution to the following MMSE problem:

that appears in (9.15) is the unique solution to the following MMSE problem:

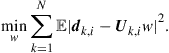

![]() (9.16)

(9.16)

To verify this claim, we denote the cost function that appears in (9.16) by

![]() (9.17)

(9.17)

and expand it to find that

![]() (9.18)

(9.18)

The cost function ![]() is quadratic in

is quadratic in ![]() and it has a unique minimizer since

and it has a unique minimizer since ![]() . Differentiating

. Differentiating ![]() with respect to

with respect to ![]() we find its gradient vector:

we find its gradient vector:

![]() (9.19)

(9.19)

It is seen that this gradient vector is annihilated at the same value ![]() given by (9.15). Therefore, we can equivalently state that if each node

given by (9.15). Therefore, we can equivalently state that if each node ![]() solves the MMSE problem (9.16), then the solution vector coincides with the desired parameter vector

solves the MMSE problem (9.16), then the solution vector coincides with the desired parameter vector ![]() . This observation explains why it is often justified to consider mean-square-error cost functions when dealing with estimation problems that involve data that satisfy linear models similar to (9.9).

. This observation explains why it is often justified to consider mean-square-error cost functions when dealing with estimation problems that involve data that satisfy linear models similar to (9.9).

Besides ![]() , the solution of the MMSE problem (9.16) also conveys information about the noise level in the data. Note that by substituting

, the solution of the MMSE problem (9.16) also conveys information about the noise level in the data. Note that by substituting ![]() from (9.15) into expression (9.16) for

from (9.15) into expression (9.16) for ![]() , the resulting minimum mean-square-error value that is attained by node

, the resulting minimum mean-square-error value that is attained by node ![]() is found to be:

is found to be:

(9.20)

(9.20)

![]()

We shall use the notation ![]() and

and ![]() interchangeably to denote the minimum cost value of

interchangeably to denote the minimum cost value of ![]() . The above result states that, when each agent

. The above result states that, when each agent ![]() recovers

recovers ![]() from knowledge of its moments

from knowledge of its moments ![]() using expression (9.15), then the agent attains an MSE performance level that is equal to the noise power at its location,

using expression (9.15), then the agent attains an MSE performance level that is equal to the noise power at its location, ![]() . An alternative useful expression for the minimum cost can be obtained by substituting expression (9.15) for

. An alternative useful expression for the minimum cost can be obtained by substituting expression (9.15) for ![]() into (9.18) and simplifying the expression to find that

into (9.18) and simplifying the expression to find that

![]() (9.21)

(9.21)

This second expression is in terms of the data moments ![]() .

.

3.09.2.1.3 Non-cooperative adaptive solution

The optimal MMSE implementation (9.15) for determining ![]() requires knowledge of the statistical information

requires knowledge of the statistical information ![]() . This information is usually not available beforehand. Instead, the agents are more likely to have access to successive time-indexed observations

. This information is usually not available beforehand. Instead, the agents are more likely to have access to successive time-indexed observations ![]() of the random processes

of the random processes ![]() for

for ![]() . In this case, it becomes necessary to devise a scheme that would allow each node to use its measurements to approximate

. In this case, it becomes necessary to devise a scheme that would allow each node to use its measurements to approximate ![]() . It turns out that an adaptive solution is possible. In this alternative implementation, each node

. It turns out that an adaptive solution is possible. In this alternative implementation, each node ![]() feeds its observations

feeds its observations ![]() into an adaptive filter and evaluates successive estimates for

into an adaptive filter and evaluates successive estimates for ![]() . As time passes by, the estimates would get closer to

. As time passes by, the estimates would get closer to ![]() .

.

The adaptive solution operates as follows. Let ![]() denote an estimate for

denote an estimate for ![]() that is computed by node

that is computed by node ![]() at time

at time ![]() based on all the observations

based on all the observations ![]() it has collected up to that time instant. There are many adaptive algorithms that can be used to compute

it has collected up to that time instant. There are many adaptive algorithms that can be used to compute ![]() ; some filters are more accurate than others (usually, at the cost of additional complexity) [4–7]. It is sufficient for our purposes to consider one simple (yet effective) filter structure, while noting that most of the discussion in this chapter can be extended to other structures. One of the simplest choices for an adaptive structure is the least-mean-squares (LMS) filter, where the data are processed by each node

; some filters are more accurate than others (usually, at the cost of additional complexity) [4–7]. It is sufficient for our purposes to consider one simple (yet effective) filter structure, while noting that most of the discussion in this chapter can be extended to other structures. One of the simplest choices for an adaptive structure is the least-mean-squares (LMS) filter, where the data are processed by each node ![]() as follows:

as follows:

![]() (9.22)

(9.22)

![]() (9.23)

(9.23)

Starting from some initial condition, say, ![]() , the filter iterates over

, the filter iterates over ![]() . At each time instant,

. At each time instant, ![]() , the filter uses the local data

, the filter uses the local data ![]() at node

at node ![]() to compute a local estimation error,

to compute a local estimation error, ![]() , via (9.22). The error is then used to update the existing estimate from

, via (9.22). The error is then used to update the existing estimate from ![]() to

to ![]() via (9.23). The factor

via (9.23). The factor ![]() that appears in (9.23) is a constant positive step-size parameter; usually chosen to be sufficiently small to ensure mean-square stability and convergence, as discussed further ahead in the chapter. The step-size parameter can be selected to vary with time as well; one popular choice is to replace

that appears in (9.23) is a constant positive step-size parameter; usually chosen to be sufficiently small to ensure mean-square stability and convergence, as discussed further ahead in the chapter. The step-size parameter can be selected to vary with time as well; one popular choice is to replace ![]() in (9.23) with the following construction:

in (9.23) with the following construction:

![]() (9.24)

(9.24)

where ![]() is a small positive parameter and

is a small positive parameter and ![]() . The resulting filter implementation is known as normalized LMS [5] since the step-size is normalized by the squared norm of the regression vector. Other choices include step-size sequences

. The resulting filter implementation is known as normalized LMS [5] since the step-size is normalized by the squared norm of the regression vector. Other choices include step-size sequences ![]() that satisfy both conditions:

that satisfy both conditions:

![]() (9.25)

(9.25)

Such sequences converge slowly towards zero. One example is the choice ![]() . However, by virtue of the fact that such step-sizes die out as

. However, by virtue of the fact that such step-sizes die out as ![]() , then these choices end up turning off adaptation. As such, step-size sequences satisfying (9.25) are not generally suitable for applications that require continuous learning, especially under non-stationary environments. For this reason, in this chapter, we shall focus exclusively on the constant step-size case (9.23) in order to ensure continuous adaptation and learning.

, then these choices end up turning off adaptation. As such, step-size sequences satisfying (9.25) are not generally suitable for applications that require continuous learning, especially under non-stationary environments. For this reason, in this chapter, we shall focus exclusively on the constant step-size case (9.23) in order to ensure continuous adaptation and learning.

Equations (9.22) and (9.23) are written in terms of the observed quantities ![]() ; these are deterministic values since they correspond to observations of the random processes

; these are deterministic values since they correspond to observations of the random processes ![]() . Often, when we are interested in highlighting the random nature of the quantities involved in the adaptation step, especially when we study the mean-square performance of adaptive filters, it becomes more useful to rewrite the recursions using the boldface notation to highlight the fact that the quantities that appear in (9.22) and (9.23) are actually realizations of random variables. Thus, we also write:

. Often, when we are interested in highlighting the random nature of the quantities involved in the adaptation step, especially when we study the mean-square performance of adaptive filters, it becomes more useful to rewrite the recursions using the boldface notation to highlight the fact that the quantities that appear in (9.22) and (9.23) are actually realizations of random variables. Thus, we also write:

![]() (9.26)

(9.26)

![]() (9.27)

(9.27)

where ![]() will be random variables as well.

will be random variables as well.

The performance of adaptive implementations of this kind are well-understood for both cases of stationary ![]() and changing

and changing ![]() [4–7]. For example, in the stationary case, if the adaptive implementation (9.26) and (9.27) were to succeed in having its estimator

[4–7]. For example, in the stationary case, if the adaptive implementation (9.26) and (9.27) were to succeed in having its estimator ![]() tend to

tend to ![]() with probability one as

with probability one as ![]() , then we would expect the error signal

, then we would expect the error signal ![]() in (9.26) to tend towards the noise signal

in (9.26) to tend towards the noise signal ![]() (by virtue of the linear model (9.9)). This means that, under this ideal scenario, the variance of the error signal

(by virtue of the linear model (9.9)). This means that, under this ideal scenario, the variance of the error signal ![]() would be expected to tend towards the noise variance,

would be expected to tend towards the noise variance, ![]() , as

, as ![]() . Recall from (9.20) that the noise variance is the least cost that the MSE solution can attain. Therefore, such limiting behavior by the adaptive filter would be desirable. However, it is well-known that there is always some loss in mean-square-error performance when adaptation is employed due to the effect of gradient noise, which is caused by the algorithm’s reliance on observations (or realizations)

. Recall from (9.20) that the noise variance is the least cost that the MSE solution can attain. Therefore, such limiting behavior by the adaptive filter would be desirable. However, it is well-known that there is always some loss in mean-square-error performance when adaptation is employed due to the effect of gradient noise, which is caused by the algorithm’s reliance on observations (or realizations) ![]() rather than on the actual moments

rather than on the actual moments ![]() . In particular, it is known that for LMS filters, the variance of

. In particular, it is known that for LMS filters, the variance of ![]() in steady-state will be larger than

in steady-state will be larger than ![]() by a small amount, and the size of the offset is proportional to the step-size parameter

by a small amount, and the size of the offset is proportional to the step-size parameter ![]() (so that smaller step-sizes lead to better mean-square-error (MSE) performance albeit at the expense of slower convergence). It is easy to see why the variance of

(so that smaller step-sizes lead to better mean-square-error (MSE) performance albeit at the expense of slower convergence). It is easy to see why the variance of ![]() will be larger than

will be larger than ![]() from the definition of the error signal in (9.26). Introduce the weight-error vector

from the definition of the error signal in (9.26). Introduce the weight-error vector

![]() (9.28)

(9.28)

and the so-called a priori error signal

![]() (9.29)

(9.29)

This second error measures the difference between the uncorrupted term ![]() and its estimator prior to adaptation,

and its estimator prior to adaptation, ![]() . It then follows from the data model (9.9) and from the defining expression (9.26) for

. It then follows from the data model (9.9) and from the defining expression (9.26) for ![]() that

that

(9.30)

(9.30)

Since the noise component, ![]() , is assumed to be zero-mean and independent of all other random variables, we conclude that

, is assumed to be zero-mean and independent of all other random variables, we conclude that

![]() (9.31)

(9.31)

This relation holds for any time instant ![]() ; it shows that the variance of the output error,

; it shows that the variance of the output error, ![]() is larger than

is larger than ![]() by an amount that is equal to the variance of the a priori error,

by an amount that is equal to the variance of the a priori error, ![]() . We define the filter mean-square-error (MSE) and excess-mean-square-error (EMSE) as the following steady-state measures:

. We define the filter mean-square-error (MSE) and excess-mean-square-error (EMSE) as the following steady-state measures:

![]() (9.32)

(9.32)

![]() (9.33)

(9.33)

so that, for the adaptive implementation (compare with (9.20)):

![]() (9.34)

(9.34)

Therefore, the EMSE term quantifies the size of the offset in the MSE performance of the adaptive filter. We also define the filter mean-square-deviation (MSD) as the steady-state measure:

![]() (9.35)

(9.35)

which measures how far ![]() is from

is from ![]() in the mean-square-error sense in steady-state. It is known that the MSD and EMSE of LMS filters of the form (9.26) and (9.27) can be approximated for sufficiently small-step sizes by the following expressions [4–7]:

in the mean-square-error sense in steady-state. It is known that the MSD and EMSE of LMS filters of the form (9.26) and (9.27) can be approximated for sufficiently small-step sizes by the following expressions [4–7]:

![]() (9.36)

(9.36)

![]() (9.37)

(9.37)

It is seen that the smaller the step-size parameter is, the better the performance of the adaptive solution.

3.09.2.1.4 Cooperative adaptation through diffusion

Observe from (9.36) and (9.37) that even if all nodes employ the same step-size, ![]() , and even if the regression data are spatially uniform so that

, and even if the regression data are spatially uniform so that ![]() for all

for all ![]() , the mean-square-error performance across the nodes still varies in accordance with the variation of the noise power profile,

, the mean-square-error performance across the nodes still varies in accordance with the variation of the noise power profile, ![]() , across the network. Nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing data arising from the same underlying model

, across the network. Nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing data arising from the same underlying model ![]() , it is natural to expect cooperation among the nodes to be beneficial. By cooperation we mean that neighboring nodes can share information (such as measurements or estimates) with each other as permitted by the network topology. Starting in the next section, we will motivate and describe algorithms that enable nodes to carry out adaptation and learning in a cooperative manner to enhance performance.

, it is natural to expect cooperation among the nodes to be beneficial. By cooperation we mean that neighboring nodes can share information (such as measurements or estimates) with each other as permitted by the network topology. Starting in the next section, we will motivate and describe algorithms that enable nodes to carry out adaptation and learning in a cooperative manner to enhance performance.

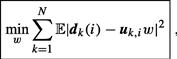

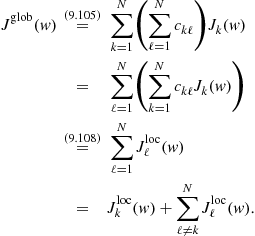

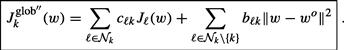

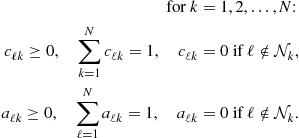

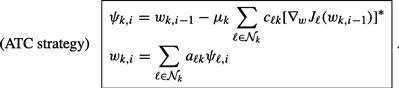

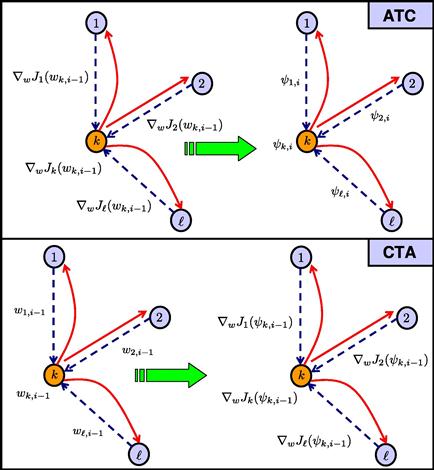

Specifically, we are going to see that one way to achieve cooperation is by developing adaptive algorithms that enable the nodes to optimize the following global cost function in a distributed manner:

(9.38)

(9.38)

where the global cost is the aggregate objective:

(9.39)

(9.39)

Comparing (9.38) with (9.16), we see that we are now adding the individual costs, ![]() , from across all nodes. Note that since the desired

, from across all nodes. Note that since the desired ![]() satisfies (9.15) at every node

satisfies (9.15) at every node ![]() , then it also satisfies

, then it also satisfies

(9.40)

(9.40)

But it can be verified that the optimal solution to (9.38) is given by the same ![]() that satisfies (9.40). Therefore, solving the global optimization problem (9.38) also leads to the desired

that satisfies (9.40). Therefore, solving the global optimization problem (9.38) also leads to the desired ![]() . In future sections, we will show how cooperative and distributed adaptive schemes for solving (9.38), such as (9.153) or (9.154) further ahead, lead to improved performance in estimating

. In future sections, we will show how cooperative and distributed adaptive schemes for solving (9.38), such as (9.153) or (9.154) further ahead, lead to improved performance in estimating ![]() (in terms of smaller mean-square-deviation and faster convergence rate) than the non-cooperative mode (9.26) and (9.27), where each agent runs its own individual adaptive filter—see, e.g., Theorems 9.6.3–9.6.5 and Section 3.09.7.3.

(in terms of smaller mean-square-deviation and faster convergence rate) than the non-cooperative mode (9.26) and (9.27), where each agent runs its own individual adaptive filter—see, e.g., Theorems 9.6.3–9.6.5 and Section 3.09.7.3.

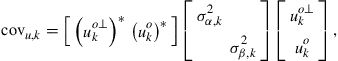

3.09.2.2 Application: tapped-delay-line models

Our second example to motivate MSE cost functions, ![]() , and linear models relates to identifying the parameters of a moving-average (MA) model from noisy data. MA models are also known as finite-impulse-response (FIR) or tapped-delay-line models. Thus, consider a situation where agents are interested in estimating the parameters of an FIR model, such as the taps of a communications channel or the parameters of some (approximate) model of interest in finance or biology. Assume the agents are able to independently probe the unknown model and observe its response to excitations in the presence of additive noise; this situation is illustrated in Figure 9.4, with the probing operation highlighted for one of the nodes (node 4).

, and linear models relates to identifying the parameters of a moving-average (MA) model from noisy data. MA models are also known as finite-impulse-response (FIR) or tapped-delay-line models. Thus, consider a situation where agents are interested in estimating the parameters of an FIR model, such as the taps of a communications channel or the parameters of some (approximate) model of interest in finance or biology. Assume the agents are able to independently probe the unknown model and observe its response to excitations in the presence of additive noise; this situation is illustrated in Figure 9.4, with the probing operation highlighted for one of the nodes (node 4).

Figure 9.4 The network is interested in estimating the parameter vector ![]() that describes an underlying tapped-delay-line model. The agents are assumed to be able to independently probe the unknown system, and observe its response to excitations, under noise, as indicated in the highlighted diagram for node

that describes an underlying tapped-delay-line model. The agents are assumed to be able to independently probe the unknown system, and observe its response to excitations, under noise, as indicated in the highlighted diagram for node ![]() .

.

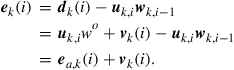

The schematics inside the enlarged diagram in Figure 9.4 is meant to convey that each node ![]() probes the model with an input sequence

probes the model with an input sequence ![]() and measures the resulting response sequence,

and measures the resulting response sequence, ![]() , in the presence of additive noise. The system dynamics for each agent

, in the presence of additive noise. The system dynamics for each agent ![]() is assumed to be described by a MA model of the form:

is assumed to be described by a MA model of the form:

(9.41)

(9.41)

In this model, the term ![]() again represents an additive zero-mean noise process that is assumed to be temporally white and spatially independent; it is also assumed to be independent of the input process,

again represents an additive zero-mean noise process that is assumed to be temporally white and spatially independent; it is also assumed to be independent of the input process, ![]() , for all

, for all ![]() , and

, and ![]() . The scalars

. The scalars ![]() represent the model parameters that the agents seek to identify. If we again collect the model parameters into an

represent the model parameters that the agents seek to identify. If we again collect the model parameters into an ![]() column vector

column vector ![]() :

:

![]() (9.42)

(9.42)

and the input data into a ![]() row regression vector:

row regression vector:

![]() (9.43)

(9.43)

then, we can again express the measurement equation (9.41) at each node ![]() in the same linear model as (9.9), namely,

in the same linear model as (9.9), namely,

![]() (9.44)

(9.44)

As was the case with model (9.9), we can likewise verify that, in view of (9.44), the desired parameter vector ![]() satisfies the same normal equations (9.15), i.e.,

satisfies the same normal equations (9.15), i.e.,

![]() (9.45)

(9.45)

where the moments ![]() continue to be defined by expressions (9.11) and (9.12) with

continue to be defined by expressions (9.11) and (9.12) with ![]() now defined by (9.43). Therefore, each node

now defined by (9.43). Therefore, each node ![]() can determine

can determine ![]() on its own by solving the same MMSE estimation problem (9.16). This solution method requires knowledge of the moments

on its own by solving the same MMSE estimation problem (9.16). This solution method requires knowledge of the moments ![]() and, according to (9.20), each agent

and, according to (9.20), each agent ![]() would then attain an MSE level that is equal to the noise power level at its location.

would then attain an MSE level that is equal to the noise power level at its location.

Alternatively, when the statistical information ![]() is not available, each agent

is not available, each agent ![]() can estimate

can estimate ![]() iteratively by feeding data

iteratively by feeding data ![]() into the adaptive implementation (9.26) and (9.27). In this way, each agent

into the adaptive implementation (9.26) and (9.27). In this way, each agent ![]() will achieve the same performance level shown earlier in (9.36) and (9.37), with the limiting performance being again dependent on the local noise power level,

will achieve the same performance level shown earlier in (9.36) and (9.37), with the limiting performance being again dependent on the local noise power level, ![]() . Therefore, nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing data arising from the same underlying model

. Therefore, nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing data arising from the same underlying model ![]() , it is natural to expect cooperation among the nodes to be beneficial. As we are going to see, starting from the next section, one way to achieve cooperation and improve performance is by developing algorithms that optimize the same global cost function (9.38) in an adaptive and distributed manner, such as algorithms (9.153) and (9.154) further ahead.

, it is natural to expect cooperation among the nodes to be beneficial. As we are going to see, starting from the next section, one way to achieve cooperation and improve performance is by developing algorithms that optimize the same global cost function (9.38) in an adaptive and distributed manner, such as algorithms (9.153) and (9.154) further ahead.

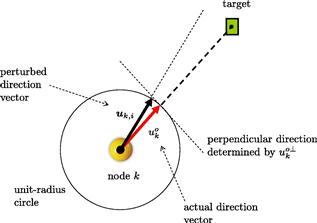

3.09.2.3 Application: target localization

Our third example relates to the problem of locating a destination of interest (such as the location of a nutrition source or a chemical leak) or locating and tracking an object of interest (such as a predator or a projectile). In several such localization applications, the agents in the network are allowed to move towards the target or away from it, in which case we would end up with a mobile adaptive network [8]. Biological networks behave in this manner such as networks representing fish schools, bird formations, bee swarms, bacteria motility, and diffusing particles [8–12]. The agents may move towards the target (e.g., when it is a nutrition source) or away from the target (e.g., when it is a predator). In other applications, the agents may remain static and are simply interested in locating a target or tracking it (such as tracking a projectile).

To motivate mean-square-error estimation in the context of localization problems, we consider the situation corresponding to a static target and static nodes. Thus, assume that the unknown location of the target in the cartesian plane is represented by the ![]() vector

vector ![]() . The agents are spread over the same region of space and are interested in locating the target. The location of every agent

. The agents are spread over the same region of space and are interested in locating the target. The location of every agent ![]() is denoted by the

is denoted by the ![]() vector

vector ![]() in terms of its

in terms of its ![]() and

and ![]() coordinates—see Figure 9.5. We assume the agents are aware of their location vectors. The distance between agent

coordinates—see Figure 9.5. We assume the agents are aware of their location vectors. The distance between agent ![]() and the target is denoted by

and the target is denoted by ![]() and is equal to:

and is equal to:

![]() (9.46)

(9.46)

The ![]() unit-norm direction vector pointing from agent

unit-norm direction vector pointing from agent ![]() towards the target is denoted by

towards the target is denoted by ![]() and is given by:

and is given by:

![]() (9.47)

(9.47)

Observe from (9.46) and (9.47) that ![]() can be expressed in the following useful inner-product form:

can be expressed in the following useful inner-product form:

![]() (9.48)

(9.48)

Figure 9.5 The distance from node ![]() to the target is denoted by

to the target is denoted by ![]() and the unit-norm direction vector from the same node to the target is denoted by

and the unit-norm direction vector from the same node to the target is denoted by ![]() . Node

. Node ![]() is assumed to have access to noisy measurements of

is assumed to have access to noisy measurements of ![]() .

.

In practice, agents have noisy observations of both their distance and direction vector towards the target. We denote the noisy distance measurement collected by node ![]() at time

at time ![]() by:

by:

![]() (9.49)

(9.49)

where ![]() denotes noise and is assumed to be zero-mean, and temporally white and spatially in-dependent with variance

denotes noise and is assumed to be zero-mean, and temporally white and spatially in-dependent with variance

![]() (9.50)

(9.50)

We also denote the noisy direction vector that is measured by node ![]() at time

at time ![]() by

by ![]() . This vector is a perturbed version of

. This vector is a perturbed version of ![]() . We assume that

. We assume that ![]() continues to start from the location of the node at

continues to start from the location of the node at ![]() , but that its tip is perturbed slightly either to the left or to the right relative to the tip of

, but that its tip is perturbed slightly either to the left or to the right relative to the tip of ![]() —see Figure 9.6. The perturbation to the tip of

—see Figure 9.6. The perturbation to the tip of ![]() is modeled as being the result of two effects: a small deviation that occurs along the direction that is perpendicular to

is modeled as being the result of two effects: a small deviation that occurs along the direction that is perpendicular to ![]() , and a smaller deviation that occurs along the direction of

, and a smaller deviation that occurs along the direction of ![]() . Since we are assuming that the tip of

. Since we are assuming that the tip of ![]() is only slightly perturbed relative to the tip of

is only slightly perturbed relative to the tip of ![]() , then it is reasonable to expect the amount of perturbation along the parallel direction to be small compared to the amount of perturbation along the perpendicular direction.

, then it is reasonable to expect the amount of perturbation along the parallel direction to be small compared to the amount of perturbation along the perpendicular direction.

Figure 9.6 The tip of the noisy direction vector is modeled as being approximately perturbed away from the actual direction by two effects: a larger effect caused by a deviation along the direction that is perpendicular to ![]() , and a smaller deviation along the direction that is parallel to

, and a smaller deviation along the direction that is parallel to ![]() .

.

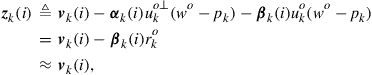

Thus, we write

![]() (9.51)

(9.51)

where ![]() denotes a unit-norm row vector that lies in the same plane and whose direction is perpendicular to

denotes a unit-norm row vector that lies in the same plane and whose direction is perpendicular to ![]() . The variables

. The variables ![]() and

and ![]() denote zero-mean independent random noises that are temporally white and spatially independent with variances:

denote zero-mean independent random noises that are temporally white and spatially independent with variances:

![]() (9.52)

(9.52)

We assume the contribution of ![]() is small compared to the contributions of the other noise sources,

is small compared to the contributions of the other noise sources, ![]() and

and ![]() , so that

, so that

![]() (9.53)

(9.53)

The random noises ![]() are further assumed to be independent of each other.

are further assumed to be independent of each other.

Using (9.48) we find that the noisy measurements ![]() are related to the unknown

are related to the unknown ![]() via:

via:

![]() (9.54)

(9.54)

where the modified noise term ![]() is defined in terms of the noises in

is defined in terms of the noises in ![]() and

and ![]() as follows:

as follows:

(9.55)

(9.55)

since, by construction,

![]() (9.56)

(9.56)

and the contribution by ![]() is assumed to be sufficiently small. If we now introduce the adjusted signal:

is assumed to be sufficiently small. If we now introduce the adjusted signal:

![]() (9.57)

(9.57)

then we arrive again from (9.54) and (9.55) at the following linear model for the available measurement variables ![]() in terms of the target location

in terms of the target location ![]() :

:

![]() (9.58)

(9.58)

There is one important difference in relation to the earlier linear models (9.9) and (9.44), namely, the variables ![]() in (9.58) do not have zero means any longer. It is nevertheless straightforward to determine the first and second-order moments of the variables

in (9.58) do not have zero means any longer. It is nevertheless straightforward to determine the first and second-order moments of the variables ![]() . First, note from (9.49), (9.51), and (9.57) that

. First, note from (9.49), (9.51), and (9.57) that

![]() (9.59)

(9.59)

Even in this case of non-zero means, and in view of (9.58), the desired parameter vector ![]() can still be shown to satisfy the same normal equations (9.15), i.e.,

can still be shown to satisfy the same normal equations (9.15), i.e.,

![]() (9.60)

(9.60)

where the moments ![]() continue to be defined as

continue to be defined as

![]() (9.61)

(9.61)

To verify that (9.60) holds, we simply multiply both sides of (9.58) by ![]() from the left, compute the expectations of both sides, and use the fact that

from the left, compute the expectations of both sides, and use the fact that ![]() has zero mean and is assumed to be independent of

has zero mean and is assumed to be independent of ![]() for all times

for all times ![]() and nodes

and nodes ![]() . However, the difference in relation to the earlier normal equations (9.15) is that the matrix

. However, the difference in relation to the earlier normal equations (9.15) is that the matrix ![]() is not the actual covariance matrix of

is not the actual covariance matrix of ![]() any longer. When

any longer. When ![]() is not zero mean, its covariance matrix is instead defined as:

is not zero mean, its covariance matrix is instead defined as:

![]() (9.62)

(9.62)

so that

![]() (9.63)

(9.63)

We conclude from this relation that ![]() is positive-definite (and, hence, invertible) so that expression (9.60) is justified. This is because the covariance matrix,

is positive-definite (and, hence, invertible) so that expression (9.60) is justified. This is because the covariance matrix, ![]() , is itself positive-definite. Indeed, some algebra applied to the difference

, is itself positive-definite. Indeed, some algebra applied to the difference ![]() from (9.51) shows that

from (9.51) shows that

(9.64)

(9.64)

where the matrix

(9.65)

(9.65)

is full rank since the rows ![]() are linearly independent vectors.

are linearly independent vectors.

Therefore, each node ![]() can determine

can determine ![]() on its own by solving the same minimum mean-square-error estimation problem (9.16). This solution method requires knowledge of the moments

on its own by solving the same minimum mean-square-error estimation problem (9.16). This solution method requires knowledge of the moments ![]() and, according to (9.20), each agent

and, according to (9.20), each agent ![]() would then attain an MSE level that is equal to the noise power level,

would then attain an MSE level that is equal to the noise power level, ![]() , at its location.

, at its location.

Alternatively, when the statistical information ![]() is not available beforehand, each agent

is not available beforehand, each agent ![]() can estimate

can estimate ![]() iteratively by feeding data

iteratively by feeding data ![]() into the adaptive implementation (9.26) and (9.27). In this case, each agent

into the adaptive implementation (9.26) and (9.27). In this case, each agent ![]() will achieve the performance level shown earlier in (9.36) and (9.37), with the limiting performance being again dependent on the local noise power level,

will achieve the performance level shown earlier in (9.36) and (9.37), with the limiting performance being again dependent on the local noise power level, ![]() . Therefore, nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing distances and direction vectors towards the same target location

. Therefore, nodes with larger noise power will perform worse than nodes with smaller noise power. However, since all nodes are observing distances and direction vectors towards the same target location ![]() , it is natural to expect cooperation among the nodes to be beneficial. As we are going to see, starting from the next section, one way to achieve cooperation and improve performance is by developing algorithms that solve the same global cost function (9.38) in an adaptive and distributed manner, by using algorithms such as (9.153) and (9.154) further ahead.

, it is natural to expect cooperation among the nodes to be beneficial. As we are going to see, starting from the next section, one way to achieve cooperation and improve performance is by developing algorithms that solve the same global cost function (9.38) in an adaptive and distributed manner, by using algorithms such as (9.153) and (9.154) further ahead.

3.09.2.3.1 Role of adaptation

The localization application helps highlight one of the main advantages of adaptation, namely, the ability of adaptive implementations to learn and track changing statistical conditions. For example, in the context of mobile networks, where nodes can move closer or further away from a target, the location vector for each agent ![]() becomes time-dependent, say,

becomes time-dependent, say, ![]() . In this case, the actual distance and direction vector between agent

. In this case, the actual distance and direction vector between agent ![]() and the target also vary with time and become:

and the target also vary with time and become:

![]() (9.66)

(9.66)

The noisy distance measurement to the target is then:

![]() (9.67)

(9.67)

where the variance of ![]() now depends on time as well:

now depends on time as well:

![]() (9.68)

(9.68)

In the context of mobile networks, it is reasonable to assume that the variance of ![]() varies both with time and with the distance to the target: the closer the node is to the target, the less noisy the measurement of the distance is expected to be. Similar remarks hold for the variances of the noises

varies both with time and with the distance to the target: the closer the node is to the target, the less noisy the measurement of the distance is expected to be. Similar remarks hold for the variances of the noises ![]() and

and ![]() that perturb the measurement of the direction vector, say,

that perturb the measurement of the direction vector, say,

![]() (9.69)

(9.69)

where now

![]() (9.70)

(9.70)

The same arguments that led to (9.58) can be repeated to lead to the same model, except that now the means of the variables ![]() become time-dependent as well:

become time-dependent as well:

![]() (9.71)

(9.71)

Nevertheless, adaptive solutions (whether cooperative or non-cooperative), are able to track such time-variations because these solutions work directly with the observations ![]() and the successive observations will reflect the changing statistical profile of the data. In general, adaptive solutions are able to track changes in the underlying signal statistics rather well [4,5], as long as the rate of non-stationarity is slow enough for the filter to be able to follow the changes.

and the successive observations will reflect the changing statistical profile of the data. In general, adaptive solutions are able to track changes in the underlying signal statistics rather well [4,5], as long as the rate of non-stationarity is slow enough for the filter to be able to follow the changes.

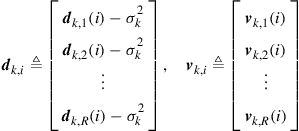

3.09.2.4 Application: collaborative spectral sensing

Our fourth and last example to illustrate the role of mean-square-error estimation and cooperation relates to spectrum sensing for cognitive radio applications. Cognitive radio systems involve two types of users: primary users and secondary users. To avoid causing harmful interference to incumbent primary users, unlicensed cognitive radio devices need to detect unused frequency bands even at low signal-to-noise (SNR) conditions [13–16]. One way to carry out spectral sensing is for each secondary user to estimate the aggregated power spectrum that is transmitted by all active primary users, and to locate unused frequency bands within the estimated spectrum. This step can be performed by the secondary users with or without cooperation.

Thus, consider a communications environment consisting of ![]() primary users and

primary users and ![]() secondary users. Let

secondary users. Let ![]() denote the power spectrum of the signal transmitted by primary user

denote the power spectrum of the signal transmitted by primary user ![]() . To facilitate estimation of the spectral profile by the secondary users, we assume that each

. To facilitate estimation of the spectral profile by the secondary users, we assume that each ![]() can be represented as a linear combination of some basis functions,

can be represented as a linear combination of some basis functions, ![]() , say,

, say, ![]() of them [17]:

of them [17]:

(9.72)

(9.72)

In this representation, the scalars ![]() denote the coefficients of the basis expansion for user

denote the coefficients of the basis expansion for user ![]() . The variable

. The variable ![]() denotes the normalized angular frequency measured in radians/sample. The power spectrum is often symmetric about the vertical axis,

denotes the normalized angular frequency measured in radians/sample. The power spectrum is often symmetric about the vertical axis, ![]() , and therefore it is sufficient to focus on the interval

, and therefore it is sufficient to focus on the interval ![]() . There are many ways by which the basis functions,

. There are many ways by which the basis functions, ![]() , can be selected. The following is one possible construction for illustration purposes. We divide the interval

, can be selected. The following is one possible construction for illustration purposes. We divide the interval ![]() into

into ![]() identical intervals and denote their center frequencies by

identical intervals and denote their center frequencies by ![]() . We then place a Gaussian pulse at each location

. We then place a Gaussian pulse at each location ![]() and control its width through the selection of its standard deviation,

and control its width through the selection of its standard deviation, ![]() , i.e.,

, i.e.,

![]() (9.73)

(9.73)

Figure 9.7 illustrates this construction. The parameters ![]() are selected by the designer and are assumed to be known. For a sufficiently large number,

are selected by the designer and are assumed to be known. For a sufficiently large number, ![]() , of basis functions, the representation (9.72) can approximate well a large class of power spectra.

, of basis functions, the representation (9.72) can approximate well a large class of power spectra.

Figure 9.7 The interval ![]() is divided into

is divided into ![]() sub-intervals of equal width; the center frequencies of the sub-intervals are denoted by

sub-intervals of equal width; the center frequencies of the sub-intervals are denoted by ![]() . A power spectrum

. A power spectrum ![]() is approximated as a linear combination of Gaussian basis functions centered on the

is approximated as a linear combination of Gaussian basis functions centered on the ![]() .

.

We collect the combination coefficients ![]() for primary user

for primary user ![]() into a column vector

into a column vector ![]() :

:

![]() (9.74)

(9.74)

and collect the basis functions into a row vector:

![]() (9.75)

(9.75)

Then, the power spectrum (9.72) can be expressed in the alternative inner-product form:

![]() (9.76)

(9.76)

Let ![]() denote the path loss coefficient from primary user

denote the path loss coefficient from primary user ![]() to secondary user

to secondary user ![]() . When the transmitted spectrum

. When the transmitted spectrum ![]() travels from primary user

travels from primary user ![]() to secondary user

to secondary user ![]() , the spectrum that is sensed by node

, the spectrum that is sensed by node ![]() is

is ![]() . We assume in this example that the path loss factors

. We assume in this example that the path loss factors ![]() are known and that they have been determined during a prior training stage involving each of the primary users with each of the secondary users. The training is usually repeated at regular intervals of time to accommodate the fact that the path loss coefficients can vary (albeit slowly) over time. Figure 9.8 depicts a cognitive radio system with

are known and that they have been determined during a prior training stage involving each of the primary users with each of the secondary users. The training is usually repeated at regular intervals of time to accommodate the fact that the path loss coefficients can vary (albeit slowly) over time. Figure 9.8 depicts a cognitive radio system with ![]() primary users and

primary users and ![]() secondary users. One of the secondary users (user

secondary users. One of the secondary users (user ![]() ) is highlighted and the path loss coefficients from the primary users to its location are indicated; similar path loss coefficients can be assigned to all other combinations involving primary and secondary users.

) is highlighted and the path loss coefficients from the primary users to its location are indicated; similar path loss coefficients can be assigned to all other combinations involving primary and secondary users.

Figure 9.8 A network of secondary users in the presence of two primary users. One of the secondary users is highlighted and the path loss coefficients from the primary users to its location are indicated as ![]() and

and ![]() .

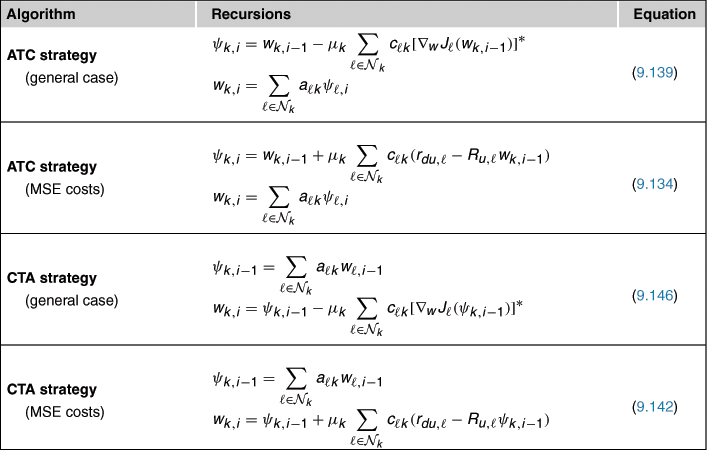

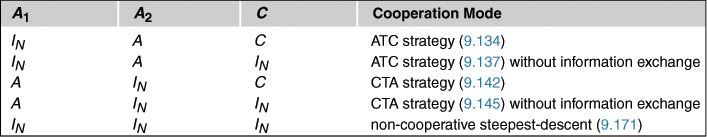

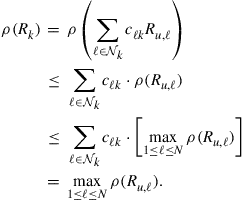

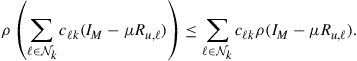

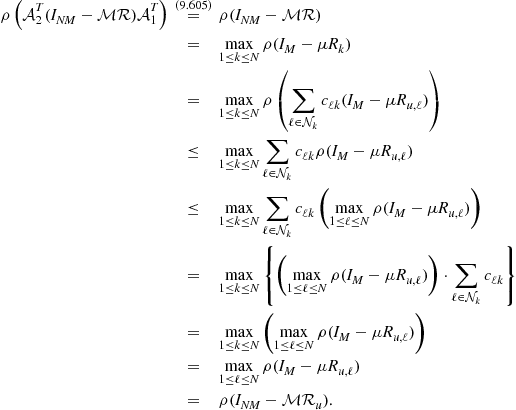

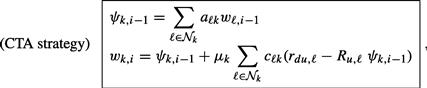

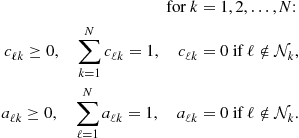

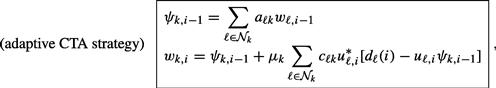

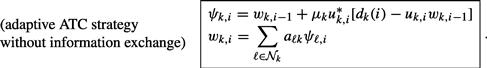

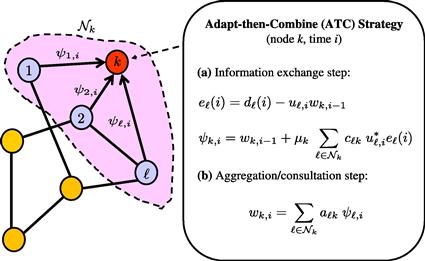

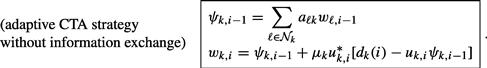

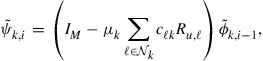

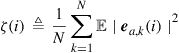

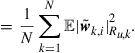

.