Array Processing in the Face of Nonidealities

Mário Costa*, Visa Koivunen* and Mats Viberg†, *Department of Signal Processing and Acoustics, School of Electrical Engineering, Aalto University/SMARAD CoE, Finland, †Division of Signal Processing and Antennas, Chalmers University of Technology, Sweden

Abstract

Real-world sensor arrays are typically composed of elements with individual directional beampatterns and are subject to mutual coupling, cross-polarization effects as well as mounting platform reflections. Errors in the array elements’ positions are also common in sensor arrays built in practice. Such nonidealities need to be taken into account for optimal array signal processing and in finding related performance bounds. Doing so also helps prevent problems related to beam-steering and cancellation of the signal-of-interest in beamforming applications; otherwise, an array processor may experience a significant performance degradation. In this chapter we provide techniques that allow the practitioner to acquire the steering vector model of real-world sensor arrays so that various nonidealities are taken into account. Consequently, array processing algorithms may avoid performance losses caused by array modeling errors. These techniques include model-based calibration and auto-calibration methods, array interpolation, as well as the wavefield modeling principle or manifold separation technique. Robust methods are also briefly considered since they are useful when the array nonidealities are not described by the employed steering vector model. Extensive array processing examples related to direction-finding and beamforming are included, demonstrating that optimal or close-to-optimal performance may be achieved despite the array nonidealities.

Keywords

Sensor array; Array calibration; Array processor; Array nonidealities; Wavefield modeling; Manifold separation; Beamspace transform; Array interpolation; Beamforming; Direction finding

3.19.1 Introduction

In array signal processing one is typically interested in characterizing, synthesizing, enhancing or attenuating certain aspects of propagating wavefields by employing a collection of sensors, known as a sensor array. Characterizing a propagating wavefield refers to determining its spatial spectrum, i.e., the angular distribution of energy, so that information regarding the location of the sources generating the wavefield can be obtained, for example [1]. Synthesizing or producing a wavefield refers to generating a propagating wavefield with a desired spatial spectrum in order to focus the transmitted energy towards certain locations in space. Finally, attenuating or enhancing a received wavefield based on its spatial spectrum refers to the ability of canceling interfering sources or improving the signal-to-interference-plus-noise ratio (SINR) and maximizing the energy received from certain directions. Examples of characterization, synthesis and enhancement of propagating wavefields include direction-of-arrival (DoA) estimation, angle spread estimation in channel sounding as well as transmit and receive beamforming [2].

Traditionally, array signal processing has found applications in radar and sonar, defense systems, signal intelligence (SIGINT), and surveillance as well as imaging and biomedical applications. Radioastronomy also employs many array processing techniques [3]. More recently, it has been used in wireless communication systems, in particular in basestations that utilize beamforming techniques in controlling the interference and enhancing the signal quality. Moreover, creating advanced, measurement-based models of the radio channels in communication systems such as long-term evolution (LTE) requires capturing the directional properties of the propagation channel [4]. In global navigation satellite systems, receive beamforming techniques for anti-jamming purposes are often employed as well as DoA estimation techniques in indoor navigation systems [5]. See also Chapter 20 “Applications of Array Signal Processing” in this volume.

The propagating wavefield is typically parameterized by the angular location of sources generating such a wavefield, their polarization state, bandwidth, delay profile, and Doppler shift. These are called wavefield parameters and are given in terms of a reference system, which in the case of angular information is typically assumed to be the coordinate system common to the sensor array and propagating wavefield. Such a reference system is typically assumed to be within the physical extent of the sensor array, known as array aperture, and the angular parameterization of the propagating wavefield refers to the DoAs or directions-of-departure (DoDs), characterizing the spatial spectrum of the wavefield received or transmitted by the sensor array.

In addition to the propagating wavefield, a model describing the response of the sensor array as a function of the wavefield parameters, such as the angle-of-arrival or departure, is typically required in array processing. Such a model is defined in terms of array steering vectors and allows us to estimate the wavefield parameters from data acquired by the sensor array, and use them to design a beamformer at the receiver. Similarly, steering vectors are used to synthesize a desired wavefield and employ transmit beamforming techniques. In order to simplify these signal processing tasks and models, the standard approach to array signal processing assumes rather idealistic array steering vector models. In particular, all array elements are typically assumed to have similar omnidirectional gain patterns and the employed sensor array is assumed to have a regular geometry such as a uniform linear array (ULA), a uniform rectangular array (URA), or a uniform circular array (UCA). The resulting array steering vector models then have a very convenient form for various signal processing tasks; see Section 3.19.2. However, most real-world sensor arrays are not well described by such an ideal array model. In fact, elements of real-world arrays have individual beampatterns, not necessarily omnidirectional, and may be subject to severe mutual coupling. Phase centers of the elements may not be exactly in the assumed positions. Moreover, mounting platform reflections and cross-polarization effects are also very common in real-world arrays.

In practical array processing applications, employing ideal array steering vector models leads to performance degradation and typically to loss of optimality for optimal array processors [6]. The limiting factor in the performance of high-resolution array processing algorithms as well as in the tightness of related theoretical performance bounds is known to be the accuracy of the employed array model rather than measurement noise [6,7]. Similarly, misspecified sensor array models may lead to a severe performance loss of beamforming techniques. Effects include steering energy towards unwanted directions, cancellation of the signal of interest (SOI) as well as amplification of interfering sources [8].

In this chapter we present techniques that allow the practitioner to acquire a realistic array steering vector model by taking into account array nonidealities such as mutual coupling, mounting platform reflections, cross-polarization effects, errors in element positions as well as individual directional beampatterns. This facilitates achieving optimal performance in the presence of nonidealities as well as mitigating problems related to beam-steering, SOI and interference cancellation. We also describe how the various approaches can be applied in the context of high-resolution direction finding and beamforming. Emphasis is given to the case when the array response, along with its nonidealities, is obtained from array calibration measurements. However, the methods and techniques discussed in this chapter are also applicable when the array response is obtained from EM simulation software, or even when ideal array models are employed. Typically, EM simulation software does not capture manufacturing errors, while measurement noise is unavoidable in array calibration measurements. Techniques for denoising array calibration measurements are included in this chapter as well. Sensor failure in array signal processing is not addressed herein, and the interested reader is referred to [9,10] and references therein. Many such techniques aim at determining the inoperable sensors’ outputs from the available array snapshots, and proceed with the array processing tasks as if the sensor array were fully operable. Realistic array steering vector models are then still required, and the methods discussed herein may also be useful in such circumstances.

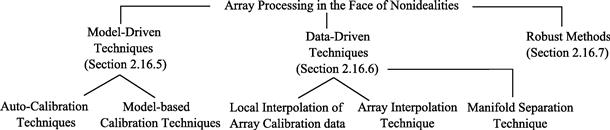

The classification used in this chapter for the various techniques capable of dealing with array nonidealities is given in Figure 19.1. We classify the methods trying to capture the nonidealities as model-driven and data-driven techniques. Robust methods are a third class of methods that acknowledge that the array model contains errors without trying to characterize such nonidealities. Instead, robust estimation methods trade off desirable properties such as high resolution or optimality for reliability in the face of uncertainties in the array response. In model-driven techniques, the array nonidealities are described using an explicit formulation for each nonideality. The parameters of such a formulation may be estimated from array calibration measurements or simultaneously with wavefield parameters. The latter approach is known as auto-calibration [7,11–14]. Data-driven techniques use array calibration data as a starting point and capture the nonidealities implicitly by using basis function expansion, interpolation, approximation or nonparametric estimation techniques. Data-driven methods include local interpolation of array calibration data [6], array interpolation technique [15–17], and manifold separation technique [18,19] which stems from the wavefield modeling principle [20–22]. These techniques do not employ any explicit model for the array nonidealities. In data-driven techniques the array nonidealities are described by the basis function coefficients, which may be estimated from array calibration measurements. Hence, they allow the practitioner to develop array processing algorithms that do not require an explicit formulation for the nonidealities and are independent of the sensor array, including its geometry and individual element beampatterns, while obtaining close-to-optimal performance. Finally, robust methods try to bound the influence of modeling errors in the estimation process instead of trying to capture them.

This chapter is organized as follows. First, conventional array steering vector models and widely employed techniques in array processing are briefly described in Section 3.19.2. Then, typical explicit formulations for array nonidealities are described in Section 3.19.3. In Section 3.19.4, array calibration measurements in controlled environments are briefly described. Section 3.19.5 includes model-driven techniques that are based on explicit formulations of the array nonidealities. Section 3.19.6 considers data-driven techniques. In Section 3.19.7, robust methods are described. Section 3.19.8 includes extensive array processing examples. Conclusions are given in Section 3.19.9.

3.19.2 Ideal array signal models

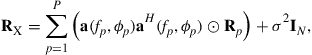

The conventional narrowband N-element array output model due to a propagating wavefield, generated by $P$ far-field sources, is

$$\mathbf{x}(k) = \mathbf{A}(\boldsymbol{\theta}, \boldsymbol{\phi})\,\mathbf{s}(k) + \mathbf{n}(k), \tag{19.1}$$

where $\mathbf{A}(\boldsymbol{\theta}, \boldsymbol{\phi}) \in \mathbb{C}^{N \times P}$, $\mathbf{s}(k) \in \mathbb{C}^{P}$, and $\mathbf{n}(k) \in \mathbb{C}^{N}$ denote the array steering matrix, transmitted waveforms, and sensor noise, respectively. The discrete time instant is denoted by $k$ while $\boldsymbol{\theta} = [\theta_1, \ldots, \theta_P]^T$ and $\boldsymbol{\phi} = [\phi_1, \ldots, \phi_P]^T$ represent the co-elevation and azimuth angles of the P sources generating the propagating wavefield, respectively. Typically, the co-elevation angle $\theta \in [0, \pi]$ is measured down from the z-axis and the azimuth angle $\phi \in [0, 2\pi)$ is measured counter-clockwise in the xy-plane. In Eq. (19.1), the N-dimensional observation vector $\mathbf{x}(k)$ is known as array snapshot. The array steering matrix $\mathbf{A}(\boldsymbol{\theta}, \boldsymbol{\phi})$ is composed of P array steering vectors $\mathbf{a}(\theta_p, \phi_p)$, $p = 1, \ldots, P$, each representing the array response to a plane-wave impinging on the sensor array from directions $(\theta_p, \phi_p)$. In array processing, the employed sensor array is typically assumed to be unambiguous in the sense that any collection of $N$ steering vectors with different angles forms a linearly independent set.

Assuming that the employed sensor array lies in the xy-plane, and is not subject to nonidealities such as mutual coupling or cross-polarization effects, the corresponding array steering vector model may be written as

$$[\mathbf{a}(\theta, \phi)]_n = g_n(\theta, \phi)\, e^{j\kappa\,(x_n \sin\theta\cos\phi \,+\, y_n \sin\theta\sin\phi)}, \quad n = 1, \ldots, N, \tag{19.2}$$

where $g_n(\theta, \phi)$ denotes the gain function of the nth element. In (19.2), $\kappa = 2\pi/\lambda$ and $\lambda$ denote the angular wavenumber and wavelength, respectively. Moreover, $(x_n, y_n)$ denote the location (in meters) of the nth element in the xy-plane, relative to the origin of the assumed coordinate system. Note that other wavefield parameters such as the polarization of the sources may also be included in the array steering vector model in (19.2). This is briefly discussed in Section 3.19.8.

Typically, in array signal processing the steering vector model in (19.2) is further simplified by assuming that the array elements are all identical and have omnidirectional gain functions, i.e., $g_n(\theta, \phi) = 1$ for $n = 1, \ldots, N$, and are arranged in regular geometries. Commonly used ideal steering vector models include those of ULAs, UCAs, and URAs:

$$\mathbf{a}_{\mathrm{ULA}}(\theta, \phi) = \left[1,\; e^{j\kappa d \sin\theta\cos\phi},\; \ldots,\; e^{j\kappa (N-1) d \sin\theta\cos\phi}\right]^T \tag{19.3a}$$

$$[\mathbf{a}_{\mathrm{UCA}}(\theta, \phi)]_n = e^{j\kappa r \sin\theta\cos(\phi - \gamma_n)}, \quad n = 1, \ldots, N \tag{19.3b}$$

$$\mathbf{a}_{\mathrm{URA}}(\theta, \phi) = \left[1,\; \ldots,\; e^{j\kappa (N_x-1) d \sin\theta\cos\phi}\right]^T \otimes \left[1,\; \ldots,\; e^{j\kappa (N_y-1) d \sin\theta\sin\phi}\right]^T \tag{19.3c}$$

where $\otimes$ and d denote the Kronecker product and the inter-element spacing, respectively, and the URA has $N = N_x N_y$ elements, with $N_x$ and $N_y$ elements along the x- and y-axes. In (19.3b), $r$ and $\gamma_n$ denote the radius of the circular array and the angular position of the nth element, respectively.
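The ideal models above can be sketched numerically as follows. This is a minimal illustration rather than a reference implementation: it assumes the phase convention $\kappa(x_n \sin\theta\cos\phi + y_n \sin\theta\sin\phi)$, a ULA placed on the x-axis, and UCA elements at angles $2\pi n/N$; the function names are hypothetical.

```python
import numpy as np

def planar_steering(positions_xy, wavelength, theta, phi, gains=None):
    """Steering vector of a planar array: per-element gain times a phase term.

    positions_xy: (N, 2) element coordinates in meters (assumed layout).
    gains: optional length-N gains evaluated at (theta, phi); unit gain if None.
    """
    kappa = 2.0 * np.pi / wavelength                     # angular wavenumber
    x, y = positions_xy[:, 0], positions_xy[:, 1]
    phase = kappa * np.sin(theta) * (x * np.cos(phi) + y * np.sin(phi))
    g = np.ones(len(x)) if gains is None else np.asarray(gains)
    return g * np.exp(1j * phase)

def ula_steering(n_elements, spacing, wavelength, theta, phi):
    """Ideal ULA on the x-axis with omnidirectional unit-gain elements."""
    kappa = 2.0 * np.pi / wavelength
    x = spacing * np.arange(n_elements)
    return np.exp(1j * kappa * x * np.sin(theta) * np.cos(phi))

def uca_steering(n_elements, radius, wavelength, theta, phi):
    """Ideal UCA in the xy-plane with elements at angles 2*pi*n/N."""
    kappa = 2.0 * np.pi / wavelength
    gamma = 2.0 * np.pi * np.arange(n_elements) / n_elements
    return np.exp(1j * kappa * radius * np.sin(theta) * np.cos(phi - gamma))
```

Both closed forms are special cases of the general planar model: passing the ULA or UCA element coordinates to `planar_steering` gives the same vectors.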

Assuming wavefield propagation in the xy-plane and that the transmitted signals and sensor noise are uncorrelated, the array covariance matrix of (19.1) is given by

$$\mathbf{R}_x = E\{\mathbf{x}(k)\mathbf{x}^H(k)\} = \mathbf{A}(\boldsymbol{\phi})\,\mathbf{R}_s\,\mathbf{A}^H(\boldsymbol{\phi}) + \mathbf{R}_n, \tag{19.4}$$

where $\mathbf{R}_s \in \mathbb{C}^{P \times P}$ and $\mathbf{R}_n \in \mathbb{C}^{N \times N}$ denote the covariance matrices of the transmitted signals and sensor noise, respectively. The signal covariance matrix $\mathbf{R}_s$ may be rank deficient, with rank $P' \leq P$, due to highly correlated or coherent sources that may be caused by specular multipath propagation, for example. Sensor noise is typically assumed to be zero-mean complex-circular Gaussian distributed, $\mathbf{n}(k) \sim \mathcal{N}_c(\mathbf{0}, \sigma^2 \mathbf{I}_N)$. In practice, the exact covariance matrix in (19.4) is unknown and it is typically estimated from a collection of $K$ array snapshots as

$$\hat{\mathbf{R}}_x = \frac{1}{K} \sum_{k=1}^{K} \mathbf{x}(k)\,\mathbf{x}^H(k). \tag{19.5}$$
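As a small numerical illustration of the sample covariance estimate, the sketch below simulates snapshots from the narrowband model and averages their outer products. The scenario is an assumption made only for this example: a half-wavelength ULA, two uncorrelated unit-power sources, spatially white noise of variance 0.01, and hypothetical variable names.

```python
import numpy as np

def sample_covariance(snapshots):
    """Average of x(k) x(k)^H over K snapshots; snapshots has shape (N, K)."""
    n_snapshots = snapshots.shape[1]
    return snapshots @ snapshots.conj().T / n_snapshots

rng = np.random.default_rng(0)
N, K = 4, 2000
phi = np.deg2rad([60.0, 100.0])                      # assumed source azimuths

# Steering matrix of a half-wavelength ULA (kappa * d = pi), one column per source.
A = np.exp(1j * np.pi * np.outer(np.arange(N), np.cos(phi)))

# Unit-power complex-circular Gaussian waveforms and noise with variance 0.01.
S = (rng.standard_normal((2, K)) + 1j * rng.standard_normal((2, K))) / np.sqrt(2)
W = 0.1 * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)

X = A @ S + W                                        # snapshots, one per column
R_hat = sample_covariance(X)                         # estimate from data
R_true = A @ A.conj().T + 0.01 * np.eye(N)           # exact covariance for this setup
```

Comparing `R_hat` with `R_true` for different values of `K` shows how the estimate approaches the exact covariance as more snapshots are collected.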

Signal models (19.1) and (19.4) are used in most array processing tasks such as beamforming and direction finding. In estimation problems, maximum likelihood methods are commonly used to find the optimal parameter estimates whereas beamformers typically aim at enhancing the signal by maximizing the SINR at the array output.

A popular criterion for evaluating the performance of beamformers is the array output SINR [8]:

$$\mathrm{SINR} = \frac{\sigma_s^2\, |\mathbf{w}^H \mathbf{a}(\phi_s)|^2}{\mathbf{w}^H \mathbf{R}_{i+n} \mathbf{w}}, \tag{19.6}$$

where $\phi_s$ and $\sigma_s^2$ denote the angle from where the SOI impinges on the sensor array and the corresponding signal power, respectively. Moreover, $\mathbf{w} \in \mathbb{C}^{N}$ denotes the beamformer weight vector and $\mathbf{R}_{i+n}$ the covariance matrix due to both interfering signals and sensor noise. The optimal weight vector that maximizes (19.6) is [8]

$$\mathbf{w}_{\mathrm{opt}} = \alpha\, \mathbf{R}_{i+n}^{-1}\, \mathbf{a}(\phi_s), \tag{19.7}$$

where $\alpha \neq 0$ may be arbitrary since it does not affect the SINR in (19.6). Choosing $\alpha = \left(\mathbf{a}^H(\phi_s)\, \mathbf{R}_{i+n}^{-1}\, \mathbf{a}(\phi_s)\right)^{-1}$ leads to the well-known minimum variance distortionless response (MVDR) beamformer, also known as Capon beamformer [8,23]. Note that using the exact $\mathbf{R}_x$ in place of $\mathbf{R}_{i+n}$ in (19.7) does not affect the array output SINR.
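The following sketch evaluates the output SINR for MVDR weights and for conventional delay-and-sum weights. It is an illustration under assumed conditions, not a prescribed implementation: a half-wavelength ULA, one interferer, exact covariance matrices, and hypothetical function names and power levels.

```python
import numpy as np

def steering(n, phi):
    """Ideal half-wavelength ULA steering vector (assumed array for this sketch)."""
    return np.exp(1j * np.pi * np.arange(n) * np.cos(phi))

def mvdr_weights(R, a):
    """w = R^{-1} a / (a^H R^{-1} a), i.e. unit (distortionless) gain towards a."""
    Ri_a = np.linalg.solve(R, a)
    return Ri_a / (a.conj() @ Ri_a)

def output_sinr(w, a_s, power_s, R_in):
    """Array output SINR for weights w, SOI steering vector a_s and power power_s."""
    num = power_s * np.abs(w.conj() @ a_s) ** 2
    den = np.real(w.conj() @ R_in @ w)
    return num / den

N = 8
a_s = steering(N, np.deg2rad(90.0))                  # SOI direction (assumed)
a_i = steering(N, np.deg2rad(60.0))                  # interferer direction (assumed)
power_s, power_i, noise_var = 1.0, 10.0, 0.1

R_in = power_i * np.outer(a_i, a_i.conj()) + noise_var * np.eye(N)
R_x = power_s * np.outer(a_s, a_s.conj()) + R_in     # exact array covariance

w_in = mvdr_weights(R_in, a_s)                       # built from interference plus noise
w_x = mvdr_weights(R_x, a_s)                         # built from the full covariance
w_conv = a_s / N                                     # delay-and-sum weights

sinr_in = output_sinr(w_in, a_s, power_s, R_in)
sinr_x = output_sinr(w_x, a_s, power_s, R_in)
sinr_conv = output_sinr(w_conv, a_s, power_s, R_in)
```

With exact covariance matrices, `sinr_in` and `sinr_x` coincide, which mirrors the remark that the exact array covariance may replace the interference-plus-noise covariance. This equivalence relies on an accurate steering vector for the SOI.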

In Section 3.19.1, we have mentioned that the DoAs of the sources generating the propagating wavefield may be found from its spatial spectrum. The locations of the sources are associated with the angles at which the spatial spectrum has the largest power. We may therefore view DoA estimation as a spectrum estimation problem and employ beamforming techniques for estimating the angular distribution of power of the wavefield received by the sensor array. Such an approach is called nonparametric or spectral-based approach to DoA estimation since it does not require a parametric model for the sources, nor the number of sources generating the wavefield. These techniques are versatile but typically have poor resolution and lead to suboptimal DoA estimates. Informally, resolution refers to the ability to distinguish between two closely spaced sources. The resolution of beamforming techniques is limited by the array aperture, i.e., the physical size of the array in wavelengths, as well as SNR, and does not improve with increasing number of array snapshots.
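A spectral-based DoA estimate can be sketched by scanning a beamformer over a grid of angles and locating the peak of the resulting power spectrum. The example below assumes a half-wavelength ULA, a single source at 70 degrees, and the exact covariance matrix; the conventional and Capon spectrum expressions used here are the standard ones, and all names are hypothetical.

```python
import numpy as np

def steering_matrix(n, grid):
    """Columns are half-wavelength ULA steering vectors for each grid angle."""
    return np.exp(1j * np.pi * np.outer(np.arange(n), np.cos(grid)))

def conventional_spectrum(R, grid):
    """Conventional beamformer power a^H R a / (a^H a) at each grid angle."""
    n = R.shape[0]
    A = steering_matrix(n, grid)
    return np.real(np.einsum("ng,nm,mg->g", A.conj(), R, A)) / n

def capon_spectrum(R, grid):
    """Capon (MVDR) power 1 / (a^H R^{-1} a) at each grid angle."""
    A = steering_matrix(R.shape[0], grid)
    Ri_A = np.linalg.solve(R, A)
    return 1.0 / np.real(np.sum(A.conj() * Ri_A, axis=0))

N = 8
grid_deg = np.arange(0, 181)                         # 1-degree scan grid
grid = np.deg2rad(grid_deg)

a0 = steering_matrix(N, np.deg2rad([70.0]))[:, 0]    # assumed source direction
R = np.outer(a0, a0.conj()) + 0.1 * np.eye(N)        # unit-power source plus noise

p_conv = conventional_spectrum(R, grid)
p_capon = capon_spectrum(R, grid)
doa_conv = grid_deg[np.argmax(p_conv)]
doa_capon = grid_deg[np.argmax(p_capon)]
```

Neither spectrum needs the number of sources as an input, which is what makes this approach nonparametric. The width of the main lobe of `p_conv` is set by the aperture, so two sources closer than roughly one beamwidth would merge into a single peak.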

One way of improving the resolution limit imposed by the array aperture is by making further assumptions regarding the sources generating the propagating wavefield. In particular, we first assume that the number of sources P as well as the rank $P'$ of the signal covariance matrix are known or have been correctly estimated from the array output. The radiating sources are also assumed to be located in the far-field of the sensor arrays as well as point-emitters in the sense that the field radiated by each source can be assumed to have originated from a single location in space. Finally, we assume that the number of sources generating the wavefield is smaller than the number of array elements. Then, the array output in (19.1) is known as low-rank signal model and the array covariance matrix in (19.4) can be written as

$$\mathbf{R}_x = \mathbf{U}_s \boldsymbol{\Lambda}_s \mathbf{U}_s^H + \mathbf{U}_n \boldsymbol{\Lambda}_n \mathbf{U}_n^H. \tag{19.8}$$

Here, $\mathbf{U}_s \in \mathbb{C}^{N \times P'}$ and $\mathbf{U}_n \in \mathbb{C}^{N \times (N-P')}$ contain the eigenvectors of $\mathbf{R}_x$ spanning the so-called signal and noise subspaces while $\boldsymbol{\Lambda}_s$ and $\boldsymbol{\Lambda}_n$ contain the corresponding eigenvalues in their diagonal. Techniques employing the low-rank signal model are called subspace methods and are a class of high-resolution DoA estimation algorithms [2]. They exploit the fact that the columns of $\mathbf{U}_s$ span the same subspace as the columns of the steering matrix $\mathbf{A}(\boldsymbol{\phi})$ (in the case of coherent signals $\mathbf{U}_s$ is contained in the subspace spanned by the columns of $\mathbf{A}(\boldsymbol{\phi})$), and that both $\mathbf{U}_s$ and $\mathbf{A}(\boldsymbol{\phi})$ are orthogonal to $\mathbf{U}_n$. Unlike beamforming techniques, the resolution of subspace methods improves with increasing number of array snapshots.
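The orthogonality between the steering vectors and the noise subspace can be exploited as in the sketch below, which uses MUSIC as one well-known subspace method (the text above does not single out a particular algorithm). The setup is assumed for illustration only: a half-wavelength ULA, two uncorrelated sources, simulated snapshots, and hypothetical helper names.

```python
import numpy as np

def steering_matrix(n, grid):
    """Columns are half-wavelength ULA steering vectors for each grid angle."""
    return np.exp(1j * np.pi * np.outer(np.arange(n), np.cos(grid)))

def noise_subspace(R, n_sources):
    """Eigenvectors of R belonging to the N - P smallest eigenvalues."""
    _, eigvecs = np.linalg.eigh(R)                   # eigenvalues in ascending order
    return eigvecs[:, : R.shape[0] - n_sources]

def music_spectrum(R, n_sources, grid):
    """Pseudo-spectrum 1 / ||U_n^H a(phi)||^2 evaluated on the grid."""
    U_n = noise_subspace(R, n_sources)
    proj = U_n.conj().T @ steering_matrix(R.shape[0], grid)
    return 1.0 / np.sum(np.abs(proj) ** 2, axis=0)

def top_peaks(spectrum, count):
    """Indices of the `count` largest interior local maxima, in ascending order."""
    i = np.arange(1, len(spectrum) - 1)
    is_peak = (spectrum[i] > spectrum[i - 1]) & (spectrum[i] > spectrum[i + 1])
    peaks = i[is_peak]
    return np.sort(peaks[np.argsort(spectrum[peaks])[-count:]])

rng = np.random.default_rng(1)
N, K = 8, 500
true_deg = np.array([60.0, 100.0])                   # assumed source azimuths
A = steering_matrix(N, np.deg2rad(true_deg))
S = (rng.standard_normal((2, K)) + 1j * rng.standard_normal((2, K))) / np.sqrt(2)
W = np.sqrt(0.05) * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K)))
X = A @ S + W                                        # noise variance 0.1 per sensor
R_hat = X @ X.conj().T / K

grid_deg = np.arange(0.0, 180.5, 0.5)
spectrum = music_spectrum(R_hat, 2, np.deg2rad(grid_deg))
estimates = grid_deg[top_peaks(spectrum, 2)]
```

Unlike the beamforming spectra, this estimator needs the number of sources and an accurate steering vector model on the whole grid. Any mismatch between the assumed and the true array response destroys the orthogonality the method relies on.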

A commonly used lower bound on the estimation error variance of any unbiased estimator is the Cramér-Rao lower Bound (CRB) [24]. Assuming that both signal and noise are zero-mean complex-circular Gaussian distributed, the unconditional CRB for azimuth angle estimation is [25]

$$\mathrm{CRB}(\boldsymbol{\phi}) = \frac{\sigma^2}{2K} \left\{ \Re\!\left[ \left(\mathbf{D}^H \mathbf{P}_{\mathbf{A}}^{\perp} \mathbf{D}\right) \odot \left(\mathbf{R}_s \mathbf{A}^H \mathbf{R}_x^{-1} \mathbf{A} \mathbf{R}_s\right)^T \right] \right\}^{-1}, \tag{19.9}$$

where $\mathbf{D} = \left[\partial \mathbf{a}(\phi_1)/\partial \phi_1, \ldots, \partial \mathbf{a}(\phi_P)/\partial \phi_P\right]$, and $\sigma^2$ and $K$ denote the sensor noise variance and the number of snapshots. Moreover, $\odot$ and $\mathbf{P}_{\mathbf{A}}^{\perp} = \mathbf{I}_N - \mathbf{A}(\mathbf{A}^H \mathbf{A})^{-1} \mathbf{A}^H$ denote the Hadamard-Schur product and a projection matrix onto the nullspace of $\mathbf{A}^H$, respectively. An estimator with an error covariance matrix that equals (19.9) is called statistically efficient. In particular, the stochastic maximum likelihood estimator is asymptotically ($K \to \infty$) statistically efficient, and the azimuth-angle estimates are obtained as [26]

$$\hat{\boldsymbol{\phi}} = \arg\min_{\boldsymbol{\phi}}\; \det\!\left\{ \mathbf{A}(\boldsymbol{\phi})\, \hat{\mathbf{R}}_s(\boldsymbol{\phi})\, \mathbf{A}^H(\boldsymbol{\phi}) + \hat{\sigma}^2(\boldsymbol{\phi})\, \mathbf{I}_N \right\}. \tag{19.10}$$

Here, $\det\{\cdot\}$ denotes the determinant of a matrix. Moreover, $\hat{\mathbf{R}}_s(\boldsymbol{\phi})$ and $\hat{\sigma}^2(\boldsymbol{\phi})$ denote estimates of the signal covariance matrix and sensor noise, respectively. See [26] and chapter DOA Estimation Methods and Algorithms of this book for details.

Asymptotically optimal DoA estimation algorithms such as the stochastic maximum likelihood estimator, and beamforming techniques such as the Capon beamformer, are often very sensitive to uncertainties in the array steering vector model and sensor noise (and interferers) statistics [6,8]. Under these scenarios, optimal DoA estimators may be subject to bias and increased variance while optimum beamformers may suffer from SOI cancellation effect. Uncertainty in noise statistics may be due to outliers, i.e., highly deviating observations that do not follow the same pattern as the majority of the data, or incorrect assumptions on the noise environment. For example, man-made interference has typically a non-Gaussian heavy-tailed distribution, for which (19.5) may no longer be a consistent estimator of $\mathbf{R}_x$ [27]. Uncertainties in the steering vector model are often due to misspecification or lack of knowledge of various array nonidealities. These include:

• Uncertainty in array elements’ beampatterns and positions.

• Mounting platform reflections.

• Departures from narrowband, far-field and point-source assumptions.

In subsequent sections we describe in detail various rigorous and practical approaches for taking the aforementioned nonidealities of real-world arrays into account by array processing techniques. For details on array processing under uncertainty in noise statistics the reader is referred to [27].

3.19.3 Examples of array nonidealities

This section describes the main nonidealities experienced in real-world sensor arrays. We discuss their effects in array processing techniques, and provide explicit formulations describing such array nonidealities that can be found in the literature. These models are rather specific but allow one to understand the departure from the ideal array model as well as to incorporate such nonidealities into both DoA estimators and beamforming techniques.

3.19.3.1 Mutual coupling

Mutual coupling (also known as cross-talk) refers to interactions among array elements. The signal received by an element affects the signals received by the other array elements, and similarly for signal transmission [28, Chapter 2]. Typically, mutual coupling becomes weaker as the inter-element spacing and the isolation among array elements increase. Mutual coupling distorts the elements’ radiation patterns and decreases the efficiency of sensor arrays. Mobile wireless terminals equipped with antenna arrays are a typical example where mutual coupling is significant since the whole chassis, along with its other components, can be considered part of the antenna [5]. Array processing algorithms typically experience a significant loss of performance when the employed array steering vector model does not account for mutual coupling [6,29].

The steering vector of a sensor array subject to mutual coupling is typically modeled as [6]

$$\mathbf{a}(\theta, \phi) = \mathbf{C}\, \bar{\mathbf{a}}(\theta, \phi), \tag{19.11}$$

where $\mathbf{C} \in \mathbb{C}^{N \times N}$ denotes the mutual coupling matrix and $\bar{\mathbf{a}}(\theta, \phi)$ is known as nominal array steering vector. The $[\mathbf{C}]_{m,n}$ element describes the contribution of the nth array element to the output of the mth sensor. Nominal sensor arrays are typically considered to be ideal uniform arrays with regular geometries of the form (19.3), and the motivation for their use is based on the assumption that real-world arrays may be described as a perturbation from an ideal sensor array. For example, if the nominal array is assumed to be an ideal UCA the mutual coupling matrix takes the form of a circulant matrix [30]. Note that a diagonal matrix $\mathbf{C}$ in (19.11) may also describe errors due to the receiver front-end such as imbalance in the I/Q channels. This is also discussed in Section 3.19.3.5.
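To make the coupling model concrete, the sketch below builds a circulant coupling matrix for a nominal UCA and applies it to the nominal steering vector. The coupling coefficients are made-up illustrative values, not measurements, and the function names are hypothetical; wave propagation in the xy-plane and UCA elements at angles $2\pi n/N$ are assumed.

```python
import numpy as np

def circulant_coupling(first_row):
    """Circulant matrix with entries C[m, n] = c[(n - m) mod N]."""
    c = np.asarray(first_row)
    n = len(c)
    idx = (np.arange(n)[None, :] - np.arange(n)[:, None]) % n
    return c[idx]

def uca_nominal(n, radius_wavelengths, phi):
    """Nominal (ideal) UCA steering vector for propagation in the xy-plane."""
    gamma = 2.0 * np.pi * np.arange(n) / n
    return np.exp(1j * 2.0 * np.pi * radius_wavelengths * np.cos(phi - gamma))

N = 6
# Hypothetical coefficients: coupling decays with distance along the ring and is
# symmetric, so c[k] == c[N - k].
c = np.array([1.0, 0.3 * np.exp(0.5j), 0.1 * np.exp(1.0j),
              0.05, 0.1 * np.exp(1.0j), 0.3 * np.exp(0.5j)])
C = circulant_coupling(c)

phi = np.deg2rad(25.0)
a_nominal = uca_nominal(N, 0.5, phi)
a_coupled = C @ a_nominal                            # steering vector with coupling
```

A circulant structure preserves the rotational symmetry of the UCA: rotating the source by one element spacing, $2\pi/N$, only permutes the entries of `a_coupled` cyclically. A full coupling matrix has $N^2$ complex entries, whereas the circulant form needs at most $N$.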

In practice, the mutual coupling matrix needs to be determined from array calibration measurements and a nominal array steering vector should be specified by the practitioner. Typically, the mutual coupling matrix is obtained from the measured network parameters of the sensor array, including scattering and transmission coefficients, which may be a rather tedious task [31,32, Chapter 2]. Moreover, determining the nominal array steering vector is typically based on trial and error, and relies on visual inspection of the real-world sensor array.

3.19.3.2 Uncertainty in array elements’ beampatterns and positions

Often, elements’ beampatterns and positions in real-world arrays are not fully known. This may be caused by normal variability in the manufacturing process, in the sense that each array element has an individual beampattern, or by manufacturing errors. In fact, elements’ phase centers in real-world arrays do not typically correspond to their physical locations due to interactions with other array elements and the mounting platform. Misspecification of the array elements’ beampatterns and phase centers leads to loss of performance in array processing techniques.

A commonly employed model taking into account individual beampatterns and position errors is

$$[\mathbf{a}(\theta, \phi)]_n = g_n(\theta, \phi)\, e^{j\kappa\,\left((x_n + \Delta x_n) \sin\theta\cos\phi \,+\, (y_n + \Delta y_n) \sin\theta\sin\phi\right)}, \tag{19.12}$$

where $\Delta x_n$, $\Delta y_n$, and $g_n(\theta, \phi)$ denote the error in the nth element’s position, with respect to the nominal array steering vector, and corresponding directional beampattern, with $\kappa$ and $(x_n, y_n)$ as in (19.2). Parameters $(\Delta x_n, \Delta y_n)$ may be estimated from calibration measurements taken in controlled environments while the gain function $g_n(\theta, \phi)$ may be measured at a discrete set of points and interpolated using appropriate basis functions such as splines [6]. The latter approach leads to a technique known as array interpolation and it is described in Section 3.19.6.

Alternatively, one may specify a parametric model for ![]() (i.e., functionally dependent on

(i.e., functionally dependent on ![]() ) by trial and error, and visual inspection. For example, in the case of electrically short (relative to the wavelength) x-oriented dipoles one can use the following approximation

) by trial and error, and visual inspection. For example, in the case of electrically short (relative to the wavelength) x-oriented dipoles one can use the following approximation ![]() . However, in the general case of electrically large antennas and patch elements, specifying a parametric model for

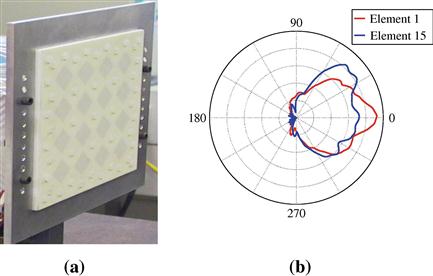

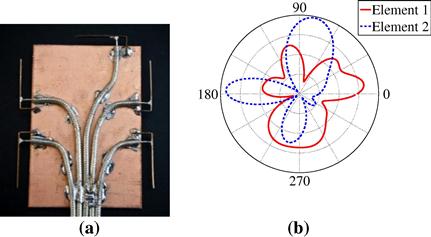

. However, in the general case of electrically large antennas and patch elements, specifying a parametric model for ![]() may be very challenging. An example of two gain functions of a real-world array is illustrated in Figure 19.2b.

may be very challenging. An example of two gain functions of a real-world array is illustrated in Figure 19.2b.

3.19.3.3 Cross-polarization effects

Cross-polarization effects refer to the “leakage” that, for example, a vertically polarized element suffers from a horizontally polarized wavefield. They are typically characterized by the cross-polarization discrimination (XPD), denoting the ratio between the power received by an antenna due to co-polarized and cross-polarized wavefields, commonly expressed in dB. XPD quantifies how well the two received channels that use different polarization orientations are isolated. Antennas with a large XPD are essentially insensitive to cross-polarization effects and the power received from cross-polarized wavefields may be neglected. When mounted on an array the antennas’ XPD may change significantly due to complex EM interactions among the array elements, scatterers, and mounting platform. In such cases, high-resolution DoA estimators that do not take cross-polarization effects into account typically lead to estimates that may contain significant bias and excess variance [33].
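As a quick numerical aid, XPD in dB follows directly from the two received powers. The helper names below are hypothetical, and the second function assumes the co-polarized and cross-polarized wavefields carry equal incident power.

```python
import math

def xpd_db(power_co, power_cross):
    """Cross-polarization discrimination in dB from co- and cross-polarized powers."""
    return 10.0 * math.log10(power_co / power_cross)

def cross_power_fraction(xpd_in_db):
    """Share of the total received power that is due to the cross-polarized wavefield.

    Assumes equal incident power in both polarizations (an assumption of this sketch).
    """
    ratio = 10.0 ** (xpd_in_db / 10.0)               # linear co/cross power ratio
    return 1.0 / (1.0 + ratio)

isolated = xpd_db(1.0, 0.01)                         # well-isolated element
leaky = xpd_db(1.0, 0.25)                            # element with strong leakage
```

For the well-isolated element only about 1% of the received power comes from the cross-polarized wavefield under this assumption, while for the leaky element the share is 20%, which is no longer negligible.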

The steering vector of a sensor array that is subject to cross-polarization effects may be described as

![]() (19.13)

where ![]() and ![]() denote the array responses due to co-polarized and cross-polarized wavefields, respectively. Moreover, ![]() define the polarization of the wavefield. For example, for a co-polarized wavefield Eq. (19.13) simplifies to ![]() while for a cross-polarized wavefield we have ![]().
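A short numerical sketch may help to see how two array responses are combined through polarization-dependent weights. The parameterization used here, a polarization angle `gamma` and a phase `beta` weighting the two responses by cosine and sine terms, is an assumption borrowed from common practice in the literature and is not necessarily the exact form of Eq. (19.13). The responses `a_co` and `a_cross` are synthetic placeholders.

```python
import numpy as np

def steering_vector(a_co, a_cross, gamma, beta):
    """Weighted sum of co- and cross-polarized responses (assumed parameterization)."""
    return np.cos(gamma) * a_co + np.sin(gamma) * np.exp(1j * beta) * a_cross

# Hypothetical 4-element responses at a single angle.
n = np.arange(4)
a_co = np.exp(1j * np.pi * n * np.sin(np.deg2rad(20.0)))   # ideal ULA phase progression
a_cross = 0.1 * np.exp(1j * 0.3 * n)                        # weak leakage response (20 dB below a_co)

a_pure_co = steering_vector(a_co, a_cross, 0.0, 0.0)            # co-polarized wavefield
a_pure_cross = steering_vector(a_co, a_cross, np.pi / 2, 0.0)   # cross-polarized wavefield
a_mixed = steering_vector(a_co, a_cross, np.pi / 4, np.pi / 2)  # circularly polarized wavefield
```

Under this assumed convention the two limiting cases recover the co-polarized and cross-polarized responses, respectively, while any other polarization state excites both.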

Parametric modeling of ![]() and

and ![]() in (19.13) may now be done by employing models (19.11) and (19.12). However, specifying a nominal array steering vector model for the cross-polarized component

in (19.13) may now be done by employing models (19.11) and (19.12). However, specifying a nominal array steering vector model for the cross-polarized component ![]() is even more challenging than that of the co-polarized component, where one may approximate the element’s gain function as

is even more challenging than that of the co-polarized component, where one may approximate the element’s gain function as ![]() . Typically, visual inspection does not help much in determining a parametric model for

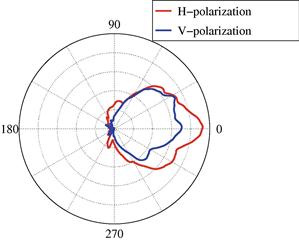

. Typically, visual inspection does not help much in determining a parametric model for ![]() . An example of gain functions corresponding to an horizontally and vertically polarized wavefield is illustrated in Figure 19.3.

. An example of gain functions corresponding to an horizontally and vertically polarized wavefield is illustrated in Figure 19.3.

Figure 19.3 Gain functions corresponding to the horizontal and vertical polarization components of an element of the real-world rectangular array from Figure 19.2a.

3.19.3.4 Departures from narrowband assumption

The narrowband signal model commonly used in array processing (see Section 3.19.2) assumes that the time-bandwidth product is “small,” i.e.,

![]() (19.14)

where ![]() and ![]() denote the bandwidth of the transmitted signal and the wavefront’s propagation delay across the array aperture, respectively. A rule of thumb for considering a signal narrowband is ![]() [34]. In practice, the time-bandwidth product may be such that the narrowband assumption no longer holds true. This is also the case in focusing-based wideband array processing, where the signals’ bandwidth is divided into a set of narrowband channels and narrowband processing is applied to each narrowband bin or to a focused covariance matrix [35]. Alternatively, genuine space-time signal processing can be employed as in STAP radar systems [36,37].
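The time-bandwidth product is straightforward to evaluate for a given array and signal. The sketch below assumes that the worst-case propagation delay is the aperture length divided by the propagation speed (endfire arrival). The function name and the numerical values are hypothetical.

```python
def time_bandwidth_product(bandwidth_hz, aperture_m, speed_m_s=3.0e8):
    """Signal bandwidth times the largest propagation delay across the aperture."""
    max_delay_s = aperture_m / speed_m_s      # endfire arrival gives the largest delay
    return bandwidth_hz * max_delay_s

# Hypothetical example: 20 MHz signal, 0.5 m aperture, electromagnetic propagation.
tbp = time_bandwidth_product(20e6, 0.5)       # about 0.033
```

The resulting value would then be compared against whatever threshold one adopts for the narrowband assumption.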

Assuming an array with a flat frequency response (with linear phase) over the signals’ bandwidth, the array covariance matrix may be modeled as [34,38]

(19.15)

where ![]() denotes the element-wise Hadamard-Schur product. Moreover, ![]() denotes the array steering vector with a linear phase response over frequency and ![]() contains the correlation of the pth signal among the array elements. For signals with small time-bandwidth product ![]() equals a matrix of ones and (19.15) reduces to (19.4). However, when ![]() is non-negligible the rank of ![]() due to a single signal is larger than one, and the low-rank structure of the array covariance matrix in (19.4) is lost. Thus, high-resolution subspace methods may no longer be applicable [34]. The influence of a non-negligible time-bandwidth product on DoA estimators and beamforming techniques has been addressed in [34,38]. In most cases, the error due to a non-negligible time-bandwidth product can be neglected when compared to finite sample effects. However, when sources are closely spaced or have a large difference in power, such an error may be significant.
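The loss of the rank-one structure can be observed numerically. The sketch below assumes a signal with a flat spectrum over its bandwidth, for which the correlation between two elements is a sinc function of the bandwidth times their delay difference. This is a common textbook model and is an assumption here, as are the array size, the source angle, and the fractional bandwidth.

```python
import numpy as np

N = 8                                   # number of ULA elements (hypothetical)
theta = np.deg2rad(60.0)                # source angle measured from broadside
n = np.arange(N)
tau_c = 0.5 * n * np.sin(theta)         # element delays in carrier periods (half-wavelength spacing)
a = np.exp(-2j * np.pi * tau_c)         # narrowband steering vector at the carrier frequency

def single_source_cov(frac_bw):
    """Covariance due to one unit-power source; frac_bw is bandwidth over carrier frequency."""
    dtau = tau_c[:, None] - tau_c[None, :]
    psi = np.sinc(frac_bw * dtau)       # assumed flat-spectrum correlation; all ones if frac_bw = 0
    return np.outer(a, a.conj()) * psi  # element-wise (Hadamard) product

def eigen_fractions(R):
    """Eigenvalues in decreasing order, normalized by their sum."""
    ev = np.linalg.eigvalsh(R)[::-1]
    return ev / ev.sum()

narrow = eigen_fractions(single_source_cov(0.0))   # negligible time-bandwidth product
wide = eigen_fractions(single_source_cov(0.2))     # 20 percent fractional bandwidth
```

With a negligible time-bandwidth product all the signal power sits in one eigenvalue, whereas in the wider-band case a noticeable share leaks into the second and subsequent eigenvalues, so a single source no longer spans a one-dimensional signal subspace.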

3.19.3.5 Errors due to receiver front-end architectures

Receiver architectures are commonly classified as superheterodyne, low-IF (intermediate frequency) or direct-conversion receivers. Each front-end architecture is subject to various nonidealities such as I/Q-imbalance, DC-offset, and interfering image frequencies that impact the performance of array processors.

Superheterodyne receivers typically consist of two or more IF stages in order to convert the radio-frequency (RF) signal to baseband. They require image rejection filters at each downconversion stage, which may be difficult to integrate on-chip with other components. Power consumption and size may be significant [39]. Low-IF receivers convert the RF signal to baseband in two IF stages, similarly to the superheterodyne receiver. The need for image rejection filters is overcome by the use of two mixers, one for each of the I and Q channels, in order to cancel the image. In practice, gain and phase imbalances in the I/Q channels limit the effectiveness of such an image cancellation approach. Direct-conversion (or zero-IF) receivers downconvert the RF signal to baseband in a single stage. There is no need for image rejection filters or image cancellation. However, they typically suffer from DC offset, and I/Q imbalances in the demodulation process are still present.

I/Q imbalances in the demodulation process are common to all of the aforementioned receivers and have been studied in the context of array signal processing in [40]. They appear as phase and amplitude distortions in the I and Q branches of the demodulated signal. Similarly to errors caused by departures from the narrowband assumption, the rank of the covariance matrix due to received signals increases by two and the noise eigenvalues are no longer identical. The low-rank structure of the array covariance matrix may be lost and subspace-based array processing methods experience a performance degradation.
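A simplified numerical sketch illustrates why I/Q imbalance raises the rank of the signal covariance. It assumes the frequency-independent model in which each receiver outputs a scaled copy of the ideal signal plus a scaled copy of its complex conjugate, with identical imbalance in every channel and a circular source signal. The coefficient convention and all numerical values are assumptions made for illustration.

```python
import numpy as np

N = 6
n = np.arange(N)
a = np.exp(1j * np.pi * n * np.sin(np.deg2rad(25.0)))   # ideal ULA steering vector (hypothetical)

g, phi = 1.05, np.deg2rad(3.0)            # assumed gain and phase mismatch between I and Q branches
k1 = 0.5 * (1.0 + g * np.exp(-1j * phi))  # weight of the desired signal (one common convention)
k2 = 0.5 * (1.0 - g * np.exp(1j * phi))   # weight of the conjugate (image) component

# Output model: y = k1 * a * s + k2 * conj(a * s). For a circular unit-power s, E[s * s] = 0,
# so the cross terms vanish and the covariance is the sum of two rank-one terms.
R_ideal = np.outer(a, a.conj())
R_iq = abs(k1) ** 2 * np.outer(a, a.conj()) + abs(k2) ** 2 * np.outer(a.conj(), a)

rank_ideal = np.linalg.matrix_rank(R_ideal, tol=1e-9)
rank_iq = np.linalg.matrix_rank(R_iq, tol=1e-9)
image_rejection_db = 10 * np.log10(abs(k1) ** 2 / abs(k2) ** 2)
```

In this sketch a single source occupies a two-dimensional subspace, spanned by the steering vector and its complex conjugate, although the image component is considerably weaker than the desired one.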

One should note that I/Q imbalances may be significantly mitigated by using advanced digital downconverters, either at intermediate or radio frequencies. The commonly used low-rank model is then a good approximation of the array covariance matrix, given that a sufficient number of quantization bits is used [41]. The power consumption and high cost of ADCs operating at GHz frequencies and large bandwidths may be a limiting factor in practice.

We also note that antenna arrays using a single receiver, known as switched or time division multiplexing receivers, are often employed in practice due to their low power consumption, cost, and size [41,42]. Typically, switched array receivers suffer from phase errors that need to be estimated and taken into account by array processing methods.

3.19.3.6 Effects of nonlinear elements

Linearity of the sensor array is among the most common assumptions in the array processing literature. Linearity, in the sense of the superposition principle, essentially means that the array output due to a propagating wavefield generated by multiple sources equals the sum of array outputs due to a wavefield generated by each source separately. Most sensor arrays can be considered linear systems and the superposition principle may then be employed [32]. However, active elements such as low-noise amplifiers (LNAs) commonly used in receiver architectures are nonlinear systems and the superposition principle at the array output (after the RF front-end) may no longer hold true [43]. Typically, LNAs trade off linearity for gain and noise figure. Some waveforms that have a large peak-to-average power ratio, such as OFDM (orthogonal frequency-division multiplexing), are particularly sensitive to nonlinearities of power amplifiers. Digital signal processing techniques for mitigating nonlinear effects due to the RF front-end include pre- as well as post-distortion techniques [44].
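The failure of the superposition principle can be shown with a toy amplifier model. The memoryless third-order baseband nonlinearity below is an assumed, simplified form, and the two complex exponentials stand in for the outputs of one sensor due to two separate sources.

```python
import numpy as np

def lna(x, a3=0.1):
    """Memoryless third-order baseband model of a mildly compressive amplifier (assumed form)."""
    return x - a3 * np.abs(x) ** 2 * x

t = np.arange(256) / 256.0
x1 = np.exp(2j * np.pi * 5 * t)          # sensor output due to source 1 alone (5 cycles)
x2 = 0.8 * np.exp(2j * np.pi * 9 * t)    # sensor output due to source 2 alone (9 cycles)

joint = lna(x1 + x2)                     # both sources present at the amplifier input
separate = lna(x1) + lna(x2)             # sum of the individual responses
superposition_error = np.max(np.abs(joint - separate))

# Third-order intermodulation products appear at 2*5 - 9 = 1 and 2*9 - 5 = 13 cycles.
spectrum = np.abs(np.fft.fft(joint)) / t.size
```

The nonzero `superposition_error` and the spectral lines at bins 1 and 13 are components that no linear array model can account for.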

3.19.4 Array calibration

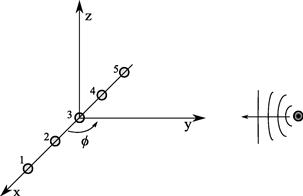

The goal of array calibration is to capture the combined effects of sensor positions, their unknown gain and phase, mutual coupling characteristics as well as cross-polarization effects and mounting platform reflections. In array calibration one typically acquires the so-called array measurement matrix ![]() . The array measurement matrix is composed of a collection of Q steering vectors corresponding to the angles contained in the vector

. The array measurement matrix is composed of a collection of Q steering vectors corresponding to the angles contained in the vector ![]() . The standard approach of obtaining the array measurement matrix is by taking the sensor array into an anechoic chamber and measuring its response to a source, known as probe, from

. The standard approach of obtaining the array measurement matrix is by taking the sensor array into an anechoic chamber and measuring its response to a source, known as probe, from ![]() different known angles. The antenna array is usually mounted on a mechanical device, called positioner, that rotates the sensor array in azimuth (and possibly in elevation) while the probe is held fixed; see Figure 19.4. Note that the coordinate system employed for DoA estimation is defined by both the positioner and probe.

different known angles. The antenna array is usually mounted on a mechanical device, called positioner, that rotates the sensor array in azimuth (and possibly in elevation) while the probe is held fixed; see Figure 19.4. Note that the coordinate system employed for DoA estimation is defined by both the positioner and probe.

Figure 19.4 Example of the standard array calibration setup. A ULA is rotated in the xy-plane around its center element while a probe is held fixed in the far-field of the ULA.

The array measurement matrix fully describes a given real-world sensor array as well as all its nonidealities. However, array calibration measurements are typically taken in controlled environments such as anechoic chambers, and may be subject to various errors including sensor noise, reflections from the anechoic chamber, imperfections of the employed positioner, attenuations and phase-drifts due to cabling, small distance between the antenna array and probe (i.e., not in far-field), and effects of the probe (e.g., not a point-source). These errors need to be corrected so that array processing techniques may employ an accurate model of the real-world array response. Approaches for reducing the aforementioned errors occurring during array calibration measurements can be found in [32,33,45]. Moreover, array calibration measurements do not provide any steering vector model, i.e., an explicit formulation describing the array response as a function of the wavefield parameters. Such difficulties may be alleviated by employing data-driven techniques described in Section 3.19.6.

Typically, the array measurement matrix contains the array response to angles spanning the whole angular region, such as ![]() in the azimuth-only case. However, in some applications the array response may only be measured over a small angular sector, and a partial array calibration is obtained. Examples include applications where the antenna array is deployed in an environment where the sources are known a priori to be confined to an angular sector or when the array dimensions do not allow a full calibration. Data-driven techniques described in Section 3.19.6 are also applicable in these cases.

3.19.5 Model-driven techniques

In this section, we describe techniques that assume an explicit model describing each array nonideality. By employing such a model, the array nonidealities may be estimated from array calibration measurements or directly from the array output data, simultaneously with the wavefield parameters. The latter approach is known as auto-calibration. Moreover, the array nonidealities may be assumed to be either unknown deterministic parameters or random parameters with a known prior distribution. Hence, methods from estimation theory may be applied to estimate the array nonidealities.

3.19.5.1 Deterministic approach

Consider an N-element planar array lying in the xy-plane and denote the uncertainty in the array elements’ positions by ![](). In this case, the array is not assumed to be subject to other nonidealities such as cross-polarization effects or individual beampatterns. We may estimate the sensors’ misplacements ![]() from array calibration measurements as [6]

![]() (19.16)

where ![]() denotes a matrix composed of a collection of array steering vectors describing ![]() in a closed-form, such as in (19.12), and ![]() denotes the Frobenius norm of a matrix. In some cases, a closed-form solution to (19.16) may be obtained. For example, using the mutual coupling matrix ![]() in place of ![]() in (19.16) yields the following solution:

![]() (19.17)

where we have used the array model (19.11) and assumed that ![]() has full row-rank. Notation ![]() in (19.17) denotes the Moore-Penrose pseudo-inverse of a matrix. Similarly, by letting ![]() denote angle-independent gain and phase errors such as ![](), we obtain the following solution:

![]() (19.18)

where ![]() and

and ![]() denote the nth row of

denote the nth row of ![]() and

and ![]() , respectively. In case one is dealing with electrically large uniform linear arrays, the mutual coupling matrix may be approximated by a banded matrix. Recall that mutual coupling is typically inversely proportional to inter-element spacing thus, such an effect may be negligible among sensors located at both ends of the linear array. A least-squares estimator to such a structured mutual coupling matrix may also be found in a closed-form. Computationally efficient solutions may employ appropriate LU-factorization and back-substitution methods [46, Chapter 4]. A summary of model-driven calibration is given in Table 19.1.

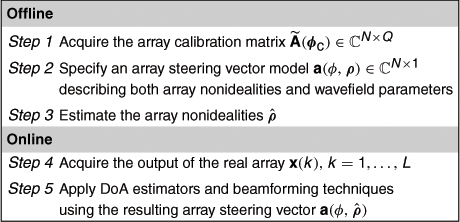

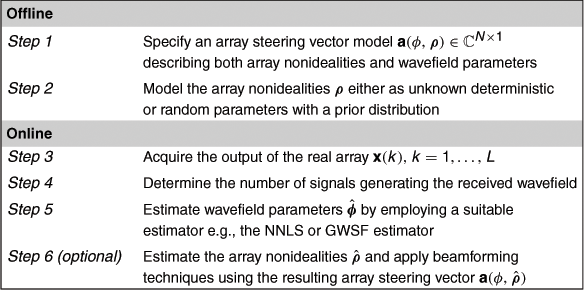

, respectively. In case one is dealing with electrically large uniform linear arrays, the mutual coupling matrix may be approximated by a banded matrix. Recall that mutual coupling is typically inversely proportional to inter-element spacing thus, such an effect may be negligible among sensors located at both ends of the linear array. A least-squares estimator to such a structured mutual coupling matrix may also be found in a closed-form. Computationally efficient solutions may employ appropriate LU-factorization and back-substitution methods [46, Chapter 4]. A summary of model-driven calibration is given in Table 19.1.
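The closed-form least-squares estimates discussed above can be sketched numerically. The example assumes that the measured response equals a coupling matrix (or a diagonal gain/phase matrix) multiplying a nominal ULA response, which is one reading of the model referred to as (19.11). The "true" parameters, the noise level, and all variable names are hypothetical and are used only to synthesize calibration data.

```python
import numpy as np

rng = np.random.default_rng(0)
N, Q = 4, 36
n = np.arange(N)
angles = np.linspace(-np.pi / 2, np.pi / 2, Q)
A_nom = np.exp(1j * np.pi * np.outer(n, np.sin(angles)))   # nominal ULA responses, N x Q

# Hypothetical ground truth, used only to synthesize the "measurements".
C_true = np.eye(N) + 0.1 * (rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N)))
noise = 1e-3 * (rng.standard_normal((N, Q)) + 1j * rng.standard_normal((N, Q)))
A_meas = C_true @ A_nom + noise

# Least-squares coupling matrix: minimizes ||A_meas - C A_nom||_F over C
# (A_nom is assumed to have full row rank).
C_hat = A_meas @ np.linalg.pinv(A_nom)

# Angle-independent gain/phase errors: one complex scalar per element, fitted row by row
# as g_n = (a_n^H b_n) / (a_n^H a_n), with a_n and b_n the nth rows of A_nom and B_meas.
g_true = np.array([1.0, 0.9 * np.exp(0.2j), 1.1 * np.exp(-0.1j), 0.95])
B_meas = g_true[:, None] * A_nom + noise
g_hat = np.sum(A_nom.conj() * B_meas, axis=1) / np.sum(np.abs(A_nom) ** 2, axis=1)
```

A banded coupling model would restrict the unknowns in each row of the coupling matrix to a few neighboring elements, which reduces the size of each least-squares problem.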

Alternatively, we may jointly estimate array nonidealities and wavefield parameters from the output of the sensor array [11–13]. For example, joint estimation of the nonidealities ![]() and azimuth angles of P sources ![]() generating a propagating wavefield may be accomplished by employing the following nonlinear least-squares estimator [12]

![]() (19.19)

Typically, the criterion in (19.19) is minimized in an alternating manner between the array nonidealities ![]() and the DoAs ![]() [47]. Since (19.19) is highly nonlinear, and potentially has multiple local minima, the minimization should be initialized with “good enough” initial values so that the global minimum can be attained. Note that the array nonidealities ![]() are typically nuisance parameters. The statistical performance of the wavefield parameter estimates may suffer due to the higher dimension of the parametric model as well as estimation errors in the nuisance parameters.

The main issue with auto-calibration techniques is that of parameter identifiability [6]. In general, both ![]() and ![]() cannot be uniquely estimated unless a nonlinear sensor array (i.e., one whose elements do not lie on a straight line) is employed and additional assumptions regarding sensors’ locations as well as DoAs are made [12,13]. For example, if the array orientation is unknown the DoAs may not be uniquely estimated since they represent the angles relative to the orientation of the sensor array. Alternatively, one may assume that the array nonidealities are random parameters with a known prior distribution, and employ Bayesian estimators. This is discussed next.

3.19.5.2 Bayesian approach

In the previous section we have seen that the identifiability problem in auto-calibration techniques could be alleviated by making additional assumptions regarding the nonidealities or wavefield parameters. One such assumption considers the array nonidealities to be random parameters with a known prior distribution. In addition to alleviating the identifiability problem, such an assumption allows one (at least in principle) to “integrate out” the array nonidealities and focus on the wavefield parameters instead [24]. For example, in mass production of sensor arrays one could model the array elements’ misplacements due to manufacturing errors as bivariate Gaussian distributed and proceed with Bayesian-type estimators for the wavefield parameters [7,14].

One such estimator is the generalized weighted subspace fitting (GWSF) algorithm proposed in [7]. It extends MODE [48] and WSF [49] by taking into account prior information (first and second moments) of the array nonidealities in an optimal manner. It provides asymptotically efficient estimates provided that the assumption on the prior Gaussian distribution is valid and the array parameterization is known. The DoA estimates obtained by the GWSF are given by [7]

![]() (19.20)

where ![]() and ![]() denotes a projection matrix onto the orthogonal complement of

(19.21)

Furthermore, ![]() in (19.20) denotes a (positive-definite) weighting matrix that ensures asymptotically minimum variance unbiased estimates and the superscript ![]() denotes complex-conjugate. The first and second-order moments of the array nonidealities enter criterion (19.20) through ![](); see [7] for details. Criterion (19.20) is an asymptotic approximation of the maximum a posteriori estimator for simultaneous estimation of array and wavefield parameters [14]. It may be implemented by means of polynomial rooting techniques when the nominal array steering vector has a form similar to that of an ideal ULA. A summary of auto-calibration techniques is given in Table 19.2.

The main difficulty with the Bayesian approach is related to the well-known problem of choosing appropriate prior distributions.1 Assumed prior knowledge may not exist or it may be difficult to express in the form of a pdf. Moreover, specifying a parametric model for the array nonidealities may be very challenging in practice, similarly to the deterministic approach. In case of uncertainties in the array elements’ locations, the nominal steering vector model may be obtained by visual inspection of the real-world sensor array [7]. However, when considering cross-polarization effects, sensors with individual beampatterns and mutual coupling, such a procedure is of little help in determining a nominal array steering vector.

In practice, array calibration measurements may be necessary even in auto-calibration techniques. Hence, it may be worth considering alternative techniques for dealing with array nonidealities that assume array calibration measurements but do not suffer from the difficulties in specifying explicit formulations for the nonidealities. This is discussed in the next section.

3.19.6 Data-driven techniques

Data-driven techniques take into account all array nonidealities simultaneously through array calibration measurements or a synthesized array response obtained using, e.g., electromagnetic simulation software. These techniques do not require any explicit formulation describing the array nonidealities in a closed-form. Examples of nonidealities that may be handled with data-driven techniques include mutual coupling, individual beampatterns, mounting platform reflections, and cross-polarization effects. This section describes the array interpolation technique [15] and the wavefield modeling principle [20], also known as the manifold separation technique [18].

In particular, the array interpolation technique may be understood as a linear interpolation method that fits array calibration measurements to some ideal array steering vector model. The manifold separation technique stems from the wavefield modeling principle and can be seen as an orthogonal expansion in a Fourier basis (in azimuth-angle processing) of each array element. The expansion coefficients describe the array nonidealities in a combined manner and may be estimated from array calibration measurements.

3.19.6.1 Local interpolation of the array calibration matrix

The columns of the array calibration matrix ![]() discussed in Section 3.19.4 describe the array response, with the combined effects due to array nonidealities, to a set of angles. The angular grid employed in array calibration measurements is typically sparse due to time and cost limitations. Hence, optimal array processing methods using the array calibration matrix may loose their high-resolution properties and suffer from SOI cancellation effects. Perhaps the most intuitive approach to overcome such a limitation consists in interpolating the array calibration matrix using local basis functions such as splines.

discussed in Section 3.19.4 describe the array response, with the combined effects due to array nonidealities, to a set of angles. The angular grid employed in array calibration measurements is typically sparse due to time and cost limitations. Hence, optimal array processing methods using the array calibration matrix may loose their high-resolution properties and suffer from SOI cancellation effects. Perhaps the most intuitive approach to overcome such a limitation consists in interpolating the array calibration matrix using local basis functions such as splines.

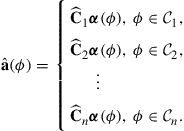

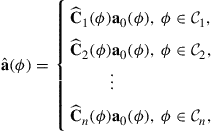

Let ![]() denote a vector composed of local basis functions such as splines or other polynomial functions. The practitioner should choose local basis functions with desirable properties such as smoothness, differentiability, and minimum energy. Also, let ![]() denote a coefficient matrix obtained by interpolating the array calibration matrix ![]() over an angular sector ![]() using ![](). ![]() may correspond to two or more columns of ![](). Using local basis functions as the interpolating functions leads to the following piece-wise estimate of the real-world array steering vector:

(19.22)

Alternatively, one may use a nominal array steering vector ![]() in place of ![](), and use a local basis expansion for the coefficient matrices in (19.22), instead. More precisely, we may have [6]:

(19.23)

where the nth matrix ![]() is modeled as

![]() (19.24)

Here, ![]() denotes a local coefficients matrix; see [6] and references therein for details. The rationale for (19.23) is that ![]() is typically a smoother function of the angles than the array response, thus allowing for sparser calibration grids than those employed in (19.22). A summary of the local interpolation technique is given in Table 19.3.
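The effect of the calibration grid density on a local interpolant can be sketched as follows. The example uses piecewise-linear interpolation, the simplest local basis, in place of splines. The synthetic "real-world" response (a ULA with fixed gain and phase errors) and all names are hypothetical.

```python
import numpy as np

n = np.arange(5)
# Hypothetical per-element gain/phase errors standing in for real-world nonidealities.
gains = np.array([1.0, 0.9, 1.1, 1.0, 0.95]) * np.exp(1j * np.array([0.0, 0.2, -0.1, 0.05, 0.3]))

def true_response(angle):
    """Synthetic 'real-world' steering vector of a 5-element half-wavelength ULA."""
    return gains * np.exp(1j * np.pi * n * np.sin(angle))

def interpolate_response(grid, A_cal, angle):
    """Piecewise-linear estimate of the steering vector from the calibration columns."""
    real = np.array([np.interp(angle, grid, row.real) for row in A_cal])
    imag = np.array([np.interp(angle, grid, row.imag) for row in A_cal])
    return real + 1j * imag

def interpolation_error(step_deg, test_deg=12.5):
    """Relative error at an off-grid angle for a calibration grid with the given step."""
    grid = np.deg2rad(np.arange(-90.0, 90.0 + step_deg / 2, step_deg))
    A_cal = np.stack([true_response(th) for th in grid], axis=1)   # N x Q calibration matrix
    a_true = true_response(np.deg2rad(test_deg))
    a_hat = interpolate_response(grid, A_cal, np.deg2rad(test_deg))
    return np.linalg.norm(a_hat - a_true) / np.linalg.norm(a_true)

err_coarse = interpolation_error(5.0)   # 5-degree calibration grid
err_fine = interpolation_error(1.0)     # 1-degree calibration grid
```

The coarse grid leaves a clearly larger residual than the fine one, since the phase of the outer elements varies quickly with angle. Interpolating a smoother quantity, as in (19.23), or using higher-order local bases, are ways of tolerating sparser grids.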

Expressions (19.22) and (19.23) may be useful in cases when the sensor array is deployed in an environment where the sources are known to be confined to an angular sector. If this is not the case, and the sources may span the whole angular region, using local interpolation techniques may lead to a significant increase in the computational complexity of array processing methods and may compromise the convergence rate of gradient-based optimization techniques. For example, using (19.22) with the root-MUSIC algorithm requires finding the roots of n different polynomials while the maximum step-size of gradient-based methods is limited by the size of each angular sector ![](). Hence, wavefield modeling and manifold separation, discussed later in this section, are generally preferred when a sensor array is deployed in an environment where the sources may span the whole angular region.

3.19.6.2 Array interpolation technique

The array interpolation technique was originally proposed in [15] and further studied in, e.g., [16,17,50]. The idea is to linearly transform the real-world array so that its response approximates that of a specified ideal array, such as a ULA, known as the virtual array. The steering vector model of the virtual array needs to be specified by the designer, and it is typically chosen according to the array processing technique to be employed. For example, if the virtual array is a ULA, one may employ polynomial rooting techniques for DoA estimation. We note that for UCAs a technique called the Beamspace transform may also be employed [51]. However, we do not consider the Beamspace transform in this chapter due to the restriction on the array geometry; see [51] and references therein.

Let ![]() denote the calibration measurement matrix of the real-world array and ![]() a collection of steering vectors of the virtual array. In its simplest form, the array interpolation technique consists in determining the transformation matrix ![]() that minimizes the following quadratic error:

![]() (19.25)

The solution to (19.25) is well-known to be

![]() (19.26)

Given the output of the real-world array ![](), the output of the virtual array ![]() and its sample covariance matrix ![]() are found as ![]() and ![](), respectively. Array processing techniques may then be developed for the virtual array and applied to real-world arrays without explicitly modeling their nonidealities. We note that the virtual array should be designed so that both ![]() and ![]() are of full column-rank. In case the condition ![]() does not lead to a full-rank virtual array covariance matrix, the virtual array should be re-designed. A summary of array interpolation techniques is given in Table 19.4.
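The least-squares design described above can be sketched numerically as follows. This is a minimal illustration under stated assumptions, not the chapter's exact formulation: the convention that the transformation acts as `T.conj().T @ A_cal`, the perturbed line array standing in for calibration measurements, and all variable names are hypothetical choices made for the example.

```python
import numpy as np

def line_array_response(positions, angles, wavelength=1.0):
    """Responses of omnidirectional elements on the x-axis; one column per angle."""
    k = 2 * np.pi / wavelength
    return np.exp(1j * k * np.outer(positions, np.cos(angles)))

rng = np.random.default_rng(0)
N = 8                                                # elements in both arrays (assumed)
virt_pos = 0.5 * np.arange(N)                        # virtual ULA, half-wavelength spacing
real_pos = virt_pos + 0.02 * rng.standard_normal(N)  # hypothetical position errors
gains = 1 + 0.1 * (rng.standard_normal(N) + 1j * rng.standard_normal(N))  # hypothetical gain/phase errors

# Calibration grid restricted to a sector; A_cal stands in for measured data.
cal_angles = np.deg2rad(np.linspace(70, 110, 41))
A_cal = gains[:, None] * line_array_response(real_pos, cal_angles)
A_virt = line_array_response(virt_pos, cal_angles)

# Assumed convention: minimize || T^H A_cal - A_virt ||_F over T,
# i.e. solve A_cal^H T = A_virt^H in the least-squares sense.
T, *_ = np.linalg.lstsq(A_cal.conj().T, A_virt.conj().T, rcond=None)
rel_err = np.linalg.norm(T.conj().T @ A_cal - A_virt) / np.linalg.norm(A_virt)

# Map snapshots of the real-world array to the virtual array.
K = 200
s = (rng.standard_normal(K) + 1j * rng.standard_normal(K)) / np.sqrt(2)
a_src = gains * line_array_response(real_pos, np.deg2rad([85.0]))[:, 0]
noise = 0.05 * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K)))
X = np.outer(a_src, s) + noise          # real-world array output
Y = T.conj().T @ X                      # virtual array output
R_virt = Y @ Y.conj().T / K             # virtual array sample covariance

print(f"relative mapping error in sector: {rel_err:.2e}")
```

Note that the mapped noise is no longer spatially white in general, since it is colored by the transformation; an algorithm applied to `R_virt` may need to account for this.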

The array interpolation technique has two important drawbacks. First, the virtual array, including its configuration, orientation, number of elements, and inter-element spacing, needs to be specified by the designer. Even though this offers some versatility for employing low-complexity DoA estimators or beamforming techniques with arbitrary array configurations (e.g., root-MUSIC algorithm using virtual ULAs), designing virtual arrays is always a heuristic and subjective task. For example, suppose one wants to establish a performance bound such as the widely used Cramér-Rao lower bound (CRB) for a specific real-world array [25]. In order to take into account the array nonidealities it would be appealing to use array calibration measurements and array interpolation techniques for guaranteeing the tightness of such a bound. However, the resulting CRB depends on the choice of the user-specified virtual array configuration and its parameterization, even though the true physical array remains unmodified. Typically, the specified virtual array employed in array interpolation does not provide insight into the performance achievable by an array built in practice.

Second, the quadratic error in the mapping (19.25) is typically very large if one considers the whole range of angles at once, i.e., ![](). In order to reduce such an error, array interpolation techniques typically proceed by dividing the visible region of the real-world array into angular sectors and optimizing a transformation matrix for each sector. If the sensor array is deployed in an environment where the sources are known a priori to be confined to an angular sector, this requirement of array interpolation techniques is not a serious limitation. However, in environments where the sources may span the whole angular region, array interpolation techniques require sector-by-sector processing, which is known to be sensitive to out-of-sector sources [52]. Moreover, a prohibitively large number of sectors may be needed when processing in both azimuth and elevation.
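The difference between full-range and sector-wise mapping can be illustrated with a small numerical experiment. The sketch below is an assumed setup rather than one taken from the chapter: an ideal 8-element UCA plays the role of the real-world array, a virtual ULA with the same number of elements is the target, and the helper names `steering` and `interpolation_error` are hypothetical.

```python
import numpy as np

def steering(xy, angles, wavelength=1.0):
    """Ideal planar-array steering vectors; xy is N x 2, one column per angle."""
    k = 2 * np.pi / wavelength
    directions = np.vstack((np.cos(angles), np.sin(angles)))   # 2 x Q
    return np.exp(1j * k * xy @ directions)

def interpolation_error(A_real, A_virt):
    """Relative least-squares error of mapping A_real onto A_virt with one matrix."""
    T, *_ = np.linalg.lstsq(A_real.conj().T, A_virt.conj().T, rcond=None)
    return np.linalg.norm(T.conj().T @ A_real - A_virt) / np.linalg.norm(A_virt)

N = 8
phi = 2 * np.pi * np.arange(N) / N
radius = 0.5 / (2 * np.sin(np.pi / N))          # half-wavelength spacing between neighbours
uca = radius * np.column_stack((np.cos(phi), np.sin(phi)))
ula = np.column_stack((0.5 * (np.arange(N) - (N - 1) / 2), np.zeros(N)))

full_range = np.deg2rad(np.arange(-180, 180, 1.0))   # all azimuth angles at once
sector = np.deg2rad(np.arange(75, 106, 1.0))         # a 30-degree sector around broadside

err_full = interpolation_error(steering(uca, full_range), steering(ula, full_range))
err_sector = interpolation_error(steering(uca, sector), steering(ula, sector))

print(f"relative error, full range: {err_full:.2e}")
print(f"relative error, one sector: {err_sector:.2e}")
```

A single transformation matrix has only a fixed number of degrees of freedom, so the fit over the whole angular range is expected to be much poorer than the fit over one narrow sector; covering all angles then requires one matrix per sector.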

Array interpolation techniques are typically more flexible than local interpolation of the array calibration matrix. For example, one cannot (in general) employ the ESPRIT algorithm with arbitrary array configurations using (19.22) simply by choosing a “shift-invariant” local basis vector. Typically, the approximation ![]() is not shift-invariant. On the other hand, array interpolation techniques require more design parameters than local interpolation methods. Finally, array interpolation techniques, and to some extent local interpolation methods, typically interpolate exactly all of the measured data including calibration measurement noise. Next, we show that wavefield modeling and manifold separation can be formulated in a model fitting approach in order to minimize the contribution of calibration measurement noise.

3.19.6.3 Wavefield modeling principle and manifold separation technique

The wavefield modeling principle was proposed in the seminal work of Doron and Doron [20–22]. It has been further studied and applied to high-resolution direction finding in [18,19], and extended to vector-fields such as completely polarized electromagnetic wavefields in [53].

Let us first recall some results regarding propagating wavefields and the wave equation. For the sake of clarity, we consider scalar-fields such as acoustic pressure fields, narrowband signals, and drop the carrier term ![](). The extension to completely polarized EM wavefields is briefly described in Section 3.19.8. Let ![]() represent a (scalar) wavefield propagating in the xy-plane and ![]() denote a point in 2-D Euclidean space. The propagating wavefield takes the form of ![]() in the case of P far-field point sources or ![]() in the case of a (far-field) spatially distributed source. Here, ![]() denotes a density function and ![]() is known as the direction vector since it is a function of ![](). Spatially distributed sources may be caused by scattering near the transmitter. Wavefields of time-harmonic nature, i.e., that have a representation in terms of a Fourier integral, may be written as ![](), where ![]() and ![]() denote an orthogonal set of spatial basis functions and the coefficients of the expansion, respectively. An important outcome of such an expansion is that the coefficients ![]() uniquely describe the spatial characteristics of the propagating wavefield, such as the DoAs of the sources generating the wavefield, in addition to the transmitted signals. For example, by letting ![]() denote circular wave functions, the mth wavefield coefficient is given by ![]() for a spatially distributed source and ![]() for P point-sources.
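As a worked illustration of the circular wave function example, the standard Jacobi-Anger identity gives the expansion of a unit-amplitude far-field plane wave. The symbols used below (wavenumber $\kappa$, polar coordinates $(r,\varphi)$ of the observation point, DoAs $\theta_p$, signals $s_p$, angular density $\varrho(\theta)$) as well as the sign and normalization conventions are assumptions made for this sketch, and may differ from those of the chapter by constant factors.

```latex
% Plane wave from DoA \theta observed at the point (r, \varphi):
e^{j\kappa r\cos(\varphi-\theta)}
  = \sum_{m=-\infty}^{\infty} j^{m} J_{m}(\kappa r)\, e^{jm(\varphi-\theta)}
  = \sum_{m=-\infty}^{\infty}
    \underbrace{j^{m} J_{m}(\kappa r)\, e^{jm\varphi}}_{\text{circular wave function}}
    \; e^{-jm\theta}

% Matching terms, the m-th wavefield coefficient becomes
c_m = \sum_{p=1}^{P} s_p\, e^{-jm\theta_p}
      \quad \text{(P point sources)}, \qquad
c_m = \int_{-\pi}^{\pi} \varrho(\theta)\, e^{-jm\theta}\, d\theta
      \quad \text{(spatially distributed source)}
```

Under these assumptions the coefficients are Fourier-type quantities in the angle only, which is consistent with the statement that they describe the DoAs and transmitted signals independently of where the wavefield is observed.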

Let us now assume that the employed real-world array satisfies the superposition principle (see Section 3.19.3). Then, the wavefield modeling principle shows that the array output, at a given frequency, is a linear function of the wavefield coefficients ![](). In particular, after discretization the narrowband array output in (19.1) can be written as

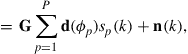

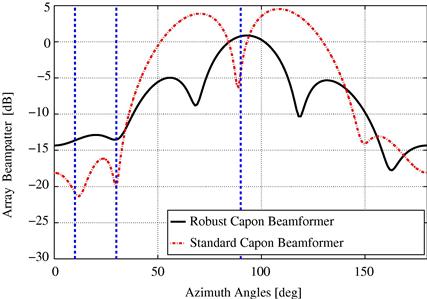

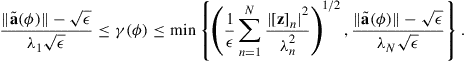

![]() (19.27a)

(19.27b)

where ![]() denotes the so-called array sampling matrix and

denotes the so-called array sampling matrix and ![]() contains the (discretized) wavefield coefficients

contains the (discretized) wavefield coefficients ![]() . Recall that, due to the circular wave basis function employed by the spatial decomposition of the propagating wavefield,

. Recall that, due to the circular wave basis function employed by the spatial decomposition of the propagating wavefield, ![]() in (19.27b) is a Vandermonde vector composed of Fourier basis. Hence,

in (19.27b) is a Vandermonde vector composed of Fourier basis. Hence, ![]() is called basis functions vector. In case of a spatially distributed source, the sum in (19.27a) is replaced by an integral over the angles, and weighted by

is called basis functions vector. In case of a spatially distributed source, the sum in (19.27a) is replaced by an integral over the angles, and weighted by ![]() . The number of coefficients employed in (19.27a and 19.27b) is denoted by

. The number of coefficients employed in (19.27a and 19.27b) is denoted by ![]() . Exact equality in (19.27a and 19.27b) is achieved with

. Exact equality in (19.27a and 19.27b) is achieved with ![]() but in practice a (very) accurate approximation of the array output can be obtained with a relatively small

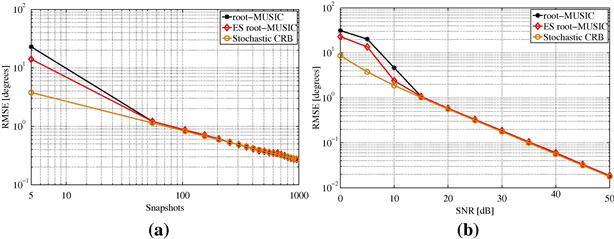

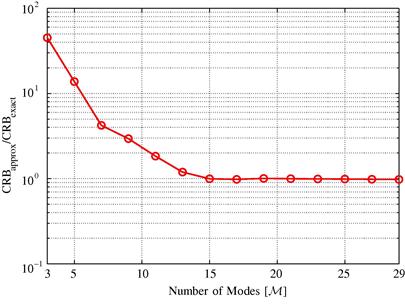

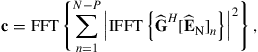

but in practice a (very) accurate approximation of the array output can be obtained with a relatively small ![]() (see Figure 19.7).

(see Figure 19.7).

Figure 19.5 Ideal uniform linear array with an inter-element spacing denoted by d. The smallest sphere (a circle in this case) enclosing the array structure is depicted as well. The concept of smallest sphere provides a measure of the array aperture, including the mounting platform.

Figure 19.6 First three rows of the array sampling matrix, corresponding to the first three elements of the ideal ULA depicted in Figure 19.5. They represent the spatial Fourier coefficients of the first three array elements. The coefficients of the third array element are zero except at ![]() whereas those of the outer elements exhibit the superexponential property.

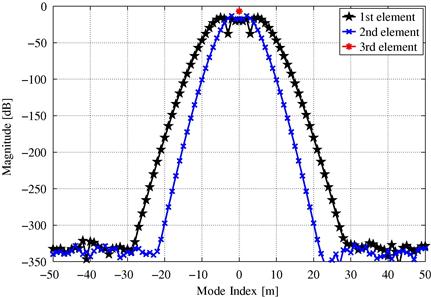

Figure 19.7 In (a) the norm of each column of the array sampling matrix for two ideal ULAs with different inter-element spacing. In (b) the average squared-residual of the manifold separation technique as a function of the number of modes. The saturation floor observed at ![]() is due to the arithmetic precision of MATLAB. The superexponential property of the array sampling matrix is a consequence of the finite aperture of sensor arrays.

The result in (19.27a and 19.27b) shows that the (noise-free) array output can be decomposed into two parts. One, represented by the array sampling matrix, characterizes the employed sensor array and it is independent of the wavefield. The second part, represented by the basis functions vector, characterizes the propagating wavefield and it is independent of the employed sensor array. In fact, a corollary of the wavefield modeling principle shows that the array steering vector may be decomposed as

![]() (19.28)

The result in (19.28) is known as the manifold separation technique [18] and reveals an interesting interpretation for the array sampling matrix. It represents the spatial Fourier spectrum of the array steering vector

![]() (19.29)

where each row of the array sampling matrix contains the spatial Fourier coefficients of each array element. Hence, the array output (at each frequency) can be seen as the product between the spatial Fourier spectrum of the array steering vector and that of the propagating wavefield.
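The decomposition of the steering vector into an array-dependent matrix and an angle-dependent Fourier vector can be checked numerically for an ideal ULA. The sketch below is illustrative only: it assumes omnidirectional elements on the x-axis, uses the Jacobi-Anger identity to write the sampling matrix entries as `j**m * J_m(k * x_n)`, and omits any normalization constant the chapter may use. The names `G`, `D`, `bessel_j`, and `ula_sampling_matrix` are hypothetical.

```python
import numpy as np

def bessel_j(orders, x, n_quad=256):
    """Integer-order Bessel functions J_m(x) from Bessel's integral,
    (1/2pi) * int exp(j(x sin t - m t)) dt, using the periodic trapezoid rule."""
    t = 2 * np.pi * np.arange(n_quad) / n_quad
    integrand = np.exp(1j * (x * np.sin(t)[None, :] - np.outer(orders, t)))
    return integrand.mean(axis=1).real

def ula_sampling_matrix(positions, M, wavelength=1.0):
    """Rows hold the spatial Fourier coefficients of each element, modes -M..M."""
    k = 2 * np.pi / wavelength
    orders = np.arange(-M, M + 1)
    phase = np.exp(1j * np.pi / 2 * orders)          # equals j**m
    G = np.array([phase * bessel_j(orders, k * x) for x in positions])
    return G, orders

positions = 0.5 * (np.arange(5) - 2)                 # 5-element ULA centred at the origin
angles = np.deg2rad(np.arange(0, 360, 5.0))
A_true = np.exp(1j * 2 * np.pi * np.outer(positions, np.cos(angles)))

def residual(M):
    """Average squared error of the truncated product G @ D against A_true."""
    G, orders = ula_sampling_matrix(positions, M)
    D = np.exp(1j * np.outer(orders, angles))        # Vandermonde (Fourier) vectors
    return np.mean(np.abs(A_true - G @ D) ** 2)

res_small, res_large = residual(5), residual(20)
G20, orders20 = ula_sampling_matrix(positions, 20)
centre_row = G20[2]                                  # element located at the origin

print(f"average squared residual, 11 modes: {res_small:.2e}")
print(f"average squared residual, 41 modes: {res_large:.2e}")
```

In this setup the element at the origin has a single nonzero coefficient (mode 0), and the residual drops rapidly once the number of modes exceeds roughly the electrical size of the aperture, in line with the superexponential behaviour described in the captions of Figures 19.6 and 19.7.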

The array sampling matrix fully and uniquely characterizes a given sensor array since it contains the coefficients of an orthogonal spectral decomposition of the corresponding array steering vector. For example, ![]() contains information about the array configuration, sensor beampatterns (gain and phase response), mutual coupling, cross-polarization effects, mounting platform reflections, etc. In short, it contains all the effects that can be represented by the array steering vector ![](). Closed-form expressions for the array sampling matrix for some ideal sensor arrays can be found in [20]. However, ![]() may also be estimated in a non-parametric manner from array calibration measurements, without explicit formulations for the array nonidealities. In particular, the estimated array sampling matrix ![]() obtained as

![]() (19.30a)

![]() (19.30b)

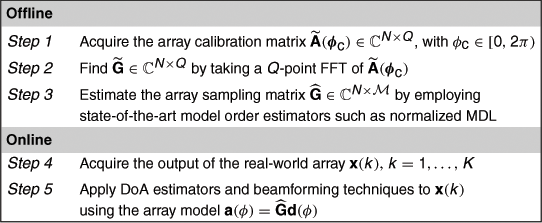

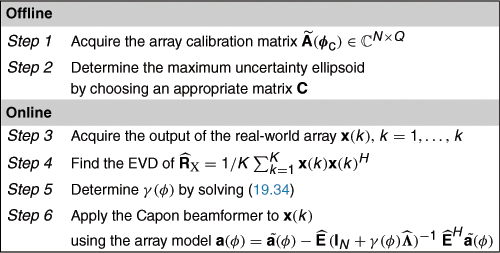

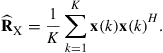

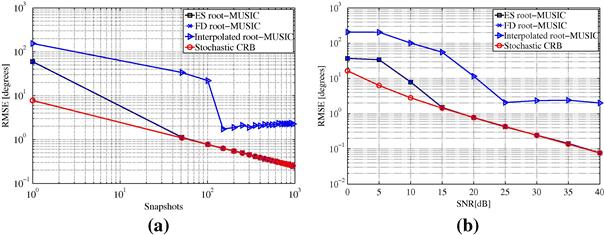

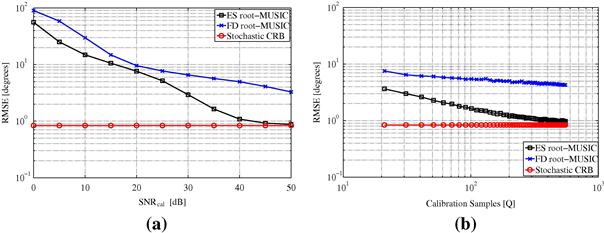

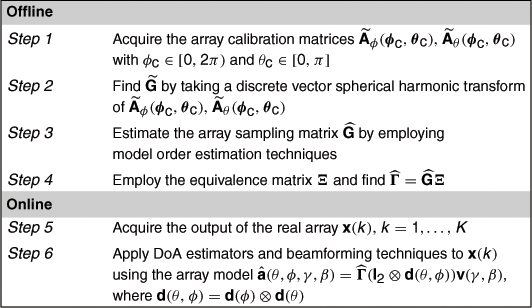

is known as the effective aperture distribution function (EADF) [18,33]. In (19.30), ![]() denotes the unitary discrete Fourier transform (DFT) matrix and