Multiple Linear Regression

Chapter Outline

12-1 Multiple Linear Regression Model

12-1.2 Least Squares Estimation of the Parameters

12-1.3 Matrix Approach to Multiple Linear Regression

12-1.4 Properties of the Least Squares Estimators

12-2 Hypothesis Tests in Multiple Linear Regression

12-2.1 Test for Significance of Regression

12-2.2 Tests on Individual Regression Coefficients and Subsets of Coefficients

12-3 Confidence Intervals in Multiple Linear Regression

12-3.1 Confidence Intervals on Individual Regression Coefficients

12-3.2 Confidence Interval on the Mean Response

12-4 Prediction of New Observations

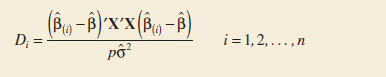

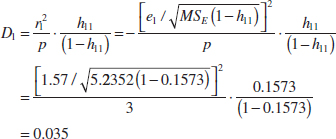

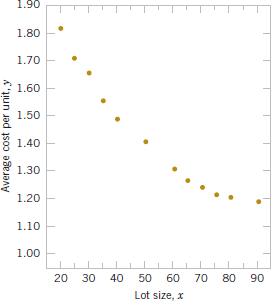

12-5.2 Influential Observations

12-6 Aspects of Multiple Regression Modeling

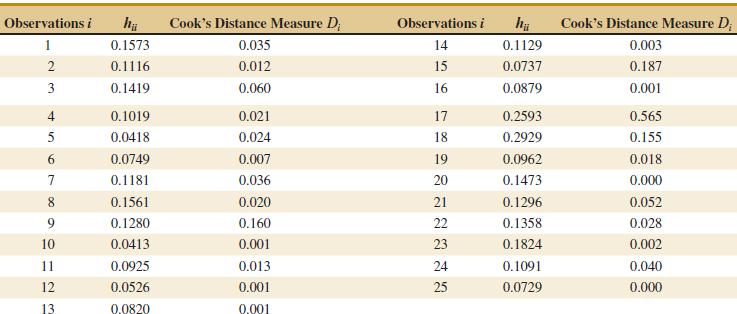

12-6.1 Polynomial Regression Models

12-6.2 Categorical Regressors and Indicator Variables

This chapter generalizes the simple linear regression to a situation that has more than one predictor or regressor variable. This situation occurs frequently in science and engineering; for example, in Chapter 1, we provided data on the pull strength of a wire bond on a semiconductor package and illustrated its relationship to the wire length and the die height. Understanding the relationship between strength and the other two variables may provide important insight to the engineer when the package is designed, or to the manufacturing personnel who assemble the die into the package. We used a multiple linear regression model to relate strength to wire length and die height. There are many examples of such relationships: The life of a cutting tool is related to the cutting speed and the tool angle; patient satisfaction in a hospital is related to patient age, type of procedure performed, and length of stay; and the fuel economy of a vehicle is related to the type of vehicle (car versus truck), engine displacement, horsepower, type of transmission, and vehicle weight. Multiple regression models give insight into the relationships between these variables that can have important practical implications.

In this chapter, we show how to fit multiple linear regression models, perform the statistical tests and confidence procedures that are analogous to those for simple linear regression, and check for model adequacy. We also show how models that have polynomial terms in the regressor variables are just multiple linear regression models. We also discuss some aspects of building a good regression model from a collection of candidate regressors.

![]() Learning Objectives

Learning Objectives

After careful study of this chapter, you should be able to do the following:

- Use multiple regression techniques to build empirical models to engineering and scientific data

- Understand how the method of least squares extends to fitting multiple regression models

- Assess regression model adequacy

- Test hypotheses and construct confidence intervals on the regression coefficients

- Use the regression model to estimate the mean response and to make predictions and to construct confidence intervals and prediction intervals

- Build regression models with polynomial terms

- Use indicator variables to model categorical regressors

- Use stepwise regression and other model building techniques to select the appropriate set of variables for a regression model

12-1 Multiple Linear Regression Model

12-1.1 INTRODUCTION

Many applications of regression analysis involve situations that have more than one regressor or predictor variable. A regression model that contains more than one regressor variable is called a multiple regression model.

As an example, suppose that the gasoline mileage performance of a vehicle depends on the vehicle weight and the engine displacement. A multiple regression model that might describe this relationship is

![]()

where Y represents the mileage, x1 represents the weight, x2 represents the engine displacement, and ![]() is a random error term. This is a multiple linear regression model with two regressors. The term linear is used because Equation 12-1 is a linear function of the unknown parameters β0, β1, and β2.

is a random error term. This is a multiple linear regression model with two regressors. The term linear is used because Equation 12-1 is a linear function of the unknown parameters β0, β1, and β2.

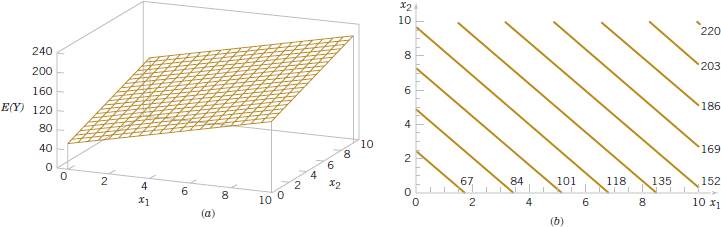

FIGURE 12-1 (a) The regression plane for the model E(Y) = 50 + 10x1 + 7x2. (b) The contour plot.

The regression model in Equation 12-1 describes a plane in the three-dimensional space of Y, x1, and x2. Figure 12-1(a) shows this plane for the regression model

![]()

where we have assumed that the expected value of the error term is zero; that is E(![]() ) = 0. The parameter β0 is the intercept of the plane. We sometimes call β1 and β2 partial regression coefficients because β1 measures the expected change in Y per unit change in x1 when x2 is held constant, and β2 measures the expected change in Y per unit change in x2 when x1 is held constant. Figure 12-1(b) shows a contour plot of the regression model—that is, lines of constant E(Y) as a function of x1 and x2. Notice that the contour lines in this plot are straight lines.

) = 0. The parameter β0 is the intercept of the plane. We sometimes call β1 and β2 partial regression coefficients because β1 measures the expected change in Y per unit change in x1 when x2 is held constant, and β2 measures the expected change in Y per unit change in x2 when x1 is held constant. Figure 12-1(b) shows a contour plot of the regression model—that is, lines of constant E(Y) as a function of x1 and x2. Notice that the contour lines in this plot are straight lines.

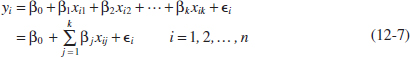

In general, the dependent variable or response Y may be related to k independent or regressor variables. The model

![]()

is called a multiple linear regression model with k regressor variables. The parameters βj, j = 0,1,..., k, are called the regression coefficients. This model describes a hyperplane in the k-dimensional space of the regressor variables {xj}. The parameter βj represents the expected change in response Y per unit change in xj when all the remaining regressors xi(i ≠ j) are held constant.

Multiple linear regression models are often used as approximating functions. That is, the true functional relationship between Y and x1, x2,..., xk is unknown, but over certain ranges of the independent variables, the linear regression model is an adequate approximation.

Models that are more complex in structure than Equation 12-2 may often still be analyzed by multiple linear regression techniques. For example, consider the cubic polynomial model in one regressor variable.

![]()

If we let x1 = x, x2 = x2, x3 = x3, Equation 12-3 can be written as

![]()

which is a multiple linear regression model with three regressor variables.

Models that include interaction effects may also be analyzed by multiple linear regression methods. Interaction effects are very common. For example, a vehicle's mileage may be impacted by an interaction between vehicle weight and engine displacement. An interaction between two variables can be represented by a cross-product term in the model, such as

![]()

If we let x3 = x1x2 and β3 = β12, Equation 12-5 can be written as

![]()

which is a linear regression model.

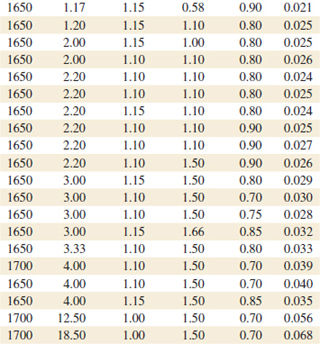

Figure 12-2(a) and (b) shows the three-dimensional plot of the regression model

![]()

and the corresponding two-dimensional contour plot. Notice that, although this model is a linear regression model, the shape of the surface that is generated by the model is not linear. In general, any regression model that is linear in parameters (the β's) is a linear regression model, regardless of the shape of the surface that it generates.

Figure 12-2 provides a nice graphical interpretation of an interaction. Generally, interaction implies that the effect produced by changing one variable (x1, say) depends on the level of the other variable (x2). For example, Fig. 12-2 shows that changing x1 from 2 to 8 produces a much smaller change in E(Y) when x2 = 2 than when x2 = 10. Interaction effects occur frequently in the study and analysis of real-world systems, and regression methods are one of the techniques that we can use to describe them.

As a final example, consider the second-order model with interaction

![]()

If we let x3 = ![]() , x4 =

, x4 = ![]() , x5 = x1x2, β3 = β11, β4 = β22, and β5 = β12, Equation 12-6 can be written as a multiple linear regression model as follows:

, x5 = x1x2, β3 = β11, β4 = β22, and β5 = β12, Equation 12-6 can be written as a multiple linear regression model as follows:

![]()

FIGURE 12-2 (a) Three-dimensional plot of the regression model. (b) The contour plot.

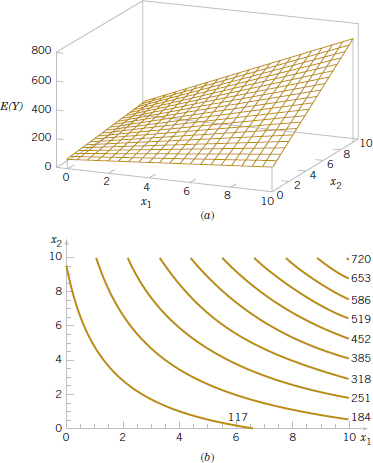

FIGURE 12-3 (a) Three-dimensional plot of the regression model E(Y) = 800 + 10x1 + 7x2 − 8.5![]() − 5

− 5![]() + 4x1x2. (b) The contour plot.

+ 4x1x2. (b) The contour plot.

Figure 12-3 parts (a) and (b) show the three-dimensional plot and the corresponding contour plot for

![]()

These plots indicate that the expected change in Y when xi is changed by one unit (say) is a function of and x2. The quadratic and interaction terms in this model produce a mound-shaped function. Depending on the values of the regression coefficients, the second-order model with interaction is capable of assuming a wide variety of shapes; thus, it is a very flexible regression model.

12-1.2 LEAST SQUARES ESTIMATION OF THE PARAMETERS

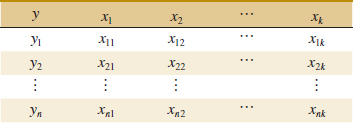

The method of least squares may be used to estimate the regression coefficients in the multiple regression model, Equation 12-2. Suppose that n > k observations are available, and let xij denote the ith observation or level of variable xj. The observations are

![]()

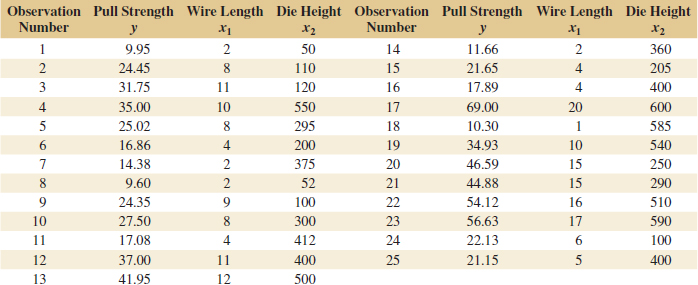

It is customary to present the data for multiple regression in a table such as Table 12-1.

Each observation (xi1, xi2, ..., xik, yi), satisfies the model in Equation 12-2, or

The least squares function is

![]()

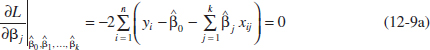

We want to minimize L with respect to β0, β1,..., βk. The least squares estimates of β0, β1,..., βk must satisfy

and

![]()

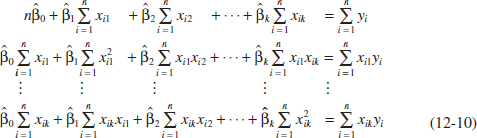

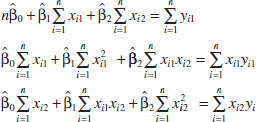

Simplifying Equation 12-9, we obtain the least squares normal equations

![]() TABLE • 12-1 Data for Multiple Linear Regression

TABLE • 12-1 Data for Multiple Linear Regression

Note that there are p = k + 1 normal equations, one for each of the unknown regression coefficients. The solution to the normal equations will be the least squares estimators of the regression coefficients, ![]() 0,

0, ![]() 1,...,

1,...,![]() k. The normal equations can be solved by any method appropriate for solving a system of linear equations.

k. The normal equations can be solved by any method appropriate for solving a system of linear equations.

Example 12-1 Wire Bond Strength In Chapter 1, we used data on pull strength of a wire bond in a semiconductor manufacturing process, wire length, and die height to illustrate building an empirical model. We will use the same data, repeated for convenience in Table 12-2, and show the details of estimating the model parameters. A three-dimensional scatter plot of the data is presented in Fig. 1-15. Figure 12-4 is a matrix of two-dimensional scatter plots of the data. These displays can be helpful in visualizing the relationships among variables in a multivariable data set. For example, the plot indicates that there is a strong linear relationship between strength and wire length.

Specifically, we will fit the multiple linear regression model

![]()

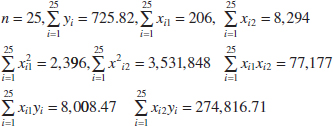

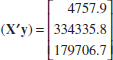

where Y = pull strength, x1 = wire length, and x2 = die height. From the data in Table 12-2, we calculate

For the model Y = β0 + β1x1 + β2x2 + ![]() , the normal Equations 12-10 are

, the normal Equations 12-10 are

![]() TABLE • 12-2 Wire Bond Data for Example 12-1

TABLE • 12-2 Wire Bond Data for Example 12-1

FIGURE 12-4 Matrix of computer-generated scatter plots for the wire bond pull strength data in Table 12-2.

Inserting the computed summations into the normal equations, we obtain

The solution to this set of equations is

![]()

Therefore, the fitted regression equation is

![]()

Practical Interpretation: This equation can be used to predict pull strength for pairs of values of the regressor variables wire length (x1) and die height (x2). This is essentially the same regression model given in Section 1-3. Figure 1-16 shows a three-dimensional plot of the plane of predicted values ![]() generated from this equation.

generated from this equation.

12-1.3 MATRIX APPROACH TO MULTIPLE LINEAR REGRESSION

In fitting a multiple regression model, it is much more convenient to express the mathematical operations using matrix notation. Suppose that there are k regressor varibles and n observations, (xi1, xi2,...,xik, yi), i = 1,2,...,n and that the model relating the regressors to the response is

![]()

This model is a system of n equations that can be expressed in matrix notation as

![]()

where

In general, y is an (n × 1) vector of the observations, X is an (n × p) matrix of the levels of the independent variables (assuming that the intercept is always multiplied by a constant value—unity), β is a (p × 1) vector of the regression coefficients, and ![]() is a (n × 1) vector of random errors. The X matrix is often called the model matrix.

is a (n × 1) vector of random errors. The X matrix is often called the model matrix.

We wish to find the vector of least squares estimators, ![]() , that minimizes

, that minimizes

![]()

The least squares estimator ![]() is the solution for β in the equations

is the solution for β in the equations

![]()

We will not give the details of taking the preceding derivatives; however, the resulting equations that must be solved are

Normal Equations

![]()

Equations 12-12 are the least squares normal equations in matrix form. They are identical to the scalar form of the normal equations given earlier in Equations 12-10. To solve the normal equations, multiply both sides of Equations 12-12 by the inverse of X′X. Therefore, the least squares estimate of β is

Least Squares Estimate of β

![]()

Note that there are p = k + 1 normal equations in p = k + 1 unknowns (the values of ![]() 0,

0, ![]() 1,...,

1,..., ![]() k). Furthermore, the matrix X′X is always nonsingular, as was assumed previously, so the methods described in textbooks on determinants and matrices for inverting these matrices can be used to find (X′X)−1. In practice, multiple regression calculations are almost always performed using a computer.

k). Furthermore, the matrix X′X is always nonsingular, as was assumed previously, so the methods described in textbooks on determinants and matrices for inverting these matrices can be used to find (X′X)−1. In practice, multiple regression calculations are almost always performed using a computer.

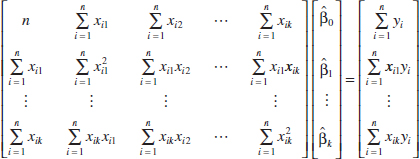

It is easy to see that the matrix form of the normal equations is identical to the scalar form. Writing out Equation 12-12 in detail, we obtain

If the indicated matrix multiplication is performed, the scalar form of the normal equations (that is, Equation 12-10) will result. In this form, it is easy to see that X′X is a (p × p) symmetric matrix and X′y is a (p × 1) column vector. Note the special structure of the X′X matrix. The diagonal elements of X′X are the sums of squares of the elements in the columns of X, and the off-diagonal elements are the sums of cross-products of the elements in the columns of X. Furthermore, note that the elements of X′y are the sums of cross-products of the columns of X and the observations {yi}.

The fitted regression model is

![]()

In matrix notation, the fitted model is

![]()

The difference between the observation yi and the fitted value ![]() i is a residual, say, ei = yi −

i is a residual, say, ei = yi − ![]() i.

i.

The (n × 1) vector of residuals is denoted by

![]()

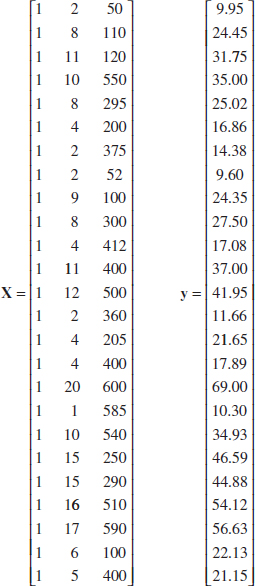

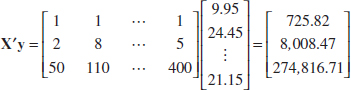

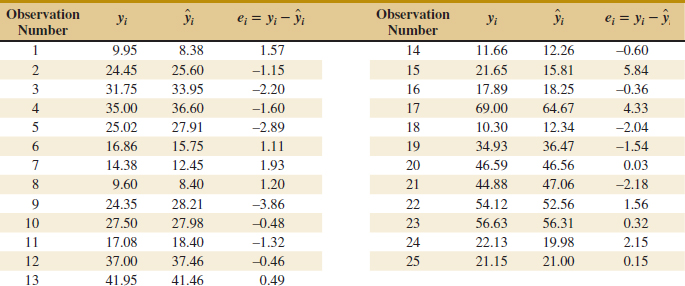

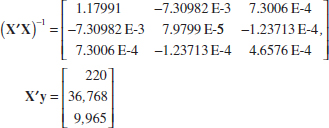

Example 12-2 Wire Bond Strength With Matrix Notation In Example 12-1, we illustrated fitting the multiple regression model

![]()

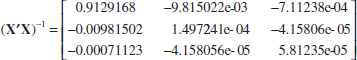

where y is the observed pull strength for a wire bond, x1 is the wire length, and x2 is the die height. The 25 observations are in Table 12-2. We will now use the matrix approach to fit the previous regression model to these data. The model matrix X and y vector for this model are

and the X′y vector is

The least squares estimates are found from Equation 12-13 as

![]()

or

Therefore, the fitted regression model with the regression coefficients rounded to five decimal places is

![]()

This is identical to the results obtained in Example 12-1.

This regression model can be used to predict values of pull strength for various values of wire length (x1) and die height (x2). We can also obtain the fitted values ![]() i by substituting each observation (xi1, xi2), i = 1,2,..., n, into the equation. For example, the first observation has x11 = 2 and x12 = 50, and the fitted value is

i by substituting each observation (xi1, xi2), i = 1,2,..., n, into the equation. For example, the first observation has x11 = 2 and x12 = 50, and the fitted value is

![]()

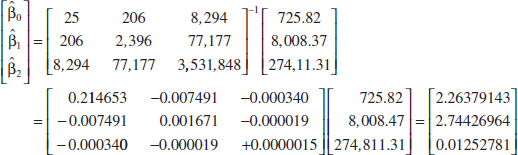

![]() TABLE • 12-3 Observations, Fitted Values, and Residuals for Example 12-2

TABLE • 12-3 Observations, Fitted Values, and Residuals for Example 12-2

The corresponding observed value is y1 = 9.95. The residual corresponding to the first observation is

![]()

Table 12-3 displays all 25 fitted values ![]() i and the corresponding residuals. The fitted values and residuals are calculated to the same accuracy as the original data.

i and the corresponding residuals. The fitted values and residuals are calculated to the same accuracy as the original data.

Computers are almost always used in fitting multiple regression models. See Table 12-4 for some annotated computer output for the least squares regression model for the wire bond pull strength data. The upper part of the table contains the numerical estimates of the regression coefficients. The computer also calculates several other quantities that reflect important information about the regression model. In subsequent sections, we will define and explain the quantities in this output.

Estimating σ2

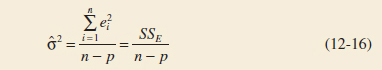

Just as in simple linear regression, it is important to estimate σ2, the variance of the error term ![]() , in a multiple regression model. Recall that in simple linear regression the estimate of σ2 was obtained by dividing the sum of the squared residuals by n − 2. Now there are two parameters in the simple linear regression model, so in multiple linear regression with p parameters, a logical estimator for σ2 is

, in a multiple regression model. Recall that in simple linear regression the estimate of σ2 was obtained by dividing the sum of the squared residuals by n − 2. Now there are two parameters in the simple linear regression model, so in multiple linear regression with p parameters, a logical estimator for σ2 is

Estimator of Variance

![]() TABLE • 12-4 Multiple Regression Output from Software for the Wire Bond Pull Strength Data

TABLE • 12-4 Multiple Regression Output from Software for the Wire Bond Pull Strength Data

This is an unbiased estimator of σ2. Just as in simple linear regression, the estimate of σ2 is usually obtained from the analysis of variance for the regression model. The numerator of Equation 12-16 is called the error or residual sum of squares, and the denominator n − p is called the error or residual degrees of freedom.

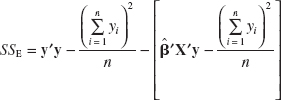

We can find a computing formula for SSE as follows:

![]()

Substituting e = y−![]() = y − X

= y − X![]() into the equation, we obtain

into the equation, we obtain

![]()

Table 12-4 shows that the estimate of σ2 for the wire bond pull strength regression model is ![]() 2 = 115.2/22 = 5.2364. The computer output rounds the estimate to

2 = 115.2/22 = 5.2364. The computer output rounds the estimate to ![]() 2 = 5.2.

2 = 5.2.

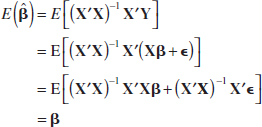

12-1.4 PROPERTIES OF THE LEAST SQUARES ESTIMATORS

The statistical properties of the least squares estimators ![]() 0,

0, ![]() 1,...,

1,..., ![]() k may be easily found under certain assumptions on the error terms ε1, ε2,..., εn, in the regression model. Paralleling the assumptions made in Chapter 11, we assume that the errors εi are statistically independent with mean zero and variance σ2. Under these assumptions, the least squares estimators

k may be easily found under certain assumptions on the error terms ε1, ε2,..., εn, in the regression model. Paralleling the assumptions made in Chapter 11, we assume that the errors εi are statistically independent with mean zero and variance σ2. Under these assumptions, the least squares estimators ![]() 0,

0, ![]() 1,...,

1,..., ![]() k are unbiased estimators of the regression coefficients β0, β1,..., βk. This property may be shown as follows:

k are unbiased estimators of the regression coefficients β0, β1,..., βk. This property may be shown as follows:

because E(![]() ) = 0 and (X′X)−1 X′X = I, the identity matrix. Thus,

) = 0 and (X′X)−1 X′X = I, the identity matrix. Thus, ![]() is an unbiased estimator of β.

is an unbiased estimator of β.

The variances of the ![]() 's are expressed in terms of the elements of the inverse of the X′X matrix. The inverse of X′X times the constant σ2 represents the covariance matrix of the regression coefficients

's are expressed in terms of the elements of the inverse of the X′X matrix. The inverse of X′X times the constant σ2 represents the covariance matrix of the regression coefficients ![]() . The diagonal elements of σ2 (X′X)−1 are the variances of

. The diagonal elements of σ2 (X′X)−1 are the variances of ![]() 0,

0, ![]() 1,...,

1,..., ![]() k, and the off-diagonal elements of this matrix are the covariances. For example, if we have k = 2 regressors, such as in the pull strength problem,

k, and the off-diagonal elements of this matrix are the covariances. For example, if we have k = 2 regressors, such as in the pull strength problem,

which is symmetric (C10 = C01, C20 = C02, and C21 = C12) because (X′X)−1 is symmetric, and we have

In general, the covariance matrix of ![]() is a (p × p) symmetric matrix whose jjth element is the variance of

is a (p × p) symmetric matrix whose jjth element is the variance of ![]() j and whose i, jth element is the covariance between

j and whose i, jth element is the covariance between ![]() i and

i and ![]() j, that is,

j, that is,

![]()

The estimates of the variances of these regression coefficients are obtained by replacing σ2 with an estimate. When σ2 is replaced by its estimate ![]() 2, the square root of the estimated variance of the jth regression coefficient is called the estimated standard error of

2, the square root of the estimated variance of the jth regression coefficient is called the estimated standard error of ![]() j or se(

j or se(![]() j) =

j) = ![]() . These standard errors are a useful measure of the precision of estimation for the regression coefficients; small standard errors imply good precision.

. These standard errors are a useful measure of the precision of estimation for the regression coefficients; small standard errors imply good precision.

Multiple regression computer programs usually display these standard errors. For example, the computer output in Table 12-4 reports se(![]() 0) = 1.060, se(

0) = 1.060, se(![]() 1) = 0.09352, and se(

1) = 0.09352, and se(![]() 2) = 0.002798. The intercept estimate is about twice the magnitude of its standard error, and

2) = 0.002798. The intercept estimate is about twice the magnitude of its standard error, and ![]() 1 and β are considerably larger than se(

1 and β are considerably larger than se(![]() 1) and se(

1) and se(![]() 2). This implies reasonable precision of estimation, although the parameters β1 and β2 are much more precisely estimated than the intercept (this is not unusual in multiple regression).

2). This implies reasonable precision of estimation, although the parameters β1 and β2 are much more precisely estimated than the intercept (this is not unusual in multiple regression).

Exercises FOR SECTION 12-1

![]() Problem available in WileyPLUS at instructor's discretion.

Problem available in WileyPLUS at instructor's discretion.

![]() Go Tutorial Tutoring problem available in WileyPLUS at instructor's discretion.

Go Tutorial Tutoring problem available in WileyPLUS at instructor's discretion.

12.1. Exercise 11.1 described a regression model between percent of body fat (%BF) as measured by immersion and BMI from a study on 250 male subjects. The researchers also measured 13 physical characteristics of each man, including his age (yrs), height (in), and waist size (in).

A regression of percent of body fat with both height and waist as predictors shows the following computer output:

(a) Write out the regression model if

and

(b) Verify that the model found from technology is correct to at least 2 decimal places.

(c) What is the predicted body fat of a man who is 6-ft tall with a 34-in waist?

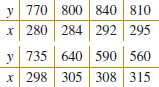

12.2. ![]() A class of 63 students has two hourly exams and a final exam. How well do the two hourly exams predict performance on the final?

A class of 63 students has two hourly exams and a final exam. How well do the two hourly exams predict performance on the final?

The following are some quantities of interest:

(a) Calculate the least squares estimates of the slopes for hourly 1 and hourly 2 and the intercept.

(b) Use the equation of the fitted line to predict the final exam score for a student who scored 70 on hourly 1 and 85 on hourly 2.

(c) If a student who scores 80 on hourly 1 and 90 on hourly 2 gets an 85 on the final, what is her residual?

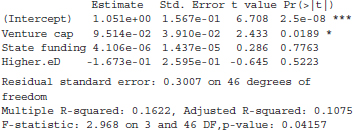

12.3. Can the percentage of the workforce who are engineers in each U.S. state be predicted by the amount of money spent in on higher education (as a percent of gross domestic product), on venture capital (dollars per $1000 of gross domestic product) for high-tech business ideas, and state funding (in dollars per student) for major research universities? Data for all 50 states and a software package revealed the following results:

(a) Write the equation predicting the percent of engineers in the workforce.

(b) For a state that has $1 per $1000 in venture capital, spends $10,000 per student on funding for major research universities, and spends 0.5% of its GDP on higher education, what percent of engineers do you expect to see in the workforce?

(c) If the state in part (b) actually had 1.5% engineers in the workforce, what would the residual be?

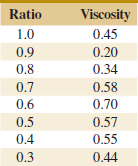

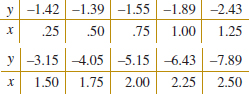

![]() 12-4. Hsuie, Ma, and Tsai (“Separation and Characterizations of Thermotropic Copolyesters of p-Hydroxybenzoic Acid, Sebacic Acid, and Hydroquinone,” (1995, Vol. 56) studied the effect of the molar ratio of sebacic acid (the regressor) on the intrinsic viscosity of copolyesters (the response). The following display presents the data.

12-4. Hsuie, Ma, and Tsai (“Separation and Characterizations of Thermotropic Copolyesters of p-Hydroxybenzoic Acid, Sebacic Acid, and Hydroquinone,” (1995, Vol. 56) studied the effect of the molar ratio of sebacic acid (the regressor) on the intrinsic viscosity of copolyesters (the response). The following display presents the data.

(a) Construct a scatterplot of the data.

(b) Fit a second-order prediction equation.

12-5. A study was performed to investigate the shear strength of soil (y) as it related to depth in feet (x1) and percent of moisture content (x2). Ten observations were collected, and the following summary quantities obtained: n = 10, Σxi1 = 223, Σxi2 = 553, Σyi = 1,916, ![]() = 5,200.9,

= 5,200.9, ![]() = 31,729, Σxi1xi2 = 12,352, Σxi1yi = 43,550.8, Σxi2yi = 104,736.8, and

= 31,729, Σxi1xi2 = 12,352, Σxi1yi = 43,550.8, Σxi2yi = 104,736.8, and ![]() = 371,595.6.

= 371,595.6.

(a) Set up the least squares normal equations for the model Y = β0 + β1x1 + β2x2 + ![]() .

.

(b) Estimate the parameters in the model in part (a).

(c) What is the predicted strength when x1 = 18 feet and x2 = 43%?

12-6. ![]() A regression model is to be developed for predicting the ability of soil to absorb chemical contaminants. Ten observations have been taken on a soil absorption index (y) and two regressors: x1 = amount of extractable iron ore and x2 = amount of bauxite. We wish to fit the model y = β0 + β1x1 + β2x2 +

A regression model is to be developed for predicting the ability of soil to absorb chemical contaminants. Ten observations have been taken on a soil absorption index (y) and two regressors: x1 = amount of extractable iron ore and x2 = amount of bauxite. We wish to fit the model y = β0 + β1x1 + β2x2 + ![]() .

.

Some necessary quantities are:

(a) Estimate the regression coefficients in the model specified.

(b) What is the predicted value of the absorption index y when x1 = 200 and x2 = 50?

12-7. A chemical engineer is investigating how the amount of conversion of a product from a raw material (y) depends on reaction temperature (x1) and the reaction time (x2). He has developed the following regression models:

= 100 + 2x1 + 4x2

= 100 + 2x1 + 4x2 = 95 + 1.5x1 + 3x2 + 2x1x2

= 95 + 1.5x1 + 3x2 + 2x1x2

Both models have been built over the range 0.5 ≤ x2 ≤ 10.

(a) What is the predicted value of conversion when x2 = 2? Repeat this calculation for x2 = 8. Draw a graph of the predicted values for both conversion models. Comment on the effect of the interaction term in model 2.

(b) Find the expected change in the mean conversion for a unit change in temperature x1 for model 1 when x2 = 5. Does this quantity depend on the specific value of reaction time selected? Why?

(c) Find the expected change in the mean conversion for a unit change in temperature x1 for model 2 when x2 = 5. Repeat this calculation for x2 = 2 and x2 = 8. Does the result depend on the value selected for x2? Why?

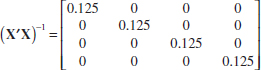

12-8. You have fit a multiple linear regression model and the (X′X)−1 matrix is:

(a) How many regressor variables are in this model?

(b) If the error sum of squares is 307 and there are 15 observations, what is the estimate of σ2?

(c) What is the standard error of the regression coefficient ![]() 1?

1?

![]()

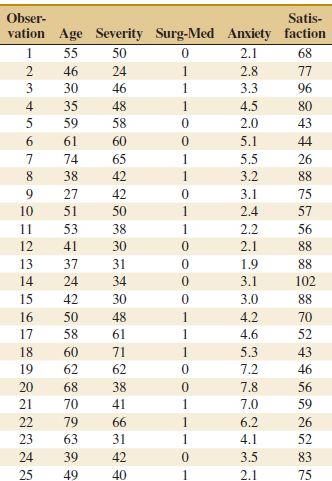

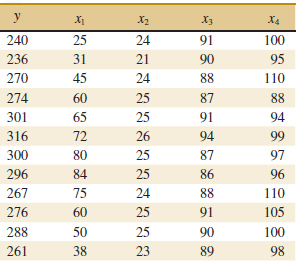

12-9. The data from a patient satisfaction survey in a hospital are in Table E12-1.

![]() TABLE • E12-1 Patient Satisfaction Data

TABLE • E12-1 Patient Satisfaction Data

The regressor variables are the patient's age, an illness severity index (higher values indicate greater severity), an indicator variable denoting whether the patient is a medical patient (0) or a surgical patient (1), and an anxiety index (higher values indicate greater anxiety).

(a) Fit a multiple linear regression model to the satisfaction response using age, illness severity, and the anxiety index as the regressors.

(b) Estimate σ2.

(c) Find the standard errors of the regression coefficients.

(d) Are all of the model parameters estimated with nearly the same precision? Why or why not?

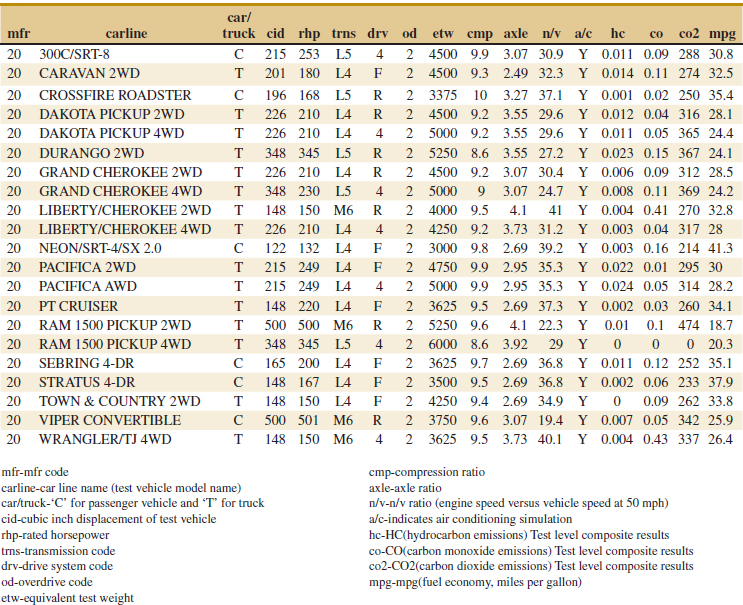

12-10. The electric power consumed each month by a chemical plant is thought to be related to the average ambient temperature (x1), the number of days in the month (x2), the average product purity (x3), and the tons of product produced (x4). The past year's historical data are available and are presented in Table E12-2.

(a) Fit a multiple linear regression model to these data.

(b) Estimate σ2.

(c) Compute the standard errors of the regression coefficients. Are all of the model parameters estimated with the same precision? Why or why not?

(d) Predict power consumption for a month in which x1 = 75°F, x2 = 24 days, x3 = 90%, and x4 = 98 tons.

![]() TABLE • E12-2 Power Consumption Data

TABLE • E12-2 Power Consumption Data

![]()

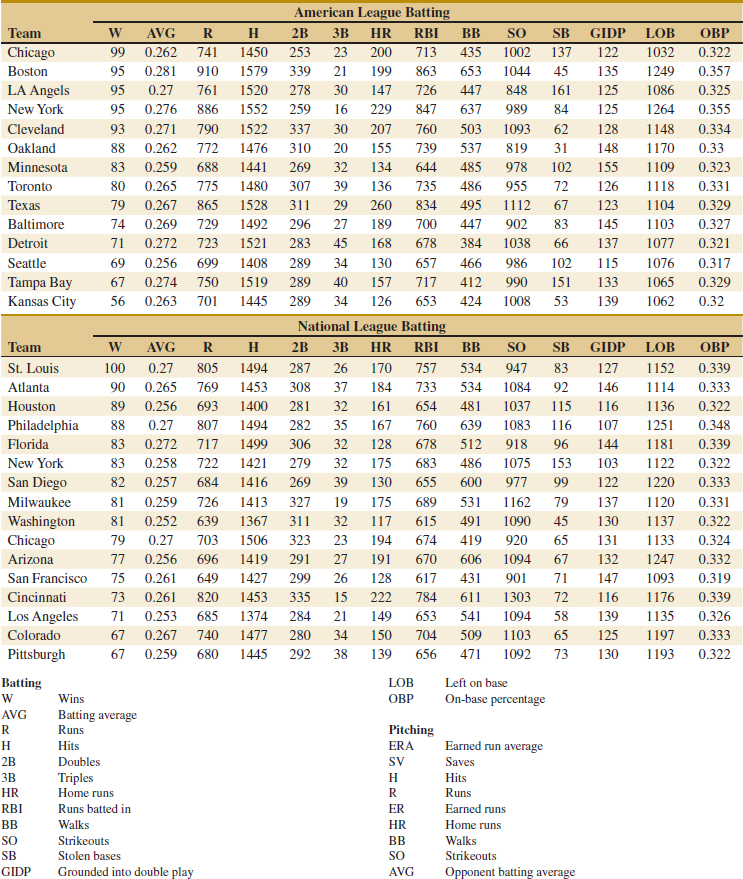

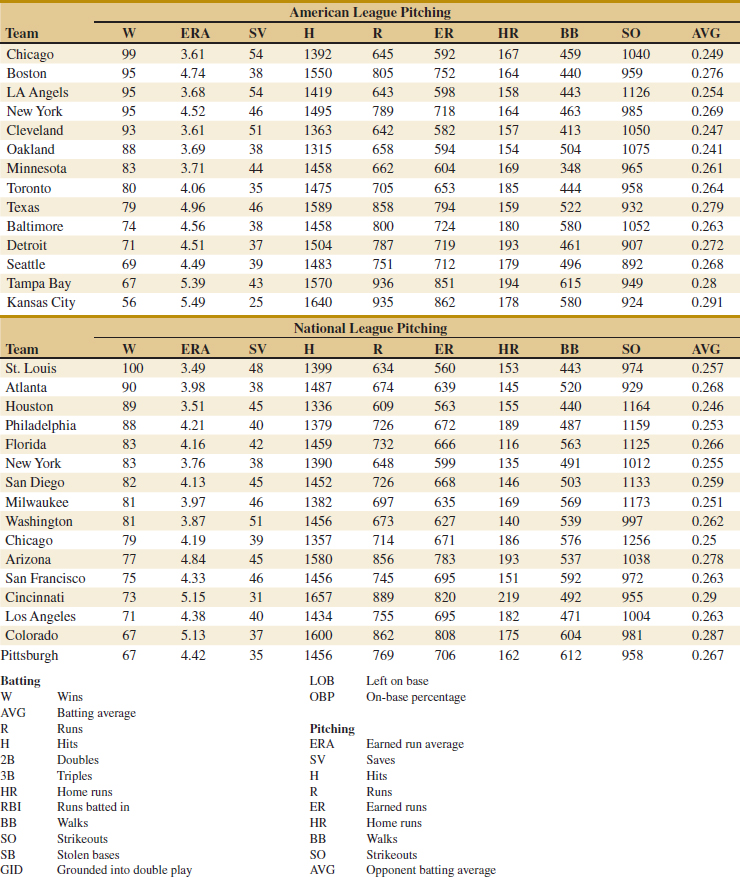

12-11. Table E12-3 provides the highway gasoline mileage test results for 2005 model year vehicles from DaimlerChrysler. The full table of data (available on the book's Web site) contains the same data for 2005 models from over 250 vehicles from many manufacturers (Environmental Protection Agency Web site www.epa.gov/otaq/cert/mpg/testcars/database).

![]() TABLE • E12-3 DaimlerChrysler Fuel Economy and Emissions

TABLE • E12-3 DaimlerChrysler Fuel Economy and Emissions

(a) Fit a multiple linear regression model to these data to estimate gasoline mileage that uses the following regressors:

cid, rhp, etw, cmp, axle, n/v

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Predict the gasoline mileage for the first vehicle in the table.

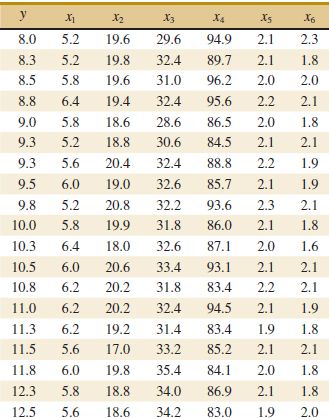

12-12. ![]() The pull strength of a wire bond is an important characteristic. Table E12-4 gives information on pull strength (y), die height (x1), post height (x2), loop height (x3), wire length (x4), bond width on the die (x5), and bond width on the post (x6).

The pull strength of a wire bond is an important characteristic. Table E12-4 gives information on pull strength (y), die height (x1), post height (x2), loop height (x3), wire length (x4), bond width on the die (x5), and bond width on the post (x6).

(a) Fit a multiple linear regression model using x2, x3, x4, and x5 as the regressors

(b) Estimate σ2.

(c) Find the se(![]() j). How precisely are the regression coefficients estimated in your opinion?

j). How precisely are the regression coefficients estimated in your opinion?

(d) Use the model from part (a) to predict pull strength when x2 = 20, x3 = 30, x4 = 90, and x5 = 2.0.

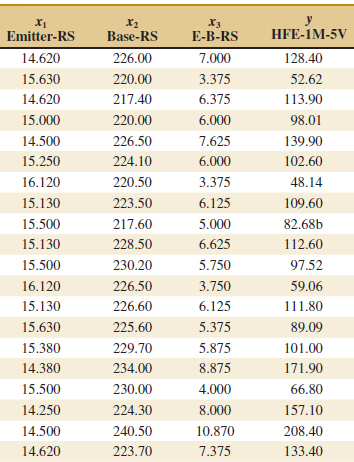

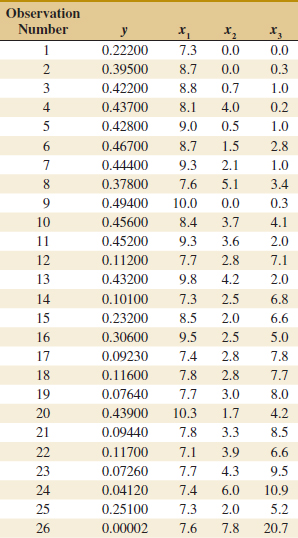

12-13. An engineer at a semiconductor company wants to model the relationship between the device HFE (y) and three parameters: Emitter-RS (x1), Base-RS (x2), and Emitter-to-Base RS (x3). The data are shown in the Table E12-5.

(a) Fit a multiple linear regression model to the data.

(b) Estimate σ2.

(c) Find the standard errors se(![]() j). Are all of the model parameters estimated with the same precision? Justify your answer.

j). Are all of the model parameters estimated with the same precision? Justify your answer.

(d) Predict HFE when x1 = 14.5, x2 = 220, and x3 = 5.0.

![]() TABLE • E12-5 Semiconductor Data

TABLE • E12-5 Semiconductor Data

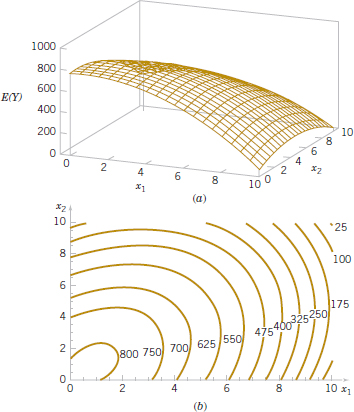

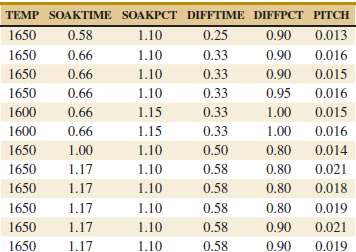

![]()

12-14. Heat treating is often used to carburize metal parts such as gears. The thickness of the carburized layer is considered a crucial feature of the gear and contributes to the overall reliability of the part. Because of the critical nature of this feature, two different lab tests are performed on each furnace load. One test is run on a sample pin that accompanies each load. The other test is a destructive test that cross-sections an actual part. This test involves running a carbon analysis on the surface of both the gear pitch (top of the gear tooth) and the gear root (between the gear teeth). Table E12-6 shows the results of the pitch carbon analysis test for 32 parts.

![]() TABLE • E12-6 Heat Treating Test

TABLE • E12-6 Heat Treating Test

The regressors are furnace temperature (TEMP), carbon concentration and duration of the carburizing cycle (SOAKPCT, SOAKTIME), and carbon concentration and duration of the diffuse cycle (DIFFPCT, DIFFTIME).

(a) Fit a linear regression model relating the results of the pitch carbon analysis test (PITCH) to the five regressor variables.

(b) Estimate σ2.

(c) Find the standard errors se(![]() j)

j)

(d) Use the model in part (a) to predict PITCH when TEMP = 1650, SOAKTIME = 1.00, SOAKPCT = 1.10, DIFFTIME = 1.00, and DIFFPCT = 0.80.

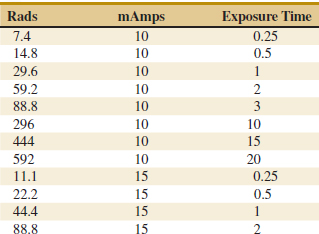

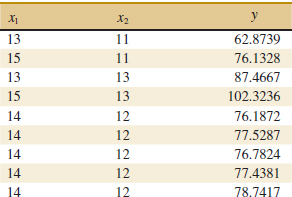

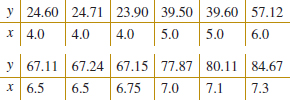

12-15. An article in Electronic Packaging and Production (2002, Vol. 42) considered the effect of X-ray inspection of integrated circuits. The rads (radiation dose) were studied as a function of current (in milliamps) and exposure time (in minutes). The data are in Table E12-7.

![]()

![]() TABLE • E12-7 X-ray Inspection Data

TABLE • E12-7 X-ray Inspection Data

(a) Fit a multiple linear regression model to these data with rads as the response.

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Use the model to predict rads when the current is 15 milliamps and the exposure time is 5 seconds.

![]()

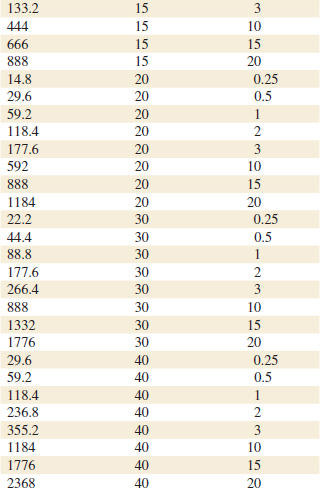

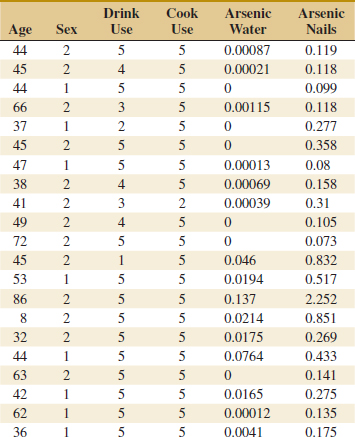

12-16. ![]() An article in Cancer Epidemiology, Biomarkers and Prevention (1996, Vol. 5, pp. 849–852) reported on a pilot study to assess the use of toenail arsenic concentrations as an indicator of ingestion of arsenic-containing water. Twenty-one participants were interviewed regarding use of their private (unregulated) wells for drinking and cooking, and each provided a sample of water and toenail clippings. Table 12-8 showed the data of age (years), sex of person (1 = male, 2 = female), proportion of times household well used for drinking (1 ≤ 1/4,2 = 1/4,3 = 1/2,4 = 3/4,5 ≥ 3/4), proportion of times household well used for cooking (1 ≤ 1/4,2 = 1/4,3 = 1/2,4 = 3/4,5 ≥ 3/4), arsenic in water (ppm), and arsenic in toenails (ppm) respectively.

An article in Cancer Epidemiology, Biomarkers and Prevention (1996, Vol. 5, pp. 849–852) reported on a pilot study to assess the use of toenail arsenic concentrations as an indicator of ingestion of arsenic-containing water. Twenty-one participants were interviewed regarding use of their private (unregulated) wells for drinking and cooking, and each provided a sample of water and toenail clippings. Table 12-8 showed the data of age (years), sex of person (1 = male, 2 = female), proportion of times household well used for drinking (1 ≤ 1/4,2 = 1/4,3 = 1/2,4 = 3/4,5 ≥ 3/4), proportion of times household well used for cooking (1 ≤ 1/4,2 = 1/4,3 = 1/2,4 = 3/4,5 ≥ 3/4), arsenic in water (ppm), and arsenic in toenails (ppm) respectively.

(a) Fit a multiple linear regression model using arsenic concentration in nails as the response and age, drink use, cook use, and arsenic in the water as the regressors.

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Use the model to predict the arsenic in nails when the age is 30, the drink use is category 5, the cook use is category 5, and arsenic in the water is 0.135 ppm.

![]() 12-17. An article in IEEE Transactions on Instrumentation and Measurement (2001, Vol. 50, pp. 2033–2040) reported on a study that had analyzed powdered mixtures of coal and limestone for permittivity. The errors in the density measurement was the response. The data are reported in Table E12-9.

12-17. An article in IEEE Transactions on Instrumentation and Measurement (2001, Vol. 50, pp. 2033–2040) reported on a study that had analyzed powdered mixtures of coal and limestone for permittivity. The errors in the density measurement was the response. The data are reported in Table E12-9.

(a) Fit a multiple linear regression model to these data with the density as the response.

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Use the model to predict the density when the dielectric constant is 2.5 and the loss factor is 0.03.

![]() 12-18. An article in Biotechnology Progress (2001, Vol. 17, pp. 366–368) reported on an experiment to investigate and optimize nisin extraction in aqueous two-phase systems (ATPS). The nisin recovery was the dependent variable (y). The two regressor variables were concentration (%) of PEG 4000 (denoted as x1 and concentration (%) of Na2SO4 (denoted as x2). The data are in Table E12-10.

12-18. An article in Biotechnology Progress (2001, Vol. 17, pp. 366–368) reported on an experiment to investigate and optimize nisin extraction in aqueous two-phase systems (ATPS). The nisin recovery was the dependent variable (y). The two regressor variables were concentration (%) of PEG 4000 (denoted as x1 and concentration (%) of Na2SO4 (denoted as x2). The data are in Table E12-10.

![]() TABLE • E12-10 Nisin Extraction Data

TABLE • E12-10 Nisin Extraction Data

(a) Fit a multiple linear regression model to these data.

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Use the model to predict the nisin recovery when x1 = 14.5 and x2 = 12.5.

![]()

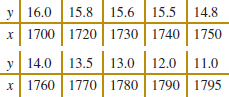

12-19. ![]() An article in Optical Engineering [“Operating Curve Extraction of a Correlator's Filter” (2004, Vol. 43, pp. 2775–2779)] reported on the use of an optical correlator to perform an experiment by varying brightness and contrast. The resulting modulation is characterized by the useful range of gray levels. The data follow:

An article in Optical Engineering [“Operating Curve Extraction of a Correlator's Filter” (2004, Vol. 43, pp. 2775–2779)] reported on the use of an optical correlator to perform an experiment by varying brightness and contrast. The resulting modulation is characterized by the useful range of gray levels. The data follow:

![]()

(a) Fit a multiple linear regression model to these data.

(b) Estimate σ2.

(c) Compute the standard errors of the regression coefficients.

(d) Predict the useful range when brightness = 80 and contrast = 75.

![]()

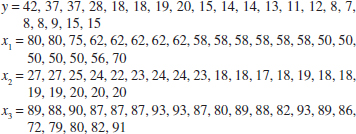

12-20. An article in Technometrics (1974, Vol. 16, pp. 523–531) considered the following stack-loss data from a plant oxidizing ammonia to nitric acid. Twenty-one daily responses of stack loss (the amount of ammonia escaping) were measured with air flow x1, temperature x2, and acid concentration x3.

(a) Fit a linear regression model relating the results of the stack loss to the three regressor varilables.

(b) Estimate σ2.

(c) Find the standard error se(![]() j).

j).

(d) Use the model in part (a) to predict stack loss when x1 = 60, x2 = 26, and x3 = 85.

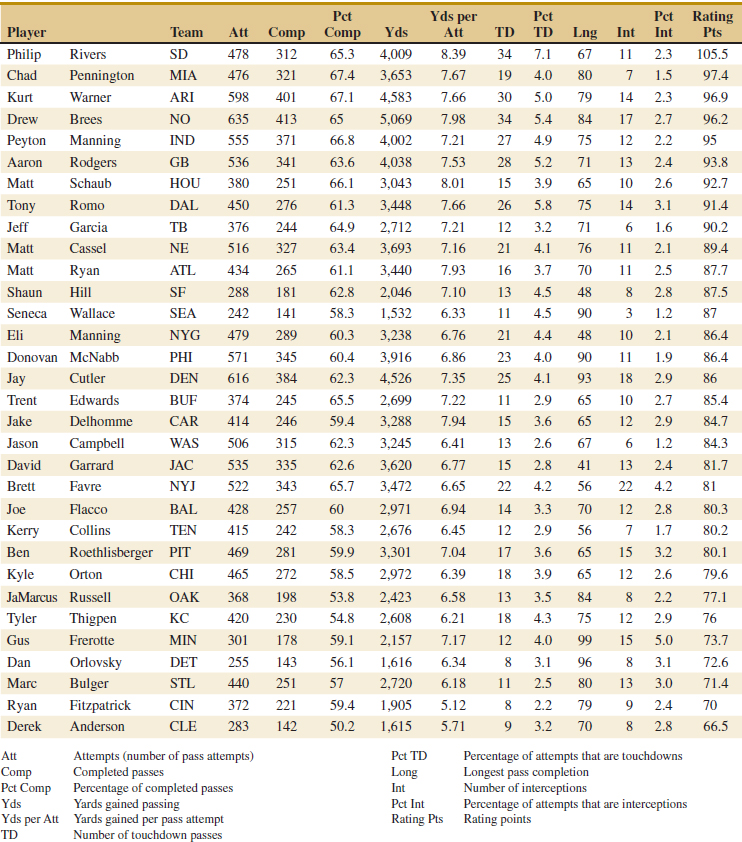

![]() 12-21. Table E12-11 presents quarterback ratings for the 2008 National Football League season (The Sports Network).

12-21. Table E12-11 presents quarterback ratings for the 2008 National Football League season (The Sports Network).

(a) Fit a multiple regression model to relate the quarterback rating to the percentage of completions, the percentage of TDs, and the percentage of interceptions.

(b) Estimate σ2.

(c) What are the standard errors of the regression coefficients?

(d) Use the model to predict the rating when the percentage of completions is 60%, the percentage of TDs is 4%, and the percentage of interceptions is 3%.

![]() TABLE • E12-11 Quarterback Ratings for the 2008 National Football League Season

TABLE • E12-11 Quarterback Ratings for the 2008 National Football League Season

![]() 12-22. Table E12-12 presents statistics for the National Hockey League teams from the 2008–2009 season (The Sports Network). Fit a multiple linear regression model that relates wins to the variables GF through FG Because teams play 82 game, W = 82 − L − T − OTL, but such a model does not help build a better team. Estimate σ2 and find the standard errors of the regression coefficients for your model.

12-22. Table E12-12 presents statistics for the National Hockey League teams from the 2008–2009 season (The Sports Network). Fit a multiple linear regression model that relates wins to the variables GF through FG Because teams play 82 game, W = 82 − L − T − OTL, but such a model does not help build a better team. Estimate σ2 and find the standard errors of the regression coefficients for your model.

![]() TABLE • E12-12 Team Statistics for the 2008–2009 National Hockey League Season

TABLE • E12-12 Team Statistics for the 2008–2009 National Hockey League Season

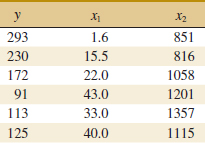

12-23. A study was performed on wear of a bearing and its relationship to x1 = oil viscosity and x2 = load. The following data were obtained.

(a) Fit a multiple linear regression model to these data.

(b) Estimate σ2 and the standard errors of the regression coefficients.

(c) Use the model to predict wear when x1 = 25 and x2 = 1000.

(d) Fit a multiple linear regression model with an interaction term to these data.

(e) Estimate σ2 and se(![]() j) for this new model. How did these quantities change? Does this tell you anything about the value of adding the interaction term to the model?

j) for this new model. How did these quantities change? Does this tell you anything about the value of adding the interaction term to the model?

(f) Use the model in part (d) to predict when x1 = 25 and x2 = 1000. Compare this prediction with the predicted value from part (c).

12-24. Consider the linear regression model

![]()

where ![]() 1 = Σxi1 / n and

1 = Σxi1 / n and ![]() 2 = Σxi2/n.

2 = Σxi2/n.

(a) Write out the least squares normal equations for this model.

(b) Verify that the least squares estimate of the intercept in this model is ![]() 0′ = Σyi / n =

0′ = Σyi / n = ![]() .

.

(c) Suppose that we use yi − ![]() as the response variable in this model. What effect will this have on the least squares estimate of the intercept?

as the response variable in this model. What effect will this have on the least squares estimate of the intercept?

12-2 Hypothesis Tests In Multiple Linear Regression

In multiple linear regression problems, certain tests of hypotheses about the model parameters are useful in measuring model adequacy. In this section, we describe several important hypothesis-testing procedures. As in the simple linear regression case, hypothesis testing requires that the error terms ![]() i in the regression model are normally and independently distributed with mean zero and variance σ2.

i in the regression model are normally and independently distributed with mean zero and variance σ2.

12-2.1 TEST FOR SIGNIFICANCE OF REGRESSION

The test for significance of regression is a test to determine whether a linear relationship exists between the response variable y and a subset of the regressor variables x1, x2,..., xk. The appropriate hypotheses are

Hypotheses for ANOVA Test

Rejection of H0:β1 = β2 = ··· = βk = 0 implies that at least one of the regressor variables x1, x2,..., xk contributes significantly to the model.

The test for significance of regression is a generalization of the procedure used in simple linear regression. The total sum of squares SST is partitioned into a sum of squares due to the model or to regression and a sum of squares due to error, say,

![]()

Now if H0: β1 = β2 = ··· = βk = 0 is true, SSR/σ2 is a chi-square random variable with k degrees of freedom. Note that the number of degrees of freedom for this chi-square random variable is equal to the number of regressor variables in the model. We can also show that the SSE / σ2 is a chi-square random variable with n − p degrees of freedom, and that SSE and SSR are independent. The test statistic for H0: β1 = β2 = ··· = βk = 0 is

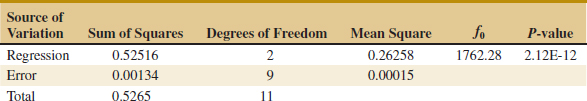

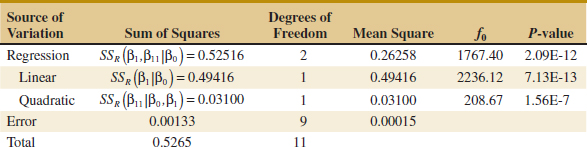

![]() TABLE • 12-5 Analysis of Variance for Testing Significance of Regression in Multiple Regression

TABLE • 12-5 Analysis of Variance for Testing Significance of Regression in Multiple Regression

Test Statistic for ANOVA

We should reject H0 if the computed value of the test statistic in Equation 12-19, f0, is greater than fα,k,n−p. The procedure is usually summarized in an analysis of variance table such as Table 12-5.

A computational formula for SSR may be found easily. Now because SST = ![]() −

− ![]() /n = y′y −

/n = y′y − ![]() /n, we may rewrite Equation 12-19 as

/n, we may rewrite Equation 12-19 as

or

![]()

Therefore, the regression sum of squares is

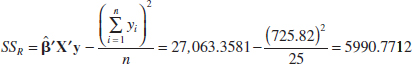

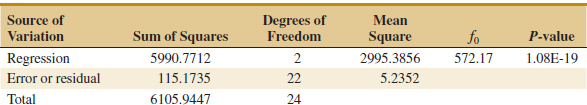

Example 12-3 Wire Bond Strength ANOVA We will test for significance of regression (with α = 0.05) using the wire bond pull strength data from Example 12-1. The total sum of squares is

The regression or model sum of squares is computed from Equation 12-21 as follows:

and by subtraction

![]()

The analysis of variance is shown in Table 12-6. To test H0: β1 = β2 = 0, we calculate the statistic

![]()

![]() TABLE • 12-6 Test for Significance of Regression for Example 12-3

TABLE • 12-6 Test for Significance of Regression for Example 12-3

Because f0 > f0.05,2,22 = 3.44 (or because the P-value is considerably smaller than α = 0.05), we reject the null hypothesis and conclude that pull strength is linearly related to either wire length or die height, or both.

Practical Interpretation: Rejection of H0 does not necessarily imply that the relationship found is an appropriate model for predicting pull strength as a function of wire length and die height. Further tests of model adequacy are required before we can be comfortable using this model in practice.

Most multiple regression computer programs provide the test for significance of regression in their output display. The middle portion of Table 12-4 is the computer output for this example. Compare Tables 12-4 and 12-6 and note their equivalence apart from rounding. The P-value is rounded to zero in the computer output.

R2 and Adjusted R2

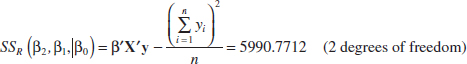

We may also use the coefficient of multiple determination R2 as a global statistic to assess the fit of the model. Computationally,

![]()

For the wire bond pull strength data, we find that R2 = SSR / SST = 5990.7712 / 6105.9447 = 0.9811. Thus, the model accounts for about 98% of the variability in the pull strength response (refer to the computer software output in Table 12-4). The R2 statistic is somewhat problematic as a measure of the quality of the fit for a multiple regression model because it never decreases when a variable is added to a model.

To illustrate, consider the model fit to the wire bond pull strength data in Example 11-8. This was a simple linear regression model with x1 = wire length as the regressor. The value of R2 for this model is R2 = 0.9640. Therefore, adding x2 = die height to the model increases R2 by 0.9811 − 0.9640 = 0.0171, a very small amount. Because R2 can never decrease when a regressor is added, it can be difficult to judge whether the increase is telling us anything useful about the new regressor. It is particularly hard to interpret a small increase, such as observed in the pull strength data.

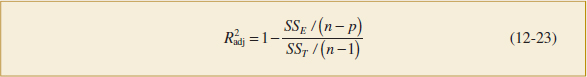

Many regression users prefer to use an adjusted R2 statistic:

Adjusted R2

Because SSE /(n − p) is the error or residual mean square and SST /(n − p) is a constant, ![]() will only increase when a variable is added to the model if the new variable reduces the error mean square. Note that for the multiple regression model for the pull strength data

will only increase when a variable is added to the model if the new variable reduces the error mean square. Note that for the multiple regression model for the pull strength data ![]() = 0.979 (see the output in Table 12-4), whereas in Example 11-8, the adjusted R2 for the one-variable model is

= 0.979 (see the output in Table 12-4), whereas in Example 11-8, the adjusted R2 for the one-variable model is ![]() = 0.962. Therefore, we would conclude that adding x2 = die height to the model does result in a meaningful reduction in unexplained variability in the response.

= 0.962. Therefore, we would conclude that adding x2 = die height to the model does result in a meaningful reduction in unexplained variability in the response.

The adjusted R2 statistic essentially penalizes the analyst for adding terms to the model. It is an easy way to guard against overfitting, that is, including regressors that are not really useful. Consequently, it is very useful in comparing and evaluating competing regression models. We will use ![]() for this when we discuss variable selection in regression in Section 12-6.3.

for this when we discuss variable selection in regression in Section 12-6.3.

12-2.2 TESTS ON INDIVIDUAL REGRESSION COEFFICIENTS AND SUBSETS OF COEFFICIENTS

We are frequently interested in testing hypotheses on the individual regression coefficients. Such tests would be useful in determining the potential value of each of the regressor variables in the regression model. For example, the model might be more effective with the inclusion of additional variables or perhaps with the deletion of one or more of the regressors presently in the model.

The hypothesis to test if an individual regression coefficient, say βj equals a value βj0 is

![]()

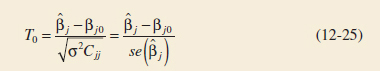

The test statistic for this hypothesis is

where Cjj is the diagonal element of (X′X)−1 corresponding to ![]() j Notice that the denominator of Equation 12-24 is the standard error of the regression coefficient

j Notice that the denominator of Equation 12-24 is the standard error of the regression coefficient ![]() j. The null hypothesis H0: βj = βj0 is rejected if |t0|>tα/2,n−p. This is called a partial or marginal test because the regression coefficient

j. The null hypothesis H0: βj = βj0 is rejected if |t0|>tα/2,n−p. This is called a partial or marginal test because the regression coefficient ![]() j depends on all the other regressor variables xi(i ≠ j) that are in the model. More will be said about this in the following example.

j depends on all the other regressor variables xi(i ≠ j) that are in the model. More will be said about this in the following example.

An important special case of the previous hypothesis occurs for βj = 0. If H0: βj = 0 is not rejected, this indicates that the regressor xj can be deleted from the model. Adding a variable to a regression model always causes the sum of squares for regression to increase and the error sum of squares to decrease (this is why R2 always increases when a variable is added). We must decide whether the increase in the regression sum of squares is large enough to justify using the additional variable in the model. Furthermore, adding an unimportant variable to the model can actually increase the error mean square, indicating that adding such a variable has actually made the model a poorer fit to the data (this is why ![]() is a better measure of global model fit then the ordinary R2).

is a better measure of global model fit then the ordinary R2).

Example 12-4Wire Bond Strength Coefficient Test Consider the wire bond pull strength data, and suppose that we want to test the hypothesis that the regression coefficient for x2 (die height) is zero. The hypotheses are

![]()

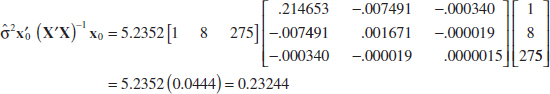

The main diagonal element of the (X′X)−1 matrix corresponding to ![]() 2 is C22 = 0.0000015, so the t-statistic in Equation 12-25 is

2 is C22 = 0.0000015, so the t-statistic in Equation 12-25 is

![]()

Note that we have used the estimate of σ2 reported to four decimal places in Table 12-6. Because t0.025,22 = 2.074, we reject H0: β2 = 0 and conclude that the variable x2 (die height) contributes significantly to the model. We could also have used a P-value to draw conclusions. The P-value for t0 = 4.477 is P = 0.0002, so with α = 0.05, we would reject the null hypothesis.

Practical Interpretation: Note that this test measures the marginal or partial contribution of x2 given that x1 is in the model. That is, the t-test measures the contribution of adding the variable x2 = die height to a model that already contains x1 = wire length. Table 12-4 shows the computer-generated value of the t-test computed. The computer software reports the t-test statistic to two decimal places. Note that the computer produces a t-test for each regression coefficient in the model. These t-tests indicate that both regressors contribute to the model.

Example 12-5 Wire Bond Strength One-Sided Coefficient Test There is an interest in the effect of die height on strength. This can be evaluated by the magnitude of the coefficient for die height. To conclude that the coefficient for die height exceeds 0.01, the hypotheses become

![]()

For such a test, computer software can complete much of the hard work. We need only to assemble the pieces. From the output in Table 12-4, ![]() 2 = 0.012528, and the standard error of

2 = 0.012528, and the standard error of ![]() 2 = 0.002798. Therefore, the t-statistic is

2 = 0.002798. Therefore, the t-statistic is

![]()

with 22 degrees of freedom (error degrees of freedom). From Table IV in Appendix A, t0.25,22 = 0.686 and t0.1,22 = 1.321. Therefore, the P-value can be bounded as 0.1 < P-value < 0.25. One cannot conclude that the coefficient exceeds 0.01 at common levels of significance.

There is another way to test the contribution of an individual regressor variable to the model. This approach determines the increase in the regression sum of squares obtained by adding a variable xj(say) to the model, given that other variables xi(i ≠ j) are already included in the regression equation.

The procedure used to do this is called the general regression significance test, or the extra sum of squares method. This procedure can also be used to investigate the contribution of a subset of the regressor variables to the model. Consider the regression model with k regressor variables

![]()

where y is (n × 1), X is (n × p), β is (p × 1), ![]() is (n × 1), and p = k + 1. We would like to determine whether the subset of regressor variables x1, x2,..., xr(r < k) as a whole contributes significantly to the regression model. Let the vector of regression coefficients be partitioned as follows:

is (n × 1), and p = k + 1. We would like to determine whether the subset of regressor variables x1, x2,..., xr(r < k) as a whole contributes significantly to the regression model. Let the vector of regression coefficients be partitioned as follows:

![]()

where β1 is (r × 1) and β2 is [(p − r) × 1]. We wish to test the hypotheses

Hypotheses for General Regression Test

![]()

where 0 denotes a vector of zeroes. The model may be written as

![]()

where X1 represents the columns of X associated with β1 and X2 represents the columns of X associated with β2.

For the full model (including both β1 and β2), we know that ![]() = (X′X)−1 X′y. In addition, the regression sum of squares for all variables including the intercept is

= (X′X)−1 X′y. In addition, the regression sum of squares for all variables including the intercept is

![]()

and

![]()

SSR(β) is called the regression sum of squares due to β. To find the contribution of the terms in β1 to the regression, fit the model assuming that the null hypothesis H0: β1 = 0 to be true. The reduced model is found from Equation 12-29 as

![]()

The least squares estimate of β2 is β2 = (X2′X2)−1 X2′ y, and

![]()

The regression sum of squares due to β1 given that β2 is already in the model is

![]()

The Extra Sum of Squares

This sum of squares has r degrees of freedom. It is sometimes called the extra sum of squares due to β1. Note that SSR(β1|β2) is the increase in the regression sum of squares due to including the variables x1, x2,..., xr in the model. Now SSR(β1|β2) is independent of MSE, and the null hypothesis β1 = 0 may be tested by the statistic.

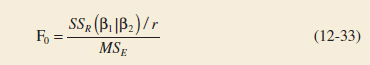

F Statistic for General Regression Tests

If the computed value of the test statistic f0 > fα,r,n−p, we reject H0, concluding that at least one of the parameters in β1 is not zero and, consequently, at least one of the variables x1, x2,..., xr in X1 contributes significantly to the regression model. Some authors call the test in Equation 12-33 a partial F-test.

The partial F-test is very useful. We can use it to measure the contribution of each individual regressor xj as if it were the last variable added to the model by computing

![]()

This is the increase in the regression sum of squares due to adding xj to a model that already includes x1,...,xj−1, xj+1,...,xk. The partial F-test is a more general procedure in that we can measure the effect of sets of variables. In Section 12-6.3, we show how the partial F-test plays a major role in model building—that is, in searching for the best set of regressor variables to use in the model.

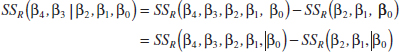

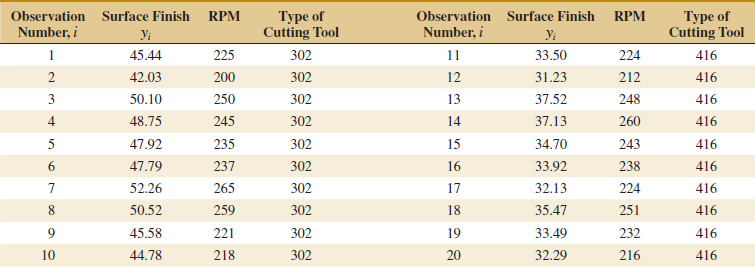

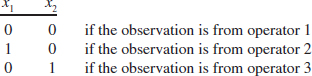

Example 12-6 Wire Bond Strength General Regression Test Consider the wire bond pull-strength data in Example 12-1. We will investigate the contribution of two new variables, x3 and x4, to the model using the partial F-test approach. The new variables are explained at the end of this example. That is, we wish to test

To test this hypothesis, we need the extra sum of squares due to β3 and β4 or

In Example 12-3, we calculated

Also, Table 12-4 shows the computer output for the model with only x1 and x2 as predictors. In the analysis of variance table, we can see that SSR = 5990.8, and this agrees with our calculation. In practice, the computer output would be used to obtain this sum of squares.

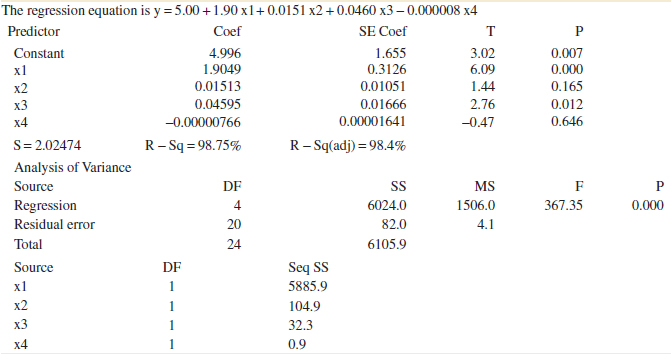

If we fit the model Y = β0 + β1x1 + β2x2 + β3x3 + β4x4, we can use the same matrix formula. Alternatively, we can look at SSR from computer output for this model. The analysis of variance table for this model is shown in Table 12-7 and we see that

![]()

Therefore,

![]()

This is the increase in the regression sum of squares due to adding x3 and x4 to a model already containing x1 and x2. To test H0, calculate the test statistic

![]()

Note that MSE from the full model using x1, x2, x3 and x4 is used in the denominator of the test statistic. Because f0.05,2, 20 = 3.49, we reject H0 and conclude that at least one of the new variables contributes significantly to the model. Further analysis and tests will be needed to refine the model and determine whether one or both of x3 and x4 are important.

![]() TABLE • 12-7 Regression Analysis: y versus x1, x2, x3, x4

TABLE • 12-7 Regression Analysis: y versus x1, x2, x3, x4

The mystery of the new variables can now be explained. These are quadratic powers of the original predictors of wire length and wire height. That is, x3 = ![]() and x4 =

and x4 = ![]() . A test for quadratic terms is a common use of partial F-tests. With this information and the original data for x1 and x2, we can use computer software to reproduce these calculations. Multiple regression allows models to be extended in such a simple manner that the real meaning of x3 and x4 did not even enter into the test procedure. Polynomial models such as this are discussed further in Section 12-6.

. A test for quadratic terms is a common use of partial F-tests. With this information and the original data for x1 and x2, we can use computer software to reproduce these calculations. Multiple regression allows models to be extended in such a simple manner that the real meaning of x3 and x4 did not even enter into the test procedure. Polynomial models such as this are discussed further in Section 12-6.

If a partial F-test is applied to a single variable, it is equivalent to a t-test. To see this, consider the computer software regression output for the wire bond pull strength in Table 12-4. Just below the analysis of variance summary in this table, the quantity labeled “‘SeqSS”’ shows the sum of squares obtained by fitting x1 alone (5885.9) and the sum of squares obtained by fitting x2 after x1 (104.9). In out notation, these are referred to as SSR(β1|β0) and SSR(β2, β1|β0), respectively. Therefore, to test H0: β2 = 0, H1: β2 ≠ 0, the partial F-test is

![]()

where MSE is the mean square for residual in the computer output in Table 12-4. This statistic should be compared to an F-distribution with 1 and 22 degrees of freedom in the numerator and denominator, respectively. From Table 12-4, the t-test for the same hypothesis is t0 = 4.48. Note that ![]() = 4.482 = 20.07 = f0, except for round-off error. Furthermore, the square of a t-random variable with ν degrees of freedom is an F-random variable with 1 and v degrees of freedom. Consequently, the t-test provides an equivalent method to test a single variable for contribution to a model. Because the t-test is typically provided by computer output, it is the preferred method to test a single variable.

= 4.482 = 20.07 = f0, except for round-off error. Furthermore, the square of a t-random variable with ν degrees of freedom is an F-random variable with 1 and v degrees of freedom. Consequently, the t-test provides an equivalent method to test a single variable for contribution to a model. Because the t-test is typically provided by computer output, it is the preferred method to test a single variable.

Exercises FOR SECTION 12-2

![]() Problem available in WileyPLUS at instructor's discretion.

Problem available in WileyPLUS at instructor's discretion.

![]() Go Tutorial Tutoring problem available in WileyPLUS at instructor's discretion.

Go Tutorial Tutoring problem available in WileyPLUS at instructor's discretion.

12-25. Recall the regression of percent of body fat on height and waist from Exercise 12-1. The simple regression model of percent of body fat on height alone shows the following:

![]()

(a) Test whether the coefficient of height is statistically significant.

(b) Looking at the model with both waist and height in the model, test whether the coefficient of height is significant in this model.

(c) Explain the discrepancy in your two answers.

![]() 12-26. Exercise 12-2 presented a regression model to predict final grade from two hourly tests.

12-26. Exercise 12-2 presented a regression model to predict final grade from two hourly tests.

(a) Test the hypotheses that each of the slopes is zero.

(b) What is the value of R2 for this model?

(c) What is the residual standard deviation?

(d) Do you believe that the professor can predict the final grade well enough from the two hourly tests to consider not giving the final exam? Explain.

![]() 12-27. Consider the regression model of Exercise 12-3 attempting to predict the percent of engineers in the workforce from various spending variables.

12-27. Consider the regression model of Exercise 12-3 attempting to predict the percent of engineers in the workforce from various spending variables.

(a) Are any of the variables useful for prediction? (Test an appropriate hypothesis).

(b) What percent of the variation in the percent of engineers is accounted for by the model?

(c) What might you do next to create a better model?

12-28. Consider the linear regression model from Exercise 12-4. Is the second-order term necessary in the regression model?

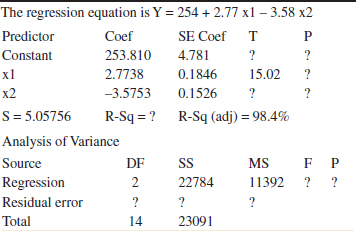

12-29. Consider the following computer output.

(a) Fill in the missing quantities. You may use bounds for the P-values.

(b) What conclusions can you draw about the significance of regression?

(c) What conclusions can you draw about the contributions of the individual regressors to the model?

12-30. You have fit a regression model with two regressors to a data set that has 20 observations. The total sum of squares is 1000 and the model sum of squares is 750.

(a) What is the value of R2 for this model?

(b) What is the adjusted R2 for this model?

(c) What is the value of the F-statistic for testing the significance of regression? What conclusions would you draw about this model if α = 0.05? What if α = 0.01?

(d) Suppose that you add a third regressor to the model and as a result, the model sum of squares is now 785. Does it seem to you that adding this factor has improved the model?

![]() 12-31. Consider the regression model fit to the soil shear strength data in Exercise 12-5.

12-31. Consider the regression model fit to the soil shear strength data in Exercise 12-5.

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct the t-test on each regression coefficient. What are your conclusions, using α = 0.05? Calculate P-values.

![]() 12-32. Consider the absorption index data in Exercise 12-6. The total sum of squares for y is SST = 742.00.

12-32. Consider the absorption index data in Exercise 12-6. The total sum of squares for y is SST = 742.00.

(a) Test for significance of regression using α = 0.01. What is the P-value for this test?

(b) Test the hypothesis H0: β1 = 0 versus H1: β1 ≠ 0 using α = 0.01. What is the P-value for this test?

(c) What conclusion can you draw about the usefulness of x1 as a regressor in this model?

12-33. ![]() A regression model Y = β0 + β1x1 + β2x2 + β3x3 +

A regression model Y = β0 + β1x1 + β2x2 + β3x3 + ![]() as been fit to a sample of n = 25 observations. The calculated t-ratios

as been fit to a sample of n = 25 observations. The calculated t-ratios ![]() j/se(

j/se(![]() j), j = 1, 2, 3 are as follows: for β1, t0 = 4.82, for β2, t0 = 8.21, and for β3, t0 = 0.98.

j), j = 1, 2, 3 are as follows: for β1, t0 = 4.82, for β2, t0 = 8.21, and for β3, t0 = 0.98.

(a) Find P-values for each of the t-statistics.

(b) Using α = 0.05, what conclusions can you draw about the regressor x3? Does it seem likely that this regressor contributes significantly to the model?

12-34. Consider the electric power consumption data in Exercise 12-10.

![]() (a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Use the t-test to assess the contribution of each regressor to the model. Using α = 0.05, what conclusions can you draw?

12-35. Consider the gasoline mileage data in Exercise 12-11.

![]() (a) Test for significance of regression using α = 0.05. What conclusions can you draw?

(a) Test for significance of regression using α = 0.05. What conclusions can you draw?

(b) Find the t-test statistic for each regressor. Using α = 0.05., what conclusions can you draw? Does each regressor contribute to the model?

![]() 12-36. Consider the wire bond pull strength data in Exercise 12-12.

12-36. Consider the wire bond pull strength data in Exercise 12-12.

(a) Test for significance of regression using α = 0.05. Find the P-value for this test. What conclusions can you draw?

(b) Calculate the t-test statistic for each regression coefficient. Using α = 0.05, what conclusions can you draw? Do all variables contribute to the model?

![]()

12-37. Reconsider the semiconductor data in Exercise 12-13.

(a) Test for significance of regression using α = 0.05. What conclusions can you draw?

(b) Calculate the t-test statistic and P-value for each regression coefficient. Using α = 0.05, what conclusions can you draw?

12-38. Consider the regression model fit to the arsenic data in Exercise 12-16. Use arsenic in nails as the response and age, drink use, and cook use as the regressors.

![]()

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct a t-test on each regression coefficient. What conclusions can you draw about the variables in this model? Use α = 0.05.

![]()

12-39. Consider the regression model fit to the X-ray inspection data in Exercise 12-15. Use rads as the response.

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct a t-test on each regression coefficient. What conclusions can you draw about the variables in this model? Use α = 0.05.

![]()

12-40. Consider the regression model fit to the nisin extraction data in Exercise 12-18. Use nisin extraction as the response.

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct a t-test on each regression coefficient. What conclusions can you draw about the variables in this model? Use α = 0.05.

(c) Comment on the effect of a small sample size to the tests in the previous parts.

12-41. Consider the regression model fit to the gray range modulation data in Exercise 12-19. Use the useful range as the response.

![]()

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct a t-test on each regression coefficient. What conclusions can you draw about the variables in this model? Use α = 0.05.

![]()

12-42. Consider the regression model fit to the stack loss data in Exercise 12-20. Use stack loss as the response.

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Construct a t-test on each regression coefficient. What conclusions can you draw about the variables in this model? Use α = 0.05.

![]()

12-43. Consider the NFL data in Exercise 12-21.

(a) Test for significance of regression using α = 0.05. What is the P-value for this test?

(b) Conduct the t-test for each regression coefficient. Using α = 0.05, what conclusions can you draw about the variables in this model?

(c) Find the amount by which the regressor x2 (TD percentage) increases the regression sum of squares, and conduct an F-test for H0: β2 = 0 versus H1: β2 ≠ 0 using α = 0.05. What is the P-value for this test? What conclusions can you draw?

![]() 12-44.

12-44. ![]() Go Tutorial Exercise 12-14 presents data on heat-treating gears.

Go Tutorial Exercise 12-14 presents data on heat-treating gears.

(a) Test the regression model for significance of regression. Using α = 0.05, find the P-value for the test and draw conclusions.

(b) Evaluate the contribution of each regressor to the model using the t-test with α = 0.05.

(c) Fit a new model to the response PITCH using new regressors x1 = SOAKTIME × SOAKPCT and x2 = DIFFTIME × DIFFPCT.

(d) Test the model in part (c) for significance of regression using α = 0.05. Also calculate the t-test for each regressor and draw conclusions.

(e) Estimate σ2 for the model from part (c) and compare this to the estimate of σ2 for the model in part (a). Which estimate is smaller? Does this offer any insight regarding which model might be preferable?

![]() 12-45. Consider the bearing wear data in Exercise 12-23.

12-45. Consider the bearing wear data in Exercise 12-23.

(a) For the model with no interaction, test for significance of regression using α = 0.05. What is the P-value for this test? What are your conclusions?

(b) For the model with no interaction, compute the t-statistics for each regression coefficient. Using α = 0.05, what conclusions can you draw?

(c) For the model with no interaction, use the extra sum of squares method to investigate the usefulness of adding x2 = load to a model that already contains x1 = oil viscosity. Use α = 0.05.

(d) Refit the model with an interaction term. Test for significance of regression using α = 0.05.

(e) Use the extra sum of squares method to determine whether the interaction term contributes significantly to the model. Use α = 0.05.

(f) Estimate σ2 for the interaction model. Compare this to the estimate of σ2 from the model in part (a).

![]()

12-46. Data on National Hockey League team performance were presented in Exercise 12-22.

(a) Test the model from this exercise for significance of regression using α = 0.05. What conclusions can you draw?

(b) Use the t-test to evaluate the contribution of each regressor to the model. Does it seem that all regressors are necessary? Use α = 0.05.

(c) Fit a regression model relating the number of games won to the number of goals for and the number of power play goals for. Does this seem to be a logical choice of regressors, considering your answer to part (b)? Test this new model for significance of regression and evaluate the contribution of each regressor to the model using the t-test. Use α = 0.05.

![]()

12-47. Data from a hospital patient satisfaction survey were presented in Exercise 12-9.

(a) Test the model from this exercise for significance of regression. What conclusions can you draw if α = 0.05? What if α = 0.01?

(b) Test the contribution of the individual regressors using the t-test. Does it seem that all regressors used in the model are really necessary?

![]()

12-48. Data from a hospital patient satisfaction survey were presented in Exercise 12-9.

(a) Fit a regression model using only the patient age and severity regressors. Test the model from this exercise for significance of regression. What conclusions can you draw if α = 0.05? What if α = 0.01?

(b) Test the contribution of the individual regressors using the t-test. Does it seem that all regressors used in the model are really necessary?

(c) Find an estimate of the error variance σ2. Compare this estimate of σ2 with the estimate obtained from the model containing the third regressor, anxiety. Which estimate is smaller? Does this tell you anything about which model might be preferred?

12-3 Confidence Intervals In Multiple Linear Regression

12-3.1 CONFIDENCE INTERVALS ON INDIVIDUAL REGRESSION COEFFICIENTS

In multiple regression models, is often useful to construct confidence interval estimates for the regression coefficients {βj}. The development of a procedure for obtaining these confidence intervals requires that the errors {![]() i} are normally and independently distributed with mean zero and variance σ2. This is the same assumption required in hypothesis testing. Therefore, the observations {Yi} are normally and independently distributed with mean β0 +

i} are normally and independently distributed with mean zero and variance σ2. This is the same assumption required in hypothesis testing. Therefore, the observations {Yi} are normally and independently distributed with mean β0 + ![]() and variance σ2. Because the least squares estimator

and variance σ2. Because the least squares estimator ![]() is a linear combination of the observations, it follows that

is a linear combination of the observations, it follows that ![]() is normally distributed with mean vector β and covariance atrix σ2(X′X)−1. Then each of the statistics

is normally distributed with mean vector β and covariance atrix σ2(X′X)−1. Then each of the statistics

![]()

has a t distribution with n − p degrees of freedom where Cjj is the jjth element of the (X′X)−1 matrix, and ![]() 2 is the estimate of the error variance, obtained from Equation 12-16. This leads to the following 100(1 − α)% confidence interval for the regression coefficient βj, j = 0, 1,..., k.

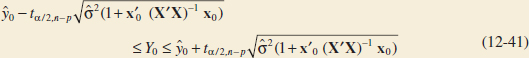

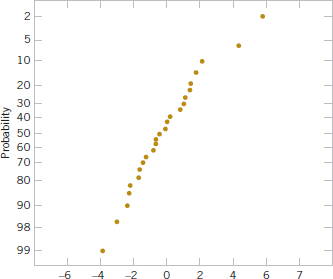

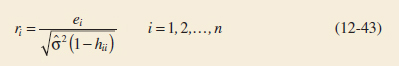

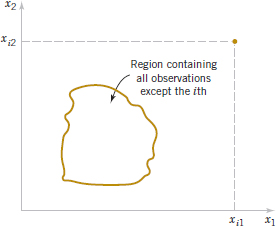

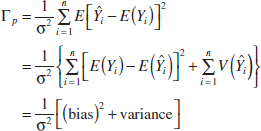

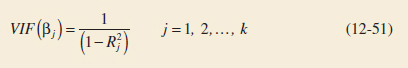

2 is the estimate of the error variance, obtained from Equation 12-16. This leads to the following 100(1 − α)% confidence interval for the regression coefficient βj, j = 0, 1,..., k.