Source Localization and Tracking

Yu Hen Hu, Department of Electrical and Computer Engineering, University of Wisconsin-Madison, Madison, WI, USA

Abstract

A high-level survey of existing source localization and tracking algorithms using multiple sensors is provided. This chapter begins with a review of triangulation based source localization methods. Then signal propagation models corresponding to several different signal modalities are surveyed. This is followed by derivations of existing source localization algorithms. Finally, traditional tracking algorithms are presented.

Keywords

Source localization; Tracking; Triangulation; Kalman filtering

3.18.1 Introduction

In this chapter, the task of source localization and tracking will be discussed. The goal of source localization is to estimate the location of one or more events (e.g., an earthquake) or targets based on signals emitted from these locations and received at one or more sensors. It is often assumed that the cross section of the target or the event is very small compared to the spread of the sensors, and hence the point source assumption is valid. If source locations change over time, then tracking is performed to facilitate accurate forecasting of future target positions. Source localization has found diverse applications, such as the Global Positioning System (GPS) [1,2], sonar [3,4], radar [5,6], seismic event localization [7–9], brain imaging [10], teleconferencing [11,12], and wireless sensor networks [13–19], among many others.

The basic approach of source localization is geometric triangulation: Given the distance or angles between the unknown source location and known reference positions, the coordinates of the source locations can be computed algebraically. However, these distance or angle measurements often need to be inferred indirectly from a signal propagation model that describes the received source signal as a function of distance and incidence angle between the source and the sensor. Such a model facilitates statistical estimation of spatial locations of the sources based on features such as time of arrival, attenuation of signal intensity, or phase lags. Specific features that may be exploited are also dependent on the specific signal modalities such as acoustic signal, radio waves, seismic vibrations, infra-red light, or visible light. In this chapter, various source signal propagation models will be reviewed, and statistical inference methods will be surveyed.

Very often, source localization will be performed consecutively to track a moving source over time. Using a dynamic model to describe the movement of the source, one may predict the probability density function (pdf) of the source location using previously received source signals. With this estimated prior distribution of the source location, Bayesian estimation may be applied to update the source location using current sensor measurements. Thus, source localization and tracking are often intimately related.

In the remainder of this chapter, the basic idea of triangulation will first be reviewed. Next, the signal propagation and channel models will be introduced. Statistical methods that implicitly or explicitly leverage the triangulation approach to estimate source locations will then be presented. Bayesian tracking methods such as the Kalman filter will also be briefly surveyed.

3.18.2 Problem formulation

Assume N sensors are deployed over a sensing field with known positions. Specifically, the position of the nth sensor is denoted by $\mathbf{s}_n$, $1 \le n \le N$. It is also assumed that there are K targets in the sensing field with unknown locations $\mathbf{r}_k$, $1 \le k \le K$. Each target is emitting a source signal denoted by $x_k(t)$ at time t. The nth sensor will receive a delayed, and sometimes distorted, version of the $k$th source signal, $x_{n,k}(t)$. In general, $x_{n,k}(t)$ is a function of the past source signal up to time $t$, the sensor location $\mathbf{s}_n$, the target location $\mathbf{r}_k$, as well as the propagation medium of the signal. This source signal propagation model is discussed in Section 3.18.4. It is noted that prior to target localization, a target detection task must be performed and the presence of K targets within the sensing field must have been estimated. The issues of target detection and target number estimation will not be included in this discussion.

We further assume that the measurement at the nth sensor, denoted by $y_n(t)$, is a superposition of $\{x_{n,k}(t);\ 1 \le k \le K\}$. That is,

$$y_n(t) = \sum_{k=1}^{K} x_{n,k}(t) + e_n(t) \tag{18.1}$$

where $e_n(t)$ is the observation noise at the nth sensor. The objective of source localization is to estimate the source locations $\{\mathbf{r}_k;\ 1 \le k \le K\}$ based on sensor readings $\{y_n(t);\ 1 \le n \le N\}$ given known sensor positions $\{\mathbf{s}_n;\ 1 \le n \le N\}$ and the number of targets K.

3.18.3 Triangulation

In a sensor network source localization problem setting, the sensor locations are the reference positions. Based on signals received at the sensors from the sources, two types of measurements may be inferred: (i) the distance between each sensor and each source and (ii) the incidence angle of a wavefront of the source signal impinging upon a sensor, relative to an absolute reference direction (e.g., north). In this section, we will derive three sets of formulas that make use of (a) distance only, (b) angle only, and (c) distance and angle to deduce the source location. For convenience, the single source situation will be used, with discussions on potential generalization to multiple sources.

3.18.3.1 Distance based triangulation

Denote $d_n$ to be the Euclidean distance between the position of the nth sensor $\mathbf{s}_n$ and the (unknown) source location r, namely, $d_n = \|\mathbf{r} - \mathbf{s}_n\|$. $d_n$ may be estimated from the sensor observations $y_n(t)$ for certain types of sensors such as laser or infrared light. One may write down N quadratic equations:

$$d_n^2 = \|\mathbf{r} - \mathbf{s}_n\|^2 = \|\mathbf{r}\|^2 - 2\mathbf{s}_n^T\mathbf{r} + \|\mathbf{s}_n\|^2, \quad 1 \le n \le N \tag{18.2}$$

Subtracting each pair of successive equations above to eliminate the unknown term $\|\mathbf{r}\|^2$ leads to $N-1$ linear equations

$$2(\mathbf{s}_{n+1} - \mathbf{s}_n)^T\mathbf{r} = d_n^2 - d_{n+1}^2 + \|\mathbf{s}_{n+1}\|^2 - \|\mathbf{s}_n\|^2, \quad 1 \le n \le N-1 \tag{18.3}$$

These $N-1$ linear equations may be expressed in matrix form:

$$\mathbf{M}\mathbf{r} = \mathbf{b} \tag{18.4}$$

where the nth row of $\mathbf{M}$ is $2(\mathbf{s}_{n+1} - \mathbf{s}_n)^T$ and the nth element of $\mathbf{b}$ is the right-hand side of Eq. (18.3).

In general, the accuracy of the distance estimate may be compromised by estimation errors. Thus, a more realistic model should include an estimation noise term:

$$\mathbf{b} = \mathbf{M}\mathbf{r} + \mathbf{e} \tag{18.5}$$

where e is a zero-mean, uncorrelated random noise vector such that

$$\mathbb{E}\{\mathbf{e}\} = \mathbf{0}, \quad \mathbb{E}\{\mathbf{e}\mathbf{e}^T\} = \mathbf{\Sigma} = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_{N-1}^2)$$

Then r may be estimated using the weighted least square method, which seeks to minimize the cost function

$$J(\mathbf{r}) = (\mathbf{b} - \mathbf{M}\mathbf{r})^T\mathbf{\Sigma}^{-1}(\mathbf{b} - \mathbf{M}\mathbf{r})$$

This leads to

$$\hat{\mathbf{r}}_{WLS} = (\mathbf{M}^T\mathbf{\Sigma}^{-1}\mathbf{M})^{-1}\mathbf{M}^T\mathbf{\Sigma}^{-1}\mathbf{b} \tag{18.6}$$
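The differencing step and the weighted least square solve can be illustrated with a minimal sketch for the two-dimensional case. The function name `locate_from_distances`, the sensor layout, and the default of equal weights are assumptions made for this illustration only; the weights stand for the inverse noise variances.

```python
import math

def locate_from_distances(sensors, dists, weights=None):
    """Weighted least-squares 2-D position from sensor-to-source distances.

    Differencing successive squared-distance equations removes ||r||^2 and
    leaves N-1 linear equations in r (cf. Eqs. (18.3)-(18.6)).
    """
    n_eq = len(sensors) - 1
    if weights is None:
        weights = [1.0] * n_eq  # assumption: equal noise variances
    s11 = s12 = s22 = t1 = t2 = 0.0
    for n in range(n_eq):
        (x0, y0), (x1, y1) = sensors[n], sensors[n + 1]
        a1, a2 = 2.0 * (x1 - x0), 2.0 * (y1 - y0)
        b = (dists[n] ** 2 - dists[n + 1] ** 2
             + (x1 * x1 + y1 * y1) - (x0 * x0 + y0 * y0))
        w = weights[n]
        # Accumulate the 2x2 weighted normal equations.
        s11 += w * a1 * a1
        s12 += w * a1 * a2
        s22 += w * a2 * a2
        t1 += w * a1 * b
        t2 += w * a2 * b
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("degenerate geometry (e.g., collinear sensors)")
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)

# Hypothetical layout: four sensors on a square, noise-free distances.
sensors = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
true_source = (3.0, 4.0)
dists = [math.dist(true_source, s) for s in sensors]
estimate = locate_from_distances(sensors, dists)
```

With noise-free distances the estimate coincides with the true position up to rounding; with noisy distances the weights would be set from the assumed variances.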

3.18.3.2 Angle based triangulation

Denote ![]() to be incidence angle from the source into the nth sensor using the north direction as the reference direction. We further assume that the angle is positive along the clock-wise direction. Referring to Figure 18.1, it is easily verified that

to be incidence angle from the source into the nth sensor using the north direction as the reference direction. We further assume that the angle is positive along the clock-wise direction. Referring to Figure 18.1, it is easily verified that

![]() (18.7)

(18.7)

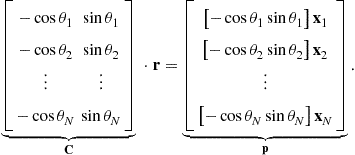

Rearranging terms and collecting all N equations, one has

(18.8)

(18.8)

Or, in matrix formation:

![]() (18.9)

(18.9)

Similar to Eq. (18.5), the observation p may be contaminated with noise. As such, the source location may be obtained via a weighted least square estimate. For the sake of notation simplicity, one may use e to denote the noise vector as in Eq. (18.5). Then, the weighted least square estimation of r becomes:

![]() (18.10)

(18.10)
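As an illustration of angle based triangulation, the following sketch solves the unweighted least square problem in two dimensions. It assumes the y axis points north, angles are measured clockwise from north along the ray from each sensor to the source, and all bearings carry equal weight; the function names and the sensor layout are hypothetical.

```python
import math

def bearing(sensor, source):
    """Clockwise-from-north angle (radians) of the ray sensor -> source."""
    return math.atan2(source[0] - sensor[0], source[1] - sensor[1])

def locate_from_bearings(sensors, angles):
    """Least-squares intersection of N bearing lines (equal weights assumed).

    Each bearing gives one linear equation
        x*cos(theta) - y*sin(theta) = x_n*cos(theta) - y_n*sin(theta).
    """
    s11 = s12 = s22 = t1 = t2 = 0.0
    for (xn, yn), th in zip(sensors, angles):
        g1, g2 = math.cos(th), -math.sin(th)
        p = xn * g1 + yn * g2
        s11 += g1 * g1
        s12 += g1 * g2
        s22 += g2 * g2
        t1 += g1 * p
        t2 += g2 * p
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("bearing lines are (nearly) parallel")
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)

# Hypothetical layout with noise-free bearings.
sensors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_source = (3.0, 4.0)
angles = [bearing(s, true_source) for s in sensors]
estimate = locate_from_bearings(sensors, angles)
```

Because each equation only constrains the source to a line, at least two sensors with non-parallel bearings are needed.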

3.18.3.3 Triangulation: generalizations

In Sections 3.18.3.1 and 3.18.3.2, single target triangulation algorithms for distance measurements and incidence angle measurements have been discussed. Both situations lead to over-determined linear systems, Eqs. (18.5) and (18.9), in the same unknown r. Therefore, when both distance measurements and incidence angle measurements are available, these two systems may be combined and solved jointly.

When there are two or more sources (targets), a number of issues need to be addressed. First, when a sensor receives signals emitted from two or more sources simultaneously, it may not be able to distinguish one signal from another if these signals overlap in the time, space, and frequency domains. This is the traditional signal (source) separation problem [20–23]. Second, even if the individual sources can be separated at each sensor, it is not always easy to tell which of the signals received by different sensors correspond to the same source. This is the so-called data association problem [24,25]. A comprehensive survey of these two issues is beyond the scope of this chapter.

As discussed earlier, while localization may be accomplished via triangulation, the sensor to source distance or the source to sensor incidence angle must be estimated from the received signals. The mathematical model of the received source signals, known as the signal propagation model, will be surveyed in the next section.

3.18.4 Signal propagation models

Depending on the application, the source signal may often be modeled as a narrow band signal characterized by a single sinusoid:

$$x(t) = A\exp\big(j(\omega t + \phi)\big) \tag{18.11}$$

where $A$ is the amplitude, $\omega$ is the frequency, and $\phi$ is the phase. In certain special cases, all or a portion of these parameters are known; in most applications, they are assumed unknown.

On other occasions, the source signal may be a broad band signal that contains numerous harmonics and is difficult to express analytically.

Between each source-sensor pair there is a communication channel whose characteristics depend on the specific medium (air, vacuum, water, etc.). The net effects of the communication channels on the received signal $x_{n,k}(t)$ can be summarized in the following categories:

Attenuation: The amplitude of the source signal often attenuates rapidly as the source to sensor distance increases. Hence, examining the relative attenuation of signal strength provides an indirect way to estimate the source to sensor distance. For a point source in free space, the signal amplitude is inversely proportional to the source to sensor distance $\|\mathbf{r}_k - \mathbf{s}_n\|$, so the signal energy is inversely proportional to $\|\mathbf{r}_k - \mathbf{s}_n\|^2$. The communication channel between the sensor and the source may also be frequency selective, such that the attenuation rates differ across frequency bands. For example, high frequency sound often attenuates much faster than low frequency sound. Thus the amplitude waveform as well as the energy of the source signal at the sensor may be distorted. If the signal propagation is subject to multi-path distortion, the attenuation rate may also be affected. Yet another factor that affects the measured amplitude of the received signal is the sensor gain. Before the received analog signal is digitized, its magnitude is amplified with adaptive gain control to ensure that the dynamic range of the analog-to-digital converter (ADC) is not saturated. Furthermore, the signal strength attenuation may not be uniform over all directions, and the point source assumption may not be valid at short distances.

Time delay: Denote $v$ to be the signal propagation speed in the corresponding medium. The time for the source signal to travel to the sensor can be evaluated as

$$t_{n,k} = \frac{\|\mathbf{r}_k - \mathbf{s}_n\|}{v} \tag{18.12}$$

However, the time delay due to signal propagation may be affected by a non-homogeneous medium. The consequence may be refraction or deflection of the propagating signal, and the accuracy of time delay estimation may be compromised.

Phase distortion: The nonlinear phase distortion due to frequency selective channel property may also distort the morphology of the signal waveform, making it difficult to estimate any phase difference between received signals at different sensors.

Noise: While the source signal travels to each sensor, it may suffer from (additive) channel noise or interference from other sources. It is often assumed that the background noise observed at each sensor is a zero-mean, uncorrelated, wide-sense stationary random process with a Gaussian distribution. Moreover, the background noise processes at different sensors are assumed to be statistically independent of each other. Such assumptions may need to be modified for situations when the background noise also includes high energy impulsive noise or interference.

A commonly used channel model in wireless communication theory is the convolution model, which models the frequency selective characteristics of the channel as a finite impulse response digital filter $\{h_{n,k}(q);\ 0 \le q \le Q-1\}$. Thus, using the notation defined in this chapter,

$$y_n(t) = \sum_{k=1}^{K}\sum_{q=0}^{Q-1} h_{n,k}(q)\,x_k(t - q) + e_n(t) \tag{18.13}$$

The channel parameters are functions of both the sensor and source locations. For source localization applications, the above model is often simplified to emphasize specific features.

3.18.4.0.1 Received signal strength indicator (RSSI)

A popular simplified model concerns only amplitude attenuation:

$$x_{n,k}(t) = g_n\,\frac{x_k(t)}{\|\mathbf{r}_k - \mathbf{s}_n\|} \tag{18.14}$$

Here the propagation delay, phase distortion, and noise are all ignored. Instead, $g_n$ is used to denote the sensor gain of the nth sensor. Thus, substituting into Eq. (18.1), one may write

$$y_n(t) = g_n\sum_{k=1}^{K}\frac{x_k(t)}{\|\mathbf{r}_k - \mathbf{s}_n\|} + e_n(t) \tag{18.15}$$

Averaging over a short time interval centered at the sampling time t, one may express the energy of the received signal during this short period as

$$E_n(t) = g_n^2\sum_{k=1}^{K}\frac{S_k(t)}{\|\mathbf{r}_k - \mathbf{s}_n\|^2} + \varepsilon_n(t) \tag{18.16}$$

where $E_n(t) = \langle y_n^2(t)\rangle$ is the received signal energy at time t at sensor $n$, $\langle\cdot\rangle$ denotes the short-time average, and

$$S_k(t) = \langle x_k^2(t)\rangle$$

It is assumed that the K source signals are statistically mutually independent, such that

$$\langle x_k(t)\,x_j(t)\rangle = 0, \quad k \ne j$$

Moreover, the background noise $e_n(t)$ is also independent of the signal, besides being i.i.d. random variables, such that

$$\langle x_k(t)\,e_n(t)\rangle = 0$$

and

$$\varepsilon_n(t) = \langle e_n^2(t)\rangle \tag{18.17}$$

Here $\varepsilon_n(t)$ is a random variable with a $\chi^2$ distribution. However, as discussed in [19], for practical purposes, $\varepsilon_n(t)$ can be modeled as a Gaussian random variable with a positive mean value $\mu_n$ and variance $\sigma_n^2$.

Equations (18.15) or (18.16) are often used in source localization algorithms that are based on Received Signal Strength Indicator (RSSI) [26,27] to infer the target location.

3.18.4.1 Time delay estimation

Time delay estimation [28,29] has a long history of signal processing applications [30–32]. For a single source, it is assumed that

$$y_n(t) = h_n(t) * x(t - t_n) + e_n(t) \tag{18.18}$$

where $*$ denotes convolution, $h_n(t)$ is the channel impulse response, and $t_n$ is the propagation delay from the source to the nth sensor. If $t_n$ can be estimated accurately, the source to sensor distance may be estimated using Eq. (18.12). Thus, the key issue is to estimate $t_n$.

If the original source signal $x(t)$ and the received signal $y_n(t)$ are both available, then the time delay may be estimated using a number of approaches.

Assume ![]() and

and ![]() are both zero-mean white sense stationary random processes over a time interval

are both zero-mean white sense stationary random processes over a time interval ![]() , the cross-correlation between them is defined as

, the cross-correlation between them is defined as

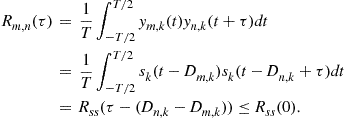

![]() (18.19)

(18.19)

Substituting ![]() in Eq. (18.18) into Eq. (18.19), and assume

in Eq. (18.18) into Eq. (18.19), and assume ![]() if

if ![]() and

and ![]() otherwise, one has

otherwise, one has

(18.20)

(18.20)

Therefore, a maximum likelihood estimate of the time delay ![]() will be

will be

![]() (18.21)

(18.21)
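A discrete-time version of this estimator can be sketched as follows. The sampling rate, propagation speed, noise level, and signal length are hypothetical values chosen for illustration, and the source signal is taken to be white Gaussian noise so that its autocorrelation has a single sharp peak.

```python
import random

def estimate_delay(x, y, max_lag):
    """Lag (in samples) maximising the sample cross-correlation
    sum_t x[t] * y[t + lag], i.e. a sampled counterpart of Eq. (18.21)."""
    best_lag, best_val = 0, float("-inf")
    for lag in range(max_lag + 1):
        val = sum(x[t] * y[t + lag] for t in range(len(x) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag

rng = random.Random(0)            # fixed seed for repeatability
true_delay = 7                    # samples (assumed ground truth)
x = [rng.gauss(0.0, 1.0) for _ in range(400)]
# Received signal: delayed copy of x plus weak additive noise.
y = [(x[t - true_delay] if t >= true_delay else 0.0) + 0.1 * rng.gauss(0.0, 1.0)
     for t in range(len(x))]

lag = estimate_delay(x, y, max_lag=20)
fs, v = 8000.0, 343.0             # assumed sampling rate (Hz) and sound speed (m/s)
distance = lag / fs * v           # Eq. (18.12) rearranged: distance = delay * speed
```

The resolution of the estimate is one sample period; sub-sample accuracy would require interpolating the correlation peak.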

There is a fundamental difficulty in applying Eq. (18.21) to estimate the source to sensor signal propagation delay: $x(t)$ may not be available at the sensor. This is often the case when the source is non-cooperative, such as an intruder. On the other hand, if the source is cooperative, it may transmit a signal that contains a time stamp. If the clocks at the sensor and at the source are synchronized, as in the case of the Global Positioning System (GPS) [1], the sensor can compare the receiving time stamp against the sending time stamp and deduce the transit time without using cross-correlation.

In some applications, the direction of the source is known but the distance between the source and the sensor is to be measured. Then a round trip signal propagation delay may be estimated using the cross-correlation method by emitting a signal $x(t)$ from the sensor toward the source and receiving its reflection back at the sensor. Both the transmitted signal and the received signal are then available at the sensor, and the above cross-correlation method may be applied to estimate the source to sensor distance.

In general, when $x(t)$ is unavailable at the sensor, the difference in time of arrival of the same source signal at different sensors allows one to estimate the incidence angle of the source signal. This is the Time Difference of Arrival (TDoA) feature described in the literature [33,34]. With TDoA, a Generalized Cross Correlation (GCC) [28] may be applied to estimate $t_{mn} = t_m - t_n$.

Ideally, one would hope $y_m(t) = x(t - t_m)$ and $y_n(t) = x(t - t_n)$. As such, one computes the cross correlation

$$R_{y_n y_m}(\tau) = \mathbb{E}\{y_n(t)\,y_m(t + \tau)\} = R_{xx}(\tau - t_{mn}) \tag{18.22}$$

Thus,

$$\hat{t}_{mn} = \arg\max_{\tau} R_{y_n y_m}(\tau) \tag{18.23}$$

In the presence of channel noise $e_n(t)$ and channel model $h_n(t)$, Knapp and Carter [28] proposed to pre-filter $y_m(t)$ and $y_n(t)$ to improve the accuracy of the TDoA estimate by enhancing the signal to noise ratio (SNR) of the $R_{y_n y_m}(\tau)$ estimate. Specifically, denote $Y_m(\omega)$ and $Y_n(\omega)$ respectively as the Fourier transforms of the received signals $y_m(t)$ and $y_n(t)$, and also denote $W_m(\omega)$ and $W_n(\omega)$ respectively as the spectra of the pre-filters for $y_m(t)$ and $y_n(t)$. The GCC is defined as:

$$R^{GCC}_{y_n y_m}(\tau) = \frac{1}{2\pi}\int_{-\infty}^{\infty}\big[W_n(\omega)Y_n(\omega)\big]^{*}\big[W_m(\omega)Y_m(\omega)\big]e^{j\omega\tau}\,d\omega \tag{18.24}$$

Once $t_{mn}$ is estimated, one has the following relation:

$$v\,t_{mn} = \|\mathbf{r} - \mathbf{s}_m\| - \|\mathbf{r} - \mathbf{s}_n\| \tag{18.25}$$

Hence TDoA provides a relative distance measure from the source to two different sensors. We note in passing that Eq. (18.25) defines a hyperbolic trajectory for the potential target location r: the set of points whose distances to two fixed points differ by a constant is one branch of a hyperbola with foci at the two sensor positions.

3.18.4.2 Angle of arrival estimation

For a narrow band source signal, if the sensors are fully synchronized, the phase difference between received sensor signals may be used to estimate the incidence angle of the source signal.

Assume a point source emitting a narrow band (single harmonic) signal as described in Eq. (18.11). Under a far field assumption, that is

$$\|\mathbf{r} - \mathbf{s}_n\| \gg \max_{m}\|\mathbf{s}_m - \mathbf{s}_n\|, \quad 1 \le n \le N \tag{18.26}$$

the wavefront of the source signal can be modeled as a plane wave, such that the incidence angle of the source to each of the sensor nodes will be identical. This makes it easier to represent the time difference of arrival as a steering vector which is a function of the incidence angle and the sensor array geometry.

Again, let north be the reference direction of the incidence angle $\theta$, which increases along the clockwise direction. Then a unit vector along the incidence angle can be expressed as $\mathbf{u}(\theta) = [\sin\theta \;\; \cos\theta]^T$. With $\mathbf{u}(\theta)$ pointing from the array toward the source, the time difference of arrival between sensor nodes n and N can be expressed as:

$$t_{nN} = t_n - t_N = \frac{(\mathbf{s}_N - \mathbf{s}_n)^T\mathbf{u}(\theta)}{v} \tag{18.27}$$

Substituting the expression of a narrow band signal in Eq. (18.11) into the expression of the received signal in Eq. (18.18), one has

$$y_n(t) = H(\omega)\,x(t - t_n) + e_n(t) = H(\omega)\,e^{-j\omega t_{nN}}\,x(t - t_N) + e_n(t) \tag{18.28}$$

where $H(\omega)$ is the frequency response of $h_n(t)$ evaluated at $\omega$ and is independent of $n$, and $t_{nN}$ is given by Eq. (18.27) under the far field assumption Eq. (18.26). Assume $H(\omega) = 1$, then the received signals of all N sensors may be represented by

$$\mathbf{y}(t) = \mathbf{a}(\theta)\,x(t - t_N) + \mathbf{e}(t) \tag{18.29}$$

where

$$\mathbf{a}(\theta) = \big[e^{-j\omega t_{1N}} \;\; \cdots \;\; e^{-j\omega t_{N-1,N}} \;\; 1\big]^T$$

is a steering vector with respect to a narrow band source with incidence angle $\theta$, using the signal received at the Nth sensor as a reference. It represents the phase difference of the received sensor signals with respect to a plane wave traveling at an incidence angle $\theta$. With K narrow band sources, the received signal vector may then be expressed as:

$$\mathbf{y}(t) = \sum_{k=1}^{K}\mathbf{a}(\theta_k)\,x_k(t) + \mathbf{e}(t) = \mathbf{A}(\boldsymbol{\theta})\,\mathbf{x}(t) + \mathbf{e}(t) \tag{18.30}$$

where

$$\mathbf{A}(\boldsymbol{\theta}) = [\mathbf{a}(\theta_1) \;\; \cdots \;\; \mathbf{a}(\theta_K)],$$

$$\mathbf{x}(t) = [x_1(t) \;\; \cdots \;\; x_K(t)]^T,$$

and

$$\mathbf{e}(t) = [e_1(t) \;\; \cdots \;\; e_N(t)]^T.$$

Equation (18.30) is the basis of many array signal processing algorithms [3,35,36] such as MUSIC [37]. Specifically, the covariance matrix of $\mathbf{y}(t)$ may be decomposed into two parts:

$$\mathbf{R}_{yy} = \mathbb{E}\{\mathbf{y}(t)\,\mathbf{y}^H(t)\} = \mathbf{R}_s + \mathbf{R}_e \tag{18.31}$$

where $\mathbf{R}_s$ and $\mathbf{R}_e$ are the signal and noise covariance matrices respectively. In particular, $\mathbf{R}_s$ has a rank equal to K. In practice, one would estimate the covariance matrix from the received signal and perform an eigenvalue decomposition:

$$\mathbf{R}_{yy} = \mathbf{U}_s\mathbf{\Lambda}_s\mathbf{U}_s^H + \mathbf{U}_e\mathbf{\Lambda}_e\mathbf{U}_e^H \tag{18.32}$$

where $\mathbf{\Lambda}_s = \mathrm{diag}(\lambda_1, \ldots, \lambda_K)$ are the largest K eigenvalues and the columns of $\mathbf{U}_s$ are the corresponding eigenvectors. The K dimensional subspace spanned by the columns of the $\mathbf{U}_s$ matrix is called the signal subspace. On the other hand, $\mathbf{\Lambda}_e = \mathrm{diag}(\lambda_{K+1}, \ldots, \lambda_N)$ are the remaining $N-K$ eigenvalues. The $N-K$ dimensional subspace spanned by the columns of the $\mathbf{U}_e$ matrix is called the noise subspace. Defining

$$\mathbf{a}(\theta) = \big[e^{-j\omega t_{1N}(\theta)} \;\; \cdots \;\; e^{-j\omega t_{N-1,N}(\theta)} \;\; 1\big]^T \tag{18.33}$$

as a generic steering vector, the MUSIC method evaluates the function

$$P_{MUSIC}(\theta) = \frac{1}{\mathbf{a}^H(\theta)\,\mathbf{U}_e\mathbf{U}_e^H\,\mathbf{a}(\theta)} \tag{18.34}$$

This MUSIC spectrum will then show high peaks at $\theta = \theta_k$, $1 \le k \le K$.
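To make the role of the steering vector concrete, the sketch below builds a steering vector for a small planar array and scans candidate bearings. Note that it uses a conventional delay-and-sum (matched steering vector) scan rather than MUSIC, since MUSIC would additionally require an eigen-decomposition of the estimated covariance matrix. The array geometry, signal frequency, propagation speed, and the sign convention (unit vector pointing from the array toward the source, last sensor as phase reference) are assumptions of this example.

```python
import cmath
import math

def steering_vector(sensors, theta, omega, v):
    """Phase factors exp(-j*omega*t_nN) relative to the last sensor, for a
    plane wave whose source lies at bearing theta (clockwise from north)."""
    ux, uy = math.sin(theta), math.cos(theta)
    x_ref, y_ref = sensors[-1]
    return [cmath.exp(-1j * omega * ((x_ref - x) * ux + (y_ref - y) * uy) / v)
            for x, y in sensors]

def scan_bearing(snapshot, sensors, omega, v, step_deg=1):
    """Return the bearing (degrees) maximising |a(theta)^H y|^2."""
    best_deg, best_power = None, -1.0
    for deg in range(0, 360, step_deg):
        a = steering_vector(sensors, math.radians(deg), omega, v)
        power = abs(sum(an.conjugate() * yn for an, yn in zip(a, snapshot))) ** 2
        if power > best_power:
            best_deg, best_power = deg, power
    return best_deg

# Hypothetical 4-sensor square array, 0.2 m on a side (below half a wavelength).
sensors = [(0.0, 0.0), (0.2, 0.0), (0.0, 0.2), (0.2, 0.2)]
v = 343.0                          # assumed speed of sound, m/s
omega = 2.0 * math.pi * 500.0      # assumed 500 Hz narrow band source
# Noise-free snapshot from a unit-amplitude source at a bearing of 40 degrees.
snapshot = steering_vector(sensors, math.radians(40.0), omega, v)
bearing_deg = scan_bearing(snapshot, sensors, omega, v)
```

A planar (non-collinear) array is used so that the bearing is unambiguous over the full circle; a linear array would produce a mirror-image peak.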

3.18.5 Source localization algorithms

3.18.5.1 Bayesian source localization based on RSSI

For convenience of discussion, let us rewrite Eq. (18.16) here after a linear transformation of the random variables

$$z_n = \frac{E_n(t) - \mu_n}{\sigma_n}, \qquad \xi_n = \frac{\varepsilon_n(t) - \mu_n}{\sigma_n} \tag{18.35}$$

Then,

$$z_n = \frac{g_n^2}{\sigma_n}\sum_{k=1}^{K}\frac{S_k}{\|\mathbf{r}_k - \mathbf{s}_n\|^2} + \xi_n, \quad 1 \le n \le N \tag{18.36}$$

or in matrix notation

$$\mathbf{z} = \mathbf{H}\mathbf{S} + \boldsymbol{\xi} \tag{18.37}$$

where the $(n,k)$th element of $\mathbf{H}$ is $g_n^2/(\sigma_n\|\mathbf{r}_k - \mathbf{s}_n\|^2)$ and $\mathbf{S} = [S_1 \;\; \cdots \;\; S_K]^T$ is the source signal energy vector.

Since $\boldsymbol{\xi}$ is a normalized Gaussian random vector with zero mean and identity matrix as its covariance, the likelihood function that z is observed at N sensors, given the K source signal energies and source locations $\boldsymbol{\Theta} = \{\mathbf{r}_k, S_k;\ 1 \le k \le K\}$, can be expressed as:

$$L(\boldsymbol{\Theta}) = (2\pi)^{-N/2}\exp\left\{-\tfrac{1}{2}\|\mathbf{z} - \mathbf{H}\mathbf{S}\|^2\right\} \tag{18.38}$$

A maximum likelihood (ML) estimate of $\boldsymbol{\Theta}$ may be obtained by maximizing L or, equivalently, minimizing the negative log likelihood function (up to constants)

$$\ell(\boldsymbol{\Theta}) = \|\mathbf{z} - \mathbf{H}\mathbf{S}\|^2 \tag{18.39}$$

Setting the gradient of $\ell(\boldsymbol{\Theta})$ against $\mathbf{S}$ equal to 0, one may express the estimate of the source signal energy vector as

$$\hat{\mathbf{S}} = \mathbf{H}^{\dagger}\mathbf{z} \tag{18.40}$$

where $\mathbf{H}^{\dagger} = (\mathbf{H}^T\mathbf{H})^{-1}\mathbf{H}^T$ is the pseudo inverse of the H matrix. Substituting Eq. (18.40) into Eq. (18.39), one has

$$\ell(\mathbf{r}_1, \ldots, \mathbf{r}_K) = \|(\mathbf{I} - \mathbf{H}\mathbf{H}^{\dagger})\,\mathbf{z}\|^2 \tag{18.41}$$

Equation (18.41) is significant in several ways: (a) The number of unknown parameters is reduced from $3K$ to $2K$, assuming that the dimension of $\mathbf{r}_k$ is 2. (b) To minimize $\ell$, the source locations should be chosen such that the (normalized) energy vector z falls as close as possible to the subspace spanned by the columns of the H matrix. In [19], this property is leveraged to derive a multi-resolution projection method for solving the source locations.
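For a single source (K = 1), the H matrix reduces to one column and the projection cost of Eq. (18.41) can be evaluated by a plain grid search, as in the sketch below. This is an exhaustive search for illustration, not the multi-resolution method of [19]; the sensor layout, unit gains, grid spacing, and noise-free readings are assumptions of the example.

```python
def signature(r, sensors, gains):
    """Single column of H for a source at r: gain / squared distance."""
    return [g / ((r[0] - sx) ** 2 + (r[1] - sy) ** 2)
            for (sx, sy), g in zip(sensors, gains)]

def projection_cost(z, h):
    """||z - h h^+ z||^2 for a single-column H, cf. Eq. (18.41)."""
    hz = sum(a * b for a, b in zip(h, z))
    hh = sum(a * a for a in h)
    zz = sum(b * b for b in z)
    return zz - hz * hz / hh

def ml_grid_search(z, sensors, gains, grid):
    """Grid point whose signature best explains the energy readings z."""
    return min(grid, key=lambda r: projection_cost(z, signature(r, sensors, gains)))

# Hypothetical setup: sensors placed outside the search grid.
sensors = [(-1.0, -1.0), (11.0, -1.0), (11.0, 11.0), (-1.0, 11.0), (5.0, -2.0)]
gains = [1.0] * len(sensors)          # assumed normalised gain factors
true_r, true_S = (3.5, 6.0), 50.0
z = [true_S * h for h in signature(true_r, sensors, gains)]  # noise-free readings

grid = [(0.5 * i, 0.5 * j) for i in range(21) for j in range(21)]
r_hat = ml_grid_search(z, sensors, gains, grid)

# Energy estimate from Eq. (18.40) with a single-column H.
h_hat = signature(r_hat, sensors, gains)
S_hat = sum(a * b for a, b in zip(h_hat, z)) / sum(a * a for a in h_hat)
```

The source energy never enters the search itself, which reflects point (a) above: only the location parameters remain after the energy is eliminated.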

In deriving the ML estimates of source locations, no prior information about source locations is used. If, as in a tracking scenario, the prior probability of the source locations, $p(\boldsymbol{\Theta})$, is available, then the a posteriori probability may be expressed as:

$$p(\boldsymbol{\Theta} \mid \mathbf{z}) \propto L(\boldsymbol{\Theta})\,p(\boldsymbol{\Theta}) \tag{18.42}$$

where $\boldsymbol{\Theta}$ collects the source locations and energies and $L(\boldsymbol{\Theta})$ is the likelihood function of Eq. (18.38). Maximizing the above expression then leads to the Bayesian estimate of the source locations and the corresponding source emitted energies during the observation interval.

3.18.5.2 Non-linear least square source localization using RSSI

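Assume a scenario of a single source ($K = 1$). If one ignores the noise energy term, Eq. (18.16) can be expressed as (using the notation in Eq. (18.35))

$$\|\mathbf{r} - \mathbf{s}_n\|^2 \approx \frac{g_n^2\,S}{\sigma_n z_n} \tag{18.43}$$

Based on this approximated distance, the source location r and the source energy S may be estimated.

3.18.5.2.1 Nonlinear quadratic optimization

Based on Eq. (18.43), one evaluates the ratio

$$\kappa_{mn} = \frac{\|\mathbf{r} - \mathbf{s}_m\|}{\|\mathbf{r} - \mathbf{s}_n\|} = \frac{g_m}{g_n}\sqrt{\frac{\sigma_n z_n}{\sigma_m z_m}} \tag{18.44}$$

For $\kappa_{mn} \ne 1$, the above equation can be simplified to

$$\|\mathbf{r} - \mathbf{c}_{mn}\| = \rho_{mn}, \qquad \mathbf{c}_{mn} = \frac{\mathbf{s}_m - \kappa_{mn}^2\mathbf{s}_n}{1 - \kappa_{mn}^2}, \qquad \rho_{mn} = \frac{\kappa_{mn}\|\mathbf{s}_m - \mathbf{s}_n\|}{|1 - \kappa_{mn}^2|} \tag{18.45}$$

For convenience, one may assign a single index $i$ to each sensor pair $(m, n)$ and write $\mathbf{c}_i$ and $\rho_i$ instead of $\mathbf{c}_{mn}$ and $\rho_{mn}$. The target location may be solved by minimizing a nonlinear cost function:

$$J(\mathbf{r}) = \sum_{i=1}^{I}\big(\|\mathbf{r} - \mathbf{c}_i\| - \rho_i\big)^2 \tag{18.46}$$

where $I$ is the number of sensor pairs used. In Eq. (18.46), it is assumed that $\kappa_i \ne 1$. It can easily be extended to deal with the situation when $\kappa_i = 1$, in which case the source is equidistant from the two sensors and the circle degenerates into their perpendicular bisector.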
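The energy ratio construction can be checked numerically. The sketch below computes the circle (center and radius) on which the source must lie for one sensor pair and verifies that a known source position satisfies it. Unit sensor gains, noise-free energy readings, and the specific positions are assumptions of this example.

```python
import math

def ratio_circle(s_m, s_n, kappa):
    """Centre and radius of the locus ||r - s_m|| = kappa * ||r - s_n||
    for kappa != 1 (the circle form of Eq. (18.45))."""
    if abs(kappa - 1.0) < 1e-12:
        raise ValueError("kappa == 1: the locus is a line, not a circle")
    k2 = kappa * kappa
    centre = ((s_m[0] - k2 * s_n[0]) / (1.0 - k2),
              (s_m[1] - k2 * s_n[1]) / (1.0 - k2))
    radius = kappa * math.dist(s_m, s_n) / abs(1.0 - k2)
    return centre, radius

# Hypothetical source and sensor pair; energy decays as 1/distance^2.
source, S = (3.0, 4.0), 100.0
s_m, s_n = (0.0, 0.0), (10.0, 0.0)
e_m = S / math.dist(source, s_m) ** 2
e_n = S / math.dist(source, s_n) ** 2

# Distance ratio d_m / d_n recovered from the energy ratio alone.
kappa = math.sqrt(e_n / e_m)
centre, radius = ratio_circle(s_m, s_n, kappa)
residual = math.dist(source, centre) - radius   # ~0 when the source is on the circle
```

Since the unknown source energy cancels in the ratio, each sensor pair contributes one such circle, and the cost function of Eq. (18.46) sums the squared residuals over all pairs.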

3.18.5.2.2 Least square solution

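If one ignores the background noise, Eq. (18.45) can be seen as a distance measurement of the unknown source location r with respect to a known center $\mathbf{c}_i$ with radius $\rho_i$, where $i$ indexes the sensor pairs. Recall the distance based triangulation method described in Section 3.18.3.1. One may consider squaring both sides of Eq. (18.45) using sensor readings of pair indices $i$ and $i+1$:

$$\|\mathbf{r}\|^2 - 2\mathbf{c}_i^T\mathbf{r} + \|\mathbf{c}_i\|^2 = \rho_i^2, \qquad \|\mathbf{r}\|^2 - 2\mathbf{c}_{i+1}^T\mathbf{r} + \|\mathbf{c}_{i+1}\|^2 = \rho_{i+1}^2$$

After subtracting one equation from the other and simplifying, one has

$$2(\mathbf{c}_{i+1} - \mathbf{c}_i)^T\mathbf{r} = \rho_i^2 - \rho_{i+1}^2 + \|\mathbf{c}_{i+1}\|^2 - \|\mathbf{c}_i\|^2 \tag{18.47}$$

Combining the above equations for different indices $i$, one may solve for r by solving an over-determined linear system

$$\mathbf{C}\mathbf{r} = \mathbf{w} \tag{18.48}$$

where the ith row of $\mathbf{C}$ is $2(\mathbf{c}_{i+1} - \mathbf{c}_i)^T$ and the ith element of $\mathbf{w}$ is the right-hand side of Eq. (18.47).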

3.18.5.2.3 Source localization using table look-up

Consider a single target ($K = 1$) scenario. The normalized sensor observation vector $\mathbf{z}$ can be regarded as a signature vector of a source at location $\mathbf{r}$. Hence, by collecting the corresponding signature vectors for all possible locations r in a sensing field, the source location may be estimated using a table look-up method. To account for variations of source intensity, the signature vector may be normalized to have unit norm.

3.18.5.3 Source localization using time difference of arrival

Denote

![]() (18.49)

Substituting Eq. (18.49) into Eq. (18.25) with ![]() , one has

![]() (18.50)

Squaring both sides of the above equation for both indices m and n,

![]()

Subtracting one of the above equations from the other yields (for ![]() )

![]()

Restricting ![]() , one may express the above in matrix form:

(18.51)

The source location r may be solved from the above equation using a least squares estimate subject to the quadratic equality constraint given by Eq. (18.49):

(18.52)
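A numerical sketch of the linearized time difference of arrival problem is given below. It is not the constrained estimator of Eq. (18.52): it assumes sensor 0 is the reference, that the propagation speed v is known, and it solves for the position together with the reference distance d0 by unconstrained least squares, checking the quadratic constraint only afterwards. The names `solve_linear` and `tdoa_least_squares` are hypothetical.

```python
import math

def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; sensor geometry is degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def tdoa_least_squares(sensors, tdoas, v):
    """Unconstrained least squares for (x, y, d0) from TDOAs relative to sensor 0.

    tdoas[n-1] = (||r - r_n|| - ||r - r_0||) / v for n = 1, 2, ...
    Squaring and subtracting the reference equation gives one row per sensor:
    2 (r_n - r_0)^T r + 2 v tau_n d0 = ||r_n||^2 - ||r_0||^2 - (v tau_n)^2.
    """
    x0, y0 = sensors[0]
    rows, rhs = [], []
    for (xn, yn), tau in zip(sensors[1:], tdoas):
        rows.append([2.0 * (xn - x0), 2.0 * (yn - y0), 2.0 * v * tau])
        rhs.append(xn**2 + yn**2 - x0**2 - y0**2 - (v * tau) ** 2)
    # Normal equations (A^T A) u = A^T b with u = (x, y, d0).
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * c for r, c in zip(rows, rhs)) for i in range(3)]
    x, y, d0 = solve_linear(ata, atb)
    return (x, y), d0

# Hypothetical example: five sensors, acoustic propagation speed 340 m/s.
v = 340.0
sensors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0), (5.0, 12.0)]
source = (3.0, 4.0)
d_ref = math.dist(source, sensors[0])
tdoas = [(math.dist(source, s) - d_ref) / v for s in sensors[1:]]
r_hat, d0_hat = tdoa_least_squares(sensors, tdoas, v)
# Residual of the quadratic equality constraint d0 = ||r - r_0||.
constraint_gap = abs(d0_hat - math.dist(r_hat, sensors[0]))
```

With noisy time differences the unconstrained solution generally violates the constraint, which is why a constrained estimate is preferred in practice.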

3.18.5.4 Source localization using angle of arrival

When there is only a single source in the sensing field, the angle based triangulation method discussed in Section 3.18.3.2 can be applied to estimate the source location. With more than one sources, a data correspondence problem must be resolved. Such a problem has been addressed in terms of N-Ocular stereo[38].

Assume that each of the nth sensor detects K distinct incidence angles from the K sources. Thus, there are ![]() incidence angles

incidence angles ![]() . If at the nth sensor, it receives a source signal with incidence angle

. If at the nth sensor, it receives a source signal with incidence angle ![]() , then the source location may be expressed as

, then the source location may be expressed as

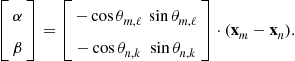

(18.53)

(18.53)

Similarly, for the mth sensor, for the ![]() th incidence angle, the source location is at

th incidence angle, the source location is at

![]()

Therefore, if these two sources are the same source (e.g., ![]() ), then one must have a valid solution, namely,

), then one must have a valid solution, namely, ![]() , and

, and ![]() to the following linear system of equations:

to the following linear system of equations:

(18.54)

(18.54)

The solution can be represented expressively as:

(18.55)

(18.55)

If both ![]() and ![]() are positive, then ![]() is a potential source location; otherwise, the solution will be discarded. Since a potential source location is derived from the observations of two sensors, it is called a bi-ocular solution. Substituting the ![]() pairs of angles ![]() into Eq. (18.55), one may obtain up to ![]() valid solutions which are candidates for the K possible source locations, assuming there is no occlusion. The collection of these solutions will be denoted by ![]() indicating they are consistent with two sensor observations.
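The bi-ocular step can be sketched as a ray intersection. In this sketch each incidence ray is parameterized by a range along a unit direction vector, which is an assumed parameterization; the names `bi_ocular` and `bi_ocular_set` are hypothetical.

```python
import math

def bi_ocular(p_n, theta_n, p_m, theta_m):
    """Intersect two incidence rays; return the intersection point or None.

    A ray from a sensor at p with angle theta is
    p + rho * (cos theta, sin theta) with range rho > 0.
    """
    un = (math.cos(theta_n), math.sin(theta_n))
    um = (math.cos(theta_m), math.sin(theta_m))
    # Solve rho_n * un - rho_m * um = p_m - p_n, a 2x2 linear system.
    det = -un[0] * um[1] + un[1] * um[0]
    if abs(det) < 1e-12:
        return None  # parallel rays never intersect
    dx, dy = p_m[0] - p_n[0], p_m[1] - p_n[1]
    rho_n = (-dx * um[1] + dy * um[0]) / det
    rho_m = (un[0] * dy - un[1] * dx) / det
    if rho_n <= 0 or rho_m <= 0:
        return None  # intersection lies behind one of the sensors
    return (p_n[0] + rho_n * un[0], p_n[1] + rho_n * un[1])

def bi_ocular_set(p_n, angles_n, p_m, angles_m):
    """All valid intersections over every pair of incidence angles."""
    out = []
    for a in angles_n:
        for b in angles_m:
            pt = bi_ocular(p_n, a, p_m, b)
            if pt is not None:
                out.append(pt)
    return out

# Hypothetical scene: two sensors observing two sources.
p_n, p_m = (0.0, 0.0), (10.0, 0.0)
sources = [(3.0, 4.0), (7.0, 2.0)]
angles_n = [math.atan2(y - p_n[1], x - p_n[0]) for x, y in sources]
angles_m = [math.atan2(y - p_m[1], x - p_m[0]) for x, y in sources]
candidates = bi_ocular_set(p_n, angles_n, p_m, angles_m)
```

In this scene all four angle pairs produce valid intersections, so the candidate set contains the two true sources together with two false matches that a further sensor must eliminate.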

Now for a third sensor at ![]() with incidence angles ![]() , one may test if any of the potential solutions in ![]() lies on any of the K incidence rays originating from ![]() . This is easily accomplished by evaluating

![]() (18.56)

If the resulting ![]() , then the candidate solution r will be promoted into a tri-ocular solution set ![]() for being consistent with three sensor observations. Repeat the above procedure until ![]() is obtained or the size of the solution set reduces to K. Then the procedure is terminated and the K source positions are obtained.
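The promotion test can be sketched as a bearing comparison. This is not necessarily the test statistic of Eq. (18.56); it is an assumed equivalent that compares the bearing from the third sensor to each candidate against the observed incidence angles, within a tolerance `tol` chosen for illustration. The candidate list below is a hypothetical bi-ocular set containing two true sources and two false matches.

```python
import math

def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return math.atan2(math.sin(a - b), math.cos(a - b))

def promote(candidates, p_third, angles_third, tol=0.01):
    """Keep the candidates that lie on some incidence ray of the third sensor."""
    kept = []
    for c in candidates:
        bearing = math.atan2(c[1] - p_third[1], c[0] - p_third[0])
        if any(abs(angle_diff(bearing, a)) < tol for a in angles_third):
            kept.append(c)
    return kept

# Hypothetical bi-ocular candidates: two sources plus two false matches.
candidates = [(3.0, 4.0), (10 / 3, 40 / 9), (20 / 3, 40 / 21), (7.0, 2.0)]
p_third = (5.0, 10.0)
sources = [(3.0, 4.0), (7.0, 2.0)]
angles_third = [math.atan2(y - p_third[1], x - p_third[0]) for x, y in sources]
tri_ocular = promote(candidates, p_third, angles_third)
```

Here the two false matches are off the third sensor's incidence rays by more than the tolerance, so only the two true source positions survive.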

Two issues need to be addressed when applying the above procedure: (a) A false matching solution may be obtained where many incidence rays intersect but no source exists. Fortunately, each incidence ray passing through a false matching position should have two or more matching points. Thus, a false matching solution may be identified if every incidence ray passing through it has more than one matching point. (b) The azimuth incidence angle estimates may be inaccurate. Hence the intersections may not coincide at the same position. Several remedies for this problem have been discussed in [38]. In practice, one may partition the sensing field into mesh grids whose size is roughly equal to the angle estimation accuracy. The position of a potential N-ocular solution is then registered with the corresponding mesh grid rather than a specific point. A mesh grid will be included in ![]() if m intersections fall within its range.

3.18.6 Target tracking algorithm

So far, in this chapter, source localization is performed based solely on a single snapshot at time t of sensor readings at N sensors in the sensing field. If past sensor readings about the same sources can be incorporated, the source location estimates are likely to be much more accurate.

3.18.6.1 Dynamic and observation models

Statistically, tracking is modeled as a sequential Bayesian estimation problem of a dynamic system whose states obey a Markov model. In the context of source localization and tracking, the dynamic system that describes a single target moving in a 2D plane has the form

(18.57)

where ![]() is the time duration between t and ![]() , and ![]() is a zero mean Gaussian random number with variance ![]() which models the acceleration.
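A dynamic model of this kind can be simulated in a few lines. The sketch below assumes the standard constant-velocity form with state [x, y, vx, vy] and an independent zero mean Gaussian acceleration on each axis; the symbol names `T` and `sigma_a` and the function `step` are illustrative choices rather than the chapter's notation.

```python
import random

def step(state, T, sigma_a, rng):
    """Advance [x, y, vx, vy] by one interval T under random acceleration."""
    x, y, vx, vy = state
    ax = rng.gauss(0.0, sigma_a)  # zero mean acceleration, std. dev. sigma_a
    ay = rng.gauss(0.0, sigma_a)
    return [
        x + T * vx + 0.5 * T * T * ax,
        y + T * vy + 0.5 * T * T * ay,
        vx + T * ax,
        vy + T * ay,
    ]

rng = random.Random(0)          # seeded for repeatability
state = [0.0, 0.0, 1.0, 0.5]    # start at the origin with velocity (1, 0.5)
track = [state]
for _ in range(10):
    state = step(state, T=1.0, sigma_a=0.1, rng=rng)
    track.append(state)
```

Setting `sigma_a` to zero reduces the model to straight-line motion at constant speed.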

The signals received at the N sensors as a function of source location ![]() and source signal ![]() give the observation model. Examples of observation models include Eqs. (18.15) and (18.18). These nonlinear models may be expressed as:

![]() (18.58)

where the observation noise ![]() is a zero mean Gaussian random variable with variance ![]() . Sometimes, an intermediate observation model is used instead, such as the sensor-to-source distance estimates of Eq. (18.12) or Eq. (18.25), or the source signal incidence angle estimate of Eq. (18.53).

In general, the sensor observation ![]() , or the intermediate measurements, are highly nonlinear functions of the source position and speed ![]() . Alternatively, one may apply methods discussed in this chapter to estimate ![]() based only on the current observation ![]() , and express a derived observation equation as:

![]() (18.59)

3.18.6.2 Sequential Bayesian estimation

The state transition and observation models in Eqs. (18.57) and (18.58) can be described by a Markov chain model such that

![]()

and

![]()

As such, it is easily verified that

![]() (18.60)

Denote ![]() to be the observations up to time t, and ![]() to be the conditional probability of ![]() given ![]() . Given the state estimate (location and speed of the source) at the previous time step ![]() , the dynamic model Eq. (18.57) allows the prediction of the location and speed of the source at time t:

![]() (18.61)

where

![]() (18.62)

has a normal distribution. Similarly,

![]() (18.63)

Applying Bayes' rule, one has

(18.64)
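The predict-then-update recursion can be made concrete on a small discrete state space. The sketch below is a one-dimensional grid (histogram) filter, used here only because the sums can be evaluated exactly; the motion probabilities, the Gaussian-shaped likelihood, and the readings are made-up values for illustration.

```python
import math

def predict(belief, transition):
    """p(x_t | Z_{t-1}) = sum_j p(x_t | x_{t-1} = j) p(x_{t-1} = j | Z_{t-1})."""
    n = len(belief)
    return [sum(transition[j][i] * belief[j] for j in range(n)) for i in range(n)]

def update(prior, likelihood):
    """p(x_t | Z_t) is proportional to p(z_t | x_t) p(x_t | Z_{t-1})."""
    post = [l * p for l, p in zip(likelihood, prior)]
    total = sum(post)
    return [p / total for p in post]

n = 10
# Hypothetical Markov motion model: stay with prob. 0.2, move one cell right with 0.8.
transition = [[0.0] * n for _ in range(n)]
for j in range(n):
    transition[j][j] += 0.2
    transition[j][min(j + 1, n - 1)] += 0.8

def likelihood(z, sigma=1.0):
    """Gaussian-shaped p(z | x = i) for a noisy position reading z."""
    return [math.exp(-0.5 * ((z - i) / sigma) ** 2) for i in range(n)]

belief = [1.0 / n] * n            # uniform prior over the cells
for z in [2.1, 2.9, 4.2, 5.0]:    # made-up position readings
    belief = update(predict(belief, transition), likelihood(z))
estimate = max(range(n), key=lambda i: belief[i])
```

The Kalman filter performs the same two steps in closed form when the models are linear and Gaussian.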

3.18.6.3 Kalman filter

Based on the sequential Bayesian formulation, one may deduce the well-known Kalman filter for the linear observation model (Eq. (18.59)). Specifically, the Kalman filter computes the mean and covariance matrix of the probability distribution

![]()

where

![]()

3.18.6.3.1 Prediction phase

Given ![]() , the dynamic model Eq. (18.57) allows one to predict the a priori estimate of the current state:

![]() (18.65)

Hence,

![]()

The corresponding prediction error covariance matrix is:

(18.66)

Equations (18.65) and (18.66) constitute the prediction phase of a Kalman filter.

3.18.6.3.2 Update phase

Given the predicted source position and speed ![]() , each sensor may compute an expected received signal ![]() and the corresponding innovation (prediction error) when compared to the actual received signal ![]() as

![]() (18.67)

The corresponding covariance matrix then is

![]() (18.68)

Applying the least squares principle, the optimal Kalman gain matrix may be expressed as:

![]() (18.69)

Finally, the update equation of the state estimation is:

![]() (18.70)

In other words, the optimal estimate of the source location and speed is a linear combination of the predicted location and speed and a correction term based on sensor observations. Moreover, the covariance matrix of estimation error will also be updated:

![]() (18.71)
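The prediction and update phases can be combined into a single routine. The sketch below uses the textbook form of the Kalman filter with assumed symbols (F, Q, H, R, P) that may differ from the chapter's notation, a constant-velocity state [x, y, vx, vy], and a derived position-only observation in the spirit of Eq. (18.59). Matrices are plain lists of rows so that only the standard library is needed; all numerical values are illustrative.

```python
import random

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def add(a, b, sign=1.0):
    """Elementwise a + sign * b."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def inv2(m):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def kalman_step(x, P, z, F, Q, H, R):
    """One prediction phase followed by one update phase."""
    # Prediction: a priori state estimate and its error covariance.
    x_pred = matmul(F, x)
    P_pred = add(matmul(matmul(F, P), transpose(F)), Q)
    # Update: innovation, innovation covariance, gain, a posteriori estimate.
    innovation = add(z, matmul(H, x_pred), sign=-1.0)
    S = add(matmul(matmul(H, P_pred), transpose(H)), R)
    K = matmul(matmul(P_pred, transpose(H)), inv2(S))
    x_new = add(x_pred, matmul(K, innovation))
    eye = [[float(i == j) for j in range(len(x))] for i in range(len(x))]
    P_new = matmul(add(eye, matmul(K, H), sign=-1.0), P_pred)
    return x_new, P_new

T, sigma_a, sigma_z = 1.0, 0.1, 0.5
F = [[1, 0, T, 0], [0, 1, 0, T], [0, 0, 1, 0], [0, 0, 0, 1]]
G = [[0.5 * T * T, 0], [0, 0.5 * T * T], [T, 0], [0, T]]
Q = [[sigma_a**2 * v for v in row] for row in matmul(G, transpose(G))]
H = [[1, 0, 0, 0], [0, 1, 0, 0]]  # derived observation: position only
R = [[sigma_z**2, 0], [0, sigma_z**2]]

rng = random.Random(1)
x = [[0.0], [0.0], [0.0], [0.0]]                              # initial state guess
P = [[100.0 * (i == j) for j in range(4)] for i in range(4)]  # large initial uncertainty
for t in range(1, 21):
    true_pos = (1.0 * t, 0.5 * t)  # target moving at constant velocity (1, 0.5)
    z = [[true_pos[0] + rng.gauss(0, sigma_z)], [true_pos[1] + rng.gauss(0, sigma_z)]]
    x, P = kalman_step(x, P, z, F, Q, H, R)
```

The 2x2 inverse suffices here because the observation is two-dimensional; a general implementation would solve the linear system for the gain instead.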

3.18.6.3.3 Nonlinear observation model

The Kalman filter tracking equations developed so far are based on the linear observation model Eq. (18.59). It facilitates the closed-form expression of the prediction error covariance matrix Eq. (18.68). However, as discussed earlier, many practical observation models are nonlinear in nature. A number of techniques have been developed to deal with this challenge.

With an extended Kalman filter (EKF), the nonlinear observation model is replaced by an approximate linear model such that

where ![]() . There is also the unscented Kalman filter (UKF) [39], which can further improve on the accuracy of the EKF.
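The linearization used by the EKF can be illustrated with a range-only observation model. In this sketch the observation function `h` returns the distance from the source to each sensor, which is an assumed example of a nonlinear model, and `jacobian` evaluates its partial derivatives at the predicted state; both names and the sensor layout are hypothetical.

```python
import math

def h(state, sensors):
    """Nonlinear observation: distance from the source to each sensor."""
    x, y = state[0], state[1]
    return [math.hypot(x - sx, y - sy) for sx, sy in sensors]

def jacobian(state, sensors):
    """Rows are the partial derivatives of each range w.r.t. [x, y, vx, vy]."""
    x, y = state[0], state[1]
    rows = []
    for sx, sy in sensors:
        d = math.hypot(x - sx, y - sy)
        rows.append([(x - sx) / d, (y - sy) / d, 0.0, 0.0])
    return rows

sensors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
x_pred = [3.0, 4.0, 1.0, 0.5]      # predicted state, used as the linearization point
H = jacobian(x_pred, sensors)

# First-order approximation h(x) ~ h(x_pred) + H (x - x_pred) near x_pred.
x_near = [3.1, 3.9, 1.0, 0.5]
delta = [a - b for a, b in zip(x_near, x_pred)]
approx = [h0 + sum(hij * dj for hij, dj in zip(row, delta))
          for h0, row in zip(h(x_pred, sensors), H)]
exact = h(x_near, sensors)
```

The matrix `H` then takes the place of the linear observation matrix in the Kalman gain and covariance updates, while the innovation is still computed with the nonlinear function `h`.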

For extremely nonlinear models, a particle filter [18,40,41] may be applied to facilitate more accurate, albeit more computationally intensive, tracking. Briefly speaking, in a particle filter, the probability distribution is approximated by a probability mass function (pmf) evaluated at a set of randomly sampled points (particles). Then, the sequential Bayesian estimation is carried out on individual particles.
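A minimal bootstrap particle filter is sketched below. It is one simple variant among many and is not taken from the cited references: particles are propagated through an assumed constant-velocity model, weighted by a Gaussian likelihood of range-only readings, and resampled with multinomial resampling. The sensor layout, noise levels, and particle count are illustrative.

```python
import math
import random

def propagate(p, T, sigma_a, rng):
    """Move one particle [x, y, vx, vy] through the dynamic model."""
    ax, ay = rng.gauss(0, sigma_a), rng.gauss(0, sigma_a)
    return [p[0] + T * p[2] + 0.5 * T * T * ax,
            p[1] + T * p[3] + 0.5 * T * T * ay,
            p[2] + T * ax, p[3] + T * ay]

def likelihood(p, z, sensors, sigma_z):
    """Gaussian likelihood of the range readings z for particle p."""
    err = sum((zn - math.hypot(p[0] - sx, p[1] - sy)) ** 2
              for zn, (sx, sy) in zip(z, sensors))
    return math.exp(-0.5 * err / sigma_z**2)

def particle_filter_step(particles, z, sensors, T, sigma_a, sigma_z, rng):
    """Propagate, weight, estimate, and resample the particle set."""
    particles = [propagate(p, T, sigma_a, rng) for p in particles]
    weights = [likelihood(p, z, sensors, sigma_z) for p in particles]
    total = sum(weights)
    if total == 0.0:  # every particle is far from the data; fall back to uniform
        weights = [1.0] * len(particles)
        total = float(len(particles))
    weights = [w / total for w in weights]
    # The weighted mean of the particles approximates the posterior mean.
    estimate = [sum(w * p[i] for w, p in zip(weights, particles)) for i in range(4)]
    # Multinomial resampling: draw particles in proportion to their weights.
    drawn = rng.choices(particles, weights=weights, k=len(particles))
    return [list(p) for p in drawn], estimate

rng = random.Random(7)
sensors = [(0.0, 0.0), (20.0, 0.0), (0.0, 20.0)]
N = 500
particles = [[rng.uniform(-2, 2), rng.uniform(-2, 2),
              rng.uniform(0, 2), rng.uniform(-0.5, 1.5)] for _ in range(N)]
truth = [0.0, 0.0, 1.0, 0.5]
for _ in range(10):
    truth = propagate(truth, 1.0, 0.0, rng)  # noise-free true trajectory
    z = [math.hypot(truth[0] - sx, truth[1] - sy) + rng.gauss(0, 0.5)
         for sx, sy in sensors]
    particles, estimate = particle_filter_step(particles, z, sensors, 1.0, 0.2, 0.5, rng)
```

No linearization of the range model is needed, at the cost of evaluating the likelihood once per particle at every time step.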

3.18.7 Conclusion

In this chapter, source localization algorithms in the context of wireless sensor networks are discussed. A distinctive feature of this chapter is the separation of the received source signal model from the basic triangulation algorithms. The development also reveals basic relations among several well studied families of localization algorithms. The significance of tracking algorithms in the localization task is also discussed, and some basic tracking algorithms are reviewed.

Relevant Theory: Signal Processing Theory, Machine Learning, Statistical Signal Processing, and Array Signal Processing

See Vol. 1, Chapter 2, Continuous-Time Signals and Systems

See Vol. 1, Chapter 3, Discrete-Time Signals and Systems

See Vol. 1, Chapter 4, Random Signals and Stochastic Processes

See Vol. 1, Chapter 11, Parametric Estimation

See Vol. 1, Chapter 19, A Tutorial Introduction to Monte Carlo Methods

See this volume, Chapter 5, Distributed Signal Detection

See this volume, Chapter 7, Geolocation—Maps, Measurements, Models, and Methods

See this volume, Chapter 19, Array Processing in the Face of Nonidealities

References

1. Daly P. Electron Commun Eng J. 1993;5:349–357.

2. Parkinson BW, Spilker JJ, eds. Global Positioning System: Theory and Applications. vol. 1. American Institute of Aeronautics and Astronautics 1996.

3. Owsley NL. In: Haykin S, ed. Array Signal Processing. Englewood-Cliffs, NJ: Prentice-Hall; 1991.

4. Zhou S, Willett P. IEEE Trans Signal Process. 2007;55:3104–3115.

5. Valin P, Jouan A, Bosse E. In: Bellingham, WA, USA, Orlando, FL, USA: Society of Photo-Optical Instrumentation Engineers; 1999;126–138. Proc SPIE-1999 Sensor Fusion: Architectures, Algorithms, and Applications III. vol. 3719.

6. Duckworth GL, Frey ML, Remer CE, Ritter S, Vidaver G. In: The International Society for Optical Engineering 1995;16–29. Proc SPIE. vol. 2344.

7. Friedrich C, Wegler U. Geophys Res Lett. 2005;32:L14312.

8. Gajewski D, Sommer K, Vanelle C, Patzig R. Geophysics. 2009;74:WB55–WB61.

9. J. Zhao, Seismic signal processing for near-field source localization, Ph.D. Dissertation, University of California, Los Angeles, 2007.

10. Ramirez RR. Scholarpedia. 2008;3(11):1073.

11. B.C. Basu, S.A. Pentland, in: Proceedings of ICASSP’01, vol. 5, pp. 3361–3364.

12. Aarabi P, Mahdavi A. In: Proceedings of ICASSP’02. IEEE 2002;273–276.

13. Chen JC, Yao K, Hudson RE. IEEE Signal Process Mag. 2002;19:30–39.

14. Chen J, Hudson RE, Yao K. IEEE Trans Signal Process. 2002;50:1843–1854.

15. Hu YH, Sheng X, Li D. In: IEEE Workshop on Multimedia Signal Processing. St. Thomas, Virgin Islands: IEEE; 2002.

16. Li D, Hu YH. EURASIP J Appl Signal Process. 2003;321–337.

17. Sheng X, Hu YH. In: Proceedings of the International Symposium on Information Processing in Sensor Networks (IPSN’03). Palo Alto, CA: Springer-Verlag; 2003;285–300.

18. Sheng X, Hu YH. In: Montreal, Canada: IEEE; 2004;972–975. Proceedings of ICASSP’04. vol. 3.

19. Sheng X, Hu YH. IEEE Trans Signal Process. 2005;53:44–53.

20. Amari S, Cichocki A, Yang HH. In: Proceedings of Advances in Neural Information Processing Systems (NIPS’96). MIT Press 1996;757–763.

21. Cardoso JF. Proc IEEE. 1998;86:2009–2025.

22. Douglas SC. In: Hu YH, Hwang JN, eds. Handbook of Neural Network Signal Processing. Boca Raton, FL: CRC Press; 2001; Chapter 7.

23. Gelle G, Colas M, Delaunay G. Mech Syst Signal Process. 2000;14:427–442.

24. Chang K-C, Chong C-Y, Bar-Shalom Y. IEEE Trans Automatic Control. 1986;31:889–897.

25. M. Ito, S. Tsujimichi, Y. Kosuge, in: Proceedings of the International Conference on Industrial Electronics, Control and Instrumentation, New Orleans, LA, vol. 3, pp. 1260–1264.

26. Savarese C, Rabaey JM, Beutel J. In: Proceedings of ICASSP’2001. Salt Lake City, UT: IEEE; 2001;2676–2679.

27. Seshadri V, Zaruba G, Huber MA. In: Proceedings of the International Conference on Pervasive Computing and Communications (PerCom’05). IEEE 2005;75–84.

28. Knapp CH, Carter GC. IEEE Trans Acoust Speech Signal Process. 1976;24:320–327.

29. Carter GC, ed. Coherence and Time Delay Estimation. IEEE Press 1993.

30. Chan YT, Hattin RV, Plant JB. IEEE Trans Acoust Speech Signal Process. 1978;26:217–222.

31. Tung TL, Yao K, Reed CW, Hudson RE, Chen D, Chen JC. In: The International Society for Optical Engineering 1999;220–233. Proceedings of SPIE. vol. 3807.

32. Benesty J, Chen J, Huang Y. IEEE Trans Speech Audio Process. 2004;12(5):509–519.

33. Gustafsson F, Gunnarsson F. In: IEEE 2003;553–556. Proceedings of ICASSP’03. vol. 6.

34. Yang L, Ho KC. IEEE Trans Signal Process. 2009;57:4598–4615.

35. Krim H, Viberg M. IEEE Signal Process Mag. 1996;13(4):67–94.

36. Tuncer E, Friedlander B, eds. Classical and Modern Direction-of-Arrival Estimation. Academic Press 2010.

37. Stoica P, Nehorai A. IEEE Trans Acoust Speech Signal Process. 1989;37:720–741.

38. Sogo T, Ishiguro H, Trivedi MM. In: Proceedings of IEEE Workshop Omnidirectional Vision. IEEE 2000;153–160.

39. Julier SJ, Uhlmann JK. Proc IEEE. 2004;92:401–422.

40. Arulampalam M, Maskell S, Gordon N, Clapp T. IEEE Trans Signal Process. 2002;50:174–188.

41. Sheng X, Hu YH. In: Proceedings of 4th International Symposium on Information Processing in Sensor Networks (IPSN ’05). Los Angeles, CA: IEEE; 2005.