In this recipe, we will use one of the methods for finding characteristic features in an image: the SURF (Speeded-Up Robust Features) algorithm, as implemented in the OpenCV library.

We will be using the OpenCV library, so please refer to the Integrating with OpenCV recipe for information on how to set up your project. We will also need a sample image: save it in your assets folder as image.png, then save a copy of it as image2.png and apply some transformation to the copy, for example a rotation.

We will create an application that visualizes matched features between two images. Perform the following steps to do so:

- Add the paths to the OpenCV library files in the Other Linker Flags section of your project's build settings, for example:

```
$(CINDER_PATH)/blocks/opencv/lib/macosx/libopencv_imgproc.a
$(CINDER_PATH)/blocks/opencv/lib/macosx/libopencv_core.a
$(CINDER_PATH)/blocks/opencv/lib/macosx/libopencv_objdetect.a
$(CINDER_PATH)/blocks/opencv/lib/macosx/libopencv_features2d.a
$(CINDER_PATH)/blocks/opencv/lib/macosx/libopencv_flann.a
```

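- Include the necessary headers:

```cpp
#include "cinder/gl/Texture.h"
#include "cinder/Surface.h"
#include "cinder/ImageIo.h"
#include "CinderOpenCV.h" // from the Cinder OpenCV block: toOcv(), fromOcv() and the OpenCV headers
```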

- In your main class declaration add the method and properties:

```cpp
int matchImages( Surface8u img1, Surface8u img2 );
Surface8u   mImage, mImage2;
gl::Texture mMatchesImage;
```

- Inside the setup method, load the images and invoke the matching method:

```cpp
mImage = loadImage( loadAsset( "image.png" ) );
mImage2 = loadImage( loadAsset( "image2.png" ) );
int numberOfMatches = matchImages( mImage, mImage2 );
```

- Now implement the previously declared matchImages method:

```cpp
int MainApp::matchImages( Surface8u img1, Surface8u img2 )
{
    // Convert both Cinder surfaces to OpenCV matrices, then to grayscale
    cv::Mat image1( toOcv( img1 ) );
    cv::cvtColor( image1, image1, CV_BGR2GRAY );
    cv::Mat image2( toOcv( img2 ) );
    cv::cvtColor( image2, image2, CV_BGR2GRAY );

    // Detect the keypoints using the SURF detector
    std::vector<cv::KeyPoint> keypoints1, keypoints2;
    cv::SurfFeatureDetector detector;
    detector.detect( image1, keypoints1 );
    detector.detect( image2, keypoints2 );

    // Calculate descriptors (feature vectors), one row per keypoint
    cv::SurfDescriptorExtractor extractor;
    cv::Mat descriptors1, descriptors2;
    extractor.compute( image1, keypoints1, descriptors1 );
    extractor.compute( image2, keypoints2, descriptors2 );

    // Nothing to match if either image produced no keypoints
    if( descriptors1.empty() || descriptors2.empty() )
        return 0;

    // Matching: one best match in image2 for every descriptor of image1
    cv::FlannBasedMatcher matcher;
    std::vector<cv::DMatch> matches;
    matcher.match( descriptors1, descriptors2, matches );

    // Find the smallest distance between matched descriptors
    double min_dist = 100;
    for( size_t i = 0; i < matches.size(); i++ ) {
        double dist = matches[i].distance;
        if( dist < min_dist ) min_dist = dist;
    }

    // Keep only "good" matches: distance below twice the minimum distance,
    // with a small floor (0.02) so that perfect matches (distance 0) are not discarded
    double threshold = 2 * min_dist;
    if( threshold < 0.02 ) threshold = 0.02;
    std::vector<cv::DMatch> good_matches;
    for( size_t i = 0; i < matches.size(); i++ ) {
        if( matches[i].distance < threshold )
            good_matches.push_back( matches[i] );
    }

    // Draw the good matches side by side and upload the result as a texture
    cv::Mat img_matches;
    cv::drawMatches( image1, keypoints1, image2, keypoints2, good_matches, img_matches,
                     cv::Scalar::all( -1 ), cv::Scalar::all( -1 ), std::vector<char>(),
                     cv::DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );
    mMatchesImage = gl::Texture( fromOcv( img_matches ) );

    return static_cast<int>( good_matches.size() );
}
```

- The last thing is to visualize the matches, so put the following line of code inside the draw method:

```cpp
gl::draw( mMatchesImage );
```
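The distance-based filtering at the end of matchImages does not depend on OpenCV, so it can be sketched and tested on its own. The following is a minimal sketch, assuming a hypothetical `Match` struct that stands in for `cv::DMatch` (only its `distance` field matters here). It applies the same rule as the recipe, keeping matches whose distance is below twice the smallest distance; the `minThreshold` parameter is an assumption added for the case where the smallest distance is 0 (for example, two identical images), which would otherwise reject every match.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields this filter needs.
struct Match {
    int queryIdx;    // index of the descriptor in the first image
    int trainIdx;    // index of the matched descriptor in the second image
    float distance;  // distance between the two descriptors
};

// Keeps matches whose distance is strictly below max(2 * minimum distance, minThreshold),
// mirroring the "good matches" rule used in matchImages.
std::vector<Match> filterGoodMatches(const std::vector<Match>& matches,
                                     double minThreshold = 0.02) {
    std::vector<Match> good;
    if (matches.empty()) {
        return good;  // nothing to filter
    }
    // Find the smallest distance among all matches.
    double minDist = matches.front().distance;
    for (const Match& m : matches) {
        minDist = std::min(minDist, static_cast<double>(m.distance));
    }
    // Without the floor, a minimum distance of 0 would make the threshold 0
    // and the strict comparison below would reject everything.
    const double threshold = std::max(2.0 * minDist, minThreshold);
    for (const Match& m : matches) {
        if (m.distance < threshold) {
            good.push_back(m);
        }
    }
    return good;
}
```

Because the threshold is relative to the best match, the rule adapts to how similar the two images are overall, at the cost of depending heavily on that single best distance.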

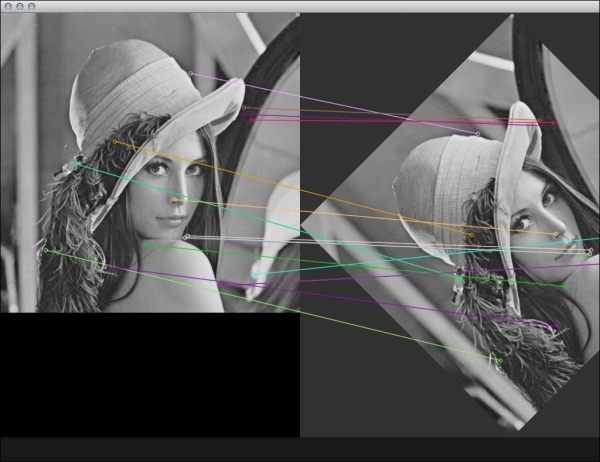

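Let's discuss the matchImages method from step 5. First, we convert the two Cinder surfaces, img1 and img2, to OpenCV cv::Mat structures (image1 and image2) and then convert both to grayscale. Now we can process the images with SURF: the detector finds keypoints, the characteristic points of each image, and the descriptor extractor computes a feature vector (descriptor) for every keypoint. We then match the descriptors of the two images using FLANN (Fast Library for Approximate Nearest Neighbors), or more precisely the cv::FlannBasedMatcher class, which finds the closest descriptor in the second image for each descriptor in the first. After filtering the matches, keeping only those whose distance is small compared to the best match (less than twice the minimum distance), and storing them in the good_matches vector, we draw them with cv::drawMatches, producing the result shown in the preceding screenshot.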
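To make the matching step more concrete: for every descriptor of the first image, `matcher.match` reports the closest descriptor of the second image together with their distance. The following minimal sketch does the same thing by exhaustive (brute-force) search over plain `std::vector<float>` descriptors using Euclidean (L2) distance. It is not how FLANN works internally: `cv::FlannBasedMatcher` builds approximate nearest-neighbor index structures to avoid comparing every pair, so on real data it may occasionally return a slightly farther neighbor. The `Descriptor` alias and the `NearestMatch`, `l2Distance` and `bruteForceMatch` names are hypothetical, chosen for this illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical type: one descriptor corresponds to one row of the descriptor matrix.
using Descriptor = std::vector<float>;

struct NearestMatch {
    int queryIdx;    // index into the first descriptor set
    int trainIdx;    // index of the closest descriptor in the second set
    float distance;  // Euclidean (L2) distance between the two descriptors
};

// Euclidean distance between two descriptors; assumes both have the same length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    double sum = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const double d = static_cast<double>(a[k]) - b[k];
        sum += d * d;
    }
    return static_cast<float>(std::sqrt(sum));
}

// For each query descriptor, finds the closest train descriptor by exhaustive search.
std::vector<NearestMatch> bruteForceMatch(const std::vector<Descriptor>& query,
                                          const std::vector<Descriptor>& train) {
    std::vector<NearestMatch> result;
    if (train.empty()) {
        return result;  // no candidates to match against
    }
    for (std::size_t i = 0; i < query.size(); ++i) {
        NearestMatch best{static_cast<int>(i), -1, std::numeric_limits<float>::max()};
        for (std::size_t j = 0; j < train.size(); ++j) {
            const float d = l2Distance(query[i], train[j]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(j);
                best.distance = d;
            }
        }
        result.push_back(best);
    }
    return result;
}
```

The result holds exactly one entry per query descriptor, which is why matchImages can scan every element of matches when looking for the minimum distance. The exhaustive search costs one distance computation per descriptor pair, which is the cost an approximate matcher such as FLANN is designed to reduce.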

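Notice that the second image is rotated; however, the algorithm can still find and link the corresponding keypoints. This works because SURF assigns each keypoint a dominant orientation and computes its descriptor relative to that orientation, which makes the descriptors largely rotation-invariant.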

Detecting characteristic features in images is crucial for matching pictures and is part of more advanced algorithms used in augmented reality applications.

- A comparison of OpenCV's feature detection algorithms can be found at http://computer-vision-talks.com/2011/01/comparison-of-the-opencvs-feature-detection-algorithms-2/