CHAPTER 5

Statistical Estimation

PART I: THEORY

5.1 GENERAL DISCUSSION

Point estimators are sample statistics that are designed to yield numerical estimates of certain characteristics of interest of the parent distribution. While in testing hypotheses we are generally interested in drawing general conclusions about the characteristics of the distribution, for example, whether its expected value (mean) is positive or negative, in problems of estimation we are concerned with the actual value of the characteristic. Generally, we can formulate, as in testing of hypotheses, a statistical model that expresses the available information concerning the type of distribution under consideration. In this connection, we distinguish between parametric and nonparametric (or distribution free) models. Parametric models specify parametric families of distributions. It is assumed in these cases that the observations in the sample are generated from a parent distribution that belongs to the prescribed family. The estimators that are applied in parametric models depend in their structure and properties on the specific parametric family under consideration. On the other hand, if we do not wish, for various reasons, to subject the estimation procedure to strong assumptions concerning the family to which the parent distribution belongs, a distribution free procedure may be more reasonable. In Example 5.1, we illustrate some of these ideas.

This chapter is devoted to the theory and applications of these types of estimators: unbiased, maximum likelihood, equivariant, moment equations, pretest, and robust estimators.

5.2 UNBIASED ESTIMATORS

5.2.1 General Definition and Example

Unbiased estimators of a characteristic θ(F) of F in ![]() is an estimator

is an estimator ![]() (X) satisfying

(X) satisfying

(5.2.1) ![]()

where X is a random vector representing the sample random variables. For example, if θ (F) = EF{X}, assuming that EF{|X|} < ∞ for all F ![]()

![]() , then the sample mean

, then the sample mean ![]() is an unbiased estimator of θ(F). Moreover, if VF{X} < ∞ for all F

is an unbiased estimator of θ(F). Moreover, if VF{X} < ∞ for all F ![]()

![]() then the sample variance

then the sample variance ![]() is an unbiased estimator of VF{X}. We note that all the examples of unbiased estimators given here are distribution free. They are valid for any distribution for which the expectation or the variance exist. For parametric models one can do better by using unbiased estimators which are functions of the minimal sufficient statistics. The comparison of unbiased estimators is in terms of their variances. Of two unbiased estimators, the one having a smaller variance is considered better, or more efficient. One reason for preferring the unbiased estimator with the smaller variance is in the connection between the variance of the estimator and the probability that it belongs to a fixed–width interval centered at the unknown characteristic. In Example 5.2, we illustrate a case in which the distribution–free estimator of the expectation is inefficient.

is an unbiased estimator of VF{X}. We note that all the examples of unbiased estimators given here are distribution free. They are valid for any distribution for which the expectation or the variance exist. For parametric models one can do better by using unbiased estimators which are functions of the minimal sufficient statistics. The comparison of unbiased estimators is in terms of their variances. Of two unbiased estimators, the one having a smaller variance is considered better, or more efficient. One reason for preferring the unbiased estimator with the smaller variance is in the connection between the variance of the estimator and the probability that it belongs to a fixed–width interval centered at the unknown characteristic. In Example 5.2, we illustrate a case in which the distribution–free estimator of the expectation is inefficient.

5.2.2 Minimum Variance Unbiased Estimators

In Example 5.2, one can see a case where an unbiased estimator, which is not a function of the minimal sufficient statistic (m.s.s.), has a larger variance than the one based on the m.s.s. The question is whether this result holds generally. The main theorem of this section establishes that if a family of distribution functions admits a complete sufficient statistic then the minimum variance unbiased estimator (MVUE) is unique, with probability one, and is a function of that statistic. The following is the fundamental theorem of the theory of unbiased estimation. It was proven by Rao (1945, 1947, 1949), Blackwell (1947), and Lehmann and Scheffé (1950).

Theorem 5.2.1 (The Rao–Blackwell–Lehmann–Scheffé Theorem) Let ![]() = {F(x;θ);θ

= {F(x;θ);θ ![]() Θ} be a parametric family of distributions of a random vector X = (X1, …, Xn). Suppose that ω = g(θ) has an unbiased estimator

Θ} be a parametric family of distributions of a random vector X = (X1, …, Xn). Suppose that ω = g(θ) has an unbiased estimator ![]() (X). If

(X). If ![]() admits a (minimal) sufficient statistic T(X) then

admits a (minimal) sufficient statistic T(X) then

(5.2.2) ![]()

is an unbiased estimator of ω and

for all θ ![]() Θ. Furthermore, if T(X) is a complete sufficient statistic then

Θ. Furthermore, if T(X) is a complete sufficient statistic then ![]() is essentially the unique minimum variance, unbiased (MVU) estimator, for each θ in Θ.

is essentially the unique minimum variance, unbiased (MVU) estimator, for each θ in Θ.

Proof. (i) Since T(X) is a sufficient statistic, the conditional expectation E{![]() (X)| T(X)} does not depend on θ and is therefore a statistic. Moreover, according to the law of the iterated expectations and since

(X)| T(X)} does not depend on θ and is therefore a statistic. Moreover, according to the law of the iterated expectations and since ![]() (X) is unbiased, we obtain

(X) is unbiased, we obtain

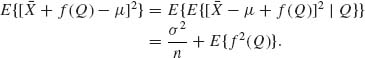

(5.2.4)

Hence, ![]() is an unbiased estimator of g(θ). By the law of the total variance,

is an unbiased estimator of g(θ). By the law of the total variance,

The second term on the RHS of (5.2.5) is the variance of ![]() . Moreover, Var{

. Moreover, Var{![]() (X)| T(X)} ≥ 0 with probability one for each θ in Θ. Hence, the first term on the RHS of (5.2.5) is nonnegative. This establishes (5.2.3).

(X)| T(X)} ≥ 0 with probability one for each θ in Θ. Hence, the first term on the RHS of (5.2.5) is nonnegative. This establishes (5.2.3).

(ii) Let T(X) be a complete sufficient statistic and assume that ![]() =

= ![]() 1(T(X)). Let

1(T(X)). Let ![]() (X) be any unbiased estimator of ω = g(θ), which depends on T(X), i.e.,

(X) be any unbiased estimator of ω = g(θ), which depends on T(X), i.e., ![]() (X) =

(X) = ![]() 2(T(X)). Then, Eθ {

2(T(X)). Then, Eθ {![]() } = Eθ {

} = Eθ {![]() (X)} for all θ. Or, equivalently

(X)} for all θ. Or, equivalently

(5.2.6) ![]()

Hence, from the completeness of T(X), ![]() 1(T) =

1(T) = ![]() 2(T) with probability one for each θ

2(T) with probability one for each θ ![]() Θ. This proves that

Θ. This proves that ![]() =

= ![]() 1(T) is essentially unique and implies also that

1(T) is essentially unique and implies also that ![]() has the minimal variance at each θ. QED

has the minimal variance at each θ. QED

Part (i) of the above theorem provides also a method of constructing MVUEs. One starts with any unbiased estimator, as simple as possible, and then determines its conditional expectation, given T(X). This procedure of deriving MVUEs is called in the literature “Rao–Blackwellization.” Example 5.3 illustrates this method.

In the following section, we prove and illustrate an information lower bound for variances of unbiased estimators. This lower bound plays an important role in the theory of statistical inference.

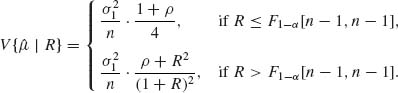

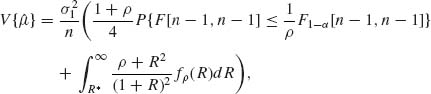

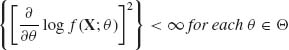

5.2.3 The Cramér–Rao Lower Bound for the One–Parameter Case

The following theorem was first proven by Fréchet (1943) and then by Rao (1945) and Cramér (1946). Although conditions (i)–(iii), (v) of the following theorem coincide with conditions (3.7.8) we restate them. Conditions (i)–(iv) will be labeled the Cramér–Rao (CR) regularity conditions.

Theorem 5.2.2. Let ![]() be a one–parameter family of distributions of a random vector X = (X1, …, Xn), having probability density functions (p.d.f.s) f(x;θ), θ

be a one–parameter family of distributions of a random vector X = (X1, …, Xn), having probability density functions (p.d.f.s) f(x;θ), θ ![]() Θ. Let ω (θ) be a differentiable function of θ and

Θ. Let ω (θ) be a differentiable function of θ and ![]() (X) an unbiased estimator of ω (θ). Assume that the following regularity conditions hold:

(X) an unbiased estimator of ω (θ). Assume that the following regularity conditions hold:

![]()

![]()

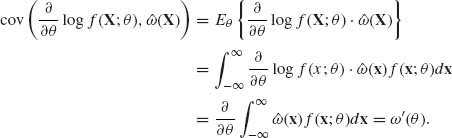

Proof. Consider the covariance, for a given θ value, between ![]() log f(X;θ) and

log f(X;θ) and ![]() (X). We have shown in (3.7.3) that under the above regularity conditions Eθ

(X). We have shown in (3.7.3) that under the above regularity conditions Eθ ![]() . Hence,

. Hence,

The interchange of differentiation and integration is justified by condition (iv). On the other hand, by the Schwarz inequality

since the variance of ![]() is equal to the Fisher information function In(θ), and the square of the coefficient of correlation between

is equal to the Fisher information function In(θ), and the square of the coefficient of correlation between ![]() (X) and

(X) and ![]() cannot exceed 1. From (5.2.8) and (5.2.9), we obtain the Cramér – Rao inequality (5.2.7). QED

cannot exceed 1. From (5.2.8) and (5.2.9), we obtain the Cramér – Rao inequality (5.2.7). QED

We show that if an unbiased estimator ![]() (X) has a distribution of the one–parameter exponential type, then the variance of

(X) has a distribution of the one–parameter exponential type, then the variance of ![]() (X) attains the Cramér – Rao lower bound. Indeed, let

(X) attains the Cramér – Rao lower bound. Indeed, let

(5.2.10) ![]()

where ![]() (θ) and K(θ) are differentiable, and

(θ) and K(θ) are differentiable, and ![]() ′(θ) ≠ 0 for all θ then

′(θ) ≠ 0 for all θ then

(5.2.11) ![]()

and

(5.2.12) ![]()

Since ![]() (X) is a sufficient statistic, In(θ) is equal to

(X) is a sufficient statistic, In(θ) is equal to

(5.2.13) ![]()

Moreover, ![]() (X) is an unbiased estimator of g(θ) = +K′(θ)/

(X) is an unbiased estimator of g(θ) = +K′(θ)/![]() ′(θ). Hence, we readily obtain that

′(θ). Hence, we readily obtain that

(5.2.14) ![]()

We ask now the question: if the variance of an unbiased estimator ![]() (X) attains the Cramér – Rao lower bound, can we infer that its distribution is of the one–parameter exponential type? Joshi (1976) provided a counter example. However, under the right regularity conditions the above implication can be made. These conditions were given first by Wijsman (1973) and then generalized by Joshi (1976).

(X) attains the Cramér – Rao lower bound, can we infer that its distribution is of the one–parameter exponential type? Joshi (1976) provided a counter example. However, under the right regularity conditions the above implication can be made. These conditions were given first by Wijsman (1973) and then generalized by Joshi (1976).

Bhattacharyya (1946) generalized the Cramér – Rao lower bound to (regular) cases where ω (θ) is k–times differentiable at all θ. This generalization shows that, under further regularity conditions, if ωi(θ) is the ith derivative of ω(θ) and V is a k × k positive definite matrix, for all θ, with elements

![]()

then

Fend (1959) has proven that if the distribution of X belongs to the one–parameter exponential family, and if the variance of an unbiased estimator of ω(θ), ![]() (X), attains the kth order Bhattacharyya lower bound (BLB) for all θ, but does not attain the (k – 1)st lower bound, then

(X), attains the kth order Bhattacharyya lower bound (BLB) for all θ, but does not attain the (k – 1)st lower bound, then ![]() (X) is a polynomial of degree k in U(X).

(X) is a polynomial of degree k in U(X).

5.2.4 Extension of the Cramér – Rao Inequality to Multiparameter Cases

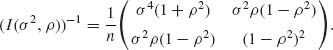

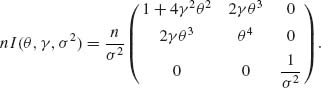

The Cramér – Rao inequality can be generalized to estimation problems in k–parameter models in the following manner. Suppose that ![]() is a family of distribution functions having density functions (or probability functions) f(x;θ) where θ = (θ1, …, θk)′ is a k–dimensional vector. Let I(θ) denote a k × k Fisher information matrix, with elements

is a family of distribution functions having density functions (or probability functions) f(x;θ) where θ = (θ1, …, θk)′ is a k–dimensional vector. Let I(θ) denote a k × k Fisher information matrix, with elements

![]()

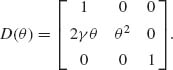

i, j = 1, …, k. We obviously assume that for each θ in the parameter space Θ, Iij(θ) is finite. It is easy to show that the matrix I(θ) is nonnegative definite. We will assume, however, that the Fisher information matrix is positive definite. Furthermore, let g1(θ), …, gr(θ) be r parametric functions r = 1, 2, …, k. Define the matrix of partial derivatives

(5.2.16) ![]()

where Dij(θ) = ![]() . Let

. Let ![]() (X) be an r–dimensional vector of unbiased estimators of g1(θ), …, gr(θ), i.e.,

(X) be an r–dimensional vector of unbiased estimators of g1(θ), …, gr(θ), i.e., ![]() (X) = (

(X) = (![]() 1(X), …,

1(X), …, ![]() r(X)). Let

r(X)). Let ![]() (

(![]() ) denote the variance – covariance matrix of

) denote the variance – covariance matrix of ![]() (X). The Cramér – Rao inequality can then be generalized, under regularity conditions similar to those of the theorem, to yield the inequality

(X). The Cramér – Rao inequality can then be generalized, under regularity conditions similar to those of the theorem, to yield the inequality

(5.2.17) ![]()

in the sense that ![]() (

(![]() ) – D(θ)(I(θ))−1D′(θ) is a nonnegative definite matrix. In the special case of one parameter function g(θ), if

) – D(θ)(I(θ))−1D′(θ) is a nonnegative definite matrix. In the special case of one parameter function g(θ), if ![]() (X) is an unbiased estimator of g(θ) then

(X) is an unbiased estimator of g(θ) then

where ![]() g(θ) =

g(θ) = ![]() .

.

5.2.5 General Inequalities of the Cramér – Rao Type

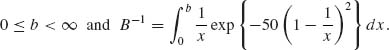

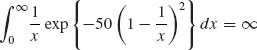

The Cramér – Rao inequality is based on four stringent assumptions concerning the family of distributions under consideration. These assumptions may not be fulfilled in cases of practical interest. In order to overcome this difficulty, several studies were performed and various different general inequalities were suggested. Blyth and Roberts (1972) provided a general theoretical framework for these generalizations. We present here the essential results.

Let X1, …, Xn be independent and identically distributed (i.i.d.) random variables having a common distribution F that belongs to a one–parameter family ![]() , having p.d.f. f(x;θ), θ

, having p.d.f. f(x;θ), θ ![]() Θ. Suppose that g(θ) is a parametric function considered for estimation. Let T(X) be a sufficient statistic for

Θ. Suppose that g(θ) is a parametric function considered for estimation. Let T(X) be a sufficient statistic for ![]() and let

and let ![]() (T) be an unbiased estimator of g(θ). Let W(T;θ) be a real–valued random variable such that Varθ {W(T;θ)} > 0 and finite for every θ. We also assume that 0 < Varθ {

(T) be an unbiased estimator of g(θ). Let W(T;θ) be a real–valued random variable such that Varθ {W(T;θ)} > 0 and finite for every θ. We also assume that 0 < Varθ {![]() (T)} < ∞ for each θ in Θ. Then, from the Schwarz inequality, we obtain

(T)} < ∞ for each θ in Θ. Then, from the Schwarz inequality, we obtain

for every θ ![]() Θ. We recall that for the Cramér – Rao inequality, we have used

Θ. We recall that for the Cramér – Rao inequality, we have used

(5.2.20) ![]()

where h(t;θ) is the p.d.f. of T at θ.

Chapman and Robbins (1951) and Kiefer (1952) considered a family of random variables W![]() (T;θ), where

(T;θ), where ![]() ranges over Θ and is given by the likelihood ratio W

ranges over Θ and is given by the likelihood ratio W![]() (T;θ) =

(T;θ) = ![]() . The inequality (5.2.19) then becomes

. The inequality (5.2.19) then becomes

One obtains then that (5.2.21) holds for each ![]() in Θ. Hence, considering the supremum of the RHS of (5.2.21) over all values of

in Θ. Hence, considering the supremum of the RHS of (5.2.21) over all values of ![]() , we obtain

, we obtain

(5.2.22) ![]()

where A(θ, ![]() ) = Varθ {W

) = Varθ {W![]() (T;θ)}. Indeed,

(T;θ)}. Indeed,

(5.2.23) ![]()

This inequality requires that all the p.d.f.s of T, i.e., h(t;θ), θ ![]() Θ, will be positive on the same set, which is independent of any unknown parameter. Such a condition restricts the application of the Chapman – Robbins inequality. We cannot consider it, for example, in the case of a life–testing model in which the family

Θ, will be positive on the same set, which is independent of any unknown parameter. Such a condition restricts the application of the Chapman – Robbins inequality. We cannot consider it, for example, in the case of a life–testing model in which the family ![]() is that of location–parameter exponential distributions, i.e., f(x;θ) = I { x ≥ θ } exp{-(x – θ)}, with 0 < θ < ∞. However, one can consider the variable W

is that of location–parameter exponential distributions, i.e., f(x;θ) = I { x ≥ θ } exp{-(x – θ)}, with 0 < θ < ∞. However, one can consider the variable W![]() (T;θ) for all

(T;θ) for all ![]() values such that h(t;

values such that h(t;![]() ) = 0 on the set Nθ = {t: h(t;θ) = 0}. In the above location–parameter example, we can restrict attention to the set of

) = 0 on the set Nθ = {t: h(t;θ) = 0}. In the above location–parameter example, we can restrict attention to the set of ![]() values that are greater than Θ. If we denote this set by C(θ) then we have the Chapman – Robbins inequality as follow:

values that are greater than Θ. If we denote this set by C(θ) then we have the Chapman – Robbins inequality as follow:

(5.2.24) ![]()

The Chapman – Robbins inequality is applicable, as we have seen in the previous example, in cases where the Cramér – Rao inequality is inapplicable. On the other hand, we can apply the Chapman – Robbins inequality also in cases satisfying the Cramér – Rao regularity conditions. The question is then, what is the relationship between the Chapman – Robbins lower bound and Cramér – Rao lower bound. Chapman and Robbins (1951) have shown that their lower bound is greater than or equal to the Cramér – Rao lower bound for all θ.

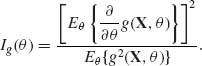

5.3 THE EFFICIENCY OF UNBIASED ESTIMATORS IN REGULAR CASES

Let ![]() 1(X) and

1(X) and ![]() 2(X) be two unbiased estimators of g(θ). Assume that the density functions and the estimators satisfy the Cramér – Rao regularity conditions. The relative efficiency of

2(X) be two unbiased estimators of g(θ). Assume that the density functions and the estimators satisfy the Cramér – Rao regularity conditions. The relative efficiency of ![]() 1(X) to

1(X) to ![]() 2(X) is defined as the ratio of their variances,

2(X) is defined as the ratio of their variances,

where ![]() is the variance of

is the variance of ![]() i(X) at θ. In order to compare all the unbiased estimators of g(θ) on the same basis, we replace

i(X) at θ. In order to compare all the unbiased estimators of g(θ) on the same basis, we replace ![]() by the Cramér – Rao lower bound (5.2.7). In this manner, we obtain the efficiency function

by the Cramér – Rao lower bound (5.2.7). In this manner, we obtain the efficiency function

(5.3.2) ![]()

for all θ ![]() Θ. This function assumes values between zero and one. It is equal to one, for all θ, if and only if

Θ. This function assumes values between zero and one. It is equal to one, for all θ, if and only if ![]() attains the Cramér – Rao lower bound, or equivalently, if the distribution of

attains the Cramér – Rao lower bound, or equivalently, if the distribution of ![]() (X) is of the exponential type.

(X) is of the exponential type.

Consider the covariance between ![]() (X) and the score function S(X;θ) =

(X) and the score function S(X;θ) = ![]() log f(x;θ). As we have shown in the proof of the Cramér – Rao inequality that

log f(x;θ). As we have shown in the proof of the Cramér – Rao inequality that

where ρθ (![]() , S) is the coefficient of correlation between the estimator

, S) is the coefficient of correlation between the estimator ![]() and the score function, S(X;θ), at θ. Hence, the efficiency function is

and the score function, S(X;θ), at θ. Hence, the efficiency function is

(5.3.4) ![]()

Moreover, the relative efficiency of two unbiased estimators ![]() 1 and

1 and ![]() 2 is given by

2 is given by

(5.3.5) ![]()

This relative efficiency can be expressed also in terms of the ratio of the Fisher information functions obtained from the corresponding distributions of the estimators. That is, if h(![]() i;θ), i = 1, 2, is the p.d.f. of

i;θ), i = 1, 2, is the p.d.f. of ![]() i and I

i and I![]() i (θ) =

i (θ) = ![]() then

then

It is a straightforward matter to show that for every unbiased estimator ![]() of g(θ) and under the Cramér – Rao regularity conditions

of g(θ) and under the Cramér – Rao regularity conditions

Thus, the relative efficiency function (5.3.6) can be written, for cases satisfying the Cramér – Rao regularity condition, in the form

where ![]() 1(X) and

1(X) and ![]() 2(X) are unbiased estimators of g1(θ) and g2(θ), respectively. If the two estimators are unbiased estimators of the same function g(θ) then (5.3.8) is reduced to (5.3.1). The relative efficiency function (5.3.8) is known as the Pitman relative efficiency. It relates both the variances and the derivatives of the bias functions of the two estimators (see Pitman, 1948).

2(X) are unbiased estimators of g1(θ) and g2(θ), respectively. If the two estimators are unbiased estimators of the same function g(θ) then (5.3.8) is reduced to (5.3.1). The relative efficiency function (5.3.8) is known as the Pitman relative efficiency. It relates both the variances and the derivatives of the bias functions of the two estimators (see Pitman, 1948).

The information function of an estimator can be generalized to the multiparameter regular case (see Bhapkar, 1972). Let θ = (θ1, …, θk) be a vector of k–parameters and I(θ) be the Fisher information matrix (corresponding to one observation). If g1(θ), …, gr(θ), 1 ≤ r ≤ k, are functions satisfying the required differentiability conditions and ![]() 1(X), …,

1(X), …, ![]() r(X) are the corresponding unbiased estimators then, from (5.2.18),

r(X) are the corresponding unbiased estimators then, from (5.2.18),

(5.3.9) ![]()

where n is the sample size. Note that if r = k then D(θ) is nonsingular (the parametric functions g1(θ), …, gk(θ) are linearly independent), and we can express the above inequality in the form

(5.3.10) ![]()

Accordingly, and in analogy to (5.3.7), we define the amount of information in the vector estimator ![]() as

as

(5.3.11) ![]()

If 1 ≤ r < k but D(θ) is of full rank r, then

The efficiency function of a multiparameter estimator is thus defined by DeGroot and Raghavachari (1970) as

In Example 5.9, we illustrate the computation needed to determine this efficiency function.

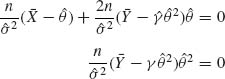

5.4 BEST LINEAR UNBIASED AND LEAST–SQUARES ESTIMATORS

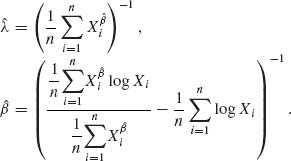

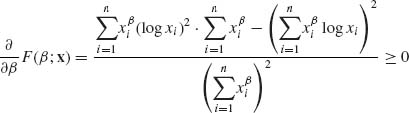

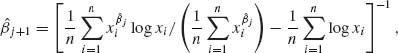

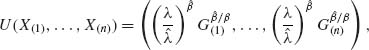

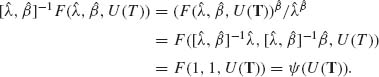

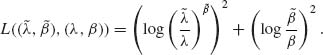

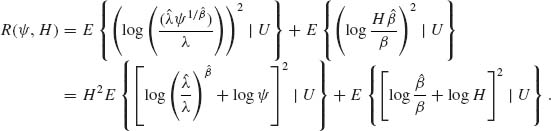

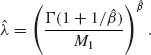

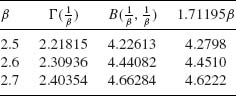

Best linear unbiased estimators (BLUEs) are linear combinations of the observations that yield unbiased estimates of the unknown parameters with minimal variance. As we have seen in Section 5.3, the uniformly minimum variance unbiased (UMVU) estimators (if they exist) are in many cases nonlinear functions of the observations. Accordingly, if we confine attention to linear estimators, the variance of the BLUE will not be smaller than that of the UMVU. On the other hand, BLUEs may exist when UMVU estimators do not exist. For example, if X1, …, Xn and i.i.d. random variables having a Weibull distribution G1/β(λ, 1) and both λ and β are unknown 0 < λ, β < ∞, the m.s.s. is the order statistic (X(1), …, X(n)). Suppose that we wish to estimate the parametric functions μ = ![]() log λ and σ =

log λ and σ = ![]() . There are no UMVU estimators of μ and σ. However, there are BLUEs of these parameters.

. There are no UMVU estimators of μ and σ. However, there are BLUEs of these parameters.

5.4.1 BLUEs of the Mean

We start with the case where the n random variables have the same unknown mean, μ and the covariance matrix is known. Thus, let X = (X1, …, Xn)′ be a random vector; E{X} = μ 1, 1′ = (1, 1, …, 1); μ is unknown (real). The covariance of X is ![]() . We assume that

. We assume that ![]() is finite and nonsingular. A linear estimator of μ is a linear function

is finite and nonsingular. A linear estimator of μ is a linear function ![]() = λ′X, where λ is a vector of known constants. The expected value of

= λ′X, where λ is a vector of known constants. The expected value of ![]() is μ if, and only if, λ′1 = 1. We thus consider the class of all such unbiased estimators and look for the one with the smallest variance. Such an estimator is called best linear unbiased (BLUE). The variance of

is μ if, and only if, λ′1 = 1. We thus consider the class of all such unbiased estimators and look for the one with the smallest variance. Such an estimator is called best linear unbiased (BLUE). The variance of ![]() is V {λ′X} = λ′

is V {λ′X} = λ′![]() , λ. We, therefore, determine λ0 that minimizes this variance and satisfies the condition of unbiasedness. Thus, we have to minimize the Lagrangian

, λ. We, therefore, determine λ0 that minimizes this variance and satisfies the condition of unbiasedness. Thus, we have to minimize the Lagrangian

(5.4.1) ![]()

It is simple to show that the minimizing vector is unique and is given by

Correspondingly, the BLUE is

Note that this BLUE can be obtained also by minimizing the quadratic form

In Example 5.12, we illustrate a BLUE of the form (5.4.3).

5.4.2 Least–Squares and BLUEs in Linear Models

Consider the problem of estimating a vector of parameters in cases where the means of the observations are linear combinations of the unknown parameters. Such models are called linear models. The literature on estimating parameters in linear models is so vast that it would be impractical to try listing here all the major studies. We mention, however, the books of Rao (1973), Graybill (1961, 1976), Anderson (1958), Searle (1971), Seber (1977), Draper and Smith (1966), and Sen and Srivastava (1990). We provide here a short exposition of the least–squares theory for cases of full linear rank.

Linear models of full rank. Suppose that the random vector X has expectation

(5.4.5) ![]()

where X is an n × 1 vector, A is an n × p matrix of known constants, and β a p × 1 vector of unknown parameters. We furthermore assume that 1 ≤ p ≤ n and A is a matrix of full rank, p. The covariance matrix of X is ![]() , = σ2I, where σ2 is unknown, 0 < σ2 < ∞. An estimator of β that minimizes the quadratic form

, = σ2I, where σ2 is unknown, 0 < σ2 < ∞. An estimator of β that minimizes the quadratic form

(5.4.6) ![]()

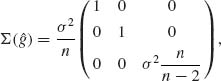

is called the least–squares estimator (LSE). This estimator was discussed in Example 2.13 and in Section 4.6 in connection with testing in normal regression models. The notation here is different from that of Section 4.6 in order to keep it in agreement with the previous notation of the present section. As given by (4.6.5), the LSE of β is

(5.4.7) ![]()

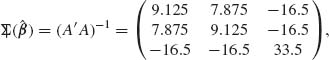

Note that ![]() is an unbiased estimator of β. To verify it, substitute Aβ in (5.3.7) instead of X. Furthermore, if BX is an arbitrary unbiased estimator of β (B a p × n matrix of specified constants) then B should satisfy the condition BA = I. Moreover, the covariance matrix of BX can be expressed in the following manner. Write B = B – S−1A′ + S−1A′, where S = A′A. Accordingly, the covariance matrix of BX is

is an unbiased estimator of β. To verify it, substitute Aβ in (5.3.7) instead of X. Furthermore, if BX is an arbitrary unbiased estimator of β (B a p × n matrix of specified constants) then B should satisfy the condition BA = I. Moreover, the covariance matrix of BX can be expressed in the following manner. Write B = B – S−1A′ + S−1A′, where S = A′A. Accordingly, the covariance matrix of BX is

(5.4.8) ![]()

where C = B – S−1A′, ![]() is the LSE and

is the LSE and ![]() (CX,

(CX, ![]() ) is the covariance matrix of CX and

) is the covariance matrix of CX and ![]() . This covariance matrix is

. This covariance matrix is

(5.4.9) ![]()

since BA = I. Thus, the covariance matrix of an arbitrary unbiased estimator of β can be expressed as the sum of two covariance matrices, one of the LSE, ![]() , and one of CX.

, and one of CX. ![]() ,(CX) is a nonnegative definite matrix. Obviously, when B = S−1A′ the covariance matrix of CX is 0. Otherwise, all the components of

,(CX) is a nonnegative definite matrix. Obviously, when B = S−1A′ the covariance matrix of CX is 0. Otherwise, all the components of ![]() have variances which are smaller than or equal to that of BX. Moreover, any linear combination of the components of

have variances which are smaller than or equal to that of BX. Moreover, any linear combination of the components of ![]() has a variance not exceeding that of BX. It means that the LSE,

has a variance not exceeding that of BX. It means that the LSE, ![]() , is also BLUE. We have thus proven the celebrated following theorem.

, is also BLUE. We have thus proven the celebrated following theorem.

Gauss – Markov Theorem If X = Aβ + ![]() , where A is a matrix of full rank, E{

, where A is a matrix of full rank, E{![]() } = 0 and

} = 0 and ![]() (

(![]() ) = σ2I, then the BLUE of any linear combination λ′β is λ′

) = σ2I, then the BLUE of any linear combination λ′β is λ′![]() , where λ is a vector of constants and

, where λ is a vector of constants and ![]() is the LSE of β. Moreover,

is the LSE of β. Moreover,

(5.4.10) ![]()

where S = A′A.

Note that an unbiased estimator of σ2 is

If the covariance of X is σ2V, where V is a known symmetric positive definite matrix then, after making the factorization V = DD′ and the transformation Y = D−1X the problem is reduced to the one with covariance matrix proportional to I. Substituting D−1X for X and D−1A for A in (5.3.7), we obtain the general formula

The estimator (5.4.12) is the BLUE of β and can be considered as the multidimensional generalization of (5.4.3).

As is illustrated in Example 5.10, when V is an arbitrary positive definite matrix, the BLUE (5.3.12) is not necessarily equivalent to the LSE (5.3.7). The conditions under which the two estimators are equivalent were studied by Watson (1967) and Zyskind (1967). The main result is that the BLUE and the LSE coincide when the rank of A is p, 1 ≤ p ≤ n, if and only if there exist p eigenvectors of V which form a basis in the linear space spanned by the columns of A. Haberman (1974) proved the following interesting inequality. Let ![]() , where (c1, …, cp) are given constants. Let

, where (c1, …, cp) are given constants. Let ![]() and θ* be, correspondingly, the BLUE and LSE of θ. If τ is the ratio of the largest to the smallest eigenvalues of V then

and θ* be, correspondingly, the BLUE and LSE of θ. If τ is the ratio of the largest to the smallest eigenvalues of V then

(5.4.13) ![]()

5.4.3 Best Linear Combinations of Order Statistics

Best linear combinations of order statistics are particularly attractive estimates when the family of distributions under consideration depends on location and scale parameters and the sample is relatively small. More specifically, suppose that ![]() is a location– and scale–parameter family, with p.d.f.s

is a location– and scale–parameter family, with p.d.f.s

![]()

where -∞ < μ < ∞ and 0 < σ < ∞. Let U = (X – μ)/σ be the standardized random variable corresponding to X. Suppose that X1, …, Xn are i.i.d. and let X* = (X(1), …, X(n))′ be the corresponding order statistic. Note that

![]()

where U1, …, Un are i.i.d. standard variables and (U(1), …, Un the corresponding order statistic. The p.d.f. of U is ![]() (u). If the covariance matrix, V, of the order statistic (U(1), …, Un exists, and if α = (α1, …, αn)′ denotes the vector of expectations of this order statistic, i.e., αi = E{U(i)}, i = 1, …, n, then we have the linear model

(u). If the covariance matrix, V, of the order statistic (U(1), …, Un exists, and if α = (α1, …, αn)′ denotes the vector of expectations of this order statistic, i.e., αi = E{U(i)}, i = 1, …, n, then we have the linear model

(5.4.14) ![]()

where E{![]() * } = 0 and

* } = 0 and ![]() (

(![]() * ) = V. This covariance matrix is known. Hence, according to (5.3.12), the BLUE of (μ, σ) is

* ) = V. This covariance matrix is known. Hence, according to (5.3.12), the BLUE of (μ, σ) is

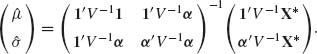

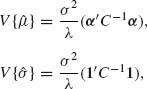

(5.4.15)

Let

![]()

and

![]()

then the BLUE can be written as

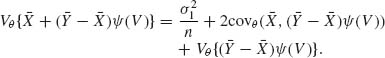

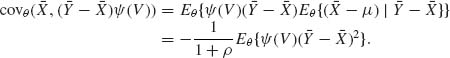

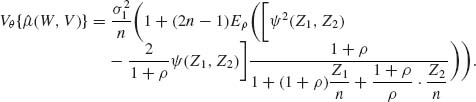

The variances and covariances of these BLUEs are

(5.4.17)

and

![]()

As will be illustrated in the following example the proposed BLUE, based on all the n order statistics, becomes impractical in certain situations.

Example 5.11 illustrates an estimation problem for which the BLUE based on all the n order statistics can be determined only numerically, provided the sample is not too large. Various methods have been developed to approximate the BLUEs by linear combinations of a small number of selected order statistics. Asymptotic (large sample) theory has been applied in the theory leading to the optimal choice of selected set of k, k < n, order statistics. This choice of order statistics is also called spacing. For the theories and methods used for the determination of the optimal spacing see the book of Sarhan and Greenberg (1962).

5.5 STABILIZING THE LSE: RIDGE REGRESSIONS

The method of ridge regression was introduced by Hoerl (1962) and by Hoerl and Kennard (1970). A considerable number of papers have been written on the subject since then. In particular see the papers of Marquardt (1970), Stone and Conniffe (1973), and others. The main objective of the ridge regression method is to overcome a phenomenon of possible instability of least–squares estimates, when the matrix of coefficients S = A′A has a large spread of the eigenvalues. To be more specific, consider again the linear model of full rank: X = Aβ + ![]() , where E{

, where E{![]() } = 0 and

} = 0 and ![]() , (

, (![]() ) = σ2I. We have seen that the LSE of β,

) = σ2I. We have seen that the LSE of β, ![]() = S−1A′X, minimizes the squared distance between the observed random vector X and the estimate of its expectation Aβ, i.e., ||X – AB||2. ||a|| denotes the Euclidean length of the vector a, i.e., ||a|| =

= S−1A′X, minimizes the squared distance between the observed random vector X and the estimate of its expectation Aβ, i.e., ||X – AB||2. ||a|| denotes the Euclidean length of the vector a, i.e., ||a|| =  . As we have shown in Section 5.3.2, the LSE in the present model is BLUE of β. However, if A is ill–conditioned, in the sense that the positive definite matrix S = A′A has large spread of the eigenvalues, with some being close to zero, then the LSE

. As we have shown in Section 5.3.2, the LSE in the present model is BLUE of β. However, if A is ill–conditioned, in the sense that the positive definite matrix S = A′A has large spread of the eigenvalues, with some being close to zero, then the LSE ![]() may be with high probability very far from β. Indeed, if L2 = ||

may be with high probability very far from β. Indeed, if L2 = ||![]() – β ||2 then

– β ||2 then

(5.5.1) ![]()

Let P be an orthogonal matrix that diagonalizes S, i.e., PSP′ = Λ, where Λ is a diagonal matrix consisting of the eigenvalues (λ1, …, λp) of S (all positive). Accordingly

(5.5.2) ![]()

We see that E{L2} ≥ ![]() , where λmin is the smallest eigenvalue. A very large value of E{L2} means that at least one of the components of β has a large variance. This implies that the corresponding value of βi may with high probability be far from the true value. The matrix A in experimental situations often represents the levels of certain factors and is generally under control of the experimenter. A good design will set the levels of the factors so that the columns of A will be orthogonal. In this case S = I, λ1 = … = λp = 1 and E{L2} attains the minimum possible value pσ2 for the LSE. In many practical cases, however, X is observed with an ill–conditioned coefficient matrix A. In this case, all the unbiased estimators of β are expected to have large values of L2. The way to overcome this deficiency is to consider biased estimators of β which are not affected strongly by small eigenvalues. Hoerl (1962) suggested the class of biased estimators

, where λmin is the smallest eigenvalue. A very large value of E{L2} means that at least one of the components of β has a large variance. This implies that the corresponding value of βi may with high probability be far from the true value. The matrix A in experimental situations often represents the levels of certain factors and is generally under control of the experimenter. A good design will set the levels of the factors so that the columns of A will be orthogonal. In this case S = I, λ1 = … = λp = 1 and E{L2} attains the minimum possible value pσ2 for the LSE. In many practical cases, however, X is observed with an ill–conditioned coefficient matrix A. In this case, all the unbiased estimators of β are expected to have large values of L2. The way to overcome this deficiency is to consider biased estimators of β which are not affected strongly by small eigenvalues. Hoerl (1962) suggested the class of biased estimators

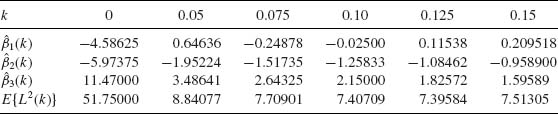

(5.5.3) ![]()

with k ≥ 0, called the ridge regression estimators. It can be shown for every k > 0, ![]() *(k) has smaller length than the LSE

*(k) has smaller length than the LSE ![]() , i.e., ||

, i.e., ||![]() *(k)|| < ||

*(k)|| < ||![]() ||. The ridge estimator is compared to the LSE. If we graph the values of

||. The ridge estimator is compared to the LSE. If we graph the values of ![]() (k) as functions of k we often see that the estimates are very sensitive to changes in the values of k close to zero, while eventually as k grows the estimates stabilize. The graphs of

(k) as functions of k we often see that the estimates are very sensitive to changes in the values of k close to zero, while eventually as k grows the estimates stabilize. The graphs of ![]() (k) for i = 1, …, k are called the ridge trace. It is recommended by Hoerl and Kennard (1970) to choose the value of k at which the estimates start to stabilize.

(k) for i = 1, …, k are called the ridge trace. It is recommended by Hoerl and Kennard (1970) to choose the value of k at which the estimates start to stabilize.

Among all (biased) estimators B of β that lie at a fixed distance from the origin the ridge estimator β*(k), for a proper choice of k, minimizes the residual sum of squares ||X – AB||2. For proofs of these geometrical properties, see Hoerl and Kennard (1970). The sum of mean–squared errors (MSEs) of the components of ![]() *(k) is

*(k) is

(5.5.4) ![]()

where γ = Hβ and H is the orthogonal matrix diagonalizing A′A. E{L2(k)} is a differentiable function of k, having a unique minimum k(0)(γ). Moreover, E{L2(k0(β))} < E{L2(0)}, where E{L2(0)} is the sum of variances of the LSE components, as in (5.4.2). The problem is that the value of k0(γ) depends on γ and if k is chosen too far from k0(γ), E{L2(k)} may be greater than E{L2(0)}. Thus, a crucial problem in applying the ridge–regression method is the choice of a flattening factor k. Hoerl, Kennard, and Baldwin (1975) studied the characteristics of the estimator obtained by substituting in (5.4.3) an estimate of the optimal k0(γ). They considered the estimator

where ![]() is the LSE and

is the LSE and ![]() 2 is the estimate of the variance around the regression line, as in (5.4.11). The estimator

2 is the estimate of the variance around the regression line, as in (5.4.11). The estimator ![]() *(

*(![]() ) is not linear in X, since k is a nonlinear function of X. Most of the results proven for a fixed value of k do not necessarily hold when k is random, as in (5.5.5). For this reason Hoerl, Kennard, and Baldwin performed extensive simulation experiments to obtain estimates of the important characteristics of

) is not linear in X, since k is a nonlinear function of X. Most of the results proven for a fixed value of k do not necessarily hold when k is random, as in (5.5.5). For this reason Hoerl, Kennard, and Baldwin performed extensive simulation experiments to obtain estimates of the important characteristics of ![]() *(

*(![]() ). They found that with probability greater than 0.5 the ridge–type estimator

). They found that with probability greater than 0.5 the ridge–type estimator ![]() *(

*(![]() ) is closer (has smaller distance norm) to the true β than the LSE. Moreover, this probability increases as the dimension p of the factor space increases and as the spread of the eigenvalues of S increases. The ridge type estimator

) is closer (has smaller distance norm) to the true β than the LSE. Moreover, this probability increases as the dimension p of the factor space increases and as the spread of the eigenvalues of S increases. The ridge type estimator ![]() *(

*(![]() ) are similar to other types of nonlinear estimators (James – Stein, Bayes, and other types) designed to reduce the MSE. These are discussed in Chapter 8.

) are similar to other types of nonlinear estimators (James – Stein, Bayes, and other types) designed to reduce the MSE. These are discussed in Chapter 8.

A more general class of ridge–type estimators called the generalized ridge regression estimators is given by

(5.5.6) ![]()

where C is a positive definite matrix chosen so that A′A + C is nonsingular. [The class is actually defined also for A′A + C singular with a Moore – Penrose generalized inverse replacing (A′A + C)−1; see Marquardt (1970).]

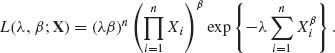

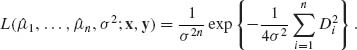

5.6 MAXIMUM LIKELIHOOD ESTIMATORS

5.6.1 Definition and Examples

In Section 3.3, we introduced the notion of the likelihood function, L(θ;x) defined over a parameter space Θ, and studied some of its properties. We develop here an estimation theory based on the likelihood function.

The maximum likelihood estimator (MLE) of θ is a value of θ at which the likelihood function L(θ;x) attains its supremum (or maximum). We remark that if the family ![]() admits a nontrivial sufficient statistic T(X) then the MLE is a function of T(X). This is implied immediately from the Neyman – Fisher Factorization Theorem. Indeed, in this case,

admits a nontrivial sufficient statistic T(X) then the MLE is a function of T(X). This is implied immediately from the Neyman – Fisher Factorization Theorem. Indeed, in this case,

![]()

where h(x) > 0 with probability one. Hence, the kernel of the likelihood function can be written as L*(θ;x) = g(T(x);θ). Accordingly, the value θ that maximizes it depends on T(X). We also notice that although the MLE is a function of the sufficient statistic, the converse is not always true. An MLE is not necessarily a sufficient statistic.

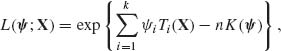

5.6.2 MLEs in Exponential Type Families

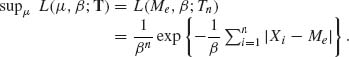

Let X1, …, Xn be i.i.d. random variables having a k–parameter exponential type family, with a p.d.f. of the form (2.16.2). The likelihood function of the natural parameters is

(5.6.1)

where

![]()

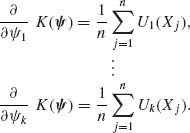

The MLEs of ![]() 1, …,

1, …, ![]() k are obtained by solving the system of k equations

k are obtained by solving the system of k equations

Note that whenever the expectations exist, E![]() {Ui(X)} = ∂ K(

{Ui(X)} = ∂ K(![]() )/∂

)/∂ ![]() i for each i = 1, …, k. Hence, if X1, …, Xn are i.i.d. E

i for each i = 1, …, k. Hence, if X1, …, Xn are i.i.d. E![]()

![]() , for each i = 1, …, k, where

, for each i = 1, …, k, where ![]() is the vector of MLEs. For all points

is the vector of MLEs. For all points ![]() in the interior of the parameter space n, the matrix

in the interior of the parameter space n, the matrix ![]() exists and is positive definite for all

exists and is positive definite for all ![]() since K(

since K(![]() ) is convex. Thus, the root

) is convex. Thus, the root ![]() of (5.6.2) is unique and is a m.s.s.

of (5.6.2) is unique and is a m.s.s.

5.6.3 The Invariance Principle

If the vector θ = (θ1, …, θk) is reparametrized by a one–to–one transformation ![]() 1 = g1(θ), …,

1 = g1(θ), …, ![]() k = gk(θ) then the MLEs of

k = gk(θ) then the MLEs of ![]() i are obtained by substituting in the g–functions the MLEs of θ. This is obviously true when the transformation θ →

i are obtained by substituting in the g–functions the MLEs of θ. This is obviously true when the transformation θ → ![]() is one–to–one. Indeed, if θ1 =

is one–to–one. Indeed, if θ1 = ![]() then the likelihood function L(θ;x) can be expressed as a function of

then the likelihood function L(θ;x) can be expressed as a function of ![]() ,

, ![]() . If (

. If (![]() 1, …,

1, …, ![]() k) is a point at which L(θ, x) attains its supremum, and if

k) is a point at which L(θ, x) attains its supremum, and if ![]() = (g1(

= (g1(![]() ), …, gk(

), …, gk(![]() )) then, since the transformation is one–to–one,

)) then, since the transformation is one–to–one,

(5.6.3) ![]()

where L*(![]() ;x) is the likelihood, as a function of

;x) is the likelihood, as a function of ![]() . This result can be extended to general transformations, not necessarily one–to–one, by a proper redefinition of the concept of MLE over the space of the

. This result can be extended to general transformations, not necessarily one–to–one, by a proper redefinition of the concept of MLE over the space of the ![]() –values. Let

–values. Let ![]() = g(θ) be a vector valued function of θ; i.e.,

= g(θ) be a vector valued function of θ; i.e., ![]() = g(θ) = (g1(θ), …, gk(θ)) where the dimension of g(θ), r, does not exceed that of θ, k.

= g(θ) = (g1(θ), …, gk(θ)) where the dimension of g(θ), r, does not exceed that of θ, k.

Following Zehna (1966), we introduce the notion of the profile likelihood function of ![]() = (

= (![]() 1, …,

1, …, ![]() r). Define the cosets of θ–values

r). Define the cosets of θ–values

(5.6.4) ![]()

and let L(θ;x) be the likelihood function of θ given X. The profile likelihood of ![]() given X is defined as

given X is defined as

(5.6.5) ![]()

Obviously, in the one–to–one case L*(θ;x) = ![]() . Generally, we define the MLE of

. Generally, we define the MLE of ![]() to be the value at which L*(

to be the value at which L*(![]() ; x) attains its supremum. It is easy then to prove that if

; x) attains its supremum. It is easy then to prove that if ![]() is an MLE of θ and

is an MLE of θ and ![]() = g(

= g(![]() ), then

), then ![]() is an MLE of

is an MLE of ![]() , i.e.,

, i.e.,

(5.6.6) ![]()

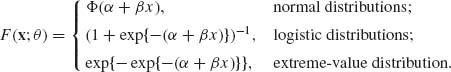

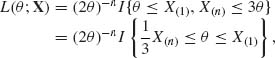

5.6.4 MLE of the Parameters of Tolerance Distributions

Suppose that k–independent experiments are performed at controllable real–valued experimental levels (dosages) -∞ < x1 < … < xk < ∞. At each of these levels nj Bernoulli trials are performed (j = 1, …, k). The success probabilities of these Bernoulli trials are increasing functions F(x) of x. These functions, called tolerance distributions, are the expected proportion of (individuals) units in a population whose tolerance against the applied dosage does not exceed the level x. The model thus consists of k–independent random variables J1, …, Jk such that Ji ∼ B(ni, F(xi;θ)), i = 1, …, k, where θ = (θ1, …, θr), 1 ≤ r < k, is a vector of unknown parameters. The problem is to estimate θ. Frequently applied models are

(5.6.7)

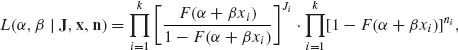

We remark that in some of the modern literature the tolerance distributions are called link functions (see Lindsey, 1996). Generally, if F(α + βxi) is the success probability at level xi, the likelihood function of (α, β), given J1, …, Jk and x1, …, xk, n1, …, nk, is

(5.6.8)

and the log–likelihood function is

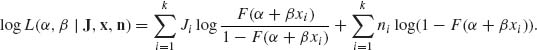

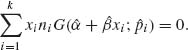

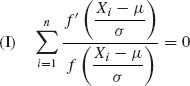

The MLE of α and β are the roots of the nonlinear equations

(5.6.9)

where f(z) = F′(z) is the p.d.f. of the standardized distribution F(z) and ![]() (z) = 1 – F(z).

(z) = 1 – F(z).

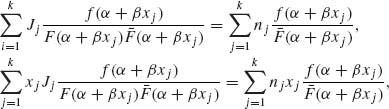

Let ![]() i = Ji/ni, i = 1, …, k, and define the function

i = Ji/ni, i = 1, …, k, and define the function

Accordingly, the MLEs of α and β are the roots ![]() and

and ![]() of the equations

of the equations

(5.6.11)

and

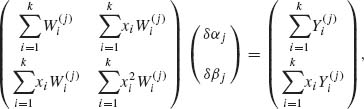

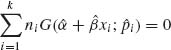

The solution of this system of (generally nonlinear) equations according to the Newton – Raphson method proceeds as follows. Let ![]() 0 and

0 and ![]() 0 be an initial solution. The adjustment after the jth iteration (j = 0, 1, …) is

0 be an initial solution. The adjustment after the jth iteration (j = 0, 1, …) is ![]() j + 1 =

j + 1 = ![]() j + δ αj and

j + δ αj and ![]() j + 1 =

j + 1 = ![]() j + δ βj, where δ αj and δ βj are solutions of the linear equations

j + δ βj, where δ αj and δ βj are solutions of the linear equations

where

(5.6.13) ![]()

and

![]()

and G′(z;![]() ) =

) = ![]() . The linear equations (5.6.12) resemble the normal equations in weighted least–squares estimation. However, in the present problems the weights depend on the unknown parameters α and β. In each iteration, the current estimates of α and β are substituted. For applications of this procedure in statistical reliability and bioassay quantal response analysis, see Finney (1964), Gross and Clark (1975), and Zacks (1997).

. The linear equations (5.6.12) resemble the normal equations in weighted least–squares estimation. However, in the present problems the weights depend on the unknown parameters α and β. In each iteration, the current estimates of α and β are substituted. For applications of this procedure in statistical reliability and bioassay quantal response analysis, see Finney (1964), Gross and Clark (1975), and Zacks (1997).

5.7 EQUIVARIANT ESTIMATORS

5.7.1 The Structure of Equivariant Estimators

Certain families of distributions have structural properties that are preserved under transformations of the random variables. For example, if X has an absolutely continuous distribution belonging to a family ![]() which depends on location and scale parameters, i.e., its p.d.f. is f(x;μ, σ) =

which depends on location and scale parameters, i.e., its p.d.f. is f(x;μ, σ) = ![]() , where -∞ < μ < ∞ and 0 < σ < ∞, then any real–affine transformation of X, given by

, where -∞ < μ < ∞ and 0 < σ < ∞, then any real–affine transformation of X, given by

![]()

yields a random variable Y = α + β X with p.d.f. f(y;μ, σ) = ![]() , where

, where ![]() = α + β μ and

= α + β μ and ![]() = β σ. Thus, the distribution of Y belongs to the same family

= β σ. Thus, the distribution of Y belongs to the same family ![]() . The family

. The family ![]() is preserved under transformations belonging to the group

is preserved under transformations belonging to the group ![]() = {[α, β]; -∞ < α < ∞, 0 < β < ∞ } of real–affine transformations.

= {[α, β]; -∞ < α < ∞, 0 < β < ∞ } of real–affine transformations.

In this section, we present the elements of the theory of families of distributions and corresponding estimators having structural properties that are preserved under certain groups of transformations. For a comprehensive treatment of the theory and its geometrical interpretation, see the book of Fraser (1968). Advanced treatment of the subject can be found in Berk (1967), Hall, Wijsman, and Ghosh (1965), Wijsman (1990), and Eaton (1989). We require that every element g of ![]() be a one–to–one transformation of

be a one–to–one transformation of ![]() onto

onto ![]() . Accordingly, the sample space structure does not change under these transformations. Moreover, if

. Accordingly, the sample space structure does not change under these transformations. Moreover, if ![]() is the Borel σ–field on

is the Borel σ–field on ![]() then, for all g

then, for all g ![]()

![]() , we require that Pθ [gB] will be well defined for all B

, we require that Pθ [gB] will be well defined for all B ![]()

![]() and θ

and θ ![]() Θ. Furthermore, as seen in the above example of the location and scale parameter distributions, if θ is a parameter of the distribution of X the parameter of Y = gX is

Θ. Furthermore, as seen in the above example of the location and scale parameter distributions, if θ is a parameter of the distribution of X the parameter of Y = gX is ![]() θ, where

θ, where ![]() is a transformation on the parameter space Θ defined by the relationship

is a transformation on the parameter space Θ defined by the relationship

(5.7.1) ![]()

In the example of real–affine transformations, if g = [α, β] and θ = (μ, σ), then ![]() (μ, σ) = (α + β μ, β σ). We note that

(μ, σ) = (α + β μ, β σ). We note that ![]() Θ = Θ for every

Θ = Θ for every ![]() corresponding to g in

corresponding to g in ![]() . Suppose that X1, …, Xn are i.i.d. random variables whose distribution F belongs to a family

. Suppose that X1, …, Xn are i.i.d. random variables whose distribution F belongs to a family ![]() that is preserved under transformations belonging to a group

that is preserved under transformations belonging to a group ![]() . If T(X1, …, Xn) is a statistic, then we define the transformations

. If T(X1, …, Xn) is a statistic, then we define the transformations ![]() on the range

on the range ![]() of T(X1, …, Xn), corresponding to transformations g of

of T(X1, …, Xn), corresponding to transformations g of ![]() , by

, by

(5.7.2) ![]()

A statistic S(X1, …, Xn) is called invariant with respect to ![]() if

if

(5.7.3) ![]()

A coset of x0 with respect to ![]() is the set of all points that can be obtained as images of x0, i.e.,

is the set of all points that can be obtained as images of x0, i.e.,

![]()

Such a coset is called also an orbit of ![]() in

in ![]() through x0. If x0 = (x01, …, x0n) is a given vector, the orbit of

through x0. If x0 = (x01, …, x0n) is a given vector, the orbit of ![]() in

in ![]() (n) through x0 is the coset

(n) through x0 is the coset

![]()

If x(1) and x(2) belong to the same orbit and S(x) = S(x1, …, xn) is invariant with respect to ![]() then S(x(1)) = S(x(2)). A statistic U(X) = U(X1, …, Xn) is called maximal invariant if it is invariant and if X(1) and X(2) belong to two different orbits then U(X(1)) ≠ U(X(2)). Every invariant statistic is a function of a maximal invariant statistic.

then S(x(1)) = S(x(2)). A statistic U(X) = U(X1, …, Xn) is called maximal invariant if it is invariant and if X(1) and X(2) belong to two different orbits then U(X(1)) ≠ U(X(2)). Every invariant statistic is a function of a maximal invariant statistic.

If ![]() (X1, …, Xn) is an estimator of θ, it would be often desirable to have the property that the estimator reacts to transformations of

(X1, …, Xn) is an estimator of θ, it would be often desirable to have the property that the estimator reacts to transformations of ![]() in the same manner as the parameters θ do, i.e.,

in the same manner as the parameters θ do, i.e.,

5.7.2 Minimum MSE Equivariant Estimators

Estimators satisfying (5.7.4) are called equivariant. The objective is to derive an equivariant estimator having a minimum MSE or another optimal property. The algebraic structure of the problem allows us often to search for such optimal estimators in a systematic manner.

5.7.3 Minimum Risk Equivariant Estimators

A loss function L(![]() (X), θ) is called invariant under

(X), θ) is called invariant under ![]() if

if

(5.7.5) ![]()

for all θ ![]() Θ and all g

Θ and all g ![]()

![]() .

.

The coset C(θ0) = {θ;θ = ![]() θ0, g

θ0, g ![]()

![]() } is called an orbit of

} is called an orbit of ![]() through θ0 in Θ. We show now that if

through θ0 in Θ. We show now that if ![]() (X) is an equivariant estimator and L(

(X) is an equivariant estimator and L(![]() (X), θ) is an invariant loss function then the risk function R(

(X), θ) is an invariant loss function then the risk function R(![]() , θ) = E{L(

, θ) = E{L(![]() (X), θ)} is constant on each orbit of

(X), θ)} is constant on each orbit of ![]() in Θ. Indeed, for any g

in Θ. Indeed, for any g ![]()

![]() , if the distribution of X is F(x;θ) and the distribution of Y = gX is F(y;

, if the distribution of X is F(x;θ) and the distribution of Y = gX is F(y;![]() θ), then if

θ), then if ![]() is equivariant

is equivariant

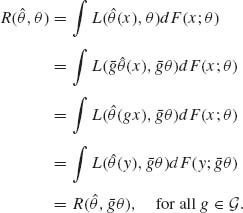

(5.7.6)

Thus, whenever the structure of the model is such that Θ contains only one orbit with respect to ![]() , and there exist equivariant estimators with finite risk, then each such equivariant estimator has a constant risk function. In Example 5.23, we illustrate such cases. We consider there the location and scale parameter family of the normal distributions N(μ, σ). This family has a parameter space Θ, which has only one orbit with respect to the group

, and there exist equivariant estimators with finite risk, then each such equivariant estimator has a constant risk function. In Example 5.23, we illustrate such cases. We consider there the location and scale parameter family of the normal distributions N(μ, σ). This family has a parameter space Θ, which has only one orbit with respect to the group ![]() of real–affine transformations. If the parameter space has various orbits, as in the case of Example 5.24, there is no global uniformly minimum risk equivariant estimator, but only locally for each orbit. In Example 5.26, we construct uniformly minimum risk equivariant estimators of the scale and shape parameters of Weibull distributions for a group of transformations and a corresponding invariant loss function.

of real–affine transformations. If the parameter space has various orbits, as in the case of Example 5.24, there is no global uniformly minimum risk equivariant estimator, but only locally for each orbit. In Example 5.26, we construct uniformly minimum risk equivariant estimators of the scale and shape parameters of Weibull distributions for a group of transformations and a corresponding invariant loss function.

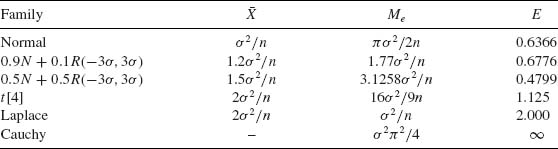

5.7.4 The Pitman Estimators

We develop here the minimum MSE equivariant estimators for the special models of location parameters and location and scale parameters. These estimators are called the Pitman estimators.

Consider first the family ![]() of location parameters distributions, i.e., every p.d.f. of

of location parameters distributions, i.e., every p.d.f. of ![]() is given by f(x;θ) =

is given by f(x;θ) = ![]() (x-θ), -∞ < θ < ∞.

(x-θ), -∞ < θ < ∞. ![]() (x) is the standard p.d.f. According to our previous discussion, we consider the group

(x) is the standard p.d.f. According to our previous discussion, we consider the group ![]() of real translations. Let

of real translations. Let ![]() (X) be an equivariant estimator of θ. Then, writing T = (

(X) be an equivariant estimator of θ. Then, writing T = (![]() , X(1)-

, X(1)-![]() , …, X(n)-

, …, X(n)-![]() ), where X(1) ≤ … ≤ X(n), for any equivariant estimator, d(X), of θ, we have

), where X(1) ≤ … ≤ X(n), for any equivariant estimator, d(X), of θ, we have

![]()

Note that U = (X(1) – ![]() , …, X(n) –

, …, X(n) – ![]() has a distribution that does not depend on θ. Moreover, since

has a distribution that does not depend on θ. Moreover, since ![]() (X) is an equivariant estimator, we can write

(X) is an equivariant estimator, we can write

![]()

Thus, the MSE of d(X) is

(5.7.7) ![]()

It follows immediately that the function ![]() (U) which minimizes the MSE is the conditional expectation

(U) which minimizes the MSE is the conditional expectation

(5.7.8) ![]()

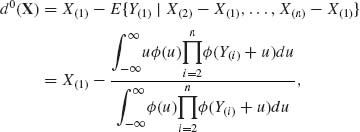

Thus, the minimum MSE equivariant estimator is

This is a generalized form of the Pitman estimator. The well–known specific form of the Pitman estimator is obtained by starting with ![]() (X) = X(1). In this case, F(Y) = Y(1), where Y(1) is the minimum of a sample from a standard distribution. Formula (5.7.9) is then reduced to the special form

(X) = X(1). In this case, F(Y) = Y(1), where Y(1) is the minimum of a sample from a standard distribution. Formula (5.7.9) is then reduced to the special form

(5.7.10)

where Y(i) = X(i) – X(1), i = 2, …, n. In the derivation of (5.7.9), we have assumed that the MSE of d(X) exists. A minimum risk equivariant estimator may not exist. Finally, we mentioned that the minimum MSE equivariant estimators are unbiased. Indeed

(5.7.11) ![]()

If ![]() is a scale and location family of distribution, with p.d.f.s of the form

is a scale and location family of distribution, with p.d.f.s of the form

![]()

where ![]() (u) is a p.d.f., then every equivariant estimator of μ with respect to the group

(u) is a p.d.f., then every equivariant estimator of μ with respect to the group ![]() of real–affine transformations can be expressed in the form

of real–affine transformations can be expressed in the form

(5.7.12) ![]()

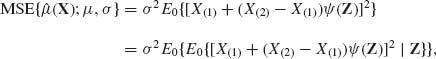

where X(1) ≤ … ≤ X(n) is the order statistic, X(2) – X(1) > 0 and Z = (Z3, …, Zn)′, with Zi = (X(i) – X(1))/(X(2) – X(1)). The MSE of ![]() (X) is given by

(X) is given by

(5.7.13)

where E0{·} designates an expectation with respect to the standard distribution (μ = 0, σ = 1). An optimal choice of ![]() (Z) is such for which E0{[X(1) + (X(2) – X(1))

(Z) is such for which E0{[X(1) + (X(2) – X(1))![]() (Z)]2| Z} is minimal. Thus, the minimum MSE equivariant estimator of μ is

(Z)]2| Z} is minimal. Thus, the minimum MSE equivariant estimator of μ is

(5.7.14) ![]()

where

(5.7.15) ![]()

Equivalently, the Pitman estimator of the location parameter is expressed as

(5.7.16)

In a similar manner, we show that the minimum MSE equivariant estimator for σ is ![]() 0(Xn) = (X(2)-X(1))

0(Xn) = (X(2)-X(1))![]() 0(Z3, …, Zn), where

0(Z3, …, Zn), where

(5.7.17) ![]()

Indeed, ![]() 0(Z) minimizes E0{(U2

0(Z) minimizes E0{(U2![]() (Z) – 1)2| Z}. Accordingly, the Pitman estimator of the scale parameter, σ, is

(Z) – 1)2| Z}. Accordingly, the Pitman estimator of the scale parameter, σ, is

(5.7.18)

5.8 ESTIMATING EQUATIONS

5.8.1 Moment–Equations Estimators

Suppose that ![]() is a family of distributions depending on k real parameters, θ1, …, θk, 1 ≤ k. Suppose that the moments μr, 1 ≤ r ≤ k, exist and are given by some specified functions

is a family of distributions depending on k real parameters, θ1, …, θk, 1 ≤ k. Suppose that the moments μr, 1 ≤ r ≤ k, exist and are given by some specified functions

![]()

If X1, …, Xn are i.i.d. random variables having a distribution in ![]() , the sample moments Mr =

, the sample moments Mr = ![]() are unbiased estimators of μ r (1 ≤ r ≤ k) and by the laws of large numbers (see Section 1.11) they converge almost surely to μr as n → ∞. The roots of the system of equations

are unbiased estimators of μ r (1 ≤ r ≤ k) and by the laws of large numbers (see Section 1.11) they converge almost surely to μr as n → ∞. The roots of the system of equations

(5.8.1) ![]()

are called the moment–equations estimators (MEEs) of θ1, …, θk.

In Examples 5.28 – 5.29, we discuss cases where both the MLE and the MEE can be easily determined, but the MLE exhibiting better characteristics. The question is then, why should we consider the MEEs at all? The reasons for considering MEEs are as follows:

5.8.2 General Theory of Estimating Functions

Both the MLE and the MME are special cases of a class of estimators called estimating functions estimator. A function g(X;θ), X ![]()

![]() (n) and θ

(n) and θ ![]() Θ, is called an estimating function, if the root

Θ, is called an estimating function, if the root ![]() (X) of the equation

(X) of the equation

belongs to Θ; i.e., ![]() (X) is an estimator of θ. Note that if θ is a k–dimensional vector then (5.8.2) is a system of k–independent equations in θ. In other words, g(X, θ) is a k–dimensional vector function, i.e.,

(X) is an estimator of θ. Note that if θ is a k–dimensional vector then (5.8.2) is a system of k–independent equations in θ. In other words, g(X, θ) is a k–dimensional vector function, i.e.,

![]()

![]() (X) is the simultaneous solution of

(X) is the simultaneous solution of

(5.8.3)

In the MEE case, gi(X, θ) = Mi(θ1, …, θk) – mi (i = 1, …, k). In the MLE case,

![]()

In both cases, Eθ{g(X, θ)} = 0 for all θ, under the CR regularity conditions (see Theorem 5.2.2).

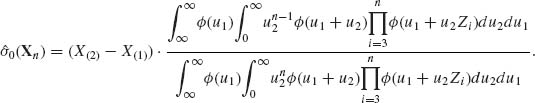

An estimating function g(X, θ) is called unbiased if Eθ {g(X;θ)} = 0 for all θ. The information in an estimating function g(X, θ) is defined as

(5.8.4)

For example, if g(X, θ) is the score function S(X, θ), then under the regularity conditions (3.7.2), ![]() = -I(θ) and Eθ {S2(X;θ)} = I(θ), where I(θ) is the Fisher information function. A basic result of is that Ig(θ) ≤ I(θ) for all unbiased estimating functions.

= -I(θ) and Eθ {S2(X;θ)} = I(θ), where I(θ) is the Fisher information function. A basic result of is that Ig(θ) ≤ I(θ) for all unbiased estimating functions.

The CR regularity conditions are now generalized for estimating functions. The regularity conditions for estimating functions are as follows:

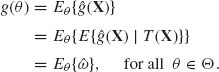

Let T be a sufficient statistic for a parametric family ![]() . Bhapkar (1972) proved that, for any unbiased estimating function g, if

. Bhapkar (1972) proved that, for any unbiased estimating function g, if

![]()

then Ig(θ) ≤ Ig* (θ) for all θ with equality if and only if g* ![]()

![]() T. This is a generalization of the Blackwell – Rao Theorem to unbiased estimating functions. Under the regularity conditions, the score function S(X, θ) =

T. This is a generalization of the Blackwell – Rao Theorem to unbiased estimating functions. Under the regularity conditions, the score function S(X, θ) = ![]() log f(X, θ) depends on X only through the likelihood statistic T(X), which is minimal sufficient. Thus, the score function is most informative among the unbiased estimating functions that satisfy the regularity conditions. If θ is a vector parameter, then the information in g is

log f(X, θ) depends on X only through the likelihood statistic T(X), which is minimal sufficient. Thus, the score function is most informative among the unbiased estimating functions that satisfy the regularity conditions. If θ is a vector parameter, then the information in g is

where

(5.8.6) ![]()

and

(5.8.7) ![]()

where g(X, θ) = (g1(X, θ), …, gk(X, θ))′ is a vector of k estimating functions, for estimating the k components of θ.

We can show that I(θ) = Ig(θ) is a nonnegative definite matrix, and I(θ) is the Fisher information matrix.

Various applications of the theory of estimating functions can be found in Godambe (1991).

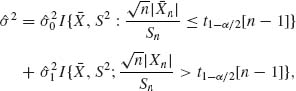

5.9 PRETEST ESTIMATORS

Pretest estimators (PTEs) are estimators of the parameters, or functions of the parameters of a distribution, which combine testing of some hypothesis (es) and estimation for the purpose of reducing the MSE of the estimator. The idea of preliminary testing has been employed informally in statistical methodology in many different ways and forms. Statistical inference is often based on some model, which assumes a certain set of assumptions. If the model is correct, or adequately fits the empirical data, the statistician may approach the problem of estimating the parameters of interest in a certain manner. However, if the model is rejectable by the data the estimation of the parameter of interest may have to follow a different procedure. An estimation procedure that assumes one of two alternative forms, according to the result of a test of some hypothesis, is called a pretest estimation procedure.

PTEs have been studied in various estimation problems, in particular in various least–squares estimation problems for linear models. As we have seen in Section 4.6, if some of the parameters of a linear model can be assumed to be zero (or negligible), the LSE should be modified, according to formula (4.6.14). Accordingly, if ![]() denotes the unconstrained LSE of a full–rank model and β* the constrained LSE (4.6.14), the PRE of β is

denotes the unconstrained LSE of a full–rank model and β* the constrained LSE (4.6.14), the PRE of β is

(5.9.1) ![]()

where A denotes the acceptance set of the hypothesis H0: βr + 1 = βr + 2 = … = β p = 0; and ![]() the complement of A. An extensive study of PREs for linear models, of the form (5.8.5), is presented in the book of Judge and Bock (1978). The reader is referred also to the review paper of Billah and Saleh (1998).

the complement of A. An extensive study of PREs for linear models, of the form (5.8.5), is presented in the book of Judge and Bock (1978). The reader is referred also to the review paper of Billah and Saleh (1998).

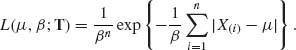

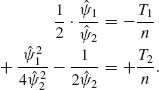

5.10 ROBUST ESTIMATION OF THE LOCATION AND SCALE PARAMETERS OF SYMMETRIC DISTRIBUTIONS

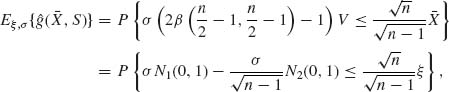

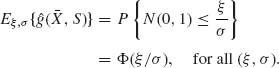

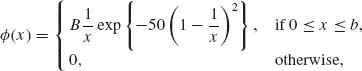

In this section, we provide some new developments concerning the estimation of the location parameter, μ, and the scale parameter, σ, in a parametric family, ![]() , whose p.d.f.s are of the form f(x;μ, σ) =

, whose p.d.f.s are of the form f(x;μ, σ) = ![]() , and f(-x) = f(x) for all -∞ < x < ∞. We have seen in various examples before that an estimator of μ, or of σ, which has small MSE for one family may not be as good for another. We provide below some variance comparisons of the sample mean,

, and f(-x) = f(x) for all -∞ < x < ∞. We have seen in various examples before that an estimator of μ, or of σ, which has small MSE for one family may not be as good for another. We provide below some variance comparisons of the sample mean, ![]() , and the sample median, Me, for the following families: normal, mixture of normal and rectangular, t[ν], Laplace and Cauchy. The mixtures of normal and rectangular distributions will be denoted by (1 – α)N + α R(-3σ, 3σ). Such a family of mixtures has the standard density function

, and the sample median, Me, for the following families: normal, mixture of normal and rectangular, t[ν], Laplace and Cauchy. The mixtures of normal and rectangular distributions will be denoted by (1 – α)N + α R(-3σ, 3σ). Such a family of mixtures has the standard density function

![]()

The t[ν] distributions have a standard p.d.f. as given in (2.13.5). The asymptotic (large sample) variance of the sample median, Me, is given by the formula (7.9.3)

(5.10.1) ![]()

provided f(0) > 0, and f(x) is continuous at x = 0.

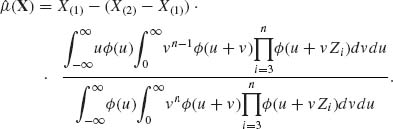

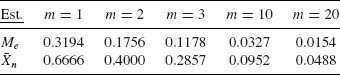

Table 5.1 Asymptotic Variances of ![]() and Me

and Me

In Table 5.1, we provide the asymptotic variances of ![]() and Me and their ratio E = AV{

and Me and their ratio E = AV{![]() }/AV{Me}, for the families mentioned above. We see that the sample mean

}/AV{Me}, for the families mentioned above. We see that the sample mean ![]() which is a very good estimator of the location parameter, μ, when

which is a very good estimator of the location parameter, μ, when ![]() is the family of normal distributions loses its efficiency when

is the family of normal distributions loses its efficiency when ![]() deviates from normality. The reason is that the sample mean is very sensitive to deviations in the sample of the extreme values. The sample mean performs badly when the sample is drawn from a distribution having heavy tails (relatively high probabilities of large deviations from the median of the distribution). This phenomenon becomes very pronounced in the case of the Cauchy family. One can verify (Fisz, 1963, p. 156) that if X1, …, Xn are i.i.d. random variables having a common Cauchy distribution than the sample mean

deviates from normality. The reason is that the sample mean is very sensitive to deviations in the sample of the extreme values. The sample mean performs badly when the sample is drawn from a distribution having heavy tails (relatively high probabilities of large deviations from the median of the distribution). This phenomenon becomes very pronounced in the case of the Cauchy family. One can verify (Fisz, 1963, p. 156) that if X1, …, Xn are i.i.d. random variables having a common Cauchy distribution than the sample mean ![]() has the same Cauchy distribution, irrespective of the sample size. Furthermore, the Cauchy distribution does not have moments, or we can say that the variance of

has the same Cauchy distribution, irrespective of the sample size. Furthermore, the Cauchy distribution does not have moments, or we can say that the variance of ![]() is infinite. In order to avoid such possibly severe consequences due to the use of

is infinite. In order to avoid such possibly severe consequences due to the use of ![]() as an estimator of μ, when the statistician specifies the model erroneously, several types of less sensitive estimators of μ and σ were developed. These estimators are called robust in the sense that their performance is similar, in terms of the sampling variances and other characteristics, over a wide range of families of distributions. We provide now a few such robust estimators of the location parameter:

as an estimator of μ, when the statistician specifies the model erroneously, several types of less sensitive estimators of μ and σ were developed. These estimators are called robust in the sense that their performance is similar, in terms of the sampling variances and other characteristics, over a wide range of families of distributions. We provide now a few such robust estimators of the location parameter:

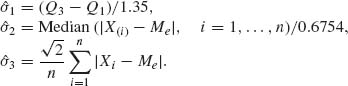

(5.10.2) ![]()

The median, Me is a special case, when α → 0.5.

(5.10.3) ![]()

Another such estimator is called the trimean and is given by

![]()

(5.10.4)

and

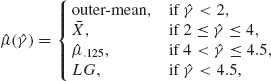

In analogy to the MLE solution and, in order to avoid strong dependence on a particular form of f(x), a general class of M–estimators is defined as the simultaneous solution of

(5.10.5) ![]()

and

![]()

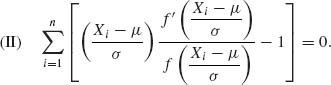

for suitably chosen ![]() (·) and χ(·) functions. Huber (1964) proposed the M–estimators for which

(·) and χ(·) functions. Huber (1964) proposed the M–estimators for which

(5.10.6)

and

(5.10.7) ![]()

where

![]()

The determination of Huber’s M–estimators requires numerical iterative solutions. It is customary to start with the initial solution of μ = Me and σ = (Q3 – Q1)/1.35, where Q3 – Q1 is the interquartile range, or ![]() . Values of k are usually taken in the interval [1, 2].

. Values of k are usually taken in the interval [1, 2].

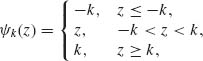

Other M–estimators were introduced by considering a different kind of ![]() (·) function. Having estimated the value of γ by

(·) function. Having estimated the value of γ by ![]() , use the estimator

, use the estimator

where the “outer–mean” is the mean of the extreme values in the sample. The reader is referred to the Princeton Study (Andrews et al., 1972) for a comprehensive examination of these and many other robust estimators of the location parameter. Another important article on the subject is that of Huber (1964, 1967).

Robust estimators of the scale parameter, σ, are not as well developed as those of the location parameter. The estimators that are used are

Further developments have been recently attained in the area of robust estimation of regression coefficients in multiple regression problems.

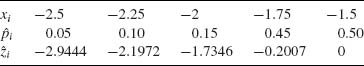

PART II: EXAMPLES

Example 5.1. In the production of concrete, it is required that the proportion of concrete cubes (of specified dimensions) having compressive strength not smaller than ξ0 be at least 0.95. In other words, if X is a random variable representing the compressive strength of a concrete cube, we require that P{X ≥ ξ0} = 0.95. This probability is a numerical characteristic of the distribution of X. Let X1, …, Xn be a sample of i.i.d. random variables representing the compressive strength of n randomly chosen cubes from the production process under consideration. If we do not wish to subject the estimation of p0 = P{X ≥ ξ0} to strong assumptions concerning the distribution of X we can estimate this probability by the proportion of cubes in the sample whose strength is at least ξ0; i.e.,

![]()

We note that n![]() has the binomial distribution B(n, p0). Thus, properties of the estimator

has the binomial distribution B(n, p0). Thus, properties of the estimator ![]() can be deduced from this binomial distribution.

can be deduced from this binomial distribution.

A commonly accepted model for the compressive strength is the family of log–normal distributions. If we are willing to commit the estimation procedure to this model we can obtain estimators of p0 which are more efficient than ![]() , provided the model is correct. Let Yi = log Xi, i = 1, …, and let

, provided the model is correct. Let Yi = log Xi, i = 1, …, and let ![]() n =

n = ![]() . Let η0 = log ξ0. Then, an estimator of p0 can be

. Let η0 = log ξ0. Then, an estimator of p0 can be

![]()

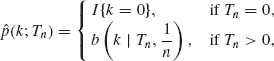

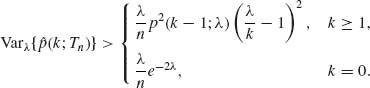

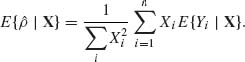

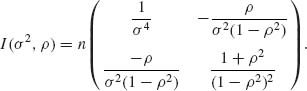

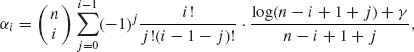

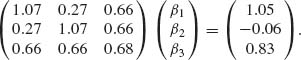

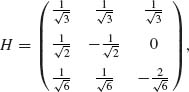

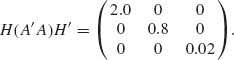

where Φ(u) is the standard normal c.d.f. Note that ![]() n and Sn are the sample statistics that are substituted to estimate the unknown parameters (ξ, σ). Moreover, (