CHAPTER 8

Bayesian Analysis in Testing and Estimation

PART I: THEORY

This chapter is devoted to some topics of estimation and testing hypotheses from the point of view of statistical decision theory. The decision theoretic approach provides a general framework for both estimation of parameters and testing hypotheses. The objective is to study classes of procedures in terms of certain associated risk functions and determine the existence of optimal procedures. The results that we have presented in the previous chapters on minimum mean–squared–error (MSE) estimators and on most powerful tests can be considered as part of the general statistical decision theory. We have seen that uniformly minimum MSE estimators and uniformly most powerful tests exist only in special cases. One could overcome this difficulty by considering procedures that yield minimum average risk, where the risk is defined as the expected loss due to erroneous decision, according to the particular distribution Fθ. The MSE in estimation and the error probabilities in testing are special risk functions. The risk functions depend on the parameters θ of the parent distribution. The average risk can be defined as an expected risk according to some probability distribution on the parameter space. Statistical inference that considers the parameter(s) as random variables is called a Bayesian inference. The expected risk with respect to the distribution of θ is called in Bayesian theory the prior risk, and the probability measure on the parameter space is called a prior distribution. The estimators or test functions that minimize the prior risk, with respect to some prior distribution, are called Bayes procedures for the specified prior distribution. Bayes procedures have certain desirable properties. This chapter is devoted, therefore, to the study of the structure of optimal decision rules in the framework of Bayesian theory. We start Section 8.1 with a general discussion of the basic Bayesian tools and information functions. We outline the decision theory and provide an example of an optimal statistical decision procedure. In Section 8.2, we discuss testing of hypotheses from the Bayesian point of view, and in Section 8.3, we present Bayes credibility intervals. The Bayesian theory of point estimation is discussed in Section 8.4. Section 8.5 discusses analytical and numerical techniques for evaluating posterior distributions on complex cases. Section 8.6 is devoted to empirical Bayes procedures.

8.1 THE BAYESIAN FRAMEWORK

8.1.1 Prior, Posterior, and Predictive Distributions

In the previous chapters, we discussed problems of statistical inference, testing hypotheses, and estimation, considering the parameters of the statistical models as fixed unknown constants. This is the so–called classical approach to the problems of statistical inference. In the Bayesian approach, the unknown parameters are considered as values determined at random according to some specified distribution, called the prior distribution. This prior distribution can be conceived as a normalized nonnegative weight function that the statistician assigns to the various possible parameter values. It can express his degree of belief in the various parameter values or the amount of prior information available on the parameters. For the philosophical foundations of the Bayesian theory, see the books of DeFinneti (1974), Barnett (1973), Hacking (1965), Savage (1962), and Schervish (1995). We discuss here only the basic mathematical structure.

Let ![]() = {F(x; θ); θ

= {F(x; θ); θ ![]() Θ} be a family of distribution functions specified by the statistical model. The parameters θ of the elements of

Θ} be a family of distribution functions specified by the statistical model. The parameters θ of the elements of ![]() are real or vector valued parameters. The parameter space Θ is specified by the model. Let

are real or vector valued parameters. The parameter space Θ is specified by the model. Let ![]() be a family of distribution functions defined on the parameter space Θ. The statistician chooses an element H(θ) of

be a family of distribution functions defined on the parameter space Θ. The statistician chooses an element H(θ) of ![]() and assigns it the role of a prior distribution. The actual parameter value θ0 of the distribution of the observable random variable X is considered to be a realization of a random variable having the distribution H(θ). After observing the value of X the statistician adjusts his prior information on the value of the parameter θ by converting H(θ) to the posterior distribution H(θ | X). This is done by Bayes Theorem according to which if h(θ) is the prior probability density function (p.d.f.) of θ and f(x; θ) the p.d.f. of X under θ, then the posterior p.d.f. of θ is

and assigns it the role of a prior distribution. The actual parameter value θ0 of the distribution of the observable random variable X is considered to be a realization of a random variable having the distribution H(θ). After observing the value of X the statistician adjusts his prior information on the value of the parameter θ by converting H(θ) to the posterior distribution H(θ | X). This is done by Bayes Theorem according to which if h(θ) is the prior probability density function (p.d.f.) of θ and f(x; θ) the p.d.f. of X under θ, then the posterior p.d.f. of θ is

(8.1.1) ![]()

If we are given a sample of n observations or random variables X1, X2, …, Xn, whose distributions belong to a family ![]() , the question is whether these random variables are independent identically distributed (i.i.d.) given θ, or whether θ might be randomly chosen from H(θ) for each observation.

, the question is whether these random variables are independent identically distributed (i.i.d.) given θ, or whether θ might be randomly chosen from H(θ) for each observation.

At the beginning, we study the case that X1, …, Xn are conditionally i.i.d., given θ. This is the classical Bayesian model. In Section 8.6, we study the so–called empirical Bayes model, in which θ is randomly chosen from H(θ) for each observation. In the classical model, if the family ![]() admits a sufficient statistic T(X), then for any prior distribution H(θ), the posterior distribution is a function of T(X), and can be determined from the distribution of T(X) under θ. Indeed, by the Neyman–Fisher Factorization Theorem, if T(X) is sufficient for

admits a sufficient statistic T(X), then for any prior distribution H(θ), the posterior distribution is a function of T(X), and can be determined from the distribution of T(X) under θ. Indeed, by the Neyman–Fisher Factorization Theorem, if T(X) is sufficient for ![]() then f(x; θ) = k(x)g(T(x); θ). Hence,

then f(x; θ) = k(x)g(T(x); θ). Hence,

(8.1.2) ![]()

Thus, the posterior p.d.f. is a function of T(X). Moreover, the p.d.f. of T(X) is g* (t; θ) = k* (t)g(t; θ), where k* (t) is independent of θ. It follows that the conditional p.d.f. of θ given {T(X) = t} coincides with h(θ | x) on the sets {x; T(x) = t} for all t.

Bayes predictive distributions are the marginal distributions of the observed random variables, according to the model. More specifically, if a random vector X has a joint distribution F(x; θ) and the prior distribution of θ is H(θ) then the joint predictive distribution of X under H is

(8.1.3) ![]()

A most important question in Bayesian analysis is what prior distribution to choose. The answer is, generally, that the prior distribution should reflect possible prior knowledge available on possible values of the parameter. In many situations, the prior information on the parameters is vague. In such cases, we may use formal prior distributions, which are discussed in Section 8.1.3. On the other hand, in certain scientific or technological experiments much is known about possible values of the parameters. This may guide in selecting a prior distribution, as illustrated in the examples.

There are many examples of posterior distribution that belong to the same parametric family of the prior distribution. Generally, if the family of prior distributions ![]() relative to a specific family

relative to a specific family ![]() yields posteriors in

yields posteriors in ![]() , we say that

, we say that ![]() and

and ![]() are conjugate families. For more discussion on conjugate prior distributions, see Raiffa and Schlaifer (1961). In Example 8.2, we illustrate a few conjugate prior families.

are conjugate families. For more discussion on conjugate prior distributions, see Raiffa and Schlaifer (1961). In Example 8.2, we illustrate a few conjugate prior families.

The situation when conjugate prior structure exists is relatively simple and generally leads to analytic expression of the posterior distribution. In research, however, we often encounter much more difficult problems, as illustrated in Example 8.3. In such cases, we cannot often express the posterior distribution in analytic form, and have to resort to numerical evaluations to be discussed in Section 8.5.

8.1.2 Noninformative and Improper Prior Distributions

It is sometimes tempting to obtain posterior densities by multiplying the likelihood function by a function h(θ), which is not a proper p.d.f. For example, suppose that X | θ ∼ N(θ, 1). In this case L(θ; X) = exp ![]() . This likelihood function is integrable with respect to dθ. Indeed,

. This likelihood function is integrable with respect to dθ. Indeed,

![]()

Thus, if we consider formally the function h(θ)dθ = cdθ or h(θ) = c then

which is the p.d.f. of N(X, 1). The function h(θ) = c, c > 0 for all θ is called an improper prior density since ![]() . Another example is when X | λ ∼ P(λ), i.e., L(λ | X) = e−λ λx. If we use the improper prior density h(λ) = c > 0 for all λ > 0 then the posterior p.d.f. is

. Another example is when X | λ ∼ P(λ), i.e., L(λ | X) = e−λ λx. If we use the improper prior density h(λ) = c > 0 for all λ > 0 then the posterior p.d.f. is

This is a proper p.d.f. of G(1, X + 1) despite the fact that h(λ) is an improper prior density. Some people justify the use of an improper prior by arguing that it provides a “diffused” prior, yielding an equal weight to all points in the parameter space. For example, the improper priors that lead to the proper posterior densities (8.1.4) and (8.1.5) may reflect a state of ignorance, in which all points θ in (−∞, ∞) or λ in (0, ∞) are “equally” likely.

Lindley (1956) defines a prior density h(θ) to be noninformative, if it maximizes the predictive gain in information on θ when a random sample of size n is observed. He shows then that, in large samples, if the family ![]() satisfies the Cramer–Rao regularity conditions, and the maximum likelihood estimator (MLE)

satisfies the Cramer–Rao regularity conditions, and the maximum likelihood estimator (MLE) ![]() n is minimal sufficient for

n is minimal sufficient for ![]() , then the noninformative prior density is proportional to |I(θ)|1/2, where |I(θ)| is the determinant of the Fisher information matrix. As will be shown in Example 8.4, h(θ)

, then the noninformative prior density is proportional to |I(θ)|1/2, where |I(θ)| is the determinant of the Fisher information matrix. As will be shown in Example 8.4, h(θ) ![]() |I(θ)|1/2 is sometimes a proper p.d.f. and sometimes an improper one.

|I(θ)|1/2 is sometimes a proper p.d.f. and sometimes an improper one.

Jeffreys (1961) justified the use of the noninformative prior |I(θ)|1/2 on the basis of invariance. He argued that if a statistical model ![]() = {f(x;θ); θ

= {f(x;θ); θ ![]() Θ} is reparametrized to

Θ} is reparametrized to ![]() * = {f* (x; ω); ω

* = {f* (x; ω); ω ![]() Ω}, where ω =

Ω}, where ω = ![]() (θ) then the prior density h(θ) should be chosen so that h(θ | X) = h(ω | X).

(θ) then the prior density h(θ) should be chosen so that h(θ | X) = h(ω | X).

Let θ = ![]() −1(ω) and let J(ω) be the Jacobian of the transformation, then the posterior p.d.f. of ω is

−1(ω) and let J(ω) be the Jacobian of the transformation, then the posterior p.d.f. of ω is

(8.1.6) ![]()

Recall that the Fisher information matrix of ω is

Thus, if h(θ) ![]() |I(θ)|1/2 then from (8.1.7) and (8.1.8), since

|I(θ)|1/2 then from (8.1.7) and (8.1.8), since

we obtain

(8.1.9) ![]()

The structure of h(θ | X) and of h* (ω | X) is similar. This is the “invariance” property of the posterior, with respect to transformations of the parameter.

A prior density proportional to |I(θ)|1/2 is called a Jeffreys prior density.

8.1.3 Risk Functions and Bayes Procedures

In statistical decision theory, we consider the problems of inference in terms of a specified set of actions, ![]() , and their outcomes. The outcome of the decision is expressed in terms of some utility function, which provides numerical quantities associated with actions of

, and their outcomes. The outcome of the decision is expressed in terms of some utility function, which provides numerical quantities associated with actions of ![]() and the given parameters, θ, characterizing the elements of the family

and the given parameters, θ, characterizing the elements of the family ![]() specified by the model. Instead of discussing utility functions, we discuss here loss functions, L(a, θ), a

specified by the model. Instead of discussing utility functions, we discuss here loss functions, L(a, θ), a ![]()

![]() , θ

, θ ![]() Θ, associated with actions and parameters. The loss functions are nonnegative functions that assume the value zero if the action chosen does not imply some utility loss when θ is the true state of Nature. One of the important questions is what type of loss function to consider. The answer to this question depends on the decision problem and on the structure of the model. In the classical approach to testing hypotheses, the loss function assumes the value zero if no error is committed and the value one if an error of either kind is done. In a decision theoretic approach, testing hypotheses can be performed with more general loss functions, as will be shown in Section 8.2. In estimation theory, the squared–error loss function (

Θ, associated with actions and parameters. The loss functions are nonnegative functions that assume the value zero if the action chosen does not imply some utility loss when θ is the true state of Nature. One of the important questions is what type of loss function to consider. The answer to this question depends on the decision problem and on the structure of the model. In the classical approach to testing hypotheses, the loss function assumes the value zero if no error is committed and the value one if an error of either kind is done. In a decision theoretic approach, testing hypotheses can be performed with more general loss functions, as will be shown in Section 8.2. In estimation theory, the squared–error loss function (![]() (x) − θ)2 is frequently applied, when

(x) − θ)2 is frequently applied, when ![]() (x) is an estimator of θ. A generalization of this type of loss function, which is of theoretical importance, is the general class of quadratic loss function, given by

(x) is an estimator of θ. A generalization of this type of loss function, which is of theoretical importance, is the general class of quadratic loss function, given by

(8.1.10) ![]()

where Q(θ) > 0 is an appropriate function of θ. For example, (![]() (x)−θ)2/θ2 is a quadratic loss function. Another type of loss function used in estimation theory is the type of function that depends on

(x)−θ)2/θ2 is a quadratic loss function. Another type of loss function used in estimation theory is the type of function that depends on ![]() (x) and θ only through the absolute value of their difference. That is, L(

(x) and θ only through the absolute value of their difference. That is, L(![]() (x), θ) = W(|

(x), θ) = W(|![]() (x) − θ|). For example, |

(x) − θ|). For example, |![]() (x) − θ|ν where ν > 0, or log (1 + |

(x) − θ|ν where ν > 0, or log (1 + |![]() (x) − θ|). Bilinear convex functions of the form

(x) − θ|). Bilinear convex functions of the form

(8.1.11) ![]()

are also in use, where a1, a2 are positive constants; (![]() − θ)− = − min (

− θ)− = − min (![]() − θ, 0) and (

− θ, 0) and (![]() − θ)+ = max(

− θ)+ = max(![]() − θ, 0). If the value of θ is known one can always choose a proper action to insure no loss. The essence of statistical decision problems is that the true parameter θ is unknown and decisions are made under uncertainty. The random vector X = (X1, …, Xn) provides information about the unknown value of θ. A function from the sample space

− θ, 0). If the value of θ is known one can always choose a proper action to insure no loss. The essence of statistical decision problems is that the true parameter θ is unknown and decisions are made under uncertainty. The random vector X = (X1, …, Xn) provides information about the unknown value of θ. A function from the sample space ![]() of X into the action space

of X into the action space ![]() is called a decision function. We denote it by d(X) and require that it should be a statistic. Let

is called a decision function. We denote it by d(X) and require that it should be a statistic. Let ![]() denotes a specified set or class of proper decision functions. Using a decision function d(X) the associated loss L(d(X), θ) is a random variable, for each θ. The expected loss under θ, associated with a decision function d(X), is called the risk function and is denoted by R(d, θ) = Eθ {L(d(X), θ)}. Given the structure of a statistical decision problem, the objective is to select an optimal decision function from

denotes a specified set or class of proper decision functions. Using a decision function d(X) the associated loss L(d(X), θ) is a random variable, for each θ. The expected loss under θ, associated with a decision function d(X), is called the risk function and is denoted by R(d, θ) = Eθ {L(d(X), θ)}. Given the structure of a statistical decision problem, the objective is to select an optimal decision function from ![]() . Ideally, we would like to choose a decision function d0(X) that minimizes the associated risk function R(d, θ) uniformly in θ. Such a uniformly optimal decision function may not exist, since the function d0 for which R(d0, θ) =

. Ideally, we would like to choose a decision function d0(X) that minimizes the associated risk function R(d, θ) uniformly in θ. Such a uniformly optimal decision function may not exist, since the function d0 for which R(d0, θ) = ![]() R(d, θ) generally depends on the particular value of θ under consideration. There are several ways to overcome this difficulty. One approach is to restrict attention to a subclass of decision functions, like unbiased or invariant decision functions. Another approach for determining optimal decision functions is the Bayesian approach. We define here the notion of Bayes decision function in a general context.

R(d, θ) generally depends on the particular value of θ under consideration. There are several ways to overcome this difficulty. One approach is to restrict attention to a subclass of decision functions, like unbiased or invariant decision functions. Another approach for determining optimal decision functions is the Bayesian approach. We define here the notion of Bayes decision function in a general context.

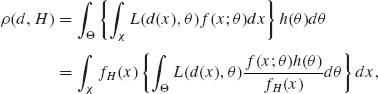

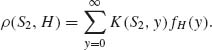

Consider a specified prior distribution, H(θ), defined over the parameter space Θ. With respect to this prior distribution, we define the prior risk, ρ(d, H), as the expected risk value when θ varies over Θ, i.e.,

(8.1.12) ![]()

where h(θ) is the corresponding p.d.f. A Bayes decision function, with respect to a prior distribution H, is a decision function dH(x) that minimizes the prior risk ρ(d, H), i.e.,

(8.1.13) ![]()

Under some general conditions, a Bayes decision function dH(x) exists. The Bayes decision function can be generally determined by minimizing the posterior expectation of the loss function for a given value x of the random variable X. Indeed, since L(d, θ) ≥ 0 one can interchange the integration operations below and write

(8.1.14)

where fH(x) = ![]() f(x; τ) h(τ)dτ is the predictive p.d.f. The conditional p.d.f. h(θ | x) = f(x; θ)h(θ)/fH(x) is the posterior p.d.f. of θ, given X = x. Similarly, the conditional expectation

f(x; τ) h(τ)dτ is the predictive p.d.f. The conditional p.d.f. h(θ | x) = f(x; θ)h(θ)/fH(x) is the posterior p.d.f. of θ, given X = x. Similarly, the conditional expectation

(8.1.15) ![]()

is called the posterior risk of d(x) under H. Thus, for a given X = x, we can choose d(x) to minimize R(d(x), H). Since L(d(x), θ) ≥ 0 for all θ ![]() Θ and d

Θ and d ![]()

![]() , the minimization of the posterior risk minimizes also the prior risk ρ (d, H). Thus, dH(X) is a Bayes decision function.

, the minimization of the posterior risk minimizes also the prior risk ρ (d, H). Thus, dH(X) is a Bayes decision function.

8.2 BAYESIAN TESTING OF HYPOTHESIS

8.2.1 Testing Simple Hypothesis

We start with the problem of testing two simple hypotheses H0 and H1. Let F0(x) and F1(x) be two specified distribution functions. The hypothesis H0 specifies the parent distribution of X as F0(x), H1 specified it as F1(x). Let f0(x) and f1(x) be the p.d.f.s corresponding to F0(x) and F1(x), respectively. Let π, 0 ≤ π ≤ 1, be the prior probability that H0 is true. In the special case of two simple hypotheses, the loss function can assign 1 unit to the case of rejecting H0 when it is true and b units to the case of rejecting H1 when it is true. The prior risks associated with accepting H0 and H1 are, respectively, ρ0(π) = (1 − π)b and ρ1(π) = π. For a given value of π, we accept hypothesis Hi (i = 0, 1) if ρi(π) is the minimal prior risk. Thus, a Bayes rule, prior to making observations is

where d = i is the decision to accept Hi (i = 0, 1).

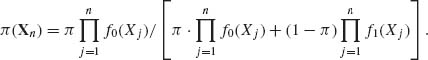

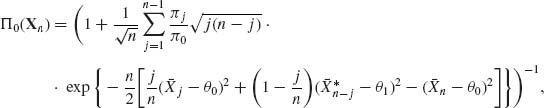

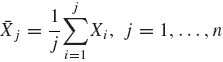

Suppose that a sample of n i.i.d. random variables X1, …, Xn has been observed. After observing the sample, we determine the posterior probability π (Xn) that H0 is true. This posterior probability is given by

(8.2.2)

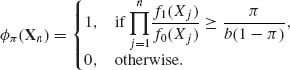

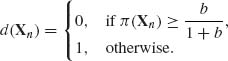

We use the decision rule (8.2.1) with π replaced by π(Xn). Thus, the Bayes decision function is

(8.2.3)

The Bayes decision function can be written in terms of the test function discussed in Chapter 4 as

The Bayes test function ![]() π (Xn) is similar to the Neyman–Pearson most powerful test, except that the Bayes test is not necessarily randomized even if the distributions Fi(x) are discrete. Moreover, the likelihood ratio

π (Xn) is similar to the Neyman–Pearson most powerful test, except that the Bayes test is not necessarily randomized even if the distributions Fi(x) are discrete. Moreover, the likelihood ratio ![]() f1(Xj)/f0(Xj) is compared to the ratio of the prior risks.

f1(Xj)/f0(Xj) is compared to the ratio of the prior risks.

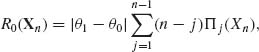

We discuss now some of the important optimality characteristics of Bayes tests of two simple hypotheses. Let R0(![]() ) and R1(

) and R1(![]() ) denote the risks associated with an arbitrary test statistic

) denote the risks associated with an arbitrary test statistic ![]() , when H0 or H1 are true, respectively. Let R0(π) and R1(π) denote the corresponding risk values of a Bayes test function, with respect to a prior probability π. Generally

, when H0 or H1 are true, respectively. Let R0(π) and R1(π) denote the corresponding risk values of a Bayes test function, with respect to a prior probability π. Generally

![]()

and

![]()

where ![]() 0(

0(![]() ) and

) and ![]() 1(

1(![]() ) are the error probabilities of the test statistic

) are the error probabilities of the test statistic ![]() , c1 and c2 are costs of erroneous decisions. The set R = {R0(

, c1 and c2 are costs of erroneous decisions. The set R = {R0(![]() ), R1(

), R1(![]() )); all test functions

)); all test functions ![]() } is called the risk set. Since for every 0 ≤ α ≤ 1 and any functions

} is called the risk set. Since for every 0 ≤ α ≤ 1 and any functions ![]() (1) and

(1) and ![]() (2), α

(2), α ![]() (1) + (1-α)

(1) + (1-α)![]() (2) is also a test function, and since

(2) is also a test function, and since

(8.2.5) ![]()

the risk set R is convex. Moreover, the set

(8.2.6) ![]()

of all risk points corresponding to the Bayes tests is the lower boundary for R. Indeed, according to (8.2.4) and the Neyman–Pearson Lemma, R1(π) is the smallest possible risk of all test functions ![]() with R0(

with R0(![]() ) = R0(π). Accordingly, all the Bayes tests constitute a complete class in the sense that, for any test function outside the class, there exists a corresponding Bayes test with a risk point having component smaller or equal to those of that particular test and at least one component is strictly smaller (Ferguson, 1967, Ch. 2). From the decision theoretic point of view there is no sense in considering test functions that do not belong to the complete class. These results can be generalized to the case of testing k simple hypotheses (Blackwell and Girshick, 1954; Ferguson, 1967).

) = R0(π). Accordingly, all the Bayes tests constitute a complete class in the sense that, for any test function outside the class, there exists a corresponding Bayes test with a risk point having component smaller or equal to those of that particular test and at least one component is strictly smaller (Ferguson, 1967, Ch. 2). From the decision theoretic point of view there is no sense in considering test functions that do not belong to the complete class. These results can be generalized to the case of testing k simple hypotheses (Blackwell and Girshick, 1954; Ferguson, 1967).

8.2.2 Testing Composite Hypotheses

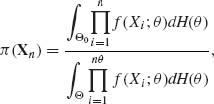

Let Θ0 and Θ1 be the sets of θ–points corresponding to the (composite) hypotheses H0 and H1, respectively. These sets contain finite or infinite number of points. Let H(θ) be a prior distribution function specified over Θ = Θ0 ![]() Θ1. The posterior probability of H0, given n i.i.d. random variables X1, …, Xn, is

Θ1. The posterior probability of H0, given n i.i.d. random variables X1, …, Xn, is

where f(x; θ) is the p.d.f. of X under θ. The notation in (8.2.7) signifies that if the sets are discrete the corresponding integrals are sums and dH(θ) are prior probabilities, otherwise dH(θ) = h(θ)dθ, where h(θ) is a p.d.f. The Bayes decision rule is obtained by computing the posterior risk associated with accepting H0 or with accepting H1 and making the decision associated with the minimal posterior risk. The form of the Bayes test depends, therefore, on the loss function employed.

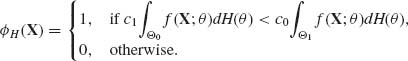

If the loss functions associated with accepting H0 or H1 are

![]()

then the associated posterior risk functions are

(8.2.8) ![]()

and

![]()

In this case, the Bayes test function is

In other words, the hypothesis H0 is rejected if the predictive likelihood ratio

is greater than the loss ratio c1/c0. This can be considered as a generalization of (8.2.4). The predictive likelihood ratio ΛH(X) is called also the Bayes Factor in favor of H1 against H0 (Good, 1965, 1967).

Cornfield (1969) suggested as a test function the ratio of the posterior odds in favor of H0, i.e., P[H0| X]/(1 − P[H0| X]), to the prior odds π /(1 − π) where π = P[H0] is the prior probability of H0. The rule is to reject H0 when this ratio is smaller than a suitable constant. Cornfield called this statistic the relative betting odds. Note that this relative betting odds is [ΛH (X)π /(1 − π)]−1. We see that Cornfield’s test function is equivalent to (8.2.9) for suitably chosen cost factors.

Karlin (l956) and Karlin and Rubin (1956) proved that in monotone likelihood ratio families the Bayes test function is monotone in the sufficient statistic T(X). For testing H0: θ ≤ θ0 against H1: θ > θ0, the Bayes procedure rejects H0 whenever T(X) ≥ ξ0. The result can be further generalized to the problem of testing multiple hypotheses (Zacks, 1971; Ch. 10).

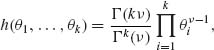

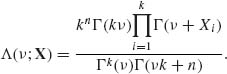

The problem of testing the composite hypothesis that all the probabilities in a multinomial distribution have the same value has drawn considerable attention in the statistical literature; see in particular the papers of Good (1967), Good and Crook (1974), and Good (1975). The Bayes test procedure proposed by Good (1967) is based on the symmetric Dirichlet prior distribution. More specifically if X = (X1, …, Xk)′ is a random vector having the multinomial distribution M(n, θ) then the parameter vector θ is ascribed the prior distribution with p.d.f.

(8.2.11)

0 < θ1, …, θk < 1 and ![]() = 1. The Bayes factor for testing h0: θ =

= 1. The Bayes factor for testing h0: θ = ![]() 1 against the composite alternative hypothesis H1: θ ≠

1 against the composite alternative hypothesis H1: θ ≠ ![]() 1, where 1 = (1, …, 1)′, according to (8.2.10) is

1, where 1 = (1, …, 1)′, according to (8.2.10) is

(8.2.12)

From the purely Bayesian point of view, the statistician should be able to choose an appropriate value of ν and some cost ratio c1/c0 for erroneous decisions, according to subjective judgment, and reject H0 if Λ (ν; X) ≥ c1/c0. In practice, it is generally not so simple to judge what are the appropriate values of ν and c1/c0. Good and Crook (1974) suggested two alternative ways to solve this problem. One suggestion is to consider an integrated Bayes factor

(8.2.13) ![]()

where ![]() (ν) is the p.d.f. of a log–Cauchy distribution, i.e.,

(ν) is the p.d.f. of a log–Cauchy distribution, i.e.,

(8.2.14) ![]()

The second suggestion is to find the value ν0 for which Λ (ν; X) is maximized and reject H0 if Λ* = (2log Λ (ν0; X))1/2 exceeds the (1 − α)–quantile of the asymptotic distribution of Λ* under H0. We see that non–Bayesian (frequentists) considerations are introduced in order to arrive at an appropriate critical level for Λ*. Good and Crook call this approach a “Bayes/Non–Bayes compromise.” We have presented this problem and the approaches suggested for its solution to show that in practical work a nondogmatic approach is needed. It may be reasonable to derive a test statistic in a Bayesian framework and apply it in a non–Bayesian manner.

8.2.3 Bayes Sequential Testing of Hypotheses

We consider in the present section an application of the general theory of Section 8.1.5 to the case of testing two simple hypotheses. We have seen in Section 8.2.1 that the Bayes decision test function, after observing Xn, is to reject H0 if the posterior probability, π(Xn), that H0 is true is less than or equal to a constant π*. The associated Bayes risk is ρ(0) (π (Xn)) = π (Xn)I{π (Xn) ≤ π* } + b(1 − π(Xn))I{π(Xn) > π* }, where π* = b/(1 + b). If π (Xn) = π then the posterior probability of H0 after the (n + 1)st observation is ![]() (π, Xn + 1) =

(π, Xn + 1) = ![]() , where R(x) =

, where R(x) = ![]() is the likelihood ratio. The predictive risk associated with an additional observation is

is the likelihood ratio. The predictive risk associated with an additional observation is

(8.2.15) ![]()

where c is the cost of one observation, and the expectation is with respect to the predictive distribution of X given π. We can show that the function ![]() 1(π) is concave on [0, 1] and thus continuous on (0, 1). Moreover,

1(π) is concave on [0, 1] and thus continuous on (0, 1). Moreover, ![]() 1(0) ≥ c and

1(0) ≥ c and ![]() 1(1) ≥ c. Note that the function

1(1) ≥ c. Note that the function ![]() (π, X) → 0 w.p.l if π → 0 and

(π, X) → 0 w.p.l if π → 0 and ![]() (π, X) → 1 w.p.l if π → 1. Since ρ(0)(π) is bounded by π*, we obtain by the Lebesgue Dominated Convergence Theorem that E{ρ0(

(π, X) → 1 w.p.l if π → 1. Since ρ(0)(π) is bounded by π*, we obtain by the Lebesgue Dominated Convergence Theorem that E{ρ0(![]() (π, X))} → 0 as π → 0 or as π → 1. The Bayes risk associated with an additional observation is

(π, X))} → 0 as π → 0 or as π → 1. The Bayes risk associated with an additional observation is

(8.2.16) ![]()

Thus, if c ≥ b/(1 + b) it is not optimal to make any observation. On the other hand, if c < b/(1 + b) there exist two points ![]() and

and ![]() , such that 0 <

, such that 0 < ![]() < π* <

< π* < ![]() < 1, and

< 1, and

(8.2.17) ![]()

Let

(8.2.18) ![]()

and let

(8.2.19) ![]()

Since ρ(1)(![]() (π, X)) ≤ ρ0(

(π, X)) ≤ ρ0(![]() (π, X)) for each π with probability one, we obtain that

(π, X)) for each π with probability one, we obtain that ![]() 2(π) ≤

2(π) ≤ ![]() 1(π) for all 0 ≤ π ≤ 1. Thus, ρ(2)(π) ≤ ρ(1)(π) for all π, 0 ≤ π ≤ 1.

1(π) for all 0 ≤ π ≤ 1. Thus, ρ(2)(π) ≤ ρ(1)(π) for all π, 0 ≤ π ≤ 1. ![]() 2(π) is also a concave function of π on [0, 1] and

2(π) is also a concave function of π on [0, 1] and ![]() 2(0) =

2(0) =![]() 2(1) = c. Thus, there exists

2(1) = c. Thus, there exists ![]() ≤

≤ ![]() and

and ![]() ≥

≥ ![]() such that

such that

(8.2.20) ![]()

We define now recursively, for each π on [0, 1],

(8.2.21) ![]()

and

(8.2.22) ![]()

These functions constitute for each π monotone sequences ![]() n(π) ≤

n(π) ≤ ![]() n−1 and ρ(n)(π) ≤ ρ(n−1) (π) for every n≥ 1. Moreover, for each n there exist 0 <

n−1 and ρ(n)(π) ≤ ρ(n−1) (π) for every n≥ 1. Moreover, for each n there exist 0 < ![]() ≤

≤ ![]() <

<![]() ≤

≤ ![]() < 1 such that

< 1 such that

(8.2.23) ![]()

Let ρ (π) = ![]() ρ(n)(π) for each π in [0, 1] and

ρ(n)(π) for each π in [0, 1] and ![]() (π) = E{ρ (

(π) = E{ρ (![]() (π, X))}. By the Lebesgue Monotone Convergence Theorem, we prove that

(π, X))}. By the Lebesgue Monotone Convergence Theorem, we prove that ![]() (π) =

(π) = ![]()

![]() n(π) for each π

n(π) for each π ![]() [0, 1]. The boundary points

[0, 1]. The boundary points ![]() and

and ![]() converge to π1 and π2, respectively, where 0 < π1 < π2 < 1. Consider now a nontruncated Bayes sequential procedure, with the stopping variable

converge to π1 and π2, respectively, where 0 < π1 < π2 < 1. Consider now a nontruncated Bayes sequential procedure, with the stopping variable

where X0 ≡ 0 and π (X0) ≡ π. Since under H0, π (Xn) → 1 with probability one and under H1, π (Xn) → 0 with probability 1, the stopping variable (8.2.24) is finite with probability one.

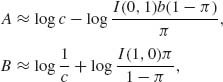

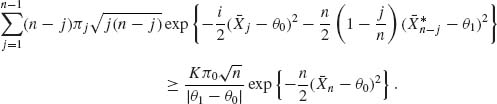

It is generally very difficult to determine the exact Bayes risk function ρ (π) and the exact boundary points π1 and π2. One can prove, however, that the Wald sequential probability ratio test (SPRT) (see Section 4.8.1) is a Bayes sequential procedure in the class of all stopping variables for which N≥ 1, corresponding to some prior probability π and cost parameter b. For a proof of this result, see Ghosh (1970, p. 93) or Zacks (1971, p. 456). A large sample approximation to the risk function ρ (π) was given by Chernoff (1959). Chernoff has shown that in the SPRT given by the boundaries (A, B) if A → −∞ and B → ∞, we have

(8.2.25)

where the cost of observations c→ 0 and I(0, 1), I(1, 0) are the Kullback–Leibler information numbers. Moreover, as c→ 0

(8.2.26) ![]()

Shiryayev (1973, p. 127) derived an expression for the Bayes risk ρ (π) associated with a continuous version of the Bayes sequential procedure related to a Wiener process. Reduction of the testing problem for the mean of a normal distribution to a free boundary problem related to the Wiener process was done also by Chernoff (1961, 1965, 1968); see also the book of Dynkin and Yushkevich (1969).

A simpler sequential stopping rule for testing two simple hypotheses is

(8.2.27) ![]()

If π (XN) ≤ ![]() then H0 is rejected, and if π (XN) ≥ 1 −

then H0 is rejected, and if π (XN) ≥ 1 − ![]() then H0 is accepted. This stopping rule is equivalent to a Wald SPRT (A, B) with the limits

then H0 is accepted. This stopping rule is equivalent to a Wald SPRT (A, B) with the limits

![]()

If π = ![]() then, according to the results of Section 4.8.1, the average error probability is less than or equal to

then, according to the results of Section 4.8.1, the average error probability is less than or equal to ![]() . This result can be extended to the problem of testing k simple hypotheses (k≥ 2), as shown in the following.

. This result can be extended to the problem of testing k simple hypotheses (k≥ 2), as shown in the following.

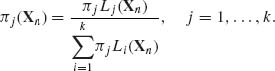

Let H1, …, Hk be k hypotheses (k ≥ 2) concerning the distribution of a random variable (vector) X. According to Hj, the p.d.f. of X is fj(x; θ), θ![]() Θ j, j = 1, …, k. The parameter θ is a nuisance parameter, whose parameter space Θ j may depend on Hj. Let Gj(θ), j = 1, …, k, be a prior distribution on Θ j, and let π j be the prior probability that Hj is the true hypothesis,

Θ j, j = 1, …, k. The parameter θ is a nuisance parameter, whose parameter space Θ j may depend on Hj. Let Gj(θ), j = 1, …, k, be a prior distribution on Θ j, and let π j be the prior probability that Hj is the true hypothesis, ![]() π j = 1. Given n observations on X1, …, Xn, which are assumed to be conditionally i.i.d., we compute the predictive likelihood of Hj, namely,

π j = 1. Given n observations on X1, …, Xn, which are assumed to be conditionally i.i.d., we compute the predictive likelihood of Hj, namely,

(8.2.28) ![]()

j = 1, …, k. Finally, the posterior probability of Hj, after n observations, is

(8.2.29)

We consider the following Bayesian stopping variable, for some 0 < ![]() < 1.

< 1.

(8.2.30) ![]()

Obviously, one considers small values of ![]() , 0 <

, 0 < ![]() < 1/2, and for such

< 1/2, and for such ![]() , there is a unique value

, there is a unique value ![]() such that π

such that π ![]() (XN

(XN![]() ) ≥ 1 −

) ≥ 1 − ![]() . At stopping, hypothesis H

. At stopping, hypothesis H![]() is accepted.

is accepted.

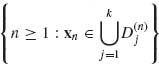

For each n ≥ 1, partition the sample space ![]() (n) of Xn to (k + 1) disjoint sets

(n) of Xn to (k + 1) disjoint sets

![]()

and ![]() =

= ![]() n −

n − ![]()

![]() . As long as xn

. As long as xn ![]()

![]() we continue sampling. Thus, N

we continue sampling. Thus, N![]() = min

= min  . In this sequential testing procedure, decision errors occur at stopping, when the wrong hypothesis is accepted. Thus, let δij denote the predictive probability of accepting Hi when Hj is the correct hypothesis. That is,

. In this sequential testing procedure, decision errors occur at stopping, when the wrong hypothesis is accepted. Thus, let δij denote the predictive probability of accepting Hi when Hj is the correct hypothesis. That is,

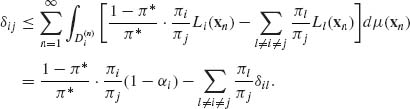

(8.2.31) ![]()

Note that, for π* = 1−![]() , πj(xn) ≥ π* if, and only if,

, πj(xn) ≥ π* if, and only if,

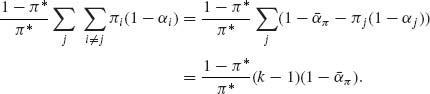

Let αj denote the predictive error probability of rejecting Hj when it is true, i.e., α j = ![]() δij.

δij.

The average predictive error probability is ![]() π =

π = ![]() πjαj.

πjαj.

Theorem 8.2.1. For the stopping variable N![]() , the average predictive error probability is

, the average predictive error probability is ![]() π ≤

π ≤ ![]() .

.

Proof. From the inequality (8.2.32), we obtain

(8.2.33)

Summing over i, we get

![]()

or

![]()

Summing over j, we obtain

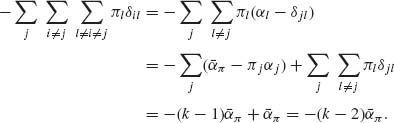

The first term on the RHS of (8.2.34) is

The second term on the RHS of (8.2.34) is

Substitution of (8.2.35) and (8.2.36) into (8.2.34) yields

![]()

or

![]() QED

QED

Thus, the Bayes sequential procedure given by the stopping variable N![]() and the associated decision rule can provide an excellent testing procedure when the number of hypothesis k is large. Rogatko and Zacks (1993) applied this procedure for testing the correct gene order. In this problem, if one wishes to order m gene loci on a chromosome, the number of hypotheses to test is k = m!/2.

and the associated decision rule can provide an excellent testing procedure when the number of hypothesis k is large. Rogatko and Zacks (1993) applied this procedure for testing the correct gene order. In this problem, if one wishes to order m gene loci on a chromosome, the number of hypotheses to test is k = m!/2.

8.3 BAYESIAN CREDIBILITY AND PREDICTION INTERVALS

8.3.1 Credibility Intervals

Let ![]() = {F(x; θ); θ

= {F(x; θ); θ ![]() Θ} be a parametric family of distribution functions. Let H(θ) be a specified prior distribution of θ and H(θ | X) be the corresponding posterior distribution, given X. If θ is real then an interval (Lα (X),

Θ} be a parametric family of distribution functions. Let H(θ) be a specified prior distribution of θ and H(θ | X) be the corresponding posterior distribution, given X. If θ is real then an interval (Lα (X), ![]() α (X)) is called a Bayes credibility interval of level 1 − α if for all X (with probability 1)

α (X)) is called a Bayes credibility interval of level 1 − α if for all X (with probability 1)

(8.3.1) ![]()

In multiparameter cases, we can speak of Bayes credibility regions. Bayes tolerance intervals are defined similarly.

Box and Tiao (1973) discuss Bayes intervals, called highest posterior density (HPD) intervals. These intervals are defined as θ intervals for which the posterior coverage probability is at least (1−α) and every θ–point within the interval has a posterior density not smaller than that of any θ–point outside the interval. More generally, a region RH(X) is called a (1 − α) HPD region if

The HPD intervals in cases of unimodal posterior distributions provide in nonsymmetric cases Bayes credibility intervals that are not equal tail ones. For various interesting examples, see Box and Tiao (1973).

8.3.2 Prediction Intervals

Suppose X is a random variable (vector) having a p.d.f. f(x;θ), θ ![]() Θ. If θ is known, an interval Iα (θ) is called a prediction interval for X, at level (1 − α) if

Θ. If θ is known, an interval Iα (θ) is called a prediction interval for X, at level (1 − α) if

(8.3.2) ![]()

When θ is unknown, one can use a Bayesian predictive distribution to determine an interval Iα (H) such that the predictive probability of {X![]() Iα (H)} is at least 1 − α. This predictive interval depends on the prior distribution H(θ). After observing X1, …, Xn, one can determine prediction interval (region) for (Xn+1, …, Xn+m) by using the posterior distribution H(θ| Xn) for the predictive distribution fH(x| xn) =

Iα (H)} is at least 1 − α. This predictive interval depends on the prior distribution H(θ). After observing X1, …, Xn, one can determine prediction interval (region) for (Xn+1, …, Xn+m) by using the posterior distribution H(θ| Xn) for the predictive distribution fH(x| xn) = ![]() f(x;θ) dH(θ| xn). In Example 8.12, we illustrate such prediction intervals. For additional theory and examples, see Geisser (1993).

f(x;θ) dH(θ| xn). In Example 8.12, we illustrate such prediction intervals. For additional theory and examples, see Geisser (1993).

8.4 BAYESIAN ESTIMATION

8.4.1 General Discussion and Examples

When the objective is to provide a point estimate of the parameter θ or a function ω = g(θ) we identify the action space with the parameter space. The decision function d(X) is an estimator with domain χ and range Θ, or Ω = g(Θ). For various loss functions the Bayes decision is an estimator ![]() H(X) that minimizes the posterior risk. In the following table, we present some loss functions and the corresponding Bayes estimators.

H(X) that minimizes the posterior risk. In the following table, we present some loss functions and the corresponding Bayes estimators.

In the examples, we derived Bayesian estimators for several models of interest, and show the dependence of the resulting estimators on the loss function and on the prior distributions.

| Loss Function | Bayes Estimator |

| ( |

|

| (The posterior expectation) | |

| Q(θ)( |

EH{θ Q(θ)| X} /EH{Q(θ)| X} |

| | |

|

| distribution, i.e., H−1(.5| X). | |

| a( |

The |

| i.e., H−1( |

8.4.2 Hierarchical Models

Lindley and Smith (1972) and Smith (1973a, b) advocated a somewhat more complicated methodology. They argue that the choice of a proper prior should be based on the notion of exchangeability. Random variables W1, W2, …, Wk are called exchangeable if the joint distribution of (W1, …, Wk) is the same as that of (Wi1, …, Wik), where (i1, …, ik) is any permutation of (1, 2, …, k). The joint p.d.f. of exchangeable random variables can be represented as a mixture of appropriate p.d.f.s of i.i.d. random variables. More specifically, if, conditional on w, W1, …, Wk are i.i.d. with p.d.f. f(W1, …, Wk;w) = ![]() g(Wi, w), and if w is given a probability distribution P(w) then the p.d.f.

g(Wi, w), and if w is given a probability distribution P(w) then the p.d.f.

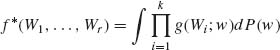

(8.4.1)

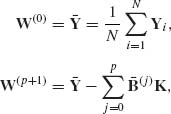

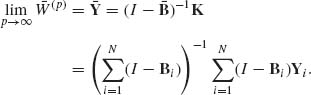

represents a distribution of exchangeable random variables. If the vector X represents the means of k independent samples the present model coincides with the Model II of ANOVA, with known variance components and an unknown grand mean μ. This model is a special case of a Bayesian linear model called by Lindley and Smith a three–stage linear model or hierarchical models. The general formulation of such a model is

![]()

and

![]()

where X is an n × 1 vector, θi are pi × 1 (i = 1, 2, 3), A1, A2, A3 are known constant matrices, and V, ![]() , C are known covariance matrices. Lindley and Smith (1972) have shown that for a noninformative prior for θ2 obtained by letting C−1 → 0, the Bayes estimator of θ, for the loss function L(

, C are known covariance matrices. Lindley and Smith (1972) have shown that for a noninformative prior for θ2 obtained by letting C−1 → 0, the Bayes estimator of θ, for the loss function L(![]() 1, θ) = ||

1, θ) = ||![]() 1 − θ1||2, is given by

1 − θ1||2, is given by

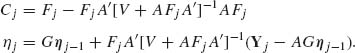

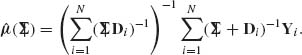

(8.4.2) ![]()

where

(8.4.3) ![]()

We see that this Bayes estimator coincides with the LSE, (A′A)−1A′X, when V = I and ![]() ,−1→ 0. This result depends very strongly on the knowledge of the covariance matrix V. Lindley and Smith (1972) suggested an iterative solution for a Bayesian analysis when V is unknown. Interesting special results for models of one way and two–way ANOVA can be found in Smith (1973b).

,−1→ 0. This result depends very strongly on the knowledge of the covariance matrix V. Lindley and Smith (1972) suggested an iterative solution for a Bayesian analysis when V is unknown. Interesting special results for models of one way and two–way ANOVA can be found in Smith (1973b).

A comprehensive Bayesian analysis of the hierarchical Model II of ANOVA is given in Chapter 5 of Box and Tiao (1973).

In Gelman et al. (1995, pp. 129–134), we find an interesting example of a hierarchical model in which

![]()

θ1, …, θk are conditionally i.i.d., with

![]()

and (α, β) have an improper prior p.d.f.

![]()

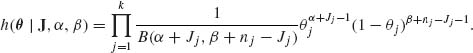

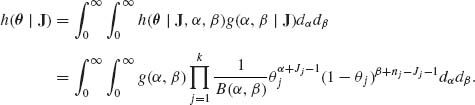

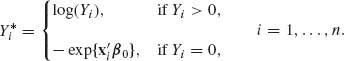

According to this model, θ = (θ1, …, θ k) is a vector of priorly exchangeable (not independent) parameters. We can easily show that the posterior joint p.d.f. of θ, given J = (J1, …, Jk) and (α, β) is

(8.4.4)

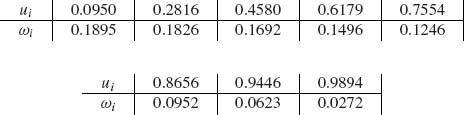

In addition, the posterior p.d.f. of (α, β) is

(8.4.5)

The objective is to obtain the joint posterior p.d.f.

From h(θ| J) one can derive a credibility region for θ, etc.

8.4.3 The Normal Dynamic Linear Model

In time–series analysis for econometrics, signal processing in engineering and other areas of applications, one often encounters series of random vectors that are related according to the following linear dynamic model

where A and G are known matrices, which are (for simplicity) fixed. {![]() n} is a sequence of i.i.d. random vectors; {ωn} is a sequence of i.i.d. random vectors; {

n} is a sequence of i.i.d. random vectors; {ωn} is a sequence of i.i.d. random vectors; {![]() n} and {ωn} are independent sequences, and

n} and {ωn} are independent sequences, and

(8.4.7) ![]()

We further assume that θ0 has a prior normal distribution, i.e.,

(8.4.8) ![]()

and that θ0 is independent of {![]() t} and {ω t}. This model is called the normal random walk model.

t} and {ω t}. This model is called the normal random walk model.

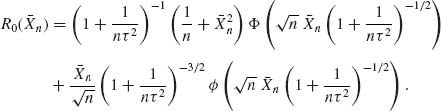

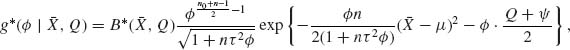

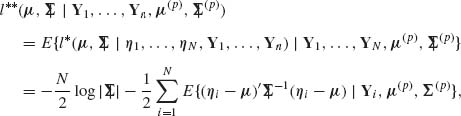

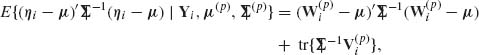

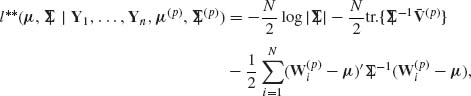

We compute now the posterior distribution of θ1, given Y1. From multivariate normal theory, since

![]()

and

![]()

we obtain

![]()

Let F1 = Ω + GC0G′. Then, we obtain after some manipulations

![]()

where

(8.4.9) ![]()

and

(8.4.10) ![]()

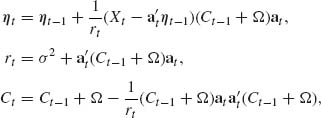

Define, recursively for j ≥ 1

![]()

and

The recursive equations (8.4.11) are called the Kalman filter. Note that, for each n ≥ 1, ηn depends on ![]() n = (Y1, …, Yn). Moreover, we can prove by induction on n, that

n = (Y1, …, Yn). Moreover, we can prove by induction on n, that

(8.4.12) ![]()

for all n ≥ 1. For additional theory and applications in Bayesian forecasting and smoothing, see Harrison and Stevens (1976), West, Harrison, and Migon (1985), and the book of West and Harrison (1997). We illustrate this sequential Bayesian process in Example 8.19.

8.5 APPROXIMATION METHODS

In this section, we discuss two types of methods to approximate posterior distributions and posterior expectations. The first type is analytical, which is usually effective in large samples. The second type of approximation is numerical. The numerical approximations are based either on numerical integration or on simulations. Approximations are required when an exact functional form for the factor of proportionality in the posterior density is not available. We have seen such examples earlier, like the posterior p.d.f. (8.1.4).

8.5.1 Analytical Approximations

The analytic approximations are saddle–point approximations, based on variations of the Laplace method, which is explained now.

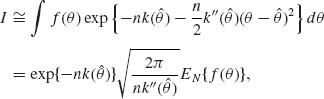

Consider the problem of evaluating the integral

where θ is m–dimensional, and k(θ) has sufficiently high–order continuous partial derivatives. Consider first the case of m = 1. Let ![]() be an argument maximizing −k(θ). Make a Taylor expansion of k(θ) around

be an argument maximizing −k(θ). Make a Taylor expansion of k(θ) around ![]() , i.e.,

, i.e.,

k′(![]() ) = 0 and k″(

) = 0 and k″(![]() ) > 0. Thus, substituting (8.5.2) in (8.5.1), the integral I is approximated by

) > 0. Thus, substituting (8.5.2) in (8.5.1), the integral I is approximated by

(8.5.3)

where EN{f(θ)} is the expected value of f(θ), with respect to the normal distribution with mean ![]() and variance

and variance ![]() . The expectation EN{f(θ)} can be sometimes computed exactly, or one can apply the delta method to obtain the approximation

. The expectation EN{f(θ)} can be sometimes computed exactly, or one can apply the delta method to obtain the approximation

(8.5.4) ![]()

Often we see the simpler approximation, in which f(![]() ) is used for EN{f(

) is used for EN{f(![]() )}. In this case, the approximation error is O(n−1). If we use f(

)}. In this case, the approximation error is O(n−1). If we use f(![]() ) for EN{f(θ)}, we obtain the approximation

) for EN{f(θ)}, we obtain the approximation

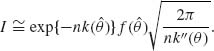

(8.5.5)

In the m > 1 case, the approximating formula becomes

(8.5.6) ![]()

where

(8.5.7) ![]()

These approximating formulae can be applied in Bayesian analysis, by letting −nk(θ) be the log–likelihood function, l(θ* | Xn); ![]() be the MLE,

be the MLE, ![]() n, and

n, and ![]() , −1(

, −1(![]() ) be J(

) be J(![]() n) given in (7.7.15). Accordingly, the posterior p.d.f., when the prior p.d.f. is h(θ), is approximated by

n) given in (7.7.15). Accordingly, the posterior p.d.f., when the prior p.d.f. is h(θ), is approximated by

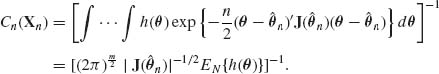

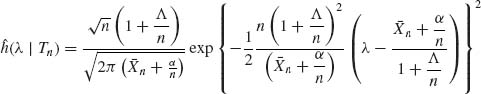

(8.5.8) ![]()

In this formula, ![]() n is the MLE of θ and

n is the MLE of θ and

If we approximate EN{h(θ)} by h(![]() n), then the approximating formula reduces to

n), then the approximating formula reduces to

(8.5.10) ![]()

This is a large sample normal approximation to the posterior density of θ. We can write this, for large samples, as

Note that Equation (8.5.11) does not depend on the prior distribution, and is not expected therefore to yield good approximation to h(θ| Xn) if the samples are not very large.

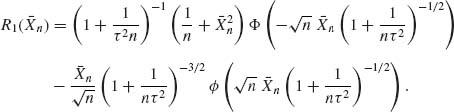

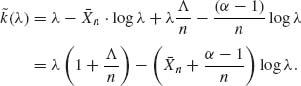

One can improve upon the normal approximation (8.5.11) by combining the likelihood function and the prior density h(θ) in the definition of k(θ). Thus, let

(8.5.12) ![]()

Let ![]() be a value of θ maximizing −n

be a value of θ maximizing −n ![]() (θ), or

(θ), or ![]() n the root of

n the root of

(8.5.13) ![]()

Let

(8.5.14) ![]()

Then, the saddle–point approximation to the posterior p.d.f. h(θ | Xn) is

This formula is similar to Barndorff–Nielsen p*–formula (7.7.15) and reduces to the p*–formula if h(θ)dθ ![]() dθ. The normal approximation is given by (8.5.11), in which

dθ. The normal approximation is given by (8.5.11), in which ![]() n is replaced by

n is replaced by ![]() n and J(

n and J(![]() n) is replaced by

n) is replaced by ![]() (

(![]() n).

n).

For additional reading on analytic approximation for large samples, see Gamerman (1997, Ch. 3), Reid (1995, pp. 351–368), and Tierney and Kadane (1986).

8.5.2 Numerical Approximations

In this section, we discuss two types of numerical approximations: numerical integrations and simulations. The reader is referred to Evans and Swartz (2001).

I. Numerical Integrations

We have seen in the previous sections that, in order to evaluate posterior p.d.f., one has to evaluate integrals of the form

Sometimes these integrals are quite complicated, like that of the RHS of Equa-tion (8.1.4).

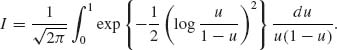

Suppose that, as in (8.5.16), the range of integration is from −∞ to ∞ and I < ∞. Consider first the case where θ is real. Making the one–to–one transformation ω = eθ /(1+eθ), the integral of (8.5.16) is reduced to

where q(θ) = L(θ | Xn)h(θ). There are many different methods of numerical integration. A summary of various methods and their accuracy is given in Abramowitz and Stegun (1968, p. 885). The reader is referred also to the book of Davis and Rabinowitz (1984).

If we define f(ω) so that

(8.5.18) ![]()

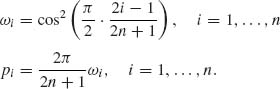

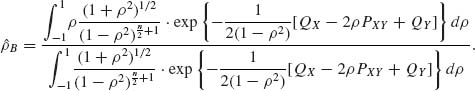

then, an n–point approximation to I is given by

where

(8.5.20)

The error in this approximation is

(8.5.21) ![]()

Integrals of the form

Thus, (8.5.22) can be computed according to (8.5.19). Another method is to use an n–points Gaussian quadrature formula:

where ui and wi are tabulated in Table 25.4 of Abramowitz and Stegun (1968, p. 916). Often it suffices to use n = 8 or n = 12 points in (8.5.23).

II. Simulation

The basic theorem applied in simulations to compute an integral I = ![]() f(θ) dH(θ) is the strong law of large numbers (SLLN). We have seen in Chapter 1 that if X1, X2, … is a sequence of i.i.d. random variables having a distribution FX(x), and if

f(θ) dH(θ) is the strong law of large numbers (SLLN). We have seen in Chapter 1 that if X1, X2, … is a sequence of i.i.d. random variables having a distribution FX(x), and if ![]() |g(x)|dF(x) < ∞ then

|g(x)|dF(x) < ∞ then

![]()

This important result is applied to approximate an integral ![]() f(θ)dH(θ) by a sequence θ1, θ2, … of i.i.d. random variables, generated from the prior distribution H(θ). Thus, for large n,

f(θ)dH(θ) by a sequence θ1, θ2, … of i.i.d. random variables, generated from the prior distribution H(θ). Thus, for large n,

(8.5.24) ![]()

Computer programs are available in all statistical packages that simulate realizations of a sequence of i.i.d. random variables, having specified distributions. All programs use linear congruential generators to generate “pseudo” random numbers that have approximately uniform distribution on (0, 1). For discussion of these generators, see Bratley, Fox, and Schrage (1983).

Having generated i.i.d. uniform R(0, 1) random variables U1, U2, …, Un, one can obtain a simulation of i.i.d. random variables having a specific c.d.f. F, by the transformation

(8.5.25) ![]()

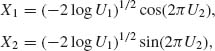

In some special cases, one can use different transformations. For example, if U1, U2 are independent R(0, 1) random variables then the Box–Muller transformation

yields two independent random variables having a standard normal distribution. It is easier to simulate a N(0, 1) random variable according to (8.5.26) than according to X =Φ −1(U). In today’s technology, one could choose from a rich menu of simulation procedures for many of the common distributions.

If a prior distribution H(θ) is not in a simulation menu, or if h(θ)dθ is not proper, one can approximate ![]() f(θ)h(θ)dθ by generating θ1, …, θn from another convenient distribution, λ (θ)dθ say, and using the formula

f(θ)h(θ)dθ by generating θ1, …, θn from another convenient distribution, λ (θ)dθ say, and using the formula

(8.5.27) ![]()

The method of simulating from a substitute p.d.f. λ (θ) is called importance sampling, and λ (θ) is called an importance density. The choice of λ (θ) should follow the following guidelines:

The second guideline is sometimes complicated. For example, if h(θ) d(θ) is the improper prior dθ and I = ![]() f(θ)dθ, where

f(θ)dθ, where ![]() |f(θ)|dθ < ∞, one could use first the monotone transformation x = eθ /(1+eθ) to reduce I to I =

|f(θ)|dθ < ∞, one could use first the monotone transformation x = eθ /(1+eθ) to reduce I to I = ![]() . One can use then a beta, β (p, q), importance density to simulate from, and approximate I by

. One can use then a beta, β (p, q), importance density to simulate from, and approximate I by

![]()

It would be simpler to use β (1, 1), which is the uniform R(0, 1).

An important question is, how large should the simulation sample be, so that the approximation will be sufficiently precise. For large values of n, the approximation ![]() f(θi) h(θi) is, by Central Limit Theorem, approximately distributed like

f(θi) h(θi) is, by Central Limit Theorem, approximately distributed like ![]() , where

, where

![]()

VS(·) is the variance according to the simulation density. Thus, n could be chosen sufficiently large, so that Z1−α /2 · ![]() < δ. This will guarantee that with confidence probability close to (1 − α) the true value of I is within

< δ. This will guarantee that with confidence probability close to (1 − α) the true value of I is within ![]() ± δ. The problem, however, is that generally τ2 is not simple or is unknown. To overcome this problem one could use a sequential sampling procedure, which attains asymptotically the fixed width confidence interval. Such a procedure was discussed in Section 6.7.

± δ. The problem, however, is that generally τ2 is not simple or is unknown. To overcome this problem one could use a sequential sampling procedure, which attains asymptotically the fixed width confidence interval. Such a procedure was discussed in Section 6.7.

We should remark in this connection that simulation results are less accurate than those of numerical integration. One should use, as far as possible, numerical integration rather than simulation.

To illustrate this point, suppose that we wish to compute numerically

![]()

Reduce I, as in (8.5.17), to

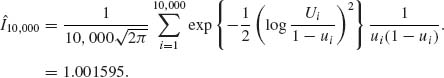

Simulation of N = 10, 000 random variables Ui ∼ R(0, 1) yields the approximation

On the other hand, a 10–point numerical integration, according to (8.5.29), yields

![]()

When θ is m–dimensional, m ≥ 2, numerical integration might become too difficult. In such cases, simulations might be the answer.

8.6 EMPIRICAL BAYES ESTIMATORS

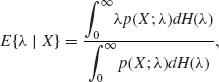

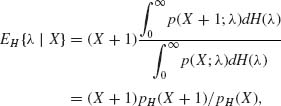

Empirical Bayes estimators were introduced by Robbins (1956) for cases of repetitive estimation under similar conditions, when Bayes estimators are desired but the statistician does not wish to make specific assumptions about the prior distribution. The following example illustrates this approach. Suppose that X has a Poisson distribution P(λ), and λ has some prior distribution H(λ), 0 < λ < ∞. The Bayes estimator of λ for the squared–error loss function is

where p(x;λ) denotes the p.d.f. of P(λ) at the point x. Since λ p(x;λ) = (x + 1)· p(x + 1;λ) for every λ and each x = 0, 1, … we can express the above Bayes estimator in the form

(8.6.1)

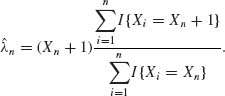

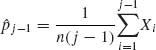

where pH(x) is the predictive p.d.f. at x. Obviously, in order to determine the posterior expectation we have to know the prior distribution H(λ). On the other hand, if the problem is repetitive in the sense that a sequence (X1, λ1), (X2, λ2), …, (Xn, λn), …, is generated independently so that λ1, λ2, … are i.i.d. having the same prior distribution H(λ), and X1, …, Xn are conditionally independent, given λ1, …, λn, then we consider the sequence of observable random variables X1, …, Xn, … as i.i.d. from the mixture of Poisson distribution with p.d.f. pH(j), j = 0, 1, 2, …. Thus, if on the nth epoch, we observe Xn = i0 we estimate, on the basis of all the data, the value of pH(i0 + 1)/pH(i0). A consistent estimator of pH(j), for any j = 0, 1, … is ![]() , where I{Xi = j} is the indicator function of {Xi = j}. This follows from the SLLN. Thus, a consistent estimator of the Bayes estimator EH{λ | Xn} is

, where I{Xi = j} is the indicator function of {Xi = j}. This follows from the SLLN. Thus, a consistent estimator of the Bayes estimator EH{λ | Xn} is

(8.6.2)

This estimator is independent of the unknown H(λ), and for large values of n is approximately equal to EH{λ | Xn}. The estimator ![]() n is called an empirical Bayes estimator. The question is whether the prior risks, under the true H(λ), of the estimators λn converge, as n → ∞, to the Bayes risk under H(λ). A general discussion of this issue with sufficient conditions for such convergence of the associated prior risks is given in the paper of Robbins (1964).

n is called an empirical Bayes estimator. The question is whether the prior risks, under the true H(λ), of the estimators λn converge, as n → ∞, to the Bayes risk under H(λ). A general discussion of this issue with sufficient conditions for such convergence of the associated prior risks is given in the paper of Robbins (1964).

Many papers were written on the application of the empirical Bayes estimation method to repetitive estimation problems in which it is difficult or impossible to specify the prior distribution exactly. We have to remark in this connection that the empirical Bayes estimators are only asymptotically optimal. We have an adaptive decision process which corrects itself and approaches the optimal decisions only when n grows. How fast does it approach the optimal decisions? It depends on the amount of a priori knowledge of the true prior distribution. The initial estimators may be far from the true Bayes estimators. A few studies have been conducted to estimate the rate of approach of the prior risks associated with the empirical Bayes decisions to the true Bayes risk. Lin (1974) considered the one parameter exponential family and the estimation of a function λ (θ) under squared–error loss. The true Bayes estimator is

![]()

and it is assumed that ![]() (x)fH(x) can be expressed in the form

(x)fH(x) can be expressed in the form ![]() , where

, where ![]() is the ith order derivative of fH(x) with respect to x. The empirical Bayes estimators considered are based on consistent estimators of the p.d.f. fH(x) and its derivatives. For the particular estimators suggested it is shown that the rate of approach is of the order 0(n–α) with 0 < α ≤ 1/3, where n is the number of observations.

is the ith order derivative of fH(x) with respect to x. The empirical Bayes estimators considered are based on consistent estimators of the p.d.f. fH(x) and its derivatives. For the particular estimators suggested it is shown that the rate of approach is of the order 0(n–α) with 0 < α ≤ 1/3, where n is the number of observations.

In Example 8.26, we show that if the form of the prior is known, the rate of approach becomes considerably faster. When the form of the prior distribution is known the estimators are called semi–empirical Bayes, or parametric empirical Bayes.

For further reading on the empirical Bayes method, see the book of Maritz (1970) and the papers of Casella (1985), Efron and Morris (1971, l972a, 1972b), and Susarla (1982).

The E–M algorithm discussed in Example 8.27 is a very important procedure for estimation and overcoming problems of missing values. The book by McLachlan and Krishnan (1997) provides the theory and many interesting examples.

PART II: EXAMPLES

Example 8.1. The experiment under consideration is to produce concrete under certain conditions of mixing the ingredients, temperature of the air, humidity, etc. Prior experience shows that concrete cubes manufactured in that manner will have a compressive strength X after 3 days of hardening, which has a log–normal distribution LN(μ, σ2). Furthermore, it is expected that 95% of such concrete cubes will have compressive strength in the range of 216–264 (kg/cm2).

According to our model, Y = log X ∼ N(μ, σ2). Taking the (natural) logarithms of the range limits, we expect most Y values to be within the interval (5.375, 5.580).

The conditional distribution of Y given (μ, σ2) is

![]()

Suppose that σ2 is fixed at σ2 = 0.001, and μ has a prior normal distribution μ ∼ N(μ0, τ2), then the predictive distribution of Y is N(μ0, σ2 + τ2). Substituting μ0 = 5.475, the predictive probability that Y ![]() (5.375, 5.580), if

(5.375, 5.580), if ![]() = 0.051 is 0.95. Thus, we choose τ2 = 0.0015 for the prior distribution of μ.

= 0.051 is 0.95. Thus, we choose τ2 = 0.0015 for the prior distribution of μ.

From this model of Y | μ, σ2 ∼ N(μ, σ2) and μ ∼ N(μ0, τ2). The bivariate distribution of (Y, μ) is

![]()

Hence, the conditional distribution of μ given {Y = y} is, as shown in Section 2.9,

![]()

The posterior distribution of μ, given {Y = y} is normal. ![]()

Example 8.2. (a) X1, X2, …, Xn given λ are conditionally i.i.d., having a Poisson distribution P(λ), i.e., ![]() = {P(λ), 0 < λ < ∞}.

= {P(λ), 0 < λ < ∞}.

Let ![]() = {G(Λ, α), 0 < α, Λ < ∞}, i.e.,

= {G(Λ, α), 0 < α, Λ < ∞}, i.e., ![]() is a family of prior gamma distributions for λ. The minimal sufficient statistics, given λ, is

is a family of prior gamma distributions for λ. The minimal sufficient statistics, given λ, is ![]() . Tn| λ ∼ P(λ n). Thus, the posterior p.d.f. of λ, given Tn, is

. Tn| λ ∼ P(λ n). Thus, the posterior p.d.f. of λ, given Tn, is

![]()

Hence, λ | Tn ∼ G(n + Λ, Tn + α). The posterior distribution belongs to ![]() .

.

(b) ![]() = {G(λ, α), 0 < λ < ∞}, α fixed.

= {G(λ, α), 0 < λ < ∞}, α fixed. ![]() = {G(Λ, ν), 0 < ν, Λ < ∞}.

= {G(Λ, ν), 0 < ν, Λ < ∞}.

![]()

Thus, λ | X ∼ G(X + Λ, ν + α). ![]()

Example 8.3. The following problem is often encountered in high technology industry.

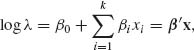

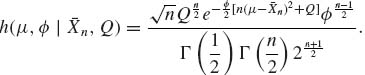

The number of soldering points on a typical printed circut board (PCB) is often very large. There is an automated soldering technology, called “wave soldering, ” which involves a large number of different factors (conditions) represented by variables X1, X2, …, Xk. Let J denote the number of faults in the soldering points on a PCB. One can model J as having conditional Poisson distribution with mean λ, which depends on the manufacturing conditions X1, …, Xk according to a log–linear relationship

where β′ = (β0, …, βk) and x = (1, x1, …, xk). β is generally an unknown parametric vector. In order to estimate β, one can design an experiment in which the values of the control variables X1, …, Xk are changed.

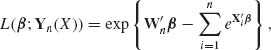

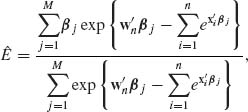

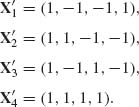

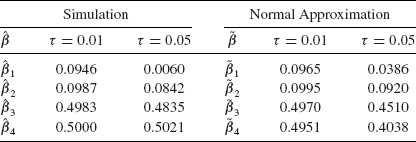

Let Ji be the number of observed faulty soldering points on a PCB, under control conditions given by xi (i = 1, …, N). The likelihood function of β, given J1, …, JN and x1, …, xN, is

where ![]() . If we ascribe β a prior multinormal distribution, i.e., β ∼ N(β0, V) then the posterior p.d.f. of β, given

. If we ascribe β a prior multinormal distribution, i.e., β ∼ N(β0, V) then the posterior p.d.f. of β, given ![]() N = (J1, …, JN, x1, …, xN), is

N = (J1, …, JN, x1, …, xN), is

It is very difficult to express analytically the proportionality factor, even in special cases, to make the RHS of h(β| ![]() N)a p.d.f.

N)a p.d.f. ![]()

Example 8.4. In this example, we derive the Jeffreys prior density for several models.

A. ![]() = {b(x;n, θ), 0 < θ < 1}.

= {b(x;n, θ), 0 < θ < 1}.

This is the family of binomial probability distributions. The Fisher information function is

![]()

Thus, the Jeffreys prior for θ is

![]()

In this case, the prior density is

![]()

This is a proper prior density. The posterior distribution of θ, given X, under the above prior is Beta ![]() .

.

B. ![]() = {N(μ, σ2);−∞ < μ < ∞, 0 < σ < ∞}.

= {N(μ, σ2);−∞ < μ < ∞, 0 < σ < ∞}.

The Fisher information matrix is given in (3.8.8). The determinant of this matrix is |I(μ, σ2)| = 1/2σ6. Thus, the Jeffreys prior for this model is

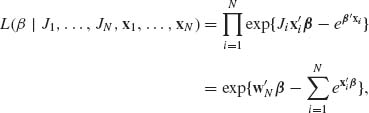

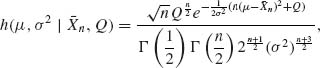

![]()

Using this improper prior density the posterior p.d.f. of (μ, σ2), given X1, …, Xn, is

where ![]() . The parameter

. The parameter ![]() is called the precision parameter. In terms of μ and

is called the precision parameter. In terms of μ and ![]() , the improper prior density is

, the improper prior density is

![]()

The posterior density of (μ, ![]() ) correspondingly is

) correspondingly is

![]()

Example 8.5. Consider a simple inventory system in which a certain commodity is stocked at the beginning of every day, according to a policy determined by the following considerations. The daily demand (in number of units) is a random variable X whose distribution belongs to a specified parametric family ![]() . Let X1, X2, … denote a sequence of i.i.d. random variables, whose common distribution F(x;θ) belongs to

. Let X1, X2, … denote a sequence of i.i.d. random variables, whose common distribution F(x;θ) belongs to ![]() and which represent the observed demand on consecutive days. The stock level at the beginning of each day, Sn, n = 1, 2, … can be adjusted by increasing or decreasing the available stock at the end of the previous day. We consider the following inventory cost function

and which represent the observed demand on consecutive days. The stock level at the beginning of each day, Sn, n = 1, 2, … can be adjusted by increasing or decreasing the available stock at the end of the previous day. We consider the following inventory cost function

![]()

where c, 0 < c < ∞, is the daily cost of holding a unit in stock and h, 0 < h < ∞ is the cost (or penalty) for a shortage of one unit. Here (s − x)+ = max(0, s − x) and (s − x)- = -min(0, s − x). If the distribution of X, F(x;θ) is known, then the expected cost R(S, θ) = Eθ {K(S, X)} is minimized by

![]()

where F-1(γ ;θ) is the γ–quantile of F(x;θ). If θ is unknown we cannot determine S0(θ). We show now a Bayesian approach to the determination of the stock levels. Let H(θ) be a specific prior distribution of θ. The prior expected daily cost is

![]()

or, since all the terms are nonnegative

The value of S which minimizes ρ (S, H) is similar to (8.1.27),

![]()

i.e., the h/(c + h) th–quantile of the predictive distribution FH(x).

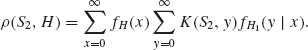

After observing the value x1 of X1, we convert the prior distribution H(θ) to a posterior distribution H1(θ | x1) and determine the predictive p.d.f. for the second day, namely

![]()

The expected cost for the second day is

Moreover, by the law of the iterated expectations

![]()

Hence,

The conditional expectation  is the posterior expected cost given X1 = x; or the predictive cost for the second day. The optimal choice of S2 given X1 = x is, therefore, the h/(c + h)–quantile of the predictive distribution FH1(y | x) i.e.,

is the posterior expected cost given X1 = x; or the predictive cost for the second day. The optimal choice of S2 given X1 = x is, therefore, the h/(c + h)–quantile of the predictive distribution FH1(y | x) i.e., ![]() . Since this function minimizes the predictive risk for every x, it minimizes ρ(S2, H). In the same manner, we prove that after n days, given Xn = (x1, …, xn) the optimal stock level for the beginning of the (n + 1)st day is the

. Since this function minimizes the predictive risk for every x, it minimizes ρ(S2, H). In the same manner, we prove that after n days, given Xn = (x1, …, xn) the optimal stock level for the beginning of the (n + 1)st day is the ![]() –quantile of the predictive distribution of Xn + 1, given Xn = xn, i.e.,

–quantile of the predictive distribution of Xn + 1, given Xn = xn, i.e., ![]() , where the predictive p.d.f. of Xn + 1, given Xn = x is

, where the predictive p.d.f. of Xn + 1, given Xn = x is

![]()

and h(θ | x) is the posterior p.d.f. of θ given Xn = x. The optimal stock levels are determined sequentially for each day on the basis of the demand of the previous days. Such a procedure is called an adaptive procedure. In particular, if X1, X2, … is a sequence of i.i.d. Poisson random variables (r.v.s), P(θ) and if the prior distribution H(θ) is the gamma distribution, ![]() , the posterior distribution of θ after n observations is the gamma distribution

, the posterior distribution of θ after n observations is the gamma distribution ![]() , where

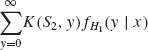

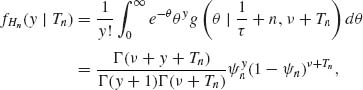

, where ![]() . Let

. Let ![]() denote the p.d.f. of this posterior distribution. The predictive distribution of Xn + 1 given Xn, which actually depends only on Tn, is

denote the p.d.f. of this posterior distribution. The predictive distribution of Xn + 1 given Xn, which actually depends only on Tn, is

where ![]() n = τ/(1 + (n + 1)τ). This is the p.d.f. of the negative binomial NB(

n = τ/(1 + (n + 1)τ). This is the p.d.f. of the negative binomial NB(![]() n, ν + Tn). It is interesting that in the present case the predictive distribution belongs to the family of the negative–binomial distributions for all n = 1, 2, …. We can also include the case of n = 0 by defining T0 = 0. What changes from one day to another are the parameters (

n, ν + Tn). It is interesting that in the present case the predictive distribution belongs to the family of the negative–binomial distributions for all n = 1, 2, …. We can also include the case of n = 0 by defining T0 = 0. What changes from one day to another are the parameters (![]() n, ν + Tn). Thus, the optimal stock level at the beginning of the (n + 1)st day is the h/(c + h)–quantile of the NB(

n, ν + Tn). Thus, the optimal stock level at the beginning of the (n + 1)st day is the h/(c + h)–quantile of the NB(![]() n, ν + Tn).

n, ν + Tn). ![]()

Example 8.6. Consider the testing problem connected with the problem of detecting disturbances in a manufacturing process. Suppose that the quality of a product is presented by a random variable X having a normal distribution N(θ, 1). When the manufacturing process is under control the value of θ should be θ0. Every hour an observation is taken on a product chosen at random from the process. Consider the situation after n hours. Let X1, …, Xn be independent random variables representing the n observations. It is suspected that after k hours of operation 1 < k < n a malfunctioning occurred and the expected value θ shifted to a value θ1 greater than θ0. The loss due to such a shift is (θ1 – θ0) [$] per hour. If a shift really occurred the process should be stopped and rectified. On the other hand, if a shift has not occurred and the process is stopped a loss of K [$] is charged. The prior probability that the shift occurred is ![]() . We present here the Bayes test of the two hypotheses

. We present here the Bayes test of the two hypotheses

![]()

against

![]()

for a specified k, 1 ≤ k ≤ n − 1; which is performed after the nth observation.

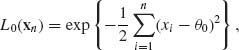

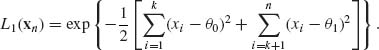

The likelihood functions under H0 and under H1 are, respectively, when Xn = xn

and

Thus, the posterior probability that H0 is true is

![]()

where π = 1 –![]() . The ratio of prior risks is in the present case K π/((1-π)(n − k)(θ1 – θ0)). The Bayes test implies that H0 should be rejected if

. The ratio of prior risks is in the present case K π/((1-π)(n − k)(θ1 – θ0)). The Bayes test implies that H0 should be rejected if

![]()

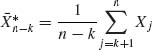

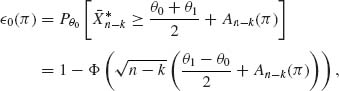

where  .

.

The Bayes (minimal prior) risk associated with this test is

![]()

where ![]() 0(π) and

0(π) and ![]() 1(π) are the error probabilities of rejecting H0 or H1 when they are true. These error probabilities are given by

1(π) are the error probabilities of rejecting H0 or H1 when they are true. These error probabilities are given by

where Φ(z) is the standard normal integral and

![]()

Similarly,

![]()

The function An − k(π) is monotone increasing in π and ![]() . Accordingly,

. Accordingly, ![]() 0(0) = 1,

0(0) = 1, ![]() 1(0) = 0 and

1(0) = 0 and ![]() 0(1) = 0,

0(1) = 0, ![]() 1(1) = 1.

1(1) = 1. ![]()

Example 8.7. Consider the detection problem of Example 8.6 but now the point of shift k is unknown. If θ0 and θ1 are known then we have a problem of testing the simple hypothesis H0 (of Example 8.6) against the composite hypothesis

![]()

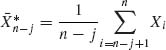

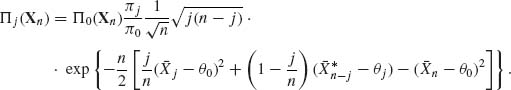

Let π0 be the prior probability of H0 and πj, j = 1, …, n − 1, the prior probabilities under H1 that {k = j}. The posterior probability of H0 is then

where  and

and  . The posterior probability of {k = j} is, for j = 1, …, n − 1,

. The posterior probability of {k = j} is, for j = 1, …, n − 1,

Let Ri(Xn) (i = 0, 1) denote the posterior risk associated with accepting Hi. These functions are given by

and

![]()

H0 is rejected if R1(Xn) ≤ R0(Xn), or when

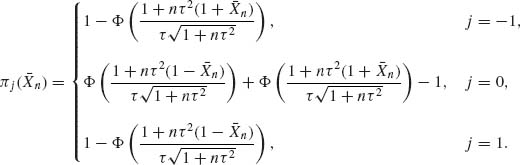

![]()