Chapter 1

VR and AR

From the humble beginnings of the Google Cardboard project to the powerful Daydream and ARCore systems, Google’s virtual and augmented reality platforms form the heart of its revolutionary Immersive Computing vision. This chapter introduces these flagship systems not only from a technical and product standpoint but also in terms of how they fit into an important historical continuum of innovation and dialogue around computer simulation and its evolution.

Immersive Computing

In 2014, Google released the Cardboard VR headset, shown in Figure 1.1. Made from a pizza box and a couple of cheap hardware-store lenses, it required only the most basic and readily available components. Google’s cardboard construction set the tone for its approach to VR and AR: it was affordable, simple to use, and worked on a mobile phone. By essentially democratizing virtual reality, the Cardboard headset proudly trumpeted Google’s mission of making all the world’s information accessible to everyone. This disarmingly simple but elegant creation helped reignite public interest in VR and kick-started a surge of technological innovation and industry investment at all levels of the product pipeline, from software development through to hardware manufacturing. In doing so, this whimsical, almost childlike object set the stage for Google’s Daydream VR platform.

VR and AR

Although the terms virtual reality (VR) and augmented reality (AR) are frequently categorized as distinct and competing technologies, at Google they are considered to be of equal importance. According to Clay Bavor, Google’s VP of Virtual and Augmented Reality, AR and VR are no more than “labels for points on what we call the spectrum of Immersive Computing…VR transports you somewhere else; while AR leaves you where you are but brings objects and information to you...[and] AR and VR both give you superpowers.”1

It is perhaps not surprising that a technology capable of investing the user with superpowers has had a tumultuous history, marked by notable successes and singular disappointments. In this chapter, as a preliminary to introducing Google’s main AR and VR platforms, Daydream and ARCore, we discuss some of the historical thinking about AR and VR and the early inventions that prefigured today’s excitement around immersive computing. But first, let’s expand on Bavor’s definitions of what exactly is meant by VR and AR.

Virtual Reality

VR takes you somewhere else. VR is a computer-generated simulation of an environment, generally experienced through a headset, that the user perceives as if it were genuinely taking place around them in real time.

This book discusses VR through the lens of Google’s Daydream VR platform and products. The platform includes both mobile phone-based VR headsets with handheld controllers and self-contained units with inside-out tracking.

Inside-Out Tracking

Inside-out tracking refers to placing the tracking sensors on the headset itself. Looking outward from the headset, these sensors track the user’s movement through the world. Google Daydream’s inside-out tracking technology is known as WorldSense and is discussed later in this chapter.

Augmented Reality

AR brings things to you. Augmented reality allows us to add a new layer of contextual meaning over the world we see, in real time. It is an enhanced version of reality where real-world environments are overlaid with digital content in such a way that this content credibly appears to be present in the viewer’s environment.

In AR, the digitally overlaid objects are geo-locked to the real-world environment, meaning they remain stationary at that physical location even as the user moves their phone around. Google’s ARCore brings this ability to Android phones through its accurate and robust real-world positional tracking system.
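The idea behind geo-locking can be sketched in a few lines. The minimal Python sketch below assumes a simplified camera pose made of a position plus a single yaw angle; a real tracker such as ARCore reports full 3D orientation through its own API, which this sketch does not use. A virtual object keeps a fixed world-space position, and each frame the renderer re-expresses that fixed point in the camera’s current frame of reference. The object’s coordinates relative to the camera change as the phone moves, but its world position never does. The `Pose` class and both functions are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Hypothetical, simplified camera pose: position in metres plus yaw
    (rotation in radians about the vertical y axis). Real trackers report
    full 3D orientation; yaw-only keeps the sketch short."""
    x: float
    y: float
    z: float
    yaw: float


def world_to_camera(point, pose):
    """Express a fixed world-space point in the camera's local frame:
    subtract the camera position, then undo the camera's yaw."""
    dx, dy, dz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    c, s = math.cos(-pose.yaw), math.sin(-pose.yaw)
    # Rotation about the y axis by -yaw.
    return (c * dx + s * dz, dy, -s * dx + c * dz)


def camera_to_world(local, pose):
    """Inverse of world_to_camera: reapply the yaw, then add the position."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    lx, ly, lz = local
    return (c * lx + s * lz + pose.x, ly + pose.y, -s * lx + c * lz + pose.z)


if __name__ == "__main__":
    # Assumes the camera looks down the -z axis (an OpenGL-style convention),
    # so this anchor sits 2 m in front of the starting position.
    anchor = (0.0, 0.0, -2.0)
    poses = [
        Pose(0.0, 0.0, 0.0, 0.0),          # starting position
        Pose(0.5, 0.0, 0.0, 0.0),          # user steps 0.5 m sideways
        Pose(0.5, 0.0, 0.0, math.pi / 2),  # ...then turns 90 degrees
    ]
    for pose in poses:
        local = world_to_camera(anchor, pose)
        # The view-relative position changes with every pose, but mapping it
        # back through the same pose always recovers the unchanged anchor.
        print(local, camera_to_world(local, pose))
```

Keeping the anchor in world coordinates, rather than in camera coordinates, is what makes the object appear to stay put: only the view transform is recomputed as the user moves.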

Media Archaeology

To help you appreciate these two technologies more deeply and understand more fully how they came about, this section frames them within a historical context. Digital media’s intriguing and often overlooked past, particularly in the period leading up to the invention of cinema, is essential knowledge for anyone seriously interested in working with or designing the future of immersive computing.

The history of AR and VR involves the evolution and the convergence of several initially unconnected media technologies that stretch back much further than does the history of modern computing.

Equally importantly, VR and AR sit within a long history of philosophical dialogue around representations of reality and the forms and meanings that such representations engender. To cite a well-known example: in Plato’s “Allegory of the Cave” (The Republic, Book VII, c. 380 BC), the chained prisoners strain to make meaning from the flickering shadows on the cave’s back wall, unaware that behind them is the clear light of day. Plato’s allegory, most often used as a metaphor for the cinematic experience itself, reveals a profound engagement with the contrast between reality as perceived and reality as it truly is, as well as speculation on how to obtain the knowledge needed to recognize the difference between the two. This brings us back to the current world of immersive computing, in which it is possible to pose these same historically profound questions about what we see and what meaning we might attribute to such vistas.

Continuing this centuries-long dialogue, inventors have tinkered with elaborate optical contraptions in an attempt to replicate the images and striking forms of the world around them. By creating illusions that imitated movement, light, and color, these proto-cinematic inventors laid down important technological stepping stones that gave rise to the moving image of cinema, computer-generated imagery (CGI), and, eventually, the conditions for VR and AR.

What follows is a brief chronology of the work that led to the current environment of AR and VR, focusing on three central historical strands: pre-cinema animation devices, which eventually led to the moving image of cinema and the twentieth-century film industry as we know it; stereo photography, an early experimental offshoot of classic photography; and the progression of computing from theoretical engines and punch card tabulation systems to modern machines capable of rendering 3D graphics. It is by no means an exhaustive timeline; rather, it highlights some of the key moments and the richness of media history, and it can also serve as a starting point for readers who want to research further the intriguing story that led to the advent of Immersive Computing.

Stereoptics

Stereo photography emerged shortly after the invention of photography (Daguerre, 1839); however, the mathematical theories that underpin stereo perception predate photography by many centuries. Depth perception was first described by Euclid around 280 BC, when he observed that each eye sees the same object from a slightly different angle and that the two views combine to produce a sense of depth.

In the fifteenth century, Leonardo da Vinci was one of the first great artists to truly understand and apply the principles of perspective, using shading and scale to create the illusion of depth, size, and distance. His paintings The Last Supper and Annunciation are exemplars of the technique.

Around 1600, building on Euclid’s theory of stereo imagery and depth perception, the Italian scientist and philosopher Giovanni Battista della Porta made one of the first attempts at stereo imagery by placing side by side two accurately hand-drawn images, each drawn from a slightly different perspective. Throughout the 1600s, the astronomers Galileo and then Kepler improved the theory, practice, and science of telescopes, lenses, and optics. Kepler even provided a precise explanation of human binocular vision in his treatise Dioptrice (1611).

Stereo imagery as we know it today did not take off until the advent of photography. Charles Wheatstone was the first person to invent a physical device specifically for viewing stereo imagery. In 1838, in Britain, at the Royal Scottish Society of the Arts, he presented a device he described as a reflecting mirror stereoscope, which used two angled mirrors to reflect a pair of images placed to the left and right, one into each eye, giving the impression of a single stereo image, as shown in Figure 1.2. In 1849, David Brewster took Wheatstone’s design and improved on it by replacing the mirrors with prisms. These two inventions became the model for stereoscopic viewing devices for decades to come, and today’s headsets remain indebted to them.

Numerous important advancements in the engineering and design of optics for photography and stereo photography were made throughout the nineteenth century and into the twentieth. As so often, expansion in such technology was driven by the exigencies of war: World War I, and especially World War II, prompted a huge expansion in the use of stereoscopic aerial photography for reconnaissance, as each side sought to gain an advantage over its opponents.

Of particular note, given its enormous place in popular culture, is William Gruber’s View-Master company, formed in 1940.

Most significantly for later VR and AR, the View-Master was the first mass-produced headset for viewing stereo imagery to capture the imagination of mainstream culture. Since its launch, millions of View-Masters have been sold around the world, and the device remains instantly recognizable today. Along with the View-Master, the other culturally important 3D phenomenon of the twentieth century was the disposable pair of anaglyph 3D glasses, with its iconic red and blue lenses, made famous in the classic setting of the 1950s drive-in.

Pre-Cinema Animation

Around the same time that stereo photography was being developed, innovations and an understanding of the moving image were being advanced through the invention of various proto-cinema devices.

The animation devices from this period were integral in forming the underlying engineering and motion theory that eventually led to cinema and influenced modern computer monitors and mobile phone screens. Concepts such as looping animation and the persistence of vision, together with technical achievements such as shutters and systems of projection, formed an integral part of the underpinnings of cinema and the moving image.

Before the optical toys and pre-cinema animation devices of the early nineteenth century arrived, a whole range of devices offered audiences what we would now see as proto-cinematic experiences. The phantasmagoria was a type of special-effects theatre that used magic lantern projections, optical illusions, shadows, smoke, sound effects, and even scents to create what could be described as an early type of virtual reality. It also offered audiences an early form of the collective, cinematic escapism that would become familiar in the mid-twentieth century. Then, as with VR and AR today, audiences brought to the experience a yearning to escape the humdrum of the quotidian into an enthralling imaginary world.

Magic lanterns, such as those used in phantasmagoria performances, were a common toy throughout the eighteenth century, projecting spooky optical illusions onto walls. By the nineteenth century, other experimental optical devices began to appear. The thaumatrope (John Ayrton Paris, 1825), a popular Georgian toy, is a notable example of a primitive animation device. Made up of a disk with a different image on each side and strings attached to opposite edges, the thaumatrope, when twirled very fast, blends the two images together into one. As a two-frame animation, the thaumatrope represents the smallest unit of information needed to create an animation effect. Although classic thaumatropes never represented motion, only combined still images, they are an important precursor to the subsequent generation of animation devices.

One important advancement in the history of the moving image to capture people’s imagination was the phenakistiscope: a circular disk with animated frames drawn around its circumference that produced the illusion of movement when spun. Its disks often contained titillating, bizarre, and sometimes racist imagery that would be considered offensive by today’s standards. The phenakistiscope brought about two foundational technical and conceptual breakthroughs. The first was looping animation: repeating cycles of motion that give the effect of continuous movement. The second was a primitive shutter system. Made from a separate disk that sat on top of the animated disk and used slits to obscure it, the shutter allowed only a small section of the image to be seen at any one time. Through the persistence of vision, this tricked the brain into piecing the successive images together into a single continuous animation.

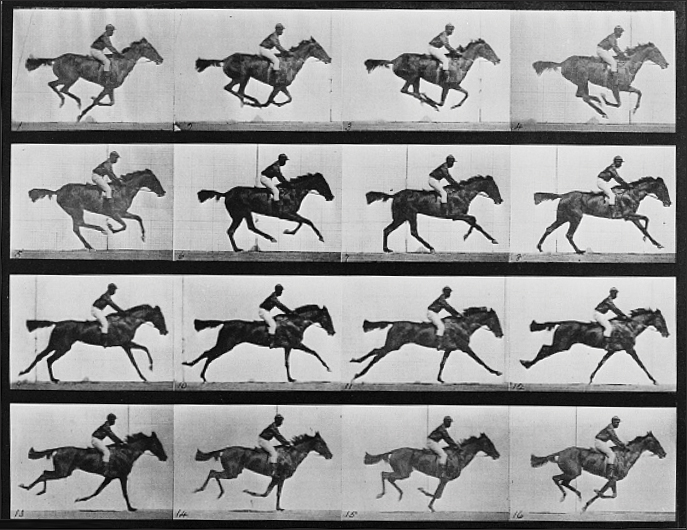

The phenakistiscope gave way to the better-known zoetrope, a spinning cylinder with a strip of animated frames attached to the inside. Much like the phenakistiscope, it was spun and viewed through slits in its side, producing the illusion of movement. Many other analogous devices continued to be developed throughout the latter half of the nineteenth century, including the flip book, or kineograph (1868), the praxinoscope (1877), and the zoopraxiscope, developed by Eadweard Muybridge in 1879 (see Figure 1.3).

Muybridge is also recognized in the history of pre-cinema as the creator of chronophotography (see Figure 1.4). Although seemingly a step back from the motion-based animation devices of the early nineteenth century, Muybridge’s static photographic studies brought a new temporal aspect to the production of the moving image. By capturing discrete units of movement and time and then laying them out in a linear montage, he challenged the viewer to visually piece together a kind of cerebral animation.

This temporal montage, with its particular focus on human and animal forms, represented a new progression in the way that time, movement, and subject matter were photographically depicted. Viewed with hindsight, Muybridge’s photographic studies are essentially strips of film laid out side by side, one small step away from the cinematic moving image.

All of these devices eventually led to the creation of the Lumière cinematograph in 1895, the well-known precursor to the modern film projector (and camera).

Computing

The purpose of computer technology has always been to enable the efficient storage and retrieval of information. From the traditional abacus to the giant mainframes and the modern personal computer (PC), the history of computing has been a story of finding ever more efficient ways to read and write data.

In the 1830s, the decade that also saw the invention of the phenakistiscope and the first stereoscopic viewer, Charles Babbage, inventor and mathematician, described a future machine that he called the Analytical Engine. The Analytical Engine was designed to compute numbers, with its instructions and data supplied on punch cards. Although Babbage’s concept was never realized in practice, his is the first modern description of a computational machine specifically designed to process and store data. It was not until the late nineteenth century that Herman Hollerith invented the first punch card-based electromechanical tabulating machine. The U.S. government used his invention to process the mass of data generated by the 1890 census. Hollerith’s machine was able to tabulate punch-card data on every person counted in the country, drastically simplifying the mammoth task of handling census data that previously had to be processed by hand. The government’s use of this machine marked a turning point for the adoption of computing machines in American business, and in the following decades, electromechanical tabulators increasingly became the norm in large data-driven organizations. In 1924, the company that had grown out of Hollerith’s business was renamed the International Business Machines Corporation, now known as IBM.

In the twentieth century, we see an interesting coalescence of media5 with the design of the theoretical universal Turing machine. Devised by Alan Turing, who famously helped break the Enigma code in World War II, the machine worked by reading and writing symbols on a long tape, much as film runs through a classic film projector, rather than by using punch cards. Decades earlier, the Lumière brothers had unwittingly developed a system for efficiently storing and retrieving large amounts of data as part of their attempt to achieve the holy grail of continuous, long-form animation. A number of media theorists have noted the parallels between data storage in the universal Turing machine (and early mainframe computers) and the film projectors used in cinemas, and have observed that a convergence of technologies was taking place in these two seemingly disparate fields.

It is interesting to consider how the interactive, mobile, and looping animation devices of pre-cinema times presaged the digital technology of today. In 1962, Morton Heilig created a proto-VR machine, the Sensorama, which was a hybrid between an early arcade machine and a sideshow attraction (see https://www.daydreamvrbook.com/images/sensorama.png). Echoing the phantasmagoria of the eighteenth century, it used stereoscopic imagery, sound, vibrations, and even a sensation of wind, with the promise to “take you into another world”; its content recreated a motorbike ride through then-contemporary New York City.

This theme of similarity and amalgamation among disparate technological modalities can be seen throughout modern media history and continues to appear today. In the mid-twentieth century, innovations in the fields of animation, stereo photography, and computing began a convergence that would eventually give rise to digital media and today’s virtual reality.

Twentieth Century VR

The first modern VR headset proper (see https://www.daydreamvrbook.com/images/sutherland.png) was built in 1968 by Ivan Sutherland at the University of Utah. It is often given the sobriquet the Sword of Damocles because of the bulky rig that hung from a pole above the user’s head. However, Ivan Sutherland has pointed out6 that his team gave the whole device the mundane name of the head-mounted display; the Sword of Damocles was simply the mechanical head-position sensor, which looks like a pole hanging from the ceiling (in the background of the left picture, see https://www.daydreamvrbook.com/images/sutherland.png). Although frequently misapplied, the name is still a fitting reminder of the moral of Cicero’s parable of Damocles: that the possession of power brings with it responsibility and the need for restraint, a lesson of which ethical VR and AR creators today will be acutely aware.

Throughout the twentieth century, computer hardware and graphics software became exponentially more powerful in both the storage and retrieval of information. Computers ventured from mainframes, punch cards, and business calculations into the realms of science, computer graphics, and design. In 1972, also at the University of Utah, Edwin Catmull, in a seminal moment in the history of CGI, created one of the earliest 3D computer-generated animations, a rendering of his own hand. Catmull, who went on to become the president of Pixar, made numerous other integral contributions to the field of computer graphics; among the most noteworthy, perhaps, was the invention of texture mapping.

Although important advances in VR technology continued throughout the latter half of the twentieth century, VR became less prominent in the public sphere. Within the industry, however, development continued, pushed forward in gaming, entertainment, and theme parks by companies such as Disney and Nintendo.

Uncanny Valley

The uncanny valley is a term commonly used in computer graphics. It describes the phenomenon whereby, as simulations of human facial features move closer and closer to realism, they suddenly take on an unsettling, even grotesque quality. Although the term traditionally relates to facial features and the human form, it is frequently used to denote anything in 3D, VR, or AR that looks awkwardly hyper-real.

Several factors hindered VR technology in the late ’90s and early 2000s. VR rigs were too cumbersome to appeal to the general public, and their cost remained prohibitive. Many mainstream computer users had also lost interest in clunky, poorly designed, engineer-driven technology: by the last decade of the century, the technology of the ’80s had given way to sleek, simple forms that were portable, personal, mobile, and designed to appeal to discerning consumers. The uncanny valley of awkward 3D worlds was displaced by Web 2.0, social media, and the rise of mobile technology.

The conditions needed to win back consumer interest, however, were not fully in place until the second decade of the twenty-first century. By then, mobile technology had become cheaper, more powerful, and packed with enough sensors for any DIY enthusiast armed only with a mobile phone and a cardboard box to build a simple VR rig of their own. After 200 years of imbricated but parallel trajectories, photography, animation, and computing had been brought together in the novel invention of the mobile VR headset. This opened the door to a new wave of interest in virtual reality, which in turn set the stage for Google’s immersive computing platforms.

Google VR and AR

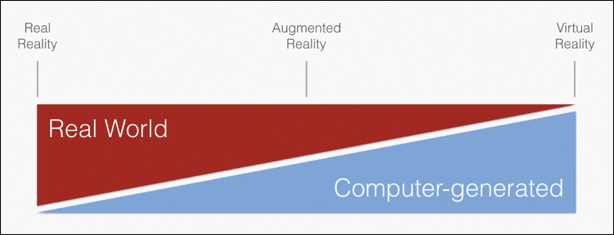

At Google’s 2017 Developer Conference, various speakers from the Daydream team put forth Google’s over-arching philosophy toward AR and VR and laid out a plan for the future of Google’s Immersive Computing platforms, the foundations of which are Daydream and ARCore. As discussed earlier, Google does not view augmented reality and virtual reality as separate entities, but instead as two equally important points located on the spectrum of immersive computing. On one end of the spectrum is the real world, and on the other end is fully immersive virtual reality; somewhere in the middle is augmented reality (see Figure 1.5).

Clay Bavor has described Google’s immersive computing ecosystem as being based on two core areas of focus: platforms and building blocks. The platforms are Daydream VR and ARCore, each of which hosts products and services that are intrinsically embedded in its own ecosystem. The building blocks are Google software applications, some of which sit on top of these platforms, while others exist to push the broader VR and AR ecosystems forward. Let’s look at Google’s two main immersive computing platforms.

Daydream VR

Daydream was released in November 2016 with the goal of bringing high-performance smartphone VR to Android, as shown in Figure 1.6. The original platform had three core elements:

Hardware component, including the Daydream View headset and controller

Daydream-ready spec for phones, including high-performance VR mode in Android

Actual Daydream VR experience, made up of the Daydream Home World and all the VR apps and games built on top of the platform

In 2017, a major update to the Daydream View headset was released, giving it a more comfortable fit and larger, custom Fresnel lenses that enable a wider field of view.

In 2018, Lenovo added the Mirage Solo headset to the Daydream ecosystem: an innovative, untethered, standalone headset with PC-quality tracking (see Figure 1.7). The Lenovo Mirage Solo continues Daydream’s goal of creating diverse, practical, and entertaining experiences driven by innovative hardware. The headset uses WorldSense tracking, with everything integrated into one unit. The Mirage Solo is one of the world’s first commercially available, high-quality, untethered VR headsets. Being a standalone unit changes the user experience of the product, allowing “frictionless” entry to VR by simply slipping on the headset. The use of inside-out positional tracking is a major evolution, enabling users to move around freely in VR space rather than being limited to rotational, headlocked tracking.

WorldSense

With its roots in Google’s Tango technology, WorldSense uses cameras and motion sensors built into the VR headset to build up an understanding of the user’s physical space, allowing users to move around in VR with a full, free range of motion rather than just headlocked rotation.

WorldSense on the Daydream headset uses two wide-angle cameras to identify movement of objects in the room as users move their heads. It tracks high-contrast points in the room such as the corner of a table, paintings hanging on the wall, or objects placed on the floor.

By tracking these objects and points over time, WorldSense gains a sense of its position in the room. This information is then tightly coupled with the motion sensor information on the device to provide low-latency positional tracking information that is handed off to games and apps in less than 5 milliseconds, achieving an overall screen refresh latency of approximately 20 milliseconds.
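This chapter does not describe how WorldSense fuses its camera tracking with the device’s motion sensors, and production systems generally rely on more sophisticated visual-inertial estimators. Purely to illustrate why the two sources are combined, the Python sketch below swaps in a simple one-dimensional complementary filter. Fast motion-sensor (IMU) updates are integrated every tick, which keeps latency low but lets small errors accumulate into drift, while slower camera-based position fixes periodically pull the estimate back. The function name, the `alpha` blending weight, and the sample rates are hypothetical, and the sketch does not model the 5 ms or 20 ms figures quoted above.

```python
def fuse_positions(imu_velocities, camera_fixes, dt, alpha=0.8):
    """Hypothetical 1D complementary filter (an illustration, not WorldSense).

    imu_velocities: velocity readings (m/s), one per IMU tick.
    camera_fixes:   dict mapping tick index -> camera-derived position (m);
                    ticks with no camera fix are simply absent.
    dt:             seconds between IMU ticks.
    alpha:          weight kept on the IMU prediction when a fix arrives
                    (0 < alpha < 1); lower values trust the camera more.
    Returns the fused position estimate after every tick.
    """
    position = 0.0
    estimates = []
    for tick, velocity in enumerate(imu_velocities):
        # Predict: integrate the motion sensor every tick (fast, but drifts).
        position += velocity * dt
        # Correct: blend in a camera fix whenever one is available.
        if tick in camera_fixes:
            position = alpha * position + (1.0 - alpha) * camera_fixes[tick]
        estimates.append(position)
    return estimates


if __name__ == "__main__":
    # A stationary user whose IMU has a constant 0.1 m/s bias, sampled at
    # 100 Hz for 10 s; the camera reports the true position (0 m) at 10 Hz.
    ticks = 1000
    biased = [0.1] * ticks
    fixes = {t: 0.0 for t in range(0, ticks, 10)}
    imu_only = fuse_positions(biased, {}, dt=0.01)
    fused = fuse_positions(biased, fixes, dt=0.01)
    print(f"IMU only: {imu_only[-1]:.3f} m drift, fused: {fused[-1]:.3f} m")
```

Without camera fixes the error grows steadily with time; with them it stays bounded. Lowering `alpha` would pull the estimate toward the camera more aggressively, at the cost of passing more of the camera’s own noise through to the output.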

ARCore

Announced in 2017, ARCore is Google’s mobile phone-based augmented reality platform. As of this writing, it is available as a developer preview for the creation of AR games and apps on Android. ARCore works by using three key technologies in conjunction with the Android phone’s camera:

Motion tracking: Using the phone’s camera and motion sensors, ARCore builds up a sense of the user’s position in the world.

Environmental understanding: Real-time image analysis from the camera allows the system to detect surfaces for the placement and geo-locking of digital artifacts.

Light estimation: ARCore is able to approximate the lighting of the current environment and dynamically apply these values to digitally overlaid objects in the scene.
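ARCore computes its own light estimate internally and exposes it through its API, in ways this chapter does not describe. As a rough illustration of the idea only, the Python sketch below assumes a camera frame given as a list of 8-bit RGB tuples, estimates the scene’s average brightness with the Rec. 709 luma weights, and scales a virtual object’s base color so it appears darker in a dim room and brighter in a well-lit one. The function names and the `reference` brightness are hypothetical.

```python
def estimate_intensity(pixels):
    """Average brightness (0..1) of a camera frame given as (r, g, b) tuples
    with 8-bit channels. Uses Rec. 709 luma weights and, for simplicity,
    treats the gamma-encoded 8-bit values as if they were linear."""
    if not pixels:
        raise ValueError("frame contains no pixels")
    total = 0.0
    for r, g, b in pixels:
        total += (0.2126 * r + 0.7152 * g + 0.0722 * b) / 255.0
    return total / len(pixels)


def shade(base_color, intensity, reference=0.5):
    """Scale an object's base RGB color by the ratio of the measured scene
    intensity to a hypothetical reference intensity at which the object is
    shown unmodified. Channels are clamped to the 0..255 range."""
    scale = intensity / reference
    return tuple(max(0, min(255, round(c * scale))) for c in base_color)


if __name__ == "__main__":
    dim_room = [(64, 64, 64)] * 100
    bright_room = [(230, 230, 220)] * 100
    orange = (200, 100, 50)
    for name, frame in (("dim", dim_room), ("bright", bright_room)):
        level = estimate_intensity(frame)
        print(name, round(level, 3), shade(orange, level))
```

A single global brightness value is the crudest form of light estimation; it ignores light direction and color temperature, which is why the virtual object can still look out of place even when its overall brightness matches the room.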

Tango

Google’s Tango project is generally considered to be the genesis of all of Google’s inside-out tracking and mobile AR technology. Tango was Google’s original mobile AR platform, running on dedicated hardware devices that contained a wide-angle, feature-tracking camera and a depth sensor. This multi-year investment in AR by Google led to the launch of two consumer phones in 2016 and 2017: the Lenovo Phab 2 Pro and the Asus ZenFone AR. Tango was well suited to large-scale indoor installations and enabled a number of location-based museum experiences, including at the Detroit Institute of Arts and the ArtScience Museum in Singapore.

By being able to place 3D objects accurately enough in the environment to seem realistic, ARCore adds a new informational layer of context over the world that is set to be transformational for education, gaming, and entertainment, enabling us to interact with digital content in a physical way. ARCore enables developers not just to add a layer of context over the world, but to interact with a layer of shared meaning between people.

Summary

This chapter introduced Google’s and the industry’s vision for AR and VR through Google’s two main immersive computing platforms: Daydream and ARCore. Together they build on a long and interwoven history of media technology and philosophical discussion, and they promise to be inclusive, diverse, and accessible technologies, echoing Google’s mission. In subsequent chapters, you will deepen your knowledge of these platforms by delving into the practicalities of building games and apps in VR and AR.

1. Google I/O 2016, Clay Bavor, Daydream VR keynote

2. Image via Library of Congress Digital Collection. http://www.loc.gov/pictures/resource/stereo.1s02655/

3. Image via Library of Congress Digital Collection. https://www.loc.gov/pictures/related/?va=exact&sp=3&st=gallery&pk=00650865&sb=call_number&sg=true&op=EQUAL

4. Image via Library of Congress Digital Collection. http://www.loc.gov/pictures/resource/cph.3b00681/

5. Manovich, Lev (2002-02-22). The Language of New Media (Leonardo Book Series) (Kindle Locations 760–765). The MIT Press. Kindle Edition.

6. Email communication between the author and Ivan Sutherland.