Example 7.5 Iteration of particle filter.

Consider the 1-D case, where $x_t$ and $s_t$ are scalar real numbers. Suppose that at time t, $x_t$ has a displacement $v_t$ and is subject to zero-mean Gaussian noise e, that is, $x_{t+1} = x_t + v_t + e$ with $e \sim N(0, \sigma_1^2)$. Suppose further that the observation $z_t$ has a Gaussian distribution centered at $x_t$ with variance $\sigma_2^2$. The particle filter first makes N "guesses" to obtain $S_1 = \{s_{11}, s_{12}, \dots, s_{1N}\}$.

Next, $S_2$ is generated. A particle $s_j$ is selected from $S_1$ (irrespective of the value of $w_{1i}$), and $s_{21} = s_j + v_1 + e$, where $e \sim N(0, \sigma_1^2)$. This process is repeated N times to generate the particles at time t = 2. The weights are then $w_{2i} \propto \exp[-(s_{2i} - z_2)^2 / (2\sigma_2^2)]$. After the $w_{2i}$ are renormalized, the iteration ends. The resulting estimate of $x_2$ is

$$
\hat{x}_2 = \sum_{i=1}^{N} w_{2i}\, s_{2i}
$$
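
One iteration of this example can be sketched in Python/NumPy as follows. This is a minimal sketch under stated assumptions: the function name `particle_filter_step`, the numeric values, and the N(0, 1) initial guesses are hypothetical, and "irrespective of the weights" is interpreted as uniform random selection with replacement.

```python
import numpy as np

def particle_filter_step(particles, v, z, sigma_e, sigma_z, rng):
    """One iteration of the 1-D particle filter of Example 7.5 (sketch).

    particles : the N particles at time t
    v         : known displacement v_t
    z         : observation z_{t+1}
    sigma_e   : standard deviation of the process noise e
    sigma_z   : standard deviation of the observation noise
    """
    n = len(particles)
    # Select N particles from the previous set uniformly, ignoring their weights
    picked = rng.choice(particles, size=n, replace=True)
    # Propagate each one with the motion model s = s_j + v_t + e
    new_particles = picked + v + rng.normal(0.0, sigma_e, size=n)
    # Gaussian likelihood of the observation; subtract the max log-weight for stability
    log_w = -((new_particles - z) ** 2) / (2.0 * sigma_z ** 2)
    w = np.exp(log_w - log_w.max())
    w /= w.sum()                                  # renormalize so the weights sum to 1
    estimate = float(np.sum(w * new_particles))   # weighted-mean estimate of x_{t+1}
    return new_particles, w, estimate

rng = np.random.default_rng(0)
s1 = rng.normal(0.0, 1.0, size=500)               # N = 500 initial guesses (assumed)
s2, w2, x2_hat = particle_filter_step(s1, v=1.0, z=1.2,
                                      sigma_e=0.5, sigma_z=0.3, rng=rng)
print(x2_hat)
```

Working with log-weights avoids all weights underflowing to zero when the observation is far from every particle; the normalization makes the shift irrelevant to the final estimate.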

7.5.1.3 Mean Shift and Kernel Tracking

Mean shift refers to the shifting of the mean vector. It is a nonparametric technique that can be used to analyze complex multimodal feature spaces and to determine feature clusters. The mean shift technique can also be used to track moving objects; in this case, the region of interest corresponds to the tracking window, and a feature model must be built for the tracked object. The basic idea of mean shift tracking is to move the object model within the tracking window, searching for the position with the largest correlation value. This is equivalent to determining a cluster center and moving the window until its position coincides with the center of gravity (convergence).
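
The mode-seeking idea can be illustrated with a minimal sketch on 2-D feature points. It assumes a Gaussian kernel and a hypothetical `bandwidth` parameter (the text does not fix a kernel at this point): the window repeatedly moves to the kernel-weighted mean of the points until the mean shift vector vanishes.

```python
import numpy as np

def mean_shift_mode(points, start, bandwidth, tol=1e-6, max_iter=200):
    """Shift a window from `start` to a local density mode of `points` (sketch)."""
    y = np.asarray(start, dtype=float)
    for _ in range(max_iter):
        d2 = np.sum((points - y) ** 2, axis=1)
        k = np.exp(-d2 / (2.0 * bandwidth ** 2))              # Gaussian kernel weights
        y_new = (k[:, None] * points).sum(axis=0) / k.sum()   # kernel-weighted mean
        if np.linalg.norm(y_new - y) < tol:                   # mean shift vector ~ 0
            return y_new
        y = y_new
    return y

# Two synthetic clusters; starting near the second one
rng = np.random.default_rng(1)
points = np.vstack([rng.normal([0.0, 0.0], 0.5, size=(200, 2)),
                    rng.normal([5.0, 5.0], 0.5, size=(200, 2))])
mode = mean_shift_mode(points, start=[4.0, 4.0], bandwidth=1.0)
print(mode)
```

Starting at (4, 4), the window climbs to the cluster center near (5, 5); the distant cluster contributes almost nothing because its kernel weights are negligible.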

To track the object continuously from the previous frame to the current frame, the object model determined in the previous frame can be placed at the center position $x_c$ of the local coordinate system of the tracking window, and the candidate object in the current frame is assumed to be at position y. The properties of the candidate object can be characterized by the probability density function P(y) estimated from the data of the current frame. The object model Q and the probability density function P(y) of the candidate object are defined as follows:

$$
Q = \{Q_v\}, \quad \sum_{v=1}^{m} Q_v = 1; \qquad P(y) = \{P_v(y)\}, \quad \sum_{v=1}^{m} P_v(y) = 1
$$

where v = 1, ..., m, and m is the number of features. Let S(y) be the similarity function between P(y) and Q:

$$
S(y) = S[P(y), Q]
$$

For an object-tracking task, the similarity function S(y) is the likelihood that the object tracked in the previous frame is located at position y in the current frame. Therefore, a local maximum of S(y) corresponds to the object position in the current frame.

To define the similarity function, an isotropic kernel can be used (Comaniciu 2000), in which the description of the feature space is weighted by the kernel; S(y) is then a smooth function of y. Let n be the total number of pixels in the tracking window and $x_i$ the location of the ith pixel; the probability estimate of feature $Q_v$ in the object model window is

$$
Q_v = C_q \sum_{i=1}^{n} K(x_i - x_c)\, \delta[b(x_i) - v]
$$

where $b(x_i)$ is the value of the object characteristic function at pixel $x_i$, that is, the bin into which the feature of $x_i$ is quantized; the δ function determines whether the feature of $x_i$ is quantized into bin v; K(x) is a kernel function with a convex, monotonically decreasing profile; and $C_q$ is the normalization constant:

$$
C_q = \frac{1}{\sum_{i=1}^{n} K(x_i - x_c)}
$$

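Similarly, the probability estimate of feature $P_v$ for the candidate object P(y) is

$$
P_v(y) = C_p \sum_{i=1}^{n} K(x_i - y)\, \delta[b(x_i) - v]
$$

where $C_p$ is a normalization constant (for a given kernel function, it can be calculated in advance):

$$
C_p = \frac{1}{\sum_{i=1}^{n} K(x_i - y)}
$$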
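
A minimal NumPy sketch of these kernel-weighted histograms, together with the Bhattacharyya coefficient used as S(y) in the next paragraph, is shown below. It assumes gray-level features quantized into m bins as $b(x_i)$, an Epanechnikov profile over a disk of hypothetical radius `radius`, and a window that lies fully inside the image; the names `kernel_histogram` and `bhattacharyya` are illustrative only.

```python
import numpy as np

def kernel_histogram(image, center, radius, m):
    """Kernel-weighted m-bin gray-level histogram around `center` (sketch).

    Used for both Q (center = x_c) and P(y) (center = y). The window is
    assumed to lie entirely inside the image.
    """
    r0, c0 = center
    rows, cols = np.mgrid[r0 - radius:r0 + radius + 1, c0 - radius:c0 + radius + 1]
    # Epanechnikov profile: weight 1 - ||x_i - center||^2 / radius^2, zero outside the disk
    d2 = ((rows - r0) ** 2 + (cols - c0) ** 2) / radius ** 2
    k = np.clip(1.0 - d2, 0.0, None)
    bins = image[rows, cols].astype(int) * m // 256            # b(x_i)
    hist = np.bincount(bins.ravel(), weights=k.ravel(), minlength=m)
    return hist / hist.sum()                                   # C_q or C_p normalization

def bhattacharyya(p, q):
    """Similarity between two normalized histograms."""
    return float(np.sum(np.sqrt(p * q)))

# Synthetic frame: a bright 20 x 20 square on a dark background
image = np.zeros((100, 100), dtype=np.uint8)
image[40:60, 40:60] = 200
q = kernel_histogram(image, (50, 50), 10, 16)       # model at x_c = (50, 50)
p_same = kernel_histogram(image, (50, 50), 10, 16)
p_off = kernel_histogram(image, (50, 70), 10, 16)   # candidate shifted to the right
print(bhattacharyya(q, p_same), bhattacharyya(q, p_off))  # 1.0 0.0
```

The candidate at the model position gives similarity 1, while the candidate window that contains only background gives 0.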

The Bhattacharyya coefficient, $S(y) = \sum_{v=1}^{m} \sqrt{P_v(y)\,Q_v}$, is commonly used to measure the similarity between the density of the object model and the density of the candidate region; the more similar the two distributions, the larger the coefficient. Maximizing it (through a linear approximation around the current estimate) gives the center of the object position as

$$
y = \frac{\sum_{i=1}^{n} x_i\, w_i\, G(x_i - y)}{\sum_{i=1}^{n} w_i\, G(x_i - y)} \tag{7.59}
$$

where $w_i = \sum_{v=1}^{m} \sqrt{Q_v / P_v(y)}\,\delta[b(x_i) - v]$ is a weighting coefficient and G is the kernel whose profile is the negative derivative of the profile of K (for the Epanechnikov kernel, G is constant over the window). Note that eq. (7.59) gives no analytic solution for y, because y appears on both sides, so an iterative approach is needed. This iterative process searches for the maximum in a neighborhood. The kernel tracking method is computationally efficient and easy to modularize, and it is especially suitable for objects that move regularly and at low speed. By successively computing the new center of the object in each frame, the object is tracked.
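
The iteration of eq. (7.59) can be sketched as below. Assumptions: gray-level features in m bins, an Epanechnikov kernel of hypothetical radius `radius` (so the update reduces to the $w_i$-weighted mean of pixel positions inside the kernel support, as in Comaniciu's formulation), $w_i = \sqrt{Q_v / P_v(y)}$ for the bin v of pixel i, and a hypothetical stopping tolerance `tol` in pixels. All function names are illustrative.

```python
import numpy as np

def window(image, y, radius, m):
    """Pixels near the (possibly fractional) center y, their kernel weights and bins."""
    r0, c0 = int(round(y[0])), int(round(y[1]))
    rows, cols = np.mgrid[r0 - radius - 1:r0 + radius + 2,
                          c0 - radius - 1:c0 + radius + 2]
    d2 = ((rows - y[0]) ** 2 + (cols - y[1]) ** 2) / radius ** 2
    k = np.clip(1.0 - d2, 0.0, None)                   # Epanechnikov K(x_i - y)
    bins = image[rows, cols].astype(int) * m // 256    # b(x_i): quantized gray level
    return rows, cols, k, bins

def histogram(k, bins, m):
    """Kernel-weighted, normalized m-bin histogram (Q or P(y))."""
    h = np.bincount(bins.ravel(), weights=k.ravel(), minlength=m)
    return h / h.sum()

def mean_shift_track(image, q, start, radius, m, tol=0.05, max_iter=50):
    """Iterate the center update until the shift falls below tol pixels."""
    y = np.asarray(start, dtype=float)
    for _ in range(max_iter):
        rows, cols, k, bins = window(image, y, radius, m)
        p = histogram(k, bins, m)                             # P(y)
        w = np.sqrt(q[bins] / np.maximum(p[bins], 1e-12))     # w_i
        w = w * (k > 0)        # G is flat: keep only pixels inside the support
        y_new = np.array([(w * rows).sum(), (w * cols).sum()]) / w.sum()
        if np.linalg.norm(y_new - y) < tol:
            return y_new
        y = y_new
    return y

# Frame 1: the target is a bright 20 x 20 square centered at (49.5, 49.5)
m, radius = 16, 10
frame1 = np.zeros((100, 100), dtype=np.uint8)
frame1[40:60, 40:60] = 200
_, _, k, bins = window(frame1, (49.5, 49.5), radius, m)
q = histogram(k, bins, m)                              # object model Q
# Frame 2: the square has moved; its true center is (54.5, 57.5)
frame2 = np.zeros_like(frame1)
frame2[45:65, 48:68] = 200
y = mean_shift_track(frame2, q, (49.5, 49.5), radius, m)
print(y)
```

The kernel is centered at the fractional estimate y rather than a rounded pixel, so small shifts can still accumulate. The sketch assumes the target overlaps the starting window; otherwise all $w_i$ are zero and the update is undefined.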

Example 7.6 Feature selection during tracking.

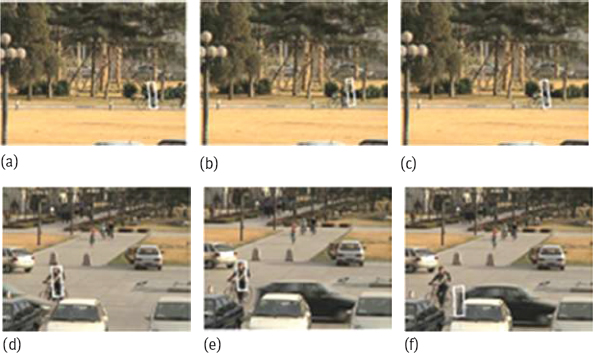

In object tracking, besides the tracking strategy and method, the choice of object features is also very important (Liu 2007). An example of using a color histogram and an edge direction histogram (EOH) in the mean shift framework is shown in Figure 7.15. Figure 7.15(a) is a frame from a video sequence in which the object to be tracked has a color similar to the background; tracking with the color histogram fails, as shown in Figure 7.15(b), while tracking with the edge direction histogram captures the object, as shown in Figure 7.15(c). Figure 7.15(d) is a frame from another video sequence in which the edge directions of the object are not distinctive; tracking with the color histogram captures the object, as shown in Figure 7.15(e), while tracking with the edge direction histogram is unreliable, as shown in Figure 7.15(f). Thus, relying on a single feature can cause tracking to fail in certain circumstances.

The color histogram mainly captures information inside the object, while the edge direction histogram mainly reflects information on the object boundary. Combining the two can give more generally applicable results. An example is shown in Figure 7.16, where the goal is to track a moving car. Because the object size and viewing angle change and the object is partially occluded during the video sequence, the car's color and outline change over time. By combining the color histogram and the edge direction histogram, a good result is obtained.
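
One simple way to combine the two features, assumed here purely for illustration (the text does not specify how Liu (2007) fuses them), is a weighted sum of the Bhattacharyya coefficients of the color histogram and the gradient-magnitude-weighted edge direction histogram. The weight `alpha` and all function names are hypothetical.

```python
import numpy as np

def color_histogram(patch, m=16):
    """Normalized m-bin gray-level histogram (interior information)."""
    h = np.bincount((patch.astype(int) * m // 256).ravel(), minlength=m).astype(float)
    return h / h.sum()

def edge_direction_histogram(patch, m=8):
    """Gradient-magnitude-weighted histogram of edge directions in [0, pi)."""
    gy, gx = np.gradient(patch.astype(float))      # derivatives along rows, cols
    magnitude = np.hypot(gx, gy)
    direction = np.mod(np.arctan2(gy, gx), np.pi)
    bins = np.minimum((direction / np.pi * m).astype(int), m - 1)
    h = np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=m)
    return h / h.sum() if h.sum() > 0 else np.full(m, 1.0 / m)

def bhattacharyya(p, q):
    return float(np.sum(np.sqrt(p * q)))

def combined_similarity(a, b, alpha=0.5):
    """Weighted sum of color and edge-direction similarities (alpha is assumed)."""
    s_color = bhattacharyya(color_histogram(a), color_histogram(b))
    s_edge = bhattacharyya(edge_direction_histogram(a), edge_direction_histogram(b))
    return alpha * s_color + (1.0 - alpha) * s_edge

# Same colors, different edge directions: vertical vs. horizontal stripes
vertical = np.tile([0, 0, 200, 200], (20, 5))
horizontal = vertical.T
print(combined_similarity(vertical, vertical), combined_similarity(vertical, horizontal))
```

The two stripe patches are indistinguishable by color alone (color similarity 1), but their edge direction histograms do not overlap, so the combined similarity drops to 0.5.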

7.5.2 Subsequence Decision Strategy

The methods described in the previous subsection make decisions frame by frame during object tracking. A possible problem is that little information is used for each decision, so small errors may propagate and become uncontrollable. An improved strategy divides the entire tracking sequence into several subsequences (each containing a number of frames) and makes an optimal decision for each frame on the basis of the information provided by the whole subsequence. This policy is called the subsequence decision strategy (Shen 2009b).

Subsequence decision includes the following steps:

1. The input video is divided into several subsequences;

2. Tracking is conducted within each subsequence;

3. Where adjacent subsequences overlap, their results are integrated.

Subsequence decision can also be seen as an extension of traditional frame-by-frame decision. If each subsequence obtained by the division contains just one frame, subsequence decision reduces to frame-by-frame decision.
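
The division step can be sketched as follows, assuming fixed-length subsequences of `length` frames in which consecutive subsequences share `overlap` frames (both hypothetical parameters; the actual division used by Shen (2009b) may differ). Because both the first and last frame indices strictly increase from one subsequence to the next, no subsequence is a subset of another.

```python
def split_subsequences(n_frames, length, overlap):
    """Divide frames 0..n_frames-1 into subsequences of `length` frames,
    where consecutive subsequences share `overlap` frames (sketch)."""
    if length < 1 or not 0 <= overlap < length:
        raise ValueError("need length >= 1 and 0 <= overlap < length")
    step = length - overlap
    subsequences, start = [], 0
    while True:
        end = min(start + length, n_frames)
        subsequences.append(list(range(start, end)))
        if end >= n_frames:        # the last subsequence reaches the final frame
            return subsequences
        start += step

print(split_subsequences(10, 4, 2))  # [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
print(split_subsequences(3, 1, 0))   # [[0], [1], [2]]
```

With one frame per subsequence and no overlap, the split degenerates to single frames, which is exactly the frame-by-frame case.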

Let $S_i$ be the ith subsequence, whose jth frame is denoted $f_{i,j}$; $S_i$ contains $J_i$ frames in total. If the input video contains N frames and is divided into M subsequences, then

To ensure that no subsequence is a subset of another subsequence, the following constraints are defined:

Let $P_j = \{P_{j,k}\}$, k = 1, 2, ..., $K_j$, represent the $K_j$ possible position states in the jth frame; then frame-by-frame decision can be expressed as follows:

The subsequence decision can be expressed as follows:

Subsequence $S_i$ contains $J_i$ frames, and each frame j has $K_j$ possible position states. The optimal search problem can therefore be represented by a graph structure and solved by dynamic programming (searching for an optimal path).
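
A Viterbi-style dynamic program over the candidate positions of one subsequence can be sketched as follows. The path score is a hypothetical choice made for illustration: the sum of per-candidate appearance scores minus `smooth` times the Euclidean jump between consecutive frames. The graph weights in Shen (2009b) may differ.

```python
import math

def best_path(candidates, scores, smooth=1.0):
    """Choose one candidate per frame of a subsequence by dynamic programming.

    candidates[j][k] : (row, col) of the k-th candidate position in frame j
    scores[j][k]     : appearance score of that candidate (hypothetical)
    smooth           : weight of the penalty on the jump between frames
    Returns the list of chosen candidate indices, one per frame.
    """
    best = [list(scores[0])]              # best[j][k]: best path score ending at (j, k)
    back = [[None] * len(candidates[0])]  # back[j][k]: predecessor index in frame j-1
    for j in range(1, len(candidates)):
        best_j, back_j = [], []
        for k, pos in enumerate(candidates[j]):
            options = [best[j - 1][i] - smooth * math.dist(prev, pos)
                       for i, prev in enumerate(candidates[j - 1])]
            i_star = max(range(len(options)), key=options.__getitem__)
            best_j.append(options[i_star] + scores[j][k])
            back_j.append(i_star)
        best.append(best_j)
        back.append(back_j)
    # Trace the optimal path back from the best state in the last frame
    k = max(range(len(best[-1])), key=best[-1].__getitem__)
    path = [k]
    for j in range(len(candidates) - 1, 0, -1):
        k = back[j][k]
        path.append(k)
    return path[::-1]

# A distractor far from the track scores highest in the middle frame
candidates = [[(0, 0), (10, 10)], [(1, 0), (20, 20)], [(2, 0), (11, 10)]]
scores = [[1.0, 0.2], [0.5, 0.9], [1.0, 0.3]]
print(best_path(candidates, scores))   # [0, 0, 0]
```

A per-frame argmax would pick the distractor in the middle frame ([0, 1, 0]); using the whole subsequence keeps the consistent track.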

Example 7.7 An example of subsequence decision.

An example of subsequence decision is illustrated in Figure 7.17, which presents the results obtained by three methods on the same video sequence. The object to be tracked is a hand-held mouse, which moves quite fast and has a color similar to the background. Dark boxes or ovals mark the final tracking results, while light boxes mark the candidate positions.

The images in the first row are obtained with the mean shift method, those in the second row with a particle filter-based approach, and those in the third row with the subsequence decision method (which uses only a simple color histogram to help detect candidate positions). These images show that both the mean shift and particle filter methods fail to maintain continuous tracking, and only the subsequence decision method tracks the object over the entire sequence.

7.6 Problems and Questions


7-1 Summarize the factors that cause the gray value at the corresponding position in temporally adjacent images to change.

7-2 Why can the median-based background maintenance method described in Section 7.3.1 not achieve good results when there are many objects in the scene at the same time or when objects move very slowly?

7-3 Summarize the main steps and tasks for computing and maintaining a dynamic background frame using a Gaussian mixture model.

7-4 What assumptions are made in deriving the optical flow equation? What would happen when these assumptions are not satisfied?

7-5 Referring to Figure 7.10, create a lookup table containing the entries needed to calculate the rectangle features in Figure 7.11.

7-6 Use the two figures in Figure Problem 7-6 to explain the aperture problem.

7-7 What are the consequences of the aperture problem, and how can they be overcome?

7-8 If the observer and the observed object are both moving, how can the optical flow calculation be used to obtain the relative velocity between them?

7-9* Suppose that the motion vector of every point in an image is [2, 4]. Find the coefficient values of the six-parameter motion model.

7-10 Why can using the average gradient instead of the partial derivative better approximate motion vectors of larger magnitude? What problems could arise, and how can they be solved?

7-11* In most statistics-related applications, the total variance is the sum of the variances of the components. Why, then, in eq. (7.48), are the variance before the observation and the variance of the original measurement combined into the observed variance in the manner of resistors connected in parallel?

7-12 Make a list comparing the Kalman filter and the particle filter in terms of suitable applications, application requirements, computation speed, robustness (anti-interference ability), and so on.

7.7 Further Reading


1. The Purpose and Subject of Motion Analysis

– More information on motion analysis can be found in Gonzalez (2008), Sonka (2008), and Davies (2012).

– A survey of face recognition based on motion information in video can be found in Yan (2009).

2. Motion Detection

– More discussion of global motion detection and its applications can be found in Yu (2001b).

– A method for fast prediction and localization of moving objects that combines curve fitting of motion trajectories with correlation-based motion detection and tracking can be found in Qin (2003).

3. Moving Object Detection

– An adaptive subsampling method for moving object detection can be found in Paulus (2009).

– Detection of pedestrians (a particular type of moving object) can be found in Jia (2008) and Li (2010).

– An example of moving shadow detection can be found in Li (2011).

4. Moving Object Segmentation

– An approach to global motion estimation can be found in Chen (2010).

– Temporal segmentation of video images using spatial-domain information can be found in Zhang (2001c, 2003b, 2006b).

5. Moving Object Tracking

– Examples of tracking the ball and players in table tennis (particular types of moving objects) can be found in Chen (2006).

– A tracking method based on an edge-color histogram and a Kalman filter can be found in Liu (2008).

– A multiple-kernel tracking method based on gradient direction histogram features (Dalal 2005) can be found in Jia (2009).

– A 4-D scale-space approach to the adaptive-scale moving object tracking problem can be found in Wang (2010).

– A method combining mean shift and particle filtering for object tracking can be found in Tang (2011).