24.2 Error Correcting Codes

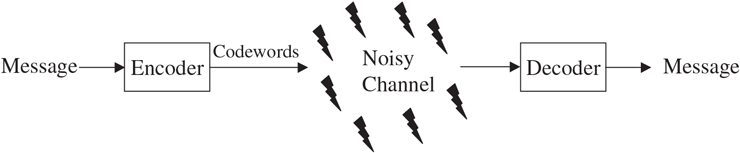

A sender starts with a message and encodes it to obtain codewords consisting of sequences of symbols. These are transmitted over a noisy channel, depicted in Figure 24.1, to the receiver. Often the sequences of symbols that are received contain errors and therefore might not be codewords. The receiver must decode, which means correct the errors in order to change what is received back to codewords and then recover the original message.

Figure 24.1 Encoding and Decoding

The symbols used to construct the codewords belong to an alphabet. When the alphabet consists of the binary bits 0 and 1, the code is called a binary code. A code that uses sequences of three symbols, often represented as integers mod 3, is called a ternary code. In general, a code that uses an alphabet consisting of symbols is called a -ary code.

Definition

Let be an alphabet and let denote the set of -tuples of elements of . A code of length is a nonempty subset of .

The -tuples that make up a code are called codewords, or code vectors. For example, in a binary repetition code where each symbol is repeated three times, the alphabet is the set and the code is the set .

Strictly speaking, the codes in the definition are called block codes. Other codes exist where the codewords can have varying lengths. These will be mentioned briefly at the end of this chapter, but otherwise we focus only on block codes.

For a code that is a random subset of , decoding could be a time-consuming procedure. Therefore, most useful codes are subsets of satisfying additional conditions. The most common is to require that be a finite field, so that is a vector space, and require that the code be a subspace of this vector space. Such codes are called linear and will be discussed in Section 24.4.

For the rest of this section, however, we work with arbitrary, possibly nonlinear, codes. We always assume that our codewords are -dimensional vectors.

In order to decode, it will be useful to put a measure on how close two vectors are to each other. This is provided by the Hamming distance. Let be two vectors in . The Hamming distance is the number of places where the two vectors differ. For example, if we use binary vectors and have the vectors and , then and differ in two places (the 4th and the 7th) so . As another example, suppose we are working with the usual English alphabet. Then since the two strings differ in four places.

The importance of the Hamming distance is that it measures the minimum number of “errors” needed for to be changed to . The following gives some of its basic properties.

Proposition

is a metric on , which means that it satisfies

, and if and only if

for all

for all .

The third property is often called the triangle inequality.

Proof. (1) is exactly the same as saying that and differ in no places, which means that . Part (2) is obvious. For part (3), observe that if and differ in a place, then either and differ at that place, or and differ at that place, or both. Therefore, the number of places where and differ is less than or equal to the number of places where and differ, plus the number of places where and differ.

For a code , one can calculate the Hamming distance between any two distinct codewords. Out of this table of distances, there is a minimum value , which is called the minimum distance of . In other words,

The minimum distance of is very important number, since it gives the smallest number of errors needed to change one codeword into another.

When a codeword is transmitted over a noisy channel, errors are introduced into some of the entries of the vector. We correct these errors by finding the codeword whose Hamming distance from the received vector is as small as possible. In other words, we change the received vector to a codeword by changing the fewest places possible. This is called nearest neighbor decoding.

We say that a code can detect up to errors if changing a codeword in at most places cannot change it to another codeword. The code can correct up to errors if, whenever changes are made at or fewer places in a codeword , then the closest codeword is still . This definition says nothing about an efficient algorithm for correcting the errors. It simply requires that nearest neighbor decoding gives the correct answer when there are at most errors. An important result from the theory of error correcting codes is the following.

Theorem

1. A code can detect up to errors if . 2. A code can correct up to errors if .

Proof.

Suppose that . If a codeword is sent and or fewer errors occur, then the received message cannot be a different codeword. Hence, an error is detected.

Suppose that . Assume that the codeword is sent and the received word has or fewer errors; that is, . If is any other codeword besides , we claim that . To see this, suppose that . Then, by applying the triangle inequality, we have

This is a contradiction, so . Since has or fewer errors, nearest neighbor decoding successfully decodes to .

How does one find the nearest neighbor? One way is to calculate the distance between the received message and each of the codewords, then select the codeword with the smallest Hamming distance. In practice, this is impractical for large codes. In general, the problem of decoding is challenging, and considerable research effort is devoted to looking for fast decoding algorithms. In later sections, we’ll discuss a few decoding techniques that have been developed for special classes of codes.

Before continuing, it is convenient to introduce some notation.

Notation

A code of length , with codewords, and with minimum distance , is called an code.

When we discuss linear codes, we’ll have a similar notation, namely, an code. Note that this latter notation uses square brackets, while the present one uses curved parentheses. (These two similar notations cause less confusion than one might expect!) The relation is that an binary linear code is an code.

The binary repetition code is a code. The Hadamard code of Exercise 6, Section 24.1, is a code (it could correct up to 7 errors because ).

If we have a -ary code, then we define the code rate, or information rate, by

For example, for the Hadamard code, . The code rate represents the ratio of the number of input data symbols to the number of transmitted code symbols. It is an important parameter to consider when implementing real-world systems, as it represents what fraction of the bandwidth is being used to transmit actual data. The code rate was mentioned in Examples 4 and 6 in Section 24.1. A few limitations on the code rate will be discussed in Section 24.3.

Given a code, it is possible to construct other codes that are essentially the same. Suppose that we have a codeword that is expressed as . Then we may define a positional permutation of by permuting the order of the entries of . For example, the new vector is a positional permutation of . Another type of operation that can be done is a symbol permutation. Suppose that we have a permutation of the -ary symbols. Then we may fix a position and apply this permutation of symbols to that fixed position for every codeword. For example, suppose that we have the following permutation of the ternary symbols , and that we have the following codewords: , , and . Then applying the permutation to the second position of all of the codewords gives the following vectors: , , and .

Formally, we say that two codes are equivalent if one code can be obtained from the other by a series of the following operations:

Permuting the positions of the code

Permuting the symbols appearing in a fixed position of all codewords

It is easy to see that all codes equivalent to an code are also codes. However, for certain choices of , there can be several inequivalent codes.