20.3 Huffman Codes

Information theory originated in the late 1940s from the seminal papers by Claude Shannon. One of the primary motivations behind Shannon’s mathematical theory of information was the problem of finding a more compact way of representing data. In short, he was concerned with the problem of compression. In this section we briefly touch on the relationship between entropy and compression and introduce Huffman codes as a method for more succinctly representing data.

For more on how to compress data, see [Cover-Thomas] or [Nelson-Gailly].

Example

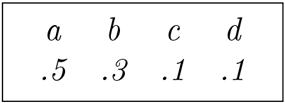

Suppose we have an alphabet with four letters , and suppose these letters appear in a text with frequencies as follows.

We could represent as the binary string 00, as 01, as 10, and as 11. This means that the message would average two bits per letter. However, suppose we represent as 1, as 01, as 001, and as 000. Then the average number of bits per letter is

(the number of bits for times the frequency of , plus the number of bits for times the frequency of , etc.). This encoding of the letters is therefore more efficient.

In general, we have a random variable with outputs in a set . We want to represent the outputs in binary in an efficient way; namely, the average number of bits per output should be as small as possible.

An early example of such a procedure is Morse code, which represents letters as sequences of dots and dashes and was developed to send messages by telegraph. Morse asked printers which letters were used most, and made the more frequent letters have smaller representations. For example, is represented as and as . But is and is .

A more recent method was developed by Huffman. The idea is to list all the outputs and their probabilities. The smallest two are assigned 1 and 0 and then combined to form an output with a larger probability. The same procedure is then applied to the new list, assigning 1 and 0 to the two smallest, then combining them to form a new list. This procedure is continued until there is only one output remaining. The binary strings are then obtained by reading backward through the procedure, recording the bits that have been assigned to a given output and to combinations containing it. This is best explained by an example.

Suppose we have outputs with probabilities , as in the preceding example. The diagram in Figure 20.1 gives the procedure.

Figure 20.1 An Example of Huffman Encoding

Note that when there were two choices for the lowest, we made a random choice for which one received 0 and which one received 1. Tracing backward through the table, we see that only received a 1, received 01, received 001, and received 000. These are exactly the assignments made previously that gave a low number of bits per letter.

A useful feature of Huffman encoding is that it is possible to read a message one letter at a time. For example, the string 011000 can only be read as ; moreover, as soon as we have read the first two bits 01, we know that the first letter is .

Suppose instead that we wrote the bits assigned to letters in reverse order, so is 10 and is 001. Then the message 101000 cannot be determined until all bits have been read, since it potentially could start with or .

Even worse, suppose we had assigned 0 to instead of 1. Then the messages and would be the same. It is possible to show that Huffman encoding avoids these two problems.

The average number of bits per output is closely related to the entropy.

Theorem

Let be the average number of bits per output for Huffman encoding for the random variable . Then

This result agrees with the interpretation that the entropy measures how many bits of information are contained in the output of . We omit the proof. In our example, the entropy is